Abstract

Purpose of Review

A transdisciplinary systems approach to the design of an artificial intelligence (AI) decision support system can more effectively address the limitations of AI systems. By incorporating stakeholder input early in the process, the final product is more likely to improve decision-making and effectively reduce kidney discard.

Recent Findings

Kidney discard is a complex problem that will require increased coordination between transplant stakeholders. An AI decision support system has significant potential, but there are challenges associated with overfitting, poor explainability, and inadequate trust. A transdisciplinary approach provides a holistic perspective that incorporates expertise from engineering, social science, and transplant healthcare. A systems approach leverages techniques for visualizing the system architecture to support solution design from multiple perspectives.

Summary

Developing a systems-based approach to AI decision support involves engaging in a cycle of documenting the system architecture, identifying pain points, developing prototypes, and validating the system. Early efforts have focused on describing process issues to prioritize tasks that would benefit from AI support.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

The demand for kidneys far outpaces supply. In the USA, nearly 150,000 people are on the waiting list for kidney transplants, but only 24,273 kidneys were transplanted in 2019 [1]. Notably, despite the large unmet need, 20% of procured deceased donor kidneys are discarded in current practice [2]. Even with lower quality organs, transplantation has been proven to be life-extending, cost-effective, and often cost-saving for appropriate candidates [3]. However, as shown in Fig. 1, the discard rate rises exponentially with measures of organ quality, such as higher Kidney Donor Profile Index (KDPI) scores [4]. The high discard rate for higher KDPI scores represents a substantial opportunity for increased kidney utilization, primarily from older donors with more comorbidity [5]. Artificial intelligence (AI) decision support may improve kidney utilization, if effectively designed to support clinician decision-making and provide better real-time access to data-driven predictions.

Kidney discard (dotted blue) increases for high Kidney Donor Profile Index (KDPI) organs, despite high rates of graft survival after 5 years (solid green) based on Scientific Registry Transplant Recipients (SRTR) data [1]

AI has been applied in many healthcare applications ranging from feature identification for radiology images [6] to prediction of clinical events from electronic health records [7] to classification for mental health diagnoses using social media data [8]. One popular approach to AI is deep learning, which is a subfield of machine learning that uses algorithms inspired by the structure of the brain called artificial neural networks. Essentially, the algorithms process data in layers to extract features. There can be hundreds of hidden layers or “neurons” between the inputs and outputs of the model. Advances in deep learning aim to increase accuracy while minimizing computations, maximizing speed, and reducing pre-processing requirements. For example, deep convolutional networks (also called DenseNet) increase the number of connections between layers in a feed-forward fashion to increase feature reuse [9]. A feature can be any measurable property within the data, similar to the explanatory variables used in linear regression. However, the features and number of layers are determined by the algorithm using the data, rather than by experts. Compared to statistical models, AI models can incorporate more data types (e.g., numerical, image, natural language) with fewer assumptions to improve prediction accuracy. In the context of transplant healthcare, United Network for Organ Sharing (UNOS) maintains extensive records associated with donor and recipient characteristics, donor-recipient matching, and patient outcomes [10]. AI models have the potential to speed up the discovery of insights from these types of large datasets to improve quality of care, reduce misdiagnosis, and optimize treatments.

However, AI systems can suffer from bias and make systematically poor judgments. For example, IBM’s Watson used natural language processing to analyze electronic health records and medical databases to make cancer treatment recommendations. MD Anderson agreed to test the prototype in their leukemia department and canceled the project after spending $62 million because it routinely gave clearly erroneous recommendations, reducing clinician trust [11]. In the context of criminal justice, AI models that predict the probability of recidivism for parole decisions tend to predict higher rates of reoffending for black people, contributing to systemic racism [12,13,14]. Even in cases where AI systems show improved outcomes, they can struggle with low adoption, a phenomenon known as algorithm aversion [15, 16••]. There is reason to believe that AI can improve healthcare, but it must be executed carefully to avoid negative outcomes.

To effectively integrate AI into transplant healthcare, a transdisciplinary systems approach is needed to design and evaluate the use of AI decision support systems in a participatory research framework. A transdisciplinary approach leverages knowledge and methods from engineering, social science, and the decision domain (transplant medicine) [17]. For complex systems, a systems approach provides tools and techniques for quantifying interactions between system elements to discover emergent properties [18]. Participatory research actively engages end-users, as well as decision-makers, early in the research process to build mutual trust within the community and identify potential barriers early [19]. This article highlights the promise and limitations of using AI decision support to motivate the use of a transdisciplinary systems approach. We frame this discussion in the context of our active application of this approach to the problem of kidney discard.

Promise and Limitations of Artificial Intelligence Decision Support

An AI decision support system may be able to increase decision speed, reduce cognitive burden to improve decision outcomes, and facilitate communication between patients and clinicians. Although AI decision support systems have substantial potential, there is limited evidence of benefits. Most existing studies for AI systems are retrospective and have not been validated in a clinical setting [20•]. Integrating AI into healthcare may be particularly helpful for standardizing care when there is high heterogeneity and inconsistency between clinicians. The level of automation will vary depending on the decision context and operator expertise. Automation levels can vary from no assistance, to suggestions, to supervised operation, to unsupervised operation [21]. In many cases, the cost savings associated with AI systems are related to faster operations and reduced labor costs.

In the context of transplant care, researchers have developed models to predict graft outcomes and improve donor-recipient matching. In a 2020 review of 9 liver transplant models, the most common AI approach for predicting graft survival was artificial neural networks, and the number of input variables ranged from 10 to 276. When compared to more standard liver metrics [i.e., Model for End-Stage Liver Disease (MELD), balance of risk (BAR), and survival outcome following liver transplantation (SOFT)], multiple studies demonstrated that the AI predictions were more accurate [22••]. In a 2019 review of 14 kidney transplant models, decision trees and artificial neural networks were the most common and had the highest accuracy for predicting graft failure based on donor and recipient characteristics [23••]. However, another 2019 review of 7 kidney transplant models found mixed evidence that machine learning approaches exceeded the performance of traditional statistical models [24••].

Despite increasing evidence that AI models are more accurate, there has been little progress integrating these tools into transplant healthcare. We highlight three primary barriers, (1) overfitting and bias, (2) explainability, and (3) trust and ethical decisions, which limit the effectiveness of AI models and are being actively studied across engineering and social science disciplines to identify solutions.

Overfitting and Bias

AI systems struggle with overfitting, particularly for unbalanced data. A model is overfitted when it closely matches training data and generates poor predictions for new scenarios. This is often attributed to the data insufficiency problem, where there is inadequate data to properly train a model or the data are highly unbalanced, which also reduces the number of training cases for an event of interest. In the context of kidney transplants, the decision to accept a kidney is a complex calculus and some key data are not available in the dataset (e.g., anticipated ischemic time, biopsy results, and recipient cardiac status). Similarly, a model can be biased if the data are biased (e.g., due to racial or gender discrimination) or the model systematically deviates from the data.

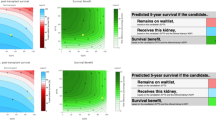

This limitation may be addressed via improved modeling techniques, training human operators to compensate, or some combination. Deep learning models are able to adapt to changing data over time, as shown in Fig. 2. The model output is influenced by both the input data and the training data. As decisions are made, those actions are used as feedback to train the model so it improves over time. For larger shifts, retraining models for new information is a time-consuming and labor-intensive process, but transfer learning and ensemble models can improve real-time predictions. Transfer learning, which only alters the final deep learning layers to accommodate new data and labels, is the most promising approach to rapidly accommodate incremental data changes [25]. Ensemble models are used to reduce the deep learning models’ variance and combine multiple predictions [26]. This is particularly valuable for fusing multiple stakeholder- or task-specific AI models into a higher-level model. However, regardless of the effectiveness of these techniques in theory, there will always be edge cases where the AI model makes poor predictions. In those cases, users need to be able to compensate for model limitations. This will likely require users to understand why a model might make mistakes and what type of information would be out of scope for the AI inputs. For example, when deciding whether to perform a transplant, transplant surgeons often consider information beyond what is available in the UNOS database, such as kidney imaging. When evaluating AI models, the historical record is assumed to be the ground truth, given the lack of available counterfactuals. However, researchers acknowledge that the historical record is likely biased and a research area focused on Fairness, Accountability, and Transparency (FAccT or FAT) is developing [27].

Explainability

AI systems are unlikely to be adopted if they are perceived as black-boxes and difficult to use. The technology acceptance model suggests that adoption is based on the ease of use and usefulness [28], including for AI systems [29] in healthcare contexts [30]. Deep learning models are often perceived as a black-box, making it difficult for clinicians to understand how a model works and how trustworthy it is [31]. In addition, there will always be some measure of uncertainty inherent in the output of an AI model. That uncertainty may be aleatoric (e.g., due to chance, poor compatibility between organ and candidate), epistemic (e.g., measurement error, catch-all rejection reasons), and ontological (e.g., structural, model validation process does not align with model usage) [32].

Explainable models are able to communicate how and why a specific recommendation or outcome has been derived [33]. Deep learning models may include anywhere from several to hundreds of layers and are best known for extracting high-level abstract features. Developers can use feature relevance scores to quantify the contribution of a specific feature to the model’s prediction and communicate that information to the user via linguistic and numerical expressions [34]. However, in some cases, providing additional information can reduce accuracy and increase confidence [35]. Even experts are sensitive to cognitive biases and heuristics, such as confirmation bias, where users are more confident because the AI recommendation aligns with their initial perception, and anchoring effects, where users under (or over)-estimate the effect of choosing a different option because of anchoring on a provided number [36]. Experimental evidence is needed to ensure that the AI system’s communication has the desired effect [37]. For example, preliminary work on communicating uncertainty for deep learning models found that a confidence bar visualization had little effect on decision-making [38].

Trust and Ethical Decisions

Even when there is a human-in-the-loop or in a supervisory role, people do not always trust or collaborate well with recommendations from AI systems. They may not want AI systems to make decisions dealing with ethics, including in medical contexts, even if the AI system is limited to an advisory role [39••]. Even if the AI system makes the same decision as a person, people tend to perceive the AI system as less trustworthy and more blameworthy [40]. In general, trust is reduced and the AI is perceived as morally wrong, if the AI system errs, is biased, or engages in unethical behavior [41].

The way an AI system is framed can enhance trust, even in the context of moral decisions. For example, anthropomorphism and perceptions of an AI having more mental capacity can increase trust [42] but can also enhance perceptions of it being morally wrong if it errs [43]. In addition, when users have the ability to make slight changes to the AI after it errs, those users continue to use it [44]. Trust, confidence, and ultimately use of a system can be highly eroded by the perception that an AI system has errors or is subject to potential errors or bias.

Value of a Transdisciplinary Systems Approach

A transdisciplinary systems approach solves complex problems that occur at the interfaces between disciplines and limit the implementation of new technologies across domains. These domains can be decomposed as a system of systems, with multiple parts that work together and are independent systems in their own right, such as aerospace manufacturing [45]. This is particularly important for complex adaptive systems, which evolve and adapt over time, such as disease dynamics [46]. By conceptualizing transplant healthcare using these systems theories, we can leverage techniques for documenting existing and future states to support the introduction of new technologies, like AI.

Transdisciplinary science is shifting how we scope problems, create models, and evaluate interventions. For example, adequately modeling food and nutrition security requires integrated models of the environment (e.g., to determine impacts of pollution on soil quality), agricultural systems (e.g., to estimate food availability), public health (e.g., to predict malnutrition), and individual behavior (e.g., to anticipate demand for certain types of food). Modeling these aspects separately fails to address the challenges that occur at the interfaces (e.g., increased demand for fresh food may lead to unsustainable farming practices, which further reduces cultivatable land) [47]. Systems dynamics and agent-based modeling techniques are particularly effective for supporting these types of integrated simulations [48]. However, transdisciplinary research is only possible with interdisciplinary teams that bring together their siloed theories, data collection methods, and analytical techniques from engineering, social science, and the humanities into a holistic conceptual framework. In addition, by including domain experts and users in the team, both academics and practitioners benefit from more relevant research, capacity building, and increased potential for implementation [49].

To this end, model-based systems engineering (MBSE), first introduced in 1993, has developed techniques to document and visualize complex systems to identify requirements and anticipate emergent properties of complex systems. MBSE uses formal models to document a system and replaces the traditional document-based approach to product development. This approach increases consistency and scales with complexity for system specification, design, and validation. These methods rely on a system architecture, which centralizes system documentation by articulating various views to represent the “sole source of truth” [50]. The most popular modeling language is Systems Modeling Language (SysML) [51], which can be visualized by Cameo Systems Modeler software [52]. First developed in 2003, SysML is used to describe systems (i.e., making pictures) as well as support an executable system architecture (i.e., running simulations). It is primarily used for design and validation of systems, rather than implementation. In the design phase, SysML models can improve interoperability and support complex concurrent design processes. In the validation phase, SysML models can support evaluation of the effect of a new technology after implementation [53••]. These tools have been extensively adopted in the defense and aerospace industries to guide complex engineering design projects [54]. However, systems architecting practices have historically approached human behavior as an afterthought. MBSE tools can facilitate communication between disciplines by documenting and translating between the different perspectives (e.g., engineers and psychologists) [55]. In the context of designing for human–machine teams, a recent SysML extension articulates the roles and responsibilities of human versus machine team members and supports interdependence analysis to evaluate teamwork outcomes [56•]. There is increasing interest in applying these techniques to other complex problems, especially in the context of healthcare [57, 58].

Applying a Transdisciplinary Systems Approach to Reduce Kidney Discard

We are currently engaged in ongoing work to apply a transdisciplinary systems approach to the design and implementation of an AI decision support system to reduce kidney discard. As summarized in Fig. 3, this approach is developed through a cyclical process wherein we (1) document the current system architecture, (2) identify pain points that would benefit from AI support, (3) develop prototypes of an AI decision support system, and (4) verify and validate the AI system to ensure we achieve desirable outcomes. An iterative cyclical process incorporates multiple opportunities for stakeholder engagement that benefit from a “fail fast” philosophy.

Based on interview data, we have developed a SysML activity diagram to represent the workflow, which is one aspect of the organ allocation system architecture, across and between these stakeholder groups (see Fig. 4). In the transplant context, key stakeholders include organ procurement organizations (OPO), transplant centers, and patients. In addition, we have identified six major process issues in the kidney transplant workflow that could be alleviated via support from an AI decision support system. Much of the work to place less desirable (“lower quality”) kidneys occurs after midnight (process issue 1: environmental stress). Transplant centers receive organ offers via DonorNet and have 1 h to evaluate the offer and enter a decline or provisional acceptance of the offer (2: time pressure). Often, transplant centers will provisionally accept an offer to keep their options open, even if there is a low likelihood that they will ultimately accept the offer (3: local optimization). The transplant team receives access to extensive information including the donor’s medical history, known risk factors for organ function (e.g., age, cause of death, diabetes, hypertension, hepatitis C infection status, KDPI). After the kidney is procured, transplant center staff can adjust their decision as more information becomes available or based on patient input and compatibility (4: evolving information). Ultimately, the surgeon has until the moment of transplantation to decide to decline a kidney offer. Offers that are rejected at this stage are at the highest risk of discard and very difficult to re-allocate.

When evaluating kidney offers, transplant teams must assess the benefits and costs of waiting for a higher quality kidney versus moving forward with a lower quality kidney. In addition, when deciding whether to perform a transplant with a given donor, surgeons consider many factors, ranging from medical compatibility to assessment of complex donor clinical information including imaging and other diagnostics, which can all affect the success of the transplant (5: complex decision space). Further, transplant centers, and individual surgeons, vary in their ability to care for patients with complications (e.g., patients who get hepatitis C infections from donors) and risk posture based on recent failed transplants (6: heterogeneous risk posture). All of these process issues interact with each other to increase the complexity of addressing kidney discard via an AI decision support tool.

In addition to articulating the current system architecture, we are relying on stakeholder input to prioritize which tasks to focus on for an AI decision support system. In the context of online workshops, we engaged small groups of transplant stakeholders to organize tasks in an effort vs. impact prioritization matrix. Focusing on the steps that are high effort to execute and high impact in affecting kidney discard, the top candidates for an AI decision support system include (1) OPO efforts to reallocate denied kidneys and (2) transplant center/patient decision-making to accept/deny high-risk kidney offers.

Conclusions

AI systems can improve healthcare delivery, but it is challenging to design an effective decision support system. In the context of transplant healthcare, kidney discard is a complex problem that will require coordination across stakeholders and shifting clinician behavior. An AI decision support system could support efforts to better leverage real-time data-driven decision-making during this transition toward increased kidney utilization. However, AI systems frequently suffer from overfitting, poor explainability, and low user trust, reducing the likelihood of widespread adoption. Researchers in engineering and social science disciplines are actively identifying strategies to improve human–machine interaction for these types of systems. For overfitting, promising strategies include the use of transfer learning and ensemble models as well as improved training to allow human users to compensate for model limitations. Deep learning models can generate feature relevance scores to communicate how specific features contribute to a prediction, but experimental evidence is needed to determine if these communications have the desired outcomes. Trustworthy models tend to use anthropomorphism to increase perceptions of the AI having mental capacity or allow users to make small changes to the model after it errs. However, there have been few opportunities to examine the effect of combining these approaches in a real-world clinical environment.

Using a transdisciplinary systems approach in a participatory research framework, we can iteratively refine an AI decision support system design to maximize potential effectiveness. Transdisciplinary collaborations support the development of both the operation and interface of an AI decision support system in tandem. Rather than solving a challenge like overfitting with technology alone, there are opportunities to leverage human expertise to increase the system-level effectiveness. Similarly, early engagement of domain experts (e.g., transplant stakeholders) can increase trust and the likelihood of developing an implementable system. A system architecture is useful for visualizing the system for cross-discipline conversations and solution development.

Based on ongoing work to develop an AI decision support system to reduce kidney discard, we outline a cyclical approach for designing and testing the system design that involves (1) documenting the system architecture, (2) identifying pain points, (3) developing prototypes, and (4) validating the system. Based on stakeholder input, we have identified six system characteristics that will inform the design, including environmental stress, time pressure, local optimization, evolving information, complex decision space, and heterogeneous risk posture. We are developing prototype AI systems for (1) OPO efforts to reallocate denied kidneys and (2) transplant center decisions to accept/deny high-risk kidney offers [59]. This approach is time consuming and requires extensive stakeholder engagement. But ultimately, this will lead to a better product and better outcomes for patients.

References

Papers of particular interest, published recently, have been highlighted as: • Of importance •• Of major importance

Hart A, Lentine KL, Smith JM, Miller JM, Skeans MA, Prentice M, Robinson A, Foutz J, Booker SE, Israni AK, Hirose R, Snyder JJ. OPTN/SRTR 2019 annual data report: kidney. Am J Transplant. 2021;21(Suppl 2):21–137. https://doi.org/10.1111/ajt.16502.

Mohan S, Chiles MC, Patzer RE, Pastan SO, Husain SA, Carpenter DJ, Dube GK, Crew RJ, Ratner LE, Cohen DJ. Factors leading to the discard of deceased donor kidneys in the United States. Kidney Int. 2018;1:94(1):187–98.

Axelrod DA, Schnitzler MA, Xiao H, Irish W, Tuttle-Newhall E, Chang SH, Kasiske BL, Alhamad T, Lentine KL. An economic assessment of contemporary kidney contemporary kidney transplant practice. Am J Transplant. 2018;18(5):1168–76.

Alhamad T, Axelrod D, Lentine KL: The epidemiology, outcomes, and costs of contemporary kidney transplantation. In: Himmelfarb J, Ikizler TA, editors. Chronic kidney disease, dialysis, and transplantation: a companion to Brenner and Rector’s the kidney. 4th ed. New York: Elsevier; 2018.

Aubert O, Reese PP, Audry B, Bouatou Y, Raynaud M, Viglietti D, Legendre C, Glotz D, Empana J, Jouven X, Lefaucheur C, Jacquelinet C, Loupy A. Disparities in acceptance of deceased donor kidneys between the United States and France and estimated effects of increased US acceptance. JAMA Intern Med. 2019;179(10):1365–74.

Hosny A, Parmar C, Quackenbush J, Schwartz LH, Aerts HJWL. Artificial intelligence in radiology. Nat Rev Cancer. 2018;18(8):500–10. https://doi.org/10.1038/s41568-018-0016-5.

Xiao C, Choi E, Sun J. Opportunities and challenges in developing deep learning models using electronic health records data: a systematic review. J Am Med Inform Assoc. 2018;25(10):1419–28. https://doi.org/10.1093/jamia/ocy068.

Eichstaedt JC, Smith RJ, Merchant RM, Ungar LH, Crutchley P, Preoţiuc-Pietro D, et al. Facebook language predicts depression in medical records. Proc Natl Acad Sci. 2018;115(44):11203–8.

Huang G, Liu Z, Van Der Maaten L, Weinberger KQ. Densely connected convolutional networks. In Proceedings of the IEEE conference on computer vision and pattern recognition 2017: 4700–4708.

Organ Procurement and Transplantation Network. OPTN database. Available from: https://optn.transplant.hrsa.gov/data/about-data/optn-database/

Strickland E. IBM Watson, Heal thyself: how IBM overpromised and underdelivered on AI health care. IEEE Spectrum. 2019;56(4):24–31. https://ieeexplore.ieee.org/document/8678513.

Rudin C. Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nat Mach Intell. 2019;1(5):206–15. https://doi.org/10.1038/s42256-019-0048-x.

O'Neil C. Weapons of math destruction: how big data increases inequality and threatens democracy. New York: Crown; 2016.

Eubanks V. Automating inequality: how high-tech tools profile, police, and punish the poor. New York: St. Martin's Press, 2018.

Dawes RM, Faust D, Meehl PE. Clinical versus actuarial judgment. Science. 1989;243(4899):1668–74.

•• Burton JW, Stein MK, Jensen TB. A systematic review of algorithm aversion in augmented decision making. J Behav Decis Mak. 2020;33(2):220–39. Review of algorithm aversion that determines need for integrated theory and transdisciplinary research.

Stokols D. Toward a science of transdisciplinary action research. Am J Community Psychol. 2006;38:63–77. https://doi.org/10.1007/s10464-006-9060-5.

Luke DA, Stamatakis KA. Systems science methods in public health: dynamics, networks, and agents. Annu Rev Public Health. 2012;33:357–76. https://doi.org/10.1146/annurev-publhealth-031210-101222.

Cargo M, Mercer SL. The value and challenges of participatory research: strengthening its practice. Annu Rev Public Health. 2008;29(1):325–50. https://doi.org/10.1146/annurev.publhealth.29.091307.083824.

• Topol EJ. High-performance medicine: the convergence of human and artificial intelligence. Nat Med 2019:25:44–56. Most AI models have not been evaluated in a clinical setting.

Sheridan TB, Parasuraman R. Human-automation interaction. Rev Hum Factors Ergon. 2005;1(1):89–129. https://doi.org/10.1518/155723405783703082.

•• Wingfield LR, Ceresa C, Thorogood S, Fleuriot J, Knight S. Using artificial intelligence for predicting survival of individual grafts in liver transplantation: a systematic review. Liver Transpl 2020:26(7):922-934. Review of existing liver transplant AI models that suggests AI models are more accurate than statistical models.

•• Nursetyo AA, Syed-Abdul S, Uddin M, Li YJ. Graft rejection prediction following kidney transplantation using machine learning techniques: a systematic review and meta-analysis. Stud Health Technol Inform 2019:21:10-14. In review of transplant AI models, decision trees and artificial neural networks had the highest accuracy.

•• Senanayake S, White N, Graves N, Healy H, Baboolal K, Kularatna S. Machine learning in predicting graft failure following kidney transplantation: a systematic review of published predictive models. Int J Med Inform 2019:130:103957. Review of existing kidney transplant AI models that found mixed evidence comparing AI and statistical models.

Phan TV, Sultana S, Nguyen TG, Bauschert T. (2020). Q-TRANSFER: a novel framework for efficient deep transfer learning in networking. In 2020 international conference on artificial intelligence in information and communication 2020:146–151.

Xiao Y, Wu J, Lin Z, Zhao X. A deep learning-based multi-model ensemble method for cancer prediction. Comput Methods Programs Biomed. 2018;153:1–9.

Chiao V. Fairness, accountability and transparency: notes on algorithmic decision-making in criminal justice. Int J Law Context. 2019;15(2):126–39.

Lee Y, Kozar KA, Larsen KR. The technology acceptance model: past, present, and future. Commun Assoc Inf Syst. 2003;12(1):50.

Wright D, Shank DB. Smart home technology diffusion in a living laboratory. J Tech Writ Commun. 2020;50(1):56–90.

Alhashmi SF, Salloum SA, Abdallah S. Critical success factors for implementing artificial intelligence (AI) projects in Dubai Government United Arab Emirates (UAE) health sector: applying the extended technology acceptance model (TAM). In International conference on advanced intelligent systems and informatics 2019: 393–405.

Vuppala SK, Behera M, Jack H, Bussa N. (2020). Explainable deep learning methods for medical imaging applications. In 2020 IEEE 5th international conference on computing communication and automation 2020:334–339.

Spiegelhalter D. Risk and uncertainty communication. Ann Rev Stat Appl. 2017;4(1):31–60.

Lima S, Terán L, Portmann E. (2020). A proposal for an explainable fuzzy-based deep learning system for skin cancer prediction. In 2020 seventh international conference on eDemocracy & eGovernment 2020:29–35.

Panesar A. Machine learning and AI ethics. In Machine learning and AI for healthcare. 2nd ed. Berkeley: Apress; 2021.

Hall CC, Ariss L, Todorov A. The illusion of knowledge: when more information reduces accuracy and increases confidence. Organ Behav Hum Decis Process. 2007;103(2):277–90.

Tversky A, Kahneman D. Judgment under uncertainty: heuristics and biases. Science. 1974;185(4157):1124–31.

Van Der Bles AM, Van Der Linden S, Freeman AL, Mitchell J, Galvao AB, Zaval L, Spiegelhalter DJ. Communicating uncertainty about facts, numbers and science. R Soc Open Sci. 2019;6(5):181870.

Subramanian HV, Canfield C, Shank DB, Andrews L, Dagli C. Communicating uncertain information from deep learning models in human machine teams. In Proceedings of the American Society for Engineering Management 2020.

•• Bigman YE, Gray K. (2018). People are averse to machines making moral decisions. Cognition 2018:181:21-34. Even when the AI system is in an advisory role, people avoid using AI for moral decisions.

Young AD, Monroe AE. Autonomous morals: inferences of mind predict acceptance of AI behavior in sacrificial moral dilemmas. J Exp Soc Psychol. 2019;85:103870.

Shank DB, DeSanti A, Maninger T. When are artificial intelligence versus human agents faulted for wrongdoing? Moral attributions after individual and joint decisions. Inf Commun Soc. 2019;22(5):648–63. https://doi.org/10.1080/1369118X.2019.1568515.

Waytz A, Heafner J, Epley N. The mind in the machine: anthropomorphism increases trust in an autonomous vehicle. J Exp Soc Psychol. 2014;52:113–7.

Shank DB, DeSanti A. Attributions of morality and mind to artificial intelligence after real-world moral violations. Comput Hum Behav. 2018;86:401–11. https://doi.org/10.1016/j.chb.2018.05.014.

Dietvorst BJ, Simmons JP, Massey C. Overcoming algorithm aversion: people will use imperfect algorithms if they can (even slightly) modify them. Manage Sci. 2016;64(3):1155–70.

Maier MW. Architecting principles for systems-of-systems. Syst Eng. 1998;1(4):267–84.

Holland JH. Complex adaptive systems. Daedalus. 1992;121(1):17–30.

Hammond RA, Dubé L. A systems science perspective and transdisciplinary models for food and nutrition security. Proc Natl Acad Sci USA. 2012;109(31):12356–63. https://doi.org/10.1073/pnas.0913003109.

Luke DA, Stamatakis KA. Systems science methods in public health: dynamics, networks, and agents. Annu Rev Public Health. 2012;33:357–76. https://doi.org/10.1146/annurev-publhealth-031210-101222.

Fritz L, Schilling T, Binder CR. Participation-effect pathways in transdisciplinary sustainability research: an empirical analysis of researchers’ and practitioners’ perceptions using a systems approach. Environ Sci Policy. 2019;2019(102):65–77. https://doi.org/10.1016/j.envsci.2019.08.010.

Madni AM, Sievers M. Model-based systems engineering: motivation, current status, and research opportunities. Syst Eng. 2018;21(3):172–90. https://doi.org/10.1002/sys.21438.

SysML.org. SysML specifications - current version: OMG SysML 1.6. Retrieved from https://sysml.org/sysml-specs/

Dassault Systems. Cameo Enterprise Architecture. Retrieved from https://www.nomagic.com/products/cameo-enterprise-architecture

•• Wolny S, Mazak A, Carpella C, Geist V, Wimmer M. Thirteen years of SysML: a systematic mapping study. Softw Syst Model. 2020;19(1):111–169. https://doi.org/10.1007/s10270-019-00735-y. Review of SysML applications across domains and the systems engineering lifecycle.

Piaszczyk C. Model based systems engineering with department of defense architectural framework. Syst Eng. 2011;14(3):305–26.

Watson ME, Rusnock CF, Colombi JM, Miller ME. Human-centered design using system modeling language. Journal of Cognitive Engineering and Decision Making. 2017;11(3):252–69. https://doi.org/10.1177/1555343417705255.

• Miller ME, McGuirl JM, Schneider MF, Ford TC. Systems modeling language extension to support modeling of human-agent teams. Syst Eng. 2020;23(5):519–33. https://doi.org/10.1002/sys.21546. Development of SysML extension to better model human behavior to support transdisciplinary research.

Clarkson J, Dean J, Ward J, Komashie A, Bashford T. A systems approach to healthcare: from thinking to practice. Future Healthc J. 2018;5(3):151–5. https://doi.org/10.7861/futurehosp.5-3-151.

Dodds S. Systems engineering in healthcare – a personal UK perspective. Future Healthc J. 2018;5(3):160–3. https://doi.org/10.7861/futurehosp.5-3-160.

Ashiku L, Al Amin M, Madria S, Dagli CH. Machine learning models and big data tools for evaluating kidney acceptance. In Proceedings of complex adaptive systems conference on big data, IoT and AI for a smarter future 2021 (to be published).

Acknowledgements

We thank Hannah Felske Elder, Hari Vasudevanallur Subramanian, and Casey Hines for their roles in designing and conducting the stakeholder interviews. In addition, we are grateful for stakeholder input from Organ Procurement Organizations, Transplant Centers, and patients: Sage Bailey, Diane Brockmeier, Matthew Cooper, Meelie DebRoy, Kevin Doerschug, Jameson Forster, Kevin Fowler, Brittney Gabris, Patrick Gee,Christie Gooden, Amanda Grandinetti, Darla Granger, Stephen Gray, Michael Harmon, Holly Jackson, Nichole Jefferson, Martin Jendrisak, Andrea Koehler, Melissa Lichtenberger, Roslyn Mannon, Lori Markham, Gary Marklin, Mae McCandless, Marc Melcher, Whitni Noyes, Angela Pearson, Sam Pederson, Glenda Roberts, Richard Rothweiler, Brian Scheller, John Stalbaum, Meghan Stephenson, Silla Sumerlin, Curtis Warfield, Jason Wellen, David White, Harry Wilkins, and Cody Wooley.

Funding

Casey Canfield, Daniel Shank, Mark Schnitzler, Krista Lentine, Henry Randall, and Cihan Dagli are supported by National Science Foundation Award #2026324. Mark Schnitzler, Krista Lentine and David Axelrod are supported by National institutes of Health (NIH) grant R01DK102981 and Krista Lentine is supported by the Jane A Beckman/Mid- America Transplant Endowed chair.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of Interest

Mark Schnitzler, Krista Lentine, and David Axelrod have a consulting arrangement with CareDx. Krista Lentine and David Axelrod are Section Editors for this journal, but were not involved in the review of this manuscript. The other authors declare no conflict of interest.

Human and Animal Rights and Informed Consent

All reported studies/experiments with human or animal subjects performed by the authors have been previously published and complied with all applicable ethical standards (including the Helsinki Declaration and its amendments, institutional/national research committee standards, and international/national/institutional guidelines).

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This article is part of the Topical Collection on Expanding Role of Technology in Organ Transplant

Richard Threlkeld and Lirim Ashiku are co-first authors.

Henry Randall and Cihan Dagli are co-senior authors.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Threlkeld, R., Ashiku, L., Canfield, C. et al. Reducing Kidney Discard With Artificial Intelligence Decision Support: the Need for a Transdisciplinary Systems Approach. Curr Transpl Rep 8, 263–271 (2021). https://doi.org/10.1007/s40472-021-00351-0

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40472-021-00351-0