Abstract

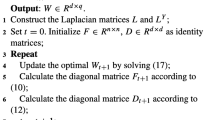

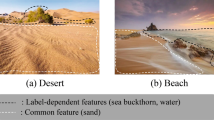

Label Distribution Learning (LDL) is a fine-grained learning paradigm that addresses label ambiguity, but it suffers from the curse of dimensionality. Feature selection is an effective means of dimensionality reduction, and several LDL feature selection algorithms have been proposed that approach the problem from different views. In this paper, we propose a novel feature selection method for LDL. First, an effective LDL model is trained with a classical LDL loss function that combines the maximum entropy model with the Kullback-Leibler (KL) divergence. Then, to select common and label-specific features, their weights are enhanced by the \(\ell_{2,1}\)-norm and by label correlation, respectively. Because constraining the model parameters directly with label correlation would push the label-specific features of correlated labels toward being identical, we instead apply the label-correlation constraint to the output of the maximum entropy model. Finally, the proposed method introduces Mutual Information (MI) into the optimization model for LDL feature selection, for the first time, to distinguish similar features and thereby reduce the influence of redundant features. Experimental results on twelve datasets validate the effectiveness of the proposed method.
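
As a rough illustration of two ingredients named in the abstract, a maximum entropy model trained with a KL-divergence loss and an \(\ell_{2,1}\)-norm that scores each feature by the norm of its row in the weight matrix, the following Python sketch may help. It is not the authors' algorithm: the solver (proximal gradient descent with group soft-thresholding), the step size `lr`, the weight `lam`, the helper names `fit` and `rank_features`, and the toy data are all hypothetical choices, and the label-correlation and mutual-information terms of the proposed method are omitted entirely.

```python
import math

def softmax_row(z):
    """Maximum entropy model output for one sample: p_j = exp(z_j) / sum_k exp(z_k)."""
    m = max(z)  # subtract the max for numerical stability
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def predict(X, W):
    """P[i][j] = p(y_j | x_i) under a maximum entropy model with weights W (d x c)."""
    d, c = len(W), len(W[0])
    return [softmax_row([sum(x[k] * W[k][j] for k in range(d)) for j in range(c)]) for x in X]

def kl_loss(D, P, eps=1e-12):
    """Sum over samples of KL(D_i || P_i); zero-probability entries of D contribute nothing."""
    return sum(dij * math.log((dij + eps) / (pij + eps))
               for Di, Pi in zip(D, P) for dij, pij in zip(Di, Pi) if dij > 0)

def l21_norm(W):
    """l_{2,1}-norm: sum of the Euclidean norms of the rows (one row per feature)."""
    return sum(math.sqrt(sum(w * w for w in row)) for row in W)

def fit(X, D, lam=0.01, lr=0.5, iters=300):
    """Hypothetical solver: proximal gradient descent on mean KL(D || P) + lam * ||W||_{2,1}."""
    n, d, c = len(X), len(X[0]), len(D[0])
    W = [[0.0] * c for _ in range(d)]
    for _ in range(iters):
        P = predict(X, W)
        # Gradient of the sample-averaged KL loss for a softmax model: X^T (P - D) / n
        G = [[sum(X[i][k] * (P[i][j] - D[i][j]) for i in range(n)) / n
              for j in range(c)] for k in range(d)]
        for k in range(d):
            row = [W[k][j] - lr * G[k][j] for j in range(c)]
            norm = math.sqrt(sum(v * v for v in row))
            # Group soft-thresholding (proximal operator of lr*lam*||row||_2):
            # rows with a small norm are set exactly to zero, i.e. the feature is discarded
            scale = max(0.0, 1.0 - lr * lam / norm) if norm > 0 else 0.0
            W[k] = [scale * v for v in row]
    return W

def rank_features(W):
    """Rank features by the l2-norm of their weight row, largest first."""
    norms = [math.sqrt(sum(w * w for w in row)) for row in W]
    return sorted(range(len(W)), key=lambda k: -norms[k])

# Toy usage (hypothetical data): feature 0 drives the label distribution, feature 1 does not
X = [[1.0, 0.5], [-1.0, 0.5], [1.0, -0.5], [-1.0, -0.5]]
D = [[0.8, 0.2], [0.2, 0.8], [0.8, 0.2], [0.2, 0.8]]
W = fit(X, D)
print(rank_features(W))  # → [0, 1]
```

The \(\ell_{2,1}\) penalty acts on whole rows of `W`, so an uninformative feature loses its weights for every label at once; this is why row norms are a natural score for common features. Selecting label-specific features, as the abstract describes, would require additional terms beyond this sketch.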

Data availability

The relevant experimental data are available from the repository at http://palm.seu.edu.cn/xgeng/LDL/index.htm.

Acknowledgements

This work was supported by the National Natural Science Foundation of China through grant No. 62076116, the Natural Science Foundation of Fujian Province through grant No. 2021J02049, and the Open Project Program of Fujian Key Laboratory of Big Data Application and Intellectualization for Tea Industry, Wuyi University through grant No. FKLBDAITI202202.

About this article

Cite this article

Lin, S., Wang, C., Mao, Y. et al. Feature selection for label distribution learning under feature weight view. Int. J. Mach. Learn. & Cyber. 15, 1827–1840 (2024). https://doi.org/10.1007/s13042-023-02000-7

DOI: https://doi.org/10.1007/s13042-023-02000-7