Abstract

We report on the selection process leading to the sixth version of the Mixed Integer Programming Library, MIPLIB 2017. Selected from an initial pool of 5721 instances, the new MIPLIB 2017 collection consists of 1065 instances. A subset of 240 instances was specially selected for benchmarking solver performance. For the first time, these sets were compiled using a data-driven selection process supported by the solution of a sequence of mixed integer optimization problems, which encode requirements on diversity and balancedness with respect to instance features and performance data.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Computational mixed integer (linear) optimization is an important sub-field of mathematical optimization. Hundreds of papers on the subject are published each year and a multitude of companies provide tools for modeling and solution of mixed integer optimization problems (MIPs) based on state-of-the-art techniques. Measuring performance on benchmark test instances has lain at the heart of computational research since the early days of mathematical optimization. Hoffman et al. [28] first reported on a computational experiment comparing implementations of three algorithms for linear optimization back in 1953. Their observation that “[many] conjectures about the relative merits of the three methods by various criteria could only be verified by actual trial” seems to hold to an even greater extent today. The variety of different techniques and their complex interactions regularly calls for empirical evaluation and motivates the collection and curation of relevant test instances.

Brought into existence in 1992 by Bixby, Boyd, and Indovina [8], the goal of the MIPLIB project has been to provide the research community with a curated set of challenging, real-world instances from academic and industrial applications that are suitable for testing new algorithms and quantifying performance. It has previously been updated four times [3, 9, 32, 39] in order to reflect the increasing diversity and complexity of the MIPs arising in practice and the improved performance of available MIP solvers. In this article, we describe its sixth version, MIPLIB 2017, together with a new selection methodology developed during this process.

The exceptional algorithmic progress in solving real-world MIP instances over the last decades is recorded in various articles [7, 32, 33, 35]. Specifically, this can be observed by examining results on the previous version, MIPLIB 2010, both in terms of solvability and speed. By the end of 2018, the number of unsolved instances was reduced by nearly half. Of the 134 instances for which no solution with provable optimality guarantee was initially known, only 70 remain open. Comparable progress in the overall speed of solvers can be observed in the results of benchmark testing with different versions of available solvers. Since its release in April 2011, the subset of instances of MIPLIB 2010 that form the so-called “benchmark set”, consisting of 87 problem instances, has been the accepted standard for evaluating solvers. Using this benchmark set, Hans Mittelmann has been evaluating a number of MIP solvers, including CPLEX [13], Gurobi [25], and Xpress [51]. When MIPLIB 2010 was released, the version numbers of these three commercial solvers were CPLEX 12.2.0.2, Gurobi 4.5.1, and Xpress 7.2. Aggregating the benchmark results of these three solvers at that time, we can construct results corresponding to a so-called “virtual best” solver and a so-called “virtual worst” solver. These are hypothetical solvers that, for each instance, produce run times that are equal to the best and the worst of the three, respectively. Doing this analysis yields shifted geometric mean runtimes of 36.3 and 113.0 s for the virtual best and virtual worst solver, respectively.Footnote 1 In December 2018, the solver versions were CPLEX 12.8.0, Gurobi 8.1.0, and Xpress 8.5.1. On the same hardware (with a newer operating system) the shifted geometric means of the runtimes had decreased to 13.5 s for the virtual best, and 31.3 s for the virtual worst solver. This corresponds to speed-up factor of 2.70 and 3.62, respectively, which amounts to roughly \(16\%\) per year, just from improvements in the algorithms.

It was because of this development that the MIPLIB 2010 benchmark set was no longer considered to sufficiently reflect the frontier of new challenges in the field and the process of constructing a new MIPLIB began. In November 2016, a public call for contributions was launched and a group of 21 interested researchers, including representatives of the development teams of nine MIP solvers formed a committee in order to steer the process of compiling an updated library.Footnote 2 As with MIPLIB 2010, the overall goal was the compilation of two sets of instances. The MIPLIB 2017 benchmark set was to be suitable, to the extent possible, for performing a meaningful and fair comparison of the average performance of MIP solvers (and different versions of the same solver) across a wide range of instances with different properties, in a reasonable amount of computing time. The larger MIPLIB 2017 collection was to provide additional instances for a broader coverage without restrictions on the total runtime of the test set, including unsolved instances (as a challenge for future research) and instances not suitable for benchmarking due to problematic numerical properties, special constraint types (such as indicators), or exceptionally large memory requirements.

It should be emphasized that the benchmark set we have scientifically constructed is designed for the purpose of comparing the overall performance of general purpose solvers on a wide-ranging set of instances. Average performance on this set is not a suitable criterion to decide which MIP solver to use in a particular application scenario. For such decisions, it is important to consider what specific class(es) of instances are relevant, as well as what criteria beyond the raw speed and the ability to solve a wide range of problems are of interest. This is also underlined by the fact that each of the eight solvers that were used to collect performance data (see Sect. 4.6) proved to be the fastest solver on at least one instance.

Compiling a representative and meaningful instance library is a nontrivial endeavor. Compared to previous editions of MIPLIB, the increased number of submissions, the goals of compiling a significantly larger collection of instances and including a larger number of representatives of solvers posed new challenges to the selection process. In addition, MIPLIB 2017 is the first edition to provide supplementary data regarding the instances, such as the matrix structure and decomposability, as well as the underlying models from which the instances originated, where available. In order to produce a well-balanced library in a fair and transparent manner, we designed a new, heavily data-driven process. The steps applied between the initial submissions and the final MIPLIB 2017 are outlined in Fig. 1. Driven by a diverse set of instance features, our methodology used multiple clusterings to populate a MIP model that was then solved to generate suitable candidates for the final library to be presented to the MIPLIB committee.

We consider this process of selecting instances from a large pool of submissions to be the main new feature of MIPLIB 2017. By contrast, the instances constituting previous versions of MIPLIB were manually selected by the members of the committee, depending heavily on their expertise in benchmarking to avoid common pitfalls like overrepresentation of certain problem classes. As one byproduct of this data-driven approach, we are now able to identify similar instances, which leads to sometimes surprising insights into connections between different, seemingly unrelated instances in the library. In addition to the raw feature data, we provide, for each instance, the five most similar instances in the collection on a newly designed web page (see Sect. 6.3).

Despite the heavy use of quantitative methodology, the overall process inherently requires many manual decisions and heuristic choices. Some examples are the choice of features, the number of clusters to use when clustering instances according to this feature data, the definition of which instances to consider as computationally easy or hard, and our formalizations of diversity and balancedness with respect to the feature data. All of these decisions have a high impact on the final result. Hence, in the remainder of this article we try to describe the collection and selection of MIPLIB 2017 at a sufficiently high level of technical detail in order to make this process transparent to the reader.

The article is organized as follows. Section 2 addresses related work and gives a brief historic overview on standards for computational experiments in mathematical optimization. Section 3 describes the efforts to collect instances and meta data on their background and solvability. Section 4 details the collection of feature data for each instance that forms the basis for quantifying similarity of instances and balancedness of a given selection. In Sect. 5, we describe how this data was used as input in order to compute good candidates for the library by solving a MIP model. Section 6 summarizes the final result. We conclude with our final remarks and acknowledgements in Sect. 7.

2 Related work

With the evolution of computational research, standards and guidelines for conducting computational experiments were proposed and updated. Next to performance measures, software availability and machine specifications, these guidelines also discuss the choice of test problems and the availability of standard test sets.

In 1978, Crowder et al. [14] presented an early list of best practices, emphasizing the necessity of reproducibility of computational experiments. In this course, they also discussed the value of test problems being documented and the importance of using real-world problems. At the same time, they pointed out that sharing test problems is expensive and time-consuming—an issue that we have fortunately overcome.

The work of Jackson et al. [31] was motivated by a controversy around the first published computational results for interior point LP solvers. Besides others, the ad-hoc committee stated: “We recommend that standard test problem sets and standard test problem generators be used wherever possible.” Further, they encouraged researchers that “whenever new problems are used in a computational evaluation, these problems be ...submit[ed] to a standard collection like netlib.”

In his seminal paper from 1993, John Hooker characterized an empirical science of algorithms [29]. He discussed the importance of identifying typical representatives of a problem class to conduct a study on, at the same time mentioning that any choice is open to the criticism of being unrepresentative. There are several resolutions for this issue; a well-known, long-standing and publicly available standard test set is certainly one of them. Another resolution that Hooker pointed out is to make “the issue of problem choice ...one of experimental design”. This means making the question how performance depends on test set characteristics part of the experiment itself. The approach taken to set up MIPLIB 2017—a data-driven instance selection that parameterizes the creation of a test set—can be seen as an extension of this idea.

There are various publications that formalize the problem of compiling a test set. McGeoch [37, 38] developed an abstract model of algorithms and the paradigm of simulation research, partially in response to Hooker’s paper.

A complementary line of research that is not touched on in this paper is the creation of new test instances to fill “gaps” in a test set, by learning from the instance parameter space, see [49]. Smith-Miles et al. [48] use such an approach to work out the strengths and weaknesses of optimization algorithms on an enlarged instance set that they extrapolated from a standard test set. They detect so-called pockets where algorithm performance significantly differs from average performance. This take on instance diversity complements our approach of trying to achieve a best possible coverage of the feature space subject to a fixed set of candidate instances.

Work on standard test sets for benchmarking naturally connects to work on algorithm selection [43]. The work of Bischl et al. [6] brings both fields together by publishing a benchmark library for algorithm selection. They introduce a data format to formally define features, scenarios, working limits and performance metrics. Since 2016, this library contains a MIP section, based on MIPLIB 2010.

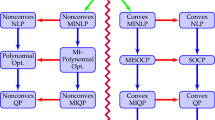

Finally, related work of course includes various other libraries for mathematical optimization problems, including, but not limited to MINLPLib [11], QPLIB [16], Netlib [10], the COR@L collection [34], and OR-Library [4]. The latter is one of the oldest online collections of optimization problems, existing for 30 years now and still being regularly updated.

3 The collection process

The first step in the creation of MIPLIB 2017 was to collect a large set of candidate instances. This section elaborates on the submission process and the origin of the submitted instances, as well as preparatory steps for the subsequent selection process, such as instance cleanup and grouping.

3.1 The submission phase

The collection process started with a call for contributions in November 2016, which was advertised at many conferences, on numerous mailing lists, and in direct communication with partners from industry. Submissions were accepted until August 1, 2017. Overall, we received 128 submissions (each containing multiple instances) from 66 submitters with 54 different affiliations, 38 of them being academic and 16 industrial. Note that the affiliation of the submitter alone does not imply anything about the source or type of the model. Several of the submitters with academic affiliation submitted instances from industry projects and one submitter from industry submitted instances modeling a combinatorial game.

Each submission was automatically committed as a new subdirectory to the public git repository https://git.zib.de/miplib2017/submissions. In the repository, these subdirectories are structured as follows. In the root of the subdirectory there are two files: a bibtex file with references to papers related to the instances, if provided by the submitter, and a meta file containing information about the submitter, creator, and owner of the submitted instances, as well as a description of the instances and licensing information. For the first time, all contributions were required to be submitted under a license in order to explicitly grant the right of redistribution, among others. The default license was chosen to be the Creative Commons CC BY-SA 4.0.Footnote 3 license, but it was possible to specify a different license if desired. In addition to these two files, there are up to three subdirectories. The instances subdirectory contains the instances themselves (.lp or .mps format, possibly compressed). The models subdirectory contains associated model files, which contributors were encouraged to include in order to provide researchers with richer information on the structure of the instances. Finally, additional information is provided in the misc subdirectory, ranging from extended descriptions to log files and MIP start files.

In total, 3670 instances were newly submitted to MIPLIB 2017. Table 1 lists all submitters and the number of instances they submitted. We arrived at a total of 5721 instances by adding 2051 instances that were submitted for inclusion in MIPLIB 2010, keeping their original submission information intact. Those 2051 comprised most of the submissions to MIPLIB 2010 except for a few duplicates already present in other MIPLIB 2017 submissions.

Table 2 reports the origin of the submitted instances. It shows that there are two blocks of new submissions with a high number of instances: the instances submitted to the NEOS server and MIP models of instances that were part of the MiniZinc ChallengesFootnote 4 from 2012 to 2016. Even excluding those instances, the remaining new contributions comprise about as many instances as were submitted to MIPLIB 2010, half of which were collected from publicly available sources back then. Note also that nearly 50 instances originate from submissions to the user support of two commercial solvers.

3.2 Submissions from the NEOS Server

Before moving on, we briefly discuss how the instances originating from the NEOS Server were collected, since this was a separate procedure unto itself. The NEOS Server is a free internet-based service for solving numerical optimization problems hosted by the Wisconsin Institute for Discovery at the University of Wisconsin in Madison, with remote solving services provided by various sites, such as Arizona State University.

In the calendar years 2014–2016, more than 1 million MIP instances were solved using NEOS. A subset of these instances was collected and submitted for inclusion into MIPLIB 2017. To avoid misunderstandings, we note that the reduction methodology described in the following happened prior to the MIPLIB 2017 effort and is not part of the selection procedure conducted for MIPLIB 2017. However, because the NEOS instances account for a large number of newly submitted instances, we outline the process briefly for the sake of completeness and transparency.

To begin, the 14,571 MIP instances that were modeled through the AMPL [15] language and whose solution required more than five minutes of wall clock time to solve were collected. In discussions with the MIPLIB committee, it was decided that a representative, yet diverse, sample of around 700-800 instances would be an appropriate number of submissions from NEOS. The strategy to select the instances was based on clustering the instances with similar traits and then selecting instances from the clusters.

All 14,571 of these instances were re-run using CPLEX 12.6 to collect the optimal solution (if possible within 12 h) and the solution to the root LP relaxation. For each instance, the following properties were collected:

-

the number of variables, constraints, and nonzeros,

-

the percentage of binary variables and of general integer variables,

-

the best solution value found by CPLEX, the root gap, and the percentage of binary or integer variables with fractional value in the root LP solution.

Instances whose number of variables or constraints was more than 3 standard deviations away from the mean were excluded as outliers. In an attempt to eliminate duplicate instances, only one instance in a group that had the same number of constraints, same number of variables, and same optimal solution value, as well as the same associated email address, was retained. After this removal of outliers and suspected duplicates, 6531 instances remained.

Each of these instances was assigned to a multi-labeled category using three independent calls to a \(k\)-means clustering algorithm [26] as follows. First, the instances were divided into four clusters according to properties associated with problem size: the number of constraints, number of variables, and number of nonzeros. Second, the instances were again divided into four clusters, this time according to the percentage of binary and general integer variables in the instance. Finally, the instances were divided into four more clusters based on two properties of the root LP relaxation: root gap and the percentage of integer variables whose root LP relaxation value was not integer-valued.

From these clusterings, each of the 6531 instances was given a label in \(\{0,1,2,3\}^{3}\), all in all giving \(4^3 = 64\) (possibly empty) clusters. Let \(S_i\) be the number of instances in the i-th cluster. The final selections were then made as follows:

-

For each cluster with size \(S_i \le 2\), all instances of the cluster were selected;

-

for each cluster with size \(3 \le S_i \le 10\), \(\lceil |S_i|/2\rceil \) instances were selected at random;

-

for each cluster with size \(S_i > 10\), \(\lceil |S_i|/10\rceil \) instances were selected at random.

This strategy ensured a diverse sample, and resulted in a total of 710 instances to be considered for inclusion in MIPLIB 2017.

Separately, Hans Mittelmann also contributed 75 instances submitted to one of the ASU-hosted servers through the NEOS platform. These servers process requests for the SCIP, FEASPUMP, PROXY, and Qsopt_ex solvers. Using scripts, these user submissions were screened for promising instances with an emphasis on those that contain model information.

In combination, this resulted in a total of 785 new instances arising from NEOS. In addition, the instance pool for MIPLIB 2017 contains 391 NEOS instances that have been either included in previous versions of MIPLIB or were considered during past selection processes.

3.3 Instance cleanup

Following the close of submissions, we performed several cleanup steps, as follows.

Instance renaming While we tried to preserve original file names where possible, some submissions required renaming to create uniquely identifiable names. For instance, some submissions contained multiple instances with the same file name that originated from the same base model populated with different data. We also tried to make the names as short and expressive as possible, containing only lower-case characters, numbers and hyphens. After re-naming, the longest instance name was reduced from 151 to 31 characters. Note that submissions for MIPLIB 2010 were renamed to be consistent with the naming scheme of the final MIPLIB 2010 instances, but no submissions already present in MIPLIB 2010 were renamed.

Making NEOS instances identifiable NEOS instances have traditionally been named neos-xyz, where xyz is a number with up to 7 digits, representing the unique NEOS instance ID of the submitted instance. In order to allow easier identification of the instances in papers and personal communications, we compiled a list of river names from all over the world and appended a river name to the original name, resulting in names of the form neos-xyz-river. As an example, NEOS instance 3075395 has been renamed neos-3075395-nile such that it can be colloquially called the “nile instance”. We excluded river names such as “Amazon” to avoid ambiguous renaming. Note that we applied this renaming procedure only to the 785 NEOS instances newly submitted for MIPLIB 2017 (see Sect. 3.2), leaving all previously available NEOS instances under their old name to avoid confusion.

Format conversion and cleanup All instances in the MIPLIB 2017 collection are provided in MPS format [30, 42]. Therefore, instances submitted in LP format were read into CPLEX and written out in MPS format. Given that different MIP solvers support different extensions of the MPS format, we ensured that all solvers could read all instances by restricting the format to a basic version. Maximization problems were turned into minimization ones by negating objective coefficients; lazy constraint markers were removed so that the constraints are treated as standard constraints; and coefficients stated as hexadecimal numbers were converted to their decimal equivalent. Additionally, we added a NAME section or changed the existing one to the updated instance name. For a small number of instances, it was necessary to change the MPS files because they contained ambiguous definitions or outright incorrect formatting. In those cases, we performed the minimally necessary changes to make the instance file adhere to the basic MPS standard.

3.4 Model groups

In most cases, the instances in a single submission are closely related in the sense that they originate from the same or a very similar MIP model. Some submissions, however, contain many unrelated instances. Therefore, we introduced model groups to keep track of this form of meta information that may not be directly inferable from the submission ID or the numerical instance features described in Sect. 4. A model group represents a set of instances that is based on the same model or a very similar formulation but with different data. This grouping allowed us to avoid overrepresentation of a particular application or model class in the final library by limiting the number of instances with known similar model background during the selection process.

Each instance was assigned to one model group as follows. Initially, a submission of homogeneous instances was assigned to its own model group. If a submission contained multiple sets of instances, each implementing a different model for the same problem and data set, an individual group was created for each of the different model types. This procedure was applied to both the submissions to MIPLIB 2017 and the submissions to MIPLIB 2010. Publicly available instances with known application were grouped by hand by the authors. Examples for such cases are the MiniZinc instances submitted to MIPLIB 2017 and the instances from older MIPLIB versions and public instance sets submitted to MIPLIB 2010.

Instances from the NEOS server, however, are anonymous and lack meta data from the submitters that could be used to infer model groups. Nevertheless, users often submit multiple, similar instances. Hence, we used feature data described in the next section in order to infer synthetic model groups in an automated way. In order to group the NEOS instances, a \(k\)-means clustering was computed with respect to the entire instance feature space (see Sect. 4). The parameter \(k = 110\) was chosen manually to achieve a clustering with very similar instances in each NEOS model group. This clustering was applied to all 1176 NEOS instances both from new submissions and previously available sources.

Table 3 summarizes the number of resulting model groups and the corresponding group sizes for the different sources of instances. These numbers are given with respect to the 5666 instances in \({\mathscr {I}}^{\text {sub}}\) (see Fig. 1). The largest model group cmflsp comprises 360 instances of a capacitated multi-family lot-sizing problem.

4 Feature computation

The ultimate goal of the selection process was to select a representative sample of all available instances with respect to problem structure and computational difficulty, while avoiding overrepresentation of similar models. In the spirit of data-driven decision making and in light of related work in the fields of algorithm selection and machine learning, we based this process both on performance data and on an extensive set of instance features. The first step in the selection process was simply to determine the features of interest. In the terminology of [48], this means that we defined a feature space of measurable characteristics and computed a feature vector associated to each element of the problem space of candidate instances.

Although this may seem straightforward, it is important to note that the feature vector corresponding to an instance can be affected by seemingly irrelevant properties of its representation in MPS format. For instance, some of the raw MPS instances contained modeling language artifacts or artifical redundancies. For this reason, the instance features were computed only after applying some straightforward simplification steps, which we refer to as trivial presolving. We first describe this presolving process before describing what features of the instances were used and how their values were determined for each instance.

4.1 Trivial presolving

As is traditional for MIPLIB, the submitted instances are being made available in their original, unmodified form (with the exception of minor corrections to MPS formatting that were necessary in some cases). This means that the distributed instances were not presolved or simplified in any way for the purposes of distribution. For the purposes of extracting features of the instances, however, so-called “trivial” presolving was applied, as described below. It may seem strange that the version of each instance made publicly available in the final collection may actually be slightly different than the version considered during the selection process, but there are good reasons for this approach that we elaborate on next.

The justification for distributing the instances in their original submitted form is simply that this allows the most complete and realistic testing of the ability of each solver to deal with real-world instances, including all of the idiosyncratic artifacts that may arise in the modeling process. In particular, algorithms for presolving instances are actively developed and have a high impact on the performance of a solver (see [2, 20]). They not only strengthen the original formulation, but also simplify and remove unnecessary artifacts. These procedures are computational in nature and their efficiency and effectiveness also needs testing. In some cases, there may be choices made in the presolving that can only be made with foreknowledge of the properties of the solution algorithm itself. For all these reasons, it was considered highly desirable in promoting test conditions that are as reflective of real-world conditions as possible to avoid modifying the distributed versions of the instances.

On the other hand, because MIP solvers do universally apply certain well-known simplification procedures to an instance before the branch-and-bound search, the unmodified descriptive data of the original instance may not properly reflect the “true” features of that instance for the purposes of clustering instances according to similarity, as we did during the selection process. The features considered that may be affected by presolving include not only obvious properties, such as instance size, but less obvious ones, such as the type of constraints and variables present in the model. A model may, for example, have all variables integer with the exception of one continuous variable whose value is fixed to 1 and whose purpose is to model an objective offset. It would be unreasonable to consider such an instance to be an instance with both integer and continuous variables. At the other extreme, we may have an instance in which all binary and integer variables are implicitly fixed, leaving a purely continuous problem after presolving.

While it seems necessary to do some presolving before computing instance features, the full presolving done by solvers is itself a difficult computational balancing act and each solver does it differently. Too much presolving before feature computation would result in a presolved instance with features no more representative of the “true” ones than the completely unpresolved instance. As a compromise, all instance features introduced in Sects. 4.3–4.5 were collected after applying a reduced set of the most obvious presolving techniques to the instance, but no more sophisticated techniques.

For this trivial presolving, we used SCIP 5.0, but disabled most presolving techniques, applying only simple ones, such as the removal of redundant constraints and fixed variables, activity-based bound tightening, and coefficient tightening. In contrast to standard SCIP presolving, which stops if the problem size could be reduced by only a small percentage during the last presolving round, we applied the simple presolving steps until a fixed point was reached. The complete set of SCIP parameters used to do the presolving is provided on the MIPLIB web page (see Sect. 6.3) as part of the feature extractor download.

For 55 of the 5721 submitted instances, trivial presolving turns the instance into a pure LP or is even able to solve the instance by fixing all variables. These instances were not considered for inclusion and also serve to emphasize the importance of this preprocessing step. Overall, trivial presolving reduced the number of variables on 3782 instances (66% of the submission pool), sometimes by as much as 93% (instance a2864-99blp). For 445 instances (8%), more than 50% of the variables were fixed. On average, trivial presolving reduced the number of variables by 15%.

4.2 Canonical form

Because the feature computation can be affected not only by presolving but also by the exact form in which the instance is represented (equality constraints versus inequalities etc.), we transformed all presolved instances into the following canonical form, which is slightly more general than the usual one, prior to feature computation.

Definition 1

A mixed integer optimization problem P with input

-

\(m,n, n_{b}, n_{i}, n_{c} \in {\mathbb {N}}\), \(n = n_{b} + n_{i} + n_{c}\),

-

coefficient matrix \(A\in {\mathbb {Q}}^{m \times n}\),

-

left-hand and right-hand side vectors \(\ell ^A, u^A\in {\mathbb {Q}}_{\pm \infty }^m\),

-

lower and upper bound vectors \(\ell ^x, u^x\in {\mathbb {Q}}_{\pm \infty }^{n}\), and

-

objective coefficient vector \(c\in {\mathbb {Q}}^{n}\)

is defined to be an optimization problem of the form

It is important to note that transformation to the canonical form of Definition 1 is not uniquely determined for each instance. There remain certain degrees of freedom to formulate equivalent instances by scaling continuous columns, scaling the objective function \(c^{\top }x\), or scaling a constraint \(\ell ^A_{i}\le a_{i}^{\top }x\le u^A_i\). This may cause problems with the computation of some features. For example, some features of interest involve comparison of row coefficients, but this comparison is difficult if rows of the constraint matrix may have coefficients differing by several orders of magnitude. We address these issues by normalizing the objective coefficients \(c\) and every constraint \(\ell ^A_{i} \le a_{i}^{\top }x \le u^A_{i}\) by their maximum absolute coefficient \(\Vert c\Vert _{\infty }\) and \(\Vert a_{i}\Vert _{\infty }\), respectively, so that all objective and matrix coefficients lie in the interval \([-1,1]\) before computing the feature matrix \(F\) (the downloadable instances are not altered).

It is based on this final presolved canonical representation that we define the \(Q= 105\) features we consider. This results in a feature matrix \(F\in {\mathbb {R}}^{N\times Q}\), where \(N\) is the total number of instances submitted. Table 4 lists these features, which were divided into \(K = 11\) feature groups (also listed in the table). Feature groups were used for the selection process, during which instance clusters were computed for each feature group individually. Every feature group was chosen to represent a particular aspect of an instance in the form specified by Definition 1. The computation of features in most of the groups only requires information that can be extracted directly from the input of the (trivially presolved) problem. Two exceptions are the constraint classification and decomposition groups, which need to identify structures in the model. These are described in Sects. 4.4 and 4.5 .

4.3 Instance features

Here, we describe the first nine feature groups in Table 4. We use the shorthand vector statistics to refer to five values summarizing the entries of a vector \(v \in {\mathbb {R}}_{\pm \infty }^{d}\). Let \(d' = |\{j\,:\,|v_{j}| < \infty \}|\) be the number of finite entries of v, which can be smaller than d in the case of, e.g., bound vectors, and let \(v'\) be the restriction of v to its finite entries. We assume without loss of generality that \(v'\) is sorted, \(v'_{1} \le v'_{2} \le \dots \le v'_{d'}\). The five values are

-

\(\min : v \mapsto v'_{1}\),

-

\(\max : v \mapsto v'_{d'}\),

-

\(\text {mean}: v \mapsto \frac{1}{d'} \sum \limits _{j=1}^{d'}v'_{j}\),

-

\(\text {median}: v \mapsto \left( v'_{\lfloor \frac{d' + 1}{2}\rfloor } + v'_{\lceil \frac{d' + 1}{2}\rceil }\right) / 2\), and

-

\(\text {std}: v \mapsto \sqrt{\frac{1}{d'}\sum \limits _{j=1}^{d'}\left( v'_{j} - \text {mean}(v')\right) ^{2}}\).

Note that infinite entries can only occur for the variable bound vectors \(\ell ^x\) and \(u^x\) and the left- and right-hand side vectors \(\ell ^A\), \(u^A\). For a vector v that contains only infinite entries, i.e., for which \(d'=0\), the above vector summaries are not well-defined. If \(d' = 0\), the corresponding statistics were set to 0 in the data. Note that even if the original formulation has infinite bounds on variables, trivial presolving may often infer finite bounds for those variables.

The dynamism of a vector with finite entries is the ratio of the largest and smallest absolute entries, i.e., \({\Vert v\Vert _{\infty }}/{\min \{|v_{j}| : v_{j}\ne 0\}}\). The dynamism is always at least 1. If the dynamism of any single constraint exceeds \(10^{6}\), this is an indication of a numerically difficult formulation. Note that the dynamism is invariant to the normalization procedure. Combining the dynamism of each constraint yields an m-dimensional vector, which can be summarized using vector statistics.

The feature group Matrix coefficients summarizes the nonzero coefficients of the matrix \(A\) as follows. First, each row \(a_i\), \(1\le i \le m\), of \(A\) is normalized by its largest absolute coefficient, such that all coefficients are in the range \([-1,1]\). The nonzero entries of \(a_{i}\) are then summarized by four of the five vector statistics explained above, namely the \(\min \), \(\max \), \(\text {mean}\), and \(\text {std}\). Going through all rows, we obtain four m-dimensional vectors describing the \(\min \), \(\max \), \(\text {mean}\), and \(\text {std}\) per row. Each of these vectors is then summarized via vector statistics, which yields a total of 20 statistics that summarize the cofficients of \(A\). Examples are the mean minimum coefficient over all m rows, or the standard deviation of all m maximum coefficients, etc. The feature group comprises 19 out of these 20 coefficient statistics, because the maximum over all m maximum coefficients is equal to 1 for every instance in our data set.

For the feature group Sides, the m-dimensional left- and right-hand side vectors \(\ell ^A\) and \(u^A\) are summarized individually via vector statistics of all their finite elements. Besides, we compute vector statistics for the finite elements of the concatenated 2m-dimensional vector \((|\ell ^A|,|u^A|)\) that combines the absolute left- and right-hand sides of all rows. Note that the row normalization by the maximum absolute coefficient also affects the row left- and right-hand sides.

For features such as the row or objective dynamism, which may differ by orders of magnitude between instances, we used a logarithmic scaling. While logarithmic scaling is fine for vectors with positive entries, it is not applicable to vectors with potentially negative entries such as the variable lower and upper bound vectors. In those cases, we apply a customized scaling

to every entry of the corresponding column in the feature matrix \(F\). The map \(\text {siglog}\) preserves the sign of each entry.

The collection of the instance features was performed with a small C++ application called the feature extractor, which extends SCIP by the necessary functionality needed to report features after trivial presolving and optionally accepts a settings file to modify the default presolving explained in Sect. 4.1. The feature extractor is a modified version of a code used already by [22] and available for download on the MIPLIB 2017 web page (see Sect. 6.3).Footnote 5

4.4 Constraint classification

Table 5 lists the constraint classification types used for the feature group Constraint classification. A total of 17 types of linear constraints that often occur as a subset of the constraints of MIP instances were identified. The table is sorted from most specific to most general. If a constraint belongs to multiple types, the classification always assigns the most specific, i.e., topmost, type that applies. Note that even empty, free, and singleton constraints are listed. While these types are removed during trivial presolving, they may well be present in the original formulation.

There are several types of constraints supported by the MPS format [30, 42] that are not strictly linear as required by Definition 1. A well-known extension are indicator constraints, which are conditional, linear constraints that only need to be satisfied if a corresponding binary variable, the so-called indicator variable, is set to 1. It is possible to linearize such a constraint by employing a sufficiently large coefficient M for the indicator variable, in which case the reformulation is called a big-M formulation. In many practical applications, big-M formulations require a very large value of M, which is why they often lead to numerically difficult models. Directly expressing such constraints as indicator constraint allows the solver to handle them in a more algorithmically advantageous way.

Indicator constraints were allowed into the MIPLIB 2017 collection, but (as we describe later) were not allowed in the benchmark set. The only feature used that involves indicator constraints was their fraction among all constraints. This feature is also part of the feature group Constraint classification in Table 4. Other features regarding, e.g., the linear part of the indicator constraint, are not collected. In total, 437 of the submitted instances contain indicator constraints. Many of them appear in instances of the MiniZinc submission, which were additionally submitted as big-M formulations.

There are other special types of constraints allowed by MPS, such as special-ordered sets (SOSs), semicontinuous variables, and piecewise linear constraints that not all solvers support. However, none of the instances submitted used such constraints.

4.5 Block-structured decomposition features

Dantzig-Wolfe reformulation and Lagrangian relaxation are decomposition techniques that can exploit the presence of logical groupings of constraints and variables in the model. Classically, one identifies “complicating” or “linking” constraints that, when removed from the model, result in several subproblems that can be solved independently. Concretely, this occurs when the constraint matrix has so-called block angular structure (defined below). One challenge in applying these techniques within a general purpose solver is identifying such structure. Standard input formats do not allow information about these structures to be passed to the solver directly. For this reason, several decomposition-based solvers have been developed that accept auxiliary input files indicating this structure when it is present. These include GCG [21], DIP [17,18,19], and DECOMP (a decomposition-based solver that is part of SAS/OR and SAS Optimization [44]). All three can exploit the identified structure by reformulating the instance and applying a branch-and-price algorithm to solve it.

Although block structure may be “obvious” in the original model, this structure is often lost when the model is populated with data and transformed into a format such as MPS. When information about the block structure is not provided, it can still be derived algorithmically. This information is thus at a higher level of abstraction than the other instance features alone. Contributors to MIPLIB 2017 were invited to provide complementary material, such as model files in GAMS or AMPL format or information on block-structured decompositions in the .dec file format. While some 20 submitters accompanied their instances with model files to produce them, no block structure information was contributed. Nevertheless, GCG, DIP, and DECOMP are all able to derive information about the block structure automatically (see, e.g., [23, Sec. 5]).

Given the coefficient matrix \(A\) of an instance, we formally characterize a (block structured) decomposition by a partition of the rows \(D^r = (D^r_1,\ldots ,D^r_k, L^r)\) of \(A\) and a partition of the columns \(D^c = (D^c_1,\ldots ,D^c_k, L^c)\) of \(A\), such that for every entry \(a_{i,j}\not =0\) of \(A\) with \(i\in D^r_{k_1}\) and \(j \in D^c_{k_2}\) it holds that \(k_1 = k_2\). We say that such a decomposition has k blocks. The number k of blocks (normalized by the average number of blocks over all instances), the vector statistics (except the standard deviation) of the numbers of rows and columns per block, respectively, and the so-called area score give rise to ten features related to block-structured decompositions that were derived from the trivially presolved instances using the detection capabilities of GCG 3.0.

The area score for a decomposition \(D= (D^r,D^c)\) of \(A\) is defined as

which intuitively measures the fraction of the matrix that is “white” in Fig. 2b. A variant of this score is used by GCG 3.0 to select a decomposition in the case that several, different ones are found (which is typically the case). An area score closer to 1.0 is better. A very low area score indicates that the model does not contain any substructures that are easily identifiable by GCG 3.0. This does not imply that there is no such structure, but rather that it is not obvious.

As computing decompositions can be very time consuming, in particular for huge instances, GCG was run in a minimal detection configuration. In this configuration, GCG first groups the constraints of \(A\) according to (a) their type in SCIP, (b) their MIPLIB type (see Table 4), and (c) their number of nonzeros. For each respective grouping, the smallest cardinality groups of constraints are joined until a specified (small) number of groups remains. From this collection, each subset is potentially selected as the set \(L^r\) of linking constraints of the decomposition. The so-called connected finisher assigns all remaining rows to as many blocks as possible. It is possible that this last step identifies a single block only. In this case the area score of the resulting decomposition is 0.0. From the pool of decompositions that are constructed this way, one with maximum area score is selected for computing the decomposition features of the instance.

4.6 Acquisition of performance data

The selection of the complete MIPLIB 2017 collection (see Sects. 5.2–5.4) was mainly driven by the feature data described above. The selection of the benchmark set (see Sects. 5.1, 5.5, and 5.6), however, took into account information about the computational and numerical difficulty of the instances. In order to quantify these empirically, we collected performance data using current solver software.

Every submitted instance was processed with each of the eight solvers listed in Table 6 to collect performance data. The experiments were performed on two Linux clusters. The first one consisted of 32 nodes, each equipped with two 3.20 GHz 4-core Intel Xeon X5672 CPUs and 48 GB RAM, the second consisted of 16 nodes, each equipped with two 2.50 GHz 10-core Intel Xeon E5-2670 v2 CPUs and 64 GB RAM. Two such jobs were run in parallel on the same cluster node, each job using a time limit of 4 h and 4 threads, except for SCIP and MATLAB,Footnote 6 which are both single-threaded. In total, this performance evaluation required almost 40 CPU years.

Figure 3a shows for every possible cardinality \(k = 0,1,\dots ,8\) the number of instances solved by exactly k solvers. 1969 of the 5721 instances (34%) were not solved by any solver within 4 h. An instance was considered solved if there were no inconsistencies between solvers and the solution was verified to be feasible (see Sect. 4.7). There were 1155 instances (20%) that could be solved by all eight solvers.

To summarize the results of the experiments, we report here the performance measures for virtual solvers, as described in the introduction. For each instance, a virtual solver is a summary of all tested solvers by means of an aggregation function such as \(\min \), \(\max \), and median, resulting in the best, worst, and median virtual solvers, respectively. The term “virtual” is used to distinguish the presentation from the best (fastest) or worst (slowest) actual solver over the complete set of instances. The performance measures collected are the time to optimality and the number of branch-and-bound nodes processed.

Figure 3b compares the fraction of instances solved by the virtual best and worst solvers. A large discrepancy between the curves can be observed. The virtual best solver finished on about 20%, 40%, and 60% of the submissions within 1 s, 1 min, and 1 h, respectively. The virtual worst solver required more than a second for any instance, and solved only 20.2% of the instances within the time limit of 4 h. The virtual best solver solved more instances in 2 s than the virtual worst solver was able to solve in 4 h. Note that all eight tested solvers contributed to the performance of the virtual best solver, i.e., each solver was the fastest on at least one instance.

Figure 3c summarizes data about the number of branch-and-bound nodes processed. As expected, the number of branch-and-bound nodes varies significantly between instances, but also between solvers on individual instances. In Fig. 3c, the minimum and maximum number of explored nodes are shown. In this figure, we consider only runs that completed sucessfully. Note that there are differences in how solvers report the required number of branch-and-bound nodes. Concretely, the solution of an instance during presolving may be reported as 0 or 1 nodes depending on the solver used. We therefore normalize the node results so they are always at least 1, i.e., we consider presolving as part of the root node solution process. This is also justified because we only consider instances that could not be solved completely by trivial presolving. At the left end of the scale are the instances that could be solved within 1 node. This group amounts to 1507 instances, which corresponds to 40% of the instances that could be solved at all and 26% overall. For more than 50% of the solved instances, the solution process required less than 1000 nodes. The maximum number of explored nodes is considerably larger. Less than 25% of the considered instances were solved within 1000 nodes by all solvers that finished within the time limit. Note that for all 385 records (see Fig. 3a) for which only one solver finished within the time limit, the minimum and maximum number of explored nodes coincide.

4.7 Consistency check of solver results

In order to identify numerically challenging instances and incorrect answers returned by the solvers, the results of the performance runs were independently verified in two different ways.

First, the feasibility of every primal solution reported at the end of a solution process was checked. To accomplish this step, a solution checker, whose purpose is to validate feasibility of the solutions computed by a given MIP solver against the original model, has been bundled with the MIPLIB scripts since MIPLIB 2010. The checker tries to recognize incorrect results, while at the same time taking into account that most MIP solvers use floating-point arithmetic, and thus exact feasibility cannot be expected. The overall structure of the solution checker has been largely unchanged since MIPLIB 2010. It is important to emphasize that it only checks feasibility of a given solution, it cannot check optimality in general. The solution vector returned by the solver is verified against the original instance in MPS format, with all computations performed using the arbitrary precision arithmetic package GMP [24]. Feasibility of linear constraints, integrality and the objective value are all verified according to given tolerances. We refer to the MIPLIB 2010 paper [32] for more details on the solution checker design and limitations.

For MIPLIB 2017, we updated the solution checker such that it uses a more flexible (and forgiving) definition of violation of a linear constraint. The previous version of the checker used an absolute tolerance of \(\varepsilon = 10^{-4}\), so that when given a linear constraint \(a_{i}^\top \! x \le u^A_{i}\) and a solution \(x^*\), it would have considered the constraint satisfied if and only if

However, this turns out to be too strict if the linear constraint has coefficients with a large absolute value. At the same time, switching to a purely relative tolerance was not considered a viable option, as it can lead to a too small effective tolerance when tiny coefficients are involved. So, in the new version we introduced a different definition of violation, that tries to combine the strengths of absolute and relative tolerances, and also to possibly cope with cancellation effects when evaluating the variable part (activity) of the constraint. In particular, we split the value \(a_{i}^\top \! x^*\) into its positive and negative parts as \(a_{i}^\top \! x^* = (a_{i}^\top \! x^*)_+ - (a_{i}^\top \! x^*)_-\) and consider the linear constraint satisfied if and only if

Given the relaxed definition of violation, it was decided to use the stricter value \(\varepsilon = 10^{-5}\) as tolerance. The very same logic is applied when checking variable bounds, and when checking the objective value \(c^*\) reported by the solver—we just treat it as the linear constraint \(c^{\top \!} x^* = c^*\). Note that integrality is still checked with a purely absolute tolerance of \(10^{-4}\). Finally, the solution checker was extended to support indicator constraints.

Following the feasibility check, which can be done independently for each solver and solved instance, the results were compared between solvers to identify discrepancies in the optimal values reported or inconsistencies in primal and dual bounds. We used the publicly available tool IPET [27] to parse the solver log files and validate the results. We considered results inconsistent when solver A reported a verified, feasible solution with value \(c^{*}\), while solver B timed out reporting a dual (lower) bound that was higher than \(c^{*}\). This included the special case that an instance had been reported infeasible by solver B. For example, on the instance bc1, seven of eight solvers agreed on an optimal value of 3.338 after exploring search trees with 3k–20k nodes. The eighth solver, however, reported a solution of value 3.418 as optimal after 720 nodes. The eighth solver cut off the optimal solution. Note that while such a behavior can be caused by a bug in the solver, it is also possible that different optimal values can be “correctly” obtained when different tolerances are used. Since all MIP solvers rely on floating-point arithmetics and use feasibility tolerances, the definition of “the optimal objective value” for a problem instance is ambiguous. In particular, for numerically challenging problems, a solver might return a different optimal objective value as a result of applying slightly stricter tolerances within the algorithm. Instances exhibiting such ambiguity are not suitable for benchmarking, since handling this numerical ambiguity can be done in different ways, requiring different amounts of computational effort. This leads to difficulties in comparison. Therefore, we disregarded all instances with such inconsistencies during the selection of the benchmark set (see Sect. 5.6), unless the inconsistency was obviously caused by a bug in one solver; 328 instances (5%) were removed for this reason.

5 Selection methodology

Due to the vast number of collected instances and the stark overrepresentation of some problem classes and instance types, it was crucial to reduce the submitted instances to a carefully chosen selection that provides both researchers and practitioners with a meaningful basis for experimental comparison. MIPLIB 2017 provides two main instance sets, namely the benchmark set and the collection. In the following, we discuss the actual selection process and the obtained result.

We approached this task in reverse order by first selecting the larger MIPLIB 2017 collection from the submitted instances, and then choosing the MIPLIB 2017 benchmark set as a subset of the collection. An overarching goal for both the collection and benchmark sets was to provide good coverage of the feature space of all submissions while maintaining balance. Note that we thus explicitly avoid allowing the distribution of instance properties observed in the set of submitted instances to affect the distribution in the final collection, since it is to be expected that the set of submitted instances would be highly unbalanced in its instance feature representation, if for no other reason than that some submissions contained many more related instances than others. A second overarching goal was to choose a collection as large as possible, in order to obtain a rich and diverse test set, but without sacrificing balance. As explained above, the benchmark selection step required additional restrictions. With respect to the benchmark set, the goal was to choose a large set of instances, but with a bias towards instances that are currently hard for all solvers and keeping in mind that it should be possible to perform benchmarking in a “reasonable” amount of time.

It seems quite natural to formulate the selection task as an optimization problem. In fact, we approach the generation of MIPLIB 2017 with a sequence of optimization problems: a set of diversity preselection MIP s, the collection MIP, and finally, the benchmark MIP. After an initial cleanup, large model groups (see Sect. 3.4) are cut down to a handful of diverse instances by the application of the diversity preselection model described in Sect. 5.2. The main purpose of the first selection procedure was to avoid overrepresentation of instance types from large and very homogeneous submissions that do not add to the diversity of the instance library. Sections 5.3 and 5.5 introduce the clustering procedures to partition the instances based on instance features and performance data, respectively. Sections 5.4 and 5.6 describe the mixed integer optimization models used to compute the MIPLIB 2017 collection and benchmark sets. Although the selection of the benchmark set is only the final step of the process, the initial reduction steps must be aware of which instances are judged to be suitable for the benchmark set. Otherwise, too many benchmark-suitable instances might be excluded initially for selecting a good benchmark set later. Hence, we start in Sect. 5.1 by giving our definition of benchmark suitability.

Note that before the selection steps outlined here, the submission pool of 5721 instances was already reduced to 5666 instances by the removal of LPs (no discrete variables) and instances that are empty after a trivial presolving (see Sect. 4.1). Furthermore, we removed pairs of duplicate instances identified during the selection process.

5.1 Benchmark suitability

The following definition characterizes the requirements for an instance to be in the benchmark set of MIPLIB 2017. It is important to point out that because we allow infeasible instances, successful “solution” of an instance for the purposes of this definition means that the solver either produced a (claimed) optimal solution or a (claimed) proof of infeasibility.

Definition 2

(Benchmark-suitable instance) We call an instance \(i \in {\mathscr {I}}\) benchmark-suitable if

-

(\({\mathscr {B}}\).1) it can be solved by at least one considered solver within 4 h;

-

(\({\mathscr {B}}\).2) it requires at least 10 s with 50% of the solvers;

-

(\({\mathscr {B}}\).3) it has a constraint and objective dynamism of at most \(10^{6}\) (see Sect. 4.3);

-

(\({\mathscr {B}}\).4) the absolute value of each matrix coefficient is smaller than \(10^{10}\);

-

(\({\mathscr {B}}\).5) the results of all solvers on i are consistent (see Sect. 4.7);

-

(\({\mathscr {B}}\).6) it has no indicator constraints (see Sect. 4.4);

-

(\({\mathscr {B}}\).7) it is either infeasible or has a finite optimum;

-

(\({\mathscr {B}}\).8) it is either infeasible, or its (objective) solution value is smaller than \(10^{10}\);

-

(\({\mathscr {B}}\).9) it has at most \(10^{6}\) nonzero entries.

The subset of benchmark-suitable instances from the ground set \({\mathscr {I}}^{\text {sub}}\) is denoted by \({\mathscr {B}}\).

(\({\mathscr {B}}\).2) eliminates instances from the benchmark selection that are too easy. Conversely, (\({\mathscr {B}}\).1) ensures that benchmark instances can be solved by at least one solver, as already done for MIPLIB 2010. This avoids the situation of MIPLIB 2003, for which four instances still remain unsolved 15 years after the release of the test set. The criteria (\({\mathscr {B}}\).3), (\({\mathscr {B}}\).4), (\({\mathscr {B}}\).5), (\({\mathscr {B}}\).8) ensure that the benchmark set does not contain numerically difficult instances for which results may be ambiguous. Furthermore, benchmark instances should not contain special constructs that are not supported by all solvers. As noted in Sect. 4.4, the only special constraint type in the submissions are constraints of indicator type, which are excluded from the benchmark set via (\({\mathscr {B}}\).6). (\({\mathscr {B}}\).7) excludes feasible instances that do not have a finite optimal value from the benchmark set for two reasons. First, a feasible, rational MIP has a finite optimal value if and only if its LP relaxation has a finite optimal value, rendering detection of this property more a continuous than a discrete problem. Second, there is currently no clear consensus on the expected behavior and output of MIP solvers in the case of a feasible MIP without a finite optimum. Note that in contrast, infeasible instances are deliberately not excluded. Finally, (\({\mathscr {B}}\).9) reduces the hardware requirements to perform tests with the benchmark set.

Table 7 lists for each criterion the number of excluded instances. Note that an instance may be excluded for several reasons. In total, 3407 instances were labeled as not benchmark-suitable, the majority of them because no solver solved them within the time limit of 4 h.

The larger MIPLIB 2017 collection covers a broader range of MIP instances. It includes at least one instance from each submitter, a constraint that cannot be enforced for the benchmark set due to runtime considerations. It may contain instances that are considered too easy or too hard for the benchmark set. It may contain instances with more dubious numerics suited for testing the robustness of solvers in general and techniques that explicitly aim at increasing numerical robustness. It may contain unbounded instances and instances with indicator constraints. It may contain up to five instances from each model group.

5.2 Diversity preselection

As with previous editions of MIPLIB, the number of instances varies significantly between different submissions and, more importantly, also between the model groups described in Sect. 3.4. While some model groups contain a single MIP instance that represents an optimization problem on a specific data set, other model groups contain hundreds of instances using the same underlying model for different data. Hence, for larger model groups, we preselect a diverse subset of instances as follows.

Let \({\mathscr {I}}= {\mathscr {I}}^{\text {sub}}\) denote the index set of submitted instances and let \({\mathscr {B}}\subseteq {\mathscr {I}}^{\text {sub}}\) be the subset of benchmark-suitable instances according to Definition 2. The choice of a subset of instances can be naturally encoded using a vector of binary variables \(x_i\) equal to one if and only if instance \(i \in {\mathscr {I}}\) is selected. For two instances \(i,j\in {\mathscr {I}}\), \(d_{i,j}\) denotes the Euclidean distance of their feature vectors. Then for a given model group \({\mathscr {G}}\subset {\mathscr {I}}\) of instances and specified \(\kappa \in {\mathbb {N}}\), we wish to choose \(\kappa \) instances maximally diverse in the sense that the minimum distance between two selected instances becomes maximal. If the model group contains benchmark-suitable instances, at least one of these should be included in the preselection. Such a preselection can be performed by solving the mixed binary optimization problem

An instance \(i \in {\mathscr {G}}\) is preselected if and only if the corresponding binary variable \(x_{i}\) equals one. The value \({\bar{d}}:= \max \{ d_{i,j} : i,j \in {\mathscr {G}}\}\) acts as big-M in Constraint (1b). In order to solve this optimization problem with a MIP solver, the bilinear product \(x_i x_j\) in (1b) must be linearized by replacing it with an auxiliary binary variable \(w_{i,j}\) under the additional constraints \(w_{i,j} \le x_i\), \(w_{i,j} \le x_j\), and \(w_{i,j} \ge x_i + x_j - 1\) for all pairs \(i \ne j \in {\mathscr {G}}\).

This preselection was performed for each model group exceeding five instances. The value of \(\kappa \) used was \(\kappa _{{\mathscr {G}}} = 5\) for groups with \(6 \le |{\mathscr {G}}| \le 10\) and \(\kappa _{{\mathscr {G}}} = 10\) for larger groups. The number of variables and constraints of the preselection model depends on the size of the corresponding model group. For the largest model group “cmflsp”, which comprises 360 instances of a capacitated multi-family lot-sizing problem, the diversity preselection instance has 129,242 rows, 64,981 columns, and 323,485 nonzeros. Except for this largest model group, which required approx. 800 s, all preselection problems could be solved to optimality within a time limit of 500 s using Gurobi 7.5.1.

As an example, Fig. 4 depicts the results of the preselection procedure for the model group “drayage”, which consists of 165 instances in total. The plot shows the instances from this group three times. The x and y-coordinates are computed using t-SNE [50], a technique to embed points of the high-dimensional feature space in 2D based on their distances. The leftmost plot highlights the optimal solution of the corresponding diversity preselection instance. Visually, the selected solution for this group is scattered evenly across the feature space of this group. The middle and right plot show the instances from this group that are selected for the collection and benchmark set, respectively, for which stricter cardinality restrictions on model groups apply. Despite those cardinality restrictions, the corresponding selections appear evenly spread across the ground set. A look at the performance results, which are taken into account for the selection of the benchmark set, reveals that the two selected instances also vary substantially regarding solution time. The easier of the two instances, namely drayage-100-23 could be solved by seven solvers with a median running time of 40 s. The harder instance drayage-25-23, on the other hand, could only be solved by three solvers and hence has a median running time of 4 h. The three other instances that are part of the collection lie in between. In total, diversity preselection reduces the instance set from 5666 to 2182 instances.

The preselected instances form a reduced index set \({\mathscr {I}}^{\text {pre}}\), which serves as input for the selection of the MIPLIB 2017 collection. Although the following selection procedure could have been applied to the entire set of submissions, we noticed several benefits of preselection empirically. It improves the results of the \(k\)-means clustering heuristic described in Sect. 5.3, reduces the size and difficulty of the selection MIP s to be solved, and finally leads to a larger collection and benchmark set.

5.3 Preparing multiple clusterings

One major challenge in selecting a test set is how to navigate the trade-off of good coverage of all observed instance properties against a balanced selection that avoids overrepresentation. The first goal is best achieved by simply selecting all suitable instances, while balancedness explicitly asks for removing instances from overrepresented problem classes.

A straightforward method would be to compute one clustering according to the entire feature matrix and pick instances uniformly from each cluster. When applied in a high-dimensional feature space, as in our setting, this naïve approach suffers from several problems well-known in data analysis, such as the curse of dimensionality [5] and the relative scaling of numerical features. The first term refers to the fact that with increasing dimensionality of the feature space, the difference of distances of one point to its nearest and to its farthest neighbor becomes smaller in relative terms. Hence, similar instances cannot be identified reliably. Conversely, depending on scaling, the distance with respect to one crucial feature may be dominated by less useful features, such that different instances cannot be distinguished reliably. Arguably, the same problem holds true everywhere where we use Euclidean distances in the selection process, for example during the diversity preselection in the previous section. An important difference between this section and the preselection is that for preselection, we only considered one model group (with many instances) at a time so that we already knew that all instances were similar to each other. In this and the following sections, however, this model group association is no longer used for the clustering, as we now incorporate also instances with no known model group association.

We therefore counteract the problems of a high-dimensional feature space by using multiple clusterings of the entire preselected instance set \({\mathscr {I}}^{\text {pre}}\) according to disjoint groups of features. Subsequently, we select instances such that they are balanced with respect to each of these clusterings. This selection process is more complex and cannot be achieved by simply picking uniformly from each cluster of each clustering. Instead, we formulate a mixed integer optimization problem with approximate balancing constraints for each of the multiple clusterings.

Formally, for a given index set \({\mathscr {I}}\) of instances we have \(K\) different clusterings, i.e.,

for \(k \in {\mathscr {K}}= \{ 1,\ldots ,K\}\), with disjoint \({\mathscr {C}}_{k,1} ,\dots , {\mathscr {C}}_{k,L_{k}}\) being a partition of the index set \({\mathscr {I}}\) for every k. The number of clusters \(L_k\) is allowed to vary, since different subsets of features may require a different number of clusters to achieve a high-quality clustering. We denote the index set of all clusters by \({\mathfrak {C}}:= \{ (k,\ell ) : k = 1,\ldots ,K, \ell = 1,\ldots ,L_k \}\). Furthermore, the cluster sizes contain outliers, which need special treatment in order to avoid limiting the size of the resulting test set too much. Hence, we partition the set of clusters into small, medium (regular-sized), and large clusters and denote the respective index sets by \({\mathfrak {S}}\), \({\mathfrak {M}}\), and \({\mathfrak {L}}\subseteq {\mathfrak {C}}\), as follows. A cluster \({\mathscr {C}}_{k,l}\) is denoted small if its size is less than half the average size of \({\mathscr {C}}_{k,1},\dots ,{\mathscr {C}}_{k,L_{k}}\). On the other hand, a cluster is treated as large if it is displayed as an outlier in a typical boxplot. Concretely, \({\mathscr {C}}_{k,l}\) is considered large if its size exceeds the 75% quantile among \({\mathscr {C}}_{k,1},\dots , {\mathscr {C}}_{k,L_{k}}\) by more than 1.5 interquartiles.

For the selection of the MIPLIB 2017 collection, we use one clustering for each of the \(K=11\) groups of instance features listed in Table 4. The clusterings of all preselected instances (2182 instances) are computed using a \(k\)-means heuristic [26], which yields a first family of clusterings denoted by \({\mathscr {K}}_1 = \{1,\ldots ,11\}\).

Table 8 gives insight into the result of this clustering process. It shows the total number of clusters (value of \(L_{k}\)) for each feature group clustering. The quality column describes the percentage of the total feature group distance between clusters. More formally, for a clustering \(k \in \{1,\dots ,K\}\) of instances, the quality of this clustering is

The value range of the above fraction is the interval [0, 1] such that the quality lies between 0 and 100%. A clustering has a high quality if long distances between instances are between different clusters. Note that for the distance computation for the quality measure, only features contained in the corresponding feature group were considered. The table shows that the quality of the clustering was at least 87% and often significantly above 90%, yielding an average quality of 92%. The individual value of \(L_{k}\) has been manually selected for every feature group as the minimum integer L that admits a clustering with at least 90% quality over the set \({\mathscr {I}}^{\text {sub}}\) (5666 instances) unless the targeted quality was not achievable using a reasonable amount of clusters. In addition to the number of clusters \(L_{k}\), the table presents the number of small and large clusters, which are constrained less strictly than the medium clusters, see Constraints (4a) and (4b) below.

The last two columns report the minimum and maximum total dissimilarity per feature group. For each cluster \((k,\ell ) \in {\mathfrak {C}}\), its total dissimilarity \(\delta _{k,\ell } \ge 1\) is defined as the shifted geometric mean of the pairwise Euclidean distances \(\{d_{i,j}\,:\,i < j\in {\mathscr {C}}_{k,\ell }\}\) between its instances, using a shift of 1. Here, distances are computed with respect to the entire feature space, in contrast to the above cluster quality computation. Because of the shift by 1, the smallest possible value of \(\delta _{k,\ell }\) is 1, which only occurs for clusters containing exactly one element, or for clusters that contain only instances which are indistinguishable in the feature space. All cluster parameters from Table 8 enter the selection constraints described in the next section.

For the selection of the benchmark set, we additionally use performance data to hand-craft three clusterings for each of the eight participating solvers, which yields additional clusterings \({\mathscr {K}}_2 = \{12,\ldots ,35\}\), see Sect. 5.5 below.

5.4 Selection of the MIPLIB 2017 Collection

In the following, we describe linear formulations to enforce the requirements specified by the committee. At this stage, the instance set was limited to the instances \({\mathscr {I}}= {\mathscr {I}}^{\text {pre}}\) left after the diversity preselection procedure. The set of clusterings \({\mathscr {K}}= {\mathscr {K}}_1\) was the one determined using the instance feature groups from Table 4.

To express balancedness, consider one clustering \({\mathscr {I}}= {\mathscr {C}}_{k,1} \cup \dots \cup {\mathscr {C}}_{k,L_k}\). Naïvely, we would like to pick the same number of instances from each cluster, i.e., \(\sum _{i \in {\mathscr {C}}_{k,\ell }} x_i \approx y_k\) for an auxiliary variable \(y_k \ge 0\). However, enforcing this for all clusterings is highly restrictive. Furthermore, while the instances in each of the clusters \({\mathscr {C}}_{k,1}, \dots , {\mathscr {C}}_{k,L_k}\) should be homogeneous with respect to the features that were used to compute clustering k, they may be heterogeneous with respect to the entire feature vector. This interaction between different clusterings must be taken into account.

To achieve this, we consider the total dissimilarity of the clusters that was introduced above. Arguably, from clusters with higher total dissimilarity, more instances should be picked, i.e., \(\sum _{i \in {\mathscr {C}}_{k,\ell }} x_i \approx \delta _{k,\ell } y_k\). Introducing a tolerance parameter \(\varepsilon \), \(0< \varepsilon < 1\), we arrive at the balancedness constraints

Concretely, we used \(\varepsilon = 0.5\) for the selection of the collection and the benchmark set. In practice, we discard the left inequality for small clusters and the right inequality for large clusters and use

Additionally, if two instances have identical feature vectors, then at most one of them should be chosen , i.e.,

At most five instances should be selected from each model group. If the model group contains benchmark-suitable instances, at least one of those should be included into the MIPLIB 2017 collection. Let \({\mathscr {I}}= {\mathscr {G}}_1 \cup \ldots \cup {\mathscr {G}}_P\) denote the partition of instances into different model groups, then this condition reads

Furthermore, from each submitter at least one instance should be selected, i.e.,

where \({\mathscr {I}}= {\mathscr {S}}_1 \cup \cdots \cup {\mathscr {S}}_S\) denotes the partition of instances with respect to S submitters. Finally, we imposed relative limits on a small number of specific subsets of instances, \({\mathscr {R}}_r \subset {\mathscr {I}}\), by requiring

The concrete values for \(\rho _r\), for both the collection MIP and the benchmark MIP described in Sect. 5.6, are given in Table 9. The numbers in parentheses show the size of respective ground sets \({\mathscr {I}}^{\text {pre}}\) and \({\mathscr {I}}^{\text {col}}\cap {\mathscr {B}}\), from which instances were selected. The number of instances from the NEOS server was limited by the committee because of the lack of reliable information on their application and model background. Purely binary problems (BP) were limited because they often represent academic applications such as combinatorial puzzles, but less often occur in industrial, “real-world” instances. The limit ensures that enough actual mixed integer instances are selected for the collection and benchmark sets. For the groups in Table 9 that refer to instance features, the features are always evaluated after trivial presolving.

Subject to those constraints, our objective was to include as many instances as possible, preferring benchmark-suitable instances. Hence, we formulate the collection MIP as the mixed binary optimization problem

with objective cofficients \(\beta \) to prefer benchmark-suitable instances,

We solved the collection MIP over the ground set \({\mathscr {I}}^{\text {pre}}\) of 2182 instances (after diversity preselection). Despite our efforts to remove obvious duplicates, there remained 48 pairs of instances in \({\mathscr {I}}^{\text {pre}}\) with a feature distance of zero. From each such pair, at most one instance was selected for \({\mathscr {I}}^{\text {col}}\) because of Constraint (4c). We obtained the MIPLIB 2017 collection \({\mathscr {I}}^{\text {col}}\), which comprises 1,065 instances, 499 of which are benchmark-suitable.