Abstract

Robot Learning from Demonstration (RLfD) seeks to enable lay users to encode desired robot behaviors as autonomous controllers. Current work uses a human’s demonstration of the target task to initialize the robot’s policy, and then improves its performance either through practice (with a known reward function), or additional human interaction. In this article, we focus on the initialization step and consider what can be learned when the humans do not provide successful examples. We develop probabilistic approaches that avoid reproducing observed failures while leveraging the variance across multiple attempts to drive exploration. Our experiments indicate that failure data do contain information that can be used to discover successful means to accomplish tasks. However, in higher dimensions, additional information from the user will most likely be necessary to enable efficient failure-based learning.

Notes

In situations where success is more common, such as in Fig. 9 (right), all approaches fared roughly equally.

References

Abbeel P, Coates A, Quigley M, Ng AY (2006) An application of reinforcement learning to aerobatic helicopter flight. In: Neural inf proc systems

Argall BD, Chernova S, Veloso M, Browning B (2009) A survey of robot learning from demonstration. Robot Auton Syst 57(5):469–483

Billard A, Calinon S, Dillmann R, Schaal S (2008) Survey: robot programming by demonstration. Handbook of robotics. MIT Press, Cambridge

Chernova S, Veloso M (2007) Confidence-based policy learning from demonstration using Gaussian mixture models. In: Intl joint conf on autonomous agents and multi-agent systems

Dayan P, Hinton G (1997) Using expectation-maximization for reinforcement learning. Neural Comput 9(2):271–278

Deisenroth MP, Rasmussen CE (2011) Pilco: a model-based and data-efficient approach to policy search. In: Intl conf on machine learning

Dong S, Williams B (2011) Motion learning in variable environments using probabilistic flow tubes. In: Intl conf on robotics and automation

Gams A, Do M, Ude A, Asfour T, Dillmann R (2010) On-line periodic movement and force-profile learning for adaptation to new surfaces. In: Intl conf on humanoid robots

Grimes DB, Chalodhorn R, Rao RPN (2006) Dynamic imitation in a humanoid robot through nonparametric probabilistic inference. In: Robotics: science and systems

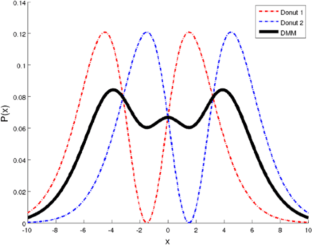

Grollman DH, Billard A (2011) Donut as I do: Learning from failed demonstrations. In: Intl conf on robotics and automation

Grollman DH, Jenkins OC (2007) Dogged learning for robots. In: Intl conf on robotics and automation

Hersch M, Guenter F, Calinon S, Billard A (2008) Dynamical system modulation for robot learning via kineshetic demonstrations. Trans Robot, 1463–1467

Hu X, Xu L (2004) Investigation on several model selection criteria for determining the number of cluster. Neural Inf Process - Lett Rev 4(1):1–10

Kober J, Peters J (2010) Policy search for motor primitives in robotics. Mach Learn 84(1–2):171–203

Kober J, Mohler B, Peters J (2008) Learning perceptual coupling for motor primitives. In: Intl conf on intelligent robots and systems

Kronander K, Khansari Zadeh SM, Billard A (2011) Learning to control planar hitting motions in a monigolf-like task. In: Intl conf on intelligent robots and systems

Kuniyoshi Y, Inaba M, Inoue H (1994) Learning by watching: Extracting reusable task knowledge from visual observation of human performance. IEEE Trans Robot Autom 10(6):799–822

Meltzoff AN (1995) Understanding the intentions of others: re-enactment of intended acts by 18-month-old children. Dev Psychol 31(5):838–850

Mtsui T, Inuzuka N, Seki H (2002) Adapting to subsequent changes of environment by learning policy preconditions. Int J Comput Inf Sci 3(1):49–58

Neal R, Hinton GE (1998) A view of the EM algorithm that justifies incremental, sparse, and other variants. In: Learning in graphical models

Pastor P, Kalakrishnan M, Chitta S, Theodorou E, Schaal S (2011) Skill learning and task outcome prediction for manipulation. In: Intl conf on robotics and automation

Ramachandran D, Amir E (2007) Bayesian inverse reinforcement learning. In: Intl joint conf on artificial intelligence

Sung HG (2004) Gaussian mixture regression and classification. PhD thesis, Rice

Thomaz AL, Breazeal C (2008) Experiments in socially guided exploration: lessons learned in building robots that learn with and without human teachers. Connect Sci 20(2–3):91–110

Want SC, Harris PL (2001) Learning from other people’s mistakes: causal understanding in learning to use a tool. Child Dev 72(2):41–443

Acknowledgements

This work was supported by the European Commission under contract number FP7-248258 (First-MM).

About this article

Cite this article

Grollman, D.H., Billard, A.G. Robot Learning from Failed Demonstrations. Int J of Soc Robotics 4, 331–342 (2012). https://doi.org/10.1007/s12369-012-0161-z

DOI: https://doi.org/10.1007/s12369-012-0161-z