Abstract

Purpose of Review

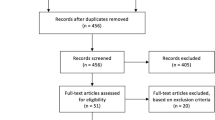

Augmented reality (AR) is becoming increasingly popular in modern-day medicine, and computer-driven tools are progressively being integrated into clinical and surgical procedures. The purpose of this review was to provide a comprehensive overview of the current technology and its challenges based on recent literature, focusing mainly on clinical, cadaver, and innovative sawbone studies in orthopedic surgery. The most relevant literature was selected according to clinical and innovative relevance and is summarized here.

Recent Findings

Augmented reality applications in orthopedic surgery are increasingly being reported. In this review, we summarize the basic principles of AR, including data preparation, visualization, and registration/tracking, and present recently published clinical applications in spine surgery, osteotomies, arthroplasty, trauma, and orthopedic oncology. Higher accuracy of surgical execution, reduced radiation exposure, and decreased surgery time are the major findings reported in the literature.

Summary

In light of the tremendous technological progress in modern-day medicine and the growing number of research groups working on the implementation of AR in routine clinical procedures, we expect AR technology to be implemented soon as a standard tool in orthopedic surgery.


Introduction

Technologic advances have significantly influenced the way orthopedic surgery is practiced today and have paved the way for recent innovations in surgical procedures. Complex surgical interventions, such as those needed for the treatment of musculoskeletal tumors, fracture reduction, corrective osteotomies, or instrumentation adjacent to critical anatomic structures, require precise preoperative planning and accurate intraoperative execution. Computer-driven approaches have been established in many areas of orthopedic surgery to help the surgeon with preoperative planning and to improve surgical execution [1, 2]. Technological innovations implemented in orthopedic surgery include robotic surgery [3], 3D-printed patient-specific instrumentation (PSI) [1], navigation tools with tracking visualized on monitors [4], and the emerging technology of augmented reality (AR), which provides a human-computer interface [5]. Robotic surgery still has significant limitations that prevent broader clinical use: the devices are very expensive [6] and bulky, and their setup and maintenance are time-consuming and cost-intensive [7]. PSI has proven to be a feasible tool with excellent postoperative results [8, 9]. However, the design and manufacturing of patient-specific instruments can require up to several weeks of lead time. Another drawback is that accurate execution with PSI requires extensive bone exposure [10].

AR is defined as a system in which the real world is enhanced with virtual, computer-generated sensory impressions that appear to coexist in the same space as the real world [11]. These virtual impressions can be visual stimuli (e.g., holograms) or any other sensory information, such as sound. AR technology originated in the military sector [11, 12], has been widely used commercially in entertainment and gaming [13], and is now being promoted for use in orthopedic surgery [14]. Recently, the FDA (U.S. Food and Drug Administration) cleared the first AR applications for elective spinal surgery [15, 16].

Orthopedic surgical procedures require a vast amount of numeric and geometric information, such as angles of deformity [17], anatomic relations for instrumentation [18], vital parameters such as blood pressure for blood loss control [19, 20], and trajectory orientation for instrumentation and implant placement [21••]. In daily clinical practice, these parameters are analyzed preoperatively by the surgeon and made available in the operating room via printouts and/or digital imaging data on screens. Nevertheless, information is lost between preoperative planning and the execution of the procedure.

AR offers a promising way to improve the transfer and use of this information during surgery. Current AR approaches are mainly visual and use either monitors [22] or head-mounted displays (HMD) [23•].

Recent publications on the topic evaluate the potential benefits of this technology, especially with regard to radiation exposure of the patient and staff, procedure time in the operating room, and accuracy of surgical execution. This review provides a comprehensive overview of the current state of the technology and of recent AR research in orthopedic surgery (Table 1). Its aim is to explain the basic principles and current applications of AR to orthopedic surgeons without in-depth knowledge of computer science.

Basic Principles of AR

Data Preparation for Visualization of Radiologic Imaging and Navigation

In surgical navigation, the relevant information for the surgeon is typically extracted preoperatively from two-dimensional (2D) or three-dimensional (3D) radiologic imaging. The virtual model then consists of a computed 3D anatomical model together with the navigation data needed to execute critical surgical steps. Fürnstahl et al. [45••] described the process of data preparation and surgical planning for 3D surgical navigation of long bone deformities as follows.

A high-resolution CT scan of the pathologic bones is acquired with a slice thickness of 1 mm or less. The data are then imported into commercial image processing software such as Mimics (Mimics Medical, Version 19; Materialise, Leuven, Belgium). The anatomical region of interest is segmented from the soft tissue using density-based thresholding and region-growing functions. A 3D triangular surface model is generated from the segmented image using the Marching Cubes algorithm [46]. The 3D model is then imported into surgical planning software, where a computer-assisted surgical plan is elaborated by stepwise simulation of the surgery. Machine-learning approaches have shown good results in automating this process [47,48,49,50,51,52], but the gold standard for clinical-grade surgical planning remains manual planning performed by experts [53••].
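The thresholding and region-growing step can be illustrated with a minimal Python sketch. It is not how Mimics implements segmentation; it assumes a CT volume stored as a nested list of Hounsfield units (HU), a hypothetical bone threshold of 200 HU, and 6-connected region growing from a seed voxel placed in the bone of interest. Surface extraction with Marching Cubes would then run on the resulting mask and is omitted here.

```python
from collections import deque

# Hypothetical threshold (Hounsfield units) separating bone from soft tissue;
# in practice the value is chosen per scan by the operator.
BONE_HU_THRESHOLD = 200


def region_grow(volume, seed, threshold=BONE_HU_THRESHOLD):
    """Return the set of voxel indices (z, y, x) that are connected to `seed`
    through face neighbours (6-connectivity) and have intensity >= threshold."""
    nz, ny, nx = len(volume), len(volume[0]), len(volume[0][0])
    z0, y0, x0 = seed
    if volume[z0][y0][x0] < threshold:
        return set()  # the seed itself lies outside the thresholded bone
    region = {seed}
    queue = deque([seed])
    neighbours = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    while queue:
        z, y, x = queue.popleft()
        for dz, dy, dx in neighbours:
            n = (z + dz, y + dy, x + dx)
            if n in region:
                continue
            # Bounds check first, then the density (threshold) criterion.
            if 0 <= n[0] < nz and 0 <= n[1] < ny and 0 <= n[2] < nx:
                if volume[n[0]][n[1]][n[2]] >= threshold:
                    region.add(n)
                    queue.append(n)
    return region


# Toy 1 x 1 x 5 "CT" line: two bright bone regions separated by soft tissue (50 HU).
toy = [[[800, 900, 50, 700, 40]]]
print(sorted(region_grow(toy, (0, 0, 0))))  # [(0, 0, 0), (0, 0, 1)]
```

Thresholding alone would also keep the separate bright voxel at index 3; region growing from the seed keeps only the bone connected to it, which is why the two operations are typically combined to isolate one bone from its neighbours.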

Registration and Tracking

Registration is the process by which the computer-generated object to be visualized, which can be a radiologic image or a navigation aid, is superimposed onto the surgical site in the correct position and orientation. After registration, tracking keeps the visualized object in the correct position when the patient or the user moves, adapts the visualization to the user's position, and detects instruments together with their orientation and movement in three-dimensional space. Tracking requires the AR system to relate the visualization or instrument to its original registration within a common spatial coordinate system. For instruments, this is referred to as pose reconstruction. Low accuracy of registration and motion tracking is one of the main pitfalls of this technology for surgical use [21••].

The registration methods presented in this review are camera-augmented c-arm registration [22, 44, 54,55,56], marker-based registration [25, 28, 34, 38], and surface registration [21, 23]. Navab et al. [54••] first engineered the camera-augmented surgical c-arm and demonstrated its potential for augmented reality in the operating room. This system registers intraoperatively acquired X-rays to the camera image of the operative field in a 3D coordinate system shared with the AR head-mounted display (HMD), so that the X-rays can be visualized in real time [56].
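Registration and tracking can both be described as rigid transformations between coordinate systems. In the sketch below, an illustration under assumptions with hypothetical frame names and 4 × 4 homogeneous matrices, registration yields a fixed pose of the planned 3D model in a world (room) frame, tracking supplies the current HMD pose in each frame, and the pose to render in the HMD frame is their composition. This is why a world-anchored overlay stays in place on the situs while the user moves.

```python
import numpy as np


def rigid(rz_deg=0.0, t=(0.0, 0.0, 0.0)):
    """Build a 4x4 homogeneous transform: rotation about z (degrees) plus translation."""
    a = np.radians(rz_deg)
    T = np.eye(4)
    T[:3, :3] = [[np.cos(a), -np.sin(a), 0.0],
                 [np.sin(a), np.cos(a), 0.0],
                 [0.0, 0.0, 1.0]]
    T[:3, 3] = t
    return T


def invert_rigid(T):
    """Inverse of a rigid transform using R^T and -R^T t."""
    R, t = T[:3, :3], T[:3, 3]
    Ti = np.eye(4)
    Ti[:3, :3] = R.T
    Ti[:3, 3] = -R.T @ t
    return Ti


# Hypothetical registration result: pose of the planned model in the world frame (m).
T_world_model = rigid(30.0, (0.10, 0.20, 0.00))


def model_in_hmd(T_world_hmd):
    """Pose of the model in the HMD frame, recomputed at every tracking update."""
    return invert_rigid(T_world_hmd) @ T_world_model


# Two tracked HMD poses as the user walks around the table.
for T_world_hmd in (rigid(0.0, (0.0, 0.0, 1.5)), rigid(-45.0, (0.5, -0.3, 1.6))):
    T_hmd_model = model_in_hmd(T_world_hmd)
    # The rendered pose differs per head pose but maps back to the same world pose.
    print(np.round(T_hmd_model[:3, 3], 3), np.allclose(T_world_hmd @ T_hmd_model, T_world_model))
```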

Marker-based registration relates marker positions, localized in a 3D coordinate system, to the computer-generated augmentation, usually a 3D model of the anatomical region of interest. Its accuracy depends on the exact positioning of the markers [25, 34, 38, 57].
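Marker-based registration is commonly solved as a paired-point rigid registration: the marker positions known in the model (e.g., CT) frame are matched with the same markers localized by the tracking system, and the least-squares rotation and translation are computed. The sketch uses the SVD-based closed-form solution (Arun/Kabsch); it is a generic textbook method, not necessarily the one used in the cited studies, and the marker coordinates are hypothetical. The fiducial registration error (FRE) computed at the end describes only how well the markers themselves are aligned, not the error at the surgical target.

```python
import numpy as np


def paired_point_registration(model_pts, tracked_pts):
    """Least-squares rigid transform (R, t) with tracked ~= R @ model + t.
    SVD solution (Arun et al. / Kabsch) with a guard against reflections."""
    P = np.asarray(model_pts, dtype=float)
    Q = np.asarray(tracked_pts, dtype=float)
    p_mean, q_mean = P.mean(axis=0), Q.mean(axis=0)
    H = (P - p_mean).T @ (Q - q_mean)          # 3x3 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))     # -1 would indicate a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = q_mean - R @ p_mean
    return R, t


def fiducial_registration_error(model_pts, tracked_pts, R, t):
    """Root-mean-square distance between transformed model markers and tracked markers."""
    P = np.asarray(model_pts, dtype=float)
    Q = np.asarray(tracked_pts, dtype=float)
    residuals = P @ R.T + t - Q
    return float(np.sqrt((residuals ** 2).sum(axis=1).mean()))


# Hypothetical example: four non-coplanar markers (mm) in the CT/model frame.
markers_ct = np.array([[0, 0, 0], [50, 0, 0], [0, 40, 0], [0, 0, 30]], dtype=float)
angle = np.radians(20.0)
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle), np.cos(angle), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([100.0, -20.0, 5.0])
markers_tracked = markers_ct @ R_true.T + t_true   # simulated, noise-free tracking

R, t = paired_point_registration(markers_ct, markers_tracked)
print(fiducial_registration_error(markers_ct, markers_tracked, R, t))  # close to 0
```

With real, noisy marker localization the FRE is no longer zero, and misplaced or displaced markers bias the whole transform, which is consistent with the dependence on exact marker positioning noted above.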

Liebmann et al. [21••] first introduced intraoperative, radiation-free registration by surface digitization, using only an AR HMD and a pointing device to navigate pedicle screw instrumentation. Their approach registers a CT-based 3D preoperative plan by superimposing the 3D model onto the intraoperatively exposed bone surface. A marker-tracked pointing device is moved over the exposed bone surface to sample a point cloud intraoperatively. Once sufficient information has been collected, the unique surface pattern is recognized, and the 3D model, including the surgical plan, is superimposed on the surgical field using iterative closest point (ICP) registration [58].
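The iterative closest point step can be sketched as a loop that alternates between (1) matching each digitized point to its closest model point and (2) solving the paired-point problem for these matches. This is a generic point-to-point ICP under stated assumptions (model surface given as a point cloud, brute-force nearest neighbours, identity initial guess, synthetic data), not the implementation of Liebmann et al. Because ICP is a local method, it converges to the correct pose only from a reasonable initial alignment.

```python
import numpy as np


def best_rigid(P, Q):
    """Least-squares rigid transform (R, t) mapping points P onto paired points Q (SVD/Kabsch)."""
    p_mean, q_mean = P.mean(axis=0), Q.mean(axis=0)
    U, _, Vt = np.linalg.svd((P - p_mean).T @ (Q - q_mean))
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])  # reflection guard
    R = Vt.T @ D @ U.T
    return R, q_mean - R @ p_mean


def icp(digitized, model, iterations=50, tol=1e-10):
    """Point-to-point ICP: returns (R, t) such that R @ p + t moves the digitized
    points p onto the model point cloud. Starts from the identity transform."""
    src = np.asarray(digitized, dtype=float)
    dst = np.asarray(model, dtype=float)
    R_total, t_total = np.eye(3), np.zeros(3)
    prev_rms = np.inf
    for _ in range(iterations):
        moved = src @ R_total.T + t_total
        # 1) Correspondences: brute-force closest model point for each digitized point.
        d2 = ((moved[:, None, :] - dst[None, :, :]) ** 2).sum(axis=2)
        closest = dst[d2.argmin(axis=1)]
        rms = float(np.sqrt(d2.min(axis=1).mean()))
        if prev_rms - rms < tol:  # no further improvement: converged
            break
        prev_rms = rms
        # 2) Solve the paired-point problem and compose it with the current estimate.
        R_step, t_step = best_rigid(moved, closest)
        R_total, t_total = R_step @ R_total, R_step @ t_total + t_step
    return R_total, t_total


# Hypothetical "bone surface": points on a curved patch (mm).
xs = np.arange(-20.0, 21.0, 4.0)
model = np.array([[x, y, 0.02 * x * x + 0.01 * x * y] for x in xs for y in xs])
# Simulated digitization: a subset of the surface seen in a slightly different pose.
a = np.radians(2.0)
R_true = np.array([[np.cos(a), -np.sin(a), 0.0], [np.sin(a), np.cos(a), 0.0], [0.0, 0.0, 1.0]])
t_true = np.array([0.3, -0.2, 0.2])
surface_pts = model[::3]
digitized = (surface_pts - t_true) @ R_true   # inverse of x -> R_true @ x + t_true

R, t = icp(digitized, model)
print(np.abs(digitized @ R.T + t - surface_pts).max())  # close to 0
```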

Visualization

Within the field of human-computer interaction, augmented reality can be understood as a class of displays [59]. Artificial information and real-world images are fused using either optical or video see-through techniques [11]. There are three main approaches to implementing such displays: head-mounted displays (HMD), monitors, and projectors [11]. In terms of display weight, size, and resolution, HMDs are currently favored as a simple method of visualization. An HMD with a see-through display presents information directly in the user's field of view [60].

Regarding visualization, there are two main approaches. First, the visualization can be independent of any spatial relation; this is mainly useful for displaying additional information such as numeric data [61]. A more sophisticated approach displays the visualization relative to associated spatial positions, so that the image changes according to the position of the user. This requires position tracking of the HMD. If the device itself is capable of determining its spatial position by means of integrated sensors, it uses inside-out tracking [62]. In contrast, outside-in tracking uses an external camera system to detect and track the position of the AR device; its two disadvantages are the need for additional hardware and the possibility of occlusions [63].

Most commercial AR devices were originally developed for entertainment and gaming [13]. The commercially available hardware is therefore hardly appropriate for clinical use, since it was not designed for high-accuracy visualization. Accuracy depends mainly on two factors: registration and exact spatial localization for tracking. Liebmann et al. [64•] have pointed out the problem of low-fidelity tracking for navigation purposes, which results in drifting virtual models in orthopedic surgery. In 2019, Xvision (Augmedics, Arlington Heights, IL, USA), an HMD engineered for surgical use, was the first AR HMD to be cleared by the FDA for spinal surgery navigation [15•]. Since early 2020, the Microsoft HoloLens has been approved by Swissmedic for spinal surgery navigation in a Swiss first-in-man clinical study.

Clinical Applications of AR

Spine Surgery

The spinal cord, the emerging spinal nerves, and the accompanying vessels are prone to iatrogenic injury during instrumentation because of their close proximity to the bony structures of the spine. Misplacement of pedicle screws during spinal fusion surgery can result in neurological or vascular injury with severe long-term sequelae [22]. The majority of AR applications in spine surgery therefore address the navigation of pedicle screw instrumentation [15, 16, 21, 22, 25, 26, 28].

Yoon et al. [26] placed 40 pedicle screws in 10 consecutive patients using Google Glass (Foxconn, Google, Mountain View, CA, USA) as a head-mounted display (HMD). Cervical, thoracic, and lumbar pedicle screws were navigated with the Medtronic Stealth S7 (Medtronic Inc., Littleton, Massachusetts) image-guidance system based on radiologic imaging with the O-ARM (Medtronic Inc), and the navigation data were visualized on the HMD. The HMD offered voice control to select the information to be displayed. Registration, tracking, and navigation were performed by the Medtronic Stealth S7 system. This feasibility study found the use of an HMD during pedicle screw instrumentation to be safe, and no complications were reported.

Molina et al. [15•] placed Th6 to L5 pedicle screws in five male cadaver torsos using Xvision (Augmedics, Arlington Heights, IL, USA). The group navigated 120 pedicle screws and graded accuracy using the Gertzbein scale (GS) [65] and a combination of that scale with the Heary classification [66], referred to in their paper as the Heary-Gertzbein scale (HGS). The overall accuracy with the AR system was 96.7% based on the HGS and 94.6% based on the GS, which is similar to the accuracy reported for computer-navigated pedicle screw instrumentation. User experience, evaluated with the User Experience Questionnaire [67], was rated as excellent in terms of usability.

Elmi-Terander et al. [22] navigated 253 pedicle screws from Th1 to S1 in a clinical study of 20 patients. They used a modified version of the camera-augmented c-arm [55], graded accuracy using the GS, and achieved an overall accuracy of 94.1%. No screw was assessed as Gertzbein grade 3. Instrumentation time decreased as experience with the navigation system was gained: from an initial 17 min required for screw placement, the average instrumentation time dropped to 1.8 ± 0.9 min.
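The accuracy percentages in these studies are proportions of screws within an acceptable breach grade. The sketch assumes Gertzbein grading in 2-mm increments (grade 0: no cortical breach; grade 1: < 2 mm; grade 2: 2 to < 4 mm; grade 3: ≥ 4 mm) and counts grades 0 and 1 as clinically accurate. The exact cut-offs and the handling of boundary values vary between publications, and the breach measurements below are hypothetical.

```python
def gertzbein_grade(breach_mm):
    """Map a cortical breach distance (mm; 0 = screw fully within the pedicle)
    to a grade 0-3 using the assumed cut-offs 0 / <2 / <4 / >=4 mm."""
    if breach_mm <= 0:
        return 0
    if breach_mm < 2:
        return 1
    if breach_mm < 4:
        return 2
    return 3


def clinical_accuracy(breaches_mm, max_accepted_grade=1):
    """Percentage of screws whose grade does not exceed max_accepted_grade."""
    grades = [gertzbein_grade(b) for b in breaches_mm]
    accepted = sum(1 for g in grades if g <= max_accepted_grade)
    return 100.0 * accepted / len(grades)


# Hypothetical breach measurements for ten screws (mm).
breaches = [0, 0, 0, 1.2, 0, 2.5, 0, 0.8, 0, 0]
print(f"{clinical_accuracy(breaches):.1f}%")  # 90.0%
```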

The same research group performed a follow-up matched-control study [24] comparing 20 patients treated with AR-guided pedicle screw instrumentation with 20 matched patients treated free-hand, which confirmed most of their preliminary findings. The clinical accuracy of AR navigation was 93.9% and thus slightly higher than in the free-hand group (89.6%). The perforation rate with AR was only half that of free-hand placement. No significant difference in instrumentation time could be shown between the groups.

Liebmann et al. [68] developed a new registration method to superimpose a 3D model of the patient's vertebra together with planning information, including the pedicle screw entry point and trajectory. Their idea was to register a point cloud of the exposed bone surface, acquired with a marker-tracked pointing device, to the 3D model of the surgical plan. The group navigated L1–L5 screws with the HoloLens on spine sawbone models and reported mean errors of 3.38° ± 1.73° in screw trajectory orientation and 2.77 ± 1.46 mm in entry point localization. The mean time required for surface digitization was 125 ± 27 s.
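Errors of this kind are typically obtained by comparing the planned and the achieved screw axis: the angular error is the angle between the two trajectory direction vectors, and the entry point error is the Euclidean distance between the planned and achieved entry points. The sketch assumes both are expressed in the same coordinate system (e.g., the CT frame); the numbers are hypothetical and not taken from the cited study.

```python
import math


def angle_between_deg(u, v):
    """Angle in degrees between two 3D direction vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    # Clamp to [-1, 1] to guard against floating-point round-off before acos.
    c = max(-1.0, min(1.0, dot / (nu * nv)))
    return math.degrees(math.acos(c))


def trajectory_errors(planned_entry, planned_dir, actual_entry, actual_dir):
    """Return (angular error in degrees, entry point error in mm)."""
    return (angle_between_deg(planned_dir, actual_dir),
            math.dist(planned_entry, actual_entry))


# Hypothetical planned vs. achieved screw (mm, CT frame).
ang, entry = trajectory_errors((10.0, 5.0, 0.0), (0.0, 0.0, 1.0),
                               (12.0, 5.0, 0.0), (0.0, 0.05, 1.0))
print(f"{ang:.2f} deg, {entry:.2f} mm")  # 2.86 deg, 2.00 mm
```

Reporting the two components separately, as in the study above, matters because a screw can start at the correct entry point and still diverge along a wrong trajectory.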

Müller et al. [25] described an image-based registration approach, which was evaluated in a cadaver study. Three spine cadavers were embedded in opaque agar gel to simulate a lumbar torso. Optical markers with radiopaque parts were attached to specified anatomical locations on the cadavers, and a cone-beam CT scan was acquired. After segmentation and registration of the bony anatomy, the transformation between markers and anatomy enabled real-time overlay of the surgical plan for pedicle screw instrumentation. The proposed approach achieved an accuracy of pedicle screw placement comparable to navigation with high-precision optical systems.

Wu et al. [28] superimposed radiologic imaging onto the patient's skin using a commercially available entertainment projector. The system visualized the patient's anatomy to guide needle insertion for vertebroplasty. They evaluated the system on a synthetic phantom and verified its precision on an animal cadaver. They later assessed the accuracy of entry point localization during vertebroplasty in three clinical trial participants. The mean translation error in entry point location was 4.4 mm, and the system reduced the time needed to find the entry point by 70%. They noted, however, that adipose tissue affects the accuracy of the system, because the overlying skin to which the markers are attached is mobile.

Osteotomies

Precise surgical execution of osteotomies is crucial in corrective procedures to restore the physiologic anatomy [30]. In particular, complex corrective osteotomies consisting of multiple oblique or curved osteotomy planes are challenging to perform without the support of surgical navigation [29, 45, 69, 70].

Fallavollita et al. [30] presented a method to visualize the mechanical leg axis intraoperatively using AR. The group used the camera-augmented c-arm [55] to create a panoramic view of the hip center, knee, and upper ankle joint based on three X-ray images. The method was validated on 25 cadaver legs with random varus or valgus deformities and showed no statistically significant difference from ground-truth CT data. The group stated that the method allowed reliable intraoperative assessment of the leg axis while requiring only three X-ray images.
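Once the hip, knee, and ankle joint centers are located in a common coordinate system, for example from a panoramic X-ray view, mechanical alignment reduces to plane geometry. The sketch assumes 2D frontal-plane coordinates in millimeters and reports (a) the hip-knee-ankle (HKA) angle at the knee, where 180° corresponds to a straight mechanical axis, and (b) the mechanical axis deviation, i.e., the perpendicular distance of the knee center from the hip-ankle (Mikulicz) line. It is a generic illustration, not the method of the cited study; labeling a deviation as varus or valgus depends on the side and axis convention, which the sketch does not model, and the coordinates are hypothetical.

```python
import math


def hka_angle_deg(hip, knee, ankle):
    """Angle at the knee between knee->hip and knee->ankle (180 deg = collinear)."""
    v1 = (hip[0] - knee[0], hip[1] - knee[1])
    v2 = (ankle[0] - knee[0], ankle[1] - knee[1])
    cos_a = (v1[0] * v2[0] + v1[1] * v2[1]) / (math.hypot(*v1) * math.hypot(*v2))
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_a))))


def mechanical_axis_deviation_mm(hip, knee, ankle):
    """Perpendicular distance of the knee center from the hip-ankle (Mikulicz) line."""
    ax, ay = ankle[0] - hip[0], ankle[1] - hip[1]
    kx, ky = knee[0] - hip[0], knee[1] - hip[1]
    return abs(ax * ky - ay * kx) / math.hypot(ax, ay)


# Hypothetical joint centers (x = medial-lateral, y = proximal-distal, mm).
hip, knee, ankle = (0.0, 800.0), (20.0, 400.0), (0.0, 0.0)
print(round(hka_angle_deg(hip, knee, ankle), 1),
      round(mechanical_axis_deviation_mm(hip, knee, ankle), 1))  # 174.3 20.0
```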

Kosterhon et al. [29] presented a case report of an AR application integrated into a surgical microscope via an HMD. The surgical plan was visualized in situ and allowed the team to navigate the osteotomy planes of a pedicle subtraction osteotomy. The anatomy was registered to intraoperatively accessible landmarks of the vertebral body. Pedicle subtraction osteotomy is a high-risk intervention involving invasive bony resection of the vertebral body in close proximity to the neural structures of the spine. The method was first simulated on a sawbone spine model and later implemented in the operating room. In the presented case, the surgeons resected a 27° posterior wedge of the Th1 vertebral body and reported a good match with the virtually overlaid navigation template. The pathologic segmental kyphosis at Th11–12 improved from 45° to 5°. No complications or neurologic deficits were observed.

Arthroplasty

In arthroplasty, exact placement of the prosthetic components with respect to the patient's anatomy is a main contributor to successful outcomes, functional recovery, and implant longevity [32, 34, 36]. Three sawbone studies found AR-navigated arthroplasty to be more accurate than free-hand procedures in hip [32, 36] and knee [33] surgery.

Ogawa et al. [34] performed 56 total hip arthroplasties (THA) in 54 patients and guided the placement and orientation of the acetabular cup either by superimposing the cup orientation onto the surgical field through a smartphone (group 1) or with a goniometer (group 2). A CT scan was acquired three months after implantation to assess surgical accuracy. AR navigation was significantly more accurate in terms of radiographic anteversion than the goniometer method (2.7° vs. 6.8°). The AR system was evaluated as a safe and effective navigation tool for cup orientation. No information on clinical outcome or complications was provided.
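Cup orientation errors such as these are usually expressed with Murray's radiographic definitions: radiographic anteversion is the angle between the acetabular axis and the coronal plane, and radiographic inclination is the angle between the body's longitudinal axis and the acetabular axis projected onto the coronal plane. The sketch assumes the acetabular axis is available as a vector in a hypothetical pelvic frame (x lateral, y anterior, z superior) and computes both angles and their absolute errors against a plan; all values are illustrative and not from the cited study.

```python
import math


def radiographic_angles_deg(axis):
    """Murray's radiographic inclination (RI) and anteversion (RA) of an acetabular axis.
    Assumed frame: x = lateral, y = anterior, z = superior (coronal plane = x-z plane)."""
    x, y, z = axis
    norm = math.sqrt(x * x + y * y + z * z)
    ra = math.degrees(math.asin(abs(y) / norm))    # angle between axis and coronal plane
    ri = math.degrees(math.atan2(abs(x), abs(z)))  # coronal projection vs. longitudinal axis
    return ri, ra


def axis_from_radiographic(ri_deg, ra_deg):
    """Unit acetabular axis (pointing lateral, anterior, inferior) with the given RI and RA."""
    ri, ra = math.radians(ri_deg), math.radians(ra_deg)
    return (math.sin(ri) * math.cos(ra), math.sin(ra), -math.cos(ri) * math.cos(ra))


planned_ri, planned_ra = 40.0, 20.0
achieved_axis = axis_from_radiographic(42.0, 24.5)  # hypothetical postoperative measurement
ri, ra = radiographic_angles_deg(achieved_axis)
print(f"RI error {abs(ri - planned_ri):.1f} deg, RA error {abs(ra - planned_ra):.1f} deg")
# RI error 2.0 deg, RA error 4.5 deg
```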

Subsequently, Ogawa et al. [31••] conducted a randomized controlled trial in which 46 patients were randomly assigned to undergo acetabular cup placement during THA using either a marker-based AR navigation system or a conventional mechanical alignment guide. They found no differences in the accuracy of acetabular anteversion and no clinically important differences in acetabular inclination.

Trauma

The outcome of trauma surgery is highly dependent on exact anatomic reduction of the fractured bone fragments.

Ortega et al. [44] conducted a multicenter study including 50 patients using an HMD to display in-situ intraoperative fluoroscopic images acquired by a c-arm. With this technique, the surgeons’ attention left the operative field only five times compared to 207 times with conventional visualization. Radiation exposure was also significantly reduced.

Shen et al. [43] used an HMD for preoperative bending of osteosynthesis plates in six cases of pelvic fractures. The group reduced the fracture in a computer-assisted simulation and determined the optimal plate design. They then bent the plate preoperatively by visualizing the optimal plate template with an HMD or monitor. After sterilization, the pre-bent plates were used in surgery. Good anatomical reconstruction, good functional recovery, and no complications were reported for all patients, and surgery time was reduced by a mean of 10 min.

Von der Heide et al. [42] compared osteosynthesis, wiring, and implant removal surgeries performed in 28 cases with an AR application based on the camera-augmented c-arm [55], in which registered images of the fractures were superimposed in situ on a monitor, with 45 cases performed with conventional c-arm fluoroscopy. With AR visualization, the group reduced radiation exposure by 46% (18 X-rays) but observed no reduction in surgery time.

Weidert et al. [41] applied the camera-augmented c-arm for distal interlocking of intramedullary nails in 42 bovine forelimb bones, superimposing the registered X-ray images in situ on a monitor. Three surgeons with different levels of experience (beginner, intermediate, expert) performed the experiments. The study group analyzed surgical accuracy, radiation dose, and surgery time. The main finding and benefit of their AR application was a significant reduction in radiation use, especially for novice surgeons.

Orthopedic Oncology

Oncologic surgery requires a constant compromise between maintaining the safety margin needed for sufficient tumor resection and avoiding excessive removal of functional tissue. High surgical accuracy and exact execution are therefore crucial for patient survival and optimal functional outcome.

Cho et al. [38] published an experimental study on bone tumor resection, comparing AR navigation with a tablet to a conventional tumor resection navigation method in 82 porcine cadaveric femurs. The conventional method consisted of an optical tracking system, a display, and a workstation. Bone tumors were simulated by injecting bone cement into the femurs, and the target resection margin was 10 mm. The mean error was 1.71 mm in the AR group, without any tumor violation, and 2.64 mm in the conventional group, with three tumor violations. The targeted oncologic margin of 10 mm was achieved in 90.2% of AR-guided resections and in 70.7% of resections in the conventional group.

In addition to the cadaver evaluation, one clinical case of AR-navigated resection of a low-grade osteosarcoma in the diaphysis of the tibia was performed. The preoperative plan aimed for a 1.5-cm safety margin. Histologic workup showed a 1.4-cm margin proximally and a 1.7-cm margin distally.

The same research group described a further AR application for pelvic tumors [37]. As in their previous work, tumors were simulated by injecting bone cement into 18 porcine cadaver pelvises. Resection errors were classified into four grades: ≤ 3 mm, 3 to 6 mm, 6 to 9 mm, and > 9 mm or any tumor violation. The mean resection error was 1.59 ± 4.13 mm in the AR group compared with 4.55 ± 9.7 mm in the control group. The group described the study as a proof of concept; the results do not yet justify a clinical trial without further in vivo animal studies.
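The four-grade classification of resection errors can be written as a simple binning rule. The sketch assumes that the error is the absolute difference between the achieved and the planned margin in millimeters, that boundary values (exactly 3, 6, or 9 mm) fall into the lower grade, and that any tumor violation is assigned the worst grade; these conventions and the sample resections are assumptions for illustration.

```python
def resection_grade(planned_margin_mm, achieved_margin_mm, tumor_violated=False):
    """Grade 1: error <= 3 mm, 2: <= 6 mm, 3: <= 9 mm, 4: > 9 mm or any tumor violation."""
    if tumor_violated:
        return 4
    error = abs(achieved_margin_mm - planned_margin_mm)
    for grade, upper_mm in ((1, 3.0), (2, 6.0), (3, 9.0)):
        if error <= upper_mm:
            return grade
    return 4


# Hypothetical resections with a planned 10-mm margin: (achieved margin, violation flag).
resections = [(11.2, False), (8.1, False), (14.5, False), (0.0, True), (10.4, False)]
grades = [resection_grade(10.0, m, v) for m, v in resections]
print(grades)  # [1, 1, 2, 4, 1]
```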

Choi et al. [39] presented a similar study in which 60 simulated bone tumors in porcine cadaver pelvises were resected to compare AR navigation using a tablet with conventional navigation. The conventional navigation method was not described further. As in the study of Cho et al., the target oncologic resection margin was 10 mm. Analysis of the resected specimens showed a mean resection margin of 9.85 mm with AR compared with 7.11 mm in the control group.

Conclusion

The technology of augmented reality is on the rise, and its application in orthopedic surgery has gained increasing attention, opening up new opportunities in surgical planning and execution. The translation of preclinical results from proof-of-concept and feasibility studies into daily practice has begun. However, how AR and HMDs influence our concentration, perception, and cognition is still far from understood [71]. The sensory impression delivered through an HMD is a new experience for many surgeons. Avoiding information overload and providing well-designed user interfaces will be necessary to integrate this technology smoothly into daily clinical practice. For final deployment and adoption, AR needs to be fully integrated into the surgical workflow [72].

The lack of robust and accurate registration and tracking represents a major limitation of this technology, and refinement of these processes is needed before AR systems can be implemented in the operating room. Inaccuracies can lead to misplaced virtual models, making navigation unreliable. Unreliable navigation is also a well-known problem of other computer-assisted navigation approaches based on optical markers. Several new innovations are emerging; nevertheless, the technology itself needs further development in this direction. The ideal AR system should work automatically and allow not only surgical navigation but also error detection.

References

Papers of particular interest, published recently, have been highlighted as: • Of importance •• Of major importance

Schweizer A, Furnstahl P, Harders M, Szekely G, Nagy L. Complex radius shaft malunion: osteotomy with computer-assisted planning. Hand (N Y). 2010;5(2):171–8.

Schlenzka D, Laine T, Lund T. Computer-assisted spine surgery. Eur Spine J. 2000;9(1):S057–S64.

Hernandez D, Garimella R, Eltorai AEM, Daniels AH. Computer-assisted orthopaedic surgery. Orthop Surg. 2017;9(2):152–8.

Kok END, Eppenga R, Kuhlmann KFD, Groen HC, van Veen R, van Dieren JM, et al. Accurate surgical navigation with real-time tumor tracking in cancer surgery. NPJ Precis Oncol. 2020;4(1):8.

Navab N, Blum T, Wang L, Okur A, Wendler T. First deployments of augmented reality in operating rooms. Computer. 2012;45(7):48–55.

Watkins RG, Gupta A, Watkins RG. Cost-effectiveness of image-guided spine surgery. Open Orthop J. 2010;4:228–33.

Barbash GI, Glied SA. New technology and health care costs — the case of robot-assisted surgery. N Engl J Med. 2010;363(8):701–4.

Qiu B, Liu F, Tang B, Deng B, Liu F, Zhu W, et al. Clinical study of 3D imaging and 3D printing technique for patient-specific instrumentation in total knee arthroplasty. J Knee Surg. 2017;30(8):822–8.

Roner S, Bersier P, Furnstahl P, Vlachopoulos L, Schweizer A, Wieser K. 3D planning and surgical navigation of clavicle osteosynthesis using adaptable patient-specific instruments. J Orthop Surg Res. 2019;14(1):115.

Furnstahl P, Vlachopoulos L, Schweizer A, Fucentese SF, Koch PP. Complex osteotomies of tibial plateau malunions using computer-assisted planning and patient-specific surgical guides. J Orthop Trauma. 2015;29(8):e270–6.

Azuma R, Baillot Y, Behringer R, Feiner S, Julier S, MacIntyre B. Recent advances in augmented reality. IEEE Comput Graph Appl. 2001;21(6):34–47.

Azuma R. Tracking requirements for augmented reality. Commun ACM. 1993;36(7):50–1.

Tan CT, Soh D. Augmented reality games: a review. 2011. p. 212–8.

Blackwell M, Morgan F, DiGioia AM. Augmented reality and its future in orthopaedics. Clin Orthop Relat Res. 1998;354:111–22.

• Molina CA, Theodore N, Ahmed AK, Westbroek EM, Mirovsky Y, Harel R, et al. Augmented reality-assisted pedicle screw insertion: a cadaveric proof-of-concept study. J Neurosurg Spine. 2019:1–8. Shows that AR-navigated pedicle screw placement is not inferior to conventional navigation systems and robot-assisted screw placement. Results were superior to freehand pedicle screw placement.

Gibby JT, Swenson SA, Cvetko S, Rao R, Javan R. Head-mounted display augmented reality to guide pedicle screw placement utilizing computed tomography. Int J Comput Assist Radiol Surg. 2019;14(3):525–35.

Cherian JJ, Kapadia BH, Banerjee S, Jauregui JJ, Issa K, Mont MA. Mechanical, anatomical, and kinematic axis in TKA: concepts and practical applications. Curr Rev Musculoskelet Med. 2014;7(2):89–95.

Bernard TN Jr, Seibert CE. Pedicle diameter determined by computed tomography. Its relevance to pedicle screw fixation in the lumbar spine. Spine. 1992;17(6 Suppl):S160–3.

Lambert DH, Deane RS, Mazuzan JE Jr. Anesthesia and the control of blood pressure in patients with spinal cord injury. Anesth Analg. 1982;61(4):344–8.

Nelson CL, Fontenot HJ. Ten strategies to reduce blood loss in orthopedic surgery. Am J Surg. 1995;170(6A Suppl):64s–8s.

•• Liebmann F, Roner S, von Atzigen M, Scaramuzza D, Sutter R, Snedeker J, et al. Pedicle screw navigation using surface digitization on the Microsoft HoloLens. Int J Comput Assist Radiol Surg. 2019;14(7):1157–65. This article explains a new registration method to superimpose a 3D model of the patient vertebra together with surgical planning by registering a point cloud of the exposed bone surface using a marker-tracked pointing device.

Elmi-Terander A, Burström G, Nachabe R, Skulason H, Pedersen K, Fagerlund M, et al. Pedicle screw placement using augmented reality surgical navigation with intraoperative 3D imaging: a first in-human prospective cohort study. Spine. 2019;44(7).

• Wanivenhaus F, Neuhaus C, Liebmann F, Roner S, Spirig JM, Farshad M. Augmented reality-assisted rod bending in spinal surgery. Spine J. 2019;19(10):1687–9. Even simple AR applications, such as 3D visualization of the target rod shape, significantly reduce bending time, potentially decreasing surgical time.

•• Elmi-Terander A, Burström G, Nachabé R, Fagerlund M, Ståhl F, Charalampidis A, et al. Augmented reality navigation with intraoperative 3D imaging vs fluoroscopy-assisted free-hand surgery for spine fixation surgery: a matched-control study comparing accuracy. Sci Rep. 2020;10(1):707. This matched-control group study showed less cortical bone breach when placing pedicle screws with AR navigation compared to freehand placement. A study reporting how AR navigation improves safe execution of complex procedures.

Müller F, Roner S, Liebmann F, Spirig JM, Fürnstahl P, Farshad M. Augmented reality navigation for spinal pedicle screw instrumentation using intraoperative 3D imaging. Spine J. 2020;20(4):621–8.

Yoon JW, Chen RE, Han PK, Si P, Freeman WD, Pirris SM. Technical feasibility and safety of an intraoperative head-up display device during spine instrumentation. Int J Med Robot. 2017;13(3).

Elmi-Terander A, Skulason H, Soderman M, Racadio J, Homan R, Babic D, et al. Surgical navigation technology based on augmented reality and integrated 3D intraoperative imaging: a spine cadaveric feasibility and accuracy study. Spine. 2016;41(21):E1303–11.

Wu JR, Wang ML, Liu KC, Hu MH, Lee PY. Real-time advanced spinal surgery via visible patient model and augmented reality system. Comput Methods Prog Biomed. 2014;113(3):869–81.

Kosterhon M, Gutenberg A, Kantelhardt SR, Archavlis E, Giese A. Navigation and image injection for control of bone removal and osteotomy planes in spine surgery. Oper Neurosurg. 2017;13(2):297–304.

Fallavollita P, Brand A, Wang L, Euler E, Thaller P, Navab N, et al. An augmented reality C-arm for intraoperative assessment of the mechanical axis: a preclinical study. Int J Comput Assist Radiol Surg. 2016;11(11):2111–7.

•• Ogawa H, Kurosaka K, Sato A, Hirasawa N, Matsubara M, Tsukada S. Does an augmented reality-based portable navigation system improve the accuracy of acetabular component orientation during THA? A randomized controlled trial. Clin Orthop Relat Res. 2020;478(5):935–43. The first clinical study of acetabular cup placement in total hip arthroplasty to show a superior plan-to-outcome error with AR navigation.

Alexander C, Loeb AE, Fotouhi J, Navab N, Armand M, Khanuja HS. Augmented reality for acetabular component placement in direct anterior total hip arthroplasty. J Arthroplast. 2020.

Tsukada S, Ogawa H, Nishino M, Kurosaka K, Hirasawa N. Augmented reality-based navigation system applied to tibial bone resection in total knee arthroplasty. J Exp Orthop. 2019;6(1):44.

Ogawa H, Hasegawa S, Tsukada S, Matsubara M. A pilot study of augmented reality technology applied to the acetabular cup placement during total hip arthroplasty. J Arthroplast. 2018;33(6):1833–7.

Fotouhi J, Alexander CP, Unberath M, Taylor G, Lee SC, Fuerst B, et al. Plan in 2-D, execute in 3-D: an augmented reality solution for cup placement in total hip arthroplasty. J Med Imaging (Bellingham). 2018;5(2):021205.

Liu H, Auvinet E, Giles J, Rodriguez y Baena F. Augmented reality based navigation for computer assisted hip resurfacing: a proof of concept study. Ann Biomed Eng. 2018;46(10):1595–605.

Cho HS, Park MS, Gupta S, Han I, Kim HS, Choi H, et al. Can augmented reality be helpful in pelvic bone cancer surgery? An in vitro study. Clin Orthop Relat Res. 2018;476(9):1719–25.

Cho HS, Park YK, Gupta S, Yoon C, Han I, Kim HS, et al. Augmented reality in bone tumour resection: an experimental study. Bone Joint Res. 2017;6(3):137–43.

Choi H, Park Y, Lee S, Ha H, Kim S, Cho HS, et al. A portable surgical navigation device to display resection planes for bone tumor surgery. Minim Invasive Ther Allied Technol. 2017;26(3):144–50.

Fritz J, U-Thainual P, Ungi T, Flammang AJ, McCarthy EF, Fichtinger G, et al. Augmented reality visualization using image overlay technology for MR-guided interventions: cadaveric bone biopsy at 1.5 T. Invest Radiol. 2013;48(6):464–70.

Weidert S, Wang L, Landes J, Sandner P, Suero EM, Navab N, et al. Video-augmented fluoroscopy for distal interlocking of intramedullary nails decreased radiation exposure and surgical time in a bovine cadaveric setting. Int J Med Robot. 2019;15(4):e1995.

von der Heide AM, Fallavollita P, Wang L, Sandner P, Navab N, Weidert S, et al. Camera-augmented mobile C-arm (CamC): a feasibility study of augmented reality imaging in the operating room. Int J Med Robot. 2018;14(2).

Shen F, Chen B, Guo Q, Qi Y, Shen Y. Augmented reality patient-specific reconstruction plate design for pelvic and acetabular fracture surgery. Int J Comput Assist Radiol Surg. 2013;8(2):169–79.

Ortega G, Wolff A, Baumgaertner M, Kendoff D. Usefulness of a head mounted monitor device for viewing intraoperative fluoroscopy during orthopaedic procedures. Arch Orthop Trauma Surg. 2008;128(10):1123–6.

•• Fürnstahl P, Schweizer A, Graf M, Vlachopoulos L, Fucentese S, Wirth S, et al. Surgical treatment of long-bone deformities: 3D preoperative planning and patient-specific instrumentation. In: Zheng G, Li S, editors. Computational radiology for orthopaedic interventions. Cham: Springer International Publishing; 2016. p. 123–49. Describes the process of data preparation and surgical planning for the purpose of 3D surgical navigation of corrective procedures for long bone deformities.

Lorensen WE, Cline HE. Marching cubes: a high resolution 3D surface construction algorithm. SIGGRAPH Comput Graph. 1987;21(4):163–9.

Lindgren Belal S, Sadik M, Kaboteh R, Enqvist O, Ulén J, Poulsen MH, et al. Deep learning for segmentation of 49 selected bones in CT scans: first step in automated PET/CT-based 3D quantification of skeletal metastases. Eur J Radiol. 2019;113:89–95.

Zhou A, Zhao Q, Zhu J, editors. Automatic segmentation algorithm of femur and tibia based on Vnet-C network. 2019 Chinese Automation Congress (CAC); 2019 22–24 Nov. 2019.

Jodeiri A, Zoroofi RA, Hiasa Y, Takao M, Sugano N, Sato Y, et al. Fully automatic estimation of pelvic sagittal inclination from anterior-posterior radiography image using deep learning framework. Comput Methods Prog Biomed. 2020;184:105282.

• Bae H-J, Hyun H, Byeon Y, Shin K, Cho Y, Song YJ, et al. Fully automated 3D segmentation and separation of multiple cervical vertebrae in CT images using a 2D convolutional neural network. Comput Methods Prog Biomed. 2020;184:105119. Early approaches to automatic segmentation of bone imaging will allow automation of process steps that are currently highly manual.

Kamiya N. Muscle segmentation for orthopedic interventions. In: Zhuang X, Zheng G, Tian W, editors. Intelligent orthopaedics. Singapore: Springer; 2018. p. 1093.

• Kamiya N. Deep learning technique for musculoskeletal analysis. In: Lee G, Fujita H, editors. Deep learning in medical image analysis. Advances in Experimental Medicine and Biology, vol. 1213. Cham: Springer; 2020. Early approaches to automatic segmentation of bone imaging will allow automation of process steps that are currently highly manual.

• Dou Q, Yu L, Chen H, Jin Y, Yang X, Qin J, et al. 3D deeply supervised network for automated segmentation of volumetric medical images. Med Image Anal. 2017;41:40–54. Early approaches to automatic segmentation of bone imaging will allow automation of process steps that are currently highly manual.

•• Navab N, Bani-Hashemi A, Mitschke M, editors. Merging visible and invisible: two camera-augmented mobile C-arm (CAMC) applications. Proceedings 2nd IEEE and ACM International Workshop on Augmented Reality (IWAR'99); 1999 20–21 Oct. 1999. Describes the camera-augmented surgical C-arm for augmented reality in the operating room.

Navab N, Heining S, Traub J. Camera augmented mobile C-arm (CAMC): calibration, accuracy study, and clinical applications. IEEE Trans Med Imaging. 2010;29(7):1412–23.

Fotouhi J, Unberath M, Song T, Gu W, Johnson A, Osgood G, et al. Interactive flying frustums (IFFs): spatially aware surgical data visualization. Int J Comput Assist Radiol Surg. 2019;14(6):913–22.

Wu H-K, Lee SW-Y, Chang H-Y, Liang J-C. Current status, opportunities and challenges of augmented reality in education. Comput Educ. 2013;62:41–9.

Chen Y, Medioni G, editors. Object modeling by registration of multiple range images. Proceedings 1991 IEEE International Conference on Robotics and Automation; 1991 9–11 April 1991.

Milgram P, Takemura H, Utsumi A, Kishino F. Augmented reality: a class of displays on the reality-virtuality continuum: SPIE; 1995.

Schmalstieg D, Höllerer T. Augmented reality: principles and practice. Addison-Wesley Professional; 2016.

Chandra ANR, Jamiy FE, Reza H, editors. Augmented reality for big data visualization: a review. 2019 International Conference on Computational Science and Computational Intelligence (CSCI); 2019 5–7 Dec. 2019.

Wang JF, Azuma RT, Bishop G, Chi V, Eyles J, Fuchs H, editors. Tracking a head-mounted display in a room-sized environment with head-mounted cameras. Proc SPIE; 1990.

Hoff W, Vincent T. Analysis of head pose accuracy in augmented reality. IEEE Trans Vis Comput Graph. 2000;6(4):319–34.

• Liebmann F, Roner S, von Atzigen M, Wanivenhaus F, Neuhaus C, Spirig J, et al. Registration made easy – standalone orthopedic navigation with HoloLens. CVPR 2019 Workshop on Computer Vision Applications for Mixed Reality Headsets; 2019. Shows that a conventional head-mounted display (HMD) developed for entertainment purposes (Microsoft HoloLens 1) might meet clinical accuracy requirements when used for surgical navigation.

Gertzbein SD, Robbins SE. Accuracy of pedicular screw placement in vivo. Spine. 1990;15(1).

Heary RF, Bono CM, Black M. Thoracic pedicle screws: postoperative computerized tomography scanning assessment. J Neurosurg. 2004;100(4 Suppl Spine):325–31.

Schrepp M, Hinderks A, Thomaschewski J. Design and evaluation of a short version of the user experience questionnaire (UEQ-S). Int J Interactive Multimedia Artif Intell. 2017;4:103–8.

Liebmann F, Roner S, von Atzigen M, Scaramuzza D, Sutter R, Snedeker J, et al. Pedicle screw navigation using surface digitization on the Microsoft HoloLens. Int J Comput Assist Radiol Surg. 2019.

Viehöfer A, Wirth SH, Zimmermann SM, Jaberg L, Dennler C, Fürnstahl P, et al. Augmented reality guided osteotomy in hallux valgus correction. BMC Musculoskelet Disord. 2020 (preprint).

Roner S, Vlachopoulos L, Nagy L, Schweizer A, Fürnstahl P. Accuracy and early clinical outcome of 3-dimensional planned and guided single-cut osteotomies of malunited forearm bones. J Hand Surg. 2017;42(12):1031.e1–8.

Cometti C, Païzis C, Casteleira A, Pons G, Babault N. Effects of mixed reality head-mounted glasses during 90 minutes of mental and manual tasks on cognitive and physiological functions. PeerJ. 2018;6:e5847.

Navab N, Traub J, Sielhorst T, Feuerstein M, Bichlmeier C. Action- and workflow-driven augmented reality for computer-aided medical procedures. IEEE Comput Graph Appl. 2007;27(5):10–4.

Funding

Open Access funding provided by Universität Zürich.

Author information

Contributions

Fabio A. Casari, M.D.: manuscript writing, literature research, table compilation, manuscript revisions.

Nassir Navab, PhD: manuscript revision, literature research, consulting.

Laura A. Hruby, M.D., PhD: manuscript revision and editing.

Philipp Kriechling, M.D.: literature research, revision, table compilation.

Ricardo Nakamura, PhD: tech section writing, literature research.

Romero Tori, PhD: tech section writing, literature research.

Fátima de Lourdes dos Santos Nunes, PhD: tech section writing, literature research.

Marcelo C. Queiroz, M.D., MSc: manuscript revision, literature research.

Philipp Fürnstahl, PhD, and Mazda Farshad, M.D., MPH: major manuscript contributions, revision, literature research, consulting. Both senior authors contributed equally.

All authors have approved submission of the manuscript.

Ethics declarations

Conflict of Interest

Fabio A. Casari, Nassir Navab, Laura A. Hruby, Philipp Kriechling, Ricardo Nakamura, Romero Tori, Fátima de Lourdes dos Santos Nunes, Marcelo C. Queiroz, Philipp Fürnstahl and Mazda Farshad declare that they have no conflict of interest.

Ethical Approval

Not applicable.

Human and Animal Rights and Informed Consent

This review includes studies with human subjects and cadaver experiments performed by the authors. All of these studies were approved by the appropriate ethical review board, and patients signed informed consent as described in the respective articles.

Additional information

This article was written at the invitation of the Section Editors, Dr. Sara Goldchmit and Dr. Marcelo Queiroz, for the Emerging Trends in Design for Musculoskeletal Medicine section in Current Reviews in Musculoskeletal Medicine.

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This article is part of the Topical Collection on Emerging Trends in Design for Musculoskeletal Medicine

Philipp Fürnstahl and Mazda Farshad contributed equally.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Casari, F.A., Navab, N., Hruby, L.A. et al. Augmented Reality in Orthopedic Surgery Is Emerging from Proof of Concept Towards Clinical Studies: a Literature Review Explaining the Technology and Current State of the Art. Curr Rev Musculoskelet Med 14, 192–203 (2021). https://doi.org/10.1007/s12178-021-09699-3

DOI: https://doi.org/10.1007/s12178-021-09699-3