Abstract

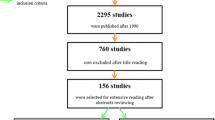

Loss of voice is a serious concern for laryngectomees and should be addressed before the procedure is planned. Patients must be educated about voice rehabilitation options before surgery. Although many devices are in use, each has its limitations. This review searches for probable future technologies for voice rehabilitation in laryngectomees and aims to familiarise the ENT fraternity with them. We performed a bibliographic search of Medline, CINAHL, EMBASE, Web of Science and Google Scholar, using title/abstract searches and Medical Subject Headings (MeSH) where appropriate, for publications from January 1985 to January 2020. Results with scope for the development of a speech rehabilitation device were included in the review. A total of 1036 articles were identified and screened. After careful scrutiny, 40 articles were included in this study. The silent speech interface is one of the topics being studied extensively. It is based on various electrophysiological biosignals such as non-audible murmur, electromyography, ultrasound characteristics of the vocal folds, optical imaging of the lips and tongue, electromagnetic articulography and electroencephalography. Electromyographic signals have been studied in laryngectomised patients. The silent speech interface may be the answer for the future of voice rehabilitation in laryngectomees. However, all these technologies are at an early stage of development, although they have the potential to be developed into a speech device.

Data Availability

Any relevant data or information regarding the proposal will be made available on request.

Funding

There was no funding involved in this work.

Author information

Contributions

NPN: (a) Conceptualization, (b) Drafting the article, (c) Screening articles for inclusion in the review, (d) Writing (review and editing), (e) Validation.

VS: (a) Writing (original draft), (b) Data curation (literature search and collecting articles), (c) Screening articles for inclusion in the review, (d) Validation.

AD: (a) Data curation (literature search and collecting articles), (b) Screening articles for inclusion in the review, (c) Writing (review and editing), (d) Validation.

DK: (a) Literature search and collecting articles, (b) Screening articles for inclusion in the review, (c) Validation.

KS: (a) Literature search and collecting articles, (b) Screening articles for inclusion in the review, (c) Validation.

BC: (a) Screening articles for inclusion in the review, (b) Validation.

AG: (a) Conceptualization, (b) Drafting the article, (c) Screening articles for inclusion in the review, (d) Writing (review and editing), (e) Validation.

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Nair, N.P., Sharma, V., Dixit, A. et al. Future Solutions for Voice Rehabilitation in Laryngectomees: A Review of Technologies Based on Electrophysiological Signals. Indian J Otolaryngol Head Neck Surg 74 (Suppl 3), 5082–5090 (2022). https://doi.org/10.1007/s12070-021-02765-9

DOI: https://doi.org/10.1007/s12070-021-02765-9