Abstract

Simulation of large-scale networks of neurons is an important approach to understanding and interpreting experimental data from healthy and diseased brains. Owing to the rapid development of simulation software and the accumulation of quantitative data of different neuronal types, it is possible to predict both computational and dynamical properties of local microcircuits in a ‘bottom-up’ manner. Simulated data from these models can be compared with experiments and ‘top-down’ modelling approaches, successively bridging the scales. Here we describe an open source pipeline, using the software Snudda, for predicting microcircuit connectivity and for setting up simulations using the NEURON simulation environment in a reproducible way. We also illustrate how to further ‘curate’ data on single neuron morphologies acquired from public databases. This model building pipeline was used to set up a first version of a full-scale cellular level model of mouse dorsal striatum. Model components from that work are here used to illustrate the different steps that are needed when modelling subcortical nuclei, such as the basal ganglia.

Similar content being viewed by others

Introduction

Neuroscientists are producing data at an ever growing rate, and sharing the data in public databases. Within the computational neuroscience field, hypothesis-driven modelling has over many decades generated new ideas that in turn have been tested via experiments. Recently a data-driven mechanistic modelling approach has also gained ground thanks to new technologies allowing the collection of large quantities of useful data. In particular, large-scale spiking neural network models have been reconstructed in a data-driven manner and simulated (Markram et al., 2015; Gratiy et al., 2018; Migliore et al., 2018; Casali et al., 2019; Einevoll et al., 2019; Kanari et al., 2019; Billeh et al., 2020; Hjorth et al., 2020). Collecting data from the brain at multiple biological scales from mouse, non-human primates, and human, are important goals of several of the big brain initiatives (Insel et al., 2013; Amunts et al., 2019; Okano et al., 2015; Grillner et al., 2016), and will further facilitate and speed up this modelling process. In parallel, various brain simulation tools have been optimized to capitalize on supercomputers (Hepburn et al., 2012; Plesser et al., 2015; Carnevale & Hines, 2006; Hines et al., 2009; Kumbhar et al., 2019; Ray & Bhalla, 2008; Gleeson et al., 2010; Jordan et al., 2020; Akar et al., 2019). In this respect, the principles of FAIR – Findable, Accessible, Interoperable, Reusable (Wilkinson et al., 2016) – are important for catalysing this process, both with regard to the experimental data, the data-driven models as well as the software used during the modelling and simulation process. We also believe that to be able to reproduce the actual model reconstruction process, given the same or new additional data, is one important aspect of the FAIR criteria when making the modelling process transparent, repeatable, reusable and comparable.

Here we present our open source modelling pipeline that facilitates a reproducible, data-driven reconstruction of cellular level network/microcircuit models. This pipeline inspired by the cortical column microcircuit (Markram et al., 2015) has been applied to predict a full-scale microcircuit model of the mouse dorsal striatum (Hjorth et al., 2020). Snudda is a software to create a detailed network of connected neurons, where the connectivity is derived from reconstructed neuronal morphologies as well as from more qualitative experimental knowledge (see also Reimann et al., 2015). ‘Snudda’ means ‘touch’ in Swedish, and it supports the creation of a network with connectivity based on touch detection. If detailed morphological data exist, the algorithm looks for close appositions between axons and dendrites, which are locations for putative synapses. Thus the morphology restricts where connections can be positioned. Snudda can also define the axon using a probability cloud if a reconstructed axon is missing. This is an extension of the method where the connection probability is proportional to the overlap of two spheres representing axons and dendrites (Humphries et al., 2009). Based on a set of rules, as described below, the putative synapses are then pruned to match the connectivity seen from pairwise experimental recordings, or other types of connectivity experiments. The same technique can be applied to also place gap junctions. The generated network can then be simulated using parallel NEURON (Carnevale & Hines, 2006). Similar approaches have been used to build the somato-sensory cortex microcircuit (Markram et al., 2015; Colangelo et al., 2019), visual cortex model (Billeh et al., 2020; Dai et al., 2020), cerebellar network (Sudhakar et al., 2017; Casali et al., 2019; Wichert et al., 2020) and hippocampal neurons (Migliore et al., 2018).

The reconstruction of a local microcircuit model (such as striatum) consists of the following steps: a) experimental data acquisition of the electrophysiological and morphological properties of neuronal types, and also characterisation of synapses, b) optimization of neuron and synapse models, c) placement of the model neurons in the brain volume to be modelled, d) prediction of microcircuit connectivity in silico, e) constraining and emulating inputs for the model, and finally f) simulating the microcircuitry. Our software Snudda is used for steps c)-f). Software Treem for improving the morphological reconstructions in preparatory step b) is described at the end. The code is publicly available on GitHub (https://github.com/Hjorthmedh/Snudda/) and (https://github.com/a1eko/treem). Below we will go through the different steps and provide code to set up an example network using Snudda, followed by explanation of the configuration files, network building and simulation process, as well as some preprocessing options. The network example corresponds to a 0.5 mm cube within the mouse striatum. We will assume that we already have a set of electrophysiologically optimized neurons and synapses, e.g. using the optimization tool BluePyOpt (Van Geit et al., 2016). Examples of neuron and synapse models relevant for striatum are provided on GitHub (in the snudda/examples folder with scripts and notebooks).

Our approach offers novel contributions in the following aspects: (i) we design and present a complete, free and open source toolchain for building and simulating anatomically constrained biologically detailed neural networks including morphology-based neuron touch detection; (ii) we illustrate the use of this platform on the example of the striatal microcircuit, implemented at a very detailed level and accuracy; (iii) we include all tools and parameters in the source code repository, enabling other labs to reproduce as well as reconstruct our striatal model with new data when it becomes available.

Getting Started with Snudda

Snudda is available for download from GitHub (https://github.com/hjorthmedh/Snudda) or from PyPi through pip3 install snudda. Both source code and the data files necessary to set up a striatal network are provided, Table 1 provides an overview of the Snudda directory structure. Snudda is compatible with Linux, Mac and Windows 10.

In the directory snudda/data/neurons/<region> there are separate subdirectories for each neuron type (<region> in our use case is striatum). Each of those directories contains multiple subdirectories, one for each unique morphology from that neuron type. The neuron directories include the morphology in SWC format, a JSON parameter file with one or more sets of optimised neuron parameters from BluePyOpt, a JSON mechanism file specifying which mechanisms are present in each compartment, and a JSON modulation file which specifies the neuron modulation of the neuron. The JSON file format was chosen as it is a standardised and human readable way to store structured data. The neurons folder also has a mechanisms folder containing the NEURON model description language .mod files with definitions of ionic mechanisms.

To keep the networks separate, each generated network has its own directory which contains a network.json file that links together all the different components that make up the network. The network.json file can be manually created, or in the case of the striatal network there is a way to automatically generate a network.json file of user specified size. The script init.py can be extended to create networks of other brain structures. A Jupyter notebook in examples/notebooks shows an alternative example for how to define brain slices and other structures.

In the main Snudda directory there is an examples folder with useful scripts and Jupyter notebooks for generating and running networks. The directory snudda/plotting contains scripts to plot simulation results as well as visualise the network or parts of it using Blender (https://www.blender.org/).

Use Case: Striatal Microcircuit

First we create an example striatal network, then further down we go through all configuration details. The network-config.json can be generated using the snudda init shell command. In the below example a homogeneous cube with 0.5 mm side length in the mouse striatum is generated (Fig. 1a). This corresponds to 10,062 neurons (Fig. 1b) using the estimated average density of striatal neurons (Rosen & Williams, 2001), but the number of neurons can be varied depending on computational resources and research questions. The neurons are taken from the data/neurons/striatum directory, where every neuron type has its own directory, e.g. dspn or ispn. A neuron type is represented by one or more single-cell models, each in its own subdirectory as described above. When the network is initialised, the init.py code will look in the folders of the different neuron types and instantiate single-cell models in random order. No modifications of the neuron models other than rotations are applied at runtime. In order to improve the cell diversity, one should populate the neuron types directories with a sufficient number of different single-cell models.

Example of the volume definition. a Selection of the volume of interest (red cube, size of the side 500 μm) inside the left part of the dorsal striatum (blue shells beneath the cerebral cortex). b The 10,062 neuron somas placed within the red cube. c Illustration of 100 neurons showing the complexity of axons and dendrites

The following commands can be used in a terminal window to generate the example network that we have used for the figures in this article. We go into more detail in the sections later in the article. There are additional Jupyter notebook examples in examples/notebooks and examples/Neuroinformatics2021. The first step is to create a configuration file network.json specifying e.g. 10,062 neurons in a directory called smallSim.

Next we need to place the neurons in a specified volume. For large simulations the neurons are placed inside a volume representing the mouse striatum (Fig. 1a), while smaller networks use a simple cube (Fig. 1b, c). This is done to preserve physiological neuron densities in the simulations. The mesh definition of the striatal volume, or other structures, can be extracted from databases such as the Allen Brain Atlas of the mouse brain. The command to place the neurons inside the volume defined by the mesh is:

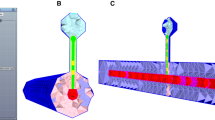

The next step is the touch detection (Fig. 2a). Here the algorithm voxelizes the space, and looks for overlaps (within a certain predefined distance) between axons and dendrites from different neurons (Fig. 3a, b) (Hellwig, 2000).

Example of the synaptic pruning procedure when connecting neurons within the microcircuit. a Putative synapses detected between iSPN and dSPN shown on top, and remaining synapses after pruning shown below. b Connection probability as a function of distance after each of the pruning steps. Distance dependent pruning (DP) filters synapses based on the distance to the soma on the postsynaptic neuron. A fraction f1 of all synapses is removed. The soft max (SM) synapse filter does not disconnect any connected pairs as it only reduces the number of synapses of pairs that are connected by a large number of synapses. Finally mu2 filters neuron pairs with few synapses, and leads to a large reduction in connectivity. c Number of synapses between connected pairs. d Number of connected neighbours each post synaptic neuron has

Touch detection and pruning. a Illustration of ball and stick neurons with soma and dendrites marked in black, axon in red and synapse in green. b Corresponding two neurons in the hypervoxel representation. The neurites are traced by taking small steps along Δx, Δy, Δz corresponding to the direction of the neurite. Where axon and dendrites occupy the same voxel a putative synapse is detected, here marked by a green dot. The volume of the soma is also voxelized. c Ball-and-stick neurons arranged so their synapses are on a grid, with four synapses connecting every neuron pair. Pruning with parameter f1 = 1, 0.5 and 0.25 keeping 100%, 50% and 25% of all synapses, respectively. Number of synapses retained shown under each network (including a numeric estimate of mean and standard deviation, n = 1000). d Combination of f1 and mu2 pruning. Here P = f1 · 1.0 / (1.0 + exp(−8.0 / mu2 · (nSynapses - mu2))), where P is the probability to keep a synapse, nSynapses is the total number of synapses connecting the neuron pre-post pair. e softMax pruning step. If there are more than softMax synapses then the probability of keeping synapses between that pair is P = 2 · softMax / ((1 + exp(−(nSynapses - softMax) / 5)) · nSynapses). f Pruning a3 = 1, 0.5 and 0.25 removing all synapses between a connected pair in 0%, 50% and 75% of the cases, respectively. g Distance dependent pruning of proximal, medial and distal synapses. Jupyter Notebooks to generate this figure are available on Snudda GitHub in the examples/Neuroinformatics2021 folder)

The touch detection will create a putative set of synapses at all the close appositions between axons and dendrites. However, not all close appositions correspond to real synapses, as explained in detail further down. The next step prunes the set of putative synapses to match the connectivity seen in experimental pairwise recordings (Fig. 2b, distance dependent connectivity). The rules used for pruning are qualitatively similar to what Markram et al. (2015) created for their cortical network. The parameters for the pruning (Fig. 3c-g) are specified in the network.json file, explained more in detail below. The command to perform the pruning is:

Code to generate figures analysing the connectivity (Fig. 2c, d) (distance dependent connection probability, histogram showing the number of synapses between connected neighbours, histogram showing the number of connected neighbours) is in snudda/analyse_striatum.py, also see examples/Neuroinformatics2021 for Jupyter notebooks.

Next we need to generate external synaptic input for the network simulation. Here we specify how much time we want to generate inputs for. The parameters for the synaptic input from cortex and thalamus are defined in a separate JSON file:

The last step involves compiling the .mod files, and then running the simulation.

There are two functions in the plotting directory that allow the user to plot either the spike raster or the voltage traces, plotting/plot_spike_raster.py (Fig. 4) and plotting/plot_traces.py, the latter requires the user to have run the simulation with the --voltOut parameter to also save the voltage traces. The simulation output files are stored in the $simName/simulations directory.

Simulation of 10,062 striatal neurons (4872 dSPN, 4872 iSPN, 133 FS, 113 ChIN, 72 LTS) receiving cortical and thalamic input. The cortical drive is increased for half a second at 1.0 s. The histograms above the spike raster show the total number of spikes in the respective neuron populations. (See the striatum_example* notebooks in the example/notebooks folder, note that the size of the network was changed)

Validation Against Anatomical Data

The network building results in Fig. 2 have been validated in our previous study. Figures 8, S4-S7 in Hjorth et al. (2020) showed experimental pair-wise connection probability between neuron types and how the Snudda generated network matched the data, and matched estimations of number of synapses between connected pairs.

To validate the mean synaptic density in the simulated network, we use the data from the recent studies with genetic labeling and electron microscopy techniques. Santuy et al. (2020) estimate a density of 1.41 synapses/μm3 in the striatum, with 4.4% of the symmetric synapses (range 1.29–20.23%), which corresponds to 62 million GABAergic synapses per mm3. In our striatal model 500,000 neurons (6.2mm3) has 469 million intrastriatal GABAergic synapses which corresponds to 75.6 million synapses per mm3, well within the experimental range (18–285 million). This also agrees with another independent study by Cizeron et al. (2020).

Snudda Configuration Explained

Connectivity Configuration

Running the command snudda init mysimulation --size N generates the Snudda network configuration file network-config.json where N is the size of the network to create. The network-config.json contains the blueprints for the striatal network hierarchically organised into the blocks RandomSeed, Volume, Units, Connectivity and Neurons. All parameters in the configuration file are specified in SI units. Below we go into more detail how Snudda is configured.

The RandomSeed block specifies the random seeds used for the different steps of the network creation.

The Volume block can contain several named volumes, such as for example Striatum, Cortex, Thalamus. For each volume block we define type (e.g. mesh), dMin (minimum distance between somas in the volume), meshFile (this specifies the Wavefront OBJ file that defines the mesh enclosing the volume) and meshBinWidth (voxelization size of the mesh for determining what is inside and outside the mesh during cell placement). Future versions of Snudda will allow for density variations within the volume and directional gradients for the neurons.

FG

An example block for PopulationUnits looks as follows. Here two units are defined: UnitID 1 with 20% of dSPN and iSPN neurons in Striatum, and UnitID 2 with 30%.

In the Connectivity block we define the rules guiding how the different neuron populations are connected together. Each connection pair has its own block (e.g. “iSPN,dSPN”). In the example below this is illustrated with the iSPN-dSPN pair (indirect-pathway and direct-pathway striatal projection neurons, respectively), which are connected by GABA synapses.

Each connection has a conductance parameter, which specifies the mean and standard deviation of the conductance. The channelParameters gives flexibility by specifying a dictionary with the channel specific parameters, in this case it is tau1, tau2, failRate (the synapse failure rate) that are passed directly to the NEURON channel model. Next parameterFile (JSON file with additional channel parameters) and modFile (NEURON channel .mod file). The final two blocks pruning and pruningOther specify the pruning parameters for neurons within the same population unit, and neurons in different population units. The pruning parameters help shape the connectivity by parameterising the rules that define which putative connections should be removed, and which ones should be kept. The probability to keep a synapse is equal to the product of the individual pruning steps:

The examples given in Fig. 3c-g are synthetic, their purpose is to illustrate pruning rules. The f1 parameter defines how large a fraction of the putative synapses should be kept, a value of 1.0 or None means that this pruning step is not used (Fig. 3c). For f1 = 0.5 we would expect on average 0.5 · 400 = 200 synapses kept, and for f1 = 0.25 we expect 0.25 · 400 = 100 synapses (c.f. 203 and 104 synapses randomly selected for f1 = 0.5 and f1 = 0.25, respectively, in Fig. 3c).

The mu2 defines a sigmoid curve used to decide whether to keep or remove all synapses between a coupled pair of neurons (Fig. 3d):

With mu2 = 3, we have Pmu(n = 4) = 93.5%, Pmu(n = 3) = 50%, Pmu(n = 2) = 6.5%, Pmu(n = 1) = 0.5%. Thus for f1 = 1 we expect 400 · 0.935 = 374 synapses left. For f1 = 0.5 we have two parts to the pruning, first f1 where half the synapses are removed, then mu2 which operates on all the synapses between a coupled pair. We thus look at the 100 possible neuron pairs. The expected number of synapses \( {E}_{syn}=100\cdotp {\sum}_1^4{P}_n\cdotp {P}_{mu}(n)\cdotp n \), with Pn the probability of a neuron pair having n synapses after the f1 pruning. We then get

synapses, and for f1 = 0.25 we expect on average

synapses.

The softMax specifies at which value we start applying a soft cap to the total number of synapses between the pair:

where PSM is probability to keep a synapse, and n is the initial number of synapses between the pair of neurons (Fig. 3e). For n = 4 and softMax = 3 yields PSM = 82.5% resulting in on average 400 · 0.825 = 330 synapses left. For softMax = 2 around 239 synapses will remain, and for softMax = 1 on average 129 synapses are kept.

The a3 parameter specifies which fraction of all connected pairs to keep, e.g. 0.8 means that 20% of all connected pairs will have all their synapses removed (Fig. 3f). Here a3 = 1, 0.5 and 0.25 result in on average 400, 200 and 100 synapses left, correspondingly. The distPruning defines a distance d dependent function Pdist: d → [0,1], where d is the distance from the soma along the dendrites (Fig. 3g). The expected number of synapses for the distance dependent pruning is given by \( {E}_{syn}=20\cdotp {\sum}_{k=1}^{20}P\left({d}_k\right) \) where P(d) is one of the equations in Fig. 3g. In this example the distances to the soma are d = 56, 65, 86, 95, 116, 125, 146, 155, 176, 185, 206, 215, 236, 245, 266, 275, 296, 305, 326 and 335 μm (with 20 putative synapses at each distance). The expected number of synapses in the three cases are thus 107, 134 and 91, respectively.

Continuing our look at the network configuration file structure, the final block Neurons defines the different neuron populations. Here each neuron template has its own block. For each neuron template we specify four files: morphology (a SWC file defining the soma, axon and dendrites), parameters (neuron parameters optimised using BluePyOpt), mechanisms (NEURON mechanisms), modulation (a JSON file defining the neuromodulation). The template can be used to define multiple neurons, the number defined by num. The hoc parameter is optional, and intended to be used in the future when exporting to SONATA format for use with Neurodamus (Williams et al., 2018). The neuronType can be either neuron or virtualNeuron, the latter can be used to define axons from other structures providing input to the striatum. The rotationMode lets us specify if the neurons should be left unrotated, or rotated in some manner. The volumeID defines which volume the neurons belong to.

The $DATA keyword is a shorthand for the snudda/data folder.

Configuring External Synaptic Input

The input spikes to the network are generated as prescribed in the input configuration file input.json in JSON format. Below we will give a simple example of how to set up input, and there are more examples available on Github in the examples/notebooks directory.

In this example the dSPN will each receive 200 inputs, with 1 Hz Poisson random spikes. The configuration also specifies the conductance and the tmGlut mod file that is used by NEURON to simulate the input synapses.

To complement the cortical (Ctx) input with thalamic, add a second input block with parameters inside the dSPN target block and give it a name e.g. Thalamic. The entire dSPN population will then receive both cortical and thalamic inputs.

Only one target block is applied to each neuron. When Snudda generates input for the network it iterates through all the different neurons in the simulation and picks the most specific target block that matches that neuron. In the example below a dSPN with neuron ID 5 and morphology dSPN_0 will match all three blocks, but the neuron ID is most specific so the “5” block will be used. A dSPN_1 morphology neuron will only match the dSPN block and will use that.

In the dSPN target configuration the start, end and frequency are specified as vectors. Here the input is 4 Hz at 2–3 s, and 2 Hz at 5–7 s. We can also use population units to specify heterogenous external input to the target volume, see examples/notebooks on Github.

To create advanced inputs not supported by Snudda the custom spike times can be read from a CSV file (with one spike train per row) by using “generator”: “csv” and “csvFile”:“path/to/your/csvfile”.

A more complex example using additional input generation functionality is given below. The type defines what sort of input the synapses form, e.g. AMPA_NMDA or GABA. The number of inputs to each neuron can either be defined directly using nInputs or indirectly by specifying the density of inputs synapseDensity along the dendrites. If both parameters are given, the code will use the density but scale it so that the nInputs are created. There is also an optional parameterFile that can be used to define a set of parameters for the synaptic channel.

The populationCorrelation describes how correlated the Poisson input is that is generated by mixing a shared mother process (each spike is included with probability P = sqrt(C)) and a number of independent child processes (inclusion probability 1 - P) to get the resulting input trains (Hjorth et al., 2009).

We also include the functionality of virtual neurons, which are neurons that are not simulated, instead their activity is driven by a predefined spike train. This can be used to model, for example, the activation of reconstructed cortical axons in the striatum, which after touch detection will drive the neurons they connect to.

When using synapse density to place excitatory input onto the neurons, larger neurons will receive more input than smaller neurons of the same type. However, size does not necessarily correlate with excitability of the neuron, or steepness of the I-V curve which depends on intrinsic channels. To handle this variation of excitability Snudda allows the user to scale the number of synapses reaching a neuron, a process which in a real network might be regulated by neuronal homeostatic processes.

What Happens under the Hood?

For each volume modelled the cell placement is restricted to be inside the mesh specified. The neurons are placed one by one, with coordinates randomly sampled from a uniform distribution. If a neuron position is inside the mesh, and there are no other neurons within a distance dMin from it, the position is accepted. To avoid an artificial increase of neuron density at the border, the neuron positions placed outside the mesh are also tracked. These padding positions are not counted towards the total, and are discarded afterwards. Orientation of the neurons in the striatum is completely random, but it is possible to specify other ways to sample the orientation.

For touch detection the space is divided into voxels of 3 μm side length (Fig. 3b). Synapses are only detected when axon and dendrite are present in the same voxel. The maximal interaction distance is thus decided by the voxel size. To parallelise the touch detection the voxels are grouped into hypervoxels, containing 1003 voxels each. The mouse dorsal striatum occupies about 26.2 mm3 (https://mouse.brain-map.org/), and contains almost 2 million neurons (Rosen & Williams, 2001). The first step is to identify which neurons belong to which hypervoxels. For a large portion of the neurons they will be present in more than one hypervoxel. This procedure is done in parallel where the worker nodes of the parallel computer get allocated a subset of the neurons and based on the vertex coordinates of the neurites calculate which hypervoxel the neurons are in. The results are gathered, creating a list of neurons for each hypervoxel. The hypervoxels are then sorted based on the number of neurons inside, and those with most neurons are processed first for better load balance. To perform the touch detection, a line parsing algorithm takes small steps Δx, Δy, Δz along all line segments of the dendrites, marking the voxels they intersect. The voxels contained within the soma are also marked. It then repeats the procedure for the axon line segments of the morphologies. Voxels that contain both axons and dendrites are considered to have a putative synapse if the two neuron types are allowed to have a connection between them (Fig. 3a, b).

The purpose of the touch detection is to find the potential locations where neurons can connect to each other based on the restrictions set by the morphologies. The result of the above touch detection is a set of putative synapses, which is larger than the set of actual synapses. In the pruning step, the set of putative synapses is reduced to match the connectivity statistics from experimental pairwise recordings.

In experiments it is common to report only the binning size and the number of connected neuron pairs, and the total number of pairs. It would be beneficial for circuit modelling if the distance for each pair was also recorded, we could then extract distance dependent connectivity profiles and compare those to what the computer models predict. The pruning is divided into multiple steps, described above. The touch detection for a cubic millimeter can be run in a couple of hours on a desktop, and the whole striatum can be created in a couple of hours on a supercomputer (Fig. 5). As an example, creating a striatal network with 10,000 neurons (6.4 million synapses and 1468 gap junctions) on a desktop Intel Xeon W-2133 CPU @ 3.60GHz with 6 cores and 64GB RAM took: init ~ 1 s, place 10 s, detect 8 min and prune 6 min. For 20,000 (50,000) neurons the corresponding times are ~1 s (~1 s), 16 s (32 s), 15 min (38 min), 12 min (36 min) to detect 14.3 million (39.9 million) synapses and 3462 (9395) gap junctions.

Snudda benchmarking on Tegner cluster at PDC/KTH. Each node has Intel E5-2690v3 Haswell with 2 × 12 cores and 512 GB RAM. a Runtime on one node (24 CPU cores) for different network sizes. b Runtime as a function of the number of CPUs when creating a network with 500,000 neurons, around 469 million synapses and a hundred thousand gap junctions. Place takes very little time compared to the other two phases and is barely visible at the bottom of the two graphs

In addition to JSON configuration files, the resulting network data is stored in HDF5 files.

The Challenge of Limited Morphology Data

A big challenge of the biologically detailed anatomically constrained simulations of the neural microcircuits is availability of the high quality morphological reconstructions of the main neuron types, in sufficient numbers and variability. Open public morphometric repositories, similar to ModelDB for models present in the world-wide web since 1996 (McDougal et al., 2017), were pioneered in 2006 by G. Ascoli with NeuroMorpho.Org (Akram et al., 2018; http://neuromorpho.org/) and continued by other research centers like Allen Brain Institute (Jones et al., 2009; https://portal.brain-map.org), Janelia Research Campus (Gerfen et al., 2018; Economo et al., 2019; http://mouselight.janelia.org/), eBRAINS Knowledge Graph (https://kg.ebrains.eu/), to name a few, have become increasingly popular among computational neuroscientists.

Single-cell morphological reconstructions vary in quality due to the difference in experimental procedures leading to varying degrees of physical integrity of the neurites, spatial resolution, tissue shrinkage, slicing, etc. Need for consistent quality assurance of morphological reconstructions facilitated development of morphology processing tools for morphometric measurements, data processing and error correction, such as L-measure (Scorcioni et al., 2008; http://cng.gmu.edu:8080/Lm), TREES toolbox (Cuntz et al., 2010; https://www.treestoolbox.org/), btmorph (Torben-Nielsen, 2014; https://bitbucket.org/btorb/btmorph) and NeuroM/NeuroR (Anwar et al., 2009; https://github.com/BlueBrain/NeuroM;https://github.com/BlueBrain/NeuroR). Here we will illustrate typical use cases of manipulating morphological data on the example of a small Python module treem (https://github.com/a1eko/treem), developed by the authors in conjunction with Snudda as a complementary instrument to above mentioned packages.

Module Treem provides data structure and command-line tools for accessing and manipulating the digital reconstructions of the neuron morphology in Stockley-Wheal-Cannon format, SWC (Cannon et al., 1998). Access to morphological data from the source code is supported by several Python classes. Common operations with SWC files are possible from the user-written scripts or via the command-line tool swc. For the detailed description of the user interface, see API and CLI references in the online documentation (https://treem.readthedocs.io).

A common reconstruction error is so called “z-jump” (Brown et al., 2011) when a part of the neurite gets shifted along the z-axis by a few micrometers as shown in Fig. 6a (top panel). These can result from an accumulated error during the manual reconstruction or as a mistake in automatic procedure. Possible z-jumps can be eliminated in Treem by the repair command using one of the four methods, align, split, tilt or join, as illustrated in Fig. 6a. Choice of the repair method as well as the assessment of the result should ideally be left to the author of the reconstructed data; if this is not possible the preference is given to the method which better preserves cell symmetry.

Repairing digital reconstruction of the neuron morphology. a Correcting “z-jump” reconstruction errors (top panel). Dots illustrate reconstructed points, soma is black, dendrites are blue, the orange dot labels the node at the point of presumed discontinuity. Four correction methods implemented in Treem (Python module treem) are shown below (align, split, tilt and join). b Repairing the dendrites cut at the slice border. Orange dots in a 3D plot show the cut points of the dendrites. Red lines in 2D projections show “repaired” dendrites, i.e. extended neurites using undamaged reconstructions of the same topological order as the cut branches

Since neuronal tissue can shrink due to dehydration during histological preparation, correction factors are required before a reconstructed morphology can enter the simulation pipeline. Shrinkage correction involves scaling of the entire reconstruction in (x, y)-plane, expansion in z-direction, as well as decreasing contraction of selected neurites, e.g. dendrites, by stretching along their principal axes (termed “unravelling” in Markram et al., 2015) or length-preserving spatial filtering (not shown, see online documentation).

Another important omission is that the neurons located close to the slice surface often have their neurites cut and thus missing in the digital reconstruction. Cut neurites can be replaced using the intact branches of the same topological order from the inner part of the slice, assuming spherical or axial symmetry of the neuron morphology as shown in Fig. 6b. In Treem this is achieved with the repair command (see online documentation for the example commands to reproduce Fig. 6b).

One of the aspects of the large-scale simulations is realistic variability of the model parameters mimicking the natural spread of morpho-electric characteristics in live neurons. To enforce variability in the simulation based on the limited number of reconstructed neurons, we apply random manipulations to the morphological reconstructions. Examples of the length-preserving modifications implemented in Treem are shown in Fig. 7a. Methods jitter, twist and rotate do not change the length of the dendritic branches and thus do not affect electrophysiological features of the optimized models but help to recover spatial symmetry of the morphological reconstructions as shown in Fig. 7b. To distribute excitability of the single-cell models, digital reconstructions can be scaled randomly in 3D, as was done in the large-scale simulation by Hjorth et al. (2020) and illustrated in the online documentation of Treem (https://treem.readthedocs.io).

Adding variability to the morphological reconstructions. a Examples of modification methods used in Treem (modifications preserving the total length are shown). b Distribution of the coordinates of the dendritic terminations of the fast-spiking interneurons at different stages of morphology processing - original reconstructions (n = 3), repaired reconstructions (n = 3) and “jittered”, i.e. randomly manipulated reconstructions, nine variants per each cell (n = 27). Here, only random twisting of the dendritic branches at the bifurcation points was applied which proved to be sufficient to restore the symmetry

Discussion

In this paper we show how to use our open source modelling pipeline to build microcircuit models. An important goal is that the model building process should be transparent and possible to reproduce by other labs, and the model should be extendable when new data accumulate. The pipeline is developed for setting up large-scale simulations of subcortical nuclei, such as striatum. In our current pipeline, based on the software Snudda, neurons can be placed in a defined volume, and then prediction of the location of synapses (as well as gap junctions) can be made using neuron morphologies and the specified pruning rules. Also synaptic data for short-term synaptic plasticity or failure rates can be represented. Finally a simulation using the NEURON simulation environment can be launched. A challenge when using cellular level data from public databases is that sometimes the data for the reconstructed neuronal morphologies only include soma and dendrites, missing the axon entirely. Therefore Snudda supports the prediction of synapses using different approaches. If the detailed morphology is available, the reconstructed axons and dendrites can be used to constrain which neurons are within reach of one another. If the axon is missing, the user can instead specify an axonal density which is then used for the synapse detection. Also our pipeline provides the opportunity to ‘repair’ the dendritic morphologies, and we have illustrated ways to do this using the software Treem. In addition, Treem can provide jittering of morphological parameters to increase the variability in the modelled population of neurons, which is useful to avoid artefacts when there are too few available morphologies for each neuron type. The current version of Snudda does not treat spines separately from the rest of the dendrites, a future improvement would be to allow the user to specify requirements to target spines separately, e.g. if spines are already specified in the reconstruction data.

Models of neocortical microcircuits have been built with similar approaches (see above), however, not all elements of their workflow were available or open-source at the time of Snudda development. We believe that our open source pipeline might become useful when building biophysically detailed microcircuit models of other subcortical brain regions, such as the other basal ganglia nuclei.

When using the current modelling workflow, it is assumed that one has a collection of quantitatively detailed neuron models for each neuron type to be used in the modelled microcircuit. Such neuron models might come from public databases (see above). But most likely several of the neuron types in the selected microcircuit to be modelled might have to be built from scratch. Here the challenges are several as described above. Data on electrophysiological recordings published might be incomplete in such a way that only a few selected traces, as shown in the published manuscripts, exist. Also transcriptional, electrophysiological and morphological data might come from different experiments. Ideally, however, it would be best to have recordings from the neurons that were morphologically reconstructed, such as in patch-seq technique (e.g. Fuzik et al., 2016). Although the electrophysiological properties are well studied, one might lack the knowledge of which ion conductances are expressed in the neurons. Fortunately, such data are starting to emerge, and for example, for striatum transcriptomics data already exist (Muñoz-Manchado et al., 2018; Ho et al., 2018; Gokce et al., 2016; Saunders et al., 2018). If one has a good hypothesis of which channels are expressed, characterisation as well as models are starting to be collected at resources such as the Channelpedia (Ranjan et al., 2011; http://channelpedia.net) and Ion Channel Genealogy (Podlaski et al., 2017; https://icg.neurotheory.ox.ac.uk/). Although still not trivial, if one has both the morphology and electrophysiological data of a particular neuron type, workflows have already been developed for optimizing neuron models (Van Geit et al., 2016; Migliore et al., 2018; Masoli et al., 2020).

A natural future goal would, however, be to link microcircuit models built in different labs, e.g. a cortical microcircuit connected to a striatal microcircuit. Then interoperability between models as well as model specification, such as SONATA, would be crucial. We have on our road map for Snudda to support the SONATA standard (Dai et al., 2020) and work has already started on it to become interoperable with the EBRAINS infrastructure (https://ebrains.eu/).

Information Sharing Statement

The presented software Snudda (version 1.1; RRID:SCR_021210) and Treem (version 1.0.0, DOI:https://doi.org/10.5281/zenodo.4890845) are available on GitHub and PyPI:

Snudda - https://github.com/Hjorthmedh/Snudda, https://pypi.org/project/snudda/

Treem - https://github.com/a1eko/Treem, https://pypi.org/project/Treem/

Data Availability

Data used for single-cell neuron models as well as synaptic connectivity within the example network of the striatal microcircuit is available on GitHub (in the snudda/data and examples folders at https://github.com/hjorthmedh/Snudda).

References

Akar, N. A. et al. (2019). Arbor — A morphologically-detailed neural network simulation library for contemporary high-performance computing architectures. 2019 27th Euromicro International Conference on Parallel, Distributed and Network-Based Processing (PDP), Pavia, Italy, 2019, pp. 274–282. https://doi.org/10.1109/EMPDP.2019.8671560.

Akram, M., Nanda, S., Maraver, P., Armañanzas, R., & Ascoli, G. A. (2018). An open repository for single-cell reconstructions of the brain forest. Sci Data, 5, 180006. https://doi.org/10.1038/sdata.2018.6.

Amunts, K., Knoll, A. C., Lippert, T., Pennartz, C. M. A., Ryvlin, P., Destexhe, A., Jirsa, V. K., D’Angelo, E., & Bjaalie, J. G. (2019). The human brain project-synergy between neuroscience, computing, informatics, and brain-inspired technologies. PLoS Biology, 17(7), e3000344. https://doi.org/10.1371/journal.pbio.3000344.

Anwar, H., Riachi, I., Schürmann, F., & Markram H. (2009). An approach to capturing neuron morphological diversity. In Computational neuroscience: Realistic modeling for experimentalists. De Schutter E., editor. (Cambridge: The MIT Press) 211–232. https://doi.org/10.7551/mitpress/9780262013277.003.0010.

Berthet, P., Lindahl, M., Tully, P. J., Hellgren-Kotaleski, J., & Lansner, A. (2016). Functional relevance of different basal ganglia pathways investigated in a spiking model with reward dependent plasticity. Frontiers in Neural Circuits., 10, 53. https://doi.org/10.3389/fncir.2016.00053.

Billeh, Y. N., Cai, B., Gratiy, S. L., Dai, K., Iyer, R., Gouwens, N. W., Abbasi-Asl, R., Jia, X., Siegle, J. H., Olsen, S. R., Koch, C., Mihalas, S., & Arkhipov, A. (2020). Systematic integration of structural and functional data into multi-scale models of mouse primary visual cortex. Neuron, 106(3), 388–403.e18. https://doi.org/10.1016/j.neuron.2020.01.040.

Brown, K. M., Barrionuevo, G., Canty, A. J., De Paola, V., Hirsch, J. A., Jefferis, G. S., Lu, J., Snippe, M., Sugihara, I., & Ascoli, G. A. (2011). The DIADEM data sets: Representative light microscopy images of neuronal morphology to advance automation of digital reconstructions. Neuroinformatics, 9(2–3), 143–157. https://doi.org/10.1007/s12021-010-9095-5.

Cannon, R. C., Turner, D. A., Pyapali, G. K., & Wheal, H. V. (1998). An on-line archive of reconstructed hippocampal neurons. Journal of Neuroscience Methods, 84(1–2), 49–54. https://doi.org/10.1016/s0165-0270(98)00091-0.

Carnevale, T., & Hines, M. (2006). The NEURON book (p. 2006). Cambridge University Press. https://doi.org/10.1017/CBO9780511541612.

Casali, S., Marenzi, E., Medini, C., Casellato, C., & D’Angelo, E. (2019). Reconstruction and simulation of a scaffold model of the cerebellar network. Frontiers in Neuroinformatics, 13, 37. https://doi.org/10.3389/fninf.2019.00037.

Cizeron, M., Qiu, Z., Koniaris, B., Gokhale, R., Komiyama, N. H., Fransén, E., & Grant, S. G. N. (2020). A brainwide atlas of synapses across the mouse life span. Science., 369(6501), 270–275. https://doi.org/10.1126/science.aba3163.

Colangelo, C., Shichkova, P., Keller, D., Markram, H., & Ramaswamy, S. (2019). Cellular, synaptic and network effects of acetylcholine in the neocortex. Frontiers in Neural Circuits, 13, 24. https://doi.org/10.3389/fncir.2019.00024.

Cuntz, H., Forstner, F., Borst, A., & Häusser, M. (2010). One rule to grow them all: A general theory of neuronal branching and its practical application. PLoS Computational Biology, 6(8), e1000877. https://doi.org/10.1371/journal.pcbi.1000877.

Dai, K., Hernando, J., Billeh, Y. N., Gratiy, S. L., Planas, J., Davison, A. P., Dura-Bernal, S., Gleeson, P., Devresse, A., Dichter, B. K., Gevaert, M., King, J. G., van Geit, W. A. H., Povolotsky, A. V., Muller, E., Courcol, J. D., & Arkhipov, A. (2020). The SONATA data format for efficient description of large-scale network models. PLoS Computational Biology, 16(2), e1007696. https://doi.org/10.1371/journal.pcbi.1007696.

Economo, M. N., Winnubst, J., Bas, E., Ferreira, T. A., & Chandrashekar, J. (2019). Single-neuron axonal reconstruction: The search for a wiring diagram of the brain. The Journal of Comparative Neurology, 527(13), 2190–2199. https://doi.org/10.1002/cne.24674.

Einevoll, G. T., Destexhe, A., Diesmann, M., Grün, S., Jirsa, V., de Kamps, M., Migliore, M., Ness, T. V., Plesser, H. E., & Schürmann, F. (2019). The scientific case for brain simulations. Neuron., 102(4), 735–744. https://doi.org/10.1016/j.neuron.2019.03.027.

Fuzik, J., Zeisel, A., Máté, Z., Calvigioni, D., Yanagawa, Y., Szabó, G., Linnarsson, S., & Harkany, T. (2016). Integration of electrophysiological recordings with single-cell RNA-seq data identifies neuronal subtypes. Nature Biotechnology, 34(2), 175–183. https://doi.org/10.1038/nbt.3443.

Gerfen, C. R., Economo, M. N., & Chandrashekar, J. (2018). Long distance projections of cortical pyramidal neurons. Journal of Neuroscience Research, 96(9), 1467–1475. https://doi.org/10.1002/jnr.23978.

Gleeson, P., Crook, S., Cannon, R. C., Hines, M. L., Billings, G. O., Farinella, M., Morse, T. M., Davison, A. P., Ray, S., Bhalla, U. S., Barnes, S. R., Dimitrova, Y. D., & Silver, R. A. (2010). NeuroML: A language for describing data driven models of neurons and networks with a high degree of biological detail. PLoS Computational Biology, 6(6), e1000815. https://doi.org/10.1371/journal.pcbi.1000815.

Gokce, O., Stanley, G. M., Treutlein, B., Neff, N. F., Camp, J. G., Malenka, R. C., Rothwell, P. E., Fuccillo, M. V., Sudhof, T. C., & Quake, S. R. (2016). Cellular taxonomy of the mouse striatum as revealed by single-cell RNA-Seq. Cell Reports, 16(4), 1126–1137. https://doi.org/10.1016/j.celrep.2016.06.059.

Gratiy, S. L., Billeh, Y. N., Dai, K., Mitelut, C., Feng, D., Gouwens, N. W., Cain, N., Koch, C., Anastassiou, C. A., & Arkhipov, A. (2018). BioNet: A Python interface to NEURON for modeling large-scale networks. PLoS ONE, 13(8), e0201630. https://doi.org/10.1371/journal.pone.0201630.

Grillner, S., Ip, N., Koch, C., Koroshetz, W., Okano, H., Polachek, M., Poo, M. M., & Sejnowski, T. J. (2016). Worldwide initiatives to advance brain research. Nature Neuroscience, 19(9), 1118–1122. https://doi.org/10.1038/nn.4371.

Gurney, K., Prescott, T. J., & Redgrave, P. (2001). A computational model of action selection in the basal ganglia. II Analysis and simulation of behaviour. Biological Cybernetics, 84(6), 411–423. https://doi.org/10.1007/PL00007985.

Hellwig, B. (2000). A quantitative analysis of the local connectivity between pyramidal neurons in layers 2/3 of the rat visual cortex. Biological Cybernetics, 82(2), 111–121. https://doi.org/10.1007/pl00007964.

Hepburn, I., Chen, W., Wils, S., & De Schutter, E. (2012). STEPS: Efficient simulation of stochastic reaction-diffusion models in realistic morphologies. BMC Systems Biology, 6, 36. https://doi.org/10.1186/1752-0509-6-36.

Hines, M. L., Davison, A. P., & Muller, E. (2009). NEURON and Python. Frontiers in Neuroinformatics, 3, 1. https://doi.org/10.3389/neuro.11.001.2009.

Hjorth, J., Blackwell, K. T., & Kotaleski, J. H. (2009). Gap junctions between striatal fast-spiking interneurons regulate spiking activity and synchronization as a function of cortical activity. The Journal of Neuroscience, 29(16), 5276–5286. https://doi.org/10.1523/jneurosci.6031-08.2009.

Hjorth, J. J. J., Kozlov, A., Carannante, I., Frost Nylén, J., Lindroos, R., Johansson, Y., Tokarska, A., Dorst, M. C., Suryanarayana, S. M., Silberberg, G., Hellgren Kotaleski, J., & Grillner, S. (2020). The microcircuits of striatum in silico. Proceedings of the National Academy of Sciences of the United States of America, 117(17), 9554–9565. https://doi.org/10.1073/pnas.2000671117.

Ho, H., Both, M. D., Siniard, A., Sharma, S., Notwell, J. H., Wallace, M., Leone, D. P., Nguyen, A., Zhao, E., Lee, H., Zwilling, D., Thompson, K. R., Braithwaite, S. P., Huentelman, M., & Portmann, T. (2018). A guide to single-cell transcriptomics in adult rodent brain: The medium spiny neuron transcriptome revisited. Frontiers in Cellular Neuroscience, 12, 159. https://doi.org/10.3389/fncel.2018.00159.

Humphries, M. D., Wood, R., & Gurney, K. (2009). Dopamine-modulated dynamic cell assemblies generated by the GABAergic striatal microcircuit. Neural Networks, 22(8), 1174–1188. https://doi.org/10.1016/j.neunet.2009.07.018.

Insel, T. R., Landis, S. C., & Collins, F. S. (2013). Research priorities. The NIH BRAIN initiative. Science., 340(6133), 687–688. https://doi.org/10.1126/science.1239276.

Jones, A. R., Overly, C. C., & Sunkin, S. M. (2009). The Allen brain atlas: 5 years and beyond. Nature Reviews. Neuroscience, 10(11), 821–828. https://doi.org/10.1038/nrn2722.

Jordan, J., Helias, M., Diesmann, M., & Kunkel, S. (2020). Efficient communication in distributed simulations of spiking neuronal networks with gap junctions. Frontiers in Neuroinformatics, 14, 12. https://doi.org/10.3389/fninf.2020.00012.

Kanari, L., Ramaswamy, S., Shi, Y., Morand, S., Meystre, J., Perin, R., Abdellah, M., Wang, Y., Hess, K., & Markram, H. (2019). Objective morphological classification of neocortical pyramidal cells. Cerebral Cortex, 29(4), 1719–1735. https://doi.org/10.1093/cercor/bhy339.

Kumbhar, P., Hines, M., Fouriaux, J., Ovcharenko, A., King, J., Delalondre, F., & Schürmann, F. (2019). CoreNEURON : An optimized compute engine for the NEURON simulator. Frontiers in Neuroinformatics, 13, 63. https://doi.org/10.3389/fninf.2019.00063.

Lindahl, M., & Hellgren Kotaleski, J. (2017). Untangling basal ganglia network dynamics and function: role of dopamine depletion and inhibition investigated in a spiking network model. eNeuro, 3(6), ENEURO.0156-16.2016. https://doi.org/10.1523/ENEURO.0156-16.2016.

Markram, H., Muller, E., Ramaswamy, S., Reimann, M. W., Abdellah, M., Sanchez, C. A., Ailamaki, A., Alonso-Nanclares, L., Antille, N., Arsever, S., Kahou, G. A. A., Berger, T. K., Bilgili, A., Buncic, N., Chalimourda, A., Chindemi, G., Courcol, J. D., Delalondre, F., Delattre, V., Druckmann, S., Dumusc, R., Dynes, J., Eilemann, S., Gal, E., Gevaert, M. E., Ghobril, J. P., Gidon, A., Graham, J. W., Gupta, A., Haenel, V., Hay, E., Heinis, T., Hernando, J. B., Hines, M., Kanari, L., Keller, D., Kenyon, J., Khazen, G., Kim, Y., King, J. G., Kisvarday, Z., Kumbhar, P., Lasserre, S., le Bé, J. V., Magalhães, B. R. C., Merchán-Pérez, A., Meystre, J., Morrice, B. R., Muller, J., Muñoz-Céspedes, A., Muralidhar, S., Muthurasa, K., Nachbaur, D., Newton, T. H., Nolte, M., Ovcharenko, A., Palacios, J., Pastor, L., Perin, R., Ranjan, R., Riachi, I., Rodríguez, J. R., Riquelme, J. L., Rössert, C., Sfyrakis, K., Shi, Y., Shillcock, J. C., Silberberg, G., Silva, R., Tauheed, F., Telefont, M., Toledo-Rodriguez, M., Tränkler, T., van Geit, W., Díaz, J. V., Walker, R., Wang, Y., Zaninetta, S. M., DeFelipe, J., Hill, S. L., Segev, I., & Schürmann, F. (2015). Reconstruction and simulation of neocortical microcircuitry. Cell, 163(2), 456–492. https://doi.org/10.1016/j.cell.2015.09.029.

Masoli, S., Tognolina, M., Laforenza, U., Moccia, F., & D'Angelo, E. (2020). Parameter tuning differentiates granule cell subtypes enriching transmission properties at the cerebellum input stage. Communications Biology, 3(1), 222. https://doi.org/10.1038/s42003-020-0953-x.

McDougal, R. A., Morse, T. M., Carnevale, T., Marenco, L., Wang, R., Migliore, M., Miller, P. L., Shepherd, G. M., & Hines, M. L. (2017). Twenty years of ModelDB and beyond: Building essential modeling tools for the future of neuroscience. Journal of Computational Neuroscience, 42(1), 1–10. https://doi.org/10.1007/s10827-016-0623-7.

Migliore, R., Lupascu, C. A., Bologna, L. L., Romani, A., Courcol, J.-D., Antonel, S., van Geit, W. A. H., Thomson, A. M., Mercer, A., Lange, S., Falck, J., Rössert, C. A., Shi, Y., Hagens, O., Pezzoli, M., Freund, T. F., Kali, S., Muller, E. B., Schürmann, F., Markram, H., & Migliore, M. (2018). The physiological variability of channel density in hippocampal CA1 pyramidal cells and interneurons explored using a unified data-driven modeling workflow. PLoS Computational Biology, 14(9), e1006423. https://doi.org/10.1371/journal.pcbi.1006423.

Muñoz-Manchado, A. B., Bengtsson Gonzales, C., Zeisel, A., Munguba, H., Bekkouche, B., Skene, N. G., Lönnerberg, P., Ryge, J., Harris, K. D., Linnarsson, S., & Hjerling-Leffler, J. (2018). Diversity of interneurons in the dorsal striatum revealed by single-cell RNA sequencing and PatchSeq. Cell Rep, 24(8), 2179–2190.e7. https://doi.org/10.1016/j.celrep.2018.07.053.

Okano, H., Miyawaki, A., & Kasai, K. (2015). Brain/MINDS: Brain-mapping project in Japan. Philosophical Transactions of the Royal Society of London. Series B, Biological Sciences, 370(1668), 20140310. https://doi.org/10.1098/rstb.2014.0310.

Plesser, H., Diesmann, M., Gewaltig, M., & Morrison, A. (2015). Nest: The neural simulation tool. In D. Jaeger & R. Jung (Eds.), Encyclopedia of computational neuroscience (pp. 1849–1852). Springer New York.

Podlaski, W. F., Seeholzer, A., Groschner, L. N., Miesenböck, G., Ranjan, R., & Vogels, T. P. (2017). Mapping the function of neuronal ion channels in model and experiment. Elife., 6, e22152. https://doi.org/10.7554/elife.22152.

Ranjan, R., Khazen, G., Gambazzi, L., Ramaswamy, S., Hill, S. L., Schürmann, F., & Markram, H. (2011). Channelpedia: An integrative and interactive database for ion channels. Frontiers in Neuroinformatics, 5, 36. https://doi.org/10.3389/fninf.2011.00036.

Ray, S., & Bhalla, U. S. (2008). PyMOOSE: Interoperable scripting in Python for MOOSE. Frontiers in Neuroinformatics, 2, 6. https://doi.org/10.3389/neuro.11.006.2008.

Reimann, M. W., King, J. G., Muller, E. B., Ramaswamy, S., & Markram, H. (2015). An algorithm to predict the connectome of neural microcircuits. Frontiers in Computational Neuroscience, 9, 120. https://doi.org/10.3389/fncom.2015.00120.

Rosen, G. D., & Williams, R. W. (2001). Complex trait analysis of the mouse striatum: Independent QTLs modulate volume and neuron number. BMC Neuroscience, 2, 5. https://doi.org/10.1186/1471-2202-2-5.

Santuy, A., Tomás-Roca, L., Rodríguez, J. R., González-Soriano, J., Zhu, F., Qiu, Z., Grant, S. G. N., DeFelipe, J., & Merchan-Perez, A. (2020). Estimation of the number of synapses in the hippocampus and brain-wide by volume electron microscopy and genetic labeling. Scientific Reports, 10, 14014. https://doi.org/10.1038/s41598-020-70859-5.

Saunders, A., Macosko, E. Z., Wysoker, A., Goldman, M., Krienen, F. M., de Rivera, H., Bien, E., Baum, M., Bortolin, L., Wang, S., Goeva, A., Nemesh, J., Kamitaki, N., Brumbaugh, S., Kulp, D., & McCarroll, S. A. (2018). Molecular diversity and specializations among the cells of the adult mouse brain. Cell, 174(4), 1015–1030.e16. https://doi.org/10.1016/j.cell.2018.07.028.

Scorcioni, R., Polavaram, S., & Ascoli, G. (2008). L-measure: A web-accessible tool for the analysis, comparison and search of digital reconstructions of neuronal morphologies. Nature Protocols, 3, 866–876. https://doi.org/10.1038/nprot.2008.51.

Sudhakar, S. K., Hong, S., Raikov, I., Publio, R., Lang, C., Close, T., Guo, D., Negrello, M., & De Schutter, E. (2017). Spatiotemporal network coding of physiological mossy fiber inputs by the cerebellar granular layer. PLoS Computational Biology, 13, e1005754. https://doi.org/10.1371/journal.pcbi.1005754.

Torben-Nielsen, B. (2014). An efficient and extendable Python library to analyze neuronal morphologies. Neuroinformatics, 12(4), 619–622. https://doi.org/10.1007/s12021-014-9232-7.

Van Geit, W., Gevaert, M., Chindemi, G., Rössert, C., Courcol, J., Muller, E. B., Schürmann, F., Segev, I., & Markram, H. (2016). BluePyOpt: Leveraging open source software and cloud infrastructure to optimise model parameters in neuroscience. Frontiers in Neuroinformatics, 10, 17. https://doi.org/10.3389/fninf.2016.00017.

Wichert, I., Jee, S., De Schutter, E., & Hong, S. (2020). Pycabnn: Efficient and extensible software to construct an anatomical basis for a physiologically realistic neural network model. Frontiers in Neuroinformatics, 14, 31. https://doi.org/10.3389/fninf.2020.00031.

Wilkinson, M. D., Dumontier, M., Aalbersberg, I. J., Appleton, G., Axton, M., Baak, A., Blomberg, N., Boiten, J. W., da Silva Santos, L. B., Bourne, P. E., Bouwman, J., Brookes, A. J., Clark, T., Crosas, M., Dillo, I., Dumon, O., Edmunds, S., Evelo, C. T., Finkers, R., Gonzalez-Beltran, A., Gray, A. J. G., Groth, P., Goble, C., Grethe, J. S., Heringa, J., ’t Hoen, P. A. C., Hooft, R., Kuhn, T., Kok, R., Kok, J., Lusher, S. J., Martone, M. E., Mons, A., Packer, A. L., Persson, B., Rocca-Serra, P., Roos, M., van Schaik, R., Sansone, S. A., Schultes, E., Sengstag, T., Slater, T., Strawn, G., Swertz, M. A., Thompson, M., van der Lei, J., van Mulligen, E., Velterop, J., Waagmeester, A., Wittenburg, P., Wolstencroft, K., Zhao, J., & Mons, B. (2016). The FAIR Guiding Principles for scientific data management and stewardship [published correction appears in Sci Data. 2019 Mar 19;6(1):6]. Sci Data, 3, 160018. https://doi.org/10.1038/sdata.2016.18.

Williams, T. J., Balakrishnan, R., Delalondre, F., Schuermann, F., Muller, E., &Gewaltig, M. O. (2018). Large-Scale Simulation of Brain Tissue, Blue Brain Project, EPFL. United States: N. p., 2018. Web. https://doi.org/10.2172/1483995.

Acknowledgements

The simulations were performed on resources provided by the Swedish National Infrastructure for Computing at PDC (Center for Parallel Computing). We acknowledge the use of Fenix Research Infrastructure resources, which are partially funded from the European Union’s Horizon 2020 research and innovation programme through the ICEI project under the grant agreement No. 800858. The authors wish to thank Sten Grillner, Johanna Frost-Nylén, Robert Lindroos and Ilaria Carannante for helpful discussions. We also thank Robin de Schepper, Kadri Pajo, and Wilhelm Thunberg for help with software compatibility.

Code Availability

(see Information Sharing Statement)

Funding

Open access funding provided by Royal Institute of Technology. Horizon 2020 Framework Programme (785907, HBP SGA2); Horizon 2020 Framework Programme (945539, HBP SGA3); Vetenskapsrådet (VR-M-2017-02806, VR-M-2020-01652); Swedish e-science Research Center (SeRC); KTH Digital Futures.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflicts of interest/Competing Interests

The authors have no relevant financial or non-financial interests to disclose.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hjorth, J.J.J., Hellgren Kotaleski, J. & Kozlov, A. Predicting Synaptic Connectivity for Large-Scale Microcircuit Simulations Using Snudda. Neuroinform 19, 685–701 (2021). https://doi.org/10.1007/s12021-021-09531-w

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s12021-021-09531-w