Abstract

Background

The burden of clinical documentation in electronic health records (EHRs) has been associated with physician burnout. Numerous tools (e.g., note templates and dictation services) exist to ease documentation burden, but little evidence exists regarding how physicians use these tools in combination and the degree to which these strategies correlate with reduced time spent on documentation.

Objective

To characterize EHR note composition strategies, how these strategies differ in time spent on notes and the EHR, and their distribution across specialty types.

Design

Secondary analysis of physician-level measures of note composition and EHR use derived from Epic Systems’ Signal data warehouse. We used k-means clustering to identify documentation strategies, and ordinary least squares regression to analyze the relationship between documentation strategies and physician time spent in the EHR, on notes, and outside scheduled hours.

Participants

A total of 215,207 US-based ambulatory physicians using the Epic EHR between September 2020 and May 2021.

Main Measures

Percent of note text derived from each of five documentation tools: SmartTools, copy/paste, manual text, NoteWriter, and voice recognition and transcription; average total and after-hours EHR time per visit; average time on notes per visit.

Key Results

Six distinct note composition strategies emerged in cluster analyses. The most common strategy was predominant SmartTools use (n=89,718). In adjusted analyses, physicians using primarily transcription and dictation (n=15,928) spent less time on notes than physicians with predominant SmartTools use (b=−1.30, 95% CI=−1.62, −0.99, p<0.001; average 4.8 min per visit), while those using mostly copy/paste (n=23,426) spent more time on notes (b=2.38, 95% CI=1.92, 2.84, p<0.001; average 13.1 min per visit).

Conclusions

Physicians’ note composition strategies have implications for both time in notes and after-hours EHR use, suggesting that how physicians use EHR-based documentation tools can be a key lever for institutions investing in EHR tools and training to reduce documentation time and alleviate EHR-associated burden.

INTRODUCTION

Despite known benefits,1,2,3 electronic health records (EHRs) have been associated with increased clerical burden and, in some cases, have had detrimental effects on clinician experience. For example, more time spent using the EHR (particularly after hours) is associated with a higher risk of burnout,4,5 and physicians who use an EHR are more likely to be dissatisfied with the amount of time spent on clerical tasks.6 It is difficult to discern to what extent this is related to the EHR itself versus the work physicians have taken on as EHR use has become more widespread. Prior research has demonstrated significant differences in total and after-hours time spent on the EHR across specialties,7 with primary care physicians spending significantly more time on the EHR than their medical or surgical counterparts. There is also significant physician-level variation in EHR documentation time within specialty types.8

Multiple tools exist to assist clinicians with documentation. These include copying and pasting text from other parts of the EHR, templated text (known as SmartTools or “dot-phrases”), click-based graphical user interfaces for text, and voice transcription software. Prior research across two health systems found increased odds of burnout at the highest quartile of SmartTools use and decreased odds at the highest quartile of Copy/Paste use.9 Other work has linked copy/paste use to increased note length and redundancy10 and to the presence of inconsistencies and outdated information in documentation.11 While it is known that physicians combine several note composition tools to write clinical notes, there is no evidence describing the most common combinations of tools. This gap has in turn precluded research into how common note composition strategies relate to physician time spent in the EHR, and how use of these strategies varies by physician specialty type. In the face of mounting evidence of EHR-driven burnout, understanding which note composition strategies are associated with less EHR time is critically important to policymakers, health system leaders, and EHR vendors seeking to improve physician well-being.

To address this gap, our study used EHR metadata for more than 200,000 ambulatory physicians to explore three research questions. First, we explored what common patterns exist in note composition strategies at the provider level. Specifically, we examined how providers combine different note composition tools (e.g., manual text and templated text) to draft clinical notes, and what combinations (or clusters, which we term “note composition strategies”) are the most prevalent. Second, we explored the relationship between distinct strategies and physician time spent in the EHR. Finally, we analyzed how these clusters are distributed across physician specialty types. Our findings provide important insight into physician-level variation in approaches to note composition and can help to inform organizational efforts to reduce physician EHR burden.

METHODS

Sample

Data for this study were derived from a national database of all physicians who used Epic’s EHR in an ambulatory setting from September 2020 through May 2021. We derived our primary study measures from weekly, physician-level measures available through the Epic Signal data warehouse.3,4,5 These data were available for 215,207 physicians in 391 health systems, representing the vast majority of Epic’s US ambulatory user-base. All physicians and organizations were de-identified prior to receipt of the data.

Measures

Note Composition

We created five measures for use in cluster analyses to characterize the composition of physician notes. Each measure captured physician-level averages of the proportion of note text attributable to each of five note composition sources: Manual Entry; SmartTools (templated text frequently referred to as “dot-phrases”); NoteWriter (a click-based documentation tool); Copy/Paste; and a combined measure capturing characters from both Transcription and Voice Recognition.

For example, a 2000-character note might be composed of 50% (1000 characters) SmartTools text, 25% (500 characters) manually entered text, and 25% (500 characters) copied-and-pasted text. Although different physician populations may use transcription rather than voice recognition, both methods capture conceptually similar EHR workflows: each places an intermediary (a transcription service or voice-to-text software) between the physician and the note. This similarity in process, together with the low across-physician variation in these two tools, led us to combine them into a single measure in our classifications.
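The composition measure can be illustrated with a short Python sketch (the study itself used R). It computes each note's share of characters by source, merges transcription and voice recognition into one category as described above, and averages the shares per physician. The input format (one dictionary of character counts per note) and the source labels are hypothetical; Signal reports these measures already aggregated, and whether its physician-level averages weight notes equally or by length is an assumption here.

```python
from __future__ import annotations

from collections import defaultdict

# Hypothetical source labels for the five composition measures; transcription
# and voice recognition are folded into one combined category.
SOURCES = ("manual", "smarttools", "notewriter", "copypaste", "transcription_voice")
MERGED = {"transcription": "transcription_voice", "voice_recognition": "transcription_voice"}


def note_proportions(char_counts: dict[str, int]) -> dict[str, float]:
    """Share of one note's characters attributable to each source."""
    merged: dict[str, int] = defaultdict(int)
    for source, n_chars in char_counts.items():
        key = MERGED.get(source, source)
        if key not in SOURCES:
            raise ValueError(f"unknown note source: {source}")
        merged[key] += n_chars
    total = sum(merged.values())
    if total == 0:
        raise ValueError("note contains no characters")
    return {s: merged[s] / total for s in SOURCES}


def physician_average(notes: list[dict[str, int]]) -> dict[str, float]:
    """Unweighted mean of per-note proportions across one physician's notes."""
    per_note = [note_proportions(n) for n in notes]
    return {s: sum(p[s] for p in per_note) / len(per_note) for s in SOURCES}


# Worked example from the text: a 2000-character note.
example = note_proportions({"smarttools": 1000, "manual": 500, "copypaste": 500})
print(example)  # smarttools 0.5, manual 0.25, copypaste 0.25, others 0.0
```

An unweighted mean treats every note equally; weighting by character count would give long notes more influence, a design choice the source does not specify.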

Time-Based Measures

We divided weekly time spent by physicians on notes, in total on the EHR, and after hours on the EHR by the number of weekly physician visits to develop physician-level, per-visit averages of active time on notes, total active time on the EHR, and after-hours active time on the EHR. Normalizing by visits adjusts for differences in visit volume across physicians. In Epic’s Signal database, “after-hours” active time (also known as “Time Outside Scheduled Hours”) is defined as any active time that falls outside a window extending from 30 min before the physician’s first visit of the day to 30 min after the final visit. Epic defines “active time” within the EHR as time during which a user is performing active tasks;13 if no activity is detected for 5 s, the system stops counting time. This measurement allows our data to capture actual EHR work while excluding time a clinician spends with the EHR open but performing other tasks.12 Given this construction of active time, these measures may underestimate true EHR work time, as clinicians often spend time reading notes or otherwise performing EHR tasks without directly interacting with the system.13,14 Because documentation support personnel, such as scribes, typically work under a separate user login, their contributions to a physician’s documentation are not accurately discernible via audit logs. It is possible, however, to see what proportion of note text was contributed by a non-physician user.
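The time measures can be sketched as follows, assuming a simplified, hypothetical event log of activity timestamps (seconds since midnight) for one physician-day. Two rules come from the text above: time stops accruing after 5 s without activity, and after-hours time is active time outside a window running from 30 min before the first scheduled visit to 30 min after the last. How Epic actually segments activity into intervals is not described in the source, so the interval logic here is an assumption, not Signal's implementation.

```python
from __future__ import annotations

IDLE_THRESHOLD_S = 5.0      # stop accruing time after 5 s without activity
BUFFER_S = 30 * 60.0        # 30-minute buffer around the scheduled day


def active_intervals(events: list[float], idle_s: float = IDLE_THRESHOLD_S):
    """Yield (start, end) gaps between consecutive activity events that are
    short enough to count as active time (a simplifying assumption)."""
    ts = sorted(events)
    for start, end in zip(ts, ts[1:]):
        if end - start <= idle_s:
            yield start, end


def overlap(a0: float, a1: float, b0: float, b1: float) -> float:
    """Length of the overlap between intervals [a0, a1] and [b0, b1]."""
    return max(0.0, min(a1, b1) - max(a0, b0))


def day_active_time(events, first_visit_start, last_visit_end):
    """Return (total_active_s, after_hours_s) for one physician-day.

    After-hours time is active time outside the window from 30 min before
    the first scheduled visit to 30 min after the last one.
    """
    win_start, win_end = first_visit_start - BUFFER_S, last_visit_end + BUFFER_S
    total = after_hours = 0.0
    for start, end in active_intervals(events):
        length = end - start
        total += length
        after_hours += length - overlap(start, end, win_start, win_end)
    return total, after_hours


def per_visit(weekly_minutes: float, weekly_visits: int) -> float:
    """Normalize a weekly time total by the week's visit count."""
    return weekly_minutes / weekly_visits if weekly_visits else float("nan")


# Visits scheduled 09:00-17:00 (seconds since midnight); hypothetical events.
events = [32400, 32403, 32406, 32420, 70000, 70004]
print(day_active_time(events, 32400, 61200))  # (10.0, 4.0)
print(per_visit(323, 34))                      # 9.5
```

In this toy log the 14-s pause after 09:00:06 is not counted, and the 4 s of activity at about 19:26 fall outside the buffered window, so they count as after-hours time. A hypothetical physician with 323 active minutes on notes in a week of 34 visits would average 9.5 minutes per visit.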

Physician and Organization Control Variables

Consistent with previous work examining differences in Epic Signal measures across specialty types, physicians included in our analysis were grouped into primary care, medical specialties, or surgical specialties.7 Physician specialty groupings are provided in Appendix 1. We used several measures to describe physician case load and complexity, which may influence both note length and EHR time. These included average number of weekly visits, average new characters per note, and the percent of weekly new and established patient evaluation and management (E/M) visits billed at each of E/M levels 1 through 5. Consistent with prior work,15 the only organizational-level data available through Signal’s data warehouse were organizational structure (ambulatory-only; hospital and clinic facilities; other) and US census region;16 this restriction helps preserve de-identification of the Epic Signal data.

Analysis

To address our first research question exploring the note composition strategies that most commonly occur among physicians, we employed K-means cluster analyses with our five proportional measures of note composition per text source (e.g., percent of note text attributable to manual text, percent of note text attributable to copy/paste, etc.). Because a priori note composition categories for classifying physicians do not exist, this method allowed us to identify clusters of physicians with similar note composition styles. K-means cluster analysis is an unsupervised machine learning approach that partitions observations of continuous variables into a prespecified number of clusters: starting from randomly chosen initial cluster centers, it iteratively assigns each observation to the nearest center and recomputes each center as the mean of its assigned observations until assignments stabilize.17,18 In doing so, the algorithm seeks to minimize the within-cluster sum of squares, thus assigning similar observations to the same cluster. We tested solutions with three to ten clusters to determine the number at which discriminant validity was maximized while preserving the interpretability of clusters. Clustering was important because physicians use multiple tools to varying degrees, which limits the utility of analytic models that regress EHR and note time measures on raw character counts of note content from different sources.
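For readers unfamiliar with k-means, the sketch below implements Lloyd's algorithm, the standard k-means iteration, in Python with NumPy (the study used R). Each physician is a point whose five coordinates are the note composition proportions; the algorithm alternates between assigning points to the nearest centroid and recomputing centroids, keeping the restart with the lowest within-cluster sum of squares (WSS). The synthetic Dirichlet-distributed data, the number of restarts, and the seeds are illustrative assumptions.

```python
import numpy as np


def kmeans(X, k, n_init=10, max_iter=100, seed=0):
    """Lloyd's k-means; returns (labels, centroids, within-cluster SS)."""
    rng = np.random.default_rng(seed)
    best = None
    for _ in range(n_init):
        # Start from k distinct observations chosen at random.
        centroids = X[rng.choice(len(X), size=k, replace=False)].copy()
        for _ in range(max_iter):
            # Assignment step: nearest centroid by squared Euclidean distance.
            d2 = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
            labels = d2.argmin(axis=1)
            # Update step: each centroid moves to the mean of its members
            # (an empty cluster keeps its previous centroid).
            new = np.array([X[labels == j].mean(axis=0) if np.any(labels == j)
                            else centroids[j] for j in range(k)])
            if np.allclose(new, centroids):
                break
            centroids = new
        wss = float(((X - centroids[labels]) ** 2).sum())
        if best is None or wss < best[2]:  # keep the best restart
            best = (labels, centroids, wss)
    return best


# Synthetic stand-in for physician-level composition proportions
# (5 columns, each row sums to 1); not study data.
rng = np.random.default_rng(42)
demo = np.vstack([rng.dirichlet(alpha, size=300) for alpha in
                  ([20, 2, 1, 1, 1], [2, 20, 1, 1, 1], [1, 1, 1, 1, 20])])
for k in range(3, 11):  # the study compared 3 to 10 clusters
    print(k, round(kmeans(demo, k)[2], 3))
```

The optimal WSS can only fall as k grows, so it has to be weighed against interpretability rather than minimized outright, which is the trade-off the authors describe.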

With note composition strategy clusters identified, we calculated descriptive statistics for all demographic, time, and practice pattern measures for the entire sample and then within each note composition strategy cluster. Chi-square tests were used to describe differences in specialty type, US census region, and organization type across note composition clusters. Wilcoxon rank sum tests were used to characterize differences in average weekly visits, note length, time on notes per visit, total EHR time per visit, and after-hours EHR time per visit across clusters.
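The chi-square comparisons of categorical characteristics across clusters rest on Pearson's statistic for an r × c contingency table. A minimal NumPy sketch follows; the counts in the example are made up for illustration and are not study data.

```python
import numpy as np


def chi_square_independence(table):
    """Pearson chi-square statistic and degrees of freedom for an r x c
    table of counts (e.g., specialty type by note composition cluster)."""
    observed = np.asarray(table, dtype=float)
    n = observed.sum()
    # Expected counts under independence: row total * column total / N.
    expected = np.outer(observed.sum(axis=1), observed.sum(axis=0)) / n
    stat = float(((observed - expected) ** 2 / expected).sum())
    dof = (observed.shape[0] - 1) * (observed.shape[1] - 1)
    return stat, dof


# Made-up counts: rows = primary care, medical, surgical;
# columns = six note composition clusters.
counts = [[300, 120, 60, 200, 220, 40],
          [250, 260, 90, 230, 60, 70],
          [220, 110, 50, 150, 40, 110]]
stat, dof = chi_square_independence(counts)
print(f"chi-square = {stat:.1f} on {dof} df")
```

A p-value follows from the chi-square distribution with `dof` degrees of freedom, for example via `scipy.stats.chi2.sf(stat, dof)`; `scipy.stats.chi2_contingency` runs the whole test in one call (it applies a continuity correction by default when there is one degree of freedom).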

To analyze the relationship between note composition cluster and time spent in the EHR, we regressed our three dependent variables (average time in notes, total time in the EHR, and after-hours time in the EHR, each measured in minutes per visit) on note composition cluster, adjusting for average note length, provider specialty, percent of providers’ visits billed at each E/M visit level, region, and organization type. E/M documentation requirements changed in January 2021, removing previously required note content, simplifying E/M level justification criteria, and enabling time-based billing for both face-to-face and other activities related to the patient visit; because these changes may have influenced notes, we also conducted a sensitivity analysis using 2021 data only. Finally, we conducted a sensitivity analysis additionally adjusting for the proportion of note text generated by a non-physician user (most often a scribe or other clinician acting as a scribe).
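The regression specification can be sketched as follows: each non-reference cluster enters as a 0/1 indicator, so its coefficient is the adjusted difference in minutes per visit relative to the SmartTools reference group. This Python/NumPy version is illustrative only. It uses simulated data with made-up coefficients and a single covariate (note length), whereas the study also adjusted for specialty, E/M level mix, region, and organization type, which would enter as additional columns.

```python
import numpy as np

# Cluster names as used in the Results; SmartTools is the reference group.
CLUSTERS = ["SmartTools", "CopyPaste-st", "ManualEntry-st", "SmartTools-cp",
            "SmartTools-NoteWriter", "Transcription-VoiceRec"]
REFERENCE = "SmartTools"


def design_matrix(labels, covariates):
    """Intercept, one 0/1 indicator per non-reference cluster, then covariates."""
    labels = np.asarray(labels)
    dummies = [(labels == c).astype(float) for c in CLUSTERS if c != REFERENCE]
    return np.column_stack([np.ones(len(labels)), *dummies, covariates])


def fit_ols(y, X):
    """Least-squares coefficients; each cluster coefficient is the adjusted
    difference in minutes per visit relative to the reference cluster."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta


# Synthetic demonstration with made-up effect sizes (not study estimates).
rng = np.random.default_rng(1)
n = 2000
labels = rng.choice(CLUSTERS, size=n)
note_length = rng.uniform(2000, 8000, size=n)          # characters per note
true_beta = np.array([3.0, 2.0, 6.0, 2.5, 1.0, -1.5, 0.001])
X = design_matrix(labels, note_length)
y = X @ true_beta + rng.normal(0, 0.5, size=n)          # minutes on notes per visit
beta = fit_ols(y, X)
names = ["(Intercept)"] + [c for c in CLUSTERS if c != REFERENCE] + ["note_length"]
for name, b in zip(names, beta):
    print(f"{name:>24s} {b:8.3f}")
```

Omitting the reference cluster's indicator avoids perfect collinearity with the intercept; choosing the largest cluster as reference, as the authors did, keeps the comparison group well estimated.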

All models used two-way clustered standard errors at the physician and organization levels, with an alpha of 0.05. All analyses were conducted in R version 3.6.0 using RStudio.19,20
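Two-way clustered standard errors are commonly computed with the Cameron, Gelbach, and Miller estimator, which adds the one-way cluster-robust variances for each dimension and subtracts the variance clustered on their intersection. The sketch below implements that formula in NumPy without small-sample corrections. Whether it matches the R implementation the authors used is an assumption, because the text does not name the package.

```python
import numpy as np


def cluster_meat(X, resid, groups):
    """Sum over clusters g of s_g s_g', where s_g = X_g' u_g."""
    meat = np.zeros((X.shape[1], X.shape[1]))
    for g in np.unique(groups):
        mask = groups == g
        s = X[mask].T @ resid[mask]
        meat += np.outer(s, s)
    return meat


def twoway_cluster_ols(X, y, groups_a, groups_b):
    """OLS coefficients with two-way cluster-robust standard errors
    (Cameron-Gelbach-Miller form, no small-sample adjustment)."""
    groups_a, groups_b = np.asarray(groups_a), np.asarray(groups_b)
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    bread = np.linalg.inv(X.T @ X)
    # Label each observation by its (a, b) pair to form intersection clusters.
    pair_ids = {}
    groups_ab = np.array([pair_ids.setdefault(pair, len(pair_ids))
                          for pair in zip(groups_a.tolist(), groups_b.tolist())])
    meat = (cluster_meat(X, resid, groups_a) + cluster_meat(X, resid, groups_b)
            - cluster_meat(X, resid, groups_ab))
    vcov = bread @ meat @ bread
    # The two-way variance is not guaranteed positive semidefinite; clip at 0.
    se = np.sqrt(np.clip(np.diag(vcov), 0.0, None))
    return beta, se


# Hypothetical demo: one row per physician, physicians nested in 40 organizations.
rng = np.random.default_rng(7)
n = 1000
org = rng.integers(0, 40, size=n)
physician = np.arange(n)
org_effect = rng.normal(0, 1, size=40)[org]      # shared shock within organization
X = np.column_stack([np.ones(n), rng.normal(size=n)])
y = X @ np.array([5.0, 1.0]) + org_effect + rng.normal(size=n)
beta, se = twoway_cluster_ols(X, y, physician, org)
print(beta, se)
```

When each physician contributes a single row and belongs to one organization, the intersection clusters coincide with the physician clusters, so those two terms cancel and the estimate equals one-way clustering by organization.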

RESULTS

The sample included 215,207 physicians across all four US geographic regions (Table 1). Overall, 42.2% of physicians were medical specialists, 30.5% were primary care physicians, and 27.3% were surgical specialists. Among physicians, 88% practiced in a facility with both a hospital and a clinic. Physicians in the sample had a mean (SD) of 34 (26.7) visits per week and spent a mean (SD) of 9.5 (12.6) minutes per visit on notes. Mean (SD) total time and after-hours time on the EHR per visit were 27.2 (31.7) minutes and 4.4 (5.4) minutes, respectively.

Six note composition clusters emerged in our sample (Fig. 1). Those using a predominance of Copy Paste (CopyPaste-st) comprised 22.0% of the sample and composed an average of 59.4% of notes via Copy/Paste, with a smaller percentage via Smart Tools. Those who used a predominance of Manual Entry (ManualEntry-st) comprised 7.2% of the sample and composed an average of 55.2% of their notes via Manual Entry, with a smaller percentage via Smart Tools. Those who used predominantly Smart Tools (SmartTools) comprised 39.7% of the sample and composed more than 75% of their notes via the Smart Tool function. Those who used a predominance of Smart Tools with some Copy Paste (SmartTools-cp) comprised 22.2% of the sample. They composed over half their notes with Smart Tools and 28% via Copy/Paste. Physicians who used a combination of Smart Tools and Note Writer (SmartTools-NoteWriter) comprised 10.7% of the sample and wrote about 40% of their notes with each of Smart Tools and Note Writer. Finally, those physicians who used a predominance of Transcription and Voice Recognition (Transcription-VoiceRec) comprised 7.2% of the sample, with 64.3% of notes composed via these mechanisms.

Distributions of documentation strategy clusters varied by specialty (Fig. 2). Medical specialists had the highest proportion of physicians (24.2%) in the CopyPaste-st cluster. Surgical specialists had the highest proportion of physicians using Transcription/Voice Recognition (12.5%), with 43.8% using predominantly Smart Tools. Over half of primary care clinicians used predominantly Smart Tools, with 22.2% in the SmartTools-NoteWriter cluster.

As seen in Table 1, physicians in the SmartTools-NoteWriter cluster had the most visits per week on average (mean (SD): 43.6 (27.0) visits), while those in the ManualEntry-st cluster had the fewest (mean (SD): 21.0 (22.6) visits). On average, physicians in the CopyPaste-st cluster wrote the longest notes (mean (SD): 6775.4 (5174.5) characters), while those in the ManualEntry-st cluster wrote the shortest notes (mean (SD): 3434.4 (2773.5) characters). Unadjusted analyses demonstrated that physicians in the Transcription-VoiceRec cluster spent the least time per visit on notes, in total on the EHR, and after hours on the EHR, while physicians in the ManualEntry-st cluster spent the most time on each of these measures. The Transcription-VoiceRec cluster was more represented in the Midwest, while the ManualEntry-st and CopyPaste-st clusters were relatively more common in the West.

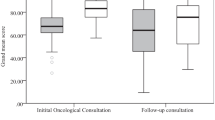

Significant differences in note time, total EHR time, and after-hours EHR time across the six note composition clusters persisted in regression analyses adjusting time estimates for specialty, US census region, weekly visits, note length, and organization type. All models used the SmartTools cluster (39.7% of sample) as the reference group. As shown in Figure 3, relative to physicians in the SmartTools cluster, physicians in the ManualEntry-st cluster spent 7.4 more minutes in notes per visit (95% CI: 6.84, 7.95; p<0.001), 17.8 more total minutes in the EHR per visit (95% CI: 16.4, 19.1; p<0.001), and 3 more minutes after hours per visit (95% CI: 2.76, 3.21; p<0.001). Meanwhile, physicians in the SmartTools-cp and CopyPaste-st clusters each spent about 2.5 minutes more on notes per visit than physicians who used predominantly SmartTools (SmartTools-cp: β: 2.5, 95% CI: 2.3, 2.8, p<0.001; CopyPaste-st: β: 2.4, 95% CI: 1.9, 2.8, p<0.001). Detailed regression results are shown in Appendix Table 2.

As depicted in Figure 4, these differences translated to physicians using predominantly Transcription/Voice Recognition spending an average of 4.8 minutes per visit on notes. In contrast, physicians in the ManualEntry-st or CopyPaste-st clusters spent an average of 14.1 minutes on notes per visit, and physicians who used predominantly Smart Tools or Smart Tools in combination with other strategies spent between 8 and 12 minutes. Physicians using a predominance of Transcription/Voice Recognition spent an average of 19.4 minutes of total EHR time and 3.2 minutes of after-hours EHR time per visit. In contrast, physicians in the ManualEntry-st cluster spent an average of 39.3 minutes of total EHR time and 6.1 minutes of after-hours EHR time per visit, while those in the CopyPaste-st cluster spent 35.8 minutes of total EHR time and 6.3 minutes of after-hours EHR time per visit. Sensitivity analyses using only 2021 data did not differ significantly from the main analysis (Appendix Table 3). Differences between clusters also persisted in analyses adjusting for the percent of note text generated by a non-physician user (Appendix Table 4).

DISCUSSION

In this national cross-sectional study, we identified six documentation strategy clusters across ambulatory physicians. These patterns were associated with major differences in time spent on notes per visit, total time on the EHR, and after-hours time on the EHR. The prevalence of these note composition clusters varied significantly by specialty, contributing to differential time spent on documentation and aggregate time spent on the EHR. These note composition patterns are likely associated with physician satisfaction and burnout, although we could not assess these relationships or the quality of the notes produced. These findings have implications for institutions investing in EHR tools and training aimed at improving the experience of EHR use. They are also relevant to the goal of reducing documentation burden on US clinicians by 75% by 2025,21 which makes identifying better strategies for clinical documentation a top priority.

Other studies have evaluated how documentation strategies relate to specialty and to note length and quality, albeit in much smaller samples.22,23,24 We have quantified the relationship between patterns of documentation strategies and time spent on the EHR in a national sample. Our findings suggest that while a small proportion of physicians currently use Transcription or Voice Recognition, these modalities may have significant efficiency benefits. In contrast, predominant use of Copy/Paste or Manual Entry, the modalities that require the least training, is associated with significantly longer note times and, ultimately, longer total and after-hours time on the EHR. Documentation strategies employing a combination of modalities or predominantly Smart Tools represent a middle ground, requiring more time than Transcription/Voice Recognition but less time than predominant use of Copy/Paste or Manual Entry. Our findings are notable given differences in time spent on the EHR across specialties,7,8 and linkages between time spent on the EHR and measures of burnout.4,5,25 Identifying which technologies can help physicians write notes efficiently is key to supporting physician satisfaction, reducing perceptions of clinical burden, and redirecting physicians’ time to activities they perceive as high value, including, perhaps most importantly, time spent with patients.26

Our findings also shed light on differences in note composition across specialties, and how these patterns interact with time spent on documentation and on the EHR in total. Among all specialties, medical specialists had the highest percent of notes derived from the Copy/Paste function. Medical specialists may rely more heavily on clinical documentation to convey their reasoning and provide valuable consultation to colleagues, which may drive heavier use of Copy/Paste, particularly for including laboratory and other test results in notes. Many subspecialists also use the note as a report of longitudinal data and employ Copy/Paste for this purpose. Yet prior work has shown that use of Copy/Paste is associated with increased note length, redundancy,10 and documentation inaccuracies.27 Taken together, these findings suggest that reducing use of Copy/Paste while providing more efficient alternatives to clinicians whose work relies heavily on clinical documentation and on review of large quantities of longitudinal data may both shorten documentation time and improve the quality of clinical notes.

Use of Transcription and Voice Recognition was low across the study sample, despite these modalities being associated with the lowest adjusted average time spent on documentation and on the EHR. Notably, use of dictation technology has been associated with decreased use of Copy/Paste and fewer documentation errors.28 Prior work has shown that the number of speech recognition users declines after initial adoption to a more stable core set of users, corresponding with a “trough of disillusionment” in the adoption of new technologies.29 This suggests that the learning curve associated with voice recognition technologies could be a focus for technological innovation and training.

Additionally, Transcription and Voice Recognition technologies may be optimized to meet the needs of certain specialties, in particular those with large quantities of stereotyped notes, such as some surgical specialties. In our sample, use of these technologies among surgical specialists was almost twice that of medical specialists and four times that of primary care physicians. In the future, speech recognition and natural language processing solutions that can detect and document the contents of physician-patient conversations, which multiple companies are developing, may expand use of Voice Recognition technologies. Ideally, artificial intelligence capabilities in these solutions would augment physicians’ clinical reasoning and diagnostic and therapeutic choices rather than obscure them.30,31

Limitations

This study has several limitations. We used data from only one EHR, although Epic is the most widely implemented ambulatory EHR in the USA.32 As noted earlier, the assessments of time generated by this approach are conservative, because they count only active use. We are unable to link notes to encounter types, or to comment on the quality or content of documentation. We could not assess in-office or workflow solutions (e.g., scribes) that physicians may have in place to reduce documentation burden; however, when we included additional controls for the proportion of note text contributed by a scribe or other non-physician team member, our multivariable model results were similar. Additionally, to preserve data de-identification, limited organizational characteristics were available from Signal’s data warehouse, reducing our ability to adjust for organizational-level factors that may influence note composition patterns. Our analysis cannot estimate a causal impact of note composition patterns on EHR time; it may be that physicians who are facile with the EHR choose more efficient note composition patterns, rather than note composition patterns enabling physician facility with the EHR. Future research should focus on identifying causal estimates, either through randomized trials or through natural experiments such as evaluations of EHR training initiatives or the introduction of new note composition tools.

CONCLUSION

In this cross-sectional national study of physician EHR documentation, we found that different note composition strategies were strongly associated with time spent on notes and with total and after-hours time spent on the EHR. Use of these strategies varies substantially by specialty, particularly by medical versus surgical specialty. Greater use of Transcription and Voice Recognition is associated with lower note and EHR time, while predominant use of Manual Entry and Copy/Paste is associated with greater note and EHR time. Future studies should aim to characterize reasons for differential use of these strategies by specialty, study interventions that promote uptake of strategies associated with lower EHR time, and examine associations between use of more efficient strategies and physician well-being.

References

1. Lin SC, Jha AK, Adler-Milstein J. Electronic health records associated with lower hospital mortality after systems have time to mature. Health Aff (Millwood). 2018;37(7):1128-1135.

2. Poon EG, Wright A, Simon SR, et al. Relationship between use of electronic health record features and health care quality: results of a statewide survey. Med Care. 2010;48(3):203-209.

3. Cebul RD, Love TE, Jain AK, Hebert CJ. Electronic health records and quality of diabetes care. N Engl J Med. 2011;365(9):825-833.

4. Adler-Milstein J, Zhao W, Willard-Grace R, Knox M, Grumbach K. Electronic health records and burnout: time spent on the electronic health record after hours and message volume associated with exhaustion but not with cynicism among primary care clinicians. J Am Med Inform Assoc. 2020;27(4):531. https://doi.org/10.1093/JAMIA/OCZ220

5. Peccoralo LA, Kaplan CA, Pietrzak RH, Charney DS, Ripp JA. The impact of time spent on the electronic health record after work and of clerical work on burnout among clinical faculty. J Am Med Inform Assoc. 2021;28(5):938-947.

6. Shanafelt TD, Dyrbye LN, Sinsky C, et al. Relationship between clerical burden and characteristics of the electronic environment with physician burnout and professional satisfaction. Mayo Clin Proc. 2016;91(7):836-848.

7. Rotenstein LS, Holmgren AJ, Downing NL, Bates DW. Differences in total and after-hours electronic health record time across ambulatory specialties. JAMA Intern Med. 2021;181(6):863-865.

8. Rotenstein LS, Holmgren AJ, Downing NL, Longhurst CA, Bates DW. Differences in clinician electronic health record use across adult and pediatric primary care specialties. JAMA Netw Open. 2021;4(7):e2116375.

9. Hilliard RW, Haskell J, Gardner RL. Are specific elements of electronic health record use associated with clinician burnout more than others? J Am Med Inform Assoc. 2020;27(9):1401-1410.

10. Rule A, Bedrick S, Chiang MF, Hribar MR. Length and redundancy of outpatient progress notes across a decade at an academic medical center. JAMA Netw Open. 2021;4(7):e2115334.

11. O’Donnell HC, Kaushal R, Barrón Y, Callahan MA, Adelman RD, Siegler EL. Physicians’ attitudes towards copy and pasting in electronic note writing. J Gen Intern Med. 2009;24(1):63-68.

12. Holmgren AJ, Downing NL, Bates DW, et al. Assessment of electronic health record use between US and non-US health systems. JAMA Intern Med. 2021;181(2):251-259.

13. Baxter SL, Apathy N, Cross DA, Sinsky C, Hribar M. Measures of electronic health record use in outpatient settings across vendors. J Am Med Inform Assoc. 2021;28(5):955-959.

14. Melnick ER, Ong SY, Fong A, et al. Characterizing physician EHR use with vendor derived data: a feasibility study and cross-sectional analysis. J Am Med Inform Assoc. 2021;28(7):1383-1392.

15. Downing NL, Bates DW, Longhurst CA. Physician burnout in the electronic health record era: are we ignoring the real cause? Ann Intern Med. 2018;169(1):50-51.

16. United States Census Bureau. Geographic Levels. Census.gov. Accessed August 25, 2022. https://www.census.gov/programs-surveys/economic-census/guidance-geographies/levels.html

17. James G, Witten D, Hastie T, Tibshirani R. An Introduction to Statistical Learning: With Applications in R. Springer; 2013.

18. Likas A, Vlassis N, Verbeek JJ. The global k-means clustering algorithm. Pattern Recognit. 2003;36(2):451-461. https://doi.org/10.1016/S0031-3203(02)00060-2

19. RStudio: Open source & professional software for data science teams. Accessed November 7, 2021. https://www.rstudio.com/

20. R: The R Project for Statistical Computing. Accessed November 7, 2021. https://www.r-project.org/

21. Report from the 25 By 5: Symposium Series to Reduce Documentation Burden on U.S. Clinicians by 75% by 2025. Accessed December 11, 2021. https://www.dbmi.columbia.edu/wp-content/uploads/2021/08/25x5_Executive_Summary.pdf

22. Pollard SE, Neri PM, Wilcox AR, et al. How physicians document outpatient visit notes in an electronic health record. Int J Med Inform. 2013;82(1):39-46. https://doi.org/10.1016/j.ijmedinf.2012.04.002

23. Neri PM, Volk LA, Samaha S, et al. Relationship between documentation method and quality of chronic disease visit notes. Appl Clin Inform. 2014;5(2):480-490. https://doi.org/10.4338/ACI-2014-01-RA-0007

24. Edwards ST, Neri PM, Volk LA, Schiff GD, Bates DW. Association of note quality and quality of care: a cross-sectional study. BMJ Qual Saf. 2014;23(5):406-413. https://doi.org/10.1136/bmjqs-2013-002194

25. Nguyen OT, Jenkins NJ, Khanna N, et al. A systematic review of contributing factors of and solutions to electronic health record–related impacts on physician well-being. J Am Med Inform Assoc. 2021;28(5):974-984.

26. Tai-Seale M, Olson CW, Li J, et al. Electronic health record logs indicate that physicians split time evenly between seeing patients and desktop medicine. Health Aff (Millwood). 2017;36(4):655-662.

27. Roddy JT, Arora VM, Chaudhry SI, et al. The prevalence and implications of copy and paste: internal medicine program director perspectives. J Gen Intern Med. 2018;33(12):2032-2033.

28. Al Hadidi S, Upadhaya S, Shastri R, Alamarat Z. Use of dictation as a tool to decrease documentation errors in electronic health records. J Community Hosp Intern Med Perspect. 2017;7(5):282-286.

29. Fernandes J, Brunton I, Strudwick G, Banik S, Strauss J. Physician experience with speech recognition software in psychiatry: usage and perspective. BMC Res Notes. 2018;11(1):690.

30. Lin SY, Shanafelt TD, Asch SM. Reimagining clinical documentation with artificial intelligence. Mayo Clin Proc. 2018;93(5):563-565.

31. Lin SY, Mahoney MR, Sinsky CA. Ten ways artificial intelligence will transform primary care. J Gen Intern Med. 2019;34(8):1626-1630. https://doi.org/10.1007/s11606-019-05035-1

32. Top 10 Ambulatory EHR Vendors by 2019 Market Share. Definitive Healthcare. Accessed December 16, 2021. https://www.definitivehc.com/blog/top-ambulatory-ehr-systems

Supplementary Information

ESM 1

(DOCX 63 kb)

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Rotenstein, L.S., Apathy, N., Holmgren, A.J. et al. Physician Note Composition Patterns and Time on the EHR Across Specialty Types: a National, Cross-sectional Study. J GEN INTERN MED 38, 1119–1126 (2023). https://doi.org/10.1007/s11606-022-07834-5

DOI: https://doi.org/10.1007/s11606-022-07834-5