INTRODUCTION

A survey is a list of questions aiming to extract a set of desired data or opinions from a particular group of people.1 Surveys can be administered more quickly than some other methods of data gathering and facilitate data collection from a large number of participants. Numerous questions can be included in a survey, which allows for increased flexibility in evaluating several research areas, such as analysis of risk factors, treatment outcomes, disease trends, cost-effectiveness of care, and quality of life. Surveys can be conducted by phone, mail, face-to-face, or online using web-based software and applications. Online surveys can help reduce or prevent geographical dependence and increase the validity, reliability, and statistical power of the studies. Moreover, online surveys facilitate rapid survey administration as well as data collection and analysis.2

Surveys are frequently used in a variety of research areas. For example, a PubMed search of the keyword “survey” on January 7, 2021, generated over 1,519,000 results. These studies are used for a number of purposes, including but not limited to opinion polls, trend analyses, evaluation of policies, and measuring the prevalence of diseases.3,4,5,6,7,8,9,10,11,12 Although many surveys have been published in high-impact journals, comprehensive reporting guidelines for survey research are limited,13, 14 and substantial variability and inconsistency can be identified in the reporting of survey studies. Indeed, different studies have presented multiform patterns of survey designs and reported results in various non-systematic ways.15,16,17

Evidence-based tools developed by experts could help streamline particular procedures that authors could follow to create reproducible and higher quality studies.18,19,20 Research studies that have transparent and accurate reporting may be more reliable and could have a more significant impact on their potential audience.19 However, that is often not the case when it comes to reporting research findings. For example, Moher et al.20 reported that, although over 63,000 new studies are published in PubMed on a monthly basis, many publications face the problem of inadequate reporting. Given the lack of standardization and poor quality of reporting, the Enhancing the QUAlity and Transparency Of health Research (EQUATOR) Network was created to help researchers publish high-impact health research.20 Several important guidelines for various types of research studies have been created and listed on the EQUATOR website, including but not limited to the Consolidated Standards of Reporting Trials (CONSORT) for randomized controlled trials, Strengthening the Reporting of Observational studies in Epidemiology (STROBE) for observational studies, and Preferred Reporting Items for Systematic Reviews and Meta-analyses (PRISMA) for systematic reviews and meta-analyses. The introduction of the PRISMA checklist in 2009 led to a substantial increase in the quality of systematic reviews and is a good example of how poor reporting, biases, and unsatisfactory results can be significantly addressed by implementing and following a validated reporting guideline.21

SURGE22 and CHERRIES23 are frequently recommended for reporting of non-web and web-based surveys, respectively. However, a report by Turk et al. found that many items of the SURGE and CHERRIES guidelines (e.g., development, description, and testing of the questionnaire; advertisement and administration of the questionnaire; sample representativeness; response rates; informed consent; and statistical analysis) had been missed by authors. The authors therefore concluded that a single universal guideline is needed as a standard quality-reporting tool for surveys. Moreover, these guidelines were not developed using a structured approach. For example, CHERRIES, which was developed in 2004, lacks a comprehensive literature review and a Delphi exercise. These steps are crucial in developing guidelines as they help identify potential gaps and gather the opinions of different experts in the field.20, 24 While the SURGE checklist used a literature review to generate its items, it also lacks a Delphi exercise and is limited to self-administered postal surveys. There is also little information available about the experts involved in the development of these checklists. SURGE’s limited citations since its publication suggest that it is not commonly used by authors or recommended by journals. Furthermore, even after the development of these guidelines (SURGE and CHERRIES), there has been limited improvement in the reporting of surveys. For example, Li et al. reviewed 102 surveys in top nephrology journals, found that the quality of surveys was suboptimal, and highlighted the need for new reporting guidelines to improve reporting quality and increase transparency.25 Similarly, Shankar and Maturen found significant heterogeneity in the reporting of radiology surveys published in major radiology journals and suggested the need for guidelines to increase the homogeneity and generalizability of survey results.26 Duffett et al. also found several deficiencies in survey methodologies and reporting practices and suggested a need for establishing minimum reporting standards for survey studies.27 Similar concerns regarding the quality of surveys have been raised in other medical fields.28,29,30,31,32,33

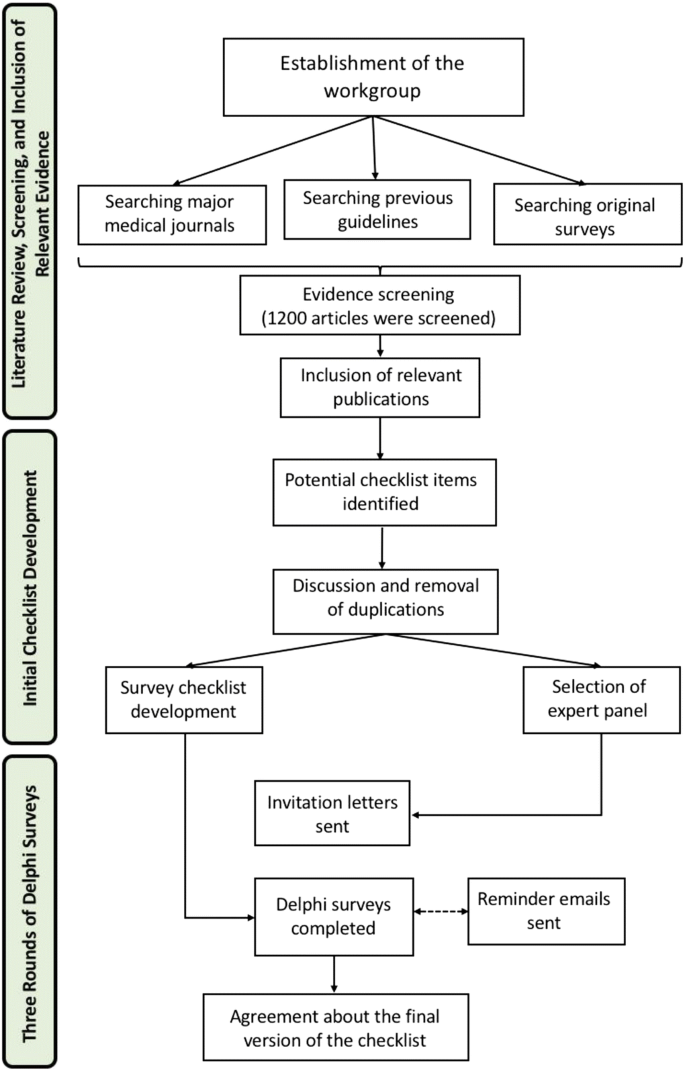

Because of concerns regarding survey quality and the lack of well-developed guidelines, there is a need for a single comprehensive tool that can be used as a standard reporting checklist for survey research to address significant discrepancies in the reporting of survey studies.13, 25,26,27,28, 31, 32 The purpose of this study was to develop a universal checklist for both web- and non-web-based surveys. Firstly, we established a workgroup to search the literature for potential items that could be included in our checklist. Secondly, we collected information about experts in the field of survey research and emailed them an invitation letter. Lastly, we conducted three rounds of rating using the Delphi method.

METHODS

Our study was performed from January 2018 to December 2019 using the Delphi method. This method is encouraged for use in scientific research as a feasible and reliable approach to reach final consensus among experts.34 The process of checklist development included five phases: (i) planning; (ii) drafting of checklist items; (iii) consensus building using the Delphi method; (iv) dissemination of guidelines; and (v) maintenance of guidelines.

Planning Phase

In the planning phase, we established a workgroup, secured resources, reviewed the existing reporting guidelines, and drafted the plan and timeline of our project. To facilitate the development of the Checklist for Reporting of Survey Studies (CROSS), a reporting checklist workgroup was set up. This workgroup had seven members from five countries. The expert panel members were identified by searching original survey-based studies published between January 2004 and December 2016. The experts were selected based on their number of high-impact and highly cited publications using survey research methods. Furthermore, members of the EQUATOR Network and contributors to the PRISMA checklist were involved. Panel members’ information, such as current affiliation, email address, and number of survey studies in which they had been involved, was collected through their ResearchGate profiles (see Supplement 1). Lastly, a list of potential panel members was created and an invitation letter was emailed to every expert to inquire about their interest in participating in our study. Consenting experts received a follow-up email with a detailed explanation of the research objectives and the Delphi approach.

Drafting the Checklist

This process generated a list of potential items that could be included in the checklist. This procedure included searching the literature for potential items to be considered for inclusion in the checklist, establishing a checklist based on those potential items, and revising the checklist. Firstly, we conducted a literature review to identify survey studies published in major medical journals and extracted relevant information for drafting our potential checklist items (see Supplement 2 for a sample search strategy). Secondly, we searched the EQUATOR Network for previously published checklists for reporting of survey studies. Thirdly, three teams of two researchers independently extracted the potential items that could be included in our checklist. Lastly, our group members worked together to revise the checklist and remove any duplicates (Fig. 1). We discussed the importance and relevance of each potential item and compared each of them to the selected literature.

Consensus Phase Using the Delphi Method

The first round of Delphi was conducted using SurveyMonkey (SurveyMonkey Inc., San Mateo, CA, USA; www.surveymonkey.com). An email was sent to the expert panel containing information about the Delphi process, the timeline of each Delphi phase, and a detailed overview of the project. A Likert scale was used for rating items from 1 (strongly disagree) to 5 (strongly agree). Experts were also encouraged to provide their comments, modify items, or propose new items that they felt should be included in the checklist. Nonresponding experts were sent weekly follow-up reminders. The main objectives of the first round were to identify unnecessary items and incomplete items in the survey checklist. A pre-set 70% agreement (70% of experts rating an item 4/5 or 5/5) was used as the cutoff for including an item in the final checklist.35 Items that did not reach the 70% agreement threshold were adjusted according to experts’ feedback and redistributed to the panelists for rerating in the second round, along with any newly added items. In this round, experts were also provided with their first-round scores so that they could modify or preserve their previous responses. Lastly, a third round of Delphi was launched to resolve any disagreements about the inclusion of items that did not reach consensus in the second round.
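The consensus rule described above can be illustrated with a minimal sketch. It assumes that the 70% cutoff is inclusive (an item with exactly 70% of ratings at 4 or 5 is accepted), which the text does not state explicitly. The item names and ratings are hypothetical and are not study data.

```python
from typing import Dict, List, Tuple

AGREEMENT_CUTOFF = 0.70  # pre-set threshold from the text; inclusive by assumption
AGREE_SCORES = {4, 5}    # ratings counted as agreement on the 5-point Likert scale


def agreement_fraction(ratings: List[int]) -> float:
    """Return the fraction of experts who rated the item 4 or 5."""
    if not ratings:
        raise ValueError("an item needs at least one rating")
    return sum(r in AGREE_SCORES for r in ratings) / len(ratings)


def split_by_consensus(
    round_ratings: Dict[str, List[int]],
) -> Tuple[List[str], List[str]]:
    """Separate items that reach the cutoff from items sent to the next round."""
    accepted, rerate = [], []
    for item, ratings in round_ratings.items():
        if agreement_fraction(ratings) >= AGREEMENT_CUTOFF:
            accepted.append(item)
        else:
            rerate.append(item)
    return accepted, rerate


# Hypothetical ratings from ten experts for two made-up items.
example_round = {
    "item_A": [5, 4, 4, 5, 3, 4, 5, 4, 2, 4],  # 8 of 10 experts agree
    "item_B": [3, 4, 2, 5, 3, 3, 4, 2, 4, 3],  # 4 of 10 experts agree
}
accepted, rerate = split_by_consensus(example_round)
print("accepted:", accepted)
print("rerate in next round:", rerate)
```

In this sketch, items in `rerate` would be revised according to expert feedback before the next round. That revision is a manual step and is not modelled here.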

RESULTS

A total of 24 experts with a median (Q1, Q3) of 20 (15.75, 31) years of research experience participated in our study. Overall, 24 items were selected in their original form in the first round, and 27 items were reviewed in the second round. Out of these 27 items, 10 items were merged into five, and 11 items were modified based on experts’ comments. In the second round, 24 experts participated and 18 items were finally included. Overall, 18 experts responded in the third round and only one additional item was included in this round.
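A median (Q1, Q3) summary like the one above can be computed as in the following sketch. The values are hypothetical, not the panelists' data, and the "inclusive" interpolation method is an assumption, because the text does not state how the quartiles were calculated. Different methods can give slightly different Q1 and Q3 values.

```python
import statistics

# Hypothetical years of research experience for eight panelists (not study data).
years = [8, 12, 15, 18, 22, 25, 31, 40]

median = statistics.median(years)
# quantiles(n=4) returns the three cut points Q1, Q2, Q3; the method is an assumption.
q1, _, q3 = statistics.quantiles(years, n=4, method="inclusive")

print(f"median (Q1, Q3): {median} ({q1}, {q3})")
```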

All details regarding the percentage agreement and mean and standard deviation (SD) of items included in the checklist are presented in Table 1. CROSS contains 19 sections with 40 different items, including “Title and abstract” (section 1); “Introduction” (sections 2 and 3); “Methods” (sections 4–10); “Results” (sections 11–13); “Discussion” (sections 14–16); and other items (sections 17–19). Please see Supplement 3 for the final checklist.
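The per-item statistics named above (percentage agreement, mean, and SD) could be derived from raw ratings as in this sketch. The ratings are hypothetical, and the use of the sample standard deviation (n − 1 denominator) is an assumption, since the text does not specify which form was reported.

```python
import statistics
from typing import Dict, List


def summarize_item(ratings: List[int]) -> Dict[str, float]:
    """Summarize one checklist item's 5-point Likert ratings."""
    agree = sum(r >= 4 for r in ratings)  # ratings of 4 or 5 count as agreement
    return {
        "agreement_pct": 100 * agree / len(ratings),
        "mean": statistics.mean(ratings),
        "sd": statistics.stdev(ratings),  # sample SD; an assumption
    }


# Hypothetical ratings from eight experts for a single item.
summary = summarize_item([5, 4, 4, 5, 3, 4, 5, 4])
print(
    f"agreement {summary['agreement_pct']:.1f}%, "
    f"mean {summary['mean']:.2f}, SD {summary['sd']:.2f}"
)
```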

DISCUSSION

The development of CROSS is the result of a literature review and a Delphi process that involved international experts with significant expertise in the development and implementation of survey studies. CROSS includes both evidence-informed and expert consensus-based items, which are intended to serve as a tool that helps improve the quality of survey studies.

Detailed descriptions of the methods and procedures used in developing this guideline are provided in this paper so that the quality of the checklist can be assessed by other scholars. Our Delphi respondents were a panel of experts with backgrounds in different disciplines. We also spent a considerable amount of time researching and debating the potential items to be included in our checklist. During the Delphi process, participants rated their agreement with each potential item on a 5-point Likert scale. Although the entire process was conducted electronically, we gathered data and feedback from the participants via email rather than through the Skype or face-to-face discussions suggested by the EQUATOR Network.13

In comparison to the CHERRIES or SURGE checklists, CROSS provides a single but comprehensive tool that is organized according to the typical primary sections required for peer-reviewed publications. It also assists researchers in developing a comprehensive research protocol prior to conducting a survey. The “Introduction” provides a clear overview of the aim of the survey. In the “Methods” section, our checklist provides a detailed explanation of initiating and developing the survey, including study design, data collection methods, sample size calculation, survey administration, study preparation, ethical considerations, and statistical analysis. The “Results” section of CROSS describes the respondent characteristics followed by the descriptive and main results, issues that are not discussed in the CHERRIES and SURGE checklists. Also, our checklist can be used for both non-web-based and web-based surveys and thus serves all types of survey-based studies. New items were added to our checklist to address the gaps in the available tools. For example, in item 10b, we included reports of any modification of variables. This can help researchers to justify, and readers to understand, why there was a need to modify the variables. In item 11b, we encourage researchers to state the reasons for non-participation at each stage. Publishing these reasons can be useful for future researchers intending to conduct a similar survey. Finally, we have added components related to limitations, interpretation, and generalizability of study results to the “Discussion” section, which is an important step toward increasing transparency and external validity. These components are missing from previous checklists (i.e., CHERRIES and SURGE).

Dissemination and Maintenance of the Checklist

Following the consensus phase, we will publish our checklist statement together with a detailed Explanation and Elaboration (E&E) document in which an in-depth explanation of the scientific rationale for each recommendation will be provided. To disseminate our final checklist widely, we aim to promote it in various journals, make it easily available on multiple websites including EQUATOR, and disseminate it through presentations at relevant conferences if necessary. We will also use social media to reach certain demographics as well as key persons in research organizations who regularly conduct surveys in different specialties. We also aim to seek endorsement of CROSS by journal editors, professional societies, and researchers, and to collect feedback from scholars about their experience.

Incorporating comments, critiques, and suggestions from experts when revising and correcting our guidelines could help maintain the relevance of the checklist. Lastly, we are planning to publish CROSS in several non-English languages to increase its accessibility across the scientific community.

Limitations

We acknowledge the limitations of our study. First, the use of the Delphi consensus method may involve some subjectivity in interpreting experts’ responses and suggestions. Second, six experts were lost to follow-up. Nonetheless, we think our checklist could improve the quality of the reporting of survey studies. Similar to other reporting checklists, CROSS needs to be re-evaluated and revised over time to ensure it remains relevant and up-to-date with evolving research methodologies of survey studies. We therefore welcome feedback, comments, critiques, and suggestions for improvement from the research community.

CONCLUSIONS

We think CROSS has the potential to be a beneficial resource to researchers who are designing and conducting survey studies. Following CROSS before and during survey administration could help researchers ensure that their surveys are sufficiently reliable, reproducible, and transparent.

References

Wikipedia contributors. (2020). Survey (human research). In Wikipedia, The Free Encyclopedia. Retrieved 19:59, December 26, 2020, from https://en.wikipedia.org/w/index.php?title=Survey_(human_research)&oldid=994953597.

Maymone MBC, Venkatesh S, Secemsky E, Reddy K, Vashi NA. Research Techniques Made Simple: Web-Based Survey Research in Dermatology: Conduct and Applications. J Invest Dermatol 2018;138(7):1456-1462. doi: https://doi.org/10.1016/j.jid.2018.02.032.

Alcock I, White MP, Pahl S, Duarte-Davidson R, Fleming LE. Associations Between Pro-environmental Behaviour and Neighbourhood Nature, Nature Visit Frequency and Nature Appreciation: Evidence from a Nationally Representative Survey in England. Environ Int 2020;136:105441. doi: https://doi.org/10.1016/j.envint.2019.105441.

Siddiqui J, Brown K, Zahid A, Young CJ. Current Practices and Barriers to Referral for Cytoreductive Surgery and HIPEC Among Colorectal Surgeons: a Binational Survey. Eur J Surg Oncol 2020;46(1):166-172. doi: https://doi.org/10.1016/j.ejso.2019.09.007

Lee JG, Park CH, Chung H, Park JC, Kim DH, Lee BI, Byeon JS, Jung HY. Current Status and Trend in Training for Endoscopic Submucosal Dissection: a Nationwide Survey in Korea. PLoS One 2020;15(5):e0232691. doi: https://doi.org/10.1371/journal.pone.0232691

McChesney SL, Zelhart MD, Green RL, Nichols RL. Current U.S. Pre-Operative Bowel Preparation Trends: a 2018 Survey of the American Society of Colon and Rectal Surgeons Members. Surg Infect 2020;21(1):1-8. doi: https://doi.org/10.1089/sur.2019.125

Núñez A, Manzano CA, Chi C. Health Outcomes, Utilization, and Equity in Chile: an Evolution from 1990 to 2015 and the Effects of the Last Health Reform. Public Health 2020;178:38-48. doi: https://doi.org/10.1016/j.puhe.2019.08.017

Blackwell AKM, Kosīte D, Marteau TM, Munafò MR. Policies for Tobacco and E-Cigarette Use: a Survey of All Higher Education Institutions and NHS Trusts in England. Nicotine Tob Res 2020;22(7):1235-1238. doi: https://doi.org/10.1093/ntr/ntz192

Liu S, Zhu Y, Chen W, Wang L, Zhang X, Zhang Y. Demographic and Socioeconomic Factors Influencing the Incidence of Ankle Fractures, a National Population-Based Survey of 512187 Individuals. Sci Rep 2018;8(1):10443. doi: https://doi.org/10.1038/s41598-018-28722-1

Tamanini JTN, Pallone LV, Sartori MGF, Girão MJBC, Dos Santos JLF, de Oliveira Duarte YA, van Kerrebroeck PEVA. A Populational-Based Survey on the Prevalence, Incidence, and Risk Factors of Urinary Incontinence in Older Adults-Results from the “SABE STUDY”. Neurourol Urodyn 2018;37(1):466-477. doi: https://doi.org/10.1002/nau.23331

Tink W, Tink JC, Turin TC, Kelly M. Adverse childhood experiences: survey of resident practice, knowledge, and attitude. Fam Med 2017;49(1):7-13

Shi S, Lio J, Dong H, Jiang I, Cooper B, Sherer R. Evaluation of Geriatrics Education at a Chinese University: a Survey of Attitudes and Knowledge Among Undergraduate Medical Students. Gerontol Geriatr Educ 2020;41(2):242-249. doi: https://doi.org/10.1080/02701960.2018.1468324

Bennett C, Khangura S, Brehaut JC, Graham ID, Moher D, Potter BK, Grimshaw JM. Reporting Guidelines for Survey Research: an Analysis of Published Guidance and Reporting Practices. PLoS Med 2010;8(8):e1001069. doi: https://doi.org/10.1371/journal.pmed.1001069

Turk T, Elhady MT, Rashed S, Abdelkhalek M, Nasef SA, Khallaf AM, Mohammed AT, Attia AW, Adhikari P, Amin MA, Hirayama K, Huy NT. Quality of Reporting Web-Based and Non-web-based Survey Studies: What Authors, Reviewers and Consumers Should Consider. PLoS One 2018;13(6):e0194239. doi: https://doi.org/10.1371/journal.pone.0194239

Jones TL, Baxter MA, Khanduja V. A Quick Guide to Survey Research. Ann R Coll Surg Engl 2013;95(1):5-7. doi: https://doi.org/10.1308/003588413X13511609956372

Jones D, Story D, Clavisi O, Jones R, Peyton P. An Introductory Guide to Survey Research in Anaesthesia. Anaesth Intensive Care 2006;34(2):245-53. doi: https://doi.org/10.1177/0310057X0603400219

Alderman AK, Salem B. Survey Research. Plast Reconstr Surg 2010;126(4):1381-9. doi: https://doi.org/10.1097/PRS.0b013e3181ea44f9

Moher D, Weeks L, Ocampo M, Seely D, Sampson M, Altman DG, Schulz KF, Miller D, Simera I, Grimshaw J, Hoey J. Describing Reporting Guidelines for Health Research: a Systematic Review. J Clin Epidemiol 2011;64(7):718-42. doi: https://doi.org/10.1016/j.jclinepi.2010.09.013

Simera I, Moher D, Hirst A, et al. Transparent and Accurate Reporting Increases Reliability, Utility, and Impact of Your Research: Reporting Guidelines and the EQUATOR Network. BMC Med 2010;8:24. doi: https://doi.org/10.1186/1741-7015-8-24

Moher D, Schulz KF, Simera I, Altman DG. Guidance for Developers of Health Research Reporting Guidelines. PLoS Med 2010;7(2):e1000217. doi: https://doi.org/10.1371/journal.pmed.1000217

Tan WK, Wigley J, Shantikumar S. The Reporting Quality of Systematic Reviews and Meta-analyses in Vascular Surgery Needs Improvement: a Systematic Review. Int J Surg 2014;12(12):1262-5. doi: https://doi.org/10.1016/j.ijsu.2014.10.015

Grimshaw J. SURGE (The SUrvey Reporting GuidelinE). In: Moher D, Altman DG, Schulz KF, Simera I, Wager E, editors. Guidelines for Reporting Health Research: a User’s Manual. 2014. doi: https://doi.org/10.1002/9781118715598.ch20

Eysenbach G. Improving the Quality of Web Surveys: The Checklist for Reporting Results of Internet E-Surveys (CHERRIES). J Med Internet Res 2004;6(3):e34. DOI: https://doi.org/10.2196/jmir.6.3.e34.

EquatorNetwork.org. Developing your reporting guideline. 3 July 2018 [cited 12/28/2020]; Available from: https://www.equator-network.org/toolkits/developing-a-reporting-guideline/developing-your-reporting-guideline/.

Li AH, Thomas SM, Farag A, Duffett M, Garg AX, Naylor KL. Quality of Survey Reporting in Nephrology Journals: a Methodologic Review. Clin J Am Soc Nephrol 2014;9(12):2089-94. doi: https://doi.org/10.2215/CJN.02130214

Shankar PR, Maturen KE. Survey Research Reporting in Radiology Publications: a Review of 2017 to 2018. J Am Coll Radiol 2019;16(10):1378-1384. doi: https://doi.org/10.1016/j.jacr.2019.07.012

Duffett M, Burns KE, Adhikari NK, Arnold DM, Lauzier F, Kho ME, Meade MO, Hayani O, Koo K, Choong K, Lamontagne F, Zhou Q, Cook DJ. Quality of Reporting of Surveys in Critical Care Journals: a Methodologic Review. Crit Care Med 2012 Feb;40(2):441-9. doi: https://doi.org/10.1097/CCM.0b013e318232d6c6

Story DA, Gin V, na Ranong V, Poustie S, Jones D; ANZCA Trials Group. Inconsistent Survey Reporting in Anesthesia Journals. Anesth Analg 2011;113(3):591-5. doi: https://doi.org/10.1213/ANE.0b013e3182264aaf

Marcopulos BA, Guterbock TM, Matusz EF. Survey Research in Neuropsychology: a Systematic Review. Clin Neuropsychol 2020;34(1):32-55. doi: https://doi.org/10.1080/13854046.2019.1590643

Rybakov KN, Beckett R, Dilley I, Sheehan AH. Reporting Quality of Survey Research Articles Published in the Pharmacy Literature. Res Soc Adm Pharm 2020;16(10):1354-1358. doi: https://doi.org/10.1016/j.sapharm.2020.01.005

Pagano MB, Dunbar NM, Tinmouth A, Apelseth TO, Lozano M, Cohn CS, Stanworth SJ; Biomedical Excellence for Safer Transfusion (BEST) Collaborative. A Methodological Review of the Quality of Reporting of Surveys in Transfusion Medicine. Transfusion. 2018;58(11):2720-2727. doi: https://doi.org/10.1111/trf.14937

Mulvany JL, Hetherington VJ, VanGeest JB. Survey Research in Podiatric Medicine: an Analysis of the Reporting of Response Rates and Non-response Bias. Foot (Edinb) 2019;40:92-97. doi: https://doi.org/10.1016/j.foot.2019.05.005

Tabernero P, Parker M, Ravinetto R, Phanouvong S, Yeung S, Kitutu FE, Cheah PY, Mayxay M, Guerin PJ, Newton PN. Ethical Challenges in Designing and Conducting Medicine Quality Surveys. Tropical Med Int Health 2016 Jun;21(6):799-806. doi: https://doi.org/10.1111/tmi.12707

Keeney S, Hasson F, McKenna H. Consulting the Oracle: Ten Lessons from Using the Delphi Technique in Nursing Research. J Adv Nurs 2006;53(2):205-12. doi: https://doi.org/10.1111/j.1365-2648.2006.03716.x.

Zamanzadeh V, Rassouli M, Abbaszadeh A, Alavi-Majd H, Nikanfar A, Ghahramanian A. Details of content validity index and objectifying it in instrument development. Nursing Pract Today 2014; 1(3): 163-71.

Acknowledgements

We are thankful to Dr. David Moher (Ottawa Hospital Research Institute, Canada) and Dr. Masahiro Hashizume (Department of Global Health Policy, Graduate School of Medicine, The University of Tokyo, Tokyo, Japan) for their initial contributions to the project and for their help in rating and developing the checklist. We are also grateful to Obaida Istanbuly (Keele University, UK) and Omar Diab (Private Dental Practice, Jordan) for their contributions in the earlier phases of the project.

Funding

None.

Author information

Contributions

NTH conceived the idea, supervised the study, and helped in writing, reviewing, and mediating the Delphi process; AS participated in drafting the guidelines, mediating the Delphi process, analysis of results, writing, and process validation; TLT helped in drafting the guidelines and in the analysis; MNT helped in drafting the checklist and mediating the Delphi process; NNH, NSJ, KSA, and MK helped in writing and mediating the Delphi process; AM, JK, CLP, JKB, CDW, FJD, MH, YK, EK, JV, GHL, AG, KGT, ML, EMJ, WKL, STS, CDS, BM, SL, UST, MM and MK helped in the rating of items in the Delphi rounds and in reviewing the manuscript.

Ethics declarations

Conflict of Interest

The authors declare that they do not have a conflict of interest.

Ethics approval

Ethics approval was not required for the study.

Supplementary Information

ESM 1

(DOCX 22 kb)

Cite this article

Sharma, A., Minh Duc, N., Luu Lam Thang, T. et al. A Consensus-Based Checklist for Reporting of Survey Studies (CROSS). J GEN INTERN MED 36, 3179–3187 (2021). https://doi.org/10.1007/s11606-021-06737-1
