ABSTRACT

BACKGROUND

Bringing new therapies to patients with rare diseases depends in part on optimizing clinical trial conduct through efficient study start-up processes and rapid enrollment. Suboptimal execution of clinical trials in academic medical centers not only results in high cost to institutions and sponsors, but also delays the availability of new therapies. Addressing the factors that contribute to poor outcomes requires novel, systematic approaches tailored to the institution and disease under study.

OBJECTIVE

To use clinical trial performance metrics data analysis to select high-performing cystic fibrosis (CF) clinical research teams and then identify factors contributing to their success.

DESIGN

Mixed-methods research, including semi-structured qualitative interviews of high-performing research teams.

PARTICIPANTS

CF research teams at nine clinical centers from the CF Foundation Therapeutics Development Network.

APPROACH

Survey of site characteristics, direct observation of team meetings and facilities, and semi-structured interviews with clinical research team members and institutional program managers and leaders in clinical research.

KEY RESULTS

Critical success factors noted at all nine high-performing centers were: 1) strong leadership, 2) established and effective communication within the research team and with the clinical care team, and 3) adequate staff. Other frequent characteristics included a mature culture of research, customer service orientation in interactions with study participants, shared efficient processes, continuous process improvement activities, and a businesslike approach to clinical research.

CONCLUSIONS

Clinical research metrics allowed identification of high-performing clinical research teams. Site visits identified several critical factors leading to highly successful teams that may help other clinical research teams improve clinical trial performance.

INTRODUCTION

The implementation of clinical trials is complex and involves the interactions of multiple components, including the center research team, the study sponsor, a contract research organization, and institutional services such as the institutional review board (IRB) and the contracts and budgets office. Effective communication between the clinical research team and clinical care team may also be necessary to identify research participants. Suboptimal conduct of clinical trials in academic medical centers both raises the costs to institutions and sponsors and delays the availability of new therapies [1]. The slow development of protocols, redundant scientific and ethical reviews, and protocol requirements that hinder enrollment contribute to the inferior conduct of clinical trials in academic centers [2–4]. In 2006, the Clinical and Translational Science Award (CTSA) program of the National Institutes of Health (NIH) was developed to support a national consortium of biomedical research institutions to accelerate progress in clinical research. The NIH subsequently requested proposals for work that would improve processes related to the development, approval, activation, enrollment, and completion of clinical trials.

The limited patient population available for clinical trials in rare diseases makes optimization of clinical trial conduct even more relevant to these affected patients. The Cystic Fibrosis Foundation’s Therapeutics Development Network (TDN), including 77 centers, was established in 1998 to accelerate the testing of new therapeutics, collect data on the natural history of CF through observational studies, and test the utility of new outcome measures for people with cystic fibrosis [5]. Because 31 of the 77 TDN centers were also CTSA institutions that had collected clinical trial metrics data, it was possible to identify high-performing centers and evaluate their clinical research practices.

The aim of this project was to conduct a collaborative benchmarking inquiry [6,7] to identify the critical factors that enable high-performing clinical research teams to excel at clinical trial initiation and study execution. We used clinical trial performance metrics data to identify centers with superior performance, and then mixed methods, including semi-structured qualitative interviews, to identify common success factors.

METHODS

Selection of Centers to Benchmark

Clinical Study Metrics Data Collection and Analysis

All CF TDN centers contribute study metrics quarterly into a web-accessed database for each TDN study. Data collected include the number of patients enrolled and the time from regulatory packet receipt to: 1) Institutional Review Board (IRB) approval, 2) contract execution, 3) approval to enroll (site activation), and 4) first patient enrolled.

Analyzing study metrics for a network of research centers, even within the same disease entity, is complicated by variations in the study portfolio of each TDN center. No studies include all 77 of the network centers; rather, each sponsor selects participating centers based on requirements of the protocol. Furthermore, because each study has its own factors that influence study metrics, normalization of the metrics data is required to compare centers within the network and to identify centers with consistently superior performance.

Normalization for Enrollment

We developed a scoring algorithm to weight the enrollment data according to study complexity and burden. The weighting score considers observational vs. interventional study, duration, complexity of procedures, visit intensity (many visits over a shorter period of time), restrictiveness of inclusion/exclusion criteria, and ease of working with the sponsor (e.g., responsiveness to inquiries, quality of materials provided, and ease of budget and contract negotiation). The weighting scores for each study were evaluated and approved by the TDN Steering Committee, a group of 15 TDN principal investigators and research coordinators from different network centers. The weighting scores range from 0.1 (single-visit observational studies) to 3.0 (highly complex long-duration interventional studies). Enrollment is normalized by calculating a percent weighted enrollment for each center, that is, the number of patients enrolled into studies, weighted for study complexity, expressed as a percentage of the center’s total CF patient population.
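The calculation can be sketched as follows. This is a minimal illustration rather than the authors' implementation: it assumes that each enrolled patient is multiplied by the weighting score of the study and that the weighted total is divided by the center's total CF patient population, as suggested by the description of the X axis in Fig. 1. The function name, argument names, and study labels are hypothetical.

```python
def percent_weighted_enrollment(enrolled_by_study, weight_by_study, total_cf_patients):
    """Weighted enrollment as a percentage of a center's CF patient population.

    enrolled_by_study: dict mapping study id -> number of patients enrolled
    weight_by_study:   dict mapping study id -> weighting score (0.1 to 3.0)
    total_cf_patients: total CF patient population followed at the center
    All names are hypothetical; the formula itself is an assumption.
    """
    if total_cf_patients <= 0:
        raise ValueError("total_cf_patients must be positive")
    # Each enrolled patient counts in proportion to the study's weighting score.
    weighted_total = sum(
        n_enrolled * weight_by_study[study]
        for study, n_enrolled in enrolled_by_study.items()
    )
    return 100.0 * weighted_total / total_cf_patients


# Illustrative (made-up) numbers: a 200-patient center that enrolled 20 patients
# in a single-visit observational study and 5 in a complex interventional study.
example = percent_weighted_enrollment(
    {"observational_single_visit": 20, "complex_interventional": 5},
    {"observational_single_visit": 0.1, "complex_interventional": 3.0},
    200,
)
```

Under this assumption, five patients in a highly complex study contribute far more to the score than twenty patients in a single-visit observational study, which is the intent of the weighting.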

Normalization for Start-up Timing

Start-up timing metrics are normalized for each study by performing quartile analyses for each of the key start-up milestones. For example, on a 40-center study, the first ten centers to achieve the milestone of first patient enrolled would receive a quartile score of 4, the next ten centers a quartile score of 3, and so on, with the slowest ten centers receiving a quartile score of 1. For each center, an average quartile score is calculated for each of the start-up milestones by summing the quartile scores for that milestone and then dividing by the number of studies with data for that milestone.
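The quartile scoring described above can be sketched as follows. This is an illustrative sketch only: the function names are hypothetical, and the handling of ties and of studies whose number of centers is not divisible by four is an assumption, since the text does not specify it.

```python
from collections import defaultdict


def quartile_scores(days_to_milestone):
    """Assign quartile scores for one study and one milestone.

    days_to_milestone: dict mapping center -> days from regulatory packet
    receipt to the milestone. The fastest quarter of centers scores 4 and
    the slowest quarter scores 1. Ties keep their input order (assumption).
    """
    ranked = sorted(days_to_milestone, key=days_to_milestone.get)
    n = len(ranked)
    # Rank index i (0 = fastest) falls in quartile band (i * 4) // n.
    return {center: 4 - (i * 4) // n for i, center in enumerate(ranked)}


def average_quartile_scores(studies):
    """Average each center's quartile score over the studies with data for it.

    studies: iterable of dicts, one per study, each mapping center -> days.
    """
    totals = defaultdict(int)
    counts = defaultdict(int)
    for days_to_milestone in studies:
        for center, score in quartile_scores(days_to_milestone).items():
            totals[center] += score
            counts[center] += 1
    return {center: totals[center] / counts[center] for center in totals}
```

For a 40-center study this reproduces the example in the text: the first ten centers receive a score of 4, the next ten a score of 3, and the slowest ten a score of 1. A center that took part in only some studies is averaged over those studies alone.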

During the time period assessed for this study, 67 multi-center studies (with at least four participating centers) provided data for analysis. To select highly successful centers, the start-up milestone deemed most important was the time to first patient enrolled, since it reflected the efficiency of the entire study start-up process.

To select centers for benchmarking visits, we conducted a composite analysis that plotted the average quartile score for time to first patient enrolled against the percent weighted enrollment for each center (Fig. 1). Of the two metrics, percent weighted enrollment was considered the more important.

Fig. 1. Composite analysis of start-up timing and enrollment success. Each dot represents a single center. The Y axis shows the average quartile score for the metric of time from regulatory package receipt to first patient enrolled. A quartile score of 4 indicates that a center is in the fastest 25% for that particular metric. The X axis shows the percentage of a center’s total CF patient population (weighted for study complexity) enrolled into studies during two consecutive evaluation periods, the first lasting 24 months and the second 18 months. Dotted lines mark the medians for these metrics during the designated time period. Centers represented in the (shaded) upper right quadrant had start times that were generally faster and enrolled a greater proportion of their patients in clinical studies compared to other centers. Letters designate centers selected for benchmarking. These centers showed either sustained high performance or significant improvement between the first and second evaluation periods.
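The quadrant logic of the composite analysis can be sketched as follows. This is a hedged illustration, not the authors' procedure: the function name and data layout are hypothetical, and treating "upper right" as strictly above both medians is an assumption. It captures only the metrics step; the final selection also drew on center size, translational focus, and sponsor feedback.

```python
from statistics import median


def upper_right_quadrant(centers):
    """Return centers above the median on both composite metrics.

    centers: dict mapping center name -> (percent_weighted_enrollment,
    average_quartile_score_for_first_patient_enrolled).
    Strictly greater than both medians is an assumed cut-off rule.
    """
    enrollment_median = median(x for x, _ in centers.values())
    timing_median = median(y for _, y in centers.values())
    return sorted(
        name
        for name, (enrollment, timing) in centers.items()
        if enrollment > enrollment_median and timing > timing_median
    )


# Made-up values for five hypothetical centers.
candidates = upper_right_quadrant({
    "A": (10.0, 3.5),
    "B": (2.0, 3.8),
    "C": (8.0, 1.5),
    "D": (3.0, 2.0),
    "E": (5.0, 2.5),
})
```

Running the same function on each evaluation period separately would show which centers stayed in the quadrant across both periods.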

The subset of data for the 31 CTSA centers was used to select centers for benchmarking using data from two time periods (10/1/06–9/30/08 and 10/1/08–3/31/10 [month/day/year]). Later in the project, a small non-CTSA center (Center E) was added to represent similar centers. We selected seven centers with sustained high performance (generally within the upper right quadrant of Fig. 1 for each of the two periods evaluated) and two centers (Centers C and G) with the greatest improvement in enrollment (at least twofold) between the first and second evaluation periods. We also considered center size (small to large) and CF translational focus (none to significant) in making our selection. As a final step, we solicited informal feedback from study sponsors to confirm that the centers we had selected were also perceived as delivering quality data (i.e., general perceptions of responsiveness and acceptable rates for protocol violations, subject completion, and queries). This feedback resulted in the exclusion of several centers that might have been selected on the basis of the metrics data analysis alone.

Conducting the Benchmarking Activities

Realist Evaluation Framework

We applied the realist evaluation framework to our benchmarking strategy and the development of the semi-structured interview questions [8]. Realist evaluation is a mixed-methods approach that begins with a practical theory about what works under specific circumstances and then refines and improves the theory based on testing using qualitative and quantitative data collected over time. The realist evaluation approach is based on a formula: mechanism + context = outcomes. Mechanisms are processes that trigger and produce reactions to generate outcomes in specific contexts, while contexts are defined as places or settings in which the mechanisms work and in which the outcomes are produced. The “theory of the case” thus specifies a prediction or explanation about what happens (mechanisms) in specific research institutions (contexts) to produce changes in performance (outcomes). Our team developed a theory about the success characteristics of high-performing centers (Fig. 2) that was evaluated and refined based on data collected during the benchmarking research.

Fig. 2. A priori theory of the case. We established this list of likely mechanisms of success based on information gathered between 1998 (when the CF TDN was established) and 2010 through informal discussions, workshops, and training sessions with TDN centers. Our site selection process allowed us to evaluate centers with different institutional contexts by including centers of varying size and translational focus, centers located in densely populated urban areas as well as centers located in smaller cities, some centers with nearby competing programs, and varying levels of institutional resources for all centers.

Investigational Team

The benchmarking protocol development and site-visiting team included personnel with significant experience in clinical research management, CF investigators and research coordinators, and CF clinical care and quality improvement scientists from several universities (Seattle Children’s Hospital Research Institute/University of Washington, University of North Carolina at Chapel Hill, University of Pittsburgh and Dartmouth) and the CF Foundation.

Data Collection and Analysis

All centers selected for benchmarking were approached, agreed to the visit requirements, and were visited between July and November 2010. The site visit included an initial meeting with the clinical research team to present an overview of the program’s structure and processes. Each team member was given a questionnaire developed around the theory of the case and asked to select the five factors from those listed that they believed were most important to their team’s success (Online Appendix 1). Notably, key team members were asked to complete the questionnaires before our interviews and were given only the brief description of each characteristic included in the questionnaire when providing their rankings. The following day, the site visitors observed a research team meeting, toured the facilities, and conducted individual interviews (TDN principal investigators and lead research coordinators/managers) or group interviews (including other members of the research team). When possible, institutional leadership such as CTSA program directors or department chairs, IRB managers, and contracts office managers were also interviewed to assess institutional characteristics. Although the interview questions were primarily designed to probe the theory of the case, open-ended questions were also included to allow center participants to describe factors and processes not specifically addressed (Online Appendix 2). Our approach during site visits and interviews was appreciative inquiry, which acknowledges achievement and seeks to identify the reasons for success [9].

The research plan was approved by the Seattle Children’s Institutional Review Board. All interviews were recorded and transcribed, and the interview information was analyzed using content analysis software (ATLAS.ti, Berlin, Germany) to code individual quotes into various themes. The results of the interview content analysis were integrated with questionnaire data and observer notes using an iterative process, and cross-case methodology [10] was applied. For each center, an individual case study summary was written, then reviewed and edited by the site visitors. Finally, each center reviewed its own case summary to confirm that key concepts had not been missed.

RESULTS

Institutional Context and Outcomes

The centers ranged in size from 101 to 454 patients, with five of nine centers visited having adult CF populations that made up more than half of the patient population served (Table 1). All teams had weekly research meetings. The ratio of research staff to patient population spanned nearly a threefold range. Center staff and investigators noted a variety of challenges: (1) working across two institutions (Centers A and D), (2) significant travel time between staff offices and research visit space (Centers F and I), (3) low ratio of research staff to CF patient population (Centers B, C, F and G), and (4) institutional barriers to timely start-up [Center H (IRB) and Center I (contract)]. However, as reflected in each center’s overall success, the clinical research teams were able to compensate for these challenges.

Top-Ranked Success Factors for Benchmarked Centers

For each center, the five factors that were mentioned most frequently in the individual questionnaires are noted in Table 2. Teams were unanimous regarding the importance of shared leadership between the principal investigator and research coordinator, as well as the importance of communication between the clinical care and research teams and the value of a customer-service orientation to interactions with study participants. However, what was perceived as important varied considerably for all of the other factors. For example, the centers that identified the longevity of their research staff as being most important did not see either structured training or regular meetings as highly important, while those that did not identify longevity did identify those two factors as important. This suggests that teams with newer staff depended more on structured communication and training than more experienced staff did.
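The per-center tally behind Table 2 can be sketched as follows. This is only an illustration of counting questionnaire selections: the function name and factor labels are hypothetical, and the text does not say how ties between equally mentioned factors were resolved.

```python
from collections import Counter


def top_factors(questionnaires, k=5):
    """Return the k factors selected most often by one center's team members.

    questionnaires: list of lists, one per team member, each holding the
    factors that member selected. A factor is counted at most once per
    respondent. Tie-breaking follows first appearance (assumption).
    """
    counts = Counter(
        factor
        for selections in questionnaires
        for factor in dict.fromkeys(selections)  # de-duplicate, keep order
    )
    return [factor for factor, _ in counts.most_common(k)]


# Made-up responses from three hypothetical team members.
center_top_two = top_factors(
    [
        ["leadership", "communication", "staff longevity"],
        ["leadership", "communication"],
        ["leadership"],
    ],
    k=2,
)
```

Comparing these lists across centers is what reveals patterns such as the inverse relationship between staff longevity and structured training noted above.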

Key Success Characteristics Based on Interviews and Observation

Table 3 presents the characteristics identified by site visitors based on their observations and the interviews. Leadership, effective communication, and adequate staff to complete the work were identified at all centers.

Leadership

Leadership was highly ranked in the questionnaire and in the interviews. The presence of at least one highly engaged leader and a model of shared leadership were universally valued. The shared leadership might be between a pediatric and adult principal investigator, or between the principal investigator and research coordinator. The most valued qualities of leadership included a visible commitment to TDN studies and goals, availability to the team, the solicitation of team opinions in decision-making, and in particular, attention to team workload. The two centers selected for benchmarking visits based on their observed improvement reported that the primary reason for the change was the arrival of new team leaders who focused on improvement and used metrics data to compare their study performance to peer institutions.

Effective Communication

The diverse components of the clinical research process within an institution are linked through effective communication. This was demonstrated through regular research team meetings, close interaction between clinical research and clinical care teams, and shared expectations for responsiveness to calls and email correspondence. Communication was also facilitated by physically placing team members near one another and near the clinical program, where conversation could replace email.

Staff Adequacy

This refers to a broad range of team qualities, including having staff whose skills match the work, having a sufficient number of coordinators and support staff, and ensuring that the total number of studies managed by the team does not exceed the staff’s capacity. In general, comments about what attributes were sought when hiring came from centers that had recently recruited new staff. Three of the four centers with a low ratio of research support staff to patients (C, F, and G) were the largest centers, suggesting that there may be some efficiencies of scale. However, most of these centers had recently experienced turnover and planned to hire additional staff.

One key characteristic we identified had not been included in the theory of the case: the importance of a businesslike approach to the financial sustainability of the program. This strategy includes developing adequate study budgets, enrolling the number of subjects specified within the contract, tracking finances to ensure that payments due have been received, and examining final accounting to inform future budgeting.

We had anticipated that a culture of research would be a key success factor, but did not expect that such a large amount of time and effort would be necessary to develop such a culture. For many centers, the effort had been an essential part of the program for years. Clinical research was woven into discussions in clinic visits from infancy through adulthood, and reflected a longstanding general focus on CF research in the program (basic, translational and clinical). In other centers, having a culture of research was only recently recognized as a success characteristic, and intentional activity to develop such a culture had begun only a few years before our benchmarking work.

DISCUSSION

Critical success factors noted at all nine high-performing centers were strong leadership, established and effective communication within the research team and with the clinical care team, and adequate staff to complete the work. Other frequent characteristics included a mature culture of research, a customer service orientation in interactions with study participants, shared efficient processes, continuous process improvement activities, and a businesslike approach to clinical research. We believe that collecting clinical trial performance metrics is central to facilitating improvement in clinical research, and have continued to monitor metrics data for all centers in the network. Since 2009, TDN centers have received annual metrics reports that compare their performance with that of other centers in the network. This allows them to identify potential areas for improvement and to measure the impact of changes made over time.

Our theory of the case was largely confirmed, and it reinforces the notion that the people who make up the team are as important as, if not more important than, specific clinical research processes in achieving success. Some of our findings were not predicted by our theory, specifically the attention paid to financial sustainability in some centers. Other factors were less important than we expected (e.g., IT systems to manage processes and a customer-service orientation in interactions with sponsors). Overall, we gained a rich understanding of how individual teams leveraged different strengths to achieve similar goals.

Our initial assumptions regarding the importance of multi-level shared leadership between the principal investigator and the lead research coordinator were broadened to reflect the variety of leadership structures observed at the centers. We found that the strongest leadership could come from the pediatric or adult program investigators, the lead research coordinator, or the research manager. In some cases, it was strong leadership from both adult and pediatric investigators or an investigator and coordinator equally sharing the role. Each of these models was effective and depended on the people involved and the institutional context. Not surprisingly, turnover of strong leadership affects a team’s dynamics and performance. Such perturbations force the team to adapt and provide another opportunity to measure how some teams recover and improve. Given the inevitability of staff turnover, those who manage such disruptions well can share their strategies for preserving team effectiveness or accelerating recovery.

We identified several apparent contradictions between the forced rankings of success factors that each research team member completed before their interview (Table 2) and the actual observations and analysis of the interview content (Table 3). For example, only one center ranked Process Improvement as one of its top five factors, yet information shared during the interview was coded to a category that included process improvement, and it was deemed a significant contributor for six centers. We offer four possible explanations for these apparent discrepancies: 1) forcing team members to rank the five most important factors caused them to leave out other important factors; 2) team members completed the questionnaires and performed forced rankings before the interviews, relying on the brief description in the questionnaire; 3) the coding process used for the interview content and site visitor observations changed over time to group commonly cited, related themes together into a specific coded category; and 4) the interview questions themselves may have solicited more comments about some themes than others.

Our study has several limitations. Our selection of centers was not entirely driven by data, but was modified by additional factors, such as observations by others regarding study quality and our decision to pick a broad range of centers by size and research environment. Although we used a consensus approach to develop the benchmarking protocol, bias is inevitable when creating a theory of the case, which then guides the content of the questionnaires and interview guides. The technique of appreciative inquiry focuses primarily on what is going well rather than what is not going well, and therefore may introduce positive biases. We studied only centers that were highly successful, which prevented us from formally determining whether the characteristics we observed differ substantially in centers with average or below-average performance. The content analysis approach relies on grouping data into themes by the same investigators who developed the study, which also introduces bias. We observed only a small number of centers and made our observations during a single time period rather than in multiple visits over an extended period; thus, we could not prospectively assess how teams cope with new challenges. Our analysis of the institutional context was limited to a few factors. We did not pursue an in-depth examination of factors that could affect success in recruitment, such as demography, socioeconomic profile, population density of patients served in the region where the center is located, presence of competing clinical research centers in large metropolitan areas, and distance patients traveled to the center. During the interview process, we were able to determine the CF research team’s perceptions of institutional research support (and were able to observe the physical facilities available to the research teams); however, we did not systematically evaluate the institutional review boards, contracts and grants offices, or other institutional clinical research offices.

Through this work, we identified a number of modifiable factors common to high-performing centers. If these factors are unique or if particular combinations of them are associated with high performance, they may be characteristics that set these teams apart. However, because we were not able to use quantitative methods to compare high-performing centers with those of average or below-average performance, we cannot offer evidence to support a causal association between these factors and high performance. We were able to assess the context or institutional setting in only a limited way, so it is possible the factors we identified at high-performing centers may be more dependent on the institution than we appreciated, making them less transferable to other teams.

Modifiable factors include those that may be adapted by any study team regardless of institutional context, such as regular team meetings, use of white boards to share key information, use of checklists to ensure consistent and efficient processes, sharing space to facilitate communication, and providing flexible hours in the evening and weekend for study visits. Establishing strong connections and a presence in the clinical-care setting facilitates informing the clinical team about research studies, and is a venue for explaining clinical research to patients outside of recruitment to specific studies.

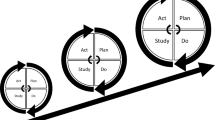

For clinical research teams who wish to improve their performance, familiarity with the basic principles and standard methods of quality improvement is a first step. This includes an understanding of change models, the importance of systems-based approaches, an appreciation of group dynamics, and quantitative assessment of the impact of changes made. Collecting and tracking the team’s study metrics (start-up and enrollment) represent such measures, which can be extended more broadly to the institutional or research network level.

Our benchmarking work identified several key factors and practices associated with clinical research success that can be modified by study teams. Clinical research teams may find these observations useful as they undertake their own quality improvement work through assessing their clinical research performance, identifying suitable targets for improvement, and considering strategies or practices to adopt. We would anticipate that such changes would enhance the experience of clinical research for study participants and teams alike, as well as improve the conduct and completion of clinical trials.

REFERENCES

1. Kitterman DR, Cheng SK, Dilts DM, Orwoll ES. The prevalence and economic impact of low-enrolling clinical studies at an academic medical center. Acad Med. 2011;86(11):1360–6.

2. Califf RM. Clinical research sites–the underappreciated component of the clinical research system. JAMA. 2009;302(18):2025–7.

3. Dilts DM, Sandler AB. Invisible barriers to clinical trials: the impact of structural, infrastructural, and procedural barriers to opening oncology clinical trials. J Clin Oncol. 2006;24(28):4545–52.

4. English R, Lebovitz Y, Griffin R. Transforming Clinical Research in the United States: Challenges and Opportunities: Workshop Summary. Washington (DC): National Academies Press (US); 2010.

5. Rowe SM, Borowitz DS, Burns JL, Clancy JP, Donaldson SH, Retsch-Bogart G, et al. Progress in cystic fibrosis and the CF Therapeutics Development Network. Thorax. 2012;67(10):882–90.

6. Nelson EC, Batalden PB, Huber TP, Mohr JJ, Godfrey MM, Headrick LA, et al. Microsystems in health care: Part 1. Learning from high-performing front-line clinical units. Jt Comm J Qual Improv. 2002;28(9):472–93.

7. Ettorchi-Tardy A, Levif M, Michel P. Benchmarking: a method for continuous quality improvement in health. Health Policy. 2012;7(4):e101–19.

8. Pawson R, Greenhalgh T, Harvey G, Walshe K. Realist review – a new method of systematic review designed for complex policy interventions. J Health Serv Res Policy. 2005;10(Suppl 1):21–34.

9. Carter CA, Ruhe MC, Weyer S, Litaker D, Fry RE, Stange KC. An appreciative inquiry approach to practice improvement and transformative change in health care settings. Qual Manag Health Care. 2007;16(3):194–204.

10. Donaldson MS, Mohr JJ. Exploring innovation and quality improvement in health care micro-systems: a cross-case analysis: a technical report for the Institute of Medicine Committee on the Quality of Health Care in America. Washington (DC): Institute of Medicine; 2000.

Acknowledgements

The authors would like to acknowledge the many staff members at the nine benchmarked centers who participated in interviews and allowed us to observe their meetings and processes. We are also very grateful for our collaboration with Leila Atry, Elizabeth Hartigan, Diane Towle, and Kathryn Sabadosa, who participated on the site visit teams.

Funding

This research was funded by grant #3UL1RR025014-03S2 (currently ITHS-UL1TR000423) from the National Institutes of Health, and by the Cystic Fibrosis Foundation.

Conflict of Interest

E. Nelson reports stock ownership in Quality Data Management Inc. No other conflicts of interest were identified for the authors.

Cite this article

Retsch-Bogart, G.Z., Van Dalfsen, J.M., Marshall, B.C. et al. Highly Effective Cystic Fibrosis Clinical Research Teams: Critical Success Factors. J GEN INTERN MED 29 (Suppl 3), 714–723 (2014). https://doi.org/10.1007/s11606-014-2896-8

DOI: https://doi.org/10.1007/s11606-014-2896-8