Abstract

Purpose

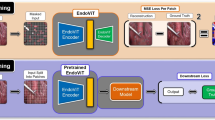

Advances in surgical phase recognition are generally driven by training deeper networks. Rather than pursuing ever more complex solutions, we believe that current models can be exploited better. We propose a self-knowledge distillation framework that can be integrated into current state-of-the-art (SOTA) models without adding model complexity or requiring extra annotations.

Methods

Knowledge distillation is a framework for network regularization in which knowledge is distilled from a teacher network to a student network. In self-knowledge distillation, the student model becomes the teacher, so that the network learns from itself. Most phase recognition models follow an encoder-decoder framework, and our framework applies self-knowledge distillation in both the encoder and the decoder stage. The teacher model guides the training of the student model so that the encoder extracts enhanced feature representations and the temporal decoder becomes more robust to the over-segmentation problem.
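The distillation idea described above can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract does not state the loss or how the teacher is obtained, so the temperature-softened KL term (in the style of Hinton et al.), the exponential-moving-average teacher update, and the names `distillation_loss`, `ema_update`, `temperature`, `alpha` and `momentum` are all assumptions made for illustration.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax over a list of logits."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(student_logits, teacher_logits, label,
                      temperature=2.0, alpha=0.5):
    """Hypothetical objective: weighted sum of a hard-label cross-entropy
    term and a soft-label KL term between teacher and student."""
    hard = -math.log(softmax(student_logits)[label])
    soft = kl_divergence(softmax(teacher_logits, temperature),
                         softmax(student_logits, temperature))
    # temperature**2 rescales the soft-term gradients (Hinton et al.).
    return (1.0 - alpha) * hard + alpha * temperature ** 2 * soft


def ema_update(teacher_weights, student_weights, momentum=0.99):
    """Assumed self-distillation step: the teacher is a slow-moving
    average of the student, so both share one architecture."""
    return [momentum * t + (1.0 - momentum) * s
            for t, s in zip(teacher_weights, student_weights)]


# Example: one frame, three phases, ground-truth phase 0.
student = [2.0, 0.5, -1.0]
teacher = [2.5, 0.2, -1.2]
loss = distillation_loss(student, teacher, label=0)
new_teacher = ema_update([1.0, 1.0], [0.0, 2.0], momentum=0.9)
```

When the teacher and student produce identical logits, the soft term vanishes and only the weighted cross-entropy remains; the soft term grows as the two predictions diverge.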

Results

We validate our proposed framework on the public dataset Cholec80. Our framework is embedded on top of four popular SOTA approaches and consistently improves their performance. Specifically, our best GRU model boosts performance by +3.33% accuracy and +3.95% F1-score over the same baseline model.

Conclusion

We embed a self-knowledge distillation framework in the surgical phase recognition training pipeline for the first time. Experimental results demonstrate that our simple yet powerful framework can improve the performance of existing phase recognition models. Moreover, our extensive experiments show that a model trained with our framework on only 75% of the training set performs on par with the same baseline model trained on the full set.

Data, code and/or material availability

Privately held.

Notes

Student and teacher networks share the same architecture.

The authors of [11] show empirically that using a normalized projection improves learning for the downstream task.
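As an illustration of what a normalized projection means in practice, the sketch below L2-normalizes two feature vectors before comparing them. How the framework uses the projection is not stated in this note, so the squared-error comparison and the names `l2_normalize` and `normalized_mse` are assumptions. For unit-length vectors, the squared distance equals 2 minus twice the cosine similarity, so the comparison depends only on direction and not on feature magnitude.

```python
import math


def l2_normalize(vector, eps=1e-12):
    """Scale a vector to unit L2 norm (eps guards against division by zero)."""
    norm = math.sqrt(sum(v * v for v in vector))
    return [v / max(norm, eps) for v in vector]


def normalized_mse(student_feat, teacher_feat):
    """Squared distance between the L2-normalized feature vectors."""
    s = l2_normalize(student_feat)
    t = l2_normalize(teacher_feat)
    return sum((a - b) ** 2 for a, b in zip(s, t))


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors."""
    a_n, b_n = l2_normalize(a), l2_normalize(b)
    return sum(x * y for x, y in zip(a_n, b_n))


# Rescaling a feature vector leaves the normalized distance unchanged.
same_direction = normalized_mse([1.0, 2.0], [2.0, 4.0])
orthogonal = normalized_mse([1.0, 0.0], [0.0, 3.0])
```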

References

Maier-Hein L, Vedula SS, Speidel S, Navab N, Kikinis R, Park A, Eisenmann M, Feussner H, Forestier G, Giannarou S et al (2017) Surgical data science for next-generation interventions. Nat Biomed Eng 1(9):691–696

Padoy N, Blum T, Feussner H, Berger M-O, Navab N (2008) On-line recognition of surgical activity for monitoring in the operating room. In: AAAI, pp 1718–1724

Charrière K, Quellec G, Lamard M, Martiano D, Cazuguel G, Coatrieux G, Cochener B (2017) Real-time analysis of cataract surgery videos using statistical models. Multimedia Tools Appl 76(21):22473–22491

Twinanda AP, Shehata S, Mutter D, Marescaux J, De Mathelin M, Padoy N (2016) EndoNet: a deep architecture for recognition tasks on laparoscopic videos. IEEE Trans Med Imaging 36(1):86–97

Russakovsky O, Deng J, Su H, Krause J, Satheesh S, Ma S, Huang Z, Karpathy A, Khosla A, Bernstein M, Berg AC, Fei-Fei L (2015) ImageNet large scale visual recognition challenge. Int J Comput Vis (IJCV) 115(3):211–252

Czempiel T, Paschali M, Keicher M, Simson W, Feussner H, Kim ST, Navab N (2020) TeCNO: surgical phase recognition with multi-stage temporal convolutional networks. In: International conference on medical image computing and computer-assisted intervention. Springer, pp 343–352

He Z, Mottaghi A, Sharghi A, Jamal MA, Mohareri O (2022) An empirical study on activity recognition in long surgical videos. In: Machine learning for health. PMLR, pp 356–372

Gao X, Jin Y, Long Y, Dou Q, Heng P-A (2021) Trans-SVNet: accurate phase recognition from surgical videos via hybrid embedding aggregation transformer. In: Medical image computing and computer assisted intervention – MICCAI 2021. Springer, Cham, pp 593–603

Hinton G, Vinyals O, Dean J (2015) Distilling the knowledge in a neural network. Stat 1050:9

Kim K, Ji B, Yoon D, Hwang S (2021) Self-knowledge distillation with progressive refinement of targets. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 6567–6576

Chen T, Kornblith S, Norouzi M, Hinton G (2020) A simple framework for contrastive learning of visual representations. In: International conference on machine learning. PMLR, pp 1597–1607

Grill J-B, Strub F, Altché F, Tallec C, Richemond P, Buchatskaya E, Doersch C, Avila Pires B, Guo Z, Gheshlaghi Azar M, Piot B, Kavukcuoglu K, Munos R, Valko M (2020) Bootstrap your own latent-a new approach to self-supervised learning. Adv Neural Inf Process Syst 33:21271–21284

Kadkhodamohammadi A, Luengo I, Stoyanov D (2022) PATG: position-aware temporal graph networks for surgical phase recognition on laparoscopic videos. Int J Comput Assist Radiol Surg 17(5):849–856

Farha YA, Gall J (2019) MS-TCN: multi-stage temporal convolutional network for action segmentation. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 3575–3584

He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. In: 2016 IEEE conference on computer vision and pattern recognition (CVPR), pp 770–778

Bai S, Kolter JZ, Koltun V (2018) An empirical evaluation of generic convolutional and recurrent networks for sequence modeling. arXiv preprint arXiv:1803.01271

Lillicrap TP, Hunt JJ, Pritzel A, Heess N, Erez T, Tassa Y, Silver D, Wierstra D (2016) Continuous control with deep reinforcement learning. In: Bengio Y, LeCun Y (eds) ICLR

Ding X, Liu Z, Li X (2022) Free lunch for surgical video understanding by distilling self-supervisions. In: Medical image computing and computer assisted intervention—MICCAI 2022: 25th international conference, Singapore, September 18–22, 2022, Proceedings, Part VII. Springer, pp 365–375

Jin Y, Li H, Dou Q, Chen H, Qin J, Fu C-W, Heng P-A (2020) Multi-task recurrent convolutional network with correlation loss for surgical video analysis. Med Image Anal 59:101572

Funding

This work was funded by Medtronic plc.

Ethics declarations

Conflict of interest

Mr. Barbarisi, Drs. Zhang, Kadkhodamohammadi and Luengo, and Prof. Stoyanov are employees of Medtronic plc. Prof. Stoyanov is also a co-founder of and shareholder in Odin Vision, Ltd.

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhang, J., Barbarisi, S., Kadkhodamohammadi, A. et al. Self-knowledge distillation for surgical phase recognition. Int J CARS 19, 61–68 (2024). https://doi.org/10.1007/s11548-023-02970-7

DOI: https://doi.org/10.1007/s11548-023-02970-7