Abstract

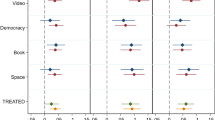

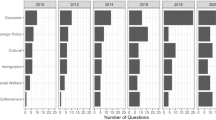

Scaling methods pioneered by Poole and Rosenthal (Am J Polit Sci 29(2):357–384, 1985) redefined how scholars think about and estimate the ideologies of representatives seated in the US Congress. Those methods also have been used to estimate citizens’ ideologies. Whereas studies evaluating Congress typically use a behavioral measure, roll call votes, to estimate where representatives stand on the left-right ideological spectrum, those of the public most often have relied on survey data of stated, rather than revealed, preferences. However, measures of individuals’ preferences and, accordingly, estimates of their ideal points, may differ in important ways based on how preferences are elicited. In this paper, we elicit the same individuals’ preferences on the same 10 issues using two different methods: standard survey responses measured on a Likert scale and a donation exercise wherein individuals are forced to divide $1.50 between interest groups with diametrically opposed policy preferences. Importantly, expressing extreme views is costless under the former, but not the latter, method. We find that the type of elicitation method used is a significant predictor of individuals’ ideal points, and that the elicitation effect is driven primarily by Democratic respondents. Under the donation method, the ideal points of Democrats in the aggregate shift left, particularly for those Democrats who are politically engaged. In contrast, wealthy Democrats’ ideal points shift to the right. We also document effects for Republicans and Independents and find that overall polarization is similar under both elicitation methods. We conclude with a discussion of our results, and the consequences and tradeoffs of each elicitation method.

Notes

We thank an anonymous reviewer for this comment.

The requirements are designed to reduce the variance or noise in subject behavior by restricting participation to those who are known to obey the rules, so to speak, of MTurk studies. One possible concern is that the requirements also change the expected response rather than just the variance in responses. The aforementioned studies finding that MTurk subjects are more representative and behave similarly to other subjects have used the same or similar approval ratings, residency, and/or previous jobs completed requirements (Arechar et al. 2017; Berinsky et al. 2012; Clifford et al. 2015; Coppock 2018; Levay et al. 2016; Paolacci et al. 2010), although our previous completed jobs requirement is comparatively more stringent.

The subjects also had met the criteria for this study: they were located in the United States, had approval ratings greater than or equal to 95%, and already had at least 1,000 jobs approved. We targeted those subjects by making their worker identification codes an additional criterion for participation in the study, which meant that positions in the study were not taken by others and remained open for them to enroll.

For example, a report by Pew found that 54% of Americans said that whether a person is a man or a woman was determined by their sex at birth. While 80% of Republicans held this view, only 34% of Democrats did. Accordingly, we felt that including a question on transgender identity, using the same question wording as the Pew study, might help to distinguish individuals with different ideological leanings. See: http://www.pewresearch.org/fact-tank/2017/11/08/transgender-issues-divide-republicans-and-democrats/.

Malhotra et al. (2009) find that branching endpoints (e.g., “oppose” and “favor”, but not “neither favor nor oppose”) and providing three extremity choices (e.g., “a little”, “moderately” and “a great deal”) yield greater criterion validity. Scholars have theorized that branching questions may perform better by allowing respondents to break complex questions into more manageable, component parts (Armstrong et al. 1975). Respondents can evaluate their attitude directions and their attitude strengths separately. We seek to compare a behavioral measure of preferences with the best possible version of Likert elicitation; accordingly, we use branching questions for Likert elicitation in this paper.

Screenshots of the study in its entirety are available from the authors.

In Appendix Figure 10, we show, for each individual (and by party identification), whether his or her ideal point shifted left or right under the donation elicitation method, as compared with the Likert method. Individuals’ ideal points more often shift left under the donation method, particularly for Democrats.

By design, no significant difference arises using normalized data, which have been rescaled separately for donation and Likert methods to have mean zero.
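To see why the difference vanishes by construction, consider a minimal sketch that centers each method's estimates separately. The respondent values, the function name `demean`, and the sign convention (donation minus Likert) are assumptions made for illustration; they are not the paper's data or code, and the sketch only centers the data without modeling any further rescaling.

```python
from statistics import mean
from typing import List, Sequence


def demean(values: Sequence[float]) -> List[float]:
    """Shift a set of estimates so that their mean is zero."""
    center = mean(values)
    return [v - center for v in values]


# Hypothetical ideal points for the same five respondents (illustration only).
likert = [3.0, 4.5, 2.0, 5.5, 4.0]
donation = [2.5, 4.0, 1.0, 5.5, 3.5]

# Mean difference on the raw scale (assumed convention: donation minus Likert).
raw_diff = mean([d - l for d, l in zip(donation, likert)])

# Mean difference after centering each method separately.
normalized_diff = mean(
    [d - l for d, l in zip(demean(donation), demean(likert))]
)

print(raw_diff)         # nonzero on the raw scale
print(normalized_diff)  # zero up to floating-point error
```

Because each series is centered on its own mean, the average of the paired differences equals the difference of the two means, which is zero. Only the respondent-level absolute differences can remain informative after normalization.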

This result survives a Benjamini-Hochberg (1995) false discovery rate (FDR) correction for multiple comparisons. See Supplemental Appendix B, Table B1.
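For readers unfamiliar with the procedure, the Benjamini-Hochberg step-up rule can be sketched as follows. The function name `benjamini_hochberg`, the FDR level of 0.05, and the example p-values are assumptions for illustration only; they do not reproduce the tests reported in Supplemental Appendix B.

```python
from typing import List, Sequence


def benjamini_hochberg(p_values: Sequence[float], q: float = 0.05) -> List[bool]:
    """Return, for each p-value, whether it is rejected at FDR level q."""
    m = len(p_values)
    # Indices of the p-values in ascending order.
    order = sorted(range(m), key=lambda i: p_values[i])
    # Find the largest rank k (1-based) with p_(k) <= (k / m) * q.
    largest_k = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * q:
            largest_k = rank
    # Reject the hypotheses with the largest_k smallest p-values.
    rejected = [False] * m
    for idx in order[:largest_k]:
        rejected[idx] = True
    return rejected


# Hypothetical p-values (not the paper's results).
example_p = [0.001, 0.04, 0.03, 0.20]
print(benjamini_hochberg(example_p, q=0.05))
```

In this hypothetical family of four tests, only the smallest p-value is rejected: values such as 0.03 and 0.04 fall below 0.05 individually but exceed their rank-based thresholds, which is how a nominally significant result can fail to survive the correction.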

These results are robust to using normalized data.

The mean Absolute Value Difference is 1.23 and is significantly different from 0 (\(p=0.00\)). Using normalized data, the mean difference is 0.39, which likewise is significantly different from 0 (\(p=0.00\)).

This result is robust to using normalized data (\(p=0.04\)).

The mean Raw Difference is 0.42, which is significantly different from 0 (\(p=0.00\)). Using normalized data, the mean Raw Difference is not significantly different from 0 (\(p=0.98\)).

Using normalized data, the effect on political engagement remains significant (\(p=0.04\)), but the effect with regard to wealth does not (\(p=0.12\)). Neither result survives a Benjamini-Hochberg (1995) FDR correction for multiple comparisons. See Supplemental Appendix B, Table B2.

This result is robust to using normalized data (\(p=0.03\)). However, it does not survive a Benjamini-Hochberg (1995) FDR correction for multiple comparisons. See Supplemental Appendix B, Table B3.

This result is robust to using normalized data (\(p=0.01\)).

Alternatively, observed effects might be explained by differences in interest group pairs chosen for the different issues, a possibility discussed at greater length in this section. We thank an anonymous reviewer for this comment.

For instance, on the issue of equal pay for women, Republican men averaged 3.1 under the Likert elicitation and 4.8 under the donation elicitation on a 1–7 scale, where higher numbers indicate more support for a conservative issue stance or interest group.

Although we supplemented our sample with additional Republicans, it is also possible that some of our null findings with that group owed to the comparatively smaller sample size.

We thank an anonymous reviewer for this example.

We thank an anonymous reviewer for this recommendation and for providing the guiding principles for selection of interest groups in future work under the donation method that we enumerate in this paper.

We thank an anonymous reviewer for this idea.

Of course, not all individuals may be confident as to which side they prefer.

References

Aldrich, J. H., & McKelvey, R. D. (1977). A method of scaling with applications to the 1968 and 1972 presidential elections. American Political Science Review, 71(1), 111–130.

Alford, J., & Hibbing, J. R. (2007). Personal, interpersonal, and political temperaments. The ANNALS of the American Academy of Political and Social Science, 614, 196–212.

Anderson, L. R., Mellor, J. M., & Milyo, J. (2005). Do liberals play nice? The effects of party and political ideology in public goods and trust games. In J. Morgan (Ed.), Advances in Applied Microeconomics: Experimental and Behavioral Economics (pp. 107–131). Bingley: Emerald Group Publishing.

Ansolabehere, S., Rodden, J., & Snyder, J. M. (2008). The strength of issues: Using multiple measures to gauge preference stability, ideological constraint, and issue voting. American Political Science Review, 102(2), 215–232.

Arechar, A. A., Gächter, S., & Molleman, L. (2017). Conducting interactive experiments online. Experimental Economics, 21(1), 99–131.

Armstrong, J. S., Denniston, W. B., & Gordon, M. M. (1975). The use of the decomposition principle in making judgments. Organizational Behavior and Human Performance, 14(2), 257–263.

Bafumi, J., & Herron, M. C. (2010). Leapfrog representation and extremism: A study of American voters and their members in Congress. American Political Science Review, 104(3), 519–542.

Barberá, P. (2015). Birds of the same feather tweet together: Bayesian ideal point estimation using Twitter data. Political Analysis, 23(1), 76–91.

Benjamini, Y., & Hochberg, Y. (1995). Controlling the false discovery rate: A practical and powerful approach to multiple testing. Journal of the Royal Statistical Society Series B (Methodological), 57(1), 289–300.

Berinsky, A. J., Huber, G. A., & Lenz, G. S. (2012). Evaluating online labor markets for experimental research: Amazon.com’s Mechanical Turk. Political Analysis, 20(3), 351–368.

Bodner, R., & Prelec, D. (2003). Self-signaling in a neo-Calvinist model of everyday decision making. In I. Brocas & J. Carrillo (Eds.), Psychology of economic decisions (pp. 105–126). London: Oxford University Press.

Brooks, A. (2007). Who really cares. New York: Basic Books.

Chandar, B., & Weyl, G. (2017). Quadratic voting in finite populations. Working Paper.

Clifford, S., Jewell, R. M., & Waggoner, P. D. (2015). Are samples drawn from Mechanical Turk valid for research on political ideology? Research and Politics, 1–9.

Coppock, A. (2018). Generalizing from survey experiments conducted on Mechanical Turk: A replication approach. Political Science Research and Methods, 1–16.

Farc, M. M., & Sagarin, B. J. (2009). Using attitude strength to predict registration and voting behavior in the 2004 U.S. presidential elections. Basic and Applied Social Psychology, 31(2), 160–173.

Farwell, L., & Weiner, B. (2000). Bleeding hearts and the heartless: Popular perceptions of liberal and conservative ideologies. Personality and Social Psychology Bulletin, 26(7), 845–852.

Fiorina, M. P., Abrams, S. A., & Pope, J. C. (2008). Polarization in the American public: Misconceptions and misreadings. Journal of Politics, 70(2), 556–560.

Fisman, R., Jakiela, P., Kariv, S., & Markovits, D. (2015). The distributional preferences of an elite. Science, 349(6254), 1300–1307.

Fowler, J. H., & Kam, C. D. (2007). Beyond the self: Social identity, altruism, and political participation. The Journal of Politics, 69(3), 813–827.

Graham, J., Nosek, B. A., & Haidt, J. (2012). The moral stereotypes of liberals and conservatives: Exaggeration of differences across the political spectrum. PLoS ONE, 7(12), 1–13.

Haas, N., Hassan, M., Mansour, S., & Morton, R. B. (2017). Polarizing information and support for reform. Working Paper.

Hare, C., Armstrong, D. A., II, Bakker, R., Carroll, R., & Poole, K. T. (2015). Using Bayesian Aldrich-McKelvey scaling to study citizens’ ideological preferences and perceptions. American Journal of Political Science, 59(3), 759–774.

Hauser, D. J., & Schwarz, N. (2016). Attentive turkers: MTurk participants perform better on online attention checks than do subject pool participants. Behavior Research Methods, 48(1), 400–407.

Hill, S. J., & Tausanovitch, C. (2015). A disconnect in representation? Comparison of trends in congressional and public polarization. Journal of Politics, 77(4), 1058–1075.

Holbrook, A. A., Sterrett, D., Johnson, T. P., & Krysan, M. (2016). Racial disparities in political participation across issues: The role of issue-specific motivators. Political Behavior, 38(1), 1–32.

Horton, J. J., Rand, D. G., & Zeckhauser, R. J. (2011). The online laboratory: Conducting experiments in a real labor market. Experimental Economics, 14(3), 399–425.

Kristof, N. (2008). Bleeding heart tightwads. The New York Times.

Krosnick, J. A. (1988). Attitude importance and attitude change. Journal of Experimental Social Psychology, 24, 240–255.

Krosnick, J. A., Presser, S., Fealing, K. H., & Ruggles, S. (2015). The future of survey research: Challenges and opportunities. A Report to the National Science Foundation Presented by The National Science Foundation Advisory Committee for the Social, Behavioral and Economic Sciences Subcommittee on Advancing SBE Survey Research.

Lalley, S. P., & Weyl, G. (2016). Quadratic voting. Working Paper.

Levay, K. E., Freese, J., & Druckman, J. N. (2016). The demographic and political composition of Mechanical Turk samples. SAGE Open, 6(1), 1–17.

Malhotra, N., Krosnick, J. A., & Thomas, R. K. (2009). Optimal design of branching questions to measure bipolar constructs. The Public Opinion Quarterly, 73(2), 304–324.

Miller, J. M., Krosnick, J. A., Holbrook, A., Tahk, A., & Dionne, L. (2016). The impact of policy change threat on financial contributions to interest groups. In J. A. Krosnick, I. C. Chiang, & T. Stark (Eds.), Explorations in Political Psychology. New York: Psychology Press.

Mullinix, K. J., Leeper, T. J., Druckman, J. N., & Freese, J. (2015). The generalizability of survey experiments. Journal of Experimental Political Science, 2(2), 109–138.

Paolacci, G., Chandler, J., & Ipeirotis, P. G. (2010). Running experiments on Amazon Mechanical Turk. Judgment and Decision Making, 5(5),

Poole, K. T., & Rosenthal, H. (1985). A spatial model for legislative roll call analysis. American Journal of Political Science, 29(2), 357–384.

Poole, K. T. (1998). Recovering a basic space from a set of issue scales. American Journal of Political Science, 42(3), 954–993.

Poole, K. T., & Rosenthal, H. (2000). Congress: A political-economic history of roll call voting. New York: Oxford University Press.

Posner, E. A., & Weyl, G. (2015). Voting squared: Quadratic voting in democratic politics. Vanderbilt Law Review, 68(2), 441–500.

Quarfoot, D., Kohorn, D. V., Slavin, K., Sutherland, R., Goldstein, D., & Konar, E. (2017). Quadratic voting in the wild: Real people, real votes. Public Choice, 172(1–2), 283–303.

Visser, P. S., Krosnick, J. A., & Simmons, J. P. (2003). Distinguishing the cognitive and behavioral consequences of attitude importance and certainty: A new approach to testing the common-factor hypothesis. Journal of Experimental Social Psychology, 39(2), 118–141.

Visser, P. S., Krosnick, J. A., & Norris, C. J. (2016). Attitude importance and attitude-relevant knowledge: Motivator and enabler. In J. A. Krosnick, I. C. Chiang, & T. Stark (Eds.), Explorations in Political Psychology. New York: Psychology Press.

Treier, S., & Hillygus, D. S. (2009). The nature of political ideology in the contemporary electorate. The Public Opinion Quarterly, 73(4), 679–703.

Weyl, G. (2017). The robustness of quadratic voting. Public Choice, 172(1–2), 75–107.

Acknowledgements

We acknowledge the helpful research assistance of Arusyak Hakhnazaryan. We thank Douglas von Kohorn for helpful comments. The authors take credit for all errors. This research was approved by NYU’s Institutional Review Board under protocol IRB # 1304.

Appendices

Appendix 1: additional figures

Appendix 2: Estimation strategy

We estimate ideal points using the Bayesian multinomial item response theory (IRT) model presented in Hill and Tausanovitch (2015).

The authors assume a one-dimensional issue space wherein the probability that person i chooses response k to question j is given by the following:

where \(x_{i}\) represents person \(i\)'s ideology, \(y_{ij}\) their response to question \(j\), \(y_{ij} = k\) indicates response \(k\) to question \(j\), and \(K_j\) indicates response options for question \(j\), which may vary across questions.
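The displayed formula is missing from this copy of the text. As a sketch only, a standard multinomial-logit IRT form that is consistent with the definitions above can be written with hypothetical item parameters \(\alpha_{jk}\) (an intercept for response \(k\) to question \(j\)) and \(\beta_{jk}\) (a discrimination parameter); these symbols are assumptions and may not match the notation or exact specification in Hill and Tausanovitch (2015):

\[
P(y_{ij} = k \mid x_{i}) = \frac{\exp\left(\alpha_{jk} + \beta_{jk} x_{i}\right)}{\sum_{l \in K_j} \exp\left(\alpha_{jl} + \beta_{jl} x_{i}\right)}
\]

Under this form the probabilities over the response options in \(K_j\) sum to one for every person and question, and a model of this kind typically requires an identifying restriction, such as fixing the parameters of one reference response per question.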

The authors present the complete likelihood, which is the product of all individual observed likelihoods for each response, as below, where I is the set of all people, J is the set of all items, and \(I(y_{ij} = k)\) takes the value of 1 if respondent i gave response k to question j, and 0 otherwise.
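The displayed likelihood is missing from this copy of the text. Following the verbal description, and writing \(P(y_{ij} = k)\) for the response probability defined earlier in this appendix, a product of this kind would take the following form (a reconstruction from the description, not a verbatim copy of the original display):

\[
L = \prod_{i \in I} \prod_{j \in J} \prod_{k \in K_j} P(y_{ij} = k)^{I(y_{ij} = k)}
\]

The indicator in the exponent selects, for each person and question, only the probability of the response actually given; every other factor equals one. Note that the symbol I does double duty here, as the set of people in the outer product and as the indicator function in the exponent.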

Cite this article

Haas, N., Morton, R.B. Saying versus doing: a new donation method for measuring ideal points. Public Choice 176, 79–106 (2018). https://doi.org/10.1007/s11127-018-0558-9
