Abstract

On January 27, 2017, President Trump signed Executive Order 13769, which denied citizens of seven Muslim-majority countries entry into the United States. Opposition to what was termed the “Muslim ban” quickly amassed, producing sudden shifts in the information environment and in individual-level preferences. The present study examines whether within-subject shifts against the ban lasted over an extended period of time. Evidence from a three-wave panel study indicates that individual-level opinions, once they shifted against the ban, remained fairly stable one year later. Analysis of a large corpus of cable broadcast transcripts and newspaper articles further demonstrates that coverage of the ban from February 2017 to January 2018 did not dissipate, remained largely critical, and lacked any significant counter-narratives that might have altered citizens’ preferences once again. Our study underscores the potential of capturing the dynamics of rapid attitudinal shifts with timely panel data, and of assessing the durability of such changes over time. It also highlights how mass movements and political communication may alter and stabilize citizens’ policy preferences, even those that target stigmatized groups.

Source: New York Times, Washington Post, Wall Street Journal, USA Today

Notes

For an exception, see the multi-wave MTurk panel design used by Christenson and Glick (2015b).

While many studies have relied on online platforms such as MTurk for various experiments, Christenson and Glick (2013) have shown that MTurk, primarily due to its speed and flexibility, also offers clear advantages for panel studies, especially those that require immediate implementation.

Please see "Appendix 3" for a more detailed methodology. We relied on three trained coders, with an inter-coder reliability of 0.92 on 25% of the sample.

After matching respondents based on MTurk IDs and conducting data quality checks, the final dataset consists of \(n=422\) Wave 1, \(n=280\) Wave 2, and \(n=161\) Wave 3 respondents.

As an additional robustness check, we imputed missing data using chained imputation with \(m=5\) imputed datasets, based on education, age, income, party identification, race, gender, and Trump approval. That is, we imputed the full (three-wave) dataset for all wave 1 respondents and then again for just wave 2 and wave 3 respondents. In both imputed datasets, presented in Appendix 2 Table 13, mean ban attitudes shifted from wave 1 to wave 2, but not from wave 2 to wave 3. These results are substantively similar to the main findings detailed below.

We are also sensitive to the fact that MTurk respondents may be particularly aware of the news and more likely to be exposed to the Muslim ban backlash compared to the general population. If overly attentive respondents bias our results, those who reported watching the protests in wave 2 should give different ban attitude responses than those who reported not watching the protests (presumably less attentive people). We tested this by conducting a \(\chi ^{2}\) test between wave 2 ban attitude and “watched demonstrations” (1 = yes, 0 = no). We find no evidence of a statistically significant difference in wave 2 ban attitudes between the two groups (\(\chi ^{2}\) = 1.8, df = 4, p = 0.771), or even between the two variables when we subset to just respondents who completed the wave 2 survey (\(\chi ^{2}\) = 2.6, df = 4, p = 0.617). This provides some evidence that an overly news-attentive sample is not biasing our findings. We also located a 2016 probability-based representative Pew Research Center survey that asked questions about local news and national news consumption. We downloaded the data and calculated the percentage of respondents who reported watching television news. About 81% of respondents said they watched news, which is very similar to the percentage of our wave 2 respondents who reported watching local or national television news (78%). Thus, external evidence suggests our sample is not biased toward greater news consumption relative to the adult U.S. population. We discuss the Pew sample in greater detail in "Appendix 1".
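As a rough illustration of the test described in this note, the sketch below computes a Pearson \(\chi ^{2}\) statistic for a 5 × 2 table (five ban-attitude categories by watched/did not watch) and the matching upper-tail p-value. The cell counts are hypothetical placeholders, not the study's data, and the function names are ours; the p-value helper relies on the closed form that holds only for even degrees of freedom.

```python
import math


def pearson_chi_square(table):
    """Return (statistic, df) for a contingency table given as rows of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of rows and columns.
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return stat, df


def chi_square_sf_even_df(x, df):
    """Upper-tail probability P(X > x) for a chi-square with even df.

    Uses exp(-x/2) * sum_{k < df/2} (x/2)^k / k!, valid for even df only.
    """
    if df <= 0 or df % 2 != 0:
        raise ValueError("closed form requires a positive, even df")
    half = x / 2.0
    return math.exp(-half) * sum(half ** k / math.factorial(k) for k in range(df // 2))


# HYPOTHETICAL counts: rows = ban attitude 1..5, columns = (watched, did not watch).
example_table = [[60, 25], [35, 18], [30, 12], [28, 15], [40, 17]]
statistic, dof = pearson_chi_square(example_table)
p_value = chi_square_sf_even_df(statistic, dof)
```

Plugging the reported statistic into the helper, `chi_square_sf_even_df(1.8, 4)` gives roughly 0.77, in line with the p-value stated in the note.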

Due to the administration’s executive order ban changes, the wave 3 ban question read: “President Trump’s executive order restricting immigration from Syria, Iran, Libya, Yemen, Somalia, and Chad—do you strongly agree (5), somewhat agree (4), neither agree nor disagree (3), somewhat disagree (2) or strongly disagree with this order (1)?” These changes are minor, and given citizens’ low state of political knowledge, are unlikely to produce attitudinal effects due to question wording alone (Schuman and Presser 1996).

Question wording of all the variables used in the analyses is presented in "Appendix 1".

Krosnick et al. (2002) examine whether no-opinion options (e.g. “don’t know”) may discourage some respondents from cognitively engaging in work necessary to report the true opinions they do have. They find that the inclusion of no-opinion options may not enhance data quality and instead may preclude measurement of some meaningful opinions. This is particularly applicable in a context where online survey respondents may be motivated by speed and might not deeply engage with the survey questions when “don’t know” options are present. Based on this line of research, we precluded no-opinion options.

We excluded a dummy variable for Trump vote from the three-wave regression analysis due to a high number of missing cases. However, we do include a Trump favorability variable, which is highly correlated with Trump vote at 0.831.

For more details refer to "Appendix 1".

A concern is that our respondents vary in how much attention they pay to the survey, and that this might affect the reliability of our results. One way to test this possibility is to examine whether answers to factual questions about the political environment correlate with how long it takes the respondent to complete the survey. Respondents who take the survey more quickly may be giving random responses and thus may be more likely to get the factual questions incorrect. We test this possibility by correlating political knowledge with survey completion time. First, political knowledge is uncorrelated with survey completion time. Second, respondents who took longer to answer the survey (above the mean length) were no different in political knowledge than those who did not take as long (below the mean length), as measured by a \(\chi ^{2}\) test. We find similar results between reported Wave 1 Muslim ban attitudes and survey length (\(\chi ^{2}\) = 5.66, p = 0.22); Wave 2 Muslim ban attitudes and survey length (\(\chi ^{2}\) = 4.373, p = 0.348); and Wave 3 Muslim ban attitudes and survey length (\(\chi ^{2}\) = 3.09, p = 0.541).

Support for the border wall appears to have dropped somewhat, but the difference is not statistically significant. That said, to the extent the drop may be realized with a larger dataset, the change is sensible given the continued discussion and criticism of the border wall throughout the year.

We are cognizant that self-reported media consumption may be subject to social desirability. We note here again that our share of respondents reporting they watched local or national news is about 78%, whereas the comparable number from Pew is 81%. This provides added confidence that our respondents are answering truthfully.

One may observe that the Republican coefficient slightly increases from wave 1 to wave 2, but this is due to a comparison of a slightly larger drop in ban support among independents (the comparison group).

Our wave 2, but not wave 1, survey included measures of attention to news. We asked respondents if they “watched local or national television news” (1 = No, I have not done that, 2 = Yes, once or twice, 3 = Yes, several times), and whether they “read print or digital news stories” (1 = No, I have not done that, 2 = Yes, once or twice, 3 = Yes, several times). We summed answers to the two questions, then divided respondents into high attention (5 or 6 on the combined scale) and lower attention (2–4). It is possible that the protests stimulated citizens’ interest in the news, in which case this measure is not an ideal baseline of political attention. To compensate for this, we proxy for attention with education measured in wave 1.
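The attention split described in this note can be sketched in a few lines. The function name and the string labels are illustrative choices of ours; only the item coding (1–3) and the cut points (5–6 versus 2–4) come from the note.

```python
def attention_group(tv_news, read_news):
    """Classify a respondent from two items coded 1 (no) to 3 (several times)."""
    for answer in (tv_news, read_news):
        if answer not in (1, 2, 3):
            raise ValueError("each item must be coded 1, 2, or 3")
    combined = tv_news + read_news  # combined scale runs from 2 to 6
    return "high" if combined >= 5 else "lower"


# A respondent who watched several times and read once or twice scores 5.
example = attention_group(tv_news=3, read_news=2)
```

Under this coding a respondent needs "several times" on at least one item and at least "once or twice" on the other to count as high attention.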

Search terms: (1) democrat, democrats; (2) republican, republicans; (3) trump; (4) protest, protesters, protests, airport, airports; (5) graham, mccain; (6) schumer, pelosi; (7) American, unamerican, un-american, core values, religious freedom, religious test, liberty, violation, nation of immigrants.

References

Althaus, S. L., & Tewksbury, D. (2002). Agenda setting and the “new” news: Patterns of issue importance among readers of the paper and online versions of the New York Times. Communication Research, 29(2), 180–207.

Ashmore, R. D., Jussim, L. J., & Wilder, D. (2001). Social identity, intergroup conflict, and conflict reduction (Vol. 3). Oxford: Oxford University Press.

Broockman, D., & Kalla, J. (2016). Durably reducing transphobia: A field experiment on door-to-door canvassing. Science, 352(6282), 220–224.

Christenson, D. P., & Glick, D. M. (2013). Crowdsourcing panel studies and real-time experiments in MTurk. The Political Methodologist, 20(2), 27–32.

Christenson, D. P., & Glick, D. M. (2015a). Chief Justice Roberts’s health care decision disrobed: The microfoundations of the Supreme Court’s legitimacy. American Journal of Political Science, 59(2), 403–418.

Christenson, D. P., & Glick, D. M. (2015b). Issue-specific opinion change: The Supreme Court and health care reform. Public Opinion Quarterly, 79(4), 881–905.

Citrin, J., Reingold, B., & Green, D. P. (1990). American identity and the politics of ethnic change. The Journal of Politics, 52(4), 1124–1154.

Citrin, J., Wong, C., & Duff, B. (2001). The meaning of American national identity. Social Identity, Intergroup Conflict, and Conflict Reduction, 3, 71.

Collingwood, L., Lajevardi, N., & Oskooii, K. A. R. (2018). A change of heart? Why individual-level public opinion shifted against Trump’s Muslim Ban. Political Behavior, 40(4), 1035–1072.

Converse, P. E. (1964). The nature of belief systems in mass publics. In D. Apter (Ed.), Ideology and discontent. New York: Free Press.

Davis, D. W. (2007). Negative liberty: Public opinion and the terrorist attacks on America. New York: Russell Sage Foundation.

Davis, D. W., & Silver, B. D. (2004). Civil liberties vs. security: Public opinion in the context of the terrorist attacks on America. American Journal of Political Science, 48(1), 28–46.

Duss, M., Taeb, Y., Gude, K., & Sofer, K. (2015). Fear, Inc. 2.0. Center for American Progress.

Edgell, P., Gerteis, J., & Hartmann, D. (2006). Atheists as “other”: Moral boundaries and cultural membership in American society. American Sociological Review, 71(2), 211–234.

Erikson, R. S., MacKuen, M. B., & Stimson, J. A. (2002). The macro polity. Cambridge: Cambridge University Press.

Espenshade, T. J., & Calhoun, C. A. (1993). An analysis of public opinion toward undocumented immigration. Population Research and Policy Review, 12(3), 189–224.

Frendreis, J., & Tatalovich, R. (1997). Who supports English-only language laws? Evidence from the 1992 National Election Study. Social Science Quarterly, 78, 354–368.

Gaines, B. J., Kuklinski, J. H., & Quirk, P. J. (2006). The logic of the survey experiment reexamined. Political Analysis, 15(1), 1–20.

Gerber, A. S., Gimpel, J. G., Green, D. P., & Shaw, D. R. (2011). How large and long-lasting are the persuasive effects of televised campaign ads? Results from a randomized field experiment. American Political Science Review, 105(1), 135–150.

Gilens, M., & Murakawa, N. (2002). Elite cues and political decision-making. Research in Micropolitics, 6, 15–49.

Gustavsson, G. (2017). National attachment–cohesive, divisive or both?: The divergent links to solidarity from national identity, national pride, and national chauvinism. In Liberal nationalism and its critics: Normative and empirical questions, June 20–21, 2017.

Harrison, B. F., & Michelson, M. R. (2017). Listen, we need to talk: How to change attitudes about LGBT rights. New York, NY: Oxford University Press.

Huddy, L. (2001). From social to political identity: A critical examination of social identity theory. Political Psychology, 22(1), 127–156.

Huddy, L. (2015). Group identity and political cohesion. Emerging Trends in the Social and Behavioral Sciences: An Interdisciplinary, Searchable, and Linkable Resource. https://doi.org/10.1002/9781118900772.etrds0155.

Huddy, L., & Sears, D. O. (1995). Opposition to bilingual education: Prejudice or the defense of realistic interests? Social Psychology Quarterly, 58, 133–143.

Huddy, L., & Khatib, N. (2007). American patriotism, national identity, and political involvement. American Journal of Political Science, 51(1), 63–77.

Huff, C., & Tingley, D. (2015). “Who are these people?” Evaluating the demographic characteristics and political preferences of MTurk survey respondents. Research & Politics, 2(3), 2053168015604648.

Iyengar, S., & Kinder, D. R. (2010). News that matters: Television and American opinion. Chicago: University of Chicago Press.

Kalkan, K. O., Layman, G. C., & Uslaner, E. M. (2009). “Bands of others”? Attitudes toward Muslims in contemporary American Society. The Journal of Politics, 71(3), 847–862.

Kearns, E. M., Betus, A., & Lemieux, A. (2018). Why do some terrorist attacks receive more media attention than others? Justice Quarterly. https://doi.org/10.1080/07418825.2018.1524507.

Kinder, D. R., & Sanders, L. M. (1996). Divided by color: Racial politics and democratic ideals. Chicago, IL: University of Chicago Press.

Klar, S. (2013). The influence of competing identity primes on political preferences. The Journal of Politics, 75(4), 1108–1124.

Klar, S. (2014). Partisanship in a social setting. American Journal of Political Science, 58(3), 687–704.

Krosnick, J. A. (1988). Attitude importance and attitude change. Journal of Experimental Social Psychology, 24(3), 240–255.

Krosnick, J. A. (1991). Response strategies for coping with the cognitive demands of attitude measures in surveys. Applied Cognitive Psychology, 5(3), 213–236.

Krosnick, J. A. (1999). Survey research. Annual Review of Psychology, 50(1), 537–567.

Krosnick, J. A., Holbrook, A. L., Berent, M. K., Carson, R. T., Michael Hanemann, W., Kopp, R. J., et al. (2002). The impact of “no opinion” response options on data quality: Non-attitude reduction or an invitation to satisfice? Public Opinion Quarterly, 66(3), 371–403.

Krosnick, J. A., & Kinder, D. R. (1990). Altering the foundations of support for the president through priming. American Political Science Review, 84(2), 497–512.

Lajevardi, N. (2017). A comprehensive study of Muslim American discrimination by legislators, the media, and the masses. Doctoral dissertation, University of California, San Diego.

Lajevardi, N., & Oskooii, K. A. R. (2018). Old-fashioned racism, contemporary Islamophobia, and the isolation of Muslim Americans in the age of Trump. Journal of Race, Ethnicity, and Politics, 3(1), 112–152.

Lajevardi, N., & Abrajano, M. A. (2018). How negative sentiment towards Muslim Americans predicts support for Trump in the 2016 presidential election. The Journal of Politics, 81(1), 296–302.

Lenz, G. S. (2013). Follow the leader?: How voters respond to politicians’ policies and performance. Chicago, IL: University of Chicago Press.

Levay, K. E., Freese, J., & Druckman, J. N. (2016). The demographic and political composition of Mechanical Turk samples. Sage Open, 6(1), 2158244016636433.

Levendusky, M. S. (2010). Clearer cues, more consistent voters: A benefit of elite polarization. Political Behavior, 32(1), 111–131.

Mason, L. (2013). The rise of uncivil agreement: Issue versus behavioral polarization in the American electorate. American Behavioral Scientist, 57(1), 140–159.

McCombs, M. E., & Shaw, D. L. (1972). The agenda-setting function of mass media. Public Opinion Quarterly, 36(2), 176–187.

Mullinix, K. J., Leeper, T. J., Druckman, J. N., & Freese, J. (2015). The generalizability of survey experiments. Journal of Experimental Political Science, 2(2), 109–138.

Nelson, T. E., & Kinder, D. R. (1996). Issue frames and group-centrism in American public opinion. The Journal of Politics, 58(4), 1055–1078.

Page, B. I., & Shapiro, R. Y. (1982). Changes in Americans’ policy preferences, 1935–1979. Public Opinion Quarterly, 46(1), 24–42.

Page, B. I., & Shapiro, R. Y. (2010). The rational public: Fifty years of trends in Americans’ policy preferences. Chicago: University of Chicago Press.

Putnam, R. D., & Campbell, D. E. (2010). American grace: How religion divides and unites us. New York: Simon & Schuster.

Redlawsk, D. P. (2002). Hot cognition or cool consideration? Testing the effects of motivated reasoning on political decision making. Journal of Politics, 64(4), 1021–1044.

Roberts, M., Wanta, W., & Dzwo, T.-H. (2002). Agenda setting and issue salience online. Communication Research, 29(4), 452–465.

Scheufele, D. A., & Tewksbury, D. (2006). Framing, agenda setting, and priming: The evolution of three media effects models. Journal of Communication, 57(1), 9–20.

Schildkraut, D. J. (2003). American identity and attitudes toward official-English policies. Political Psychology, 24(3), 469–499.

Schuman, H., & Presser, S. (1996). Questions and answers in attitude surveys: Experiments on question form, wording, and context. Thousand Oaks: Sage.

Taber, C. S., & Lodge, M. (2006). Motivated skepticism in the evaluation of political beliefs. American Journal of Political Science, 50(3), 755–769.

Tesler, M. (2015). Priming predispositions and changing policy positions: An account of when mass opinion is primed or changed. American Journal of Political Science, 59(4), 806–824.

Zaller, J. (1992). The nature and origins of mass opinion. Cambridge: Cambridge University Press.

Acknowledgements

We would like to thank the past and current editorial team of Political Behavior and the three anonymous reviewers for their helpful feedback and suggestions. Special thanks are also extended to Erin Cassese, Seulgi Lee, Charles Mills, Emily Tohma, Ali Valenzuela, and participants at the UCSD PRIEC, UCR Mass Behavior Brown Bag Series, Princeton Center for the Study of Democratic Politics workshop, and APSA panel on the racialization of Islam and Muslims.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Authors are listed in reverse alphabetical order; authorship is equal. Data and code to reproduce the findings are available at: https://doi.org/10.7910/DVN/NNXBHP.

Appendices

Appendix 1

Survey Question Wording

- DV: President Trump’s executive order restricting immigration from Syria, Iran, Iraq, Libya, Yemen, Somalia, and Sudan (wave 3: Syria, Iran, Libya, Yemen, Somalia, and Chad). Strongly disagree (1); Somewhat disagree (2); Neither agree nor disagree (3); Somewhat agree (4); Strongly agree (5).

- President Trump’s executive order allowing for the Keystone and Dakota Access Pipelines. Strongly disagree (1); Somewhat disagree (2); Neither agree nor disagree (3); Somewhat agree (4); Strongly agree (5).
- President Trump’s executive order to build a wall on the southern border. Strongly disagree (1); Somewhat disagree (2); Neither agree nor disagree (3); Somewhat agree (4); Strongly agree (5).
- Income: What is your family’s annual income? Under $20,000 a year (1); Between $20,000 and $40,000 a year (2); Between $40,000 and $60,000 a year (3); Between $60,000 and $80,000 a year (4); Between $80,000 and $120,000 a year (5); Over $120,000 a year (6). $60K or less = 1; else = 0.
- Education: What is the highest level of education you have completed? No High School Degree (1); High School Degree (2); Some College (3); 2-Year College Degree (4); 4-Year College Degree (5); Post Graduate Degree (6). Some College or less = 1; else = 0.
- Which political party do you most align with? (1 = Democrat; else = 0; 1 = Republican; else = 0; Independent/other = base category)

- American Identity (additive scale): To what extent do you agree or disagree with the following statements: strongly disagree (1), somewhat disagree (2), neither agree nor disagree (3), somewhat agree (4), or strongly agree (5)? The scale runs from 4 (no American identity) to 20 (high American identity):
  - My American identity is an important part of my “self.”
  - Being an American is an important part of how I see myself.
  - I see myself as a typical American person.
  - I am proud to be an American.

- The Muslim affect scale, developed in Lajevardi (2017) and tested extensively in Lajevardi and Abrajano (2018), consists of nine questions that scale at an alpha of 0.91. With respect to Muslim Americans, how much do you agree or disagree with the following statements: strongly disagree, somewhat disagree, neither agree nor disagree, somewhat agree, strongly agree? (statements (re)coded so that high values indicate positive affect)
  - Muslim Americans integrate successfully into American culture.
  - Muslim Americans sometimes do not have the best interests of Americans at heart.
  - Muslims living in the US should be subject to more surveillance than others.
  - Muslim Americans, in general, tend to be more violent than other people.
  - Most Muslim Americans reject jihad and violence.
  - Most Muslim Americans lack basic English language skills.
  - Most Muslim Americans are not terrorists.
  - Wearing headscarves should be banned in all public places.
  - Muslim Americans do a good job of speaking out against Islamic terrorism.

- Age: In what year were you born? (coded as 2016 minus the answer)
- Female: What is your gender? Male (0) or Female (1)
- White: What racial group best describes you? White (1), else = 0.
- Do you approve of the way President Trump is handling his job as President? 1 = Approve, else = 0.
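The recodes and scales listed above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' replication code: the function names are ours, the choice of which Muslim affect items to reverse is left to the caller, and Cronbach's alpha is computed with the standard formula using sample variances.

```python
from statistics import variance


def low_income(income_category):
    """Dummy for $60K or less: categories 1-3 on the six-point income item."""
    return 1 if income_category <= 3 else 0


def some_college_or_less(education_category):
    """Dummy for 'Some College or less': categories 1-3 on the education item."""
    return 1 if education_category <= 3 else 0


def reverse_code(answer, low=1, high=5):
    """Flip a Likert answer so that high values point the other way."""
    return low + high - answer


def additive_scale(answers, reversed_items=()):
    """Sum Likert answers, reversing the items whose indices are listed."""
    return sum(
        reverse_code(a) if i in reversed_items else a
        for i, a in enumerate(answers)
    )


def cronbach_alpha(rows):
    """Cronbach's alpha for respondents-by-items data (already recoded)."""
    k = len(rows[0])
    item_variances = [variance(column) for column in zip(*rows)]
    total_variance = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Four American identity items answered "strongly agree" give the scale maximum.
identity_score = additive_scale([5, 5, 5, 5])
```

For the nine-item Muslim affect scale, a caller would pass the indices of the negatively worded statements as `reversed_items` so that higher totals indicate more positive affect.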

2016 Pew Research Center’s American Trends Panel Wave 14

Pew fielded a survey from January 12 to February 8, 2016. The survey is a mixed mode national, probability-based panel of adults in the United States. The survey sample size is 4654 (4339 by web and 315 by mail), with a margin of error of ± 1.95 percentage points. The data come with survey weights that account for differential probabilities of selection into the panel as well as issues related to non-response. Survey weights were used to calculate the reported percentages. The three questions we used are posted below:

And how often do you...

- Watch local television news?
  - Often (1)
  - Sometimes (2)
  - Hardly ever (3)
  - Never (4)
- Watch national evening network television news (such as ABC World News, CBS Evening News, or NBC Nightly News)?
  - Often (1)
  - Sometimes (2)
  - Hardly ever (3)
  - Never (4)
- Watch cable television news (such as CNN, The Fox News cable channel, or MSNBC)?
  - Often (1)
  - Sometimes (2)
  - Hardly ever (3)
  - Never (4)
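A weighted percentage of television news watchers from items like these could be computed along the following lines. The rule for who counts as a watcher is an assumption made for illustration (answering "Often" or "Sometimes" on at least one of the three items); the appendix does not state the exact rule, and the function names and example weights are hypothetical.

```python
def watches_tv_news(local, network, cable, threshold=2):
    """ASSUMED rule: 'Often' (1) or 'Sometimes' (2) on any of the three items."""
    return any(answer <= threshold for answer in (local, network, cable))


def weighted_share(flags, weights):
    """Share of total survey weight held by respondents whose flag is True."""
    total_weight = sum(weights)
    flagged_weight = sum(w for flag, w in zip(flags, weights) if flag)
    return flagged_weight / total_weight


# HYPOTHETICAL respondents: (local, network, cable) answers and survey weights.
answers = [(1, 3, 4), (4, 4, 4), (3, 2, 4), (4, 3, 3)]
weights = [1.2, 0.8, 1.0, 1.0]
flags = [watches_tv_news(*a) for a in answers]
share = weighted_share(flags, weights)
```

Dividing by the sum of weights rather than the number of respondents is what distinguishes this from an unweighted percentage.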

Appendix 2

See Tables 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14 and 15.

Appendix 3: Media Content Analysis

Media Transcript Valence Content Analysis Description

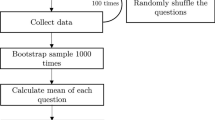

All CNN, Fox News, and MSNBC broadcast transcripts from January 1 to December 31, 2017 were downloaded from Lexis-Nexis Academic. The unit of analysis is the media segment, which typically lasts 5–10 minutes within a television program. Below we review our technical coding procedure for CNN. There were not enough FOX and MSNBC transcripts to accurately represent media coverage of the Muslim ban.

CNN Media Transcripts

In total, the CNN corpus consists of 40,287 segments. All transcript text was converted to lower case, then run through a filter based on whether the segment included at least one occurrence of the terms “Muslim ban” or “Travel ban.” In total, 3050 segments matched our criteria.

Within each segment, we further split the data based on a key word in context (KWIC) search on the term “Muslim,” to assess how anchors and guests talked about Muslims specifically. We selected 25 words on either side of the term “Muslim,” which we refer to as a snippet. The snippet is empirically large enough to content analyze how the word “Muslim” is used, and small enough to not conflate the utterance with other themes.
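The filtering and KWIC steps can be sketched as below. This is an illustrative reading of the procedure rather than the authors' code: the function names are ours, tokens are split on whitespace, and matching the exact token "muslim" (after stripping punctuation) is an assumption, since the text does not say how variants such as "Muslims" were handled.

```python
import re

BAN_TERMS = ("muslim ban", "travel ban")


def mentions_ban(segment_text):
    """True if the lower-cased segment contains either search phrase."""
    text = segment_text.lower()
    return any(term in text for term in BAN_TERMS)


def kwic_snippets(segment_text, keyword="muslim", window=25):
    """Return one snippet per keyword hit: `window` words on either side."""
    tokens = segment_text.lower().split()
    snippets = []
    for i, token in enumerate(tokens):
        # ASSUMPTION: exact-token match after removing non-letter characters.
        if re.sub(r"[^a-z]", "", token) == keyword:
            start = max(0, i - window)
            snippets.append(" ".join(tokens[start:i + window + 1]))
    return snippets


segment = "Critics say the Muslim ban was blocked by a federal judge today."
if mentions_ban(segment):
    snippets = kwic_snippets(segment, window=3)
```

With the default `window=25`, each snippet holds at most 51 words, and fewer when the keyword falls near the start or end of a segment.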

Next, we randomly sampled n = 1200 snippets from all “Muslim” snippets. Each snippet was then hand-coded for valence: anti-ban, balanced/informational, and pro-ban. Pro-ban snippets are those where the discussant advocates support for Trump’s Muslim ban policy. Anti-ban snippets are the converse: the discussant criticizes the Muslim ban and advocates opposition to the policy. Balanced or informational segments do not express an opinion one way or the other, such as an anchor announcing a court decision, or include rare situations where the snippet is both pro and anti-ban.

For this specific analysis, three trained coders independently coded the same 300 snippets, reaching an inter-coder reliability of 0.92. The remaining 900 snippets were then divided evenly among the three coders. Upon completion of the snippet coding, daily valence scores were calculated based on the number of anti-ban, pro-ban, and informational (balanced) occurrences in all Muslim ban segments appearing on that day. We thus have three daily count measures of Muslim ban media valence across the full year time series. For example, on February 1, 2017, one of the most active days exhibited in Fig. 3, we observed 25 anti-ban snippets spread across the full day’s programming, 40 balanced/informational snippets, and 7 pro-ban snippets.
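A minimal sketch of the sampling, coder assignment, and daily aggregation steps follows. The helper names, the fixed seed, and the label strings are illustrative assumptions; the February 1, 2017 counts are the ones reported in the paragraph above.

```python
import random
from collections import Counter, defaultdict


def sample_snippets(snippets, n, seed=0):
    """Draw n snippets without replacement (seed fixed for reproducibility)."""
    return random.Random(seed).sample(snippets, n)


def split_evenly(items, n_parts):
    """Deal items round-robin into n_parts lists of near-equal size."""
    return [items[i::n_parts] for i in range(n_parts)]


def daily_valence_counts(coded_snippets):
    """Count valence labels per day from (date, valence) pairs."""
    counts = defaultdict(Counter)
    for date, valence in coded_snippets:
        counts[date][valence] += 1
    return counts


# Rebuild the reported February 1, 2017 tallies from individual coded snippets.
coded = (
    [("2017-02-01", "anti-ban")] * 25
    + [("2017-02-01", "balanced")] * 40
    + [("2017-02-01", "pro-ban")] * 7
)
feb_first = daily_valence_counts(coded)["2017-02-01"]
```

Splitting the 900 snippets that remain after the shared reliability subset with `split_evenly(remaining, 3)` yields 300 per coder.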

FOX and MSNBC Media Transcripts

Unfortunately, neither FOX News nor MSNBC provides Lexis-Nexis with its full array of media segment transcripts over the time period studied. In our search with the exact same date criterion, we only managed to locate 2321 Fox News media segments, with just 370 segments matching the “Muslim ban”/“Travel Ban” filter criteria. MSNBC transcripts exhibit a similar pattern. We located just 1,627 MSNBC total segments from the Lexis-Nexis broadcast transcripts database for the time period January 1, 2017–December 31, 2017. Of these, just 340 segments were about the “Muslim ban”/“Travel Ban.” As with the CNN analysis, we further split both corpora by a KWIC search on the term “Muslim,” selecting 25 words on either side of the term. This resulted in n = 818 snippets referencing the term “Muslim” for FOX News and n = 802 for MSNBC. We coded all of these snippets using the same criteria we employed for CNN, resulting in three categories: anti-ban, balanced/informational, pro-ban. However, given the low sample size, we immediately noticed many repeat snippets. Due to this issue and the fact that both FOX and MSNBC transcripts were not routinely or systematically uploaded, we are unable to make any reliable valence-related inferences about the ban coverage throughout 2017.

Newspaper Valence Content Analysis Description

New York Times

To produce the New York Times (NYT) corpus, we interfaced with the NYT Application Programming Interface (API) “article search.” Given the technicalities of the API, we submitted searches to download any article containing the phrase “Muslim ban” or “Travel ban” between January 1, 2017 and December 31, 2017. We combined the resulting observations into one corpus. We then removed any duplicate articles—those that appeared more than once since some articles include both “Muslim ban” and “Travel ban.”
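The merge-and-deduplicate step can be sketched as follows. The record layout and the `article_id` key are hypothetical stand-ins for whatever unique identifier the search results carry; this does not reproduce the NYT API's actual response format.

```python
def merge_unique(*result_sets, key="article_id"):
    """Combine search results, keeping the first copy of each article."""
    seen = set()
    merged = []
    for results in result_sets:
        for article in results:
            if article[key] not in seen:
                seen.add(article[key])
                merged.append(article)
    return merged


# HYPOTHETICAL results from the two phrase searches; "b" matches both phrases.
muslim_ban_hits = [{"article_id": "a"}, {"article_id": "b"}]
travel_ban_hits = [{"article_id": "b"}, {"article_id": "c"}]
corpus = merge_unique(muslim_ban_hits, travel_ban_hits)
```

Keying on an identifier rather than the headline avoids dropping distinct articles that happen to share a title.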

This produced a corpus containing 710 news articles across the full year. We then coded each article as positive (pro-ban), balanced/informational, or negative (anti-ban). Positive articles portray the “Muslim ban” EO in an overall favorable light, negative articles portray it in an overall negative light, and balanced/informational articles are either strictly informational or an even mix of negative and positive with no clear takeaway. For NYT, WSJ, WAPO, and USAT articles, three coders read 20% of all articles, achieving an inter-coder reliability of 89%. One coder then separately coded the rest of the corpus.
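The text does not say which reliability statistic underlies the 89% figure. Purely as an illustration, and assuming a simple percent-agreement measure, mean pairwise agreement across three coders could be computed like this (the function name and the example labels are hypothetical):

```python
from itertools import combinations


def mean_pairwise_agreement(codings):
    """Average share of items on which each pair of coders gives the same label.

    `codings` is a list with one equal-length label list per coder.
    """
    pair_scores = []
    for first, second in combinations(codings, 2):
        matches = sum(a == b for a, b in zip(first, second))
        pair_scores.append(matches / len(first))
    return sum(pair_scores) / len(pair_scores)


# HYPOTHETICAL labels from three coders on the same four articles.
coder_1 = ["anti", "anti", "balanced", "pro"]
coder_2 = ["anti", "anti", "balanced", "pro"]
coder_3 = ["anti", "balanced", "balanced", "pro"]
agreement = mean_pairwise_agreement([coder_1, coder_2, coder_3])
```

Chance-corrected statistics such as Krippendorff's alpha or Fleiss' kappa are common alternatives and will generally give lower values than raw agreement on the same data.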

Upon deeper inspection, 30% of the NYT articles were not about the Muslim ban or executive order but included the search terms. These articles were subsequently dropped from the analysis. This type of article is typified by an article appearing in the Book Review section on April 21, 2017, entitled “American Poets Refusing to Go Gentle Rage Against the Right.” The article is wide ranging and focused on poets’ criticisms of modern politics. The only reference to a travel ban occurs here: “In March the Poetry Coalition which includes 25 organizations in the United States held readings around the country focused on the theme of migration with some programs put together partly in response to the Trump administration’s attempted travel bans. At City Lights Bookstore in San Francisco the Poetry Society of America held a reading and discussion about the plight of Syrian refugees.” Thus, while the article does contain one of the search terms, it is not about the “Muslim ban”; the term simply turns up in passing.

Our final NYT corpus includes 496 articles. Of these articles, 263 (53%) were coded as negative towards the order (anti-ban), 230 (46%) balanced/informational, and only 3 (1%) positive towards the order (pro-ban). Figure 5 shows the corpus’s over-time valence distribution.

Wall Street Journal

For the Wall Street Journal (WSJ), we accessed ProQuest, an academic database program that archives many media sources. We submitted a search for “Travel ban” or “Muslim ban” for any articles between January 1, 2017 and December 31, 2017. This produced 545 total articles. Of the articles appearing in the corpus, as with the NYT search, 236 were not about the Muslim ban or controversy surrounding the executive order. Thus, these articles were dropped, leaving us with a total of 308 articles. The articles were then hand-coded in the same manner as those in the NYT corpus. This left us with 106 (34%) anti-ban/negative articles, 183 (59%) balanced/informational articles, and 19 (6%) pro-ban/positive articles.

Washington Post

We also accessed ProQuest to download Washington Post (WAPO) articles containing the search terms “Travel ban” or “Muslim ban” between January 1, 2017 and December 31, 2017. This produced 569 total articles. Of the articles appearing in the corpus, 262 were not about the Muslim ban or controversy surrounding the executive order. Thus, these articles were dropped, leaving us with a total of 307 articles. The articles were then hand-coded in the same manner as those in the NYT and WSJ corpora. This left us with 143 (47%) anti-ban/negative articles, 160 (52%) balanced/informational articles, and 4 (1.3%) pro-ban/positive articles.

USA Today

To acquire USA Today (USAT) articles, we accessed Access World News’ NewsBank, an academic database program that archives many media sources including USAT. We submitted a search for “Travel ban” or “Muslim ban” for any articles between January 1, 2017 and December 31, 2017. This produced 154 total articles. Of the articles appearing in the database, as with the other searches, 40 were not about the Muslim ban or controversy surrounding the executive order. Thus, these articles were dropped, leaving us with a total of 114 articles. The articles were then hand-coded in the same manner as those in the NYT, WSJ, and WAPO corpora. This left us with 73 (64%) anti-ban/negative articles, 29 (25%) balanced/informational articles, and 12 (10.5%) pro-ban/positive articles.
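The valence shares reported for the four newspapers follow directly from the coded counts. The short sketch below recomputes them; the counts are copied from the text above, while the dictionary layout and function name are our own.

```python
# Coded article counts per outlet, as reported in this appendix.
CODED_COUNTS = {
    "NYT": {"anti-ban": 263, "balanced": 230, "pro-ban": 3},
    "WSJ": {"anti-ban": 106, "balanced": 183, "pro-ban": 19},
    "WAPO": {"anti-ban": 143, "balanced": 160, "pro-ban": 4},
    "USAT": {"anti-ban": 73, "balanced": 29, "pro-ban": 12},
}


def valence_shares(counts):
    """Convert valence counts into percentages of the outlet's coded articles."""
    total = sum(counts.values())
    return {label: 100.0 * n / total for label, n in counts.items()}


shares_by_outlet = {
    outlet: valence_shares(counts) for outlet, counts in CODED_COUNTS.items()
}
```

Across all four outlets, pro-ban articles are the smallest category, which is the pattern the content analysis is meant to document.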

About this article

Cite this article

Oskooii, K.A.R., Lajevardi, N. & Collingwood, L. Opinion Shift and Stability: The Information Environment and Long-Lasting Opposition to Trump’s Muslim Ban. Polit Behav 43, 301–337 (2021). https://doi.org/10.1007/s11109-019-09555-8

DOI: https://doi.org/10.1007/s11109-019-09555-8