Abstract

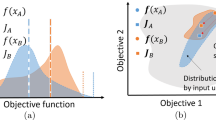

We consider Reliability-based Robust Design Optimization (RRDO), in which the mean of an objective function is optimized subject to constraints that must be satisfied in probability. The high computational cost of the simulations underlying the objective and constraints severely limits the number of evaluations and makes this type of problem particularly challenging. The numerical cost and the parametric uncertainties have been addressed with Bayesian optimization algorithms that leverage Gaussian process models of the objective and constraint functions. Current Bayesian optimization algorithms call the objective and constraint functions simultaneously at each iteration. This is often unnecessary and misses opportunities for computational savings. This article proposes a new, efficient RRDO Bayesian optimization algorithm that optimally selects for evaluation not only the usual design variables but also one or several constraints, along with the uncertain parameters. The algorithm relies on a multi-output Gaussian model of the constraints. The coupling of constraints and their separate selection are introduced gradually in three algorithm variants, which are compared to a reference Bayesian approach. The results are promising in terms of convergence speed, accuracy and stability, as observed on a two-, a four- and a 27-dimensional problem.


Notes

The Kriging Believer assumption states that the value of a function at an unobserved point is equal to the kriging prediction at that point. For our constraints, it means \(g_p(\tilde{\textbf{x}},\tilde{\textbf{u}}) = {{\textbf{m}}_{\textbf{G}}^{(t)}}_p(\tilde{\textbf{x}},\tilde{\textbf{u}}) ~,~p=1,\ldots ,l\).
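As an illustration of this assumption, the following minimal sketch uses a single-output, zero-mean GP with a squared-exponential kernel (both are simplifying assumptions; the article uses a multi-output model of the constraints). The function `np.sin(3 x)` is a hypothetical stand-in for an expensive constraint. The unobserved value at a candidate point is replaced by the kriging mean, and the model is conditioned on this pseudo-observation.

```python
import numpy as np

def rbf(a, b, length=0.5):
    """Squared-exponential kernel between two sets of 1-D inputs."""
    d = a[:, None] - b[None, :]
    return np.exp(-0.5 * (d / length) ** 2)

def gp_posterior(x_train, y_train, x_test, jitter=1e-10):
    """Zero-mean GP (simple kriging) posterior mean and variance."""
    k_tt = rbf(x_train, x_train) + jitter * np.eye(len(x_train))
    k_st = rbf(x_test, x_train)
    mean = k_st @ np.linalg.solve(k_tt, y_train)
    var = 1.0 - np.einsum("ij,ji->i", k_st, np.linalg.solve(k_tt, k_st.T))
    return mean, np.maximum(var, 0.0)

x_obs = np.array([0.0, 0.4, 1.0])
y_obs = np.sin(3.0 * x_obs)        # hypothetical stand-in for a constraint g_p
x_new = np.array([0.7])            # candidate point, not yet evaluated
x_test = np.linspace(0.0, 1.0, 11)

mean_before, var_before = gp_posterior(x_obs, y_obs, x_test)

# Kriging Believer: pretend the unobserved value equals the kriging mean.
y_believed, _ = gp_posterior(x_obs, y_obs, x_new)
x_aug = np.concatenate([x_obs, x_new])
y_aug = np.concatenate([y_obs, y_believed])
mean_after, var_after = gp_posterior(x_aug, y_aug, x_test)
```

In this sketch the predictive mean is left unchanged by the pseudo-observation while the predictive variance decreases, which is why the assumption is convenient for anticipating the effect of a future evaluation without running the simulator.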

References

Alvarez MA, Rosasco L, Lawrence ND (2011) Kernels for vector-valued functions: A review. arXiv preprint arXiv:1106.6251

Balesdent M, Brevault L, Morio J, Chocat R (2020) Overview of problem formulations and optimization algorithms in the presence of uncertainty. Springer, Cham, pp 147–183. https://doi.org/10.1007/978-3-030-39126-3_5

Bect J, Ginsbourger D, Li L, Picheny V, Vazquez E (2012) Sequential design of computer experiments for the estimation of a probability of failure. Stat Comput 22(3):773–793

Beland JJ, Nair PB (2017) Bayesian optimization under uncertainty. In: NIPS BayesOpt 2017 workshop

Brooks CJ, Forrester A, Keane A, Shahpar S (2011) Multi-fidelity design optimisation of a transonic compressor rotor

Cakmak S, Astudillo R, Frazier P, Zhou E (2020) Bayesian optimization of risk measures. arXiv preprint arXiv:2007.05554

Chen Z, Peng S, Li X, Qiu H, Xiong H, Gao L, Li P (2015) An important boundary sampling method for reliability-based design optimization using kriging model. Struct Multidiscip Optim 52(1):55–70

Cousin A, Garnier J, Guiton M, Zuniga M (2020) Chance constraint optimization of a complex system: Application to the fatigue design of a floating offshore wind turbine mooring system. In: WCCM-ECCOMAS2020. https://www.scipedia.com/public/Cousin_et_al_2021a

Deville Y, Ginsbourger D, Roustant O, Durrande N (2015) Package ‘kergp’

Dubourg V, Sudret B, Bourinet J-M (2011) Reliability-based design optimization using kriging surrogates and subset simulation. Struct Multidiscip Optim 44(5):673–690

Dunham J (1998) CFD validation for propulsion system components. Technical report, Advisory Group for Aerospace Research and Development, Neuilly-sur-Seine (France)

El Amri R, Le Riche R, Helbert C, Blanchet-Scalliet C, Da Veiga S (2021) A sampling criterion for constrained Bayesian optimization with uncertainties. https://arxiv.org/abs/2103.05706

Fernández-Godino MG, Park C, Kim N-H, Haftka RT (2016) Review of multi-fidelity models. arXiv preprint arXiv:1609.07196

Garland N, Le Riche R, Richet Y, Durrande N (2020) Multi-fidelity for MDO using gaussian processes. In: Aerospace system analysis and optimization in uncertainty. Springer, pp 295–320

Genz A, Bretz F (2009) Computation of Multivariate Normal and T Probabilities, vol 195. Springer

Ghoreishi SF, Allaire D (2019) Multi-information source constrained Bayesian optimization. Struct Multidiscip Optim 59(3):977–991

Goulard M, Voltz M (1992) Linear coregionalization model: tools for estimation and choice of cross-variogram matrix. Math Geol 24(3):269–286

Hernández-Lobato JM, Gelbart MA, Adams RP, Hoffman MW, Ghahramani Z (2016) A general framework for constrained Bayesian optimization using information-based search. J Mach Learn Res 17:1–53

Janusevskis J, Le Riche R (2012) Simultaneous kriging-based estimation and optimization of mean response. J Glob Optim. https://doi.org/10.1007/s10898-011-9836-5

Jones DR, Schonlau M, Welch WJ (1998) Efficient global optimization of expensive black-box functions. J Global Optim 13(4):455–492

Kennedy MC, O’Hagan A (2000) Predicting the output from a complex computer code when fast approximations are available. Biometrika 87(1):1–13

Lacaze S, Missoum S (2013) Reliability-based design optimization using kriging and support vector machines. In: Proceedings of the 11th international conference on structural safety & reliability, New York

Le Gratiet L, Garnier J (2014) Recursive co-kriging model for design of computer experiments with multiple levels of fidelity. Int J Uncertain Quantific 4(5):1

Li X, Qiu H, Chen Z, Gao L, Shao X (2016) A local kriging approximation method using mpp for reliability-based design optimization. Comput Struct 162:102–115

Mattrand C, Beaurepaire P, Gayton N (2021) Adaptive kriging-based methods for failure probability evaluation: Focus on ak methods. Mechanical Engineering in Uncertainties From Classical Approaches to Some Recent Developments 205:1

Meliani M, Bartoli N, Lefebvre T, Bouhlel M-A, Martins JR, Morlier J (2019) Multi-fidelity efficient global optimization: methodology and application to airfoil shape design. In: AIAA Aviation 2019 Forum, p 3236

Menz M, Gogu C, Dubreuil S, Bartoli N, Morio J (2020) Adaptive coupling of reduced basis modeling and kriging based active learning methods for reliability analyses. Reliabil Eng Syst Saf 196:106771

Mockus J (2012) Bayesian approach to global optimization: theory and applications, vol 37. Springer, Berlin

Moustapha M, Sudret B, Bourinet J-M, Guillaume B (2016) Quantile-based optimization under uncertainties using adaptive kriging surrogate models. Struct Multidiscip Optim 54(6):1403–1421

Nemirovski A (2012) On safe tractable approximations of chance constraints. Eur J Oper Res 219(3):707–718

Peherstorfer B, Willcox K, Gunzburger M (2018) Survey of multifidelity methods in uncertainty propagation, inference, and optimization. SIAM Rev 60(3):550–591

Pelamatti J, Brevault L, Balesdent M, Talbi E-G, Guerin Y (2020) Overview and comparison of gaussian process-based surrogate models for mixed continuous and discrete variables: application on aerospace design problems. In: High-performance simulation-based optimization. Springer, pp 189–224

Perdikaris P, Raissi M, Damianou A, Lawrence ND, Karniadakis GE (2017) Nonlinear information fusion algorithms for data-efficient multi-fidelity modelling. Proc R Soc A: Math Phys Eng Sci 473(2198):20160751

Picheny V, Wagner T, Ginsbourger D (2013) A benchmark of kriging-based infill criteria for noisy optimization. Struct Multidiscip Optim 48(3):607–626

Powell MJ (1994) A direct search optimization method that models the objective and constraint functions by linear interpolation. In: Advances in optimization and numerical analysis. Springer, pp 51–67

Qian PZG, Wu H, Wu CJ (2008) Gaussian process models for computer experiments with qualitative and quantitative factors. Technometrics 50(3):383–396

Rasmussen CE (2003) Gaussian processes in machine learning. In: Summer school on machine learning. Springer, pp 63–71

Reid L, Moore RD (1978) Design and overall performance of four highly loaded, high speed inlet stages for an advanced high-pressure-ratio core compressor. Technical report NASA-TP-1337, NASA

Ribaud M, Blanchet-Scalliet C, Helbert C, Gillot F (2020) Robust optimization: a kriging-based multi-objective optimization approach. Reliab Eng Syst Saf 200:106913. https://doi.org/10.1016/j.ress.2020.106913

Roustant O, Ginsbourger D, Deville Y (2012) DiceKriging, DiceOptim: two R packages for the analysis of computer experiments by kriging-based metamodeling and optimization

Schonlau M, Welch WJ, Jones DR (1998) Global versus local search in constrained optimization of computer models. Lecture Notes-Monograph Series, pp 11–25

Scott W, Frazier P, Powell W (2011) The correlated knowledge gradient for simulation optimization of continuous parameters using gaussian process regression. SIAM J Optim 21(3):996–1026

Shah A, Ghahramani Z (2016) Pareto frontier learning with expensive correlated objectives. In: International conference on machine learning. PMLR, pp 1919–1927

Swiler LP, Hough PD, Qian P, Xu X, Storlie C, Lee H (2014) Surrogate models for mixed discrete-continuous variables. In: Constraint programming and decision making. Springer, pp 181–202

Tao S, Van Beek A, Apley DW, Chen W (2021) Multi-model Bayesian optimization for simulation-based design. J Mech Des 143(11):111701

Torossian L, Picheny V, Durrande N (2020) Bayesian quantile and expectile optimisation. arXiv preprint arXiv:2001.04833

Valdebenito MA, Schuëller GI (2010) A survey on approaches for reliability-based optimization. Struct Multidiscip Optim 42(5):645–663

Williams C, Bonilla EV, Chai KM (2007) Multi-task gaussian process prediction. Adv Neural Inf Process Syst 1:153–160

Yao W, Chen X, Luo W, Van Tooren M, Guo J (2011) Review of uncertainty-based multidisciplinary design optimization methods for aerospace vehicles. Prog Aerosp Sci 47(6):450–479

Zhang Y, Notz WI (2015) Computer experiments with qualitative and quantitative variables: a review and reexamination. Qual Eng 27(1):2–13

Zhang J, Taflanidis A, Medina J (2017) Sequential approximate optimization for design under uncertainty problems utilizing kriging metamodeling in augmented input space. Comput Methods Appl Mech Eng 315:369–395

Zhang Y, Tao S, Chen W, Apley DW (2020) A latent variable approach to gaussian process modeling with qualitative and quantitative factors. Technometrics 62(3):291–302

Zhou Q, Qian PZ, Zhou S (2011) A simple approach to emulation for computer models with qualitative and quantitative factors. Technometrics 53(3):266–273

Acknowledgements

This work was partly supported by the OQUAIDO research Chair in Applied Mathematics.



Appendices

Appendix A: Main notations and acronyms

Appendix B: Covariance kernel for a nominal input: the hypersphere decomposition

The hypersphere decomposition (Zhou et al. 2011) is one possible parameterization of a discrete covariance kernel. The underlying idea is to map each of the l levels \( \{z_1,\ldots ,z_p,\ldots ,z_l\}\) of the considered discrete variable onto a distinct point on the surface of an l-dimensional hypersphere by:

\[ \phi (z_p) = \left[ b_{p,1}, \ldots , b_{p,l}\right] ^\top , \]

where \(b_{p,r}\) represents the r-th coordinate of the p-th discrete level mapping, and is calculated as follows:

\[ b_{p,r} = {\left\{ \begin{array}{ll} 1 &{} \text {if } p = r = 1, \\ \cos \theta _{p,r} \prod _{k=1}^{r-1} \sin \theta _{p,k} &{} \text {if } r = 1,\ldots ,p-1, \\ \prod _{k=1}^{p-1} \sin \theta _{p,k} &{} \text {if } r = p \ne 1, \\ 0 &{} \text {if } r > p, \end{array}\right. } \]

with \(-\pi \le \theta _{p,r} \le \pi \). Note that, in the equations above, some of the mapping coordinates are arbitrarily set to 0. This avoids rotation indeterminacies (i.e., an infinite number of hyperparameter sets characterizing the same covariance matrix) and also reduces the number of parameters required to define the mapping. The resulting kernel is then computed as the Euclidean scalar product between the hypersphere mappings:

\[ k(z_i, z_j) = \phi (z_i)^\top \phi (z_j). \]

The discrete kernel can then be characterized as an \(l\times l\) symmetric positive definite matrix \(\textbf{T}\) containing the covariance values between the discrete variable levels, computed as:

\[ \textbf{T} = \textbf{L}\textbf{L}^\top , \]

where each element \(\textbf{L}_{i,j}\) of \(\textbf{L}\) is equal to \(b_{i,j}\).

In the output-as-input model of the constraints, Equation (3), the matrix \(\textbf{T}\) contains the covariance terms related to the constraint index, \(k_p(i,j) = \textbf{T}_{ij}~,~i,j \in \{1,\ldots ,l\}\).
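The construction of \(\textbf{T}\) can be sketched numerically as follows. The sketch assumes the usual form of the hypersphere decomposition, in which row p of \(\textbf{L}\) holds the spherical coordinates \(b_{p,r} = \cos \theta _{p,r}\prod _{k<r}\sin \theta _{p,k}\) for \(r<p\), \(b_{p,p} = \prod _{k<p}\sin \theta _{p,k}\), and zeros for \(r>p\). The function names are hypothetical and the angles are drawn at random only for illustration; in practice they would be hyperparameters estimated with the other GP parameters.

```python
import numpy as np

def hypersphere_factor(theta):
    """Lower-triangular factor L built from the angles theta (l x l array).

    Only the entries theta[p, r] with r < p are used (0-based indices).
    """
    l = theta.shape[0]
    L = np.zeros((l, l))
    L[0, 0] = 1.0                      # first level mapped to (1, 0, ..., 0)
    for p in range(1, l):
        sin_prod = 1.0                 # running product of sines
        for r in range(p):
            L[p, r] = sin_prod * np.cos(theta[p, r])
            sin_prod *= np.sin(theta[p, r])
        L[p, p] = sin_prod             # last non-zero coordinate of level p
    return L

def hypersphere_kernel_matrix(theta):
    """Covariance matrix between levels, T = L L^T."""
    L = hypersphere_factor(theta)
    return L @ L.T

rng = np.random.default_rng(0)
l = 4                                  # number of levels (here, constraints)
theta = rng.uniform(-np.pi, np.pi, size=(l, l))
T = hypersphere_kernel_matrix(theta)
```

Because every row of \(\textbf{L}\) has unit norm, \(\textbf{T}\) has a unit diagonal and its off-diagonal terms can be read as correlations between levels; with this parameterization a separate variance term is needed if the outputs have different scales.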

Appendix C: Probability of feasibility with dependent constraints

The probability of satisfying the coupled constraints appears in Eq. (6) for the EFI acquisition criterion. It can be estimated with GPs as:

In practice, a set of M instances of the random variables \(\textbf{U}\) is sampled from \(\rho _U\). Subsequently, N independent multi-output trajectories of \(\textbf{G}(\textbf{x},\cdot )\) are simulated at these sampled uncertain parameters. The probability of feasibility is then estimated by counting the trajectories for which the ratio of samples associated with feasible constraints is larger than \(1-\alpha \), and dividing this count by N. Note that a multi-output GP prediction of the constraint vector is defined as feasible when all of its components are below their specific threshold value (which is 0 in this work).
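The counting procedure described above can be sketched as follows. This is a minimal illustration under stated assumptions: the posterior mean and covariance of the constraint vector at the M sampled parameters are taken as given inputs, the toy covariance is separable (a kernel over \(\textbf{u}\) times a matrix coupling the constraints), \(\rho _U\) is uniform, and the function name is hypothetical. The comparison uses "greater than or equal to" \(1-\alpha \).

```python
import numpy as np

def probability_of_feasibility(mean, cov, alpha, n_traj, rng):
    """Monte Carlo estimate of the probability that the chance constraint holds.

    mean: (M, l) posterior mean of the l constraints at M sampled u's.
    cov:  (M*l, M*l) posterior covariance of the row-major flattened vector.
    """
    m, l = mean.shape
    chol = np.linalg.cholesky(cov + 1e-8 * np.eye(m * l))
    normals = rng.standard_normal((n_traj, m * l))
    traj = mean.reshape(-1) + normals @ chol.T      # N joint trajectories
    traj = traj.reshape(n_traj, m, l)
    feasible = np.all(traj <= 0.0, axis=2)          # all constraints below 0
    ratio = feasible.mean(axis=1)                   # feasible share per trajectory
    return float(np.mean(ratio >= 1.0 - alpha))

rng = np.random.default_rng(1)
M, l, alpha = 40, 2, 0.1
u = rng.uniform(0.0, 1.0, size=M)                   # samples from an assumed rho_U
K_u = np.exp(-0.5 * ((u[:, None] - u[None, :]) / 0.3) ** 2)
T = np.array([[1.0, 0.6], [0.6, 1.0]])              # coupling between constraints
cov = 0.05 * np.kron(K_u, T)                        # toy separable covariance
mean = np.stack([u - 0.85, 0.5 * u - 0.6], axis=1)  # toy posterior means
pof = probability_of_feasibility(mean, cov, alpha, n_traj=500, rng=rng)
```

Sampling the trajectories jointly over all constraints is what distinguishes the dependent case from the independent one, where each constraint could be simulated separately.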

In Eq. (7), the improvement is computed with respect to the incumbent best feasible solution \(z_{min}^{feas}\). However, the objective function mean is not observed; therefore, \(z_{min}^{feas}\) cannot be read from the data set used to condition the GPs F and \(\textbf{G}\). For this reason, in El Amri et al. (2021) the incumbent best feasible solution is defined by taking into account the mean of the GP \(Z^{(t)}(\textbf{x})\) and the expected value of the process \(C^{(t)}(\textbf{x})\):

Since the value of \(C(\cdot )\) is bounded by definition, the Fubini condition holds and the expected value of the process C can be written

where \(\Phi (\cdot )\) is the cumulative distribution function of a multivariate Gaussian distribution which, like the univariate version, is estimated numerically (Genz and Bretz 2009). The above equation and Eq. (18) are used to compute the current feasible minimum. If Eq. (18) yields no solution, \(m^{(t)}_Z(\textbf{x})\) at the \(\textbf{x}\) providing the largest probability of feasibility is taken as the incumbent optimal solution.
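The selection rule for the incumbent, including its fallback, can be sketched as below. The sketch assumes that the GP mean of the objective, the expected value of the process C and the probability of feasibility have already been computed at a finite set of candidate designs, and that feasibility in expectation is tested as `expected_c <= threshold`; this form of the test, the argument names and the function name are assumptions made for illustration.

```python
import numpy as np

def incumbent_feasible_min(m_z, expected_c, prob_feas, threshold):
    """Return (index, value) of the incumbent best feasible solution.

    m_z:        GP mean of the objective at the candidate designs.
    expected_c: expected value of the constraint process at the candidates.
    prob_feas:  probability of feasibility at the candidates (fallback only).
    """
    m_z = np.asarray(m_z, dtype=float)
    feasible = np.asarray(expected_c, dtype=float) <= threshold
    if feasible.any():
        # Smallest predicted mean among designs feasible in expectation.
        idx = np.flatnonzero(feasible)[np.argmin(m_z[feasible])]
    else:
        # No feasible design: take the one most likely to be feasible.
        idx = np.argmax(prob_feas)
    return int(idx), float(m_z[idx])

m_z = [3.0, 1.0, 2.0]
expected_c = [0.0, 0.5, 0.05]
prob_feas = [0.9, 0.1, 0.6]
best_idx, z_min_feas = incumbent_feasible_min(m_z, expected_c, prob_feas, 0.1)
```

The fallback guarantees that an incumbent is always defined, so the improvement can be evaluated even in early iterations when no design is yet predicted to be feasible.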

Appendix D: Estimating the proxy of the one-step-ahead feasible improvement variance

During the optimization process, the value of the coordinates \(\textbf{u}^{t+1}\) of the point to be added to the training data set \(\mathcal {D}\) is computed by minimizing the proxy of the one-step-ahead variance of the EFI at \(\textbf{x}_{targ}\) (see Eq. (8)), which is defined as:

In this appendix, we recall some details about the computation of the first term in the previous equation. An expression of the improvement variance \(Var\left( I^{(t+1)}(\textbf{x}_{targ})\right) \), which resembles that of the expected improvement, is given in El Amri et al. (2021) and can be expressed in terms of probability and density functions of Gaussian distributions:

At step \(t+1\), the training data set \(\mathcal {D}^{(t)}\) is enriched by the point \((\tilde{\textbf{x}}, \tilde{\textbf{u}})\), at which the output \(f(\tilde{\textbf{x}},\tilde{\textbf{u}})\) is still unknown and is therefore represented by the random variable \(F(\tilde{\textbf{x}},\tilde{\textbf{u}})\).

As a consequence, \(Var\left( I^{(t+1)}(\textbf{x}_{targ})\right) \) cannot be computed directly because \(m_Z^{(t+1)}(\textbf{x}_{targ})\) is random. Indeed, \(m_Z^{(t+1)}(\textbf{x}_{targ})\) is equal to \(E\left( Z(\textbf{x}_{targ}) \vert F(\mathcal {D}^{(t)})=f^{(t)}, F(\tilde{\textbf{x}}, \tilde{\textbf{u}}) \right) \) and follows:

We can note that \(\sigma _Z^{(t+1)}(\textbf{x}_{targ})\) is not random as it depends only on the location \((\tilde{\textbf{x}},\tilde{\textbf{u}})\) and not on the function evaluation at this point. By applying the law of total variance, it can be shown that:

This calculation is performed numerically using samples of \(m_Z^{(t+1)}(\textbf{x}_{targ}) \). For brevity, the reader is referred to the previous work (El Amri et al. 2021) for details regarding the implementation.
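The sampling-based use of the law of total variance can be sketched as follows. The sketch makes several assumptions that are not taken from the article: the improvement is \(I = \max (z_{min} - Z, 0)\) with a fixed incumbent, \(Z\) given the updated mean is Gaussian with the non-random standard deviation \(\sigma _Z^{(t+1)}\), and the updated mean is Gaussian around the current mean with variance \((\sigma _Z^{(t)})^2 - (\sigma _Z^{(t+1)})^2\), the standard variance reduction of GP conditioning. The closed-form mean and variance of the improvement are the usual Gaussian expressions; all function names are hypothetical.

```python
import math
import numpy as np

_erf = np.vectorize(math.erf)

def norm_pdf(v):
    return np.exp(-0.5 * np.asarray(v, dtype=float) ** 2) / math.sqrt(2.0 * math.pi)

def norm_cdf(v):
    return 0.5 * (1.0 + _erf(np.asarray(v, dtype=float) / math.sqrt(2.0)))

def improvement_moments(z_min, mean, sd):
    """Mean and variance of I = max(z_min - Z, 0) for Z ~ N(mean, sd^2)."""
    d = z_min - mean
    v = d / sd
    ei = d * norm_cdf(v) + sd * norm_pdf(v)
    second = (d ** 2 + sd ** 2) * norm_cdf(v) + d * sd * norm_pdf(v)
    return ei, second - ei ** 2

def total_variance_terms(z_min, m_t, sd_t, sd_next, n_samples, rng):
    """Two terms of the law of total variance over the random updated mean."""
    if sd_next >= sd_t:
        raise ValueError("sd_next must be smaller than sd_t")
    sd_mean = math.sqrt(sd_t ** 2 - sd_next ** 2)   # spread of the updated mean
    m_next = m_t + sd_mean * rng.standard_normal(n_samples)
    ei, vi = improvement_moments(z_min, m_next, sd_next)
    return float(vi.mean()), float(ei.var())        # E[Var(I|m)], Var(E[I|m])

rng = np.random.default_rng(2)
expected_var, var_of_mean = total_variance_terms(
    z_min=0.0, m_t=0.2, sd_t=1.0, sd_next=0.6, n_samples=200_000, rng=rng)
```

The first returned value averages the conditional improvement variance over the samples of the updated mean, and the second is the variance of the conditional expected improvement over the same samples.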

Appendix E: Optimization bounds of the industrial test case

The bounds of the optimization problem detailed in Sect. 6.4 are provided in the table below.

| | \(x_1\) | \(x_2\) | \(x_3\) | \(x_4\) | \(x_5\) | \(x_6\) | \(x_7\) | \(x_8\) | \(x_9\) | \(x_{10}\) |
|---|---|---|---|---|---|---|---|---|---|---|
| Lower bound | 0.05 | 0.05 | 0.05 | 0.05 | 0.09 | 0.09 | 0.09 | 0.09 | 0.45 | 0.45 |
| Upper bound | 0.06 | 0.06 | 0.06 | 0.06 | 0.11 | 0.11 | 0.11 | 0.11 | 0.55 | 0.55 |

| | \(x_{11}\) | \(x_{12}\) | \(x_{13}\) | \(x_{14}\) | \(x_{15}\) | \(x_{16}\) | \(x_{17}\) | \(x_{18}\) | \(x_{19}\) | \(x_{20}\) |
|---|---|---|---|---|---|---|---|---|---|---|
| Lower bound | 0.45 | 0.45 | 30 | 39 | 47 | 56 | 0.2 | 0.2 | 0.2 | 0.2 |
| Upper bound | 0.55 | 0.55 | 42 | 51 | 59 | 68 | 8.0 | 8.0 | 8.0 | 8.0 |

| | \(u_1\) | \(u_2\) | \(u_3\) | \(u_4\) | \(u_5\) | \(u_6\) | \(u_7\) |
|---|---|---|---|---|---|---|---|
| Lower bound | 0.05 | 0.0000004 | 0.98 | -5.763 | -0.5 | 19.78 | 16845 |
| Upper bound | 0.80 | 0.0000012 | 1.02 | 5.763 | 0.5 | 20.59 | 17532 |

Appendix F: Flow charts of the algorithms

This appendix contains the flowcharts of the four algorithms that are implemented and compared in the body of the article. The REF, SMCS, MMCU and MMCS methods are described in Algorithms 2, 3, 4 and 5, respectively. REF stands for reference; the method was initially proposed in El Amri et al. (2021). In REF, the GPs of the constraints and of the objective function are independent, and the same pair \((\textbf{x}^{t}, \textbf{u}^{t})\) is added to every GP at each iteration. SMCS, which stands for Single Models of the constraints and Constraint Selection, has independent GPs, like the REF algorithm, but only one constraint is updated at each iteration. The random parameters at which the objective function and the selected constraint are evaluated, \(\textbf{u}_f\) and \(\textbf{u}_g\), are different. MMCU means Multiple Model of the constraints and Common \(\textbf{u}\). The MMCU algorithm has a joint model of all the constraints, and the same iterate \((\textbf{x}^{t}, \textbf{u}^{t})\) enriches all GPs. Finally, MMCS is the acronym of Multiple Model of the constraints and Constraint Selection. The MMCS algorithm relies on a joint model of the constraints and identifies at each iteration a single constraint and the associated random sample, \(\textbf{u}_g\), to carry out the next evaluation.

Algorithm 2: REF algorithm

Algorithm 3: SMCS algorithm

Algorithm 4: MMCU algorithm

Algorithm 5: MMCS algorithm
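The differences between the four variants described in this appendix reduce to two binary choices, which the following sketch encodes. The class, field and function names are hypothetical and serve only to summarize the text; they are not taken from the authors' implementation.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Variant:
    name: str
    joint_constraint_model: bool   # multi-output GP coupling the constraints
    constraint_selection: bool     # a single constraint evaluated per iteration

VARIANTS = {
    "REF": Variant("REF", joint_constraint_model=False, constraint_selection=False),
    "SMCS": Variant("SMCS", joint_constraint_model=False, constraint_selection=True),
    "MMCU": Variant("MMCU", joint_constraint_model=True, constraint_selection=False),
    "MMCS": Variant("MMCS", joint_constraint_model=True, constraint_selection=True),
}

def constraint_evaluations_per_iteration(variant, n_constraints):
    """Number of constraint outputs evaluated at one iteration."""
    return 1 if variant.constraint_selection else n_constraints

def shares_u_between_objective_and_constraints(variant):
    """True when the same pair (x, u) enriches the objective and constraint GPs."""
    return not variant.constraint_selection

calls = {name: constraint_evaluations_per_iteration(v, n_constraints=3)
         for name, v in VARIANTS.items()}
```

Read this way, SMCS isolates the effect of constraint selection, MMCU isolates the effect of the joint constraint model, and MMCS combines both.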


About this article

Cite this article

Pelamatti, J., Le Riche, R., Helbert, C. et al. Coupling and selecting constraints in Bayesian optimization under uncertainties. Optim Eng 25, 373–412 (2024). https://doi.org/10.1007/s11081-023-09807-x
