Abstract

Duration is a key characteristic of floods, influencing the design of protection infrastructure for prevention, the deployment of rescue resources during the emergency, and the repartition of damage costs in the aftermath. The latter financial aspect relies mainly on the insurance industry and allows the transfer of damage costs from the public sector to the private capital market. In this context, the cost of catastrophes affecting a large number of insured properties is partly or totally transferred from insurance companies to reinsurance companies by contracts that define the portion of transferred costs according to the temporal extent of the flood events, as synthesized in the so-called hours clause. However, hours clauses imply standard flood event durations, such as 168 h (1 week), regardless of the hydrological properties characterizing different areas. In this study, we first perform a synoptic-scale exploratory analysis of the duration and magnitude of large flood events that occurred around the world and in Europe between 1985 and 2016, and then we present a data-driven procedure devised to compute flood duration by tracking flood peaks along a river network. The exploratory analysis highlights the link of flood duration and magnitude with the flood generation mechanism, thus allowing the identification of regions that are more or less prone to long-lasting events exceeding the standard hours clauses. The flood tracking procedure is applied to seven of the largest river basins in Western, Central, and Eastern Europe (Danube, Rhine, Elbe, Weser, Rhone, Loire, and Garonne). It correctly identifies major flood events and enables the definition of the probability distribution of the flood propagation time and its sampling uncertainty. Overall, we provide information and analysis tools readily applicable to improve reinsurance practices with respect to the spatiotemporal extent of flooding hazard.



1 Introduction and motivation

Natural disasters such as hurricanes, earthquakes, and floods can cause large losses at regional and national scales and can also affect financial markets because of their consequences on society, the economy, and finance (insurance, catastrophe bonds, etc.). Among natural disasters occurring between 1994 and 2013, floods were the main threat in terms of the number of events (43%; 2937 events) and affected people (55%; 2.4 billion people), and the third most expensive type of disaster in terms of recorded lost assets (US$ 636 billion) after storms (US$ 936 billion) and earthquakes (US$ 787 billion) (UNISDR-CRED 2015). Similarly, 1816 inland flood events worldwide affected more than 2.2 billion people in the period 1975–2001, indicating the enormous impact of flood disasters on a global scale (Jonkman 2005).

Effective flood management strategies must account for key flood characteristics such as their inherent spread over many administrative/physical regions (Barredo 2007; Kundzewicz et al. 2013), causing simultaneous collective losses, and their temporal clustering, resulting in flood-rich and flood-poor periods (Glaser et al. 2010; Montanari 2012; Hall et al. 2014; Serinaldi and Kilsby 2016). More integrated flood risk management strategies comprise both structural flood protection assets such as dikes, levees, resilience-improved residences, and upstream retention areas (Zhou et al. 2012) and non-structural solutions such as property level protection, land use planning, and insurance arrangements (Bouwer et al. 2007; Botzen and Van Den Bergh 2008; Bubeck et al. 2017; Prettenthaler et al. 2017; Serinaldi and Kilsby 2017).

Among non-structural measures, reinsurance basically plays a twofold role, spreading the risk related to (1) the occurrence of one or more very large individual losses, or an accumulation of losses arising from one event, relative to premium income and reserves of insurance companies, and (2) the fluctuation of the annual aggregate claims around the expected value (Carter 1983, p. 7). Reinsurance contracts can be either proportional or non-proportional, where the former implies that the reinsurer accepts a fixed share of liabilities (premium and claims recovery) assumed by the primary insurer, whereas in the latter, which is also known as excess of loss reinsurance, the reinsurer only becomes liable to pay if the losses incurred by the ceding company exceed some predetermined value (Carter 1983, p. 70).

An excess of loss reinsurance designed to protect the reinsured against an accumulation of losses arising from one event (or occurrence) of a particularly severe or catastrophic nature is defined as catastrophe cover (Carter 1983, p. 185) or catastrophe excess of loss cover (CatXL) reinsurance (Cipra 2010, p. 266). In fact, for each loss occurrence there is potentially a payout on the reinsurance treaty. The definition of ‘event’ is one of the key factors determining the extent of a reinsurer’s liability under a CatXL treaty. When the treaty is arranged on an individual occurrence basis, it is highly desirable for the parties to specify in the treaty the meaning of ‘any one event’, ‘happening’, ‘occurrence’, or whatever other phrase is used (Carter 1983, p. 294). Indeed, a portfolio covering natural perils is exposed to the risk of accumulation of losses that may occur over a wide area during a period of perhaps several days, as in the case of storms (Thornes 1991), a series of earthquake shocks (Pucci et al. 2017; De Guidi et al. 2017), or floods (Barredo 2007; Glaser et al. 2010; Pińskwar et al. 2012; Kundzewicz et al. 2013, 2017), causing simultaneous collective losses such as damage to both property and vehicles (Speight et al. 2017).

To cope with the spatiotemporal variability of natural hazards in CatXL treaties, as well as the lack of a unique and shared definition of what qualifies as a severe event, reinsurers usually introduce time and/or geographical limits to determine what constitutes an event or occurrence. These limits are defined in the so-called n-hour clause, whereby the reinsurer covers only losses that accumulate within n hours for a given loss event (Cipra 2010, p. 266). Most hours clauses in reinsurance contracts are fairly standard: a hurricane, typhoon, windstorm, rainstorm, hailstorm and/or tornado, earthquake, seaquake, tidal wave, and/or volcanic eruption has a limit of 72 consecutive hours. The same holds for riots, civil commotions, and malicious damage within the limits of one city, town, or village (these events also have a geographical limit). All other catastrophes, including floods and forest fires, have a limit of 168 consecutive hours (7 days) (see, e.g., Carter 1983, pp. 360–361; Thornes 1991).

Therefore, no individual loss from any insured hazard that occurs outside these periods or areas is included in that ‘loss occurrence’. Usually, the reinsured may choose the time and date when any such period of consecutive hours starts. If a catastrophe spans a longer period, the reinsured may divide it into two or more ‘loss occurrences’, provided that no two periods overlap and that no period starts earlier than the time and date of the first recorded individual loss to the reinsured in that catastrophe (Carter 1983, pp. 360–361). The possibility of choosing the starting date used to aggregate individual losses over a period of n consecutive hours, along with the option of splitting longer events into different non-overlapping periods, is introduced to deal with the intrinsic difficulty of uniquely defining an ‘event’. For example, Thornes (1991) highlighted this problem by discussing storms that affected the UK in October 1987 and January/February 1990. In particular, the storm of October 15/16, 1987, was regarded as one event, whereas the flooding in Wales on 17/18/19 October involved a separate frontal system and could not be added to the damage of the previous storm.
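The aggregation rule described above can be illustrated with a minimal Python sketch. It assumes one particular (greedy) choice among those the clause allows: each 168-h window opens at the first loss not yet assigned, so no window starts before the first recorded loss and no two windows overlap. The function name `split_into_occurrences` is hypothetical, and a reinsured party might instead choose start times that maximize its recovery.

```python
from datetime import datetime, timedelta

def split_into_occurrences(loss_times, hours=168):
    """Group loss timestamps into non-overlapping windows of `hours` consecutive
    hours; each window opens at the earliest loss not yet covered (greedy rule)."""
    window = timedelta(hours=hours)
    occurrences = []  # list of (window start, [loss times in that window])
    for t in sorted(loss_times):
        if occurrences and t < occurrences[-1][0] + window:
            occurrences[-1][1].append(t)   # still inside the current window
        else:
            occurrences.append((t, [t]))   # open a new 'loss occurrence' at t
    return occurrences

# Hypothetical losses on days 0, 3, 8, and 9 of a flood event
start = datetime(2011, 10, 1)
losses = [start + timedelta(days=d) for d in (0, 3, 8, 9)]
groups = split_into_occurrences(losses)
```

With these hypothetical losses the rule yields two loss occurrences of two losses each, because the day-8 loss falls outside the first 168-h window; a long-lasting flood thus splits into several occurrences, each subject to its own retention.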

Focusing on floods, the effectiveness of a 168-h clause strongly depends on multiple factors, including the duration and spatial extent of the meteorological forcing, the antecedent soil moisture conditions of the affected areas, the topological and geomorphological properties of the drainage basins, land use, water management, and the presence of flood mitigation measures (Haraguchi and Lall 2015). For example, the 2013 Alberta flood was triggered by heavy rainfall with large spatial coverage, which began on 19 June and continued for three days, causing the nearly synchronous floodwaters to converge downstream in the South Saskatchewan River system (Pomeroy et al. 2016; Liu et al. 2016; Teufel et al. 2017). Therefore, the initial damage occurred during the first week even though some areas remained flooded for several weeks. On the other hand, the 2011 Thailand floods were more problematic, as they lasted 2–3 months and many reinsurance contracts did not have aggregate caps to limit the number of losses (Courbage et al. 2012; Haraguchi and Lall 2015). In this case, intense rainfall did not span only a few days but affected the northern regions of Thailand early in the monsoon season (March and April). Above-average rainfall then continued throughout the 6-month summer monsoon season. This situation was further exacerbated by heavy rainfall from four tropical storms, which consequently doubled runoff (Komori et al. 2012; Ziegler et al. 2012; Gale and Saunders 2013; Takahashi et al. 2015).

These examples from heterogeneous regions are paradigmatic and indicate the importance of defining flood events and their duration or propagation time along a river network in order to set up more effective and efficient mitigation strategies, along with clear and fair (re)insurance policies that help avoid legal controversies (England and Wales High Court 2013; Lees 2014). However, as highlighted by Ward et al. (2016), flood duration has received quite limited attention despite its consequences in terms of indirect losses and health-related issues, for instance due to business interruptions and disruption of local to global supply chains (Haraguchi and Lall 2015; Koks et al. 2015), or its negative influence on clean water supply and sanitation (Dang et al. 2011). As further discussed in Sect. 4, Uhlemann et al. (2010), Gvoždíková and Müller (2017), and Morrill and Becker (2017) proposed alternative methods, based on similar principles, devised to identify flood events at a basin scale for flood risk management and reinsurance purposes.

In this paper, we introduce a numerical algorithm to track floods along a river network in order to provide an assessment of flood propagation time and therefore flood duration. The methodology is purely data-driven and requires minimal information which is usually provided by stream flow repositories as meta-data, and its rationale differs from previous approaches. To put our analysis in a wider context and compare our results with observed flood durations, we also perform an exploratory analysis of the statistical properties of flood events recorded by the Global Active Archive of Large Flood Events of the Dartmouth Flood Observatory (DFO Archive; Brakenridge 2017). The algorithm is validated by analyzing the flood propagation across the river networks of seven major European basins. It should be further stressed that the focus here is on flood events at a basin scale, and flood duration does not refer to persistence of flooding conditions at a specific site, which in turn impacts on magnitude of losses and claims at that specific location (Thieken et al. 2005).

The paper is organized as follows: Sect. 2 introduces the data sets used in this study and the proposed algorithm for flood tracking. Section 3 describes the results of the exploratory analysis of DFO data, and the output of the application of flood tracking procedure to the seven European basins considered in this study. Discussion and concluding remarks are reported in Sect. 4.

2 Data and methods

2.1 Analyzed data sets

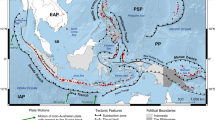

As the flood tracking algorithm relies on daily stream flow records, we used daily stream flow time series recorded across seven of the largest river basins in Western, Central, and Eastern Europe (Danube, Rhine, Elbe, Weser, Rhone, Loire, and Garonne) provided by the Global Runoff Data Centre (GRDC; Federal Institute of Hydrology (BfG), Koblenz, Germany). For each gauge station, stream flow data and corresponding dates are complemented with the identification number of the downstream station provided by GRDC in the meta-data available at the Web site http://www.bafg.de/GRDC/EN/02_srvcs/21_tmsrs/211_ctlgs/catalogues_node.html. As further discussed in Sect. 2.2, this is the only additional information required by the flood tracking procedure besides the daily flow records. In fact, the identification numbers of downstream stations are sufficient to build the connected network of available gauges within each basin. The resulting networks are reported in Fig. 1. Stations that never appear as downstream stations in the catalog are the most upstream gauges on the main stem or the tributaries, while the station with no downstream gauge is considered the outlet station, even though it is not generally the physical outlet of the basin. The accuracy of the resulting flood propagation time improves as the temporal resolution of the input data becomes finer (e.g., sub-daily) and as the stream gauge network becomes denser.

Stream gauge networks built using the pairs of upstream–downstream IDs available from GRDC meta-data. Note that as the density of gauging stations with simultaneous data increases, the constructed network more closely resembles the real river network, and the estimation of flood duration D becomes more accurate

In order to give a more general picture and put our results in a wider context, we also provide an exploratory analysis of large flood events that occurred in the last 32 years (1985–2016). This analysis relies on the DFO database (Brakenridge 2017), a unique source of information devoted exclusively to the documentation of flood events worldwide. The DFO database merges news reports and orbital remote sensing, the latter being used to map the affected areas. We refer to Kundzewicz et al. (2013, 2017) and the DFO archive notes (available at the Web site http://www.dartmouth.edu/~floods/Archives/ArchiveNotes.html) for a detailed description of the DFO data and recall here only some key properties. Among other flood characteristics, the DFO archive includes flood causation, duration D in days, severity S, affected area in km\(^2\), ‘flood magnitude’ M, and georeferenced information (GIS polygon of the affected area, and latitude and longitude of polygon centroids). Flood causation comprises 11 categories: heavy rain, tropical cyclone, extra-tropical cyclone, monsoonal rain, snowmelt, rain and snowmelt, ice jam/breakup, dam/levee break or release, brief torrential rain, tidal surge, and avalanche-related. Of course, tropical storms and monsoonal rain do not cause European floods. Severity is a discrete index classifying flood events into three classes based on the concept of return period (RP, i.e., the average elapsed time between occurrences of critical events; Serinaldi 2015): class 1 corresponds to floods with RP in the range 10–20 years, class 1.5 to events with RP in the range 20–100 years, and class 2 to events with RP \(\ge 100\) years. The affected area is not the inundation area but the geographical area affected by reported flooding, that is, a geographical envelope around the areas affected by intense precipitation and flooding rivers.
Flood magnitude M is a continuous index combining three flood characteristics through the relationship \(\hbox {Magnitude} = \log _{10}(\hbox {Duration} \times \hbox {Severity} \times \hbox {Affected area})\). Brakenridge proposed this index to provide a more realistic representation of the overall flood severity by accounting for multiple critical aspects.
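A quick worked example shows how the three factors combine in M. The event below is hypothetical (not taken from the DFO archive), and the function name `dfo_magnitude` is illustrative; the point is that, because M is a base-10 logarithm, multiplying any one factor by 10 raises M by one unit, while doubling the duration raises it by only about 0.3.

```python
import math

def dfo_magnitude(duration_days, severity, affected_area_km2):
    """DFO flood magnitude M = log10(D * S * A), with D in days and A in km^2."""
    return math.log10(duration_days * severity * affected_area_km2)

# Hypothetical event: 10 days, severity class 2, 50,000 km^2 affected area
m = dfo_magnitude(10, 2, 50_000)          # log10(1,000,000) = 6
# Doubling the duration adds log10(2) (about 0.30) to M
delta = dfo_magnitude(20, 2, 50_000) - m
```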

2.2 Flood tracking procedure

As mentioned in Sect. 1, the proposed flood tracking algorithm requires minimal input data: daily stream flow records and a description of the topology of the gauge network. The latter is summarized by a simple two-column table listing all pairs of adjacent upstream and downstream gauges, labeled ‘From’ and ‘To’. For example, if water flows between two gauges A and B without any intermediate gauges, the From–To relationship is \(\hbox {From} = \{\hbox {A}\}\) and \(\hbox {To} = \{\hbox {B}\}\). If there are two further gauges, C and D, both directly upstream of A, then \(\hbox {From} = \{\hbox {C},\hbox {D},\hbox {A}\}^\top \) and \(\hbox {To} = \{\hbox {A},\hbox {A},\hbox {B}\}^\top \) (see Fig. 2). This From–To table is readily available for GRDC gauging stations; when it is not provided by data repositories, it must be built from the meta-data.
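As an illustration, the From–To table of the four-gauge example in Fig. 2 can be turned into a downstream lookup from which the outlet, the headwater gauges, and the flow path of any gauge follow directly. This is a minimal sketch assuming a tree-shaped network (each gauge has at most one downstream neighbor, with no bifurcations or loops); the helper names are hypothetical.

```python
def build_network(from_ids, to_ids):
    """Return (downstream map, outlets, headwater gauges) from a From-To table."""
    downstream = dict(zip(from_ids, to_ids))  # assumes one downstream gauge each
    receiving = set(to_ids)
    gauges = set(from_ids) | receiving
    # outlet: a gauge with no downstream neighbor
    outlets = sorted(g for g in gauges if g not in downstream)
    # headwater: a gauge that never appears in the 'To' column
    headwaters = sorted(g for g in gauges if g not in receiving)
    return downstream, outlets, headwaters

def path_to_outlet(gauge, downstream):
    """Gauges visited from `gauge` down to the outlet (assumes no loops)."""
    path = [gauge]
    while path[-1] in downstream:
        path.append(downstream[path[-1]])
    return path

# Four-gauge example of Fig. 2: C and D flow into A, A flows into B
downstream, outlets, headwaters = build_network(["C", "D", "A"], ["A", "A", "B"])
```

Here B is the only outlet, C and D are the headwater gauges, and the path from C is C, A, B, which is the path along which the pairwise delays are later summed.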

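In order to track flood-generating flow pulses through the river system, we assume an additive model for the discharge Q. Referring, for example, to the above small (four-station) river system, we assume that:

\[
\begin{aligned}
Q_{\text{A}}(t) &= Q_{\text{C}}(t-\tau_{\text{CA}}) + Q_{\text{D}}(t-\tau_{\text{DA}}) + \varepsilon_{\text{A}}(t),\\
Q_{\text{B}}(t) &= Q_{\text{A}}(t-\tau_{\text{AB}}) + \varepsilon_{\text{B}}(t),
\end{aligned}
\tag{1}
\]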

where \(Q_{\text {X}}(t)\) denotes the flow value at a generic site X and time t (formally, \(Q_{\text {X}}:{\mathbb {R}}^{+} \rightarrow {\mathbb {R}}^{+}\)), \(\tau _{\text {XY}}\) is a characteristic propagation time (delay) of the flow pulses traveling between a generic From–To pair of locations X and Y, and \(\varepsilon _{\text {Y}}\) is the residual difference between the downstream discharge, \(Q_{\text {Y}}\), and the upstream contributions, \(\sum Q_{\text {X}}\), due to inter-site basin dynamics, including possible missing tributaries, natural or artificial diversions, flow coming from the inter-site contributing area, and any phenomenon occurring between the two gauges of each From–To pair.

We assume that \(\tau _{\text {XY}}\) is approximately constant for a given From–To pair when the discharge is sufficiently large. Under this hypothesis, a first-order estimate of \(\tau _{\text {XY}}\) is the time lag minimizing the sum of squared differences between the squared standardized flow values \(Q'_{\text {X}}\) and \(Q'_{\text {Y}}\), where \(Q' = [(Q-m)/s]^2\), and m and s are the sample median and standard deviation, respectively. Squaring the standardized flows amplifies the weight of large discharges and facilitates the least squares optimization. Once \(\tau _{\text {XY}}\) is known, the time series are shifted to a common pseudo-time so that all flow pulses are synchronized. The \(\tau _{\text {XY}}\) values are also additive along a flow path: for the network in Fig. 2, for instance, \(\tau _{\text {CB}} = \tau _{\text {CA}} + \tau _{\text {AB}}\). The daily values of the resulting (synchronized) time series are then summed to obtain a unique benchmark sequence (master series), whose local minima are used to identify flow pulses (master events). An ID is then assigned to each sequence of pseudo-dates between two local minima, thus introducing a unique identification for each master event. Shifting the pseudo-date sequence back (by adding \(\tau _{\text {XY}}\)) for each location, we can assign IDs to the flow pulses of each time series in the original (true) time frame. In this way, flow pulses occurring at different dates (due to travel time) across the river system are identified by a unique ID denoting a single event (corresponding to the master event in the synchronized master series) propagating downstream along the river network.
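The synchronization steps can be sketched in Python with NumPy as follows. This is an illustrative reconstruction under stated assumptions, not the authors' code: lags are integer days searched over a hypothetical window of 0 to `max_lag`, the squared differences are averaged rather than summed so that lags with different overlap lengths remain comparable, pseudo-time is taken as the arrival time at the outlet, and a new master event is assumed to start at each local minimum of the master series. All function names are hypothetical.

```python
import numpy as np

def squared_standardized(q):
    """Q' = [(Q - m) / s]^2, m = sample median, s = sample standard deviation."""
    q = np.asarray(q, dtype=float)
    return ((q - np.median(q)) / np.std(q, ddof=1)) ** 2

def estimate_lag(q_up, q_down, max_lag=15):
    """Lag tau (days) minimizing the mean squared difference between
    Q'_up(t) and Q'_down(t + tau) for tau = 0..max_lag."""
    a, b = squared_standardized(q_up), squared_standardized(q_down)
    n = len(a)
    # mean (not sum) so that lags with shorter overlaps are not favored
    cost = [np.mean((a[: n - lag] - b[lag:]) ** 2) for lag in range(max_lag + 1)]
    return int(np.argmin(cost))

def cumulative_delays(downstream, tau):
    """Delay from each gauge to the outlet by additivity of pairwise lags
    (e.g. tau_CB = tau_CA + tau_AB). downstream: gauge -> next gauge;
    tau: (from, to) -> lag in days."""
    delays = {}
    for g in set(downstream) | set(downstream.values()):
        total, x = 0, g
        while x in downstream:
            total += tau[(x, downstream[x])]
            x = downstream[x]
        delays[g] = total
    return delays

def label_master_events(flows, delays):
    """Shift each series to outlet pseudo-time, sum into a master series,
    start a new master event at each local minimum, and map the IDs back
    to each gauge's own dates (-1 where the gauge is not covered)."""
    n = len(next(iter(flows.values())))
    t_max = max(delays.values())
    pseudo = np.arange(t_max, n)  # pseudo-times covered by every gauge
    master = sum(np.asarray(flows[g], dtype=float)[pseudo - d]
                 for g, d in delays.items())
    is_min = np.zeros(len(master), dtype=bool)
    is_min[1:-1] = (master[1:-1] < master[:-2]) & (master[1:-1] <= master[2:])
    event_id = np.cumsum(is_min)
    labels = {}
    for g, d in delays.items():
        lab = np.full(n, -1)
        lab[pseudo - d] = event_id  # shift back to the gauge's true dates
        labels[g] = lab
    return labels
```

A pulse observed at an upstream gauge on day t and at the outlet on day t + τ falls on the same pseudo-date, so both observations receive the same master-event ID.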

This ID labeling implies that every local maximum corresponds to a flow pulse, which may, however, be an insignificant event. For each peak ID, we can then retain only significant events by selecting the gauges whose Q values exceed a given threshold corresponding, for instance, to a specified annual probability of exceedance or RP. For each peak ID, this procedure yields a map of the gauges where a sufficiently large discharge is observed. The flood propagation time is then estimated as the maximal temporal difference between the peak times across all these gauges. This value could be increased by a suitable factor to account for the fact that the flood may begin before the first peak and end after the last peak. However, for our purposes, the time lags between flood peaks provide a satisfactory representation of flood durations.
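Given event labels of this kind (one integer ID per day and gauge, with -1 where undefined) and one discharge threshold per gauge, for instance the flow with a chosen RP, the duration estimate can be sketched as below. The label format, the strict `>` comparison, and the function name are assumptions made for illustration.

```python
import numpy as np

def event_durations(flows, labels, thresholds):
    """For each event ID, keep the gauges whose within-event peak exceeds the
    gauge threshold and return the spread (days) of their peak times."""
    event_ids = {int(e) for lab in labels.values() for e in np.unique(lab)} - {-1}
    durations = {}
    for e in sorted(event_ids):
        peak_times = []
        for g, q in flows.items():
            q = np.asarray(q, dtype=float)
            idx = np.flatnonzero(labels[g] == e)   # days of event e at gauge g
            if idx.size == 0:
                continue
            t_peak = idx[np.argmax(q[idx])]        # peak day within the event
            if q[t_peak] > thresholds[g]:          # 'flooded' gauge
                peak_times.append(int(t_peak))
        if peak_times:
            durations[e] = max(peak_times) - min(peak_times)
    return durations
```

Events in which no gauge exceeds its threshold are simply dropped, and an event that floods a single gauge gets a duration of zero, consistent with measuring duration as the spread between peaks rather than the full hydrograph.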

As the proposed methodology is purely data-driven, some aspects deserve further discussion. The additive model in Eq. 1 can be an oversimplified description of the nonlinear dynamics of the basin response to hydrometeorological forcing at fine (sub-daily) timescales. However, it provides a satisfactory approximation at the daily scale. Moreover, the additive scheme is introduced only to highlight the role of the time lag \(\tau _{\text {XY}}\) used in the synchronization procedure; it is not used to compute downstream flow from upstream records.

The residuals \(\varepsilon _{\text {X}}\) summarize the effect of every factor other than the linear combination of the upstream flow contributions. For example, the missing contribution of an ungauged tributary located between two gauges can result in a non-centered sequence of residuals (systematic bias), which can be modeled if required. Conversely, when the residuals are centered around zero, we can conclude that no systematic water contribution is missing and that the differences between upstream and downstream records reduce to (random) fluctuations due to the superposition of multiple factors acting across the basin area between the gauge stations.
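Under the additive model, the residuals at a downstream gauge Y are \(\varepsilon_{\text{Y}}(t) = Q_{\text{Y}}(t) - \sum_{\text{X}} Q_{\text{X}}(t-\tau_{\text{XY}})\), and their mean offers a quick check for a systematic bias such as an ungauged tributary. The sketch below uses hypothetical helper names and assumes integer daily lags and series on a common calendar; no specific bias threshold is implied.

```python
import numpy as np

def inter_site_residuals(q_down, upstream):
    """epsilon_Y(t) = Q_Y(t) - sum_X Q_X(t - tau_XY) for t >= max(tau).
    upstream: list of (flow array, integer lag in days) for the gauges
    directly upstream of Y, on the same daily calendar as q_down."""
    q_down = np.asarray(q_down, dtype=float)
    t = np.arange(max(tau for _, tau in upstream), len(q_down))
    inflow = sum(np.asarray(q, dtype=float)[t - tau] for q, tau in upstream)
    return q_down[t] - inflow

def relative_bias(residuals, q_down):
    """Mean residual as a fraction of mean downstream flow; a clearly positive
    value hints at a missing (e.g. ungauged) inflow between the gauges."""
    return float(np.mean(residuals) / np.mean(q_down))
```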

The proposed approach assumes that some factors influencing the basin response can be considered unchanged over the period of record. This is a strong assumption for anthropized areas showing significant changes in land use and land cover, and interventions such as flood defense infrastructure, reservoirs, etc. If these changes substantially modify the stream flow regime, their effects can be accounted for only if additional information on such factors is available. However, these changes may have only a limited impact on the tracking procedure, as it focuses on synchronizing flow records by minimizing a specified metric rather than on exactly matching the absolute flow values recorded at adjacent gauges.

The tracking procedure essentially yields the differences in times of concentration for any pair of adjacent gauges in the river network. Therefore, after identifying flood events with an over-threshold criterion and selecting the subset of gauges exceeding that threshold, the maximal time difference between the peaks observed at these ‘flooded’ gauges is a good approximation of the time span of the flood event.

3 Empirical results

3.1 Synoptic overview of flood duration, magnitude, and generating mechanism

3.1.1 Spatial and temporal patterns of worldwide floods

In order to contextualize the flood tracking results based on GRDC daily flow records (Sect. 3.2), we first analyze, at the global and European level, the main properties of the major floods reported in the DFO archive. We focus on flood magnitude (as defined in Sect. 2.1) and duration, which is of primary interest in the hours clause context. Stratifying the information according to the main flood-generating processes, Fig. 3a shows that heavy rain globally causes more floods than all the other driving factors combined (62.01% of cases), followed by brief torrential rain (13.75%), tropical storms (9.98%), and monsoonal rain (8.74%). These four factors account for 94.48% of flood events worldwide. Figure 3b shows that the longest events correspond to meteorological causes evolving at a seasonal scale (i.e., monsoonal rain, snowmelt, and rain and snowmelt), which generate floods lasting several days or weeks. Excluding avalanche-related floods, the probability that the flood duration D exceeds seven days (i.e., the 168-h clause), \({\mathbb {P}}[D>7~\text{ days }]\), is always greater than 10% (the lowest values correspond to floods due to tidal surge and extra-tropical storms), reaching 67, 66, and 57% for monsoonal rain, snowmelt, and rain and snowmelt, respectively. These classes of floods also tend to be the most severe, with probabilities of exceeding magnitude \(M=6\), \({\mathbb {P}}[M>6]\), of 48, 49, and 41%, respectively (Fig. 3c).
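The relative frequencies \({\mathbb {P}}[D>7~\text{days}]\) reported in Fig. 3b are empirical exceedance frequencies computed separately for each generating mechanism. A minimal sketch of that computation follows; the records are made-up toy values, not DFO data, and the function name is hypothetical.

```python
from collections import defaultdict

def exceedance_by_cause(records, d_threshold=7):
    """records: iterable of (cause, duration in days). Returns the relative
    frequency of D > d_threshold for each cause."""
    counts = defaultdict(lambda: [0, 0])  # cause -> [n exceeding, n total]
    for cause, d in records:
        counts[cause][1] += 1
        if d > d_threshold:
            counts[cause][0] += 1
    return {c: n_exc / n for c, (n_exc, n) in counts.items()}

# Toy records (hypothetical, for illustration only)
toy = [("snowmelt", 12), ("snowmelt", 5),
       ("heavy rain", 3), ("heavy rain", 9), ("heavy rain", 2), ("heavy rain", 4)]
p = exceedance_by_cause(toy)
```

For these toy records the estimate is 0.5 for snowmelt and 0.25 for heavy rain; with small per-cause samples such frequencies carry large sampling uncertainty, which matters for the rarer causes in Fig. 3.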

a Frequency of worldwide flood events stratified by main generating mechanism according to identification provided by DFO archive. b Box plots summarizing the distribution of flood duration D corresponding to the main causes of flood events. The relative frequency of events with duration \(D>7~\text {days}\) (corresponding to the 168-h clause) is also reported. c Box plots describing the distribution of flood magnitude M (as defined in the text) for each main flood-generating mechanism. The relative frequency of events with magnitude \(M>4\) and \(M>6\) is also reported

Figure 4a shows that the first decade of the twenty-first century was a flood-rich period in terms of the number of events, while the last 6 years are similar to the period 1985–2000. Moreover, the number of events shows a clear seasonality, with a concentration in the summer season (Fig. 4b). Flood duration shows neither evident changes in its annual variability (Fig. 4c) nor an evident seasonal pattern (Fig. 4d), meaning that there is no preferential season or month for long or short floods. Flood magnitude oscillates across the period of record, with relatively high values around 1994–1999 and 2008–2016 (Fig. 4e). Since both the severity of the experienced negative consequences and the timing of the previous event play an important role in risk perception (Bubeck et al. 2012), we argue that the overall increasing trend in global flood magnitude recorded in the last 16 years, along with the higher number of events in the period 2000–2010, may contribute to an overall perception of increasing flood risk. However, the first half of the series shows that large events (and some of the largest) had already occurred between 1985 and 1999. At the global scale, flood magnitude does not show any seasonal pattern (Fig. 4f).

a Temporal evolution of the number of worldwide flood events along the period of record (1985–2016). b Seasonal pattern of the number of worldwide flood events recorded between 1985 and 2016. c Box plots showing the evolution of the annual distributions of flood duration D during the period of record. d Box plots summarizing the seasonal variation of the distribution of D. Reference duration \(D=7~\text {days}\) is also reported (red lines). e, f Similar to panels (c, d), but for the flood magnitude M (reference values \(M=4\) and \(M=6\) are shown as red and dark red lines)

3.1.2 Spatial and temporal patterns of European floods

Focusing on European floods (Fig. 5a), heavy rain is still the main cause of floods (67.46% of cases), followed by brief torrential rain (19.38%) and snowmelt (7.89%). Floods due to snowmelt (with or without rain) have a higher probability of lasting more than seven days (55 and 69%; Fig. 5b), in agreement with the worldwide data set. Of course, monsoonal rain and tropical storms are not among the possible causes of floods in Europe. As for the global data set, snowmelt is also the cause of the major floods in terms of magnitude, with a 45–46% chance of generating floods with \(M>6\) (Fig. 5c).

Similar to Fig. 3, but for European flood events. The same interpretation applies

In agreement with the global results, the first years of the twenty-first century correspond to a flood-rich period for Europe as well (Fig. 6a). The seasonality of flood occurrence in Europe is, however, less clear than that of the global data set. Local maxima in July, October, and January (Fig. 6b) likely reflect the specific seasonality of the factors triggering floods across the continent. For example, the Mediterranean area is prone to autumn–winter floods related to heavy and/or persistent rain, while spring floods due to snowmelt tend to affect the Alpine region and Central–Eastern Europe, which in turn is also prone to summer floods related to heavy rain. The variability of the duration of European floods does not show any evident pattern over time (Fig. 6c), while a seasonal pattern indicates that longer events, likely related to snowmelt, occur in the spring months (especially March and April; Fig. 6d). Analogously, the inter-annual variability of flood magnitude shows no particular pattern (Fig. 6e), while the largest floods tend to occur in spring (March and April; Fig. 6f).

Similar to Fig. 4, but for European floods. The same interpretation applies

These results along with those concerning the flood typology (Fig. 5) confirm that the longest and most severe flood events across Europe are related to spring snowmelt (with and without rain). However, snowmelt accounts for a small fraction of the total number of European floods, whereas the majority of events are due to heavy rain and brief torrential rain occurring in summer and in the late autumn or early winter, thus resulting in shorter and less severe floods. Nevertheless, rain-related floods have a non-negligible probability of lasting more than seven days (31% for heavy rain; Fig. 5b), and of being very severe (\({\mathbb {P}}[M>6]=15\%\) for heavy rain; Fig. 5c).

Based on DFO data, Kundzewicz et al. (2017) updated a previous analysis (Kundzewicz et al. 2013) of the evolution of the number of severe European floods with \(S \ge 1.5\) and \(M \ge 5\) during the period of record, recognizing an increasing tendency in the number of events in these classes along with strong inter-annual variability. Accordingly, Kundzewicz et al. (2017) suggest caution when using flood hazard projections under considerable uncertainty. We further investigate these aspects by including two additional cases: (1) all flood events and (2) floods with \(D>7\) days. We also attempt to refine the analysis of Kundzewicz et al. (2017) by quantifying the slowly varying (low-frequency) long-term trend with a locally weighted scatter plot smoothing (LOESS) curve (Cleveland and Devlin 1988) instead of a less justifiable linear regression. Moreover, we assess the consistency of the observed long-term trends with those resulting from surrogate data simulated by the iterative amplitude adjusted Fourier transform (IAAFT; Schreiber and Schmitz 1996), which yields stationary sequences preserving both the marginal distribution and the power spectrum (autocorrelation) of the observed series. In this way, we can account for the variance-inflating effect of persistence, bearing in mind that the actual variability of the underlying process is inevitably underestimated when dealing with short time series, as in this case.
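The surrogate test can be sketched in Python with NumPy. This is a simplified illustration, not the authors' implementation: the LOESS below is a non-robust local linear fit with tricube weights and a hypothetical span `frac`, IAAFT runs a fixed number of iterations instead of testing for convergence, and the default of 1000 surrogates is smaller than the \(10^4\) used for Fig. 7 only to keep the example fast. Function names are hypothetical.

```python
import numpy as np

def iaaft(x, n_iter=100, rng=None):
    """IAAFT surrogate: a shuffled copy of x iteratively adjusted to match the
    amplitude spectrum and the exact marginal distribution (sorted values) of x."""
    rng = np.random.default_rng(rng)
    x = np.asarray(x, dtype=float)
    sorted_x = np.sort(x)
    target_amp = np.abs(np.fft.rfft(x))
    s = rng.permutation(x)
    for _ in range(n_iter):
        # impose the target amplitude spectrum, keeping the current phases
        phases = np.angle(np.fft.rfft(s))
        s = np.fft.irfft(target_amp * np.exp(1j * phases), n=len(x))
        # impose the target marginal distribution by rank-ordered remapping
        s = sorted_x[np.argsort(np.argsort(s))]
    return s

def loess(y, frac=0.5):
    """Simplified LOESS: local linear fit with tricube weights, no robustness
    iterations; y is assumed equally spaced (e.g. annual counts)."""
    y = np.asarray(y, dtype=float)
    n = len(y)
    x = np.arange(n, dtype=float)
    k = max(2, int(np.ceil(frac * n)))
    fitted = np.empty(n)
    for i in range(n):
        dist = np.abs(x - x[i])
        h = np.sort(dist)[k - 1]                   # distance to k-th nearest point
        w = np.clip(1 - (dist / h) ** 3, 0, None) ** 3
        sw = np.sqrt(w)
        design = np.column_stack([np.ones(n), x - x[i]])
        coef, *_ = np.linalg.lstsq(design * sw[:, None], y * sw, rcond=None)
        fitted[i] = coef[0]                        # local intercept = fit at x[i]
    return fitted

def surrogate_band(counts, n_surr=1000, frac=0.5, seed=0):
    """Pointwise 95% band of LOESS curves fitted to IAAFT surrogates of counts."""
    rng = np.random.default_rng(seed)
    curves = np.array([loess(iaaft(counts, rng=rng), frac) for _ in range(n_surr)])
    return np.percentile(curves, [2.5, 97.5], axis=0)
```

An observed LOESS curve lying inside the band at all times is consistent with a stationary, persistent process having the same marginal distribution and spectrum; because the remapping step is applied last, each surrogate contains exactly the observed values, only reordered.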

Figure 7 shows the observed trends and the 95% confidence intervals (CIs) based on \(10^4\) LOESS curves corresponding to IAAFT surrogates. Only the number of floods with \(S \ge 1.5\) exhibits a low-frequency increasing behavior, whereas the number of events in the other classes of floods shows a non-monotonic behavior with a local maximum during the first years of the twenty-first century. Note that all the curves fall within the CIs except for the portion corresponding to the period 1985–1989, for which less media-based information was possibly available (Kundzewicz et al. 2013). Thus, bearing in mind the known lack of homogeneity of DFO data and the difficulty of assessing the actual variability of the underlying process from short time series, we cannot exclude the possibility that the observed trends are still consistent with a reference stationary process. This does not mean that systematic changes are not at work, but only that the available information is not sufficient to support such a conclusion to the exclusion of any other reasonable explanation. These findings further support the cautionary recommendation of Kundzewicz et al. (2017) regarding flood management and decision making under high uncertainty.

Fig. 7 Temporal evolution of the annual number of European flood events belonging to different classes: all events (a), and events with duration \(D>7\) days (b), severity \(S\ge 1.5\) (c), and magnitude \(M\ge 5\) (d). Each panel shows the observed series (dots), LOESS curves describing low-frequency long-term patterns (blue lines), and the 95% CIs of LOESS curves resulting from \(10^4\) IAAFT surrogate series (shaded areas). Circles highlight the data for the period 1990–2016, for which the records are deemed more reliable

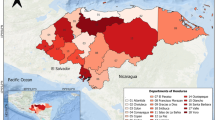

Figure 8 shows the spatial distribution of the number of European floods belonging to the different classes of interest. Figure 8a refers to the entire data set and indicates that England is the most flood-prone area, along with northeastern Romania (corresponding to the middle-lower part of the Danube basin), southeastern Germany (i.e., the Rhine and upper Danube), and the flash-flood-prone southern French Mediterranean regions (Nuissier et al. 2008). For events with \(D> 7\) days (Fig. 8b), the most affected areas include the middle-lower Danube and basins in Central–Eastern Europe (i.e., the Rhine and Elbe Rivers in central Germany and the Oder and Vistula Rivers in southern Poland), while England is no longer the most prominent hotspot, likely because of the smaller size of its basins and the faster flood propagation. As shown in Fig. 8c, d and already discussed by Kundzewicz et al. (2017), selecting large events in terms of severity \((S\ge 1.5)\) and magnitude \((M\ge 5)\) yields similar spatial distributions of flood hotspots: England is still the most critical area, along with southern Germany and northeastern Romania.

Fig. 8 Spatial distribution of the frequency of European flood events belonging to different classes: all events (a), and events with duration \(D>7\) days (b), severity \(S\ge 1.5\) (c), and magnitude \(M\ge 5\) (d). The maps allow for the identification of flood-prone hotspots for each class of events. Note that the DFO data set used to construct these maps is based on media reports, which may be biased toward reports in English, thus over-emphasizing flood frequency in the UK

As mentioned before, these results should be used with caution, bearing in mind that they rely on news reports. In particular, news in languages other than English can be missed, whereas UK media give extensive coverage of flood events. This can bias the flood catalog, for instance by over-emphasizing the England hotspot. Nevertheless, the overall picture of the spatial distribution of severe floods seems credible if we recall that the UK is regularly affected by seasonal extra-tropical storms, which often produce local or extensive flooding.

3.2 Flood tracking analysis

The exploratory analysis in Sect. 3.1 synthesizes information on the evolution of flood occurrences over the years and on flood-prone hotspots across Europe. This information can be used to refine flood management strategies and to tailor (re)insurance contracts on a geographical basis, accounting for the alternation of flood-rich and flood-poor periods. However, such a synoptic description does not shed light on the distribution of flood duration across a basin, which is paramount for assessing the financial risk related to the application of an hours clause. In other words, the question is: what is the probability that a flood affecting a given basin lasts more than n hours? The data-driven procedure described in Sect. 2.2 provides a realistic first-approximation answer using minimal input data readily available from accessible flow data repositories.

3.2.1 Identification of historical events

Before analyzing the flood propagation time, we need to check the ability of the proposed procedure to recognize historical floods and their propagation across the network. For illustration, Fig. 9 shows the 1995 flood of the Rhine River (Fig. 9a), the 2002 Elbe floods (Fig. 9b), and the trans-basin event affecting Germany in 1970 (Fig. 9c). In all cases, the maps report (as colors) the date on which the peak flow occurred at each station, where the peak corresponds to the event ID identified by the master series resulting from the synchronization procedure. The color scales clearly show that peak flow dates move forward in time as we move downstream along the network. In other words, downstream flow peaks coherently follow the upstream flow peaks corresponding to the same event ID.
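The coherence check described above can be expressed as a simple test on each upstream–downstream reach. The sketch below is our own illustration: the dictionary-based network, the station IDs, and the dates are hypothetical, and it only flags reaches where the downstream peak of an event ID precedes the upstream one.

```python
from datetime import date


def downstream_order_violations(downstream_of, peak_date):
    """Return (upstream, downstream) pairs whose event peaks go backwards in time.

    downstream_of: dict station -> next station downstream (None at the outlet).
    peak_date: dict station -> date of the peak assigned to one event ID;
               stations without data for that event are simply absent.
    """
    bad = []
    for up, down in downstream_of.items():
        if down is None or up not in peak_date or down not in peak_date:
            continue  # outlet, or no record at one end of the reach
        if peak_date[down] < peak_date[up]:
            bad.append((up, down))
    return bad


# Hypothetical network: A and B join at C, which drains to the outlet D
network = {"A": "C", "B": "C", "C": "D", "D": None}
peaks = {"A": date(1995, 1, 23), "B": date(1995, 1, 24),
         "C": date(1995, 1, 26), "D": date(1995, 1, 31)}
print(downstream_order_violations(network, peaks))  # []
```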

Fig. 9 Examples of flood events identified by the proposed flood tracking procedure: Rhine flood in January 1995 (a), Elbe flood in August 2002 (b), and trans-basin German flood involving the Elbe, Rhine, and Weser basins in February 1970 (c). Colors denote the date of occurrence of the flood peak at each gauging station during the events. The symbol ‘\(\oplus \)’ denotes the locations where the flood peak exceeds the at-site critical values \(Q_{2}\) (for the 1970 trans-basin flood) and \(Q_{10}\) (for the 1995 Rhine and 2002 Elbe floods)

Although the flood tracking method relies on intra-basin connected networks, this does not prevent the identification of trans-basin events (Uhlemann et al. 2010; Gvoždíková and Müller 2017) such as the 1970 German event. In fact, the identified intra-basin events can be combined into trans-basin events by checking their chronological overlap. Figure 9c shows not only that flow peaks coherently propagated downstream in each basin, but also that the event evolved from southwest to northeast, starting in the upper Rhine and progressively affecting the Weser and then the Elbe, where the flow peak at the most downstream gauging station was recorded at the end of the event.
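Combining intra-basin events by chronological overlap amounts to merging time intervals. A minimal sketch, assuming each intra-basin event is summarized by a (basin, start, end) tuple; the function name and the dates below are hypothetical and do not reproduce the 1970 event.

```python
from datetime import date


def merge_overlapping(events):
    """Merge intra-basin events whose [start, end] periods overlap in time.

    events: iterable of (basin, start, end) tuples.
    Returns a list of (start, end, set_of_basins), sorted by start date.
    """
    merged = []
    for basin, start, end in sorted(events, key=lambda ev: ev[1]):
        if merged and start <= merged[-1][1]:  # overlaps the running trans-basin event
            s0, e0, basins = merged[-1]
            merged[-1] = (s0, max(e0, end), basins | {basin})
        else:
            merged.append((start, end, {basin}))
    return merged


# Hypothetical event periods, for illustration only
events = [
    ("Rhine", date(1970, 2, 10), date(1970, 2, 20)),
    ("Weser", date(1970, 2, 15), date(1970, 2, 25)),
    ("Elbe", date(1970, 2, 22), date(1970, 3, 5)),
    ("Danube", date(1970, 4, 1), date(1970, 4, 10)),
]
for start, end, basins in merge_overlapping(events):
    print(start, end, sorted(basins))
```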

It should be noted that the flood tracking procedure identifies the flow peaks at all locations for every event ID. However, some of these peaks may not be extreme. Therefore, actual flood events are usually selected using a criterion such as a peak-over-threshold approach (POT; Uhlemann et al. 2010; Gvoždíková and Müller 2017; Morrill and Becker 2017). The threshold can be chosen according to the aim of the study, but common values are the at-site discharges exceeded with annual probability 0.5 or 0.1 (i.e., the 2- and 10-year return period (RP) levels, \(Q_2\) and \(Q_{10}\), respectively). The former provides a good approximation of the bankfull discharge, while the latter is commonly used to delineate the first class of flood-prone areas in risk mapping, as well as the areas within which assets are usually considered not insurable (Kron 2005; Uhlemann et al. 2010, and references therein). Higher thresholds can be used, bearing in mind that estimates of extreme quantiles are unreliable for the available sample sizes.
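The at-site thresholds can be illustrated with a simple empirical estimator. This sketch assumes that \(Q_T\) is taken as the \((1-1/T)\) sample quantile of the annual maximum flows, so \(Q_2\) is the median annual maximum; the estimator actually used in the study is not specified in this section, and a fitted extreme value distribution would be a common alternative. Function names are hypothetical.

```python
import numpy as np


def return_level(annual_maxima, T):
    """Empirical T-year level: the (1 - 1/T) quantile of the annual maxima.

    A distribution fitted to the annual maxima (e.g. GEV) is a common
    alternative; the plain sample quantile is used here for simplicity.
    """
    return float(np.quantile(np.asarray(annual_maxima, float), 1.0 - 1.0 / T))


def critical_stations(event_peaks, annual_maxima, T):
    """Stations whose event peak exceeds the at-site T-year level (POT flag)."""
    return sorted(s for s, q in event_peaks.items()
                  if q > return_level(annual_maxima[s], T))
```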

Focusing on gauges where the peak flow exceeds the at-site \(Q_{10}\), Fig. 9a, b shows that the 1995 Rhine event impacted the middle-lower part of the basin, while the 2002 Elbe floods affected the entire river basin. The 1995 Rhine flood propagated in about one week (January 23–31, 1995) and the 2002 Elbe event in about two weeks (starting on August 13, 2002), in good agreement with historical documentation (Barredo 2007) and studies on flood extent identification (Uhlemann et al. 2010; Gvoždíková and Müller 2017). For the 1970 trans-basin event, we highlight the sites with peak flow exceeding the at-site \(Q_{2}\) to allow for a comparison with the results of Uhlemann et al. (2010). Our results confirm that this flood mainly affected the Rhine and Weser and only marginally the Elbe basin. Combining the information on magnitude with the remarks on event evolution, Fig. 9c shows that the trans-basin flood started in the lower part of the Rhine basin with no critical peaks, then evolved toward the northeast, and finally ended with non-critical flow peaks traveling along the main stem of the Elbe River in the eastern part of the basin.
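Given the peak of one event ID at every station, the propagation time over the critical sites is the span between the earliest and latest critical peak dates. The sketch below assumes daily dates and hypothetical station IDs, flows, and thresholds; returning 0 for a single critical station is our own convention. The optional `stations` argument restricts the computation to a subset of the network, such as the area covered by an insurer's portfolio.

```python
from datetime import date


def propagation_time(peak_date, peak_flow, threshold, stations=None):
    """Days between the earliest and latest critical peak of one event.

    peak_date, peak_flow: dicts station -> date / discharge of the event peak.
    threshold: dict station -> at-site critical discharge (e.g. Q2 or Q10).
    stations: optional subset of stations (e.g. a portfolio's area of interest).
    Returns None if no station is critical, 0 if only one is.
    """
    pool = peak_date.keys() if stations is None else stations
    dates = [peak_date[s] for s in pool
             if s in peak_date and peak_flow[s] > threshold[s]]
    if not dates:
        return None
    return (max(dates) - min(dates)).days


# Hypothetical event: peak dates, peak flows (m3/s) and at-site Q10 values
peak_date = {"A": date(1995, 1, 23), "B": date(1995, 1, 25),
             "C": date(1995, 1, 28), "D": date(1995, 1, 31)}
peak_flow = {"A": 900.0, "B": 400.0, "C": 1500.0, "D": 2600.0}
q10 = {"A": 800.0, "B": 500.0, "C": 1200.0, "D": 2400.0}
whole_basin = propagation_time(peak_date, peak_flow, q10)             # A, C, D critical -> 8
portfolio = propagation_time(peak_date, peak_flow, q10, ["A", "C"])   # two points of interest -> 5
```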

3.2.2 Distribution functions of flood propagation time

The cases discussed above are only three examples from the set of events identified by the flood tracking procedure for each basin over the time interval with simultaneous records across the gauging network. These sets can be used to compute the probability of D exceeding the n hours of the hours clause. Figure 10 shows the output of this analysis for the seven European basins and the two thresholds \(Q_{2}\) and \(Q_{10}\). Figure 10a–g, o–u shows the empirical cumulative distribution functions (ECDFs) of propagation times computed by considering only the stations experiencing peak flows exceeding \(Q_{2}\) and \(Q_{10}\), respectively. These propagation times are specifically related to extreme events, as they consider only the ‘critical’ sites of each event, and they are close to the quantity of interest for reinsurance purposes. Obviously, these distributions are also influenced by the number of stations with available data. In fact, short propagation times can be related to events that occurred in years when only a few time series are available (e.g., only a few time series cover the first half of the twentieth century). In these cases, the propagation time corresponds to observations recorded at two or three stations, other data being unavailable. However, Fig. 10h–n, v–ad shows that the relationship between duration and the number of sites is weak for POT events. Of course, the denser the network of gauges with data covering long periods, the more accurate the reconstruction of the entire propagation process; otherwise, we have only a partial picture.
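The ECDF and the corresponding empirical probability of exceeding an n-hour clause can be computed directly from the sample of event durations. A minimal sketch, assuming durations are whole days (as with daily flow records), so that \(D>7\) days is equivalent to exceeding a 168-h clause; function names are hypothetical.

```python
import numpy as np


def ecdf(durations):
    """Distinct durations and the ECDF evaluated at each of them."""
    values, counts = np.unique(np.asarray(durations), return_counts=True)
    return values, np.cumsum(counts) / counts.sum()


def empirical_exceedance(durations_days, clause_hours=168):
    """Fraction of events whose duration (days) exceeds an n-hour clause."""
    d = np.asarray(durations_days, float)
    return float(np.mean(d * 24.0 > clause_hours))
```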

Fig. 10 a–g Distribution functions of flood duration D for events with flow peaks greater than the at-site \(Q_{2}\) identified by the flood tracking algorithm. Each panel shows the empirical distribution, the NB distribution fitted to the data, and the corresponding 98% Monte Carlo CIs. h–n Scatter plots of D versus the number of stations with flood peaks greater than the at-site \(Q_{2}\). o–u and v–ad are similar to a–g and h–n, respectively, but refer to flood events with flow peaks exceeding the at-site \(Q_{10}\)

To quantify the uncertainty of the ECDFs and to provide a parametric model useful for further analysis and simulation, we fit a two-parameter negative binomial (NB) distribution (see Appendix), whose cumulative distribution functions are reported in Fig. 10a–g, o–u along with the 98% confidence intervals (CIs) obtained by simulating \(10^4\) samples of size equal to the number of events used to build the ECDFs (see, e.g., Serinaldi 2009). The NB distribution fits the empirical frequencies very well, and the relatively narrow CIs indicate good reliability of the estimates. When the number of events is small, as for the Danube \(Q_{10}\) POT events, the width of the CIs reflects the lack of information and thus the high uncertainty affecting the point estimates. For each basin and POT class, Table 1 reports summary statistics of event duration D, the maximum likelihood point estimates of the NB parameters, and the probability \({\mathbb {P}}[D>7~\text{ days }]\) resulting from the fitted NB distributions. All point estimates are complemented with their 95% Monte Carlo CIs. The NB models can be used, for instance, to compute the probability of observing events lasting more than seven days (i.e., the standard hours clause) or the expected flood duration. For example, for the \(Q_{10}\) POT events, the point estimates of the average flood duration are approximately four days for the Rhine, eight days for the Elbe, and one day for the Garonne, while the probabilities of D exceeding seven days are \(\approx 17\%\) for the Rhine, \(\approx 55\%\) for the Elbe, and \(\approx 1\%\) for the Garonne.
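The NB fit and its Monte Carlo uncertainty can be sketched with SciPy, whose `scipy.stats.nbinom(n, p)` has the same pmf as the Appendix with \(n=r\). This is an illustration under stated assumptions, not the authors' code: for fixed \(r\) the likelihood is maximized by \(p=r/(r+\bar d)\), so only \(\log r\) is optimized (within arbitrarily chosen bounds), and the interval on \({\mathbb {P}}[D>7~\text{ days }]\) comes from a parametric bootstrap that refits the model to samples simulated from the fitted NB with the same size as the data.

```python
import numpy as np
from scipy import optimize, stats


def fit_nb(d):
    """Maximum likelihood fit of NB(r, p), with pmf as in scipy.stats.nbinom (n = r).

    For fixed r the ML estimate of p is r / (r + mean(d)), so only log(r) is
    optimised numerically. Under-dispersed samples push r to the upper bound
    (Poisson-like limit).
    """
    d = np.asarray(d)
    m = d.mean()

    def nll(log_r):
        r = np.exp(log_r)
        return -stats.nbinom.logpmf(d, r, r / (r + m)).sum()

    res = optimize.minimize_scalar(nll, bounds=(-10.0, 10.0), method="bounded")
    r = float(np.exp(res.x))
    return r, r / (r + m)


def prob_exceed_with_ci(d, days=7, n_sim=1000, level=0.95, seed=0):
    """Point estimate and parametric-bootstrap CI of P[D > days] under the fitted NB."""
    rng = np.random.default_rng(seed)
    r, p = fit_nb(d)
    point = stats.nbinom.sf(days, r, p)
    sims = np.empty(n_sim)
    for i in range(n_sim):
        sample = stats.nbinom.rvs(r, p, size=len(d), random_state=rng)
        sims[i] = stats.nbinom.sf(days, *fit_nb(sample))   # refit, then re-evaluate
    a = (1.0 - level) / 2.0
    return float(point), np.quantile(sims, [a, 1.0 - a])
```

Applied to the durations of one basin and POT class, `prob_exceed_with_ci` returns a point estimate and an interval of the kind reported in Table 1, although the numbers will differ from the published ones because the estimator details above are our assumptions.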

For the extreme events exceeding \(Q_{10}\), propagation times are generally shorter than for \(Q_{2}\) events because fewer stations experience such high flows, even in large events. For example, only the middle-lower part of the basin recorded \(Q_{10}\) exceedances in the 1995 Rhine flood (Fig. 9a), while almost the entire basin experienced \(Q_{2}\) exceedances in the 1970 event. To check the robustness of these results, we performed a leave-one-out (jackknife) analysis on four of the seven basins, for illustration. It consists of repeating the flood tracking procedure while excluding one station at a time, as if it were not available, thus testing the flood tracking output when a node of the network is missing. The ECDFs in Fig. 11 show that the results are rather insensitive to node removal and that the variability generally falls within the CIs quantifying the sampling uncertainty, confirming the overall robustness of the proposed procedure.
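A simplified version of the leave-one-out check is sketched below. Unlike the procedure described above, which reruns the whole flood tracking without the removed station, it keeps each event's critical peak dates fixed and simply recomputes D without that station; the function names and event data are hypothetical.

```python
from datetime import date


def durations(events, exclude=None):
    """Duration D (days) of each event from its critical peak dates, optionally without one station."""
    out = []
    for ev in events:
        dates = [d for s, d in ev.items() if s != exclude]
        if dates:
            out.append((max(dates) - min(dates)).days)
    return out


def leave_one_out(events):
    """Map each station to the duration sample obtained when that station is removed."""
    stations = sorted({s for ev in events for s in ev})
    return {s: durations(events, exclude=s) for s in stations}


# Hypothetical events: station -> date of its critical peak
events = [
    {"A": date(1995, 1, 23), "B": date(1995, 1, 26), "C": date(1995, 1, 31)},
    {"A": date(2002, 8, 13), "C": date(2002, 8, 20)},
]
full_sample = durations(events)      # [8, 7]
jackknife = leave_one_out(events)    # one reduced sample per removed station
```

Each reduced sample can then be turned into an ECDF and compared with the sampling CIs of the full-network fit.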

4 Discussion and conclusions

In this study, we have investigated the problem of defining flood event duration from a reinsurance perspective in order to improve the current practices based on the ‘one-size-fits-all’ hours clauses. We tackled the problem from two different perspectives in order to provide (1) a spatiotemporal synoptic picture of flood duration and magnitude resulting from an exploratory analysis of the DFO archive comprising flood events recorded worldwide in the period 1985–2016 and (2) a practical tool to explore the flood propagation time and its distribution in a given river basin with minimal readily available information.

The exploratory analysis allows the identification of general spatiotemporal patterns in the frequency of floods exceeding a given duration or magnitude. It highlights, for instance, the alternation of flood-poor and flood-rich periods, hotspot areas prone to long-lasting events, and the relationship between duration/magnitude and flood generation process. In particular, floods triggered by seasonally evolving processes, such as monsoonal rain and snowmelt, have a more than 55% chance of lasting longer than the seven days of standard hours clauses. This result can help quantify the recognized inadequacy of hours clauses when applied to areas prone to this kind of event. However, we find that the probability of exceeding a 7-day duration is not negligible even for events caused by heavy rain (42% worldwide and 31% in Europe) or brief torrential rain (24% worldwide and 16% in Europe), which is less expected and highlights the need for more accurate and empirically based criteria to define clauses in reinsurance contracts. Snowmelt-related events also exhibit the highest probability of being large in terms of magnitude (as defined in Sect. 2.1), both in Europe and worldwide. At the global scale, monsoonal rain and tropical storms (which cannot affect Europe) should be considered as additional causes often generating high-magnitude floods. This information can support the definition of region-tailored duration clauses according to the dominant flood generation process.

The DFO data analysis also reveals that the number of flood events and their duration and magnitude show no systematic evolution over the period of record. These quantities fluctuate, resulting in an alternation of periods with fewer/more, shorter/longer, and smaller/larger events. On the other hand, at the global scale there is a clear seasonal pattern in the number of floods, with higher frequency in the summer months, whereas no seasonal patterns emerge in terms of duration and magnitude. In Europe, flood events are more frequent in January, June, July, and October, reflecting the seasonality of the main weather systems characterizing the European climatic zones. In agreement with the analysis of flood-generating processes, longer and larger events tend to occur in spring (March and April) as a consequence of snowmelt or a combination of snowmelt and rainfall.

Despite the simplicity of this analysis and the intrinsic limitations of DFO data (see Kundzewicz et al. 2017 and Sect. 2.1), these results agree with those reported by Hundecha et al. (2017), which rely on a more refined procedure based on a pan-European hydrological model. In particular, Hundecha et al. (2017) identified four main flood-generating mechanisms for Europe (i.e., short rain, long rain, snowmelt, and rain on snow) that broadly correspond to the main causes recognized in Fig. 5a, and showed that snowmelt-related events generally last longer than rainfall-related floods (Fig. 5b). Hundecha et al. (2017) also highlighted how the different types of events tend to cluster across European regions according to seasonality, reflecting the weather conditions characterizing different climatic zones. Emphasizing the role of sampling uncertainty and persistence, our results in Fig. 7 also confirm the lack of a monotonic trend in the total number of European flood events (Hundecha et al. 2017) as well as in the number of events exceeding a given duration or magnitude (Kundzewicz et al. 2017).

Previous studies tackled the problem of identifying flood events affecting wide areas (Uhlemann et al. 2010; Gvoždíková and Müller 2017; Morrill and Becker 2017; Hundecha et al. 2017). Leaving aside specific differences, these approaches comprise a preliminary POT selection, which identifies potential flood peaks over a set of locations, and a subsequent clustering procedure that groups sites showing POT exceedances within a given time window. This time window can be constant, resulting from considerations about the hydrology of the studied area (Uhlemann et al. 2010; Gvoždíková and Müller 2017; Hundecha et al. 2017), or site-specific, reflecting the characteristic travel time of a flood wave through a given transect/location (Morrill and Becker 2017). Our approach differs from these methods in several respects. First, we reverse the order of POT selection and spatial clustering: we apply a synchronization procedure that identifies flood events on a smoothed auxiliary master series, and then we identify the at-site flow peaks corresponding to the master events in the original time frame. In this way, all peaks are flagged as belonging to a specific event and then labeled as critical or not according to POT criteria. The synchronization procedure explicitly exploits the relationship between the entire discharge series recorded at nearby upstream and downstream locations (stylized by Eq. 1), thus automatically retrieving the characteristic propagation time between each pair of upstream–downstream locations. The spatial extent is defined at the second stage by checking which peaks belonging to the same master event exceed a prescribed critical value, thus automatically identifying clusters of critical sites (Fig. 9). This procedure has an additional advantage: the relevance of the hours clause also depends on the geographical extent of the insurer’s portfolio. If only properties in a small part of the basin are insured, the travel time for the whole basin might not matter. The proposed methodology accounts for this by allowing the computation of the flood travel time between two arbitrary points of interest or for a specific part of the river network.
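As an illustration of retrieving a characteristic travel time between two points of interest, the sketch below picks the lag that maximizes the Pearson correlation between an upstream and a downstream discharge series. This is a common, simpler stand-in chosen by us, not the synchronization procedure of Eq. 1; the function name, the restriction to non-negative lags, and `max_lag` are assumptions.

```python
import numpy as np


def lag_by_xcorr(up, down, max_lag=15):
    """Lag (in time steps) maximising the correlation between up[t] and down[t + lag].

    Only non-negative lags are searched, i.e. the downstream response is
    assumed to follow the upstream one. Requires len(up) > max_lag + 1.
    """
    up = np.asarray(up, float)
    down = np.asarray(down, float)
    n = up.size
    corr = [np.corrcoef(up[: n - k], down[k:])[0, 1] for k in range(max_lag + 1)]
    return int(np.argmax(corr))
```

With daily series, the returned lag is in days; applying it to any pair of gauges on the same flow path gives a rough travel time for that stretch of the network.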

Of course, the larger the number of sites with available data covering the same period and the finer the temporal resolution of the records, the more accurate the tracking procedure. However, the proposed method also automatically accounts for missing data and low-density networks, reporting the number of locations with available data for each master event. Our flood tracking approach also differs from previous methods in that it relies on the nodes of a connected river network. Even though this may seem a limitation, it allows for more realistic flood tracking, attempting to follow (albeit in a simple way) the basin response dynamics. Moreover, as discussed in Sect. 3.2, trans-basin floods can also be identified by checking the temporal overlap of the identified intra-basin floods, as in the case of the 1970 German event.

From a reinsurance standpoint, the proposed flood tracking method provides fundamental information on the distribution of flood duration at both intra-basin and trans-basin scales (after merging chronologically overlapping intra-basin events). For the cases analyzed in this study, this distribution can be modeled by a two-parameter negative binomial distribution, which enables the assessment of the reliability of standard hours clauses accounting for sampling uncertainty, as well as simulation and further analyses of possible interest. For example, for the \(Q_{10}\) POT events of the Danube, where the lack of simultaneous observations across the network yields a small number of events, one can conclude that the probability of \(Q_{10}\) floods lasting more than seven days ranges between 26 and 92% at the 95% confidence level (see Table 1), which highlights the high uncertainty of these estimates and the need for additional data and analysis. On the other hand, when the gauging network and the available records have good spatial and temporal coverage, as for the Rhine basin, the estimates are more reliable and robust and can be used with more confidence.

In both cases, this type of analysis allows for better-informed decisions and communication, taking into account the current state of knowledge. The distribution of flood duration can be used to assign a probability (or a range of probabilities) to standard hours clauses (e.g., the 168-h clause) or to adopt region-specific n-hour values corresponding to fixed probabilities. Even though we are aware that introducing new provisions in (re)insurance policies is not straightforward and takes time, our aim is to stimulate debate in the community of researchers and market operators by showing an option to complement n-hour clauses with additional probabilistic information. We also hope that this work will encourage multidisciplinary research involving hydrologists, econometricians, and actuaries, enabling the design of improved financial procedures that better incorporate hydrological information.
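Both uses can be sketched from a fitted NB(r, p): the probability attached to an existing clause, and the shortest whole-day clause whose exceedance probability does not exceed a chosen level. The sketch assumes durations in whole days and uses `scipy.stats.nbinom` with \(n=r\); the function names, the 10% level, and the parameter values in the checks are arbitrary illustrations.

```python
import math

from scipy import stats


def clause_for_probability(r, p, prob=0.1):
    """Shortest whole-day clause, in hours, with P[D > clause] <= prob under NB(r, p)."""
    days = int(stats.nbinom.ppf(1.0 - prob, r, p))  # smallest k with CDF(k) >= 1 - prob
    return 24 * days


def prob_exceeding_clause(r, p, hours=168):
    """P[D > hours / 24] for durations D recorded in whole days."""
    return float(stats.nbinom.sf(math.floor(hours / 24), r, p))
```

For instance, `prob_exceeding_clause(r, p)` attaches a probability to the standard 168-h clause, while `clause_for_probability(r, p, 0.1)` returns a basin-specific n-hour value exceeded by at most 10% of events under the fitted model.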

References

Barredo JI (2007) Major flood disasters in Europe: 1950–2005. Nat Hazards 42(1):125–148

Botzen WJW, Van Den Bergh JCJM (2008) Insurance against climate change and flooding in the Netherlands: present, future, and comparison with other countries. Risk Anal 28(2):413–426

Bouwer LM, Crompton RP, Faust E, Höppe P, Pielke RA (2007) Confronting disaster losses. Science 318(5851):753

Brakenridge GR (2017) Global active archive of large flood events. Dartmouth Flood Observatory, University of Colorado. http://floodobservatory.colorado.edu/Archives/index.html. Accessed 24 May 2017

Bubeck P, Botzen WJW, Aerts JCJH (2012) A review of risk perceptions and other factors that influence flood mitigation behavior. Risk Anal 32(9):1481–1495

Bubeck P, Kreibich H, Penning-Rowsell EC, Botzen WJW, de Moel H, Klijn F (2017) Explaining differences in flood management approaches in Europe and in the USA—A comparative analysis. J Flood Risk Manag 10(4):436–445

Carter R (1983) Reinsurance, 2nd edn. Springer, Dordrecht

Cipra T (2010) Financial and insurance formulas. Physica-Verlag HD, Dordrecht

Cleveland WS, Devlin SJ (1988) Locally-weighted regression: an approach to regression analysis by local fitting. J Am Stat Assoc 83(403):596–610

Courbage C, Orie M, Stahel WR (2012) 2011 Thai floods and insurance. In: Courbage C, Stahel WR (eds) The Geneva reports-risk and insurance research—extreme events and insurance: 2011 annus horribilis, vol 5. The Geneva Association, Geneva, pp 121–132

Dang NM, Babel MS, Luong HT (2011) Evaluation of food risk parameters in the Day River Flood Diversion Area, Red River Delta, Vietnam. Nat Hazards 56(1):169–194

De Guidi G, Vecchio A, Brighenti F, Caputo R, Carnemolla F, Di Pietro A, Lupo M, Maggini M, Marchese S, Messina D, Monaco C, Naso S (2017) Co-seismic displacement on October 26 and 30, 2016 (\(\text{M}_{{\rm W}}\) 5.9 and 6.5) – earthquakes in central Italy from the analysis of discrete GNSS network. Nat Hazards Earth Syst Sci 17:1885–1892

England and Wales High Court (2013) England and Wales High Court (Commercial Court) Decisions: Tokio Marine Europe Insurance Ltd v Novae Corporate Underwriting Ltd [2013] EWHC 3362 (Comm) (06 November 2013). http://www.bailii.org/cgi-bin/markup.cgi?doc=/ew/cases/EWHC/Comm/2013/3362.html

Gale EL, Saunders MA (2013) The 2011 Thailand flood: climate causes and return periods. Weather 68(9):233–237

Glaser R, Riemann D, Schönbein J, Barriendos M, Brázdil R, Bertolin C, Camuffo D, Deutsch M, Dobrovolný P, van Engelen A, Enzi S, Halícková M, Koenig SJ, Kotyza O, Limanówka D, Macková J, Sghedoni M, Martin B, Himmelsbach I (2010) The variability of European floods since AD 1500. Clim Change 101(1–2):235–256

Gvoždíková B, Müller M (2017) Evaluation of extensive floods in western/central Europe. Hydrol Earth Syst Sci 21(7):3715–3725

Hall J, Arheimer B, Borga M, Brázdil R, Claps P, Kiss A, Kjeldsen TR, Kriaučiūnienė J, Kundzewicz ZW, Lang M, Llasat MC, Macdonald N, McIntyre N, Mediero L, Merz B, Merz R, Molnar P, Montanari A, Neuhold C, Parajka J, Perdigão RAP, Plavcová L, Rogger M, Salinas JL, Sauquet E, Schär C, Szolgay J, Viglione A, Blöschl G (2014) Understanding flood regime changes in Europe: a state-of-the-art assessment. Hydrol Earth Syst Sci 18(7):2735–2772

Haraguchi M, Lall U (2015) Flood risks and impacts: a case study of Thailand’s floods in 2011 and research questions for supply chain decision making. Int J Disaster Risk Reduct 14:256–272

Hundecha Y, Parajka J, Viglione A (2017) Flood type classification and assessment of their past changes across Europe. Hydrol Earth Syst Sci Discuss 2017:1–29

Jonkman SN (2005) Global perspectives on loss of human life caused by floods. Nat Hazards 34(2):151–175

Koks EE, Bočkarjova M, de Moel H, Aerts JCJH (2015) Integrated direct and indirect flood risk modeling: development and sensitivity analysis. Risk Anal 35(5):882–900

Komori D, Nakamura S, Kiguchi M, Nishijima A, Yamazaki D, Suzuki S, Kawasaki A, Oki K, Oki T (2012) Characteristics of the 2011 Chao Phraya River flood in Central Thailand. Hydrol Res Lett 6:41–46

Kron W (2005) Flood Risk = Hazard • Values • Vulnerability. Water Int 30(1):58–68

Kundzewicz ZW, Pińskwar I, Brakenridge GR (2013) Large floods in Europe, 1985–2009. Hydrol Sci J 58(1):1–7

Kundzewicz ZW, Pińskwar I, Brakenridge GR (2017) Changes in river flood hazard in Europe: a review. Hydrol Res. https://doi.org/10.2166/nh.2017.016

Lees A (2014) The Thai floods: issues for international insurers and reinsurers. http://www.elexica.com/en/legal-topics/insurance/28-the-thai-floods-issues-for-international-insurers-and-reinsurers. Accessed 05 Sept 2017

Liu AQ, Mooney C, Szeto K, Thériault JM, Kochtubajda B, Stewart RE, Boodoo S, Goodson R, Li Y, Pomeroy J (2016) The June 2013 Alberta Catastrophic Flooding Event: Part 1 climatological aspects and hydrometeorological features. Hydrol Process 30(26):4899–4916

Montanari A (2012) Hydrology of the Po River: looking for changing patterns in river discharge. Hydrol Earth Syst Sci 16(10):3739–3747

Morrill EP, Becker JF (2017) Defining and analyzing the frequency and severity of flood events to improve risk management from a reinsurance standpoint. Hydrol Earth Syst Sci Discuss 2017:1–33. https://doi.org/10.5194/hess-2017-167

Nuissier O, Ducrocq V, Ricard D, Lebeaupin C, Anquetin S (2008) A numerical study of three catastrophic precipitating events over southern France. I: numerical framework and synoptic ingredients. Q J R Meteorol Soc 134(630):111–130

Pińskwar I, Kundzewicz ZW, Peduzzi P, Brakenridge GR, Stahl K, Hannaford J (2012) Changing floods in Europe. In: Kundzewicz ZW (ed) Changes in flood risk in Europe, IAHS Special Publication 10. IAHS Press, Wallingford, UK, and CRC Press/Balkema (Taylor & Francis Group), pp 83–96

Pomeroy JW, Stewart RE, Whitfield PH (2016) The 2013 flood event in the South Saskatchewan and Elk River basins: causes, assessment and damages. Can Water Resour J 41(1–2):105–117

Prettenthaler F, Albrecher H, Asadi P, Köberl J (2017) On flood risk pooling in Europe. Nat Hazards 88(1):1–20

Pucci S, De Martini PM, Civico R, Villani F, Nappi R, Ricci T, Azzaro R, Brunori CA, Caciagli M, Cinti FR, Sapia V, De Ritis R, Mazzarini F, Tarquini S, Gaudiosi G, Nave R, Alessio G, Smedile A, Alfonsi L, Cucci L, Pantosti D (2017) Coseismic ruptures of the 24 August 2016, \(\text{M}_{{\rm W}}6.0\) Amatrice earthquake (central Italy). Geophys Res Lett 44(5):2138–2147

Schreiber T, Schmitz A (1996) Improved surrogate data for nonlinearity tests. Phys Rev Lett 77:635–638

Serinaldi F (2009) Assessing the applicability of fractional order statistics for computing confidence intervals for extreme quantiles. J Hydrol 376(3):528–541

Serinaldi F (2015) Dismissing return periods! Stoch Environ Res Risk Assess 29(4):1179–1189

Serinaldi F, Kilsby CG (2016) Understanding persistence to avoid underestimation of collective flood risk. Water 8(4):152

Serinaldi F, Kilsby CG (2017) A blueprint for full collective flood risk estimation: demonstration for European river flooding. Risk Anal 37(10):1958–1976

Speight LJ, Hall JW, Kilsby CG (2017) A multi-scale framework for flood risk analysis at spatially distributed locations. J Flood Risk Manag 10(1):124–137

Takahashi HG, Fujinami H, Yasunari T, Matsumoto J, Baimoung S (2015) Role of tropical cyclones along the monsoon trough in the 2011 Thai flood and interannual variability. J Clim 28(4):1465–1476

Teufel B, Diro GT, Whan K, Milrad SM, Jeong DI, Ganji A, Huziy O, Winger K, Gyakum JR, de Elia R, Zwiers FW, Sushama L (2017) Investigation of the 2013 Alberta flood from weather and climate perspectives. Clim Dyn 48(9):2881–2899

Thieken AH, Müller M, Kreibich H, Merz B (2005) Flood damage and influencing factors: new insights from the August 2002 flood in Germany. Water Resour Res 41(12):W12430

Thornes JE (1991) Applied climatology: severe weather and the insurance industry. Prog Phys Geogr 15(2):173–181

Uhlemann S, Thieken AH, Merz B (2010) A consistent set of trans-basin floods in Germany between 1952–2002. Hydrol Earth Syst Sci 14(7):1277–1295

UNISDR-CRED (2015) The human cost of natural disasters: a global perspective. https://www.emdat.be/human_cost_natdis. Accessed 20 Aug 2017

Ward P, Kummu M, Lall U (2016) Flood frequencies and durations and their response to El Niño Southern Oscillation: global analysis. J Hydrol 539:358–378

Zhou Q, Lambert JH, Karvetski CW, Keisler JM, Linkov I (2012) Flood protection diversification to reduce probabilities of extreme losses. Risk Anal 32(11):1873–1887

Ziegler AD, She LH, Tantasarin C, Jachowski NR, Wasson R (2012) Floods, false hope, and the future. Hydrol Process 26(11):1748–1750

Acknowledgements

This work was supported by the Engineering and Physical Sciences Research Council (EPSRC) Grant EP/K013513/1 ‘Flood MEMORY: Multi-Event Modelling Of Risk & recoverY’ and the Willis Research Network. The authors acknowledge the Global Runoff Data Centre (GRDC; Federal Institute of Hydrology (BfG), Koblenz, Germany) for providing the daily river flow data, and Prof. G. Robert Brakenridge and his collaborators for making the Dartmouth Flood Observatory archive publicly available at http://floodobservatory.colorado.edu/. The authors wish to thank Prof. András Bárdossy (Universität Stuttgart and Newcastle University) for fruitful discussions about methods and early results, and two anonymous reviewers for their remarks.

Additional information

Disclaimer: Any views and opinions expressed in this article are those of the authors and do not necessarily reflect the scientific view or position of AIR Worldwide.

Appendix: Negative binomial distribution

The negative binomial (NB) probability mass function used in this study is defined as:

\[ {\mathbb {P}}[D=d] = \frac{\Gamma (d+r)}{\Gamma (r)\, d!}\, p^{r} (1-p)^{d}, \]

where \(d \in \{0,1,2,...\}\), \(r>0\), \(\mu =r(1-p)/p\) is the mean of the NB distribution, and \(p \in (0,1]\).
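The NB pmf with mean \(r(1-p)/p\) is \(\Gamma(d+r)\,p^{r}(1-p)^{d}/(\Gamma(r)\,d!)\), which coincides with SciPy's `scipy.stats.nbinom` when its shape argument `n` is set to \(r\). The sketch below evaluates this expression on the log scale and checks it against SciPy and against the stated mean; the function name `nb_pmf` is hypothetical.

```python
import numpy as np
from scipy import stats
from scipy.special import gammaln


def nb_pmf(d, r, p):
    """Gamma(d + r) / (Gamma(r) d!) * p**r * (1 - p)**d, computed on the log scale (0 < p < 1)."""
    d = np.asarray(d, float)
    log_pmf = (gammaln(d + r) - gammaln(r) - gammaln(d + 1.0)
               + r * np.log(p) + d * np.log1p(-p))
    return np.exp(log_pmf)


d = np.arange(500)
pmf = nb_pmf(d, 2.5, 0.3)
assert np.allclose(pmf, stats.nbinom.pmf(d, 2.5, 0.3))  # same parameterization as SciPy (n = r)
mean = float(np.sum(d * pmf))                             # close to r(1 - p)/p = 5.8333...
```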

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Serinaldi, F., Loecker, F., Kilsby, C.G. et al. Flood propagation and duration in large river basins: a data-driven analysis for reinsurance purposes. Nat Hazards 94, 71–92 (2018). https://doi.org/10.1007/s11069-018-3374-0

DOI: https://doi.org/10.1007/s11069-018-3374-0