Abstract

Physical paper, in its various forms (e.g., books, leaflets, catalogues), is extensively used in everyday activities, despite advancements in digital technology and ICT. Inspired by the popularity of this medium, several research efforts have envisioned and pursued a new era of interactive paper; however, several challenges still remain to be addressed. On the other hand, recent advancements towards Ambient Intelligence (AmI) and smart environments bear the promise of seamlessly integrating the physical and digital worlds in an intuitive and user-friendly manner. This paper presents InPrinted, a systematic and generic framework supporting physical paper augmentation and user interaction in Ambient Intelligence environments. InPrinted enables natural multimodal user interaction with any kind of printed matter in smart environments, providing context-awareness and anticipation mechanisms, as well as tools and interaction techniques that support the development of applications incorporating printed matter augmentation. InPrinted has been put into practice in the development of various systems, including the Interactive Maps system, which facilitates interaction with printed maps as well as their augmentation with digital information. The results of an in-situ observation experiment of the Interactive Maps system are reported, highlighting that interacting with augmented paper is quite easy and natural, and that the overall User Experience is positive.


1 Introduction

Physical paper has indisputably been a fundamental means of information sharing among people throughout the centuries. In the last decade, due to the radical evolution of technology, and in particular of portable devices, the idea of a paperless world started to seem very appealing. However, as Sellen and Harper discuss [48], this idea appears to be more a myth than a tangible reality. At the same time, new practices of everyday activities related to physical paper (e.g., reading), which are tightly interlinked with technology [31], are emerging. For instance, a survey of over 1000 U.S. and 500 U.K. consumers asking their opinions on a variety of issues surrounding paper-based vs. digital media [7] indicated that a sizeable majority (88%) of respondents understand, retain and use information better when they read print on paper, while 81% of respondents preferred to read print on paper when given the choice.

As technology advanced, combining the physical and the digital worlds while potentially retaining the benefits of both became an appealing idea, giving rise to many research efforts in this direction from the last decade of the previous century onwards. Since then, numerous approaches have been proposed, aiming to benefit from users’ familiarity with paper and from the natural interaction fostered by paper’s affordances and fundamental properties: it is inexpensive, lightweight and can be easily found anywhere.

On the other hand, the rise of smart environments and ubiquitous computing provides the opportunity to create new interaction paradigms in the digital world that resemble those used in everyday activities of the physical world. Furthermore, the pervasive access to digital information offered by approaches such as Ambient Technology increases the potential of engaging everyday objects, like printed matter, in digital interaction. However, there is still a lack of generic and systematic approaches supporting the augmentation of, and interaction with, printed matter in the context of intelligent environments.

This paper presents the InPrinted framework, a generic framework supporting printed matter augmentation and user interaction within Ambient Intelligence (AmI) environments. The framework aims to provide a stepping stone for bridging the use of physical paper with the state-of-the-art technologies that characterize AmI environments. The InPrinted framework has already been employed in the development of various systems [24,25,26,27, 29, 30, 41], while several evaluation iterations have been conducted to assess the related user experience, focusing on interaction with digitally augmented paper. As a use case illustrating how the framework has been used, this paper presents Interactive Maps, a system implemented with the InPrinted framework. Interactive Maps is a smart tabletop system that enables interaction with printed maps in order to acquire useful digital tourist information related to points of interest. Digital information is presented either on top of or next to the printed map. At the same time, users can still annotate the printed map and take it with them afterwards, so the basic use of the map remains unaffected. The paper also presents the results of an in-situ observation experiment carried out to assess the ease of use of Interactive Maps, the naturalness of interaction with physical paper, and the overall user experience.

The paper is structured as follows: Section 2 discusses related work, focusing on natural interaction with digitally augmented printed matter and on printed matter augmentation, and concludes by discussing how interactive printed matter can be embedded in AmI environments and how this work contributes to this end. Section 3 presents the developed ontology meta-model, which enables natural interaction with printed matter in AmI environments. Section 4 introduces the InPrinted framework and its components. Section 5 summarizes the results of the various evaluation iterations, introduces the Interactive Maps application, and presents its in-situ evaluation. Section 6 discusses the lessons learned from the evaluations carried out, focusing on the in-situ evaluation results. Finally, Section 7 concludes the paper and discusses directions for future work.

2 The era of interactive paper

The first efforts towards digitally augmenting physical paper date back to the early 1990s, when DigitalDesk [56] and its successor EnhancedDesk [17] demonstrated the technological augmentation of physical paper, offering interaction via touch. However, the term “interactive paper” was first coined in 1999 by Mackay and Fayard [23], who highlighted the potential role of digitally augmented paper in forthcoming technologies.

Since then, more sophisticated Augmented Reality (AR) solutions have been proposed, exploiting the means offered by immersive environments and high-quality 3D graphics. For example, MagicBook [4] augments physical books with 3D graphics and moving avatars viewed through Virtual Reality (VR) glasses, giving the reader the sense of living pages. The basic interaction technique in such environments is touch. Pointing and writing in augmented reality environments have also been studied, but the majority of research work relies on proprietary technological artefacts such as light pens, pens with pads and haptic devices [11, 47].

The availability of new digital pens capable of capturing marks made on paper documents has led to the development of various systems that exploit user annotations on physical paper to assist the reading process. For example, the Anoto system [1] combines a unique pattern printed on each page with a digital pen to capture strokes made on paper. PapierCraft [20], on the other hand, uses pen gestures on paper to support active reading and allows users to carry out a number of actions, such as copying and pasting information from one document to another. Finally, the Paper++ system [22] uses documents overprinted with a non-obtrusive pattern that uniquely encodes the x-y (and page) location on the document; this code can then be interpreted by a specialized pen when it comes into contact with the paper. Such approaches require specialized devices (such as digital pens). To achieve context-awareness, systems such as Paper++ also have to employ specialized paper in addition to the digital pen.

2.1 Natural interaction with digitally augmented printed matter

Users’ preference for paper, due to its affordances but also to its simplicity and naturalness when annotating and writing on it, has inspired research efforts pursuing natural interaction with digitally augmented paper. A first-level categorization of natural interaction with printed matter can be based on whether the user interacts with his/her bare hands or with other objects (e.g., a stylus). Five main types of finger-based interaction with printed matter have been defined [58]:

-

Interactions based on action time: if the user pauses his/her finger on a specific point of the paper for a short period of time, this maps to a GUI click (select), while a longer pause indicates a double click (open/play).

-

Interactions based on hand shapes: this category refers to hand postures that are mapped to specific actions (e.g., hand fist corresponds to stop or abort, a “V” sign stands for zooming).

-

Interactions based on drawing: virtually drawing simple shapes with a finger (e.g., a triangle might restore an annotation to its initial state, while left and right arrows signify a transition from the current state forward or backwards, respectively).

-

Interactions based on movement: moving the hand in a specific posture corresponds to a specific action (e.g., moving the hand upwards in the “V” sign zooms in on the whole view).

-

Interactions involving two hands: for example, a pinch gesture performed with the two index fingers resizes an annotation projected near the printed matter.
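The action-time category above amounts to thresholding how long a fingertip stays still on the page. The following minimal sketch assumes that a finger tracker already reports the length of each pause; the names `DwellAction` and `classify_dwell` and both threshold values are hypothetical illustrations, not values defined in [58].

```python
from enum import Enum


class DwellAction(Enum):
    NONE = "none"      # pause too short to count as a command
    SELECT = "select"  # short pause: equivalent to a GUI single click
    OPEN = "open"      # longer pause: equivalent to a GUI double click (open/play)


# Hypothetical thresholds in seconds; they are not taken from [58].
SHORT_PAUSE_S = 0.5
LONG_PAUSE_S = 1.5


def classify_dwell(pause_s: float,
                   short_pause_s: float = SHORT_PAUSE_S,
                   long_pause_s: float = LONG_PAUSE_S) -> DwellAction:
    """Map how long a fingertip rests on one point of the page to an action."""
    if pause_s < 0:
        raise ValueError("pause duration must be non-negative")
    if pause_s >= long_pause_s:
        return DwellAction.OPEN
    if pause_s >= short_pause_s:
        return DwellAction.SELECT
    return DwellAction.NONE
```

For example, a 0.8 s pause yields `DwellAction.SELECT` and a 2 s pause yields `DwellAction.OPEN`. In practice the thresholds would have to be tuned per user group, which is why they are exposed as parameters.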

Holman et al. [15] introduce interaction styles suitable for digitally augmented paper in the 3D space. They define eight interaction styles based on the natural manipulation of paper:

-

Hold: users can hold a paper with both hands and raise it in the air, signifying to the system that this is the currently active document.

-

Collocate: this gesture signals the system to prioritize the paper that the user holds over others that co-exist in the same space.

-

Collate: when the user collates papers into stacks to organize them in piles, the system assigns a separate group to each pile, in order to avoid cluttering.

-

Flip: if the active paper is flipped by the user, then the system changes the content of the paper to the next or previous page.

-

Rub: this gesture is used to transfer content between two papers or between a paper and a computing peripheral.

-

Staple: analogous to physically stapling two papers, this gesture is used to link two different viewports into the same document.

-

Point: pointing at a specific area of the paper and then raising the hand is interpreted by the system as a single click. If the user performs this gesture twice in quick succession, it is considered a double click.

-

Two-handed Pointing: with this gesture the user makes disjoint selections of items on a single paper or across multiple papers.
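The Point style distinguishes single from double clicks purely by timing. The sketch below is a simplified, offline illustration: it assumes that each point-and-raise event on the same area has already been timestamped, and it uses a hypothetical 0.4 s window. A live system would also have to wait for the window to expire before committing a single click.

```python
from typing import List

# Hypothetical maximum gap (seconds) between two points that form a double click.
DOUBLE_CLICK_WINDOW_S = 0.4


def classify_points(timestamps: List[float],
                    window_s: float = DOUBLE_CLICK_WINDOW_S) -> List[str]:
    """Group point-and-raise events on one area into single or double clicks."""
    events: List[str] = []
    ts = sorted(timestamps)
    i = 0
    while i < len(ts):
        # Two points close enough in time are merged into one double click.
        if i + 1 < len(ts) and ts[i + 1] - ts[i] <= window_s:
            events.append("double_click")
            i += 2
        else:
            events.append("single_click")
            i += 1
    return events
```

With this rule, points at 0.0 s, 0.3 s and 2.0 s are reported as one double click followed by one single click.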

On the other hand, the use of a stylus or other types of pointers for printed matter interaction supports a reduced set of gestures, mainly based on the handwriting techniques that humans use to annotate printed matter. In [20], five fundamental types of scope selection are described, inspired by typical marks found on manuscripts, namely underline, margin bar, lasso, crop marks and stitching marks (across two documents). Stylus-based interaction also supports a basic capability that hand-based interaction cannot adequately substitute, namely handwriting. According to [54], styli should be used for writing and fingers for drawing, since the survey reported there found that writing with a stylus outperformed writing with a finger in terms of legibility and usability.

2.2 Printed matter augmentation

According to Milgram and Kishino’s “Virtuality Continuum” [36], printed matter augmentation in AmI environments can be considered closer to AR than to Augmented Virtuality (AV), for two fundamental reasons: a) printed matter constitutes a basic means of interaction, so it cannot be replaced by any virtual alternative, and b) the philosophy of AmI is to technologically enhance the real world, not to substitute it with virtual environments.

To this end, various AR approaches emerged for printed matter augmentation. A common way for creating AR environments over printed matter is through the use of portable devices (PDAs, smart phones, tablets, etc.). For example, the gesture-based PACER system [21] supports fine-grained paper document content manipulation through a smartphone with a touch screen and camera. Users can augment a printed document through the smartphone’s screen in order to perform actions such as keyword search, browse street view along a route, sweep on a music score to play the corresponding song, copy and email part of the document, etc. PaperPoint [49] enables PowerPoint presentations to be controlled from printed slide handouts on handheld devices through stylus based interaction.

Other approaches are based on Head-Mounted Displays (HMDs), which offer greater immersion but depend on expensive and cumbersome equipment. MagicBook [4] is such a pioneering work, using a physical book as the main means of interaction. Users of MagicBook look at the book pages through an augmented reality display (HMD) that shows 3D virtual models appearing out of the pages. The models appear attached to the real page, so users can see the augmented reality scene from any perspective by moving themselves or the book. A similar approach is discussed in [57], where five educational AR exhibits based on printed matter containing special markers are presented. The exhibits provide digital content on top of the printed matter using ARToolKit [2], while users need to wear HMDs to view the augmented content.

A second category of augmentation projects information onto the printed matter. Such an approach was first introduced by Wellner [56], employing a projector and a camera positioned above the printed matter. While the user interacts, digital content is projected either on top of or next to the printed matter. The projected content consists of 2D multimedia information or mini interactive applications (e.g., calculator, map view, slide show, etc.). Live Paper [44] transforms paper sheets into I/O devices and provides audio and video augmentation, including collaboration with remote users, through video writing and projected annotations. A later approach to augmenting printed books is discussed in [8], where the concept of Projective Augmented Books is proposed. The described system consists of a mobile device with an embedded pico-projector and a digital pen. The mobile device with the pico-projector is used as a reading lamp to project dynamic content onto a book printed on digital paper based on Anoto technology [1]. A digital pen and gestures are used for interacting with the printed book. An even more portable system is PenLight [52], which comprises a mobile projector mounted on a digital pen similar to that used in the Live Paper system. In PenLight, the projected digital content depends on the position of the digital pen, allowing users to visually correlate information stored inside the pen, or on any connected resource, with the document.

A third category of printed matter augmentation includes approaches that use a companion display to provide the augmented content to the users. For example, Bridging Book [10] is a mixed-media system that combines a physical book containing magnets with a touchscreen tablet that incorporates a built-in digital compass. The synchronization between the thumbing of the physical book and the digital content is achieved by placing magnets on the book pages that change the magnetic field strength detected by the digital compass sensor. Each page of the physical book has a corresponding digital set, which includes short animations and interactive elements, context and feedback sounds. Another example is the system discussed in [18], which offers physical interaction through printed cards on a tabletop setup, where a simple webcam monitors the table’s surface and identifies the cards thrown onto it. The system consists of two mini games based on the printed cards shown on the table: a multiple-choice quiz and a geography-related game.

A prominent category of augmenting printed matter in real-life environments is that of projecting visual content onto convenient surfaces in the environment, such as the walls or the floor of a room [16, 42]. In such systems, virtual content is anchored in the real world so that it blends with the physical environment. In this context, tracking is an important issue for an effective AR experience, so that the viewer’s position can be properly reflected in the presentation of the virtual content. Several tracking techniques exist; however, the most widely used approaches currently rely on computer vision and can provide marker-based and markerless tracking at real-time frame rates [5]. Approaches employing depth cameras in the context of tabletop AR include ARTable [19], a prototype virtual table for playing trading card games, MirageTable [3], a curved projection-based AR system that digitizes any object on its surface, and Tangible Tiles [55], which uses optically tracked transparent plexiglass tiles for interaction and display of projected imagery on a table or whiteboard.

In summary, although several interactive printed matter solutions have been developed, a number of challenges remain to be addressed [50] in terms of device independence, digital ink abstraction, application deployment, visual encoding, interaction design, as well as authoring and publishing. These aspects are expected to be the focus of future efforts as the idea of interactive printed matter continues to evolve.

2.3 Integrating interactive documents in AmI environments

The emergence of smart environments, ubiquitous computing, the Internet of Things and, mainly, the notion of Ambient Intelligence now provides opportunities to better integrate interactive printed matter into everyday living. Employing interactive printed matter systems and approaches in AmI environments, while retaining a very common and necessary human practice, namely using physical paper in various daily activities, presents significant potential. However, there is currently a lack of approaches that merge these two aspects in an integrated and holistic manner. Figure 1 depicts a conceptual roadmap of the evolution of printed matter from a basic means of static information towards interactive and context-aware information provision in technologically intelligent environments. The proposed framework constitutes the missing link in this evolutionary chain, introducing a generic approach that will seamlessly bridge current printed matter augmentation and interactivity with AmI environments.

Such a systematic approach is discussed in this paper, which elaborates on the development of an extensible, context-aware interaction framework that enables the integration of printed matter into AmI environments. This framework, named InPrinted, includes all the components necessary to support AmI applications that use printed matter interaction throughout their implementation and deployment lifecycle. The framework has been implemented according to a service-oriented architecture (SoA) that supports its easy integration and deployment into existing AmI environments. Additionally, an ontology-based model of printed matter interaction in AmI environments has been defined, which can be easily extended to incorporate new means of information acquisition, processing and visualization that may emerge in the future, thus making the framework fully extensible.

In summary, the InPrinted framework provides:

-

an open architecture, enabling the integration of new types of technologies for information acquisition and provision,

-

independence from the technologies used to develop applications,

-

an extensible ontology-based reference model for printed matter, context-awareness and anticipation mechanisms,

-

implementation of printed matter augmentation mechanisms in the environment,

-

support for multimodal natural interaction with printed matter in AmI environments.

An important contribution of this work is a sustainable and robust mechanism that can be easily integrated into any AmI environment and supports natural interaction with, and digital augmentation of, printed matter. Furthermore, the proposed framework constitutes a stepping stone towards the efficient development of applications that incorporate physical interaction with printed matter in AmI environments, by implementing fundamental mechanisms such as global interaction handling, reasoning and printed matter augmentation. These mechanisms are integrated into the basic AmI infrastructure and can be used as services of that infrastructure. Moreover, ready-to-use printed matter augmentation components and semantic content analysis tools have also been developed, thus fostering the easy creation of AmI applications.

2.4 A scenario-based preamble

Following the scenario-based design approach [46], three illustrative scenarios are presented below, highlighting users’ natural interaction with printed matter and printed matter augmentation, as modeled by the InPrinted framework and deployed in the applications that use it.

2.4.1 Maria @ school

Today, Maria, a high-school literature teacher, is going to use the smart augmented desk of her school to discuss with her students a poem by Constantine P. Kavafis, titled Ithaca. The desk supports teaching through physical book recognition, handwriting recognition and information augmentation. Maria gathers her students around the table and starts reading the poem, holding the book in her hands: “As you set out for Ithaca hope that your journey is a long one, full of adventure, full of discovery. Laestrygonians and Cyclops, angry Poseidon-don’t be afraid of them …”. Then she places the open book on the table, and immediately the room lights dim and the ceiling-mounted projector casts light on the table. To initiate a discussion with her students, Maria circles the word Ithaca with her pencil, asking: “Do you know where Ithaca is?”. A map of Greece is presented next to the book. Maria encourages the students to explore the map via touch and identify the exact location of the island. Once the class becomes more familiar with the island and its relationship with Greek mythology, Maria asks: “OK, that’s Ithaca, but what about the Laestrygonians and the Cyclops?”. Nobody seems to know, so Maria underlines the words “Laestrygonians” and “Cyclops” on the book page with her pencil. An encyclopaedia article for each word is displayed on the desk, and illustrations are overlaid on the book as an image slide show.

2.4.2 Kristen on the go

It is Thursday afternoon and Kristen is heading to the Community Centre for the elderly for her weekly bridge game. At the subway station, she is handed a leaflet about a book reading with John the giraffe, her grandson’s favourite character. The reading is scheduled for the next day, but she does not know the library’s address. She approaches the “Smart documents table” located at the station, where citizens can use printed documents to receive digital information such as location details. The table features a projector and can be operated through touch and gestures on its surface. Kristen places the leaflet on the table and the system offers a set of relevant options (find route, add event to calendar, etc.). She selects the first option and the system displays a digital map showing proposed routes from the current subway station to the library. Kristen selects her preferred route and touches the print icon in the top right corner of the map; she receives her printout and continues on her way to the Community Centre.

2.4.3 Peter @ the library

Peter is visiting the library with his grandmother Kristen to attend the book reading event. Peter is very excited about books these days, since he has just learned how to read. He and Kristen find a book that aims to support children in their first reading activities. The book contents are organized around phonemes. For instance, the first chapter, “The cat on the mat”, introduces the vowel sound “a” and the “at” combination. Each book page features an image accompanied by a short text passage, including words and rhymes that use the specific phonemes. What’s more, the book is supported by the library’s smart table and is accompanied by printed cards for interactive educational games. Peter places the book on the table and the system welcomes him and plays an introductory video presenting its capabilities. Following the video instructions, Peter opens the book and points with his finger at the text. The system starts reading aloud: “The fat cat sat on the mat”, while overlaying on the book the image of a cat sitting on a mat. A few minutes later, Peter finishes the first chapter and a suite of card games is initiated to enhance his comprehension. The first game displays words on a nearby screen and asks Peter to throw onto the table a card depicting the indicated word. The next game involves comprehension questions that can be answered by selecting the appropriate cards (e.g., “What animal sat on the mat first?”). Finally, a spelling game asks Peter to spell specific words by placing letter cards on the table in the correct order. With Kristen’s help, Peter successfully spells all the words.

The scenarios above highlight several aspects of how printed matter can remain a fundamental component of everyday activities, while illustrating how it can be augmented with AmI technologies. In order to classify and define the relationships of human interplay with such technologies in the context of using printed matter, an ontology-based model has been defined and incorporated in the InPrinted framework, as presented in the next section.

3 The InPrinted model

As already discussed in the previous section, the InPrinted framework provides the necessary tools and software infrastructure for developers to easily integrate their systems into AmI environments. A basic concern in this respect is supporting context-awareness activities, such as acquiring context from various sources (e.g., sensors, databases and agents), interpreting context, disseminating context to interested parties in a distributed and timely manner, and providing programming models for constructing context-aware services through an appropriate infrastructure [14]. To this end, ontologies are a key requirement for building context-aware systems, for the following reasons [6]: (i) a common ontology enables knowledge sharing in open and dynamic distributed systems, (ii) ontologies with well-defined declarative semantics provide a means for intelligent agents to reason about contextual information, and (iii) explicitly represented ontologies allow devices and agents that were not expressly designed to work together to interoperate, achieving “serendipitous interoperability”. Based on the above, a new ontology meta-model scheme has been designed.
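The acquisition, interpretation and dissemination activities listed above are often realized with a publish/subscribe pattern. The sketch below is a simplified, in-process stand-in for such a mechanism; it is not the InPrinted implementation, and the class names, the `interpret` rule and the topic string `printed_matter.detected` are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, DefaultDict, List


@dataclass(frozen=True)
class ContextEvent:
    topic: str     # hypothetical topic name, e.g. "printed_matter.detected"
    source: str    # where the context was acquired: a sensor, database or agent
    payload: dict


Handler = Callable[[ContextEvent], None]


def interpret(raw: dict) -> ContextEvent:
    """Hypothetical interpretation rule: a raw camera detection becomes a context event."""
    return ContextEvent("printed_matter.detected", raw["sensor"], {"document_id": raw["id"]})


class ContextBroker:
    """In-process stand-in for distributed context dissemination."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        """Register an interested party for one topic."""
        self._subscribers[topic].append(handler)

    def publish(self, event: ContextEvent) -> int:
        """Deliver the event to every subscriber; return how many received it."""
        handlers = self._subscribers.get(event.topic, [])
        for handler in handlers:
            handler(event)
        return len(handlers)
```

A deployed AmI middleware would distribute events across processes and machines. The in-process broker only shows how decoupling producers from consumers lets new context consumers be added without modifying the sensors.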

To keep the framework’s approach generic, physical paper is considered a subclass of printed matter. It should be noted that blank white paper does not provide any content, and therefore it can only be incorporated once something is written, projected or printed on it.
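The subclass relationship and the blank-paper rule can be expressed compactly in code. In the following sketch, a plain Python class hierarchy stands in for the ontology classes, and the names `PrintedMatter`, `PhysicalPaper` and `can_be_incorporated` are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PrintedMatter:
    identifier: str
    # Anything written, projected or printed on the object.
    content: List[str] = field(default_factory=list)

    def can_be_incorporated(self) -> bool:
        # Recognition relies on content, so a blank sheet cannot be identified yet.
        return len(self.content) > 0


@dataclass
class PhysicalPaper(PrintedMatter):
    """Physical paper modelled as a subclass of printed matter."""
```

A freshly created `PhysicalPaper("sheet-1")` reports `False` until some content, such as a handwritten note, is added.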

An ontology-based model of printed matter interaction in smart environments has been defined, which can readily incorporate new means of information acquisition, processing and visualization that may emerge in the future, thus making it fully extensible. An earlier version of the InPrinted framework’s ontology meta-model is discussed in [28], describing its basic entities and their inter-relationships. Figure 2 illustrates an updated version of the InPrinted model, while the next sections discuss in detail the role and interdependencies of each basic entity of the model.

3.1 Printed matter

Printed matter, in the context of the presented approach, is considered to be any physical object that carries the affordances of physical paper and can be uniquely recognized based on what has been printed, written or projected on it. To represent printed matter, the framework’s scheme uses the “printed matter” and “printed matter content” entities, which are connected through the “has content” relationship. Furthermore, the “printed matter” entity is directly related to “printed matter augmentation”, so that the InPrinted framework can determine the types of digital augmentation supported by a specific printed matter item. Finally, the relation between “printed matter” and the AmI “system” is also defined, indicating the system (among the available ones) in which a particular printed matter item is used.

The content of each printed matter item is represented by the “printed matter content” entity. For the purposes of the InPrinted framework, this entity captures two dimensions of printed matter content: (a) the content itself along with its structure and (b) the type of interaction that can be applied to any part of this content. To capture the structure of the content that a printed matter item may contain, the Bibliographic Ontology [53] has been incorporated into the framework’s ontology as a sibling of the “printed matter content” entity. The Bibliographic Ontology describes bibliographic entities and can be used as a citation ontology, as a document classification ontology, or simply as a way to describe any kind of document. To define the interactive parts of printed matter content, the “hasInteractiveArea” relationship has been defined between the “printed matter content” and “printed matter interactive area” entities.
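The two relationships named above can be traversed as a small graph of subject-predicate-object triples. The sketch below uses plain Python tuples to stay self-contained, whereas a real deployment would more likely rely on an RDF/OWL toolkit. The relationship names come from the text, but the individuals (`map_athens`, `area_acropolis`, …) and the helper functions are hypothetical.

```python
from typing import Set, Tuple

Triple = Tuple[str, str, str]

# Relationship names follow the text; the individuals are hypothetical.
graph: Set[Triple] = {
    ("map_athens", "has content", "map_athens_content"),
    ("map_athens_content", "hasInteractiveArea", "area_acropolis"),
    ("map_athens_content", "hasInteractiveArea", "area_museum"),
}


def objects(g: Set[Triple], subject: str, predicate: str) -> Set[str]:
    """Return every object linked to `subject` through `predicate`."""
    return {o for s, p, o in g if s == subject and p == predicate}


def interactive_areas(g: Set[Triple], printed_matter: str) -> Set[str]:
    """Follow 'has content' and then 'hasInteractiveArea' from a printed matter item."""
    areas: Set[str] = set()
    for content in objects(g, printed_matter, "has content"):
        areas |= objects(g, content, "hasInteractiveArea")
    return areas
```

Here, `interactive_areas(graph, "map_athens")` returns both map areas, while an unknown item yields an empty set.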

3.2 Interaction

User interaction with printed matter can be classified into three basic categories: (a) basic, (b) gestures and (c) combined. These types of interaction, as well as their instances, have been modelled in the InPrinted framework’s scheme under the “printed matter interaction” entity. The instances of each category are illustrated in Fig. 3.

Any interaction with printed matter in an AmI environment is triggered by a specific user, either explicitly or implicitly. This notion is captured in the ontology via the “interacts using” relationship, defined between the “user” and “printed matter interaction” entities. Additionally, the “interacts with” relationship between the “printed matter interaction” and “printed matter interactive area” entities captures a user’s interaction with a specific printed matter item. Once the relationships between the user, the printed matter and the interaction performed have been defined, the type of interaction and the interactive parts of the printed matter are defined at a second level. In particular, each printed matter content can comprise one or more “printed matter interactive areas” through the “has” relationship between the “printed matter content” and “printed matter interactive area” entities. Printed matter interactive areas are categorized into four basic types:

a) illustration: refers to any type of printed illustration (e.g., photo, image, chart, drawing, etc.),

b) text: includes any textual information that can appear in a printed document, such as the document body, captions, references, etc.,

c) input field: refers to any part of printed matter content that requires user input (e.g., handwritten or typed text, a stylus gesture, drawing, etc.),

d) math equation: includes anything related to a mathematical expression.

Whenever a “printed matter interactive area” is engaged, the supported “printed matter actions” are automatically triggered. This connection between the two entities is described by the “supportsAction” relationship.
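The relationships above can be illustrated with a minimal Python sketch that models a printed matter content, its interactive areas and the “supportsAction” link as plain data classes. This is not the framework’s ontology, which is expressed in OWL/RDF; the class names, identifiers and action names are hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum


class AreaType(Enum):
    # The four basic interactive-area types of the model.
    ILLUSTRATION = "illustration"
    TEXT = "text"
    INPUT_FIELD = "input field"
    MATH_EQUATION = "math equation"


@dataclass
class InteractiveArea:
    area_id: str
    area_type: AreaType
    # "supportsAction": names of the (hypothetical) actions this area supports.
    supports_action: list = field(default_factory=list)


@dataclass
class PrintedMatterContent:
    content_id: str
    # "hasInteractiveArea": the interactive parts of this content.
    has_interactive_area: list = field(default_factory=list)

    def engage(self, area_id):
        """Return the actions triggered when the given area is engaged."""
        for area in self.has_interactive_area:
            if area.area_id == area_id:
                return list(area.supports_action)
        return []  # unknown area: nothing is triggered
```

For example, engaging a text area of a page that supports a hypothetical `define_word` action returns `["define_word"]`.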

3.3 Printed matter augmentation

The term augmentation, in the context of the present work, refers to the visualization of any digital information provided by the InPrinted framework as feedback to a user’s implicit or explicit interaction. Figure 4 illustrates the printed matter augmentation model, which is classified into two main categories: (a) intrinsic augmentation and (b) extrinsic augmentation. Intrinsic augmentations include all the visualization techniques that can be rendered directly on the printed matter or on a digital representation of it, whereas any visualization rendered externally to the corresponding printed matter is considered extrinsic. This classification is an important cue for the Context awareness manager when deciding, during the reasoning process, which AmI systems will eventually render the augmentation.

The instances of the intrinsic and extrinsic augmentation entities in Fig. 4 are the implemented augmentations that the InPrinted framework already supports through the UI toolkit described in section 4.6.

When a printed matter incorporated in the AmI environment participates in a user’s interaction, it is associated with the system that provides the interaction through the “operates with” relationship between the “printed matter” and “system” entities of the InPrinted framework’s ontology meta-model. Each AmI system can provide a number of augmentations; this is declared in the ontology meta-model via the “incorporates” relationship between the “system” and “printed matter augmentation” entities. A “printed matter augmentation” is triggered when a corresponding “printed matter action” is engaged, as defined by the “provides” relationship between these two entities.
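As an illustration of how these three relationships combine, the sketch below pairs every augmentation that an action “provides” with each system, among those the printed matter “operates with”, that “incorporates” it, while keeping the intrinsic/extrinsic classification. It is a simplified sketch under assumed names (`candidate_renderings`, the system and augmentation identifiers are hypothetical), not the Context awareness manager’s actual reasoning.

```python
from dataclasses import dataclass
from enum import Enum


class Kind(Enum):
    INTRINSIC = "intrinsic"   # rendered on the printed matter or its digital copy
    EXTRINSIC = "extrinsic"   # rendered outside the printed matter


@dataclass(frozen=True)
class Augmentation:
    name: str
    kind: Kind


@dataclass
class System:
    name: str
    incorporates: frozenset  # augmentations this AmI system can render


def candidate_renderings(action_provides, operates_with):
    """List (augmentation, kind, system) triples that could render an action.

    action_provides: augmentations linked to the action via "provides".
    operates_with: systems linked to the printed matter via "operates with".
    """
    pairs = []
    for aug in action_provides:
        for system in operates_with:
            if aug in system.incorporates:  # "incorporates" relationship
                pairs.append((aug.name, aug.kind.value, system.name))
    return pairs
```

The reasoner would then filter these candidates further; the sketch only enumerates what the ontology links make possible.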

3.4 User model

A user profile is a set of properties that uniquely identify a user and specify their state, capabilities and preferences. For the purposes of the presented work, a generic user profile model has been defined, aiming to cover a wide range of user categories. The description of the user is crucial for the proposed approach, since it helps the Context awareness manager target specific, or even customized, services or assistance to the user. The InPrinted framework user model is based on the user profile ontology introduced in [13], which includes user characteristics such as identity, reading preferences and preferred interaction modalities. This model has been further extended to provide additional information regarding users’ interaction with printed matter.

3.5 System and environment

Figure 5 illustrates the system and environment model of the InPrinted framework. The “system” entity represents any AmI system that has been developed based on the InPrinted framework and deployed in the corresponding AmI environment. Each system provides a set of capabilities, such as the supported printed matter interactions and augmentations, and exposes the set of “devices” that it integrates. A “device” entity refers to any existing device, agent or service that has been installed in the AmI ecosystem and can be used by any “system”. The framework’s devices are classified into two categories:

a) input devices: any type of service that acts as a sensor in the environment and provides primitive information regarding the presence of a user and their interaction with the system;

b) output devices: the environment “devices” that act as actuators and provide printed matter augmentations.

The environment model is based on the ontology defined in [43]. The “environment” entity provides a number of “environmental conditions”, such as humidity, lighting, noise, pressure and temperature. Furthermore, the notion of “location” is defined hierarchically by introducing the “absolute” and “relative” location entities. Each “device” of the environment is assigned to one “location” through the “located at” relationship. Since a “system” can consist of many “devices” situated in different locations, its location is considered to be the union of the locations of its individual devices.
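The rule that a system’s location is the union of its devices’ locations can be expressed directly. In this minimal sketch each device carries exactly one location, mirroring the “located at” relationship; the device and location names are hypothetical, and the absolute/relative location hierarchy is not modelled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    name: str
    located_at: str  # exactly one location per device ("located at")


def system_location(devices):
    """A system's location: the union of the locations of its devices."""
    return {device.located_at for device in devices}
```

For a system made of a camera and a projector in the living room plus a speaker in the kitchen, the result is the set `{"living room", "kitchen"}`.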

4 InPrinted framework

The InPrinted framework provides all the necessary components to facilitate the integration of applications that use printed matter interaction in smart environments. The framework has been implemented according to a service-oriented architecture (SOA), which eases its integration and deployment into existing smart environments.

The components of the framework are illustrated in Fig. 6, and discussed in the following sections.

4.1 External H/W and S/W integration with InPrinted

The lower levels depicted in Fig. 6 represent Input / Output (I/O) components that may exist in AmI environments and are necessary for acquiring real-world properties (e.g., printed matter localization, recognition) and activities (e.g., user interaction). In order to integrate the wide diversity of such I/O components, the framework abstracts them into two main categories:

(a) Printed matter recognition and tracking. There are several ways to recognize printed matter or physical paper. For example, Holman et al. [15] use special markers for physical paper recognition and tracking, while another approach relies on SIFT features for printed matter recognition and localization [26]. To address such diversity, the InPrinted framework communicates with a separate, low-level external layer through a common middleware infrastructure. This approach keeps the framework generic, decoupling it from the mechanisms that a system uses to perceive the real world (e.g., physical object recognition).

(b) Interaction recognition. Following the same approach as for printed matter recognition and tracking, the interaction recognizer represents the class of all potential printed matter interaction facilitators of an AmI environment. Accordingly, the InPrinted framework communicates with interaction facilitators through a common middleware infrastructure.

For each of these two classes, the framework provides communication details and specifications.

The communication layer is realized by the FAmINE middleware [12], which provides the necessary intercommunication infrastructure with the available I/O components for printed matter recognition, localization and interaction.
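The decoupling described above can be sketched as a publish/subscribe pattern: recognizers publish a common message, and the framework subscribes to it without knowing how recognition was performed. FAmINE’s actual API is not described here, so the `Bus` class below is a hypothetical in-process stand-in, and the topic name and message fields are assumptions.

```python
from collections import defaultdict
from typing import Callable


class Bus:
    """Hypothetical in-process stand-in for a middleware such as FAmINE."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: dict) -> None:
        for handler in self._subscribers[topic]:
            handler(message)


class MarkerTracker:
    """One possible recognizer (marker-based); a SIFT-based one could replace it."""

    def __init__(self, bus: Bus):
        self.bus = bus

    def detected(self, doc_id: str, x: float, y: float) -> None:
        # Subscribers see only this common message, not how it was produced.
        self.bus.publish("printed_matter.recognized", {"id": doc_id, "x": x, "y": y})
```

Swapping `MarkerTracker` for another recognizer that publishes the same message leaves the subscribing side unchanged, which is the point of the abstraction.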

4.2 Interaction manager

The Interaction manager is responsible for interpreting users’ interactions with printed matter. In more detail, it receives input from the available I/O components of the environment and correlates it with the recognized printed matter. The interaction techniques currently supported by the Interaction manager are:

a) Handwriting: a user’s sequential drawing on a handwriting placeholder (i.e., an empty area of printed matter reserved for handwriting, such as a textbox or a page margin) is interpreted as handwriting. The Interaction manager feeds the user’s inscription to a handwriting analysis engine [35] in order to recognize the text. If text is recognized, the Interaction manager passes it to the Context awareness manager. Otherwise, the inscription is analyzed again and classified either as a specific gesture or as a drawing annotation.

b) Gestures: Table 1 summarizes the gestures currently supported by the InPrinted framework. As with handwriting recognition, if the Interaction manager recognizes a specific gesture (after handwriting analysis), it notifies the Context awareness manager of the recognized gesture.

c) Touch / click: a user pointing at a specific area of the printed matter is interpreted as a touch action. If the user then lifts their hand (or the stylus) without sliding in any direction, the Interaction manager perceives this as a click, analogous to a mouse click.

d) Printed matter direct manipulation: this category includes all user interactions that directly manipulate printed matter, namely: printed matter appears in the interaction field of an AmI system, printed matter disappears, printed matter is moved relative to its previous location, or new content of the printed matter becomes active (e.g., a new page of a book becomes visible to the system after the user has turned the page).

e) Combined interaction: the user can combine two or more pieces of printed matter in order to accomplish more complex tasks (e.g., transfer a picture displayed on a paper placed on an interactive surface to the user’s image gallery by bringing a printed camera next to it). The supported combined interactions are: collocate (two or more pieces of printed matter or other physical objects are placed side by side, close to each other), collate (two or more pieces of printed matter are stacked), flip (a piece of printed matter is flipped over either its long or its short edge), staple (two pieces of printed matter are joined by placing the side of one over the side of the other), and put on top (one or more physical objects or hands are placed on top of a piece of printed matter).
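Two of the techniques above, the touch/click distinction and the handwriting-first fallback, can be sketched as follows. The slide threshold value and the recognizer callables are hypothetical placeholders; in the framework, recognition is delegated to a handwriting analysis engine [35].

```python
import math

# Hypothetical threshold: maximum movement (in pixels) still counted as a click.
SLIDE_THRESHOLD = 10.0


def classify_pointer(points):
    """Classify a pointer trace from touch-down to lift-off.

    points: list of (x, y) samples; the first one is the touch-down position.
    Returns "click" if the hand/stylus was lifted without sliding, otherwise
    "slide" (to be analyzed further as handwriting or a gesture).
    """
    if not points:
        raise ValueError("empty trace")
    x0, y0 = points[0]
    max_dist = max(math.hypot(x - x0, y - y0) for x, y in points)
    return "click" if max_dist <= SLIDE_THRESHOLD else "slide"


def interpret_inscription(strokes, recognize_text, recognize_gesture):
    """Try handwriting first, then a gesture, else keep it as a drawing.

    recognize_text / recognize_gesture are hypothetical callables that
    return a result, or None when nothing is recognized.
    """
    text = recognize_text(strokes)
    if text:
        return ("text", text)
    gesture = recognize_gesture(strokes)
    if gesture:
        return ("gesture", gesture)
    return ("drawing", strokes)
```

A trace that stays within a few pixels of its starting point yields `"click"`, while an inscription that no recognizer accepts is kept as a drawing annotation.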

The functional architecture of the Interaction manager is presented in Fig. 7. Its core lies in the Interaction recognition components, which are responsible for the timely recognition of users’ interactions with the AmI systems installed in the environment. All Interaction recognition components run in parallel, grouping the incoming events appropriately and keeping the history needed to produce the corresponding interaction results. In more detail, during initialization, the Interaction manager requests from the Context awareness manager a list of the devices / agents installed in the AmI environment, along with their current status. This information is kept in the Device/Agent Register component. For each device/agent in the list, a Device/Agent Listener is activated and starts listening for the corresponding interaction events. When a listener receives an interaction event, different steps are executed depending on the type of the event (touch or printed matter event), as illustrated in Table 2.

Interaction events are generic events that are broadcast by the Interaction manager to the rest of the components through the system’s middleware. Each interaction event consists of a header containing (i) the interaction type, (ii) the corresponding device/agent and (iii) the region of interest of the event. Optionally, depending on the type of the event, a string array payload can also be included.
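The event structure just described maps naturally onto a small record type. The sketch below assumes a JSON wire format and represents the region of interest as an `(x, y, width, height)` tuple; both choices are assumptions, since the actual serialization used over the middleware is not specified here.

```python
import json
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class InteractionEvent:
    # Header fields named in the text.
    interaction_type: str         # e.g. "click", "printed_matter_moved"
    device_agent: str             # originating device/agent
    region_of_interest: tuple     # assumed representation: (x, y, width, height)
    # Optional string-array payload, present depending on the event type.
    payload: Optional[list] = None

    def to_json(self) -> str:
        return json.dumps(asdict(self))

    @classmethod
    def from_json(cls, raw: str) -> "InteractionEvent":
        data = json.loads(raw)
        # JSON has no tuples, so restore the region as a tuple.
        data["region_of_interest"] = tuple(data["region_of_interest"])
        return cls(**data)
```

A handwriting event could, for instance, carry the recognized words in `payload`, while a click event would leave it as `None`.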

Each time the status of a registered device changes, the Context awareness manager sends a “device/agent status update” event; the Device/Agent Register, which listens for these events, updates the Interaction manager’s registry accordingly.
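The register’s bookkeeping can be sketched as below: it stores the status of each device/agent reported at initialization, creates one listener per device, and applies status update events. The `make_listener` factory is hypothetical, and creating a listener for a device that first appears in a status update is an assumption not stated in the text.

```python
class DeviceAgentRegister:
    """Sketch of the Device/Agent Register: device statuses plus one
    listener per device (a listener here is whatever the factory returns)."""

    def __init__(self, make_listener):
        self._make_listener = make_listener  # hypothetical factory: name -> listener
        self.status = {}
        self.listeners = {}

    def initialize(self, devices):
        """devices: mapping of device/agent name -> current status, as
        returned by the Context awareness manager at start-up."""
        for name, status in devices.items():
            self.status[name] = status
            self.listeners[name] = self._make_listener(name)

    def on_status_update(self, name, status):
        """Handle a "device/agent status update" event."""
        if name not in self.listeners:
            # Assumption: a newly installed device also gets a listener.
            self.listeners[name] = self._make_listener(name)
        self.status[name] = status
```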

4.3 Context awareness manager

The decision layer is implemented by the Context Awareness Manager, a fundamental component of the InPrinted framework, which is responsible for the selection of the appropriate UI components and corresponding content, according to the user’s interaction in a specific context of use. The Context awareness manager is based on the ontology of Fig. 2.

For the implementation of the framework’s decision making, a rule-based expert system is used as the core semantic reasoner. Several mature tools exist, such as Jess,Footnote 1 Drools,Footnote 2 and the Windows Workflow Foundation Rules Engine (WWFRE) [34], which provide decision-making functionality that can be based on ontology models. In the context of the InPrinted framework, WWFRE was selected mainly for its ease of integration (e.g., it provides C# APIs that can be easily incorporated in the framework).

One main feature of this technology is its forward chaining capability, which allows atomic rules to be assembled into RuleSets without defining, or necessarily even knowing, the dependencies among the rules. However, WWFRE also allows rule writers to gain more control over the chaining behavior by limiting the chaining that takes place. This enables the rule modeler to limit the repeated execution of rules, which may otherwise give incorrect results; it also increases the overall reasoning performance and prevents runaway loops. This level of control is provided in WWFRE rules by two properties, both of which can be set during Ruleset design:

- Chaining Behavior property on the Ruleset.

- Re-evaluation Behavior property on each rule.
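To show why these two controls matter, the following toy forward-chaining engine re-queues every rule that reads a fact changed by a fired rule, lets a rule opt out of re-evaluation, and aborts after a step limit. It is a Python illustration of the concept only, not the WWFRE API; the `chaining`, `reevaluate` and `max_steps` parameters are hypothetical analogues of the properties above.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    name: str
    condition: Callable[[dict], bool]
    action: Callable[[dict], None]
    reads: frozenset           # facts the condition depends on
    reevaluate: bool = True    # False: the rule fires at most once


def run_ruleset(rules, facts, chaining=True, max_steps=100):
    """Toy forward chaining over a dict of facts."""
    fired = set()
    agenda = list(rules)
    steps = 0
    while agenda:
        rule = agenda.pop(0)
        if rule.name in fired and not rule.reevaluate:
            continue  # re-evaluation disabled for this rule
        if not rule.condition(facts):
            continue
        before = dict(facts)
        rule.action(facts)
        fired.add(rule.name)
        steps += 1
        if steps >= max_steps:
            raise RuntimeError("runaway chaining")
        changed = {k for k in facts.keys() | before.keys() if facts.get(k) != before.get(k)}
        if chaining and changed:
            # Re-queue every rule whose condition reads a changed fact.
            for other in rules:
                if other.reads & changed and other not in agenda:
                    agenda.append(other)
    return facts
```

In the usage below (hypothetical fact and augmentation names), a validation rule overrides the selected augmentation, and chaining re-checks the earlier rules without firing them again:

```python
rules = [
    Rule("identify", lambda f: f.get("user") is None,
         lambda f: f.update(user="maria"), frozenset({"user"})),
    Rule("select", lambda f: f.get("user") is not None and f.get("augmentation") is None,
         lambda f: f.update(augmentation="image_slideshow"), frozenset({"user", "augmentation"})),
    Rule("validate", lambda f: f.get("augmentation") == "image_slideshow" and not f.get("projector_on"),
         lambda f: f.update(augmentation="auditory_feedback"), frozenset({"augmentation", "projector_on"})),
]
run_ruleset(rules, {})  # {"user": "maria", "augmentation": "auditory_feedback"}
```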

Figure 8 illustrates the overall functional architecture of Context awareness manager, which consists of three basic components namely (i) Ontology Manager, (ii) Reasoner and (iii) Intercommunication facilitator.

Each component realizes specific functionality that contributes to the context-aware reasoning provided by the framework, as follows:

- The Ontology Manager is the framework’s local ontology-based repository, providing the content management functionality needed for reading, enriching and modifying the ontology of Fig. 2. Its implementation is based on the dotNetRDF library,Footnote 3 which provides basic functionality for reading and writing data in OWL/RDF format and performing SPARQL queries, as well as for interoperating with databases designed for graph data management, such as the Virtuoso Universal Server.Footnote 4 The Ontology Manager offers a create, read, update and delete (CRUD) API to the rest of the Context awareness manager’s components, transforming the framework’s ontology from OWL/RDF into C# objects. In addition, this component transforms the XML files produced by the Page annotation tool (described in section 4.7) into the ontology-based model of the framework.

- The Reasoner component is responsible for realizing the overall rationale of the framework, providing the decision-making process based on the inferences produced by applying the Ruleset to interaction events triggered in the AmI environment. This process, one of the basic mechanisms of the proposed framework, is discussed below.

- The Intercommunication facilitator provides the functionality needed for the Context awareness manager to communicate with the rest of the framework’s components. It is essentially a client implementation of the FAmINE communication layer, responsible for listening to all the interaction events produced by the Interaction manager and forwarding the reasoning results to the rest of the framework’s components. It also provides fundamental information about the whole ecosystem as captured by the framework’s ontology model (e.g., the list of available devices operating in the environment).
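The CRUD role of the Ontology Manager can be illustrated with a toy in-memory triple store, where a read with `None` in a position behaves like a SPARQL variable. This sketch is not dotNetRDF (which the framework actually uses) and does not parse OWL/RDF; the class name and identifiers are hypothetical.

```python
class TripleStore:
    """Toy stand-in for a CRUD API over (subject, predicate, object) triples."""

    def __init__(self):
        self._triples = set()

    def create(self, s, p, o):
        self._triples.add((s, p, o))

    def read(self, s=None, p=None, o=None):
        """Pattern match; None acts like a variable. Results are sorted."""
        return sorted(t for t in self._triples
                      if (s is None or t[0] == s)
                      and (p is None or t[1] == p)
                      and (o is None or t[2] == o))

    def update(self, s, p, old_o, new_o):
        self.delete(s, p, old_o)
        self.create(s, p, new_o)

    def delete(self, s, p, o):
        self._triples.discard((s, p, o))
```

For example, `read("page-12", "hasInteractiveArea")` lists all interactive areas of a page, roughly what a SPARQL query with a variable object would return.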

The reasoning process is triggered every time a new interaction event is generated and propagated by the Interaction manager. In the context of the reasoning process, an interaction event refers to an explicit or implicit user prompt for information that the AmI environment anticipates. An example of an implicit user interaction is a user keeping a page of a printed document open for a certain period of time, which signals to the system their interest in that particular page. The system can then initiate a page content analysis process, aiming to find relevant content (e.g., from its database or the internet) that may also interest the user.

The major objective of the Reasoner component is to provide the user with the most suitable information by the appropriate AmI system in the most suitable form. In other words, this component aims to select the most appropriate user’s environment augmentation for rendering the information that pertains to the user’s interaction.

For every new interaction event received by the Context awareness manager, a process organized in five priority groups is started, as illustrated in Fig. 9. Each priority group corresponds to different Rulesets, yielding intermediate inferences related to the selection of the most appropriate augmentation, which will be rendered by the appropriate system of the AmI environment.

The first priority group comprises preparatory rules that determine which user issued the event, which printed matter is involved, which AmI system the event originated from, and whether the event is part of a broader user interaction with the environment. Once the user, the printed matter and the system have been identified, the next priority concerns the selection of the most suitable augmentations for providing the information corresponding to the event. At this stage, decisions regarding the content to be visualized are also required, using, when necessary, the tools of the Content processing toolkit (see section 4.4). When a candidate augmentation is selected, it is validated by the next Ruleset, based on the user’s preferences, the AmI system’s availability and its capability of rendering the selected augmentation, as well as the environmental conditions (e.g., a noisy place versus the user’s bedroom). If the recommended augmentation meets all of these prerequisites, the system selects it as the final augmentation. If some of them are not fulfilled, the system applies an alternative selection Ruleset, which tries to find alternative ways of providing the information to the user, either by selecting an alternative nearby system that supports the recommended augmentation or by selecting an alternative augmentation. In the latter case, due to the chaining behavior of WWFRE, the Rulesets of priorities two to five are re-evaluated based on the new findings.
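The control flow of the priority groups can be summarized in a few lines. In this simplified sketch each Ruleset is replaced by a hypothetical callable, and alternatives are simply validated one by one; it does not model the re-evaluation of priorities two to five that WWFRE chaining performs when an alternative augmentation is chosen.

```python
def select_augmentation(event, identify, recommend, validate, alternatives):
    """Simplified priority flow; every callable stands in for a Ruleset."""
    context = identify(event)          # priority 1: user, printed matter, system
    candidate = recommend(context)     # priority 2: most suitable augmentation
    if validate(context, candidate):   # priority 3: preferences, availability, environment
        return candidate               # priority 4: final selection
    # Priority 5: same augmentation on a nearby system, or another augmentation.
    for option in alternatives(context, candidate):
        if validate(context, option):
            return option
    return None  # no acceptable way to present the information
```

For instance, if a recommended video cannot be shown on a busy tabletop, the first acceptable alternative (the same video on a nearby wall display) is returned.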

4.4 Content processing toolkit

A Content processing toolkit has been implemented so that the Context awareness manager can extract the necessary information from the corresponding printed matter. The toolkit includes a number of external processes, such as Optical Character Recognition (OCR), information harvesting from various internet sources (e.g., Google search, Wikipedia), and a page content extractor (e.g., extracting text or images from the open pages).

For the content extractor and OCR functionality, an independent service has been developed based on the .NET wrapper of the Tesseract open source OCR engine [51]. It accepts as input any digital image of printed matter and tries to retrieve any text in the image, as well as to recognize non-text parts of the page, such as photos and figures. After the text of the document has been extracted, the tool applies spell checking using the NHunspell free Spell-Checker for .NETFootnote 5 in order to improve the recognized text. The tool returns an XML file containing the recognized text and other printed objects, annotating each of them with its pixel coordinates relative to the digital image of the source printed matter.
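The shape of such an output file can be sketched with Python’s standard `xml.etree.ElementTree` module. The element and attribute names below (`page`, `text`, `photo`, `x`, `y`, `width`, `height`) are hypothetical; the tool’s actual schema is not given here.

```python
import xml.etree.ElementTree as ET


def page_to_xml(items):
    """Serialize recognized page items to XML.

    items: dicts with a 'kind' (e.g. "text", "photo"), a pixel bounding box
    'box' = (x, y, width, height) relative to the source image and, for text
    items, the recognized 'text'. Element/attribute names are hypothetical.
    """
    root = ET.Element("page")
    for item in items:
        x, y, w, h = item["box"]
        el = ET.SubElement(root, item["kind"],
                           x=str(x), y=str(y), width=str(w), height=str(h))
        if "text" in item:
            el.text = item["text"]
    return ET.tostring(root, encoding="unicode")
```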

Additionally, a generic web search tool has been integrated, aiming to find web resources (i.e., text, digital images, videos, etc.) related to a set of keywords provided by the Context awareness manager. These keywords originate either from the semantic analysis of a printed matter or from a printed matter area extracted through user annotation (e.g., using the cropmarks gesture). In the second case, the content extractor and the OCR tool are used to extract the relevant text from the printed matter. The web search tool accepts arbitrary text as input, from which it tries to derive meaningful search terms; to do so, keyword extraction is performed based on word co-occurrence statistical information [32]. The extracted keywords are then submitted as search terms to prevalent search engines, such as Google Search or Microsoft Bing, using the recommended official API of each engine. The results are returned to the Context awareness manager.
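As a rough illustration of co-occurrence-based keyword extraction, the sketch below ranks words by the number of distinct words they share a sentence with, breaking ties by the number of sentences containing them. This is a deliberately simplified heuristic chosen for brevity, not the statistical method of [32]; the stop-word list is a small hypothetical sample.

```python
import re
from collections import Counter
from itertools import combinations

# Small hypothetical stop-word list, for illustration only.
STOPWORDS = {"the", "a", "an", "of", "and", "in", "on", "is", "to",
             "for", "with", "by", "was", "at"}


def extract_keywords(text, top_n=3):
    """Rank words by sentence-level co-occurrence degree (simplified heuristic)."""
    sentences = [s for s in re.split(r"[.!?]+", text.lower()) if s.strip()]
    neighbours = {}
    freq = Counter()  # number of sentences containing each word
    for sentence in sentences:
        words = {w for w in re.findall(r"[a-z]+", sentence) if w not in STOPWORDS}
        freq.update(words)
        for a, b in combinations(sorted(words), 2):
            neighbours.setdefault(a, set()).add(b)
            neighbours.setdefault(b, set()).add(a)
    # Highest degree first, then most frequent, then alphabetical for stability.
    ranked = sorted(freq, key=lambda w: (-len(neighbours.get(w, ())), -freq[w], w))
    return ranked[:top_n]
```

For the text "Knossos is a palace in Crete. The palace of Knossos was excavated. Crete is an island." the three top-ranked terms are `crete`, `knossos` and `palace`, which could then be submitted to a search engine.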

Another tool of the Content processing toolkit is the online dictionary and thesaurus tool, which provides word definitions and other related lexicographic information. This tool has also been developed as a standalone service accepting words as input. For each input word, it returns lexicographic information based on the WordNet electronic lexical database [37], such as the word definition, synonyms and antonyms, part of speech (e.g., adjective, noun, verb, adverb), and example sentences illustrating the use of the word. The online dictionary and thesaurus interoperates with the rest of the AmI ecosystem through the FAmINE middleware.

4.5 Augmentation manager

The information layer consists of the Augmentation manager, which is responsible for rendering the UIs provided by the InPrinted framework for the digital augmentation of printed matter, adapted to the users’ preferences and needs. In more detail, the Augmentation manager provides an API through which every AmI application developed using the framework is able to: (a) listen for augmentation results that stem from the reasoning process following a user’s interaction, (b) acquire assets, in an appropriate format, related to the augmentation result and (c) render this information on an AmI system (using the UI toolkit, which is discussed in section 4.6).

To this end, each application developer is equipped with the necessary tools in order to easily incorporate in the AmI ecosystem any application supporting interaction with augmented printed matter.

Figure 10 depicts the overall Augmentation manager architecture, which consists of four basic components that materialize the aforementioned rationale for perceiving, preparing and rendering a digital augmentation in an AmI System.

The Augmentation results listener communicates with the Interaction manager and the Context awareness manager, receiving messages about actions that should be handled by the application. Although most of the event types handled by the Augmentation manager originate from the Context awareness manager, a few event types can be received directly from the Interaction manager. This improves the performance of the overall system, since applications can be directly aware of events that do not need further processing, such as printed matter appearance, disappearance or movement. If these events affect the overall context of use, the system also anticipates the user’s interaction in a more sophisticated manner (involving the Context awareness manager), in which case additional events can be produced and sent to the Augmentation manager as well.

For example, consider an application in the AmI environment deployed on a horizontal surface (e.g., a table) that augments printed matter by continuously highlighting it (as an indicator that the system has recognized it). If a user moves one of the pieces of printed matter on the surface, the application must be aware of this event in order to update its rendering, and the Augmentation manager can provide this information directly. However, if the user, while moving the piece of printed matter, brings it near to or collates it with another piece of printed matter on the table, this action triggers a combined interaction event that is processed by the Context awareness manager and results in a more complex augmentation (e.g., combining the contents of the two pieces of printed matter into a merged text). After a new interaction or reasoning event message has been received, the Augmentation results listener de-serializes it and extracts two types of information: (a) the kind of augmentation that should be rendered and (b) the content of the augmentation.
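The listener’s routing can be sketched as a small dispatcher: messages whose type denotes direct manipulation are handed to the application immediately, while reasoning results are split into the kind and the content of the augmentation. The JSON message layout, the event-type names and the two callbacks are assumptions made for this illustration.

```python
import json

# Hypothetical names for event types the Interaction manager may send directly.
DIRECT_EVENTS = {"printed_matter_appeared", "printed_matter_disappeared",
                 "printed_matter_moved"}


def handle_message(raw, render_direct, render_augmentation):
    """De-serialize a message and dispatch it.

    render_direct(event_type, printed_matter) updates the application's own
    rendering; render_augmentation(kind, content) renders a reasoning result.
    """
    msg = json.loads(raw)
    if msg["type"] in DIRECT_EVENTS:
        return render_direct(msg["type"], msg.get("printed_matter"))
    # Reasoning result: (a) the kind of augmentation, (b) its content.
    return render_augmentation(msg["augmentation"], msg["content"])
```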

The Information formatting component formats the acquired information for the selected augmentation. It formats any textual information based on predefined templates, acquires and caches video or audio clips that are available in external repositories, and combines any of these types of content into a unified hypertext document.

The Augmentation initialization component is responsible for instantiating and initiating the selected augmentation. To do so, it combines UI components available through the UI toolkit library (see section 4.6) and encapsulates them in a basic UI container, which is passed to the Application renderer.

The Application renderer component acquires input from the Information formatting and Augmentation initialization components and fuses it into one final digital augmentation, which is rendered by the AmI system on which the application is running.

4.6 UI toolkit

A number of basic UI components for printed matter augmentation have been developed as part of the InPrinted framework: video player, images slide show, drawing tools, the LexiMedia word dictionary, auditory feedback, map terrain, and content effects component. All these UI components, which are described in detail below, constitute ready-to-use modules that developers can embed in their applications.

4.6.1 Video player

This component provides a simple video player that can be cropped in any shape to support superimposed videos on the printed matter. Figure 11 illustrates a potential use of the Video Player component. A children’s book page is augmented with a video clip cropped according to any custom shape defined in the page’s content on which the video is superimposed, thus providing the impression that it has become part of the book.

4.6.2 Images slide show

The Images slide show component sequentially displays a number of still images, supporting gestures or simple touch/click for image transitions. Figure 12 depicts an example of printed matter augmentation through projection. A user can choose to see images related to a specific part of the open page by touching that part. The system projects the Images slide show component next to the physical page. The user can then browse the available images using the next/previous buttons of the component, or by performing the appropriate touch gesture.

4.6.3 Drawing tools

This component (Fig. 13) comprises a set of drawing facilities such as color palette, ink thickness, undo and clear all actions. The user is able to draw over or next to a printed matter, keep notes, make annotations, etc.

4.6.4 LexiMedia

LexiMedia can be used as a rich dictionary that provides word definitions, a thesaurus, etc., but also displays related multimedia content (images and videos) acquired from the web [25]. As soon as the user indicates the word of interest, a preview of the word information is displayed, including up to three definitions of the given word, five representative images and five related videos (Fig. 14a). Additionally, dictionary information can be viewed, including all the definitions available for the word, as well as synonyms and examples for each definition (Fig. 14b). Furthermore, users can view a number of images (Fig. 14c) and videos related to the specified word, which are retrieved through Google. Additional facilities include viewing enlarged images, playing videos, and viewing the history of visited words.

4.6.5 Auditory feedback

The InPrinted framework supports printed matter augmentation through audio cues. It incorporates a text-to-speech component as well as an audio player. The audio feedback can be accompanied by visual cues, further enhancing the overall User Experience (UX). An example is illustrated in Fig. 15. The user selects to hear a song related to the content of the open page by clicking on a specific hot spot. While the song is playing, moving musical notes that appear and disappear are displayed on the open page near the active hot spot.

4.6.6 Map terrain

The Map terrain component provides an interactive digital map using available online geospatial data (e.g., Google Maps, OpenStreetMap, Bing Maps). It supports annotation of points of interest, route finding, etc. Figure 16 illustrates the Map terrain component projected next to the first page of the leaflet of the Foundation for Research and Technology - Hellas (FORTH). The user can get geospatial information by touching the title of the leaflet, and can then interact with the Map terrain, for instance to get directions on how to reach FORTH.

4.6.7 Content effects

A set of content effects is supported by the InPrinted framework, transforming augmented printed matter into a live document. An example is illustrated in Fig. 17b, where the hedgehog image is an active hot-spot: when a user clicks / touches it, the hedgehog leaves its initial place on the page and runs off the screen. A similar example is depicted in Fig. 17c, where Rickie, one of the three little pigs, introduces itself when clicked / touched by the user, turning its head and saying its name while a textual cue is displayed. The text also follows an animation path.

4.7 Page annotation tool

Figure 18 portrays the Page annotation tool, an editor for developers and content creators. It supports basic functionality for the definition of interactive hot-spots on a digital copy of a printed matter, facilitating the classification of actions that will be triggered by the framework on each hot-spot activation by the users.

The basic components of the Page annotation tool are:

a. Digital image canvas: a digital copy of a printed matter is displayed, and users can create and modify the outlines of the hot-spots for the specific printed matter.

b. Hot-spot outline toolbar: provides the necessary functionality for defining hot-spot areas on the printed matter, supporting actions such as outline path modifications (adding a point to or removing a point from the path), undo, fitting the image to the screen and clearing the outline path.

c. Hot-spot data panel: a digital form of hot-spot properties that the user fills in. Each hot-spot consists of: a unique ID, assigned automatically by the framework when the hot-spot is first created; metadata defining the actions that the framework should trigger when the hot-spot is activated (e.g., in Fig. 18 a video search related to the works of a specific poet is assigned to the selected hot-spot); and presentation attributes, such as automatic activation of the hot-spot when the printed matter is recognized by the framework, hot-spot exclusivity with respect to other already triggered hot-spots, the color of the hot-spot’s outline, etc.

d. Available hot-spots list: summarizes all the available hot-spots for the specific printed matter, allowing users to select and modify them.

The Page annotation tool exports the aforementioned information for each hot-spot in XML format and passes it to the Context awareness manager.
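A hot-spot as described above can be sketched as a polygon outline with actions and presentation attributes, together with a ray-casting point-in-polygon test (one common way to decide whether a touch falls inside an outline) and an XML export. The field names, XML tags and the choice of hit-test algorithm are assumptions; in the real tool the ID is assigned automatically rather than passed in.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass, field


@dataclass
class HotSpot:
    hotspot_id: str                 # assigned automatically by the tool in practice
    outline: list                   # polygon vertices [(x, y), ...] in image pixels
    actions: list = field(default_factory=list)   # e.g. ["video_search"]
    auto_activate: bool = False     # activate when the printed matter is recognized
    exclusive: bool = False         # exclusive with other triggered hot-spots
    color: str = "#ff0000"          # outline color

    def contains(self, x, y):
        """Ray-casting point-in-polygon test for a touch at (x, y)."""
        inside = False
        n = len(self.outline)
        for i in range(n):
            x1, y1 = self.outline[i]
            x2, y2 = self.outline[(i + 1) % n]
            if (y1 > y) != (y2 > y):  # edge straddles the horizontal ray
                x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
                if x < x_cross:
                    inside = not inside
        return inside


def export_hotspots(hotspots):
    """Export hot-spots to XML (hypothetical tag and attribute names)."""
    root = ET.Element("hotspots")
    for h in hotspots:
        el = ET.SubElement(root, "hotspot", id=h.hotspot_id,
                           autoActivate=str(h.auto_activate).lower(),
                           exclusive=str(h.exclusive).lower(), color=h.color)
        ET.SubElement(el, "outline").text = " ".join(f"{x},{y}" for x, y in h.outline)
        for action in h.actions:
            ET.SubElement(el, "action").text = action
    return ET.tostring(root, encoding="unicode")
```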

4.8 Interactive printed matter simulator

This tool simulates an AmI environment that supports interaction with printed matter. It can be considered an abstract AmI system, which incorporates simulated “devices and services” and propagates information to the InPrinted framework. The simulator can be used throughout the implementation phase of any new application, enabling developers to simulate the AmI environments in which the application will be embedded without having to actually deploy the application in such environments. This is particularly useful in the first implementation steps, as it makes debugging a new application easier and more effective.

The available functionalities provided by the simulator are:

- Registration of a virtual/simulated AmI system with specific capabilities to the Context awareness manager. The user can define which of the available devices/services are employed, as well as physical properties of the system (e.g., surface dimensions if the system is a tabletop).
- Simulation of the presence, movement and disappearance of multiple pieces of printed matter collocated in the system.
- Provision of all the available user interactions on the existing printed matter.
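As a rough illustration of these functionalities, a simulated tabletop can be modeled as an object that knows its surface dimensions, tracks the pose of each piece of printed matter, and emits events to a callback standing in for the framework. The class `SimulatedTabletop`, the event kinds, and the callback mechanism are all assumptions made for this sketch.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class PrintedMatterEvent:
    kind: str          # "appeared", "moved", "touched" or "disappeared"
    matter_id: str
    x: float
    y: float
    rotation_deg: float = 0.0


class SimulatedTabletop:
    """Hypothetical stand-in for a tabletop AmI system: it keeps the pose of
    each collocated piece of printed matter and forwards events to a callback
    that plays the role of the framework."""

    def __init__(self, width_mm: float, height_mm: float,
                 on_event: Callable[[PrintedMatterEvent], None]) -> None:
        self.width_mm = width_mm      # physical surface dimensions
        self.height_mm = height_mm
        self.on_event = on_event
        self.poses: dict[str, tuple[float, float, float]] = {}

    def _check_bounds(self, x: float, y: float) -> None:
        if not (0 <= x <= self.width_mm and 0 <= y <= self.height_mm):
            raise ValueError("position lies outside the simulated surface")

    def place(self, matter_id: str, x: float, y: float,
              rotation_deg: float = 0.0) -> None:
        # Placing matter that is already on the surface is reported as a move.
        self._check_bounds(x, y)
        kind = "moved" if matter_id in self.poses else "appeared"
        self.poses[matter_id] = (x, y, rotation_deg)
        self.on_event(PrintedMatterEvent(kind, matter_id, x, y, rotation_deg))

    def touch(self, matter_id: str, x: float, y: float) -> None:
        # Simulated user interaction on a piece of printed matter.
        if matter_id not in self.poses:
            raise KeyError(f"{matter_id!r} is not on the surface")
        self._check_bounds(x, y)
        rotation = self.poses[matter_id][2]
        self.on_event(PrintedMatterEvent("touched", matter_id, x, y, rotation))

    def remove(self, matter_id: str) -> None:
        x, y, rotation = self.poses.pop(matter_id)
        self.on_event(PrintedMatterEvent("disappeared", matter_id, x, y, rotation))
```

Passing `events.append` as the callback records the event stream, which an application under development could then replay while debugging without any camera or projector attached.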

5 Evaluation

For the assessment and evaluation of the InPrinted framework, four Ambient Intelligence applications have been implemented as indicative real-life examples, as summarized in Table 3. Several evaluation iterations have been carried out to assess interaction with printed matter as instantiated in the four applications. First, all four systems were evaluated individually to assess their usability [24, 26, 27, 41], with emphasis on issues pertaining to user interaction with printed matter and the supported augmentations. Finally, a large-scale in-situ evaluation was carried out, testing one of the systems with a larger number of users in order to acquire quantitative results regarding interaction with paper [30].

This section briefly describes the various evaluation iterations and summarizes their results (Section 5.1). The main focus is on the results of the large-scale in-situ evaluation. To this end, Interactive Maps, the system that was evaluated, is introduced (Section 5.2), and the evaluation methodology and procedure are briefly described (Section 5.3), as these have been extensively reported elsewhere [30]. Then, the results of the in-situ evaluation are presented in detail, focusing on the system's ease of use, the naturalness of interaction with augmented paper, the overall user experience, and the effects of users' age and computer expertise on user satisfaction and (perceived) ease of use (Section 5.4).

5.1 Synopsis of the evaluation iterations

Table 3 provides an overview of the systems that have been developed using the InPrinted framework and summarizes the supported interactions and augmentations.

In summary, all the systems have been evaluated following a heuristic evaluation approach involving UX experts [38], and the heuristic evaluation of Study desk was followed up by user testing. Study desk was selected for user testing as the most inclusive system, incorporating a large number of the interaction modalities and augmentations supported by the framework. Beyond the individual assessment of each system, a comparative evaluation has also been carried out involving end-users in a within-subjects experiment, aiming not only to find specific UX problems for each system, but also to derive conclusions regarding the overall UX and users' preferences among the various interaction modalities provided by each system.

Across all evaluation phases, users and UX experts agreed that the systems provide a positive user experience and are well accepted. Furthermore, no specific concerns or usability problems were found regarding the augmentation of physical paper and interaction with its digital counterpart or with digital augmentations. In fact, users in the laboratory experiment, where the think-aloud protocol was applied, identified the augmentation of traditional studying artifacts as a positive attribute of the systems. Users were also pleased about the potential benefits that this could bring to educational activities.

An interesting finding, consistent across all the heuristic evaluation experiments, was the experts' concern regarding the discoverability of the supported gestures, especially by first-time users. This result was further confirmed in the user testing carried out for Study desk. The issue was addressed by adding help functionality to all the systems, including the Interactive Maps system.

In terms of interaction modalities, the comparative evaluation revealed that touch and card-based interactions scored higher than pen-based and handwriting interaction regarding their ease of use.

5.2 The interactive maps system

The Interactive Maps system is an adapted version of the Interactive Documents system [26], aimed at facilitating interaction with printed maps as well as their augmentation with digital information. The system has been installed at the Tourism InfoPoint of the Municipality of Heraklion, on the island of Crete, Greece. The InfoPoint, an original facility by international standards, promotes the island's attractions and provides visitors with novel ways to access information.

More specifically, Interactive Maps is a tabletop system on which visitors can place a printed map of the historical center of Heraklion, freely distributed at the InfoPoint (Fig. 19). Once the map is placed on the table, the system annotates the digitally augmented hotspots, which thus become interactive, helping users easily identify points of information on the printed map. Users can touch hotspots on the printed map to receive further information, which is presented beside the map. For each hotspot, multimedia content in several languages is made available, including text, images and videos. As a result, city visitors can preview several sightseeing points and plan their tour of the historical center in advance. At the same time, the printed map can be used in a traditional manner, for example by annotating points of interest or routes. Finally, visitors can take the printed map with them as a guide for their city tour.

Interactive Maps comprises a desk, over which a projector, a high-resolution camera and a depth sensor have been installed. The system runs on a regular PC (Intel i7 CPU, 8 GB RAM). The camera overlooks the desk's surface and feeds the application with captured images in real time. The application is able to recognize predefined printed maps and track them continuously while they are on the desk's surface. The depth sensor provides depth images of objects placed over or near the surface, which are analyzed so that the system can recognize and subsequently track human fingers. Every time a finger touches a map on the desk, the application examines whether this touch should trigger a hotspot event. If so, the system retrieves the digital assets associated with the corresponding hotspot of the map; these assets are then compiled, formatted and displayed beside the map, near the engaged hotspot [30].
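The decision of whether a touch triggers a hotspot can be sketched as two steps: express the fingertip position in the map's own coordinate frame, then test it against each hotspot outline. The sketch below assumes that the tracker reports the map pose as a 2-D rigid transform (position of the map origin plus a counter-clockwise rotation) and uses the standard even-odd ray-casting point-in-polygon test; the function names and the pose format are hypothetical, and the actual system may use a different geometric model (e.g., a full homography).

```python
from math import cos, radians, sin
from typing import Optional

Point = tuple[float, float]


def desk_to_map(px: float, py: float, map_x: float, map_y: float,
                map_rot_deg: float) -> Point:
    """Express a desk-space touch point in the map's own coordinate frame.

    Assumes the map pose is the desk position of the map's origin plus a
    counter-clockwise rotation in degrees."""
    dx, dy = px - map_x, py - map_y          # undo the translation
    a = radians(-map_rot_deg)                # undo the rotation
    return (dx * cos(a) - dy * sin(a), dx * sin(a) + dy * cos(a))


def point_in_polygon(x: float, y: float, polygon: list[Point]) -> bool:
    """Even-odd (ray casting) test: count edge crossings of a ray towards +x."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x0, y0 = polygon[i]
        x1, y1 = polygon[(i + 1) % n]
        if (y0 > y) != (y1 > y):  # edge straddles the ray (so y1 != y0)
            x_cross = x0 + (y - y0) * (x1 - x0) / (y1 - y0)
            if x < x_cross:
                inside = not inside
    return inside


def hit_hotspot(touch: Point, map_pose: tuple[float, float, float],
                hotspots: dict[str, list[Point]]) -> Optional[str]:
    """Return the id of the first hotspot whose outline contains the touch."""
    mx, my = desk_to_map(touch[0], touch[1], *map_pose)
    for hotspot_id, outline in hotspots.items():
        if point_in_polygon(mx, my, outline):
            return hotspot_id
    return None
```

Because the outlines stay in map coordinates, the same hotspot definitions keep working wherever the visitor places or rotates the map; only the tracked pose changes.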

The system was evaluated through a large-scale in-situ observation experiment with actual visitors of the InfoPoint, aiming at (i) reaching a large number of users in order to acquire quantitative results regarding interaction with paper and (ii) observing how the system is used in a real-world setting. The in-situ evaluation took place over five consecutive days during the official working hours of the InfoPoint. During the high tourist season (from early spring until late autumn), the InfoPoint typically receives 80 visitors per day on average. It should be noted that the InfoPoint features seven interactive systems in total, Interactive Maps being one of them. Although the methodology and procedure were the same for all seven systems that participated in the evaluation, the hypotheses explored and the results described in the following sections pertain only to the Interactive Maps system.

5.3 In-situ evaluation: methodology and procedure

The evaluation of Interactive Maps aimed at assessing the overall user experience with the system in terms of interaction with physical paper and, more specifically, at exploring the following hypotheses:

- H1. The system is easy to use with minimum guidance.
- H2. Touch-based interaction with the augmented paper is natural.
- H3. The overall user experience is positive.
- H4. The system will be easier to use and more appealing to younger users.
- H5. Experienced computer users will find the system more appealing and easier to use.

In-situ evaluation was selected mainly because of the benefits it promises: it explores how a system is actually used in its real environment, avoiding the contrived situations imposed by laboratory and field testing [9], while revealing how the environment itself can have a quite different impact on the user experience [45]. Compared to laboratory evaluations, in-situ evaluations have been found to identify more problems in general, and to be the only method that reveals problems related to cognitive load and interaction style [39].

Before determining the exact methodology and tools, it was important to fully understand the context. Therefore, the research team studied in depth the InfoPoint's daily routines and visitors' behavior around the interactive systems, observing and documenting points of interest and points of caution. These observations, along with state-of-the-art research in the field, dictated the need for an evaluation combining objective and subjective assessments, namely observation, semi-structured interviews with users, semi-structured interviews with InfoPoint employees, and questionnaires filled in by the users themselves [30]. The questionnaire included open-ended comments as well as metrics assessing the system's appeal, usability and usefulness, which were provided as bipolar adjective pairs on a 5-point rating scale. The questions included in the semi-structured interviews and the questionnaires are available in the Supplementary Material.
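The text does not describe how the bipolar ratings were scored. One common approach, shown here only as a sketch, is to code each pair from 1 to 5 so that 5 always denotes the positive pole (reverse-coding pairs whose positive adjective appears on the left) and then average per dimension. The item identifiers and adjective pairs in `ITEMS` are invented for illustration; the actual items are in the Supplementary Material.

```python
from statistics import mean

# Hypothetical questionnaire items: item id -> (dimension, left pole, right pole,
# whether the positive pole is on the right). These are NOT the study's items.
ITEMS = {
    "appeal_1": ("appeal", "boring", "exciting", True),
    "usability_1": ("usability", "easy", "difficult", False),
    "usefulness_1": ("usefulness", "useless", "useful", True),
}


def score(item: str, rating: int) -> int:
    """Map a raw 1-5 rating so that 5 always means the positive pole."""
    if not 1 <= rating <= 5:
        raise ValueError("ratings must lie on the 1-5 scale")
    positive_on_right = ITEMS[item][3]
    return rating if positive_on_right else 6 - rating  # reverse-code left-positive pairs


def dimension_means(responses: list[dict[str, int]]) -> dict[str, float]:
    """Average the (reverse-coded) ratings of all responses per dimension."""
    per_dimension: dict[str, list[int]] = {}
    for response in responses:
        for item, rating in response.items():
            dimension = ITEMS[item][0]
            per_dimension.setdefault(dimension, []).append(score(item, rating))
    return {dim: mean(values) for dim, values in per_dimension.items()}
```

Reverse-coding before averaging keeps a high mean interpretable as a favorable rating on every dimension, regardless of which side each adjective pair placed its positive pole.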

With regard to observations, free note taking was complemented by a structured observation grid, aimed at recording the following parameters based on [33]: appeal, learnability, effects of breakdowns, and distraction. The observation grid was further enhanced with parameters related to cultural readiness, flexibility and interaction behavior, as summarized in Table 4.

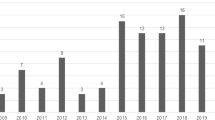

In total, the Interactive Maps system was used by 87 visitors, individually, in pairs or small groups of three or four visitors, resulting in 46 system usages (individual: 15, pairs: 24, small groups: 7). Participant ages ranged from children to seniors, as follows: 5 children (younger than 12 years old), 1 teenager (12–17 years old), 24 youth (17–30 years old), 35 young adults (30–44 years old), 21 adults (44–60 years old), 1 senior (older than 60 years).
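The reported counts can be cross-checked with a few lines of arithmetic. Assuming, as stated, that every small group had three or four members, the 24 visitors not accounted for by individual and pair usages imply exactly 4 groups of three and 3 groups of four; this split is derived here, not reported in the text.

```python
# Figures as reported in the text above.
usages = {"individual": 15, "pair": 24, "small_group": 7}
age_groups = {"child": 5, "teenager": 1, "youth": 24,
              "young_adult": 35, "adult": 21, "senior": 1}
TOTAL_VISITORS = 87

assert sum(usages.values()) == 46                   # reported number of usages
assert sum(age_groups.values()) == TOTAL_VISITORS   # age breakdown covers everyone

# Visitors not accounted for by individual and pair usages belong to small groups.
group_members = TOTAL_VISITORS - usages["individual"] - 2 * usages["pair"]

# Each small group has three or four members: solve 3*a + 4*b = members, a + b = groups.
splits = [(a, usages["small_group"] - a)
          for a in range(usages["small_group"] + 1)
          if 3 * a + 4 * (usages["small_group"] - a) == group_members]
print(group_members, splits)  # 24 [(4, 3)]
```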

Additional self-reported demographic data of the participants [30] indicated that many of them have good computer skills (low: 3.23%, intermediate: 54.84%, high: 41.94%), that they use computers on a daily basis (every day: 93.55%, several times a week: 3.23%, several times a month: 0.00%, almost never: 3.23%, never: 0.00%), and that many of them (61.29%) have a computer-related education or profession.

Upon approaching the first interactive system installed at the InfoPoint premises, participants were informed of the evaluation and the procedures that would be followed. Those who orally agreed to be observed during their interaction with the systems were given an informed consent form to sign. They were then free to move around the InfoPoint, use the interactive systems, and ask the InfoPoint staff for instructions. Their interactions with the Interactive Maps system were recorded by a dedicated observer for each system, using custom-made observation sheets [30]. Before leaving the building, participants were given questionnaires to fill in (one for each system they had interacted with) and were interviewed. Finally, they were thanked for their time and contribution towards improving the InfoPoint user experience.

5.4 In-situ evaluation results

The initial hypotheses have been explored by combining data recorded in the observation sheets with questionnaire data, as illustrated in Table 5. This section reports the results for each one of the five hypotheses, and provides insights from the qualitative analysis of the free text comments and interviews with visitors and employees.

H1. The system is easy to use with minimum guidance

According to the observations, the majority of participants used the system without reading instructions or asking for assistance, and without encountering major difficulties, as illustrated in Fig. 20.

Cross-referencing the observation data showed that, of the 8 participants who read the instructions, 7 (87.5%) understood how to use the system by themselves, while only 1 (12.5%) did not fully understand how to use it (Fig. 21 left) and had to ask for assistance (Fig. 21 right).

Further analysis of the difficulties encountered revealed that, of the 15 participants who faced difficulties, 12 (80%) encountered problems related to touch, 4 (26.67%) tried to perform an unsupported gesture (namely, swiping or moving a popup), and 1 (6.67%) faced a problem because the printed map was outside the camera's recognition area; since these counts sum to 17, at least one participant faced more than one type of difficulty. A qualitative analysis of the observers' notes suggested that visitors seemed familiar with touch gestures, perhaps from prior experience with smartphones or tablets, and had no specific problems extending the use of gestures from mobile devices to printed matter. However, such devices respond considerably more quickly than gestures detected through computer vision, a technology that was not visible to participants. As a result, the system did not match their expected response time, leading to repeated touches, which were recorded as a difficulty.
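The percentages reported in this subsection follow directly from the raw counts. The small helper below makes the arithmetic explicit, including the fact that the three difficulty categories overlap: 12 + 4 + 1 = 17 exceeds the 15 participants, leaving 2 surplus category assignments. The variable names are illustrative only.

```python
def pct(part: int, whole: int) -> float:
    """Percentage rounded to two decimals, as reported in the text."""
    return round(100 * part / whole, 2)


# Counts reported for the 15 participants who faced difficulties.
faced = 15
difficulties = {"touch": 12, "unsupported_gesture": 4, "map_outside_camera_area": 1}
shares = {kind: pct(count, faced) for kind, count in difficulties.items()}

# The categories are not mutually exclusive: the counts exceed the participants,
# so at least one participant falls into more than one category.
surplus = sum(difficulties.values()) - faced

# Reading instructions: 7 of 8 understood the system, 1 of 8 needed assistance.
instruction_shares = (pct(7, 8), pct(1, 8))
```

Here `shares` evaluates to 80.0, 26.67 and 6.67 for the three categories, `surplus` to 2, and `instruction_shares` to (87.5, 12.5), matching the figures in the text.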