Abstract

We consider a product of 2 × 2 random matrices which appears in the physics literature in the analysis of some 1D disordered models. These matrices depend on a parameter 𝜖 > 0 and on a positive random variable Z. Derrida and Hilhorst (J. Phys. 16(12), 2641, 1983, § 3) conjecture that the corresponding characteristic exponent has a regular expansion with respect to 𝜖 up to, but not beyond, an order determined by the distribution of Z. We give a rigorous proof of that statement. We also study the singular term which breaks that expansion.


Notes

We choose \(\{0,1\}^{\mathbf {T}_{N}}\) instead of \(\{-1,1\}^{\mathbf {T}_{N}}\) to simplify the formulas. The two conventions are equivalent through the change of variables σ ↦ 2σ − 1.

References

Bougerol, P., Lacroix, J.: Products of Random Matrices with Applications to Schrödinger Operators. Progr. Probab., vol. 8. Birkhäuser, Boston (1985)

de Calan, C., Luck, J.M., Nieuwenhuizen, T.M., Petritis, D.: On the distribution of a random variable occurring in 1D disordered systems. J. Phys. A 18(3), 501 (1985)

Campanino, M., Klein, A.: Anomalies in the one-dimensional Anderson model at weak disorder. Comm. Math. Phys. 130(3), 441–456 (1990)

Comets, F., Giacomin, G., Greenblatt, R.L.: Continuum limit of random matrix products in statistical mechanics of disordered systems. Comm. Math. Phys. (2019, to appear). arXiv:1712.09373

Crisanti, A., Paladin, G., Vulpiani, A.: Products of Random Matrices in Statistical Physics. Springer Series in Solid-State Sciences, vol. 104. Springer, Berlin (1993)

Derrida, B., Hilhorst, H.: Singular behaviour of certain infinite products of random 2×2 matrices. J. Phys. 16(12), 2641 (1983)

Derrida, B., Zanon, N.: Weak disorder expansion of Liapunov exponents in a degenerate case. J. Stat. Phys. 50, 509–528 (1988)

Dyson, F.J.: The dynamics of a disordered linear chain. Phys. Rev. 92, 1331–1338 (1953)

Furstenberg, H.: Noncommuting random products. Trans. Amer. Math. Soc. 108, 377–428 (1963)

Furstenberg, H., Kesten, H.: Products of random matrices. Ann. Math. Statist. 31, 457–469 (1960)

Furstenberg, H., Kifer, Y.: Random matrix products and measures on projective spaces. Israel J. Math. 46, 12–32 (1983)

Genovese, G., Giacomin, G., Greenblatt, R.L.: Singular behavior of the leading Lyapunov exponent of a product of random 2 × 2 matrices. Comm. Math. Phys. 351(3), 923–958 (2017)

Grabsch, A., Texier, C., Tourigny, Y.: One-dimensional disordered quantum mechanics and Sinai diffusion with random absorbers. J. Stat. Phys. 155(2), 237–276 (2014)

Hennion, H.: Limit theorems for products of positive random matrices. Ann. Probab. 25(4), 1545–1587 (1997)

Kevei, P.: A note on the Kesten–Grincevičius–Goldie theorem. Electron. Commun. Probab. 21 (2016)

Kevei, P.: Implicit renewal theory in the arithmetic case. J. Appl. Probab. 54(3), 732–749 (2017). https://doi.org/10.1017/jpr.2017.31

Matsuda, H., Ishii, K.: Localization of Normal Modes and Energy Transport in the Disordered Harmonic Chain. Progr. Theoret. Phys. Suppl. 45, 56–86 (1970)

McCoy, B.M., Wu, T.T.: Theory of a two-dimensional Ising model with random impurities. I. Thermodynamics. Phys. Rev. 176, 631–643 (1968)

Nieuwenhuizen, T.M., Luck, J.M.: Exactly soluble random field Ising models in one dimension. J. Phys. A 19(7), 1207 (1986)

Oseledec, V.I.: A multiplicative ergodic theorem: Lyapunov characteristic exponents for dynamical systems. Trans. Moscow Math. Soc. 19, 197–231 (1968)

Ruelle, D.: Analyticity properties of the characteristic exponents of random matrix products. Adv. Math. 32, 68–80 (1979)

Sadel, C., Schulz-Baldes, H.: Random Lie group actions on compact manifolds: a perturbative analysis. Ann. Probab. 38, 2224–2257 (2010)

Schmidt, H.: Disordered one-dimensional crystals. Phys. Rev. 105, 425–441 (1957)

Shankar, R., Murthy, G.: Nearest-neighbor frustrated random-bond model in d = 2: Some exact results. Phys. Rev. B 36, 536–545 (1987)

Viana, M.: Lectures on Lyapunov Exponents. Cambridge Studies in Advanced Mathematics. Cambridge University Press (2014)

Acknowledgements

This work is part of my PhD thesis supervised by Giambattista Giacomin. I would like to thank him for giving me the opportunity to work on this subject and for fruitful discussions.

Appendix: Generalization to Higher Dimension

The techniques developed in the previous sections are robust enough to be used in more general settings. We apply them to a square matrix of size d + 1 which is a perturbation of a matrix like Diag(1, Z), and which therefore still has a preferred direction. Since the proofs differ only slightly from those of the previous sections, they are only sketched in this appendix: we point out the arguments that must be adapted and omit many details.

We now consider the (d + 1) ×(d + 1) matrix

where L𝜖 and C𝜖 are random vectors of size d, and N𝜖 is a random d × d matrix. We are still interested in the Lyapunov exponent, defined by the limit

$$ \mathcal{L}(\epsilon) := \lim_{n \to \infty} \frac{1}{n} \log \| M_{n,\epsilon} \cdots M_{1,\epsilon} \|, $$

where \((M_{k,\epsilon })_{k \geqslant 1}\) are iid copies of M𝜖. This limit exists almost surely and is deterministic (see again [10]) as soon as E[log+∥M𝜖∥] < + ∞ for every 𝜖 > 0.
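As a numerical illustration of this definition (not part of the original argument), the Python sketch below estimates \(\mathcal{L}(\epsilon)\) by Monte Carlo for any sampler of iid matrices with non-negative entries. The sampler argument and the 2 × 2 law in `example_matrix` are hypothetical choices made for illustration. The product is applied to a positive vector of ℓ¹ norm 1 and renormalized at each step; for matrices with non-negative entries, \(\log\|M_{n}\cdots M_{1}v\|\) and \(\log\|M_{n}\cdots M_{1}\|\) then differ by a bounded amount, so both give the same limit after division by n.

```python
import math
import random


def matvec(m, v):
    """Product of a square matrix (list of rows) with a column vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lyapunov_estimate(sample_matrix, n_steps, dim, rng):
    """Estimate lim (1/n) log ||M_n ... M_1|| for iid non-negative matrices.

    The product is applied to the positive vector (1/dim, ..., 1/dim), whose
    l1 norm is 1, and the vector is renormalised at every step; the logs of
    the normalising factors add up to log ||M_n ... M_1 v||_1.
    """
    v = [1.0 / dim] * dim
    log_norm = 0.0
    for _ in range(n_steps):
        v = matvec(sample_matrix(rng), v)
        s = sum(v)  # l1 norm, since all entries are non-negative
        log_norm += math.log(s)
        v = [x / s for x in v]
    return log_norm / n_steps


# Hypothetical example law (illustration only): [[1, eps], [eps * Z, Z]]
# with Z uniform on (0, 2).
def example_matrix(rng, eps=0.1):
    z = rng.uniform(0.0, 2.0)
    return [[1.0, eps], [eps * z, z]]


if __name__ == "__main__":
    print(lyapunov_estimate(example_matrix, 20_000, 2, random.Random(0)))
```

For a deterministic matrix such as Diag(2, 1/2) the estimate converges to log 2, which is a quick sanity check of the renormalization.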

In this section we derive a regular expansion for \(\mathcal {L}(\epsilon )\), analogous to the expansion provided by Proposition 3.1 in the previous setting; however, no lower bound on the error will be given here. We start by deriving a formula similar to “\(\mathcal {L}(\epsilon )= \mathrm {E}[\log (1+\epsilon ^{2} X_{\epsilon })]\)” (Lemma 14).

Throughout this section, ∥⋅∥ denotes a given norm on \(\mathbf {R}^{d}\) or \(\mathbf {R}^{d+1}\), as well as the induced operator norm on \(\mathscr{M}_{d}(\mathbf {R})\) or \(\mathscr{M}_{d+1}(\mathbf {R})\). Moreover, for x, y ∈ \(\mathbf {R}^{d}\), we write x ⩽ y if the inequality holds coordinatewise. Similarly, the stochastic dominance \(\preccurlyeq \) is extended to random vectors: \(X \preccurlyeq Y\) means that there exist a copy \(\tilde X\) of X and a copy \(\tilde Y\) of Y satisfying \(\tilde X \leqslant \tilde Y\) almost surely (coordinatewise).

We now state the assumptions under which we work in this section. Under these assumptions, the condition E[log+∥M𝜖∥] < + ∞ is fulfilled, so the Lyapunov exponent is well defined.

Assumptions 2

We assume that the following hold for every 𝜖 ∈ (0, 𝜖0).

(a) The random matrix M𝜖 has non-negative entries. Moreover, almost surely, there exists \(N\geqslant 1\) such that the product \(M_{N,\epsilon}\cdots M_{1,\epsilon}\) has positive entries.

(b) There exists δ𝜖 > 0 such that \(\mathrm {E}[\| N_{\epsilon } \|^{\delta _{\epsilon }}]<1\) and \(\mathrm {E}[\|C_{\epsilon }\|^{\delta _{\epsilon }}]<+\infty \).

(c) E[log+∥L𝜖∥] < + ∞.

Before deriving the formula for the Lyapunov exponent, we introduce the random vector Y𝜖, which plays the role of X0 in the new setting (except that it now depends on 𝜖). It will be used only through stochastic dominance.

Lemma 13

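Fix 𝜖 ∈ (0, 𝜖0) and let \((N_{\epsilon,k}, C_{\epsilon,k})_{k \geqslant 1}\) be iid copies of (N𝜖, C𝜖). The series

$$ Y_{\epsilon} := \sum_{k \geqslant 1} N_{\epsilon,1} \cdots N_{\epsilon,k-1}\, C_{\epsilon,k} $$(115)

converges almost surely. Moreover E[log+∥Y𝜖∥] is finite. If, in addition,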

then \(\mathrm{E}[\|Y_{\epsilon}\|^{\beta}] = O(1)\) as 𝜖 goes to 0.

Proof

Since all the entries of M𝜖 are non-negative, the sum (115) is always defined. A priori, some of its entries could be + ∞. Denote by Y𝜖 the random vector defined by this infinite sum. Using Minkowski’s inequality or another convexity inequality as for Lemma 1, one proves, under Assumption 2 (b), that \(\mathrm {E}[\|Y_{\epsilon }\|^{\delta _{\epsilon }}]\) is finite. So Y𝜖’s entries are almost surely finite. With the same technique, we prove the rest of the lemma. □
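The series (115) can be explored numerically. The Python sketch below returns its partial sums for a user-supplied sampler of iid pairs (N𝜖, C𝜖); the sampler and the law in `example_NC` are hypothetical, chosen so that every column sum of N, hence its ℓ¹ operator norm, is at most 0.8 < 1, which makes Assumption 2 (b) hold for every δ. Since all terms have non-negative entries, the partial sums increase coordinatewise, and the tail after k terms is bounded by a geometric series.

```python
import random


def matvec(m, v):
    """Matrix (list of rows) times column vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def matmul(a, b):
    """Product of two square matrices given as lists of rows."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def partial_sums_Y(sample_NC, n_terms, rng):
    """Partial sums of Y = sum_{k>=1} N_1 ... N_{k-1} C_k.

    sample_NC(rng) returns one iid copy (N_k, C_k); the k-th term uses the
    product of N_1, ..., N_{k-1} and the vector C_k of the k-th copy.
    """
    sums = []
    prod = None   # running product N_1 ... N_{k-1}
    total = None  # current partial sum
    for _ in range(n_terms):
        n_k, c_k = sample_NC(rng)
        if prod is None:
            d = len(c_k)
            prod = [[1.0 if i == j else 0.0 for j in range(d)] for i in range(d)]
            total = [0.0] * d
        term = matvec(prod, c_k)            # N_1 ... N_{k-1} C_k
        total = [t + s for t, s in zip(total, term)]
        sums.append(total)
        prod = matmul(prod, n_k)            # extend to N_1 ... N_k
    return sums


# Hypothetical law with d = 2 (illustration only): entries of N uniform on
# [0, 0.4], entries of C uniform on [0, 1].
def example_NC(rng):
    n = [[rng.uniform(0.0, 0.4) for _ in range(2)] for _ in range(2)]
    c = [rng.uniform(0.0, 1.0) for _ in range(2)]
    return n, c


if __name__ == "__main__":
    print(partial_sums_Y(example_NC, 60, random.Random(0))[-1])
```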

The next lemma provides the desired formula for \(\mathcal {L}(\epsilon )\).

Lemma 14

There exists a random vector X𝜖 ∈ \(\mathbf {R}^{d}\), with non-negative entries, satisfying

or equivalently,

where C𝜖, N𝜖 and L𝜖 are the blocks of the random matrix M𝜖, independent of X𝜖. One has \(X_{\epsilon } \preccurlyeq Y_{\epsilon }\). Moreover the Lyapunov exponent can be written as

And for every \(\boldsymbol x, \boldsymbol y \in \mathbf {R}_{+}^{d+1}\),

Proof

The method is the same as in the proof of Lemma 2 for 2 × 2 matrices. We fix iid copies \((M_{\epsilon,n})_{n \geqslant 1}\) of M𝜖 and set \(x_{0} = 0_{\mathbf {R}^{d}}\). Then we define inductively, for \(n \geqslant 0\), the random variables

Observe that since all the vectors have non-negative entries, one can write, coordinatewise,

So, by an easy induction, \(x_{n} \preccurlyeq Y_{\epsilon }\) for every \(n \geqslant 0\). The end of the proof is the same as for Lemma 2, and we do not repeat the details here. We only note that we do not claim uniqueness of a non-negative solution to (118), and that Assumption 2 (a) is a sufficient condition for H. Hennion’s result to apply. □
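The domination step can be checked numerically. The Python sketch below assumes, for illustration only, the block layout \(M_\epsilon = \begin{pmatrix} 1 & L_\epsilon^{\top} \\ C_\epsilon & N_\epsilon \end{pmatrix}\) with any 𝜖-dependence absorbed into the blocks (this layout is an assumption of the sketch), so that the direction of \(M_\epsilon (1, \boldsymbol x)\) follows the recursion \(\boldsymbol x \mapsto (C_\epsilon + N_\epsilon \boldsymbol x)/(1 + \langle L_\epsilon, \boldsymbol x \rangle)\). Driven by the same random blocks, the linear recursion y ↦ C + N y, whose iterates have the law of the partial sums of (115), dominates it coordinatewise. The sketch also checks that the first coordinate of \(M_{n}\cdots M_{1}e_{1}\) is the product of the factors \(1 + \langle L_{k}, \boldsymbol x_{k-1}\rangle\), which is the mechanism behind a formula of the type quoted before Lemma 14. The law in `example_LCN` is hypothetical.

```python
import math
import random


def projective_step(L, C, N, x):
    """x -> (C + N x) / (1 + <L, x>); also returns the factor 1 + <L, x>."""
    factor = 1.0 + sum(l * xi for l, xi in zip(L, x))
    num = [c + sum(a * xj for a, xj in zip(row, x)) for c, row in zip(C, N)]
    return [v / factor for v in num], factor


def linear_step(C, N, y):
    """y -> C + N y: the same recursion with the denominator dropped."""
    return [c + sum(a * yj for a, yj in zip(row, y)) for c, row in zip(C, N)]


def block_matrix(L, C, N):
    """Assumed layout [[1, L^T], [C, N]] of the (d+1) x (d+1) matrix."""
    return [[1.0] + list(L)] + [[c] + list(row) for c, row in zip(C, N)]


def run_coupled(sample_LCN, n_steps, d, rng):
    """Run x_n, y_n and v_n = M_n ... M_1 e_1 with the same random blocks."""
    x, y = [0.0] * d, [0.0] * d
    v = [1.0] + [0.0] * d
    log_factors = 0.0   # sum over k of log(1 + <L_k, x_{k-1}>)
    dominated = True    # x_n <= y_n coordinatewise at every step so far?
    for _ in range(n_steps):
        L, C, N = sample_LCN(rng)
        M = block_matrix(L, C, N)
        v = [sum(a * vi for a, vi in zip(row, v)) for row in M]
        x, factor = projective_step(L, C, N, x)
        y = linear_step(C, N, y)
        log_factors += math.log(factor)
        dominated = dominated and all(a <= b + 1e-12 for a, b in zip(x, y))
    return x, y, v, log_factors, dominated


# Hypothetical law with d = 2 (illustration only).
def example_LCN(rng):
    L = [rng.uniform(0.0, 1.0) for _ in range(2)]
    C = [rng.uniform(0.0, 1.0) for _ in range(2)]
    N = [[rng.uniform(0.0, 0.4) for _ in range(2)] for _ in range(2)]
    return L, C, N


if __name__ == "__main__":
    x, y, v, log_factors, dominated = run_coupled(example_LCN, 50, 2, random.Random(0))
    # log ||v_n||_1 = log_factors + log(1 + ||x_n||_1); the difference is ~0.
    print(dominated, math.log(sum(v)) - log_factors - math.log(1.0 + sum(x)))
```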

Stating our main result, and more precisely its premises, requires some multi-index notation, which we now introduce. The norm of a multi-index λ ∈ \(\mathbf {N}^{d}\) is denoted by |λ|:

$$ |\boldsymbol{\lambda}| := \lambda_{1} + \cdots + \lambda_{d}. $$

For every \(l \geqslant 0\), there are \(\binom { l+d-1 }{ d-1}\) multi-indices with norm l: this is the number of (weak) compositions of l into d non-negative integers. For a vector x ∈ \(\mathbf {R}^{d}\) and a multi-index λ ∈ \(\mathbf {N}^{d}\), we define the multi-index power

$$ \boldsymbol{x}^{\boldsymbol{\lambda}} := \prod_{i=1}^{d} x_{i}^{\lambda_{i}}. $$

Similarly, for a matrix \(A \in \mathscr{M}_{d}(\mathbf {R})\) and a multi-index \(\boldsymbol {\omega } \in \mathbf {N}^{d^{2}} \simeq \mathscr{M}_{d}(\mathbf {N})\), define

$$ A^{\boldsymbol{\omega}} := \prod_{i,j=1}^{d} A_{ij}^{\omega_{ij}}. $$

There should be no confusion with a standard matrix power since ω is a multi-index.
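The multi-index notation translates directly into code. In this Python sketch (the helper names are ours, chosen for illustration), `multi_indices(l, d)` lists the weak compositions of l into d parts, so its length is \(\binom{l+d-1}{d-1}\); `mi_power` and `mi_matrix_power` implement \(\boldsymbol x^{\boldsymbol\lambda}\) and \(A^{\boldsymbol\omega}\), the latter being an entrywise product and not a matrix power.

```python
from math import comb, prod


def multi_indices(l, d):
    """All multi-indices lam in N^d with |lam| = l (weak compositions of l)."""
    if d == 1:
        return [(l,)]
    return [(first,) + rest
            for first in range(l, -1, -1)
            for rest in multi_indices(l - first, d - 1)]


def mi_power(x, lam):
    """Multi-index power x^lam = prod_i x_i^{lam_i}."""
    return prod(xi ** li for xi, li in zip(x, lam))


def mi_matrix_power(A, omega):
    """A^omega = prod_{i,j} A_ij^{omega_ij} (entrywise, not a matrix power)."""
    return prod(a ** w
                for row_a, row_w in zip(A, omega)
                for a, w in zip(row_a, row_w))


if __name__ == "__main__":
    # Sanity check: the number of multi-indices of norm l in N^d.
    for l in range(5):
        for d in range(1, 4):
            assert len(multi_indices(l, d)) == comb(l + d - 1, d - 1)
    print(multi_indices(2, 3))
```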

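For \(l \geqslant 0\), consider the square matrix G(l) of size \(\binom { l+d-1 }{ d-1}\), indexed by the multi-indices of norm l, whose elements are

$$ G(l)_{\boldsymbol{\lambda},\boldsymbol{\mu}} := \sum_{\substack{\boldsymbol{\omega} \in \mathscr{M}_{d}(\mathbf{N}) \\ \sum_{j} \omega_{ij} = \lambda_{i},\ \sum_{i} \omega_{ij} = \mu_{j}}} \left( \prod_{i=1}^{d} \frac{\lambda_{i}!}{\prod_{j=1}^{d} \omega_{ij}!} \right) \lim_{\epsilon \to 0} \mathrm{E}\left[ N_{\epsilon}^{\boldsymbol{\omega}} \right], \qquad |\boldsymbol{\lambda}| = |\boldsymbol{\mu}| = l. $$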

Note that all the multi-indices ω in the sum have norm |ω| = l. The matrix G(l) plays the role of \(\mathrm{E}[Z^{l}]\) in this generalized context. Of course, these matrices, whose definition requires the existence of \(\lim _{\epsilon \to 0}\mathrm {E}\left [ N_{\epsilon }^{\boldsymbol {\omega }}\right ]\), are not always defined.
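To make the role of G(l) concrete, here is a Python sketch under a stated assumption: we take G(l) to be the matrix, indexed by multi-indices of norm l, such that \(\mathrm{E}[(N\boldsymbol x)^{\boldsymbol\lambda}] = \sum_{|\boldsymbol\mu| = l} G(l)_{\boldsymbol\lambda,\boldsymbol\mu}\, \boldsymbol x^{\boldsymbol\mu}\) once each \(\mathrm{E}[N^{\boldsymbol\omega}]\) is replaced by its limit. Expanding each coordinate \((N\boldsymbol x)_{i}^{\lambda_{i}}\) by the multinomial formula, every ω with row sums λ and column sums μ contributes \(\prod_i \lambda_i! / \prod_{i,j} \omega_{ij}!\) times \(\lim \mathrm{E}[N_\epsilon^{\boldsymbol\omega}]\). The hypothetical argument `moment` supplies these limits; for a deterministic N it is simply \(N^{\boldsymbol\omega}\), which lets the identity be checked exactly. For l = 1 the sketch returns the matrix \((\lim \mathrm{E}[N_{ij}])\), and for d = 1 it reduces to the scalar \(\lim \mathrm{E}[N^{l}]\), the analogue of \(\mathrm{E}[Z^{l}]\).

```python
from itertools import product
from math import factorial, prod


def multi_indices(l, d):
    """All multi-indices in N^d with norm l."""
    if d == 1:
        return [(l,)]
    return [(first,) + rest
            for first in range(l, -1, -1)
            for rest in multi_indices(l - first, d - 1)]


def g_matrix(l, d, moment):
    """Sketch of G(l); moment(omega) stands for lim E[N^omega].

    omega is passed as a tuple of d rows; row i is a weak composition of
    lam_i, and the column sums of omega give the column index mu.
    """
    index = multi_indices(l, d)
    pos = {mu: k for k, mu in enumerate(index)}
    G = [[0.0] * len(index) for _ in index]
    for a, lam in enumerate(index):
        for omega in product(*(multi_indices(li, d) for li in lam)):
            mu = tuple(sum(row[j] for row in omega) for j in range(d))
            # product over rows of the multinomial coefficients lam_i! / prod_j omega_ij!
            coef = (prod(factorial(li) for li in lam)
                    // prod(factorial(w) for row in omega for w in row))
            G[a][pos[mu]] += coef * moment(omega)
    return index, G


def deterministic_moment(N):
    """lim E[N^omega] for a deterministic matrix N: simply N^omega."""
    return lambda omega: prod(N[i][j] ** omega[i][j]
                              for i in range(len(N)) for j in range(len(N)))
```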

We have set enough notations to state the generalization of Proposition 3.1, giving a regular expansion of the Lyapunov exponent \(\mathcal {L}(\epsilon )\).

Proposition A.1

Fix \(K \geqslant 0\) and β ∈ (K, K + 1]. Suppose that

1. For all multi-indices λ, μ ∈ \(\mathbf {N}^{d}\) and \(\boldsymbol {\omega } \in \mathbf {N}^{d^{2}}\) such that l = |λ| + |μ| + |ω| ⩽ K, \(\mathrm {E}[L_{\epsilon }^{\boldsymbol { \lambda }} C_{\epsilon }^{\boldsymbol {\mu }} N_{\epsilon }^{\boldsymbol { \omega }}]\) is finite and admits a regular expansion, as 𝜖 goes to 0, up to the order 2(K − l):

$$ \mathrm{E}[L_{\epsilon}^{\boldsymbol{ \lambda}} C_{\epsilon}^{\boldsymbol{\mu}} N_{\epsilon}^{\boldsymbol{ \omega}}] = \sum\limits_{r=0}^{2(K-l)} c_{\boldsymbol{\lambda},\boldsymbol{\mu},\boldsymbol{\omega},r} \epsilon^{r} + O(\epsilon^{2(\beta-l)}); $$(127)

2. For all 1 ⩽ l ⩽ K, the matrix I − G(l) is invertible;

3. \(\limsup _{\epsilon \to 0} \mathrm {E}[\|L_{\epsilon }\|^{\beta }]\) is finite.

Then there exist real coefficients \(q_{2}, \ldots, q_{2K}\) such that, as 𝜖 goes to 0,

Remark 11

For Proposition A.1 to be usable, one needs to control \(\mathrm{E}[\|X_{\epsilon}\|^{\beta}]\). By Lemmas 13 and 14, \(\mathrm{E}[\|X_{\epsilon}\|^{\beta}] = O(1)\) as 𝜖 goes to 0 as soon as (116) holds.

Remark 12

One may be surprised that the upper bound involves \(\mathrm{E}[1 + \|X_{\epsilon}\|^{\beta}]\) instead of \(\mathrm{E}[\|X_{\epsilon}\|^{\beta}]\). Such caution was not necessary in the previous context, since the latter was bounded from below as 𝜖 goes to 0. Here, a priori, \(\mathrm{E}[\|X_{\epsilon}\|^{\beta}]\) could vanish as 𝜖 goes to 0.

Remark 13

The existence of G(l), for l ⩽ K, is ensured by the assumption (127), which gives \(\lim _{\epsilon \to 0} \mathrm {E}[N_{\epsilon }^{\boldsymbol {\omega }}] = c_{\boldsymbol {0}, \boldsymbol {0}, \boldsymbol {\omega }, 0}\). The invertibility of I − G(l) is the counterpart of the assumption “\(\mathrm{E}[Z^{l}] < 1\)” in Proposition 3.1.

Proof

The same proof as for Proposition 3.1 works: one expands the logarithm:

where x(r) stands for the rth coordinate of x. Note that

and that for any \(r_{1}, \ldots, r_{k}\) there exists λ ∈ \(\mathbf {N}^{d}\) with norm k such that \( \mathrm {E}\left [ {\prod }_{i=1}^{k} X^{(r_{i})}_{\epsilon } \right ] = \mathrm {E}[ X_{\epsilon }^{\boldsymbol {\lambda }}]. \) Thus we need expansions of the moments of X𝜖; they are given by the next lemma. Substituting the regular expansion (129) of Lemma 15 into the expansion (131) of \(\mathcal {L}(\epsilon )\) completes the proof of Proposition A.1. □
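The grouping of the indices \(r_{1}, \ldots, r_{k}\) into a multi-index can be made explicit. The Python sketch below (hypothetical helper names, 0-based coordinates) counts, for each λ with |λ| = k, how many tuples \((r_{1}, \ldots, r_{k})\) produce it; the count is the multinomial coefficient \(k!/\boldsymbol\lambda!\), where \(\boldsymbol\lambda! := \lambda_{1}!\cdots\lambda_{d}!\). This gives, for deterministic vectors ℓ and x, the identity \(\langle \ell, x\rangle^{k} = \sum_{|\boldsymbol\lambda| = k} \frac{k!}{\boldsymbol\lambda!}\, \ell^{\boldsymbol\lambda} x^{\boldsymbol\lambda}\); when a random row vector is independent of X𝜖, taking expectations factorizes each term into a moment of that vector times \(\mathrm{E}[X_\epsilon^{\boldsymbol\lambda}]\).

```python
from collections import Counter
from itertools import product
from math import factorial, prod


def group_by_multi_index(k, d):
    """Count the tuples (r_1, ..., r_k) in {0, ..., d-1}^k giving each lambda.

    lambda_j is the number of i with r_i = j, so that the product of the
    coordinates x[r_1] ... x[r_k] equals x^lambda, and |lambda| = k.
    """
    counts = Counter()
    for rs in product(range(d), repeat=k):
        counts[tuple(rs.count(j) for j in range(d))] += 1
    return counts


def multinomial(lam):
    """k! / (lam_1! ... lam_d!) with k = |lam|."""
    return factorial(sum(lam)) // prod(factorial(x) for x in lam)


def power_of_inner_product(l, x, k):
    """<l, x>^k computed as a sum over |lam| = k of count(lam) l^lam x^lam."""
    counts = group_by_multi_index(k, len(x))
    return sum(c * prod(a ** m for a, m in zip(l, lam))
               * prod(b ** m for b, m in zip(x, lam))
               for lam, c in counts.items())
```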

Lemma 15

Under the premises of Proposition A.1, for all l ⩽ K and λ ∈ \(\mathbf {N}^{d}\) such that |λ| = l, the following expansion holds, for some real coefficients \((g_{\boldsymbol{\lambda},k})\):

Proof (Sketch of proof of Lemma 15)

We follow the proof of Lemma 4 and indicate how it must be adjusted. The only point that needs special attention is line (59), where the term \(\mathrm {E}[Z^{l}] \mathrm {E}[X_{\epsilon }^{l}]\) is isolated on the left-hand side. That line can be summarized as follows: we wrote

where (♢l) stands for all the terms in the expansion of \(\mathrm {E}[X_{\epsilon }^{l}]\) for which the induction hypothesis provided an expansion up to the required order. To be explicit,

Then we could conclude by writing

and applying the induction hypothesis. This is where the condition “\(\mathrm{E}[Z^{l}] < 1\)” was used (in fact \(\mathrm{E}[Z^{l}] \neq 1\) was enough), and this is where the invertibility of I − G(l) will be used.

In our generalized setting, we still carry out an induction on (n, l = |λ|), ordered lexicographically. In the inductive step there are many multi-indices with a given norm l; they are handled simultaneously by writing a joint system satisfied by all the moments \(\mathrm {E}[X_{\epsilon }^{\boldsymbol {\lambda }}]\) with |λ| = l. To this end, use the identity

Then expand the denominator

After some manipulations, that moment takes the form

where, again, (♢λ) stands for all the terms in the expansion of \(\mathrm {E}[X_{\epsilon }^{\boldsymbol {\lambda }}]\) for which the induction hypothesis and the premise (127) of Proposition A.1 provide an expansion up to the required order. Then, since I − G(l) is invertible, one can solve the joint system satisfied by the family \((\mathrm {E}[X_{\epsilon }^{\boldsymbol {\lambda }}])_{|\boldsymbol{\lambda}| = l}\):

That concludes the proof of the induction step and thus the proof of the lemma. □
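At the lowest order l = 1 the joint system is a d × d linear system, which can be illustrated in a simplified setting. The Python sketch below is a hypothetical toy case, not the induction of the proof: it takes the relation X = C + N X in law, with (C, N) independent of X and the denominator dropped, so that the first moments \(m_i = \mathrm{E}[X^{(i)}]\) solve (I − E[N]) m = E[C]; here the matrix E[N] plays the part of G(1). The solution is compared with the truncated series \(\sum_{k \geqslant 0} \mathrm{E}[N]^{k}\,\mathrm{E}[C]\), which is the mean of the series (115) when that series converges in mean. The linear solve raises an error when the system matrix is numerically singular, mirroring the invertibility premise.

```python
def solve(A, b):
    """Gaussian elimination with partial pivoting for a small system A m = b."""
    n = len(b)
    M = [list(row) + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[piv][col]) < 1e-14:
            raise ValueError("system matrix is (numerically) singular")
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    m = [0.0] * n
    for r in range(n - 1, -1, -1):
        m[r] = (M[r][n] - sum(M[r][c] * m[c] for c in range(r + 1, n))) / M[r][r]
    return m


def first_moments(EN, EC):
    """Solve (I - E[N]) m = E[C] for the first moments m of X = C + N X."""
    d = len(EC)
    A = [[(1.0 if i == j else 0.0) - EN[i][j] for j in range(d)] for i in range(d)]
    return solve(A, EC)


def neumann(EN, EC, n_terms):
    """Truncated sum_{k < n_terms} E[N]^k E[C]."""
    term, total = list(EC), list(EC)
    for _ in range(n_terms - 1):
        term = [sum(EN[i][j] * term[j] for j in range(len(term)))
                for i in range(len(term))]
        total = [t + s for t, s in zip(total, term)]
    return total
```

For example, with E[N] = [[0.2, 0.1], [0.3, 0.4]] and E[C] = [1, 0.5], the system gives m = (13/9, 14/9), and the truncated series agrees with it.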

Remark 14

The same methods as in Section 4 can produce the lower bound on the error

as long as (127) holds with β = K + 1.

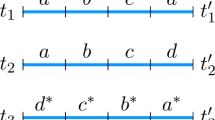

Application to a 1D Ising Model

The product of random matrices considered in the first sections appeared in [6] to express the free energy of the nearest-neighbour Ising model on the line with an inhomogeneous magnetic field. The generalization considered in this appendix allows finite-range interactions to be included. More precisely, consider the Ising model on \(\mathbf{T}_{N} := \mathbf{Z}/N\mathbf{Z}\), with homogeneous interactions up to distance d and an inhomogeneous magnetic field \((h_{k})\). It is the spin model with configurations\(^{1}\) \({\underline {\sigma } \in \{0,1\}^{\mathbf {T}_{N}}}\) whose Hamiltonian is

The magnetic field \((h_{k})_{k \in \mathbf {T}_{N}}\) is assumed to be iid. By a transfer-matrix approach, the free energy in the thermodynamic limit can be expressed through a product of random matrices:

where \(A_{n}\) is a \(2^{d} \times 2^{d}\) sparse matrix (two non-zero entries in each row and each column) whose entries are as follows. If \(\underline {\tau },\underline {\upsilon } \in \{0,1\}^{d}\) represent the partial configuration \((\sigma_{n}, \ldots, \sigma_{n+d-1})\) and its shift \((\sigma_{n+1}, \ldots, \sigma_{n+d})\), then

One can check that Assumption 2 (a) holds with N = d. Proposition A.1 provides an expansion of the free energy f(T) when the coupling constants \(\alpha_{l}\) are very large. Set \(Z_{n} = \exp(-h_{n}/T)\) and \(\epsilon_{l} = \exp(-\alpha_{l}/T)\) for every l ⩽ d; the parameters \(\epsilon_{l}\) vanish as the coupling constants \(\alpha_{l}\) become very large. Writing the configurations \(\underline {\tau },\underline {\upsilon }\) in lexicographic order, \(A_{n}\) is then a random perturbation of Diag(1, 0, …, 0, \(Z_{n}\)). Thus, Proposition A.1 yields

as soon as \(\mathrm{E}[Z^{\beta}] < 1\) (note that β is not the inverse temperature here).
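The claim that Assumption 2 (a) holds with N = d can be checked on the sparsity pattern alone. Assuming, as the description of \(A_{n}\) suggests, that \(A_{n}(\underline\tau, \underline\upsilon)\) is positive exactly when \(\underline\upsilon\) is the shift of \(\underline\tau\), the pattern is the adjacency matrix of a binary de Bruijn graph. The Python sketch below builds it in lexicographic order and finds the first power with positive entries: there is exactly one way to go from \(\underline\tau\) to \(\underline\upsilon\) in d shifts, so the d-th power has all entries equal to 1, while the (d − 1)-th power still has zeros. The function names are hypothetical.

```python
from itertools import product


def shift_pattern(d):
    """0/1 matrix B indexed by {0,1}^d in lexicographic order, with
    B[tau][ups] = 1 exactly when ups is the shift of tau, i.e.
    (ups_1, ..., ups_{d-1}) == (tau_2, ..., tau_d)."""
    words = list(product((0, 1), repeat=d))
    return [[1 if ups[:-1] == tau[1:] else 0 for ups in words] for tau in words]


def matmul(a, b):
    """Product of two square matrices given as lists of rows."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def first_positive_power(B, max_power=64):
    """Smallest n >= 1 such that B^n has positive entries (None if not found)."""
    P = B
    for n in range(1, max_power + 1):
        if all(x > 0 for row in P for x in row):
            return n
        P = matmul(P, B)
    return None
```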

Remark 15

Similarly, the results apply for an Ising model on a strip of finite width s (i.e. [N] × [s]), or a cylinder ([N] × Z/sZ) with an inhomogeneous magnetic field and finite-range interactions, with free, fixed or periodic boundary conditions.

Cite this article

Havret, B. Regular Expansion for the Characteristic Exponent of a Product of 2 × 2 Random Matrices. Math Phys Anal Geom 22, 15 (2019). https://doi.org/10.1007/s11040-019-9312-x
