Abstract

This work introduces and compares approaches for estimating rare-event probabilities related to the number of edges in the random geometric graph on a Poisson point process. In the one-dimensional setting, we derive closed-form expressions for a variety of conditional probabilities related to the number of edges in the random geometric graph and develop conditional Monte Carlo algorithms for estimating rare-event probabilities on this basis. We prove rigorously a reduction in variance when compared to the crude Monte Carlo estimators and illustrate the magnitude of the improvements in a simulation study. In higher dimensions, we use conditional Monte Carlo to remove the fluctuations in the estimator coming from the randomness in the Poisson number of nodes. Finally, building on conceptual insights from large-deviations theory, we illustrate that importance sampling using a Gibbsian point process can further substantially reduce the estimation variance.


1 Introduction

In this paper, we focus on rare events associated with the number of edges in the Gilbert graph G(X) on a homogeneous Poisson point process \(X = \{X_i\}_{i \ge 1}\) with intensity λ > 0 in \(\mathbb {R}^{d}\), for d ≥ 1. That is, the nodes of G(X) are the points of X and there is an edge between \(X_i, X_j \in X\) if \(\|X_i - X_j\| \le 1\), where the upper bound 1 is the threshold of the Gilbert graph and ∥⋅∥ denotes the Euclidean norm. Our goal is to analyze the probability of the rare event that the number of edges in a bounded sampling window \(W \subset \mathbb {R}^{d}\) deviates considerably from its expected value. More succinctly, we ask:

What is the probability that the Gilbert graph has at least twice its expected number of edges? What is the probability that the Gilbert graph has at most half its expected number of edges?

These seemingly innocuous questions have an intriguing connection to large deviations of heavy-tailed sums. Indeed, suppose that {Wi} is an equal-volume partition of W such that the diameter of each Wi is at most 1. Then, letting Zi := |X ∩ Wi| denote the number of Poisson points in Wi, the edges entirely inside Wi contribute Zi(Zi − 1)/2 to the total edge count. Since Zi follows a Poisson distribution, the tail probability \(\mathbb {P}(Z_{i} \ge n)\) is of the order \(\exp (-c n \log n)\) for some constant c > 0. Hence, the tails of \({Z_{i}^{2}}\) are of the order \(\exp (- c \sqrt n \log n/2)\) and therefore \({Z_{i}^{2}}\) does not have exponential moments. This is critical to note, because it means that the problem at hand is tightly related to large deviations of heavy-tailed sums, where large deviations typically come from extreme realizations of the largest summand (Asmussen and Kroese 2006; Blanchet and Glynn 2008; Rojas-Nandayapa 2013).
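The tail orders claimed above follow from Stirling's formula. A short derivation (ours, for fixed \(\nu := \lambda |W_i|\) and n → ∞) shows that one may in fact take c = 1 up to lower-order terms:

\[ \begin{aligned} \mathbb{P}(Z_i \ge n) &\ge \mathbb{P}(Z_i = n) = e^{-\nu}\,\frac{\nu^{n}}{n!},\\ \mathbb{P}(Z_i \ge n) &= e^{-\nu}\,\frac{\nu^{n}}{n!} \sum_{j \ge 0} \frac{n!\,\nu^{j}}{(n+j)!} \le e^{-\nu}\,\frac{\nu^{n}}{n!}\cdot\frac{1}{1 - \nu/(n+1)} \qquad (n + 1 > \nu),\\ \log n! &= n\log n - n + O(\log n) \quad\Longrightarrow\quad \mathbb{P}(Z_i \ge n) = \exp\bigl(-n\log n\,(1 + o(1))\bigr),\\ \mathbb{P}(Z_i^{2} \ge n) &= \mathbb{P}(Z_i \ge \sqrt{n}) = \exp\bigl(-\tfrac12\sqrt{n}\log n\,(1 + o(1))\bigr). \end{aligned} \]

Since \(\sqrt{n}\log n = o(n)\), we get \(e^{tn}\,\mathbb{P}(Z_i^2 \ge n) \to \infty\) for every t > 0, so that \(\mathbb{E}[\exp(t Z_i^2)] = \infty\).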

The analysis and simulations in this paper rely on two methods: conditional Monte Carlo (MC) and importance sampling; see Chapters 9.4 and 9.7 of Kroese et al. (2013), respectively. Importance sampling techniques have been in use for the analysis of rare events in the setting of the Erdős–Rényi graph (Bhamidi et al. 2015), which is different from the Gilbert graph considered here. Other than that, there has been very little literature on the topic. Note that the same analysis can be extended to Gilbert graphs with the threshold not equal to 1 by modifying the size of the window W and the intensity λ of the Poisson point process.
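For a threshold r > 0 in place of 1, the scaling argument reads as follows (the notation \(G_r\) for the Gilbert graph with threshold r is ours). By the mapping theorem, the map x ↦ x/r sends X to a homogeneous Poisson point process with intensity \(\lambda r^{d}\) and W to \(r^{-1}W\), while adjacency is preserved once the threshold is rescaled:

\[ \|X_i - X_j\| \le r \iff \bigl\|r^{-1}X_i - r^{-1}X_j\bigr\| \le 1, \qquad\text{hence}\qquad |G_r(X \cap W)| = \bigl|G\bigl(r^{-1}X \cap r^{-1}W\bigr)\bigr|. \]

Thus all results for threshold 1 apply with W replaced by \(r^{-1}W\) and λ replaced by \(\lambda r^{d}\).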

Seen in a larger context, our work is the first to provide specific simulation-based tools for the estimation of rare events in the Gilbert graph, which is the simplest example of a spatial random network. This is of particular relevance when taking into account the central place that such networks have in models in materials science and wireless networks (Baccelli and Błaszczyszyn 2009; Stenzel et al. 2014). When these models form the basis for applications in security-sensitive contexts, it becomes essential to provide estimates for rare-event probabilities. Indeed, when introducing a new technology, such as device-to-device networks, it is not enough to know that the system works well on average. Rather, the probabilities of catastrophic events must be estimated precisely enough to establish that they are negligible. A prototypical application scenario based on measurement data is outlined by Keeler et al. (2018).

We also mention that it would be possible to derive analytical approximations for rare-event probabilities, for instance by assuming that the quantity of interest is approximately Poisson or normally distributed. The appeal of such an approach would lie in its simplicity, as it only requires first- and second-moment information. However, in the analytical approximations, it is difficult to quantify the estimation error, whereas the simulation-based methods outlined in the present paper are unbiased, and therefore do not suffer from this weakness, provided the number of simulations is large enough.

The rest of the presentation is organized in three parts. First, in Section 2, we explore the potential of conditional Monte Carlo in a one-dimensional setting, where we can frequently derive explicit closed-form expressions. Surprisingly, when moving to the far end of the upper tails, it is possible to avoid simulation altogether, as we derive a fully analytic representation. Then, in Section 3, we move to higher dimensions. Here, we apply conditional MC to remove the randomness coming from the random number of nodes. Finally, in Section 4, we present a further refinement of the conditional MC estimator, by combining it with importance sampling using a Strauss-type Gibbs process. To ease notation, we henceforth identify the Gilbert graph with its edge set, so that |G(X ∩ W)| yields the number of edges in the Gilbert graph on X ∩ W.

2 Conditional MC in Dimension 1

In this section, we consider the one-dimensional setting. More precisely, we consider a line segment W = [0, w] as sampling window. In Section 2.1, we describe a specific conditional MC scheme that leads to estimators for the rare-event probability of the number of edges being small. In a simulation study in Section 2.2, we show that these new estimators are substantially better than a crude MC approach.

Then, Section 2.3 discusses rare events corresponding to the number of missing edges, i.e., the number of point pairs that are not connected by an edge. The analysis is motivated by the observation that the Erdős–Rényi graph with edge probability p ∈ [0,1] exhibits a striking duality with its complement. Specifically, the missing edges of this graph again form an Erdős–Rényi graph, but now with edge probability 1 − p. In Section 2.3, we point out that in the Gilbert graph such a duality is much more involved. Nevertheless, we show how to compute the probability of observing no missing edges or precisely one missing edge.

In this section, we assume that the points {Xi}i≥ 1 of the Poisson point process on \([0, \infty )\) are ordered according to their occurrence; that is, Xi ≤ Xj, whenever i ≤ j.

2.1 Few Edges

Henceforth, let
\[ E_{\le k} := \bigl\{|G(X \cap [0, w])| \le k\bigr\} \]
denote the event that the number of edges in the Gilbert graph on X ∩ [0, w] is at most k ≥ 0. For fixed k and large w, the probability
\[ p_{\le k} := \mathbb{P}(E_{\le k}) \]
becomes small, and we discuss how to use both the natural ordering on the real half-line and the independence property of the Poisson point process to derive a refined estimator.

We focus only on the cases k = 0,1. In principle, the methods could be extended to cover estimation of probabilities of the form p≤k for k ≥ 2. However, for large values of k the combinatorial analysis quickly becomes highly involved; see Remark 2 for more details.

2.1.1 No Edges

To begin with, let k = 0. That is, we analyze the probability that all vertices in X ∩ [0, w] are isolated in the sense that their vertex degree is 0. The key idea for approaching this probability is to note that E0 := E≤0 occurs if and only if X ∩ (X1, (X1 + 1) ∧ w] = ∅ and the Gilbert graph restricted to X ∩ [X1 + 1, w] does not contain edges; see Fig. 1. Here, we adhere to the convention that [a, b] = ∅ if a > b, and similarly for half-open intervals.

According to the Palm theory for one-dimensional Poisson point processes, the process
\[ X^{(1)} := (X - X_1) \cap [1, \infty) \]
again forms a homogeneous Poisson point process on [1, ∞), which is independent of X1; see Last and Penrose (2017, Theorem 7.2). In particular, writing
\[ \mathcal{F}_1 := \sigma\bigl(X_1, X^{(1)}\bigr) \]
for the σ-algebra generated by X1 and X(1) allows for a partial computation of p0 := p≤ 0 via conditional MC (Kroese et al. 2013, Chapter 9.4). More precisely, when computing \(\mathbb {P}(E_{0} | \mathcal {F}_{1})\) we explicitly throw away the information from the configuration of X inside the interval [X1, X1 + 1].

Theorem 1 (No edges)

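Suppose that w ≥ 1. Then,
\[ \mathbb{P}(E_0 \mid \mathcal{F}_1) = \exp\Bigl(-\lambda\,\bigl(((X_1 + 1) \wedge w - X_1) \vee 0\bigr)\Bigr)\, \mathbb{1}\bigl\{G\bigl(X^{(1)} \cap [1, w - X_1]\bigr) = \emptyset\bigr\}. \tag{1} \]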

Proof

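First, X1 is isolated if and only if there are no further vertices in the interval (X1, (X1 + 1) ∧ w]. Moreover, after conditioning on X1, the vertices to the right of X1 form a Poisson point process, so that the configuration in (X1, X1 + 1] is independent of X(1); see Last and Penrose (2017, Theorem 7.2). Therefore,
\[ \mathbb{P}(E_0 \mid \mathcal{F}_1) = \mathbb{P}\bigl(X \cap (X_1, (X_1 + 1) \wedge w] = \emptyset \bigm| X_1\bigr)\, \mathbb{1}\bigl\{G\bigl(X^{(1)} \cap [1, w - X_1]\bigr) = \emptyset\bigr\} = e^{-\lambda(((X_1 + 1) \wedge w - X_1) \vee 0)}\, \mathbb{1}\bigl\{G\bigl(X^{(1)} \cap [1, w - X_1]\bigr) = \emptyset\bigr\}, \]

as asserted. □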

By the Rao–Blackwell theorem (Billingsley 1995), Theorem 1 therefore makes the right-hand side of identity (1) an attractive candidate for estimating p0 via conditional Monte Carlo, which is valuable precisely because p0 is not available in closed form. Indeed, elementary properties of the conditional expectation imply that the conditional MC estimator \(\mathbb {P}(E_{0} | \mathcal {F}_{1})\) is unbiased and exhibits smaller variance than the crude MC estimator \(\mathbb{1}_{E_0}\).
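Spelled out, the comparison with crude MC is the law of total variance applied to the crude estimator \(\mathbb{1}_{E_0}\); the same one-line argument applies to the other conditional MC estimators below:

\[ \operatorname{Var}\bigl(\mathbb{1}_{E_0}\bigr) = \operatorname{Var}\bigl(\mathbb{P}(E_0 \mid \mathcal{F}_1)\bigr) + \mathbb{E}\bigl[\operatorname{Var}(\mathbb{1}_{E_0} \mid \mathcal{F}_1)\bigr] \ge \operatorname{Var}\bigl(\mathbb{P}(E_0 \mid \mathcal{F}_1)\bigr), \qquad \mathbb{E}\bigl[\mathbb{P}(E_0 \mid \mathcal{F}_1)\bigr] = \mathbb{P}(E_0) = p_0. \]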

Moreover, the right-hand side of identity (1) features another indicator of an isolation event. Hence, it becomes highly attractive to refine the estimator further by proceeding iteratively. To make this precise, we define an increasing sequence \(X_{1}^{*} \le X_{2}^{*} \le \cdots \) of points of X recursively as follows. First, \(X_{1}^{*} = X_{1}\) denotes the left-most point of X. Next, once \(X_{m}^{*}\) is available,
\[ X_{m+1}^{*} := \min\{x \in X : x \ge X_{m}^{*} + 1\} \]
denotes the first point of X to the right of \(X_{m}^{*} + 1\). Then, the event E0 occurs if and only if, for every i with \(X_{i}^{*} \le w\), the interval \((X_{i}^{*}, (X_{i}^{*} + 1) \wedge w]\) contains no points from X; see Fig. 2.

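Of particular interest is the last index
\[ I_{*} := \max\{i \ge 1 : X_{i}^{*} \le w\} \]
where \(X_{i}^{*}\) remains inside [0, w], together with the associated point
\[ X^{*} := X_{I_{*}}^{*}. \]
If X1 > w, we set I∗ = 0 and X∗ = w. Let
\[ \mathcal{F}^{*} := \sigma\bigl(X_{i}^{*} : i \ge 1\bigr) \]
be the σ-algebra generated by \(\{X_{i}^{*} : i \geq 1\}\).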

Theorem 2 (No edges – iterated)

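Suppose that w ≥ 1. Then,
\[ \mathbb{P}(E_0 \mid \mathcal{F}^{*}) = \exp\Bigl(-\lambda \sum_{i=1}^{I_{*}} \bigl(1 \wedge (w - X_{i}^{*})\bigr)\Bigr). \tag{2} \]
Since \(X_{i+1}^{*} \ge X_{i}^{*} + 1\), all summands with i < I∗ equal 1, so that for I∗ ≥ 1 the exponent equals \(-\lambda\bigl(I_{*} - 1 + 1 \wedge (w - X^{*})\bigr)\); for I∗ = 0 the sum is empty and the right-hand side equals 1.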

Proof

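To prove the claim, we first define the shifted process
\[ X^{(m)} := (X - X_{m}^{*}) \cap [1, \infty), \qquad m \ge 1, \]
and write
\[ \mathcal{F}_{m} := \sigma\bigl(X_{1}^{*}, \dots, X_{m}^{*}, X^{(m)}\bigr) \]
for the σ-algebra generated by \(X_{1}^{*}, \dots , X_{m}^{*}\) and X(m). Observe that \(\mathcal {F}_{1} \supseteq \mathcal {F}_{2} \supseteq {\cdots } \supseteq \mathcal {F}^{*}\). In particular, by the tower property of conditional expectation,
\[ \mathbb{P}(E_0 \mid \mathcal{F}^{*}) = \mathbb{E}\bigl[\mathbb{P}(E_0 \mid \mathcal{F}_{m}) \bigm| \mathcal{F}^{*}\bigr] \qquad \text{for every } m \ge 1. \]
Hence, it suffices to show that for every m ≥ 1,
\[ \mathbb{P}(E_0 \mid \mathcal{F}_{m}) = \exp\Bigl(-\lambda \sum_{i=1}^{m \wedge I_{*}} \bigl(1 \wedge (w - X_{i}^{*})\bigr)\Bigr)\, \mathbb{1}\bigl\{G\bigl(X^{(m)} \cap [1, w - X_{m}^{*}]\bigr) = \emptyset\bigr\}. \]
Indeed, since \(X_{i+1}^{*} \ge X_{i}^{*} + 1\), we have I∗ ≤ ⌊w⌋ + 1, and for m = ⌊w⌋ + 1 the indicator equals 1, because \(X^{(I_{*})} \cap [1, w - X^{*}] = \emptyset \) and the interval \([1, w - X_{m}^{*}]\) is empty for m > I∗; thus the right-hand side reduces to that of (2). To achieve this goal, we proceed by induction on m. For m = 1 we are in the setting of Theorem 1. To pass from m to m + 1, the induction hypothesis yields that
\[ \mathbb{P}(E_0 \mid \mathcal{F}_{m+1}) = \exp\Bigl(-\lambda \sum_{i=1}^{m \wedge I_{*}} \bigl(1 \wedge (w - X_{i}^{*})\bigr)\Bigr)\, \mathbb{P}\bigl(G\bigl(X^{(m)} \cap [1, w - X_{m}^{*}]\bigr) = \emptyset \bigm| \mathcal{F}_{m+1}\bigr). \tag{3} \]
Since \(X_{m + 1}^{*}\) is the first point of X after \(X_{m}^{*} + 1\), by applying Theorem 1 to the Poisson point process X(m), we obtain
\[ \mathbb{P}\bigl(G\bigl(X^{(m)} \cap [1, w - X_{m}^{*}]\bigr) = \emptyset \bigm| \mathcal{F}_{m+1}\bigr) = e^{-\lambda(((X_{m+1}^{*} + 1) \wedge w - X_{m+1}^{*}) \vee 0)}\, \mathbb{1}\bigl\{G\bigl(X^{(m+1)} \cap [1, w - X_{m+1}^{*}]\bigr) = \emptyset\bigr\}. \tag{4} \]
The exponent in (4) equals \(-\lambda\bigl(1 \wedge (w - X_{m+1}^{*})\bigr)\) if m + 1 ≤ I∗ and vanishes otherwise, so combining (3) and (4) yields the assertion. □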

The conditional MC estimator from Theorem 2 leads to Algorithm 1. Here, Exp(λ) is an exponential random variable with parameter λ that is independent of everything else.
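A minimal Python sketch of the estimator in Theorem 2, written from our reading of identity (2) rather than as a transcription of Algorithm 1: the points \(X_i^*\) are generated by adding gaps 1 + Exp(λ), and each sample returns \(\exp(-\lambda\sum_i \min(1, w - X_i^*))\). The crude comparator, the relative-error summary and all function names are illustrative assumptions.

```python
from __future__ import annotations

import math
import random
import statistics
from bisect import bisect_right


def cond_no_edges(lam: float, w: float, rng: random.Random) -> float:
    """One sample of P(E_0 | F^*) as in Theorem 2, window [0, w]."""
    x = rng.expovariate(lam)              # X_1^* = X_1, the left-most point
    exposure = 0.0                        # running sum of min(1, w - X_i^*)
    while x <= w:                         # loop over i = 1, ..., I_*
        exposure += min(1.0, w - x)       # length of (X_i^*, min(X_i^* + 1, w)]
        x += 1.0 + rng.expovariate(lam)   # X_{i+1}^*: first point after X_i^* + 1
    return math.exp(-lam * exposure)


def poisson_points(lam: float, w: float, rng: random.Random) -> list[float]:
    """Sorted points of a Poisson process with intensity lam on [0, w]."""
    pts: list[float] = []
    x = rng.expovariate(lam)
    while x <= w:
        pts.append(x)
        x += rng.expovariate(lam)
    return pts


def edge_count(pts: list[float]) -> int:
    """Number of Gilbert-graph edges (distance <= 1) among sorted points."""
    return sum(bisect_right(pts, p + 1.0) - i - 1 for i, p in enumerate(pts))


def summarize(samples: list[float]) -> tuple[float, float]:
    """Sample mean and estimated relative error of the sample mean."""
    mean = statistics.fmean(samples)
    if mean == 0.0:
        return mean, math.inf
    return mean, statistics.stdev(samples) / (mean * math.sqrt(len(samples)))


def compare_p0(lam: float, w: float, n: int, seed: int = 1):
    """(mean, relative error) of the conditional and of the crude estimator of p_0."""
    rng = random.Random(seed)
    cond = [cond_no_edges(lam, w, rng) for _ in range(n)]
    crude = [float(edge_count(poisson_points(lam, w, rng)) == 0) for _ in range(n)]
    return summarize(cond), summarize(crude)


if __name__ == "__main__":
    (pc, rc), (pm, rm) = compare_p0(lam=2.0, w=5.0, n=50_000)
    print(f"conditional MC: {pc:.3e}  (relative error {rc:.2%})")
    print(f"crude MC:       {pm:.3e}  (relative error {rm:.2%})")
```

For w < 1 the estimator can be checked against the exact value \(e^{-\lambda w}(1 + \lambda w)\) (at most one point in the window); for larger w, the conditional estimator should show a much smaller relative error than the crude one, while the printed numbers depend on the seed.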

2.1.2 At Most One Edge

Here, let k = 1; i.e., we propose an estimator for the probability p1 := p≤ 1 that the Gilbert graph on X ∩ [0, w] has at most one edge. Let
\[ I^{+} := \min\{i \ge 2 : X_{i} - X_{i-1} \le 1\} \]
be the index of the first point of X whose predecessor is at distance at most 1. Putting \(X^{+} := (X - X_{I^{+}}) \cap [1, \infty )\), Fig. 3 illustrates that, on the event \(\{X_{I^{+}} \le w\}\), the event E≤ 1 is equal to the intersection of the events \(\{X \cap (X_{I^{+}}, (X_{I^{+}} + 1) \wedge w] = \emptyset\}\) and \(\{G(X^{+}\cap [1, w - X_{I^{+}}]) = \emptyset \}\).

Moreover, we write
\[ \mathcal{F}^{+} := \sigma\bigl(X_{1}, X_{2}, \dots, X_{I^{+}}, X^{+}\bigr) \]
for the σ-algebra generated by \(X_{1}, X_{2}, \dots , X_{I^{+}}\) and X+.

Theorem 3 (At most one edge)

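Suppose that w ≥ 1. Then,
\[ \mathbb{P}(E_{\le 1} \mid \mathcal{F}^{+}) = \exp\Bigl(-\lambda\,\bigl(((X_{I^{+}} + 1) \wedge w - X_{I^{+}}) \vee 0\bigr)\Bigr)\, \mathbb{1}\bigl\{G\bigl(X^{+} \cap [1, w - X_{I^{+}}]\bigr) = \emptyset\bigr\}. \tag{5} \]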

Proof

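Since the proof is very similar to that of Theorem 1, we only point to the most important ideas. Equation 5 obviously holds for \(X_{I^{+}} > w\), since then both sides equal 1. For the case \(X_{I^{+}} \le w\), relying again on the Palm theory of the one-dimensional Poisson process, the configuration of X in \((X_{I^{+}}, X_{I^{+}} + 1]\) is, conditionally on \(X_{1}, \dots, X_{I^{+}}\), a Poisson process independent of X+, and we have
\[ \mathbb{P}(E_{\le 1} \mid \mathcal{F}^{+}) = \mathbb{P}\bigl(X \cap (X_{I^{+}}, (X_{I^{+}} + 1) \wedge w] = \emptyset \bigm| \mathcal{F}^{+}\bigr)\, \mathbb{1}\bigl\{G\bigl(X^{+} \cap [1, w - X_{I^{+}}]\bigr) = \emptyset\bigr\} = e^{-\lambda((X_{I^{+}} + 1) \wedge w - X_{I^{+}})}\, \mathbb{1}\bigl\{G\bigl(X^{+} \cap [1, w - X_{I^{+}}]\bigr) = \emptyset\bigr\}, \]

as asserted. □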

As in Section 2.1.1, Theorem 3 yields a conditional MC estimator, which we describe in Algorithm 2.
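A minimal Python sketch of the estimator suggested by identity (5), under our own reading (it is not a transcription of Algorithm 2): points are generated from the left until the first gap of length at most 1 reveals \(X_{I^+}\); the void event on \((X_{I^+}, (X_{I^+}+1) \wedge w]\) is integrated out, and the points of \(X^+\) inside the window are simulated to evaluate the indicator. Function names are hypothetical.

```python
from __future__ import annotations

import math
import random
from bisect import bisect_right


def cond_at_most_one_edge(lam: float, w: float, rng: random.Random) -> float:
    """One sample of P(E_{<=1} | F^+) as in Theorem 3, window [0, w]."""
    prev = rng.expovariate(lam)                 # X_1
    while True:
        cur = prev + rng.expovariate(lam)       # next point of X
        if cur > w:
            return 1.0                          # X_{I+} > w: no edge inside [0, w]
        if cur - prev <= 1.0:
            break                               # cur = X_{I+}, right end of the first edge
        prev = cur
    # The void event on (X_{I+}, min(X_{I+} + 1, w)] is integrated out analytically.
    weight = math.exp(-lam * min(1.0, w - cur))
    # Simulate X^+ restricted to [1, w - X_{I+}], i.e. the points of X in
    # [X_{I+} + 1, w], and check that they span no edge.
    last = None
    y = cur + 1.0 + rng.expovariate(lam)
    while y <= w:
        if last is not None and y - last <= 1.0:
            return 0.0
        last = y
        y += rng.expovariate(lam)
    return weight


def crude_at_most_one_edge(lam: float, w: float, rng: random.Random) -> float:
    """Indicator that the Gilbert graph on a fresh Poisson sample has <= 1 edge."""
    pts: list[float] = []
    x = rng.expovariate(lam)
    while x <= w:
        pts.append(x)
        x += rng.expovariate(lam)
    edges = sum(bisect_right(pts, p + 1.0) - i - 1 for i, p in enumerate(pts))
    return float(edges <= 1)


def estimate(sampler, lam: float, w: float, n: int, seed: int) -> float:
    """Average of n independent samples produced by `sampler`."""
    rng = random.Random(seed)
    return sum(sampler(lam, w, rng) for _ in range(n)) / n


if __name__ == "__main__":
    print("conditional:", estimate(cond_at_most_one_edge, 2.0, 5.0, 50_000, seed=1))
    print("crude:      ", estimate(crude_at_most_one_edge, 2.0, 5.0, 50_000, seed=2))
```

For w < 1 the exact value is \(e^{-\lambda w}(1 + \lambda w + (\lambda w)^2/2)\), since at most one edge means at most two points in the window; this gives a simple sanity check of the sketch.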

Remark 1 (Symmetric window)

The methods described above could also be applied for a Poisson point process in an interval of the form [−w/2, w/2]. Then, in addition to I∗ and I+, we would need to take into account the corresponding quantities located to the left of the origin.

Remark 2 (k ≥ 2)

The method to handle k = 0,1 could certainly be extended to larger k ≥ 2, but the configurational analysis would quickly become very involved. For instance, for k = 2 we would first need to require that the interval \([X_{I^{+} - 1}, X_{I^{+} - 1} + 1]\) contains only the point \(X_{I^{+}}\) besides \(X_{I^{+} - 1}\). Next, if \(X_{I^{+} + 1} \le X_{I^{+}} + 1\), then there may be no more edges to the right of \(X_{I^{+} + 1}\). On the other hand, the analysis will be different if \(X_{I^{+} + 1} > X_{I^{+}} + 1\), because then there can still be one edge in the graph to the right of \(X_{I^{+} + 1}\).

2.2 Simulations

In this section, we illustrate how to estimate the rare-event probabilities p0 and p1 via MC. After presenting the crude MC estimator, we illustrate how the conditional MC estimators described in Section 2.1 improve the efficiency drastically. In the simulation study, we estimate p0 and p1 for sampling windows of size w ∈{5,7.5,10} and Poisson intensity λ = 2. Both the crude MC and the conditional MC estimator are computed based on \(N = 10^{6}\) samples.

To estimate the rare-event probabilities p≤k using crude MC, we draw iid samples \(X^{(1)}, \dots , X^{(N)}\) of the Poisson point process on [0, w] and set
\[ p^{\textsf{MC}}_{\le k} := \frac{1}{N} \sum_{n=1}^{N} \mathbb{1}\bigl\{|G(X^{(n)})| \le k\bigr\} \]
for the proportion of samples leading to a Gilbert graph with at most k edges.

The estimates reported in Table 1 reveal that as the size of the sampling window grows, the rare-event probabilities decrease rapidly and that the estimators exhibit a high relative error.

Next, we estimate p≤k for k = 0,1 with the conditional MC methods described in Algorithms 1 and 2. If \(P^{(1)}, \dots , P^{(N)}\) denote the simulation outputs, then we set
\[ p^{\textsf{Cond}}_{\le k} := \frac{1}{N} \sum_{n=1}^{N} P^{(n)}. \]

The corresponding estimates shown in Table 2 highlight that the theoretical improvements over crude MC predicted from Theorems 2 and 3 also manifest themselves in the simulation study. This is particularly striking in the setting k = 0, where the variance can be reduced by several orders of magnitude.

2.3 Few Missing Edges

We now focus on computing the rare-event probabilities of having few missing edges. More precisely, we write
\[ M_w := \binom{|X \cap [0, w]|}{2} - |G(X \cap [0, w])| \]
for the number of edges of the complete graph on the vertices X ∩ [0, w] that are missing from G(X ∩ [0, w]). We write
\[ p^{\prime}_{\le k} := \mathbb{P}(M_w \le k) \]
for the probability that at most k ≥ 0 edges are missing. Surprisingly, this seemingly more complicated task is more accessible than the probability of seeing few edges considered in Section 2.1, as both \(p^{\prime }_{0} = p^{\prime }_{\leq 0}\) and \(p^{\prime }_{\leq 1}\) are amenable to closed-form expressions.

For \(p^{\prime }_{0}\), the key insight is to note that Mw = 0 if and only if X ∩ [X1 + 1, w] = ∅; see Fig. 4.

Theorem 4 (No missing edges)

Suppose that w ≥ 1. Then,
\[ p^{\prime}_{0} = \bigl(1 + \lambda(w - 1)\bigr)\, e^{-\lambda(w - 1)}. \]

Proof

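As observed above, we need to compute \(\mathbb {P}(X\cap [X_{1} + 1, w] = \emptyset )\). Hence, conditioning on X1 and invoking the void probability for a Poisson point process,
\[ \mathbb{P}\bigl(X \cap [X_1 + 1, w] = \emptyset\bigr) = \mathbb{P}(X_1 > w - 1) + \int_0^{w-1} \lambda e^{-\lambda x}\, e^{-\lambda(w - 1 - x)}\, \mathrm{d}x, \]

which equals \(e^{-\lambda(w-1)} + (w - 1)\lambda e^{-\lambda(w-1)}\), as asserted. □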

Next, we compute the probability of observing at most one missing edge.

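Theorem 5 (At most one missing edge)

Suppose that w ≥ 2. Then,
\[ p^{\prime}_{\le 1} = \bigl(1 + \lambda(w - 1)\bigr) e^{-\lambda(w-1)} + \frac{\lambda^{2}(w - 2)^{2}}{2}\, e^{-\lambda w} + \bigl(\lambda(w - 2)(1 - e^{-\lambda}) + \lambda - 1 + e^{-\lambda}\bigr) e^{-\lambda(w-1)}. \]

Proof

We decompose \(p^{\prime }_{\leq 1}\) as
\[ p^{\prime}_{\le 1} = p^{\prime}_{0} + \mathbb{P}\bigl(M_w = 1,\ X \cap [X_1 + 2, w] \ne \emptyset\bigr) + \mathbb{P}\bigl(M_w = 1,\ X \cap [X_1 + 2, w] = \emptyset\bigr), \]
where \(p^{\prime}_{0} = (1 + \lambda(w-1))e^{-\lambda(w-1)}\) by Theorem 4, and compute the second and third probability separately. This corresponds to Case 1 and Case 2 below. Note that on the event \(\{M_w = 1\} \cap \{X \cap [X_1 + 2, w] \ne \emptyset\}\), we have |X ∩ [0, w]| = 2, since more points would imply at least two missing edges.

Case 1.

(X ∩ (X1, X1 + 2) = ∅ and |X ∩ [X1 + 2, w]| = 1)

Conditioning on X1, the probability of this event becomes
\[ \int_0^{w-2} \lambda e^{-\lambda x}\, \mathbb{P}\bigl(X \cap (x, x + 2) = \emptyset,\ |X \cap [x + 2, w]| = 1\bigr)\, \mathrm{d}x. \]
Inserting the void probability for the Poisson process, we arrive at
\[ \int_0^{w-2} \lambda e^{-\lambda x}\, e^{-2\lambda}\, \lambda (w - 2 - x)\, e^{-\lambda(w - 2 - x)}\, \mathrm{d}x = \frac{\lambda^{2}(w - 2)^{2}}{2}\, e^{-\lambda w}. \]

Case 2.

It remains to treat the case X ∩ [X1 + 2, w] = ∅. First, note that conditioned on X1 = x1, the point process X ∩ (x1, w] is Poisson. Thinking of this Poisson point process to be formally extended to \(-\infty \) to the left, we write \(X_{1}^{\prime }\) for the first Poisson point to the left of w. Then, Fig. 5 illustrates that the event {Mw = 1}∩{X ∩ [X1 + 2, w] = ∅} is equivalent to \(X_{1}^{\prime } \in [X_{1} + 1, X_{1} + 2 ]\) and \(X\cap \bigl((X_{1}, X_{1}^{\prime } - 1] \cup (X_{1} + 1, X_{1}^{\prime})\bigr) = \emptyset \), because every further point must lie within distance 1 of both X1 and \(X_{1}^{\prime}\). Given X1 = x and \(X_{1}^{\prime} = y\), these two void intervals are disjoint with total length 2(y − x − 1), and \(X_{1}^{\prime}\) has density \(\lambda e^{-\lambda(w - y)}\) at y.

For Case 2, we distinguish between the cases X1 ≤ w − 2 and X1 ∈ [w − 2, w − 1].

Case 2a.

(X1 ≤ w − 2, \(X_{1}^{\prime } \in [X_{1} + 1, X_{1} + 2]\) and \(X \cap \bigl((X_{1}, X_{1}^{\prime } - 1] \cup (X_{1} + 1, X_{1}^{\prime})\bigr) = \emptyset \)) Then, we compute the desired probability as
\[ \int_0^{w-2} \lambda e^{-\lambda x} \int_{x+1}^{x+2} \lambda e^{-\lambda(w - y)}\, e^{-2\lambda(y - x - 1)}\, \mathrm{d}y\, \mathrm{d}x, \]
which equals \(\lambda(w - 2)(1 - e^{-\lambda})\, e^{-\lambda(w-1)}\).

Case 2b.

(X1 ∈ [w − 2, w − 1], \(X_{1}^{\prime } \in [X_{1} + 1, w]\) and \(X\cap \bigl((X_{1}, X_{1}^{\prime } - 1] \cup (X_{1} + 1, X_{1}^{\prime})\bigr) = \emptyset \)) Finally,
\[ \int_{w-2}^{w-1} \lambda e^{-\lambda x} \int_{x+1}^{w} \lambda e^{-\lambda(w - y)}\, e^{-2\lambda(y - x - 1)}\, \mathrm{d}y\, \mathrm{d}x, \]
which equals \((\lambda - 1 + e^{-\lambda})\, e^{-\lambda(w-1)}\). Assembling the different cases together concludes the proof. □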

3 Conditional MC in Higher Dimensions

In Section 2, we analyzed rare events related to few edges or few missing edges in a one-dimensional setting. There, closed-form expressions for conditional probabilities were derived from the natural ordering of the Poisson points. Now, we proceed to higher dimensions and also consider more general deviations from the mean number of edges. First, we again illustrate that substantial variance reductions are possible through a surprisingly simple conditional MC method. Loosely speaking, the Poisson point process consists of 1) an infinite sequence of random points in the window determining the locations of points and 2) a Poisson random variable determining the number of points in the sampling window. We use that after conditioning on the spatial locations, the rare-event probability is available in closed form. This type of Poisson conditioning is novel in a spatial rare-event estimation context, but has strong ties to approaches appearing earlier in seemingly unrelated problems. More precisely, for instance in reliability theory, related conditional MC schemes lead to spectacular variance reductions (Lomonosov and Shpungin 1999; Vaisman et al. 2015).

The rest of this section is organized as follows. First, Section 3.1 describes how to estimate the rare event probabilities related to too few and too many edges relative to their expected number through conditional MC. Then, Section 3.2 presents a simulation study illustrating that this estimator can reduce the estimation variance by several orders of magnitude.

3.1 Conditioning on a Spatial Component

We consider a full-dimensional sampling window \(W \subset \mathbb {R}^{d}\) and, for a ∈ (0, 1), rare events of the form
\[ F_{<a} := \bigl\{|G(X \cap W)| < (1 - a)\mu\bigr\}, \qquad F_{>a} := \bigl\{|G(X \cap W)| > (1 + a)\mu\bigr\}, \]
where \(\mu := \mathbb {E}[|G(X \cap W)|]\) denotes the mean (i.e., expected) number of edges, which can be estimated through simulations. Alternatively, if W is so large that edge effects can be neglected, then the Slivnyak–Mecke formula (Last and Penrose 2017, Theorem 4.4) gives the approximation \(\mu \approx \frac 12|W|\lambda ^{2} \kappa _{d}\), where κd denotes the volume of the unit ball in \(\mathbb {R}^{d}\).
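The Slivnyak–Mecke approximation is straightforward to evaluate. A short Python helper (names are ours) uses \(\kappa_d = \pi^{d/2}/\Gamma(d/2 + 1)\); for the planar windows of Section 3.2 with λ = 2 it gives the values in the comments, which ignore edge effects and therefore overestimate the true means in finite windows.

```python
import math


def unit_ball_volume(d: int) -> float:
    """kappa_d = pi^(d/2) / Gamma(d/2 + 1), the volume of the unit ball in R^d."""
    return math.pi ** (d / 2) / math.gamma(d / 2 + 1)


def mean_edges_approx(lam: float, volume: float, d: int) -> float:
    """Slivnyak-Mecke approximation mu ~ |W| lam^2 kappa_d / 2, edge effects ignored."""
    return 0.5 * volume * lam**2 * unit_ball_volume(d)


if __name__ == "__main__":
    for side in (20, 25, 30):      # planar windows of Section 3.2, lam = 2
        print(side, round(mean_edges_approx(2.0, side**2, 2), 1))
    # 20 2513.3
    # 25 3927.0
    # 30 5654.9
```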

The key idea for developing a conditional MC scheme is to use the explicit construction of a Poisson point process in a bounded sampling window. More precisely, let \(X_{\infty } = \{X_{n}\}_{n \ge 1}\) be an iid family of uniform random points in W and K be an independent Poisson random variable with parameter λ|W|. Then, {Xn}n≤K is a Poisson point process in W with intensity λ (Last and Penrose 2017, Theorem 3.6).

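In conditional MC, we use the fact that the rare-event probabilities can be computed in closed form after we condition on the locations \(X_{\infty }\). More precisely, we let
\[ K_{<a}(X_\infty) := \min\bigl\{k \ge 0 : |G(\{X_1, \dots, X_k\})| \ge (1 - a)\mu\bigr\} \]
denote the first time where the Gilbert graph on the nodes \(\{X_{1}, \dots , X_{k}\}\) contains at least (1 − a)μ edges and refer to Fig. 6 for an illustration.

Similarly, let
\[ K_{>a}(X_\infty) := \max\bigl\{k \ge 0 : |G(\{X_1, \dots, X_k\})| \le (1 + a)\mu\bigr\} \]
denote the largest k for which the Gilbert graph on the nodes \(\{X_{1}, \dots , X_{k}\}\) contains at most (1 + a)μ edges. Since the edge count is nondecreasing in k, we have \(F_{<a} = \{K < K_{<a}(X_\infty)\}\) and \(F_{>a} = \{K > K_{>a}(X_\infty)\}\). Then, writing \(q_{<a} := \mathbb{P}(F_{<a})\) and \(q_{>a} := \mathbb{P}(F_{>a})\),
\[ q_{<a} = \mathbb{E}\bigl[F_{\textsf{Poi}}\bigl(K_{<a}(X_\infty) - 1\bigr)\bigr], \qquad q_{>a} = \mathbb{E}\bigl[1 - F_{\textsf{Poi}}\bigl(K_{>a}(X_\infty)\bigr)\bigr], \tag{6} \]
where \({F_{\textsf {Poi}}:\mathbb {Z}_{\ge 0} \to [0, 1]}\) denotes the cumulative distribution function of a Poisson random variable with parameter λ|W|.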
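A small-scale Python sketch of the conditional MC estimator based on representation (6) in a square window \([0, \text{side}]^2\), compared with crude MC. Assumptions (ours): μ is computed exactly from the distance distribution of two uniform points in a square, \(\mathbb{P}(|U - V| \le 1) = \pi s^2 - 8s^3/3 + s^4/2\) with s = 1/side (valid for side ≥ 1), instead of being estimated by simulation; the O(k²) edge updates are only adequate for small windows such as side = 3; all function names are hypothetical.

```python
from __future__ import annotations

import math
import random


def mean_edges_square(side: float, lam: float) -> float:
    """Exact mean edge count in [0, side]^2 for threshold 1 (requires side >= 1)."""
    s = 1.0 / side
    p_close = math.pi * s**2 - 8.0 * s**3 / 3.0 + 0.5 * s**4  # P(|U - V| <= 1)
    return 0.5 * (lam * side * side) ** 2 * p_close


def poisson_cdf(k: int, mean: float) -> float:
    """F_Poi(k) with the pmf evaluated in log space; 0 for k < 0."""
    if k < 0:
        return 0.0
    log_m = math.log(mean)
    terms = (math.exp(j * log_m - mean - math.lgamma(j + 1)) for j in range(k + 1))
    return min(1.0, math.fsum(terms))


def thresholds(side: float, mu: float, a: float, rng: random.Random) -> tuple[int, int]:
    """(K_{<a}, K_{>a}) for one iid uniform sequence X_1, X_2, ... in the window."""
    lo, hi = (1.0 - a) * mu, (1.0 + a) * mu
    pts: list[tuple[float, float]] = []
    edges, k_low = 0, -1
    while True:
        x = (rng.uniform(0.0, side), rng.uniform(0.0, side))
        edges += sum(1 for p in pts if math.dist(p, x) <= 1.0)  # edges added by X_k
        pts.append(x)
        if k_low < 0 and edges >= lo:
            k_low = len(pts)              # first k with >= (1 - a) mu edges
        if edges > hi:
            return k_low, len(pts) - 1    # last k with <= (1 + a) mu edges


def cond_mc(side: float, lam: float, a: float, n: int, seed: int) -> tuple[float, float]:
    """Conditional MC estimates of (q_{<a}, q_{>a}) via representation (6)."""
    rng = random.Random(seed)
    mu, mean = mean_edges_square(side, lam), lam * side * side
    low = high = 0.0
    for _ in range(n):
        k_low, k_high = thresholds(side, mu, a, rng)
        low += poisson_cdf(k_low - 1, mean)
        high += 1.0 - poisson_cdf(k_high, mean)
    return low / n, high / n


def poisson_variate(mean: float, rng: random.Random) -> int:
    """Number of arrivals of a unit-rate Poisson process in [0, mean)."""
    count, t = 0, rng.expovariate(1.0)
    while t < mean:
        count += 1
        t += rng.expovariate(1.0)
    return count


def crude_mc(side: float, lam: float, a: float, n: int, seed: int) -> tuple[float, float]:
    """Crude MC estimates of (q_{<a}, q_{>a}) for comparison."""
    rng = random.Random(seed)
    mu = mean_edges_square(side, lam)
    low = high = 0
    for _ in range(n):
        k = poisson_variate(lam * side * side, rng)
        pts = [(rng.uniform(0.0, side), rng.uniform(0.0, side)) for _ in range(k)]
        e = sum(1 for i in range(k) for j in range(i) if math.dist(pts[i], pts[j]) <= 1.0)
        low += e < (1.0 - a) * mu
        high += e > (1.0 + a) * mu
    return low / n, high / n


if __name__ == "__main__":
    print("conditional:", cond_mc(3.0, 2.0, 0.2, 2_000, seed=1))
    print("crude:      ", crude_mc(3.0, 2.0, 0.2, 2_000, seed=2))
```

In such a small window a 20% deviation is not rare, which keeps the comparison cheap; the variance gains reported in Table 4 concern much larger windows.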

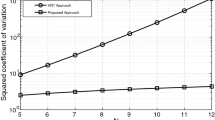

3.2 Numerical Results

We sample planar homogeneous Poisson point processes \(X^{\textsf {S}} = \{X^{\textsf {S}}_{i}\}_{i \ge 1}\), \(X^{\textsf {M}} = \{X^{\textsf {M}}_{i}\}_{i \ge 1}\) and \(X^{\textsf {L}}= \{X^{\textsf {L}}_{i}\}_{i \ge 1}\) with intensity λ = 2 in windows of size 20 × 20, 25 × 25, and 30 × 30, respectively. Here, S,M, and L stand for small, medium, and large, respectively.

In Section 1, we mentioned that a major challenge in devising efficient estimators for rare-event probabilities related to the edge count comes from a qualitatively different tail behavior: light on the left, heavy on the right. In other words, we expect that in the left tail, we see changes throughout the sampling window, whereas in the right tail, a single atypical configuration in a small part of the window is sufficient to induce the rare event. We recall that the left tail of a random variable Z refers to the probabilities \(\mathbb {P}(Z \le r)\) for small r and the right tail refers to the probabilities \(\mathbb {P}(Z \ge r)\) for large r.

Although the theoretical difference between the left and the right tail is subtle on a bounded sampling window, we illustrate in Table 3 that it does become visible when considering the quantiles Qα and Q1−α for the number of edges if α is small. Here, the empirical quantiles for \(10^{6}\) samples of the edge counts in a (20 × 20)-window are shown. For instance, the 1%-quantile is 16.4% lower than the mean, which is similar to the 18% by which the 99%-quantile exceeds the mean. However, the 0.01%-quantile is 25.2% lower than the mean, whereas the 99.99%-quantile exceeds the mean by 30.0%. These figures are an early indication of the difference in the tails that will reappear far more pronouncedly in the numerical results concerning the estimation of the rare-event probabilities \(\mathbb {P}(F_{<0.2})\) and \(\mathbb {P}(F_{>0.2})\).

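In the rest of the section, we estimate the rare-event probabilities
\[ q_{<0.2} = \mathbb{P}(F_{<0.2}) \qquad\text{and}\qquad q_{>0.2} = \mathbb{P}(F_{>0.2}) \]
corresponding to 20% deviations from the mean. Here, we draw \(N = 10^{5}\) iid samples \(X_\infty(1), \dots, X_\infty(N)\) of \(X_{\infty }\). Then, taking into account the representation (6), we set
\[ p^{\textsf{Cond}}_{<0.2} := \frac{1}{N} \sum_{n=1}^{N} F_{\textsf{Poi}}\bigl(K_{<0.2}(X_\infty(n)) - 1\bigr), \qquad p^{\textsf{Cond}}_{>0.2} := \frac{1}{N} \sum_{n=1}^{N} \Bigl(1 - F_{\textsf{Poi}}\bigl(K_{>0.2}(X_\infty(n))\bigr)\Bigr). \]

For comparison, the variance of a single crude MC sample equals q< 0.2(1 − q< 0.2) in the left tail and q> 0.2(1 − q> 0.2) in the right tail.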

We report the estimates \(p^{\textsf {Cond}}_{< 0.2}\) and \(p^{\textsf {Cond}}_{> 0.2}\) in Table 4. First, we see that the exceedance probabilities \(p^{\textsf {Cond}}_{> 0.2}\) are always smaller than the corresponding undershoot probabilities \(p^{\textsf {Cond}}_{< 0.2}\). This supports the preliminary impression of the difference in the tail behavior hinted at in Table 3. Moreover, in all examples conditional MC reduces the estimation variance massively and the efficiency gains become more pronounced the rarer the event.

4 Importance Sampling

The conditional MC estimators constructed in Section 3 take into account that an atypically large number of Poisson points leads to a Gilbert graph exhibiting considerably more edges than expected. However, not only the number but also the location of the points plays a pivotal role. For instance, if the points tend to repel each other, then we would typically observe fewer edges. Similarly, clustered point patterns should induce more edges.

In order to implement these insights, we resort to the technique of importance sampling (Kroese et al. 2013, Chapter 9.7). That is, we draw samples from a point process with distribution \(\mathbb {Q}\) for which the rare event becomes typical and then correct the estimation bias by weighting with likelihood ratios. In Sections 4.1 and 4.2 below, we explain how to implement these steps through configuration-dependent birth-death processes reminiscent of the Strauss process from spatial statistics (Møller and Waagepetersen 2004). Finally, in Section 4.3, we illustrate in a simulation study how importance sampling of the spatial locations reduces the estimation variance further.

4.1 Lower Tails

The key observation is that under the rare event of seeing exceptionally few edges, we expect a repulsion between points. More precisely, the most likely reason for the rare event are changes to the configuration of the underlying Poisson point process throughout the entire sampling window. The large-deviation analysis of Seppäläinen and Yukich (2001) suggests performing importance sampling where, instead of considering the distribution \(\mathbb {P}\) of the a priori Poisson point process, we draw samples according to a different stationary point process with distribution \(\mathbb {Q}\) such that under \(\mathbb {Q}\), the original rare event becomes typical and whose deviation from \(\mathbb {P}\), as measured through the Kullback–Leibler divergence \(h(\mathbb {Q} | \mathbb {P})\), is minimized. We implement this repulsion by a dependent thinning inspired by the Strauss process.

Here, we start from a realization of the Gilbert graph on n0 = ⌊λ|W|⌋ iid points \(\{X_{1}, \dots , X_{n_{0}}\}\), and then, we thin out points successively. An independent thinning of points would give rise to uniformly distributed locations without interactions. In the importance sampling, we thin instead via a configuration-dependent birth-death process; see e.g., Chapter 9.7 of Kroese et al. (2013).

To describe the death mechanism more precisely, we draw inspiration from the Strauss process and choose the probability pi to remove point Xi proportional to \(\gamma ^{\deg (X_{i})}\), where \(\deg (X_{i})\) denotes the degree of Xi in the Gilbert graph and γ > 1 is a parameter of the algorithm. Algorithm 3 shows the pseudo-code leading to the importance sampling estimator \(q^{\textsf {IS}}_{<a}\) for q<a; in the algorithm we write |X| for the number of points of X. To understand the principle behind Algorithm 3, we briefly expound on the general approach in importance sampling, and refer the reader to Chapter 9.7 of Kroese et al. (2013) for an in-depth discussion.

As mentioned above, when thinning out points independently until the number of edges in the Gilbert graph falls below μ(1 − a), we would arrive at the random variable \(K_{<a}(X_{\infty })\) from Section 3. However, thinning out according to a configuration-dependent probability distorts its distribution towards a probability measure \(\mathbb {Q}\) that is in general different from the true distribution \(\mathbb {P}\). Still, by construction, \(\mathbb {Q}\) is absolutely continuous with respect to \(\mathbb {P}\), and we let \(\rho := \mathrm {d} \mathbb {P} / \mathrm {d} \mathbb {Q}\) be the likelihood ratio. Then, from a conceptual point of view, Algorithm 3 first draws N ≥ 1 iid samples \(X_{\infty }(1), \dots , X_{\infty }(N)\) from the distorted distribution \(\mathbb {Q}\) with associated likelihood ratios \(\rho _{1}, \dots , \rho _{N}\), and then computes
\[ q^{\textsf{IS}}_{<a} := \frac{1}{N} \sum_{n=1}^{N} \rho_{n}\, F_{\textsf{Poi}}\bigl(K_{<a}(X_\infty(n)) - 1\bigr), \]

where we recall that FPoi denotes the cumulative distribution function of a Poisson random variable with parameter λ|W|.
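Algorithm 3 itself is not reproduced in this text, so the following Python block is only a hypothetical sketch of one way to realize degree-biased thinning with a correct likelihood ratio. Under \(\mathbb{P}\), the removal order of the \(n_0 = \lfloor \lambda |W| \rfloor\) iid uniform points is uniformly random, so each step removes a given point with probability 1/|X|; under \(\mathbb{Q}\), point \(X_i\) is removed with probability \(\gamma^{\deg(X_i)} / \sum_j \gamma^{\deg(X_j)}\), and ρ is the product of the stepwise ratios. If the initial points already span fewer than (1 − a)μ edges, the sketch appends iid uniform points drawn from \(\mathbb{P}\) itself (ratio 1); this, the exact formula for μ in a square window and all function names are our assumptions.

```python
from __future__ import annotations

import math
import random


def mean_edges_square(side: float, lam: float) -> float:
    """Exact mean edge count in [0, side]^2 for threshold 1 (requires side >= 1)."""
    s = 1.0 / side
    p_close = math.pi * s**2 - 8.0 * s**3 / 3.0 + 0.5 * s**4
    return 0.5 * (lam * side * side) ** 2 * p_close


def poisson_cdf(k: int, mean: float) -> float:
    """F_Poi(k) with the pmf evaluated in log space; 0 for k < 0."""
    if k < 0:
        return 0.0
    log_m = math.log(mean)
    terms = (math.exp(j * log_m - mean - math.lgamma(j + 1)) for j in range(k + 1))
    return min(1.0, math.fsum(terms))


def thinning_sample(side: float, lam: float, mu: float, a: float, gamma: float,
                    rng: random.Random) -> tuple[int, float]:
    """One draw of (K_{<a}, likelihood ratio) via degree-biased thinning."""
    lo = (1.0 - a) * mu
    n0 = math.floor(lam * side * side)
    pts = [(rng.uniform(0.0, side), rng.uniform(0.0, side)) for _ in range(n0)]
    deg = [sum(1 for j in range(n0) if j != i and math.dist(pts[i], pts[j]) <= 1.0)
           for i in range(n0)]
    edges = sum(deg) // 2
    if edges < lo:
        # K_{<a} > n0: append X_{n0+1}, X_{n0+2}, ... drawn from P (ratio 1).
        while edges < lo:
            x = (rng.uniform(0.0, side), rng.uniform(0.0, side))
            edges += sum(1 for p in pts if math.dist(p, x) <= 1.0)
            pts.append(x)
        return len(pts), 1.0
    log_lr = 0.0
    while edges >= lo:
        weights = [gamma ** d for d in deg]
        i = rng.choices(range(len(pts)), weights=weights)[0]
        # P removes each point w.p. 1/|X|; Q removes point i w.p. weights[i]/sum(weights).
        log_lr += math.log(sum(weights) / (len(pts) * weights[i]))
        xi = pts.pop(i)
        edges -= deg.pop(i)               # edges between X_i and the remaining points
        for j, p in enumerate(pts):
            if math.dist(p, xi) <= 1.0:
                deg[j] -= 1
    # The remaining points span < (1 - a) mu edges; one more point reaches the threshold.
    return len(pts) + 1, math.exp(log_lr)


def is_estimate(side: float, lam: float, a: float, gamma: float, n: int, seed: int) -> float:
    """Importance sampling estimate of q_{<a}; gamma = 1 gives conditional MC."""
    rng = random.Random(seed)
    mu, mean = mean_edges_square(side, lam), lam * side * side
    total = 0.0
    for _ in range(n):
        k_low, lr = thinning_sample(side, lam, mu, a, gamma, rng)
        total += lr * poisson_cdf(k_low - 1, mean)
    return total / n


if __name__ == "__main__":
    print(is_estimate(3.0, 2.0, 0.2, 1.0, 1_000, seed=1))
    print(is_estimate(3.0, 2.0, 0.2, 1.05, 1_000, seed=2))
```

With γ = 1 every stepwise ratio equals 1 and the sketch reduces to the conditional MC estimator of Section 3, which is a convenient consistency check.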

We now explain why the \(q^{\textsf {IS}}_{<a}\) returned by the algorithm is a good approximation for the probability q<a. The general philosophy is that when performing importance sampling with a proposal distribution that is close to the conditional distribution under the rare event, we can be optimistic that importance sampling decreases the estimation variance. For instance, in Kroese et al. (2013, Section 13.2), it is shown that an unbiased zero-variance estimator could be obtained if the proposal distribution coincided with the conditional distribution under the rare event. In our setting, this means that we can expect \(q^{\textsf {IS}}_{<a}\) to be a good approximation for the probability q<a if the proposal density in the importance sampling is close to the conditional distribution under the event F<a.
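The zero-variance statement is a one-line computation. For a nonnegative integrand h with \(\mathbb{E}_{\mathbb{P}}[h] > 0\), for instance \(h = \mathbb{1}_{F_{<a}}\) or, after conditioning, \(h = F_{\textsf{Poi}}(K_{<a}(X_\infty) - 1)\), choosing the proposal proportional to h gives

\[ \frac{\mathrm{d}\mathbb{Q}}{\mathrm{d}\mathbb{P}} = \frac{h}{\mathbb{E}_{\mathbb{P}}[h]} \quad\Longrightarrow\quad \rho\, h = \frac{\mathrm{d}\mathbb{P}}{\mathrm{d}\mathbb{Q}}\, h = \mathbb{E}_{\mathbb{P}}[h] \quad \mathbb{Q}\text{-a.s.}, \]

so every importance sample returns the target value exactly. For \(h = \mathbb{1}_{F_{<a}}\), this proposal is the conditional distribution \(\mathbb{P}(\cdot \mid F_{<a})\).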

To achieve this goal, intuitively, we would like to choose the thinning parameter γ > 1 such that the expected number of edges under the thinning matches the number of edges in the rare event F<a, namely (1 − a)μ. To develop a heuristic for this choice, we consider a Strauss process, where we restrict to the two-dimensional setting to allow for an accessible presentation. To make the article self-contained, we briefly recall the definition of a Strauss process from Møller and Waagepetersen (2004). The Strauss process in the observation window W is a Gibbs process whose density f(φ) with respect to a unit-intensity Poisson point process on W is given by
\[ f(\varphi) = \alpha\, \beta^{|\varphi|}\, \gamma^{s_R(\varphi)}, \]

with parameters R > 0 (interaction radius), β > 0 (intensity parameter) and γ ∈ [0,1) (interaction strength), where α > 0 is a normalizing constant and \(s_{R}(\varphi ) = \#\{\{x,y\} \subseteq \varphi \colon 0 < |x - y| \le R\}\) denotes the number of unordered point pairs in φ within a distance at most R.

Hence, matching the number of edges in the rare event to the expected number of edges leads to the equation
\[ (1 - a)\mu = \frac{|W|}{2}\, \bigl(\lambda^{\textsf{Str}}\bigr)^{2} \int_0^1 2\pi r\, \rho^{\textsf{Str}}(r)\, \mathrm{d}r, \tag{7} \]

where λStr > 0 and \(\rho ^{\textsf {Str}}: [0,\infty ) \to [0,\infty )\) denote the intensity and pair-correlation function of the Strauss process, respectively, and edge effects are neglected (Møller and Waagepetersen 2004). In contrast to the Poisson setting, neither λStr nor ρStr is available in closed form. Still, both quantities admit accurate saddle-point approximations λPS > 0 and \(\rho ^{\textsf {PS}}: [0, \infty ) \to [0,\infty )\) that are ready to implement in the Strauss case (Baddeley and Nair 2012).

More precisely, λPS is the unique positive solution of the equation

where G = (1 − β)π and \(\beta = \log (\gamma )\), see Baddeley and Nair (2012). Then, recalling that we work in a planar setting, we put

where

denotes the intersection area of two unit disks at distance r. Inserting these approximations into (7) and solving the resulting implicit equation yields \(\beta \approx \log (1.018)\) and a value of γ ≈ 1.018.
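The intersection area of two unit disks at distance r is given by the standard lens formula \(2\arccos(r/2) - \tfrac{r}{2}\sqrt{4 - r^2}\) for 0 ≤ r ≤ 2, and 0 otherwise. The Python helper below is ours (the symbol used for this area in the paper's formula was lost in extraction); a useful sanity check is that the planar integral of the lens area equals \(\pi^2\), the squared area of the unit disk.

```python
import math


def lens_area(r: float) -> float:
    """Intersection area of two unit disks whose centres are at distance r >= 0."""
    if r >= 2.0:
        return 0.0
    return 2.0 * math.acos(r / 2.0) - 0.5 * r * math.sqrt(4.0 - r * r)


def radial_integral(f, upper: float, steps: int = 20_000) -> float:
    """Midpoint rule for the planar integral of f(|z|) over the disk |z| <= upper."""
    h = upper / steps
    return sum(2.0 * math.pi * (k + 0.5) * h * f((k + 0.5) * h) * h for k in range(steps))


if __name__ == "__main__":
    print(lens_area(0.0), lens_area(1.0), lens_area(2.0))
    print(radial_integral(lens_area, 2.0), math.pi ** 2)
```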

4.2 Upper Tails

Similar to the lower tails, we can strengthen the estimator by combining conditional MC with importance sampling on the spatial locations. For the upper tails, it is natural to devise an importance sampling scheme favoring clustering rather than repulsion. From the point of view of large deviations, this phenomenon was considered in Chatterjee and Harel (2020). There, it is shown that all excess edges come from a ball of size 1 containing a highly dense configuration of Poisson points. Importance sampling based on clustering is therefore particularly powerful in settings where the rare event is the epitome of a condensation phenomenon, that is, where a peculiar behavior of the point process in a small part of the window is the most likely explanation of the rare event. As laid out in Chatterjee and Harel (2020), at least in the asymptotic regime of large deviations, the rare event of observing too many edges is governed by precisely this condensation phenomenon.

We propose to take the clustering into account via a birth mechanism favoring the generation of points in areas that would lead to a large number of additional edges. To ease implementation, the density of the birth mechanism is discretized and remains constant in bins of a suitably chosen grid. The density in a bin at position x ∈ W is proportional to \(\gamma^{n(x)}\), where γ > 1 is a parameter governing the strength of the clustering and n(x) denotes the number of Poisson points in a suitable neighborhood around x, such as the bin containing x together with all adjacent bins.

Similar to the lower-tail case, a subtle point in this approach pertains to choosing the parameter γ > 1. Unfortunately, an attractive Strauss process is ill-defined in the entire Euclidean space, so that the saddle-point approximation from Section 4.1 does not apply. In Section 4.3 below, we therefore rely on a pilot run suggesting γ = 1.01 as a good choice for further variance reduction. Although the number of simulations in this pilot run is small and the variance estimates are still volatile, we found that it provides a good indication for simulations on a larger scale.
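A hypothetical Python sketch of the binned birth mechanism, assuming bins of side h = 1 in a square window whose side is a multiple of h, neighborhood counts n(x) over the bin of x and its adjacent bins, and births starting from an empty configuration; none of these choices is confirmed by the text, and μ is again computed exactly for a square window. A bin b is chosen with probability proportional to \(\gamma^{n(b)}\) and the new point is uniform inside it, so each birth contributes the likelihood ratio \(\sum_{b'} \gamma^{n(b')} / (B\,\gamma^{n(b)})\), with B the number of bins. The estimator is ρ times \(1 - F_{\textsf{Poi}}(K_{>a})\).

```python
from __future__ import annotations

import math
import random


def mean_edges_square(side: float, lam: float) -> float:
    """Exact mean edge count in [0, side]^2 for threshold 1 (requires side >= 1)."""
    s = 1.0 / side
    p_close = math.pi * s**2 - 8.0 * s**3 / 3.0 + 0.5 * s**4
    return 0.5 * (lam * side * side) ** 2 * p_close


def poisson_cdf(k: int, mean: float) -> float:
    """F_Poi(k) with the pmf evaluated in log space; 0 for k < 0."""
    if k < 0:
        return 0.0
    log_m = math.log(mean)
    terms = (math.exp(j * log_m - mean - math.lgamma(j + 1)) for j in range(k + 1))
    return min(1.0, math.fsum(terms))


def birth_sample(side: float, lam: float, mu: float, a: float, gamma: float,
                 rng: random.Random, h: float = 1.0) -> float:
    """One importance sample of rho * (1 - F_Poi(K_{>a})) with clustered births."""
    m = round(side / h)                      # bins per axis (side assumed a multiple of h)
    counts = [[0] * m for _ in range(m)]     # number of points per bin
    hi = (1.0 + a) * mu
    pts: list[tuple[float, float]] = []
    edges, log_lr = 0, 0.0
    while True:
        weights = []
        for bx in range(m):
            for by in range(m):
                nb = sum(counts[i][j]        # n(b): points in b and adjacent bins
                         for i in range(max(bx - 1, 0), min(bx + 2, m))
                         for j in range(max(by - 1, 0), min(by + 2, m)))
                weights.append(gamma ** nb)
        b = rng.choices(range(m * m), weights=weights)[0]
        bx, by = divmod(b, m)
        x = ((bx + rng.random()) * h, (by + rng.random()) * h)
        # uniform density 1/side^2 divided by the binned proposal density
        log_lr += math.log(sum(weights) / (m * m * weights[b]))
        edges += sum(1 for p in pts if math.dist(p, x) <= 1.0)
        pts.append(x)
        counts[bx][by] += 1
        if edges > hi:                       # K_{>a} = len(pts) - 1
            tail = 1.0 - poisson_cdf(len(pts) - 1, lam * side * side)
            return math.exp(log_lr) * tail


def birth_estimate(side: float, lam: float, a: float, gamma: float, n: int,
                   seed: int) -> float:
    """Importance sampling estimate of q_{>a}; gamma = 1 gives conditional MC."""
    rng = random.Random(seed)
    mu = mean_edges_square(side, lam)
    return sum(birth_sample(side, lam, mu, a, gamma, rng) for _ in range(n)) / n


if __name__ == "__main__":
    print(birth_estimate(3.0, 2.0, 0.2, 1.0, 1_000, seed=1))
    print(birth_estimate(3.0, 2.0, 0.2, 1.01, 1_000, seed=2))
```

With γ = 1 all bins have equal weight, every ratio equals 1, and the sketch reduces to the conditional MC estimator for q>a from Section 3.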

Section 3.2 revealed that even with a sample size of \(N = 10^{5}\), the conditional MC estimators still exhibit a considerable relative error. We now illustrate that importance sampling can be an appealing option for reducing this error.
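The relative error referred to here can be quantified with a standard formula. For an estimator \(\hat q_N\) that averages N independent replicates, each with variance σ², of an unbiased estimator of q,

\[
\mathrm{RE}(\hat q_N) = \frac{\sqrt{\operatorname{Var}(\hat q_N)}}{q} = \frac{\sigma}{q\sqrt{N}}, \qquad \text{and for crude MC } \sigma^{2} = q(1-q), \text{ so } \mathrm{RE} = \sqrt{\frac{1-q}{Nq}}.
\]

For a hypothetical probability \(q = 10^{-6}\) and \(N = 10^{5}\), this gives \(\mathrm{RE} = \sqrt{0.999999/0.1} \approx 3.16\). Because the relative error decays only like \(N^{-1/2}\), reducing σ² by a factor r lowers it by a factor \(\sqrt{r}\), whereas achieving the same gain by brute force would require r times as many samples.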

Table 5 reports the estimates \(q^{\textsf {IS}}_{<0.2}\) and \(q^{\textsf {IS}}_{>0.2}\) for different sizes of the sampling window, as in Section 3.2. In the left tail, we observe massive variance reductions compared with the crude MC estimator. In the right tail, the gains are substantial but somewhat less pronounced. This matches the intuition that the rare events in the right tail are rooted in a condensation phenomenon, which runs counter to the heuristic of changing the point process homogeneously throughout the window.

References

Asmussen S, Kroese DP (2006) Improved algorithms for rare event simulation with heavy tails. Adv Appl Probab 38(2):545–558

Baccelli F, Błaszczyszyn B (2009) Stochastic geometry and wireless networks. Now Publishers Inc

Baddeley A, Nair G (2012) Approximating the moments of a spatial point process. Stat 1(1):18–30

Bhamidi S, Hannig J, Lee CY, Nolen J (2015) The importance sampling technique for understanding rare events in Erdős-Rényi random graphs. Electron J Probab 20(107):30

Billingsley P (1995) Probability and measure, 3rd edn. Wiley, New York

Blanchet J, Glynn P (2008) Efficient rare-event simulation for the maximum of heavy-tailed random walks. Ann Appl Probab 18(4):1351–1378

Chatterjee S, Harel M (2020) Localization in random geometric graphs with too many edges. Ann Probab 48(2):574–621

Keeler HP, Jahnel B, Maye O, Aschenbach D, Brzozowski M (2018) Disruptive events in high-density cellular networks. 16th International Symposium on Modeling and Optimization in Mobile, Ad Hoc and Wireless Networks (WiOpt)

Kroese DP, Taimre T, Botev ZI (2013) Handbook of Monte Carlo methods. Wiley, New York

Last G, Penrose MD (2017) Lectures on the Poisson process. Cambridge University Press, Cambridge

Lomonosov M, Shpungin Y (1999) Combinatorics of reliability Monte Carlo. Random Struct Algorithms 14(4):329–343

Møller J, Waagepetersen RP (2004) Statistical inference and simulation for spatial point processes. CRC, Boca Raton

Rojas-Nandayapa L (2013) A review of conditional rare event simulation for tail probabilities of heavy tailed random variables. Bol Soc Mat Mex (3) 19(2):159–182

Seppäläinen T, Yukich JE (2001) Large deviation principles for Euclidean functionals and other nearly additive processes. Probab Theory Rel Fields 120(3):309–345

Stenzel O, Hirsch C, Brereton T, Baumeier T, Andrienko D, Kroese DP, Schmidt V (2014) A general framework for consistent estimation of charge transport properties via random walks in random environments. Multiscale Model Simul 12:1108–1134

Vaisman R, Kroese DP, Gertsbakh IB (2015) Improved sampling plans for combinatorial invariants of coherent systems. IEEE Trans Rel 65(1):410–424

Acknowledgments

The authors thank the anonymous referee for reading through the entire manuscript thoroughly and for providing us with high-quality and constructive feedback. Major parts of this research were carried out while Christian Hirsch was on a research visit at the University of Queensland and while Sarat B. Moka was on a research visit at Ulm University. We thank both hosts for their hospitality and the Australian Research Council Centre of Excellence for Mathematical and Statistical Frontiers (ACEMS) for the support under grant number CE140100049.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hirsch, C., Moka, S.B., Taimre, T. et al. Rare Events in Random Geometric Graphs. Methodol Comput Appl Probab 24, 1367–1383 (2022). https://doi.org/10.1007/s11009-021-09857-7
