Abstract

We express the topological expansion of the Jacobi Unitary Ensemble in terms of triple monotone Hurwitz numbers. This completes the combinatorial interpretation of the topological expansion of the classical unitary invariant matrix ensembles. We also provide effective formulæ for generating functions of multipoint correlators of the Jacobi Unitary Ensemble in terms of Wilson polynomials, generalizing the known relations between one point correlators and Wilson polynomials.


1 Introduction and results

Throughout this paper we denote by \({\mathcal {H}}_N(I)\) the set of hermitian matrices of size \(N=1,2,\dots \) with eigenvalues in the interval \(I\subseteq {\mathbb {R}}\). In particular, \({\mathcal {H}}_N(I)\) can be endowed with the Lebesgue measure

The Jacobi Unitary Ensemble (JUE) is defined by the following measure on \({\mathcal {H}}_N(0,1)\)

with parameters \(\alpha ,\beta \) satisfying \(\mathrm {Re}\,\alpha ,\mathrm {Re}\,\beta >-1\). The normalizing constant

ensures that \(\mathrm dm_N^{\mathsf{J}}\) has total mass 1; the above integral can be computed by a standard formula [19] in terms of the norming constants \(h_\ell ^{\mathsf{J}}\) of the monic Jacobi polynomials, see (4.2).

If \(\alpha \) and \(\beta \) are integers, so that \(M_\alpha =\alpha +N\) and \(M_\beta =\beta +N\) are integers, the probability measure (1.1) describes the distribution of the matrix \(X=(W_A+W_B)^{-1/2}W_A(W_A+W_B)^{-1/2}\in {\mathcal {H}}_N(0,1)\) where \(W_A=A^\dagger A\) and \(W_B=B^\dagger B\) are the Wishart matrices associated with the random matrices A, B of size \(M_\alpha \times N,M_\beta \times N\) respectively, with i.i.d. Gaussian entries [30].
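As a sanity check of this construction, the following sketch samples the scalar case \(N=1\), where \(X=W_A/(W_A+W_B)\) and the Jacobi weight \(x^\alpha (1-x)^\beta \) reduces to a Beta\((\alpha +1,\beta +1)\) density. The function names, the choice of complex Gaussian entries, and the Monte Carlo parameters are illustrative assumptions, not part of the paper.

```python
import random

def sample_jue_n1(alpha, beta, rng):
    """Sample X = W_A / (W_A + W_B) in the scalar case N = 1.

    A and B are modelled as complex Gaussian column vectors of lengths
    M_alpha = alpha + 1 and M_beta = beta + 1 (alpha, beta integers >= 0).
    """
    def wishart_1x1(m):
        # A^dagger A is the sum of |a_i|^2 over the m complex entries;
        # the overall variance cancels in the ratio below.
        return sum(rng.gauss(0, 1) ** 2 + rng.gauss(0, 1) ** 2 for _ in range(m))

    w_a = wishart_1x1(alpha + 1)
    w_b = wishart_1x1(beta + 1)
    return w_a / (w_a + w_b)

def empirical_mean(alpha, beta, samples=20000, seed=0):
    """Monte Carlo estimate of <tr X> for N = 1 with a fixed seed."""
    rng = random.Random(seed)
    return sum(sample_jue_n1(alpha, beta, rng) for _ in range(samples)) / samples

# For N = 1 the exact first moment is the Beta mean (alpha+1)/(alpha+beta+2).
alpha, beta = 2, 3
print(empirical_mean(alpha, beta), (alpha + 1) / (alpha + beta + 2))
```

The comparison is only statistical: the estimate should approach the exact value as the number of samples grows.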

Given non-negative integers \(k_1,\dots ,k_\ell \ge 0\) we shall consider the expectation values

which we term (respectively, positive and negative) JUE correlators.

Remark 1.1

Although (1.2) is defined only for \(\mathrm {Re}\,\alpha \pm \sum _{i=1}^\ell k_i>-1,\mathrm {Re}\,\beta >-1\), it will be clear from the formulæ of Corollary 1.6 below that the JUE correlators extend to rational functions of \(N,\alpha ,\beta \).

1.1 JUE correlators and Hurwitz numbers

Our first result gives a combinatorial interpretation for the large N topological expansion [25, 26, 28] of JUE correlators (1.2). This provides an analogue of the classical result of Bessis, Itzykson and Zuber [14] expressing correlators of the Gaussian Unitary Ensemble as a generating function counting ribbon graphs weighted according to their genus, see also [25]. At the same time, it is closer in spirit to (and actually a generalization of, see Remark 1.10) the analogous result for the Laguerre Unitary Ensemble, whose correlators are expressed in terms of double monotone Hurwitz numbers [17], and (for a specific value of the parameter) in terms of Hodge integrals [20, 22, 31]; in particular in [31] we provide an ELSV-type formula [24] for weighted double monotone Hurwitz numbers in terms of Hodge integrals.

Our description of the JUE correlators involves triple monotone Hurwitz numbers, which we now define; to this end let us recall that a partition is a sequence \(\lambda =(\lambda _1,\dots ,\lambda _{\ell })\) of integers \(\lambda _1\ge \dots \ge \lambda _\ell >0\), termed the parts of \(\lambda \); the number \(\ell \) is called the length of the partition, denoted in general \(\ell (\lambda )\), and the number \(|\lambda |=\sum _{j=1}^{\ell }\lambda _j\) is called the weight of the partition. We shall use the notation \(\lambda \vdash n\) to indicate that \(\lambda \) is a partition of n, i.e. \(|\lambda |=n\).

We denote by \({\mathfrak {S}}_n\) the group of permutations of \(\{1,\dots ,n\}\); for any \(\lambda \vdash n\) let \(\mathrm {cyc}(\lambda )\subset {\mathfrak {S}}_n\) be the conjugacy class of permutations of cycle-type \(\lambda \). It is worth recalling that the centralizer of any permutation in \(\mathrm {cyc}(\lambda )\) has order

where the symbol \(|\cdot |\) denotes the cardinality of the set.
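The order of the centralizer can be checked by brute force on small symmetric groups. The sketch below assumes the standard closed form \(z_\lambda =\prod _{i\ge 1}i^{m_i}\,m_i!\), where \(m_i\) is the number of parts of \(\lambda \) equal to i, and compares it with \(n!/|\mathrm {cyc}(\lambda )|\); the function names are hypothetical.

```python
from collections import Counter
from itertools import permutations
from math import factorial

def z_lambda(lam):
    """Closed form prod_i i^{m_i} m_i!, with m_i the multiplicity of the part i."""
    z = 1
    for part, mult in Counter(lam).items():
        z *= part ** mult * factorial(mult)
    return z

def cycle_type(p):
    """Cycle type of a permutation of {0,...,n-1}, as a weakly decreasing tuple."""
    seen, lengths = set(), []
    for start in range(len(p)):
        if start in seen:
            continue
        length, j = 0, start
        while j not in seen:
            seen.add(j)
            j = p[j]
            length += 1
        lengths.append(length)
    return tuple(sorted(lengths, reverse=True))

def class_size(lam):
    """|cyc(lambda)|, counted by enumerating the whole symmetric group."""
    n = sum(lam)
    return sum(1 for p in permutations(range(n)) if cycle_type(p) == tuple(lam))

# Orbit-stabilizer: z_lambda * |cyc(lambda)| = n!
for lam in [(4,), (3, 1), (2, 2), (2, 1, 1), (1, 1, 1, 1)]:
    print(lam, z_lambda(lam), class_size(lam))
```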

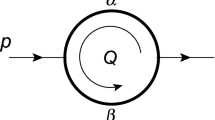

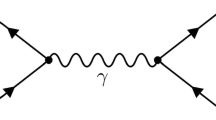

Hurwitz numbers were introduced by Hurwitz to count the number of non-equivalent branched coverings of the Riemann sphere with a given set of branch points and branch profile [37]. This problem is essentially equivalent to counting factorizations in the symmetric groups with permutations of assigned cycle-type and, possibly, other constraints. It is a problem of long-standing interest in combinatorics, geometry, and physics [3, 4, 32,33,34,35,36, 47].

The type of Hurwitz numbers relevant to our study is defined as follows.

Definition 1.2

Given \(n\ge 0\), three partitions \(\lambda ,\mu ,\nu \vdash n\) and an integer \(g\ge 0\), we define \(h_g(\lambda ,\mu ,\nu )\) to be the number of tuples \((\pi _1,\pi _2,\tau _1,\dots ,\tau _r)\) of permutations in \({\mathfrak {S}}_n\) such that

1. \(r=2g-2-n+\ell (\mu )+\ell (\nu )+\ell (\lambda )\),

2. \(\pi _1\in \mathrm {cyc}(\mu )\), \(\pi _2\in \mathrm {cyc}(\nu )\),

3. \(\tau _i=(a_i,b_i)\) are transpositions, with \(a_i<b_i\) and \(b_1\le \cdots \le b_r\), and

4. \(\pi _1\pi _2\tau _1\cdots \tau _r\in \mathrm {cyc}(\lambda )\).
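For small n the four conditions above can be enumerated directly. The following brute-force sketch counts the tuples of Definition 1.2; it is only meant as an illustration (the function names and the 0-based labelling of \(\{1,\dots ,n\}\) are assumptions of this sketch), and its cost grows far too quickly to be useful beyond very small cases.

```python
from itertools import permutations, product

def cycle_type(p):
    """Cycle type of a permutation of {0,...,n-1}, as a weakly decreasing tuple."""
    seen, lengths = set(), []
    for start in range(len(p)):
        if start in seen:
            continue
        length, j = 0, start
        while j not in seen:
            seen.add(j)
            j = p[j]
            length += 1
        lengths.append(length)
    return tuple(sorted(lengths, reverse=True))

def compose(p, q):
    """Product p*q acting as i -> p[q[i]]."""
    return tuple(p[q[i]] for i in range(len(p)))

def monotone_hurwitz(lam, mu, nu, g):
    """Number of tuples (pi_1, pi_2, tau_1, ..., tau_r) satisfying conditions 1-4."""
    n = sum(lam)
    r = 2 * g - 2 - n + len(mu) + len(nu) + len(lam)  # condition 1
    if r < 0:
        return 0
    perms = list(permutations(range(n)))
    class_mu = [p for p in perms if cycle_type(p) == tuple(mu)]  # condition 2
    class_nu = [p for p in perms if cycle_type(p) == tuple(nu)]
    transpositions = [(a, b) for b in range(n) for a in range(b)]  # a < b
    count = 0
    for taus in product(transpositions, repeat=r):
        # condition 3: the larger entries b_i are weakly increasing
        if any(taus[i][1] > taus[i + 1][1] for i in range(r - 1)):
            continue
        tail = tuple(range(n))
        for a, b in taus:
            swap = list(range(n))
            swap[a], swap[b] = swap[b], swap[a]
            tail = compose(tail, tuple(swap))
        for p1 in class_mu:
            for p2 in class_nu:
                # condition 4: the full product has cycle type lambda
                if cycle_type(compose(compose(p1, p2), tail)) == tuple(lam):
                    count += 1
    return count

for nu in [(3,), (2, 1), (1, 1, 1)]:
    print(nu, monotone_hurwitz((1, 1, 1), (3,), nu, 0))
```

For \(\lambda =(1,1,1)\), \(\mu =(3)\) and \(g=0\) this reproduces the counts 2, 6, 4 discussed in Example 1.9; in that row every tuple contains a 3-cycle, so the connected and non-connected counts coincide.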

The relation of these Hurwitz numbers to the JUE is expressed by the following result.

Theorem 1.3

Under the re-scaling \(\alpha =(c_\alpha -1)N\), \(\beta =(c_\beta -1)N\), for any partition \(\lambda \) we have the following Laurent expansions as \(N\rightarrow \infty \);

where \(z_\lambda \) is given in (1.3) and \(h_g(\lambda ,\mu ,\nu )\) are the monotone triple Hurwitz numbers of Definition 1.2.

The proof is in Sect. 2. There is a similar result for the Laguerre Unitary Ensemble (LUE) [17] which is recovered by the limit explained in Remark 1.10. However, the proof presented in this paper uses substantially different methods than those employed in [17]; in particular our proof is completely self-contained and uses the notion of multiparametric weighted Hurwitz numbers, see, e.g. [36] and Sect. 2.1.

Remark 1.4

(Connected correlators and connected Hurwitz numbers) By standard combinatorial methods [49] it is possible to conclude from Theorem 1.3 that the connected JUE correlators

admit the same large N expansions as in Theorem 1.3, with the Hurwitz numbers \(h_g(\lambda ,\mu ,\nu )\) replaced by their connected counterparts \(h_g^{\mathsf{c}}(\lambda ,\mu ,\nu )\). The latter are defined as the number of tuples \((\pi _1,\pi _2,\tau _1,\dots ,\tau _r)\) satisfying (1)–(4) in Definition 1.2 and the additional constraint that the subgroup generated by \(\pi _1,\pi _2,\tau _1,\dots ,\tau _r\) acts transitively on \(\{1,\dots ,n\}\).
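The transitivity constraint is easy to test in practice: it suffices to check that the orbit of a single point under the generators is the whole set. A minimal sketch, with permutations stored as tuples on \(\{0,\dots ,n-1\}\) (a labelling chosen here for convenience):

```python
def is_transitive(generators, n):
    """True if the group generated by `generators` acts transitively on {0,...,n-1}.

    For permutations of a finite set, inverses are positive powers, so the
    orbit of a point under the group equals its orbit under repeated
    application of the generators themselves.
    """
    orbit = {0}
    frontier = [0]
    while frontier:
        i = frontier.pop()
        for g in generators:
            j = g[i]
            if j not in orbit:
                orbit.add(j)
                frontier.append(j)
    return len(orbit) == n

print(is_transitive([(1, 2, 0)], 3))             # a 3-cycle
print(is_transitive([(1, 0, 2)], 3))             # a single transposition in S_3
print(is_transitive([(1, 0, 2), (0, 2, 1)], 3))  # two overlapping transpositions
```

Filtering the tuples of Definition 1.2 with such a predicate yields the connected counts.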

1.2 Computing correlators of hermitian models

To provide an effective computation of the JUE correlators we first consider the general case of a measure on \({\mathcal {H}}_N(I)\) of the form

with normalizing constant \(C_N=\int _{{\mathcal {H}}_N(I)}\exp \mathrm {tr}\,V(X)\mathrm dX\). Here V(x) is a continuous function of \(x\in I^\circ \) (the interior of I) and we assume that \(\exp V(x)={\mathcal {O}}\left( |x-x_0|^{-1+\varepsilon }\right) \) for some \(\varepsilon >0\) as \(x\in I^\circ \) approaches a finite endpoint \(x_0\) of I; further, if I extends to \(\pm \infty \) we assume that \(V(x)\rightarrow -\infty \) fast enough as \(x\rightarrow \pm \infty \) in order for the measure (1.4) to have finite moments of all orders, so that the associated orthogonal polynomials exist. The expression \(\mathrm {tr}\,V(X)\) in (1.4) for an hermitian matrix X is defined via the spectral theorem. The JUE is recovered for \(I=[0,1]\) and \(V(x)=\alpha \log x+\beta \log (1-x)\), \(\mathrm {Re}\,\alpha ,\mathrm {Re}\,\beta >-1\).

Introduce the cumulant functions

which are analytic functions of \(z_1,\dots ,z_\ell \in {\mathbb {C}}\setminus I\), symmetric in the variables \(z_1,\dots ,z_\ell \). To simplify the analysis it is convenient to introduce the connected cumulant functions

from which the cumulant functions can be recovered by

For example, \({\mathscr {C}}_1(z)={\mathscr {C}}_1^{\mathsf{c}}(z)\) and \({\mathscr {C}}_{2}^{\mathsf{c}}(z_1,z_2)={\mathscr {C}}_{2}(z_1,z_2)-{\mathscr {C}}_{1}(z_1){\mathscr {C}}_1(z_2)\).
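The passage between cumulant functions and their connected counterparts is the usual moment-cumulant relation over set partitions. The sketch below assumes this standard form (a sum over set partitions of products of connected functions) and inverts it recursively; `moment` stands for any function of a finite index set, and all names are illustrative.

```python
from itertools import combinations

def connected(moment, indices):
    """Connected part kappa(S), from C(S) = sum over set partitions of prod kappa(B).

    `moment` maps a frozenset of indices to a number. The recursion singles
    out the block B containing the smallest index:
        C(S) = sum_{B containing min S} kappa(B) * C(S minus B),  C(empty) = 1.
    """
    cache = {}

    def kappa(S):
        if S not in cache:
            first = min(S)
            rest = sorted(S - {first})
            total = moment(S)
            # subtract every contribution in which B is a proper subset of S
            for size in range(len(rest)):
                for chosen in combinations(rest, size):
                    B = frozenset((first,) + chosen)
                    total -= kappa(B) * moment(S - B)
            cache[S] = total
        return cache[S]

    return kappa(frozenset(indices))

# Two-point case: kappa_2 = C_2 - C_1 C_1, as in the example above.
values = {frozenset({0}): 3, frozenset({1}): 5, frozenset({0, 1}): 17}
print(connected(values.__getitem__, [0, 1]))

# Bell numbers as moments (Poisson with unit mean): all cumulants equal 1.
bell = [1, 1, 2, 5, 15, 52]
print([connected(lambda S: bell[len(S)], range(k)) for k in range(1, 6)])
```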

We now express the connected cumulant functions in terms of the monic orthogonal polynomials \(P_\ell (z)=z^\ell +\dots \) uniquely defined by

and of the \(2\times 2\) matrix

which is the well-known solution to the Riemann-Hilbert problem of orthogonal polynomials [29]; it is an analytic function of \(z\in {\mathbb {C}}\setminus I\).

Theorem 1.5

Let

with \(Y_N(z)\) as in (1.9). Then the connected cumulant functions (1.6) are given by

where prime in the second formula denotes derivative with respect to z and \(\mathrm {cyc}((\ell ))\) in the last formula is the set of \(\ell \)-cycles in the symmetric group \({\mathfrak {S}}_\ell \).

The proof is given in Sect. 3. Formulæ of this sort for correlators of hermitian matrix models have been recently discussed in the literature, see, e.g. [21, 27]. They are directly related to the theory of tau functions (formal [21] and isomonodromic [9, 31]) and to topological recursion theory [5, 6, 15, 28]. Incidentally, similar formulæ also appear for matrix models with external source [7, 8, 11,12,13, 41]. In Sect. 3 we provide an extremely direct derivation based solely on the Riemann-Hilbert characterization of the matrix \(Y_N(z)\).

We can apply these formulæ to the Jacobi measure \(\mathrm dm_N^{\mathsf{J}}\), see (1.1), for which the support is \(I=[0,1]\). Therefore we can expand the cumulants near the points \(z=0\) or \(z=\infty \); the expansion at \(z=1\) could also be considered, but we omit it as it is recovered from the one at \(z=0\) by exchanging \(\alpha \) with \(\beta \) and mapping \(z \mapsto 1-z\), see (1.1). Using the definition in (1.5) and (1.6), we obtain the generating functions for the JUE connected correlators (1.2), namely

where

On the other hand, performing the same expansion on the right hand side of the expressions for the cumulants in Theorem 1.5, we have an explicit tool to compute the correlators.

For the specific case of Jacobi polynomials, we prove in Sect. 4 (Proposition 4.1) that at \(z=\infty \) the matrix R(z) has the Taylor expansion (valid for \(|z|>1\)) of the form \(R(z) =T^{-1}R^{[\infty ]}(z)T\) and the Poincaré asymptotic expansion \(R(z) \sim T^{-1} R^{[0]}(z)T \) at \(z=0\) valid in the sector \(0<\arg z<2\pi \). Here T is the constant matrix

with \(h_\ell ^{\mathsf{J}}\) given in (4.2), and the series \(R^{[\infty ]}(z),R^{[0]}(z)\) are

where

and

Here \({}_4F_3\) is the generalized hypergeometric function, and we use the rising factorial

For example, the first few terms read

Since the constant conjugation by T in (1.11) of the matrix R(z) does not affect the formulæ of Theorem 1.5 (see also Sect. 4) we obtain the following corollary, which provides explicit formulæ for the generating functions of the correlators.

Corollary 1.6

Let \(R^{[\infty ]}(z)\) and \(R^{[0]}(z)\) be the explicit series given in (1.12) and (1.13). The one-point generating functions (1.10) of the JUE are

where \(R_{1,1}^{[\infty ]},R_{1,1}^{[0]}\) denote the (1, 1)-entry of \(R^{[\infty ]},R^{[0]}\) respectively. The multi-point generating functions (1.10) are

The proof is in Sect. 4.2, and is obtained from the formulæ of Theorem 1.5 by expansion at \(z_i\rightarrow \infty ,0\). In this corollary, the formulæ on the right hand side are interpreted as power series expansions at \(z=0\) or \(z=\infty \); to this end we remark that for \(\ell \ge 2\) these series are well defined, as follows from the fact that the corresponding analytic functions are holomorphic in \(({\mathbb {C}}\setminus I)^\ell \) and in particular regular along the diagonals \(z_a=z_b\) for \(a\not =b\) (see also Remark 3.2 and Lemma 3.3).

The coefficients of \(R^{[0]}(z)\) and \(R^{[\infty ]}(z)\) are rational functions of \(N,\alpha ,\beta \) and we conclude that JUE correlators extend to rational functions of \(N,\alpha ,\beta \).

Examining more closely the formula for \({\mathscr {F}}_{1,\infty }\) we see that

which by the explicit expansion \(R^{[\infty ]}(z)\) in (1.12) implies

where \(A_k(N)\) is defined in (1.14). Reasoning in the same way for \({\mathscr {F}}_{1,0}(z)\) we obtain

where \(\widetilde{A}_k(N)\) is defined in (1.15). Equations (1.17) and (1.18) agree with the results of [18].

Remark 1.7

The coefficients \(A_\ell (N),B_\ell (N)\) can be expressed in terms of Wilson polynomials [40, 50], which are defined by

for more details see Proposition 4.4. Thus the formulæ of Corollary 1.6 extend the connection between JUE moments \(\left\langle \mathrm {tr}\,X^k\right\rangle \) and Wilson polynomials described in [18] (see also [42, 43]) to the JUE multi-point correlators \(\left\langle \mathrm {tr}\,X^{k_1}\cdots \mathrm {tr}\,X^{k_\ell }\right\rangle \).

Remark 1.8

(JUE mixed correlators) We could consider more general generating functions as follows; take \(q,r,s\ge 0\) with \(q+r+s>0\) and expand the cumulant function

for the Jacobi measure as \(z_i\rightarrow \infty ,w_i\rightarrow 0,y_i\rightarrow 1\), to obtain the generating function

It is then clear that we can compute the coefficients of such series in terms of the matrix series \(R^{[0]},R^{[\infty ]}\), and thus of Wilson polynomials, by the formulæ of Theorem 1.5; note that the expansion of R(z) at \(z=1\) is obtained from \(R^{[0]}\) by exchanging \(\alpha \) with \(\beta \) and z with \(1-z\).

Example 1.9

From the formulæ of Corollary 1.6 we can compute

With the substitution \(\alpha =(c_\alpha -1)N\) and \(\beta =(c_\beta -1)N\) we have the large N expansion

Matching the coefficients as in Theorem 1.3 we get the values for \(h_{g=0}^{\mathsf{c}}(\lambda =(1,1,1),\mu ,\nu )\) (the connected Hurwitz numbers defined in Remark 1.4) reported in the following table;

For example, the numbers in the first row (\(\mu =(3)\)) can be read from the following factorizations in \({\mathfrak {S}}_3\). To list them let us first note that we have \(\mathrm {cyc}(\lambda )=\{\mathrm{Id}\}\) and \(\mathrm {cyc}(\mu )=\{(123),(132)\}\); therefore for \(\nu =(3)\) we have 2 factorizations (\(r=\text{ number } \text{ of } \text{ transpositions }=0\))

for \(\nu =(2,1)\) (\(\mathrm {cyc}(\nu )=\{(12),(23),(13)\}\)) we have 6 factorizations (\(r=1\))

and for \(\nu =(1,1,1)\) we have the 4 factorizations (\(r=2\), here the monotone condition plays a role)

Similarly we can compute from Corollary 1.6

and from Theorem 1.3 we recognize the connected Hurwitz numbers tabulated above.

Remark 1.10

(Laguerre limit) There is a scaling limit of the JUE correlators to the LUE correlators; if \(k_1,\cdots ,k_\ell \) are arbitrary integers we have

Therefore the results of the present work about the JUE directly imply analogous results for the LUE; these results are already known from [17, 31]. See also Remark 2.9.

2 JUE Correlators and Hurwitz numbers

In this section we prove Theorem 1.3. For the proof we will consider the so-called multiparametric weighted Hurwitz numbers; this far-reaching generalization of classical Hurwitz numbers was introduced and related to tau functions of integrable systems in several works by Harnad, Orlov [36], and Guay-Paquet [35], after the impetus of the seminal work of Okounkov [47].

2.1 Multiparametric weighted Hurwitz numbers

Let \({\mathbb {C}}[{\mathfrak {S}}_n]\) be the group algebra of the symmetric group \({\mathfrak {S}}_n\); namely, \({\mathbb {C}}[{\mathfrak {S}}_n]\) consists of formal linear combinations with complex coefficients of permutations of \(\{1,\dots ,n\}\). We shall need two important types of elements of \({\mathbb {C}}[{\mathfrak {S}}_n]\), which we now introduce. For any \(\lambda \vdash n\) denote

where we recall that \(\mathrm {cyc}(\lambda )\subset {\mathfrak {S}}_n\) is the conjugacy class of permutations of cycle-type \(\lambda \). It is well known [48] that the elements \({\mathcal {C}}_\lambda \), for \(\lambda \vdash n\), form a linear basis of the center \(Z({\mathbb {C}}[{\mathfrak {S}}_n])\) of the group algebra.

The second class of elements consists of the Young-Jucys-Murphy (YJM) elements [39, 46] \({\mathcal {J}}_a\), for \(a=1,\dots ,n\), defined as

denoting (a, b) (with \(a<b\)) the transposition of \(\{1,\dots ,n\}\) switching a, b and fixing everything else.

Although the YJM elements are not individually central, they commute amongst themselves, and symmetric polynomials of n variables evaluated at \({\mathcal {J}}_1,\dots ,{\mathcal {J}}_n\) generate \(Z({\mathbb {C}}[{\mathfrak {S}}_n])\). Indeed the following relation [39] holds in \(Z({\mathbb {C}}[{\mathfrak {S}}_n])[\epsilon ]\);
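These properties can be verified by direct computation in a small group algebra. The sketch below assumes the standard definition \({\mathcal {J}}_a=(1,a)+\dots +(a-1,a)\) (so \({\mathcal {J}}_1=0\)) and checks the relation in the form usually attributed to Jucys, \(\prod _{a=1}^n(1+\epsilon {\mathcal {J}}_a)=\sum _{\lambda \vdash n}\epsilon ^{n-\ell (\lambda )}{\mathcal {C}}_\lambda \); group algebra elements are stored as dictionaries from permutations to coefficients, and all names are illustrative.

```python
from itertools import permutations

def compose(p, q):
    """Product p*q acting as i -> p[q[i]]."""
    return tuple(p[q[i]] for i in range(len(p)))

def multiply(x, y):
    """Product of two group algebra elements (dict: permutation -> coefficient)."""
    out = {}
    for p, a in x.items():
        for q, b in y.items():
            r = compose(p, q)
            out[r] = out.get(r, 0) + a * b
    return {p: c for p, c in out.items() if c != 0}

def add(x, y):
    out = dict(x)
    for p, c in y.items():
        out[p] = out.get(p, 0) + c
    return {p: c for p, c in out.items() if c != 0}

def jucys_murphy(n, a):
    """J_a = (1 a) + ... + (a-1 a) for 1-based a; the empty dict is zero."""
    elem = {}
    for b in range(1, a):
        t = list(range(n))
        t[a - 1], t[b - 1] = t[b - 1], t[a - 1]
        elem[tuple(t)] = 1
    return elem

def num_cycles(p):
    seen, count = set(), 0
    for start in range(len(p)):
        if start not in seen:
            count += 1
            j = start
            while j not in seen:
                seen.add(j)
                j = p[j]
    return count

def jucys_product(n):
    """Coefficients of prod_a (1 + eps*J_a), as a list indexed by the power of eps."""
    poly = [{tuple(range(n)): 1}]
    for a in range(1, n + 1):
        J = jucys_murphy(n, a)
        new = [dict() for _ in range(len(poly) + 1)]
        for k, elem in enumerate(poly):
            new[k] = add(new[k], elem)
            new[k + 1] = add(new[k + 1], multiply(elem, J))
        poly = new
    return poly

n = 4
poly = jucys_product(n)
for k, elem in enumerate(poly):
    print(k, len(elem))  # number of permutations with n - (number of cycles) = k
```

The coefficient of \(\epsilon ^k\) should contain each permutation with \(n-k\) cycles exactly once, which is what the printed class sizes reflect.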

With these preliminaries we are ready to introduce the class of multiparametric Hurwitz numbers [10, 35, 36] which we need. Fix the real parameters \(\gamma _1,\dots ,\gamma _L\) and \(\delta _1,\dots ,\delta _M\) (\(L,M\ge 0\)) and collect them into the rational function

Then, the (rationally weighted) multiparametric (single) Hurwitz numbers \(H_G^{d}(\lambda )\), associated to the function G in (2.3) and labeled by the integer \(d\ge 1\) and by the partition \(\lambda \vdash n\), are defined by

where the notation \([\epsilon ^d {\mathcal {C}}_\lambda ]\) denotes the coefficient in front of \(\epsilon ^d{\mathcal {C}}_\lambda \) in the expansion of \(\prod _{a=1}^nG\left( \epsilon {\mathcal {J}}_a\right) \in Z({\mathbb {C}}[{\mathfrak {S}}_n])[[\epsilon ]]\) in the basis \(\{{\mathcal {C}}_\lambda \}\); to compute the expression \(G\left( \epsilon {\mathcal {J}}_a\right) \in Z({\mathbb {C}}[{\mathfrak {S}}_n])[[\epsilon ]]\), the denominators in (2.3) are to be understood as \((1-\delta _jz)^{-1}=\sum _{k\ge 0}\delta _j^kz^k\).

2.2 Generating functions of multiparametric Hurwitz numbers in the Schur basis

The following result (see [36]) expresses the generating functions of multiparametric weighted Hurwitz numbers in the Schur basis. In this context, the latter is regarded as the basis \(\{s_\lambda ({\mathbf {t}})\}\) (\(\lambda \) running in the set of all partitions) of the space of weighted homogeneous polynomials in \({\mathbf {t}}=(t_1,t_2,\dots )\), with \(\deg t_k=k\), whose elements are

where the complete homogeneous symmetric polynomials \(h_k({\mathbf {t}})\) are defined by the generating series (see Footnote 1)

In the following we shall denote \({\mathcal {P}}\) the set of all partitions.

Proposition 2.1

([36]) The generating function

of multiparametric weighted Hurwitz numbers (2.4) associated to the rational function (2.3) is equivalently expressed as

where \(s_\lambda ({\mathbf {t}})\) are the Schur polynomials (2.5) and the coefficients are given explicitly by

\(\dim \lambda \) being the dimension of the irreducible representation of \({\mathfrak {S}}_{|\lambda |}\) associated with \(\lambda \).

Before the proof we give a couple of remarks.

1. In (2.8) and below we use the notation \((i,j)\in \lambda \) where the partition \(\lambda \) is identified with its diagram, i.e. the set of \((i,j)\in {\mathbb {Z}}^2\) satisfying \(1\le i\le \ell (\lambda )\), \(1\le j\le \lambda _i\). For example, the diagram of the partition \(\lambda =(4,2,2,1)\vdash 9\) is depicted below;

$$\begin{aligned} \begin{array}{c|cccc} &{} j=1 &{} j=2 &{} j=3 &{} j=4 \\ \hline i=1 &{} \bullet &{}\bullet &{}\bullet &{}\bullet \\ i=2 &{} \bullet &{}\bullet &{}&{} \\ i=3 &{} \bullet &{}\bullet &{}&{} \\ i=4 &{} \bullet &{}&{}&{} \end{array} \end{aligned}$$(2.9)

2. There exist several equivalent formulæ for \(\dim \lambda \), including the well-known hook-length formula; for later convenience we recall the expression

$$\begin{aligned} \frac{\dim \lambda }{|\lambda |!}= \frac{\prod _{1 \le i < j \le N}(\lambda _i-\lambda _j+j-i)}{\prod _{k=1}^N(\lambda _k-k+N)!}, \end{aligned}$$(2.10)

valid for all \(N\ge \ell (\lambda )\), setting \(\lambda _i=0\) for all \(\ell (\lambda )<i\le N\).
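Both remarks lend themselves to a quick numerical check. The sketch below lists the values \(j-i\) over the diagram of \(\lambda \) and evaluates \(\dim \lambda \) through (2.10) for several values of N, comparing with the hook-length formula; the function names are hypothetical.

```python
from fractions import Fraction
from math import factorial

def contents(lam):
    """Values j - i for (i, j) in the diagram of lam (1-based rows and columns)."""
    return [j - i for i, row in enumerate(lam, start=1) for j in range(1, row + 1)]

def dim_via_2_10(lam, N):
    """dim(lambda) from (2.10); requires N >= len(lam)."""
    padded = list(lam) + [0] * (N - len(lam))
    num = 1
    for i in range(N):
        for j in range(i + 1, N):
            num *= padded[i] - padded[j] + (j - i)
    den = 1
    for k in range(N):
        den *= factorial(padded[k] - (k + 1) + N)  # (lambda_k - k + N)! with 1-based k
    return Fraction(num, den) * factorial(sum(lam))

def dim_via_hooks(lam):
    """dim(lambda) from the hook-length formula n! / prod(hook lengths)."""
    column_heights = [sum(1 for row in lam if row > c) for c in range(lam[0])]
    hooks = 1
    for i, row in enumerate(lam):
        for j in range(row):
            arm = row - j - 1
            leg = column_heights[j] - i - 1
            hooks *= arm + leg + 1
    return factorial(sum(lam)) // hooks

lam = (4, 2, 2, 1)
print(contents(lam))
print(dim_via_hooks(lam), [dim_via_2_10(lam, N) for N in (4, 5, 6)])
```

The independence of (2.10) from the choice of \(N\ge \ell (\lambda )\) is visible in the last line of output.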

Proof of Proposition 2.1

We need a few preliminaries. First we recall that \(Z\left( {\mathbb {C}}[{\mathfrak {S}}_n]\right) \) is a semi-simple commutative algebra; a basis of idempotents is given by (see, e.g. [48])

where \(\chi _\lambda ^\mu \) are the characters of the symmetric group and \({\mathcal {C}}_\mu \) are given in (2.1). Namely

For any symmetric polynomial \(p(y_1,\dots ,y_n)\) in n variables we have already mentioned that \(p({\mathcal {J}}_1,\dots ,{\mathcal {J}}_n)\) belongs to \(Z\left( {\mathbb {C}}[{\mathfrak {S}}_n]\right) \); central elements are diagonal on the basis of idempotents and it is proven in [39] that

where in the right hand side we denote \(p\left( \{j-i\}_{(i,j)\in \lambda }\right) \) the evaluation of the symmetric polynomial p at the n values of \(j-i\) for \((i,j)\in {\mathbb {Z}}^2\) in the diagram of \(\lambda \vdash n\); in the example \(\lambda =(4,2,2,1)\vdash 9\) above, see (2.9), this denotes the evaluation \(p(0,1,2,3,-1,0,-2,-1,-3)\).

We are ready for the proof proper. First note that by (2.12) and (2.8) we have

which implies, using (2.11), that

By the definition of \(H_G^d(\mu )\) in (2.4) we can rewrite the last identity as

Since \({\mathcal {C}}_\mu \) form a basis of \(Z\left( {\mathbb {C}}[{\mathfrak {S}}_n]\right) \) we get that for any partition \(\mu \)

Multiplying this identity by \(\prod _{i=1}^{\ell (\mu )}t_{\mu _i}\) and summing over all partitions \(\mu \), on the left we obtain (2.6) and on the right, thanks to the well-known identity [45]

we obtain (2.7). The proof is complete. \(\square \)

Remark 2.2

This result is used by the authors of [36] to prove that the generating function \(\tau _G(\epsilon ;{\mathbf {t}})\) is a one-parameter family in \(\epsilon \) of Kadomtsev-Petviashvili tau functions in the times \({\mathbf {t}}\); a tau function such that the coefficients of the Schur expansion have the form (2.8) is termed a hypergeometric tau function. It is also worth remarking that the proposition stated here is a reduction of a more general result, proved in [35], dealing with generating functions of double (weighted) Hurwitz numbers. In this general setting, the corresponding integrable hierarchy is the 2D Toda hierarchy.

2.3 JUE partition functions

Let us introduce the formal generating functions

of JUE correlators; the sum in the right hand side is a formal power series in \(\mathbf{u}\) running over all partitions \(\lambda \), with the combinatorial factor \(z_\lambda \) defined in (1.3) (see Footnote 2). We call \(Z^+_N({\mathbf {u}})\) (resp. \(Z^-_N({\mathbf {u}})\)) the positive (resp. negative) JUE partition function. Although it will not be needed in the following, we mention that these partition functions are Toda tau functions in the times \(u_1,u_2,\dots \) [1, 2, 19].

Our goal in this paragraph is to show that the JUE partition functions can be expressed in the form (2.7) for appropriate choices of G (see Corollary 2.7).

The first step is to expand the JUE partition functions in the Schur basis; this is achieved by the following well-known general lemma, whose proof we report for the reader’s convenience. The idea of expanding a hermitian matrix model partition function over the Schur basis has been recently used in the computation of correlators in [38].

We first introduce the following notations

for the Vandermonde determinant and

for the characters of \(\mathrm{GL}_N\); again, we set \(\lambda _i=0\) for all \(\ell (\lambda )<i\le N\).

Lemma 2.3

For any potential V(x) (\(x\in I\)) we have

where the Schur polynomials are defined in (2.5) and the coefficients are

Here \({\underline{x}}=(x_1,\dots ,x_N)\) and \(\underline{x}^{-1}=(x_1^{-1},\dots ,x_N^{-1})\).

Proof

We have

where we use the standard decomposition \(\mathrm dX=\Delta ^2(\underline{x})\mathrm d^N{\underline{x}}\mathrm dU\) of the Lebesgue measure into eigenvalues \({\underline{x}}=(x_1,\dots ,x_N)\) and eigenvectors \(U\in \mathrm {U}_N\) of the hermitian matrix \(X=U\,\mathrm {diag}(x_1,\dots ,x_N)\,U^\dagger \), with \(\mathrm dU\) a Haar measure on \(\mathrm {U}_N\) (whose normalization is irrelevant as it cancels in (2.16) between numerator and denominator). The proof follows by an application of the identity

which is nothing but a form of Cauchy identity, see, e.g. [49]. \(\square \)

Remark 2.4

By applying the Andréief identity

it is straightforward to show that the coefficients \(c_{\lambda ,N}\) in (2.15) can also be expressed as

see also [38]. However, for our purposes it is more convenient to work with the representation (2.15).
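The Andréief identity holds for any measure, so it can be tested exactly on a discrete one. The sketch below assumes the general form \(\frac{1}{N!}\int \det [f_i(x_j)]\det [g_i(x_j)]\prod _j\mathrm d\mu (x_j)=\det \left[ \int f_ig_j\,\mathrm d\mu \right] \) and replaces the integrals by finite weighted sums; the test functions, points and weights are arbitrary illustrative choices.

```python
from fractions import Fraction
from itertools import permutations, product
from math import factorial

def det(m):
    """Determinant by the Leibniz formula (adequate for small matrices)."""
    n = len(m)
    total = 0
    for perm in permutations(range(n)):
        sign = 1
        for i in range(n):
            for j in range(i + 1, n):
                if perm[i] > perm[j]:
                    sign = -sign
        term = sign
        for i in range(n):
            term *= m[i][perm[i]]
        total += term
    return total

def andreief_sides(fs, gs, points, weights):
    """Both sides of the identity for the measure sum_k weights[k] * delta(points[k])."""
    N = len(fs)
    lhs = 0
    for idx in product(range(len(points)), repeat=N):
        xs = [points[k] for k in idx]
        w = 1
        for k in idx:
            w *= weights[k]
        F = [[f(x) for x in xs] for f in fs]
        G = [[g(x) for x in xs] for g in gs]
        lhs += det(F) * det(G) * w
    lhs = Fraction(lhs, factorial(N))
    gram = [[sum(f(x) * g(x) * w for x, w in zip(points, weights)) for g in gs] for f in fs]
    return lhs, det(gram)

fs = [lambda x: 1, lambda x: x]
gs = [lambda x: x ** 2, lambda x: x + 1]
print(andreief_sides(fs, gs, points=[0, 1, 3], weights=[1, 2, 5]))
```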

Applying this general lemma to \(I=[0,1]\) and \(V(x)=\alpha \log x+\beta \log (1-x)\) we can expand the positive and negative JUE partition functions in the Schur basis as

where

For the negative coefficients \(c_{\lambda ,N}^-\) we shall use the following elementary lemma.

Lemma 2.5

For any partition \(\lambda =(\lambda _1,\dots ,\lambda _\ell )\) of length \(\ell \le N\) we have

where \(\widehat{\lambda }\) is the partition of length \(<N\) whose parts are \(\widehat{\lambda }_j=\lambda _1-\lambda _{N-j+1}\).

Proof

The proof follows from the following chain of equalities;

In the first step we have shuffled the columns as \(j\mapsto N-j+1\), then we have multiplied both numerator and denominator by \((x_1\cdots x_N)^{N+\lambda _1}\), and finally we have applied the definition (2.14). \(\square \)
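The steps just described can be tested numerically. The displayed statement of the lemma is not reproduced in this sketch; what is checked is the identity these steps lead to under the assumption \(s_\lambda (\underline{x})=\det (x_i^{\lambda _j+N-j})/\det (x_i^{N-j})\), namely \(s_\lambda (\underline{x}^{-1})=(x_1\cdots x_N)^{-\lambda _1}s_{\widehat{\lambda }}(\underline{x})\). Exact rational arithmetic avoids rounding issues; all names are illustrative.

```python
from fractions import Fraction
from itertools import permutations

def det(m):
    """Determinant by the Leibniz formula (adequate for small matrices)."""
    n = len(m)
    total = 0
    for perm in permutations(range(n)):
        sign = 1
        for i in range(n):
            for j in range(i + 1, n):
                if perm[i] > perm[j]:
                    sign = -sign
        term = sign
        for i in range(n):
            term *= m[i][perm[i]]
        total += term
    return total

def schur(lam, xs):
    """Ratio of alternants det(x_i^{lam_j + N - j}) / det(x_i^{N - j})."""
    N = len(xs)
    padded = list(lam) + [0] * (N - len(lam))
    num = det([[x ** (padded[j] + N - 1 - j) for j in range(N)] for x in xs])
    den = det([[x ** (N - 1 - j) for j in range(N)] for x in xs])
    return num / den

def hat(lam, N):
    """Parts hat_j = lam_1 - lam_{N-j+1} (1-based j), padding lam with zeros."""
    padded = list(lam) + [0] * (N - len(lam))
    return [padded[0] - padded[N - 1 - j] for j in range(N)]

xs = [Fraction(2), Fraction(3), Fraction(5)]
inverse_xs = [1 / x for x in xs]
lam = (2, 1)
scale = (xs[0] * xs[1] * xs[2]) ** lam[0]
print(schur(lam, inverse_xs), schur(hat(lam, 3), xs) / scale)
```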

For the simplification of the coefficients (2.18) we rely on the following Schur-Selberg integral

for which we refer, e.g. to [45, page 385]. The above allows us to prove the following proposition.

Proposition 2.6

We have

Proof

We start with \(c_{\lambda ,N}^+\); using (2.18), (2.19), and (2.10) we compute

We recall that we are using the notation (1.16) for the rising factorial. For \(c_{\lambda ,N}^-\) we first note that, thanks to Lemma 2.5 and (2.19), we have

then with similar computations as above we obtain

\(\square \)

This proposition enables us to identify the Jacobi generating function (2.13) with the generating function of multiparametric weighted Hurwitz numbers in (2.6). Indeed we have the following result.

Corollary 2.7

Let \(c_\alpha :=1+\alpha /N\) and \(c_\beta :=1+\beta /N\); then the Jacobi formal partition functions in (2.13) take the form

where \(\tau _G\) is introduced in Proposition 2.1.

Proof

We first note that we can rewrite the expansion (2.17) as

with the sum over all partitions \({\mathcal {P}}\) and no longer restricted to \(\ell (\lambda )\le N\); this is clear as \(c_{\lambda ,N}^\pm =0\) whenever \(N=0,1,2,\dots \) and \(\ell (\lambda )>N\). Then the proof is immediate by the formula (2.8) for the coefficients \(r_\lambda ^{(G,\epsilon )}\), since (2.20) can be rewritten as

\(\square \)

2.4 Hurwitz numbers \(h_g(\lambda ,\mu ,\nu )\) and multiparametric Hurwitz numbers

We now connect the multiparametric Hurwitz numbers (2.4) for the functions \(G^\pm (z)\), appearing in Corollary 2.7, with the counting problem in Definition 1.2.

Proposition 2.8

If \(G(z)=\frac{(1+z)(1+\gamma z)}{1-\delta z}\), with \(\gamma \) and \(\delta \) parameters, then for all partitions \(\lambda \vdash n\) and all integers \(g\ge 0\) we have

where the triple monotone Hurwitz number \(h_g(\lambda ,\mu ,\nu )\) has been introduced in Definition 1.2.

Proof

We apply (2.2) to the first two factors of the following to get

By definition (2.4), extracting the coefficient of \(\epsilon ^d{\mathcal {C}}_\lambda \) and dividing by \(z_\lambda \) we obtain \(H^d_G(\lambda )\); therefore

where d, r, g in this identity are related via

The proof is complete by the identity \(z_\lambda |\mathrm {cyc}(\lambda )|=n!\). \(\square \)

2.5 Proof of Theorem 1.3

From Corollary 2.7 we have, with the scaling \(\alpha =(c_\alpha -1)N\), \(\beta =(c_\beta -1)N\),

where we have used Proposition 2.1. It follows from (2.13) that

and using finally Proposition 2.8 we have

The proof is complete.\(\square \)

Remark 2.9

Let us note that letting \(c_\beta \rightarrow \infty \) in the functions \(G^\pm \) of Corollary 2.7 we have \(G^+(z)\rightarrow (1+z)(1+z/c_\alpha )\) and \(G^-(z)\rightarrow (1+z)/(1-z/(c_\alpha -1))\). The Hurwitz numbers corresponding to these limit functions can be identified, as in Proposition 2.8, with double strictly (\(+\)) or weakly (−) monotone Hurwitz numbers, respectively. Thus, bearing in mind the scaling limit for \(\beta \rightarrow \infty \) of JUE correlators to the correlators of the Laguerre Unitary Ensemble of Remark 1.10, Theorem 1.3 recovers the results of [17].

3 Computing correlators of Hermitian models

In this section we prove Theorem 1.5. First of all, we introduce a few notations and recall some standard facts about orthogonal polynomials. We denote with \(P_\ell (z)\) the monic orthogonal polynomials, \(h_\ell =\int _IP^2_\ell (x)\mathrm {e}^{V(x)}\mathrm dx\), see (1.8), and

their Cauchy transforms. The matrix

introduced in (1.9), is an analytic function of \(z\in {\mathbb {C}}\setminus I\). It satisfies the jump condition

where we use the notation

and \(I^\circ \) is the interior of the interval I. As \(z\rightarrow \infty \) we have

where we introduce also the standard notation \(\sigma _3=\begin{pmatrix} 1&{} 0 \\ 0 &{} -1 \end{pmatrix}\).

It is well known [19] that

where

and \(k_N(x,y)\) is the Christoffel-Darboux kernel

with \(P_N(x)\) the monic orthogonal polynomials. Using the matrix entries of \(Y_N(z)\) in (3.2), the above expression can be conveniently rewritten as

which is independent of the choice of boundary value of \(Y_N\). Let us finally note that the connected cumulant functions can be computed as

where (1.6), (1.7) and (3.6) imply

and the sum extends over the transitive permutations of \(\{1,\dots ,\ell \}\), i.e. cycles of length \(\ell \) in \({\mathfrak {S}}_\ell \).

3.1 Case \(\ell =1\)

In this case, it follows from (3.7) that

In the following we shall use the notation

for the jump of a function f across I, namely \(f_\pm (x)=\lim _{\epsilon \rightarrow 0_+}f(x\pm \mathrm {i}\epsilon )\).

The following lemma is well known, see, e.g. [16], and it is proven here for the reader’s convenience.

Lemma 3.1

We have

Proof

It follows from the jump condition (3.3) for \(Y_N\) that

Therefore we compute

The last term vanishes and so, by the cyclic property of the trace, we have

which is equivalent to relation (3.9). \(\square \)

From this lemma and (3.5) we get

where \(\Gamma \) is a smooth contour enclosing I, oriented counterclockwise (namely, the interval I is always to the left of \(\Gamma \)), and leaving z outside (namely z is to the right of \(\Gamma \)) (see Footnote 3). Using the Cauchy residue theorem we have

The first residue vanishes due to (3.4), while the second one is readily computed to give

3.2 Case \(\ell =2\)

In this case, using (3.7),

Introduce, as in the statement of Theorem 1.5,

It is an analytic function of \(z\in {\mathbb {C}}\setminus I\), satisfying

as it follows from (3.3). Furthermore \(\Delta R(x)\) is nilpotent:

so that the right hand side of the expression

is regular on the diagonal. Therefore we have, from (3.8),

where \(\Gamma \) is an anticlockwise contour encircling I and we assume that both \(z_1,z_2\) are outside the interior of \(\Gamma \). Therefore the inner integral can be computed by Cauchy residue theorem

The residue at infinity vanishes, as from (3.4) we see that

and the one at \(z_1\) is readily computed as

Therefore

with the same contour \(\Gamma \). Again by Cauchy residue theorem (both \(z_1,z_2\) are outside \(\Gamma \))

The residue at infinity vanishes again because of (3.11) and the remaining ones are computed as follows

where in the last step we have used \(\mathrm {tr}\,R^2(z)=1\) and its derivative \(\mathrm {tr}\,\left( R(z)R'(z)\right) =0\), as it follows directly from the definition (3.10) of R(z). The theorem is proved also for \(\ell =2\).

Remark 3.2

The function \(\left[ \mathrm {tr}\,(R(z_1)R(z_2))-1\right] /(z_1-z_2)^2\) is regular at \(z_1=z_2\), as \({\mathscr {C}}_2^{\mathsf{c}}(z_1,z_2)\) is. To verify this concretely, it suffices to note that \(\mathrm {tr}\,R^2(z)=1\) from the definition (3.10) of R(z), which implies that the numerator \(\left[ \mathrm {tr}\,(R(z_1)R(z_2))-1\right] \) vanishes at second order at \(z_1=z_2\).
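The second-order vanishing can be observed on a toy example. The sketch below assumes that R(z) has the rank-one form \(R(z)=Y(z)\,\mathrm {diag}(1,0)\,Y(z)^{-1}\), consistent with the properties \(\mathrm {tr}\,R^2=1\) and rank one used in this section, and takes an arbitrary polynomial matrix Y(z) in place of \(Y_N(z)\); it is an illustration of the algebraic mechanism only, not of the orthogonal-polynomial matrix itself.

```python
from fractions import Fraction

def Y(z):
    """Arbitrary invertible 2x2 matrix function standing in for Y_N(z)."""
    return [[1 + z, z ** 2], [z, 1 + z ** 3]]

def R(z):
    """R = Y diag(1, 0) Y^{-1}, written out entrywise."""
    (a, b), (c, d) = Y(z)
    det = a * d - b * c
    return [[a * d / det, -a * b / det], [c * d / det, -c * b / det]]

def trace_product(z1, z2):
    """tr(R(z1) R(z2))."""
    R1, R2 = R(z1), R(z2)
    return sum(R1[i][j] * R2[j][i] for i in range(2) for j in range(2))

z = Fraction(2)
Rz = R(z)
print(Rz[0][0] + Rz[1][1])   # trace of R
print(trace_product(z, z))   # trace of R^2
for h in (Fraction(1, 10), Fraction(1, 100), Fraction(1, 1000)):
    # the ratio stays bounded as h -> 0, i.e. the numerator is O(h^2)
    print(float((trace_product(z, z + h) - 1) / h ** 2))
```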

3.3 Case \(\ell \ge 3\)

Using (3.7) we write

so that from (3.8) we obtain

where, as before, \(\Gamma \) is a contour enclosing I counterclockwise and leaving \(z_1,\dots ,z_\ell \) outside (namely I is to the left of \(\Gamma \) and \(z_1,\dots ,z_\ell \) are to the right of \(\Gamma \)).

Lemma 3.3

For all \(\ell \ge 3\) the function

is holomorphic for \((z_1,\dots ,z_\ell )\in ({\mathbb {C}}\setminus I)^\ell \), in particular it is regular on the diagonals \(z_a=z_b\) for all \(a\not =b\). Moreover, \(S(z_1,\dots ,z_\ell )={\mathcal {O}}(1/z_j)\) as \(z_j\rightarrow \infty \), for any \(j=1,\dots ,\ell \).

Proof

For the first statement, the denominators in S vanish at \(z_{a}=z_b\) only for \(\ell \)-cycles of the form \((i_1,\dots ,i_{\ell -2},a,b)\) and \((i_1,\dots ,i_{\ell -2},b,a)\); these terms have simple poles at \(z_a=z_b\) of the form

and

so that the polar parts at \(z_a=z_b\) cancel each other in the summation. The second statement follows directly from (3.11). \(\square \)
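The cancellation of polar parts can be observed numerically in the smallest case \(\ell =3\). The sketch below assumes that S is a sum over the two 3-cycles of \(\mathrm {tr}\,(R(z_{i_1})R(z_{i_2})R(z_{i_3}))\) divided by the cyclic product of differences, which is what the description of the denominators in the proof suggests; the overall normalization, the toy matrix `psi` and the names `term` and `S3` are our assumptions, not taken from the paper. Each summand blows up like \(1/(z_1-z_2)\), while the sum stays bounded as \(z_2\rightarrow z_1\).

```python
def psi(z):
    # Hypothetical stand-in for Psi_N(z): a polynomial 2x2 matrix with determinant 1.
    return ((1, z), (z * z, 1 + z ** 3))


def psi_inv(z):
    # Inverse of psi(z); the adjugate suffices because det psi(z) = 1.
    return ((1 + z ** 3, -z), (-z * z, 1))


def matmul(a, b):
    return tuple(
        tuple(sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2))
        for i in range(2)
    )


def trace(m):
    return m[0][0] + m[1][1]


E11 = ((1, 0), (0, 0))


def R(z):
    return matmul(matmul(psi(z), E11), psi_inv(z))


def term(za, zb, zc):
    # Contribution of the cycle (a, b, c): trace over the cyclic product of differences.
    prod = matmul(matmul(R(za), R(zb)), R(zc))
    return trace(prod) / ((za - zb) * (zb - zc) * (zc - za))


def S3(z1, z2, z3):
    # Sum over the two 3-cycles (1,2,3) and (1,3,2); normalization is an assumption.
    return term(z1, z2, z3) + term(z1, z3, z2)


z1, z3 = 0.3, 0.5 + 0.5j
single_term = term(z1, z1 + 1e-4, z3)  # one cycle alone: large near the diagonal
s_far = S3(z1, z1 + 1e-3, z3)          # the full sum at separation 1e-3
s_near = S3(z1, z1 + 1e-4, z3)         # the full sum at separation 1e-4
```

The regularity only uses the cyclic property of the trace, so the same behaviour is expected for any matrix-valued function in place of `R`.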

Using this lemma we can complete our computation,

because \(S(x_1,\dots ,x_\ell )\frac{\mathrm dx_1}{(z_1-x_1)}\) has no residue at infinity. Thus

because \(S(z_1,x_2,\dots ,x_\ell )\frac{\mathrm dx_2}{(z_2-x_2)}\) has no residue at infinity (and also it has no residue at \(z_1\) because S is regular along diagonals). Then

Iterating this argument we arrive at

which proves the theorem also in the case \(\ell \ge 3\).
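The mechanism used at each step of this iteration, namely that a function analytic off I and of order \({\mathcal {O}}(1/x)\) at infinity contributes only the residue at \(x=z\), can be illustrated with a one-variable example. In the sketch below, the function `f` (the Cauchy-type transform of the uniform density on \(I=[0,1]\)), the circular contour and all names are our illustrative choices, not objects from the paper. The code checks that \(\frac{1}{2\pi \mathrm {i}}\oint _\Gamma f(x)\frac{\mathrm dx}{z-x}=f(z)\) when z lies outside \(\Gamma \).

```python
import cmath
import math


def f(x):
    # Integral of dt / (x - t) over [0, 1]: analytic off [0, 1] and O(1/x) at infinity.
    return cmath.log(x / (x - 1))


def contour_average(z, center=0.5, radius=1.0, n=400):
    # Trapezoidal rule for (1 / (2 pi i)) * integral of f(x) / (z - x) dx
    # over the counterclockwise circle |x - center| = radius.
    total = 0
    for k in range(n):
        w = radius * cmath.exp(2j * math.pi * k / n)
        x = center + w
        # dx = i * w * dtheta, so the factor i cancels against 1 / (2 pi i).
        total += f(x) / (z - x) * w
    return total / n


z = 3.0  # a point outside the contour, which encloses [0, 1]
value = contour_average(z)
```

The trapezoidal rule is used because it converges geometrically for periodic analytic integrands, so a few hundred nodes are ample for this check.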

Remark 3.4

We note here that since R(z) is a rank one matrix, the formulae of Theorem 1.5 for \(\mathscr {C}^{\mathsf{c}}_\ell \), \(\ell \ge 2\), can be expressed in terms of the scalar quantities

as

4 JUE correlators and Wilson Polynomials

In this section we prove Corollary 1.6. This is done by expanding the general formulæ of Theorem 1.5 as \(z_i\rightarrow 0,\infty \). To this end we consider the monic orthogonal polynomials for the Jacobi measure, which are the classical (monic) Jacobi polynomials

satisfying the orthogonality property

4.1 Expansion of the matrix R

This paragraph is devoted to the proof of the following proposition.

Proposition 4.1

We have the Taylor expansion at \(z=\infty \)

where T is the constant matrix (1.11) and \(R^{[\infty ]}(z)\) is the matrix-valued power series in \(z^{-1}\) in (1.12). We have the Poincaré asymptotic expansion at \(z=0\) uniformly within the sector \(0<\arg z<2\pi \)

where T is the constant matrix (1.11) and \(R^{[0]}(z)\) is the matrix-valued (formal) power series in z in (1.12).

Looking back at the definition (3.10) for the matrix R(z),

we notice that it is sufficient to compute the expansions of the product of the Jacobi polynomials with their Cauchy transforms at the prescribed points. To this end, recall the explicit formula (4.1) for the monic Jacobi orthogonal polynomials, which can be rewritten as the Rodrigues’ formula

The Cauchy transforms \(\widehat{P}_\ell ^{\mathsf{J}}(z)\) defined in (3.1) can be expanded as stated below.

Lemma 4.2

The following relations hold true:

where the first relation is a genuine Taylor expansion at \(z=\infty \), valid for all \(|z| > 1\), whilst the second one is a Poincaré asymptotic expansion at \(z=0\) uniform in the sector \(0<\arg z<2\pi \).

Proof

We start with the expansion (4.4) at \(z=\infty \), which is computed as follows:

In (i) we have expanded the geometric series and exchanged sum and integral by Fubini's theorem, in (ii) we use that \(P^{\mathsf{J}}_\ell (z)\) is orthogonal to \(z^j\) for \(j<\ell \), in (iii) we use the Rodrigues formula (4.3), in (iv) we integrate by parts, in (v) we compute the derivative, and finally in (vi) we use the Euler beta integral. The computation at \(z=0\) is completely analogous, with the only difference that in (i) it is not legitimate to exchange sum and integral, so this step holds only in the sense of a Poincaré asymptotic series. \(\square \)
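Steps (ii) and (vi) can be checked in exact arithmetic for integer parameters. The following sketch is an illustration only: the values of `ALPHA` and `BETA`, the Gram-Schmidt construction and the function names are our choices, and the closed form in `norm_formula` is the standard expression for the norms of monic Jacobi polynomials on (0, 1), which we assume agrees with the norming constants \(h_\ell ^{\mathsf{J}}\) of (4.2). The moments of the weight \(x^\alpha (1-x)^\beta \) are Euler beta integrals, and the moments of \(P_\ell ^{\mathsf{J}}\) against \(x^j\) vanish for \(j<\ell \), so that the expansion of its Cauchy transform at infinity starts at order \(z^{-\ell -1}\).

```python
from fractions import Fraction
from math import factorial

ALPHA, BETA = 2, 1  # illustrative non-negative integer parameters


def moment(j):
    # Euler beta integral: int_0^1 x^(ALPHA + j) (1 - x)^BETA dx.
    return Fraction(
        factorial(ALPHA + j) * factorial(BETA),
        factorial(ALPHA + BETA + j + 1),
    )


def inner(p, q):
    # Inner product of two polynomials given as coefficient lists (lowest degree first).
    return sum(a * b * moment(i + k) for i, a in enumerate(p) for k, b in enumerate(q))


def monic_jacobi(max_degree):
    # Gram-Schmidt on 1, x, x^2, ... yields the monic orthogonal polynomials.
    polys = []
    for n in range(max_degree + 1):
        p = [Fraction(0)] * n + [Fraction(1)]
        for q in polys:
            c = inner(p, q) / inner(q, q)
            for i, coeff in enumerate(q):
                p[i] -= c * coeff
        polys.append(p)
    return polys


def norm_formula(n):
    # Standard closed form for the squared norm of the degree-n monic polynomial.
    s = ALPHA + BETA
    return Fraction(
        factorial(n) * factorial(n + ALPHA) * factorial(n + BETA) * factorial(n + s),
        factorial(2 * n + s) * factorial(2 * n + s + 1),
    )


polys = monic_jacobi(3)
# Moments of P_3 against 1, x, x^2, x^3: three zeros followed by the norm.
leading_moments = [inner(polys[3], [0] * j + [1]) for j in range(4)]
```

Working with `Fraction` avoids any rounding, so the orthogonality relations hold exactly rather than up to a tolerance.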

The next step is to compute the expansions of the products of the Jacobi polynomials and their Cauchy transforms. To this end it is convenient to study the properties of R(z) in more detail.

Proposition 4.3

The matrix \(\Psi _N(z):=Y_N(z)z^{\alpha \sigma _3/2}(1-z)^{\beta \sigma _3/2}\) satisfies the following linear differential equation

and the matrix R(z) satisfies the following Lax differential equation,

Here the matrix U(z) is explicitly given as

with

Proof

From the definition (3.10) we obtain \(R(z)=\Psi _N(z)\begin{pmatrix}1 &{} 0 \\ 0 &{} 0 \end{pmatrix}\Psi _N^{-1}(z)\); therefore the Lax equation (4.7) follows from (4.6). The latter is a classical property of Jacobi orthogonal polynomials [44]. \(\square \)
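The passage from the linear equation to the Lax equation is a general fact: if \(\Psi '=U\Psi \) and \(R=\Psi E_{1,1}\Psi ^{-1}\), then \(R'=[U,R]\). The sketch below checks this numerically on a toy model; `psi` is a hypothetical polynomial matrix with unit determinant and not the actual \(\Psi _N\), the form \(\Psi '=U\Psi \) of (4.6) is presumed from the surrounding text, and all names are ours. Since the toy matrix has constant determinant, the resulting U is traceless, mirroring \(\mathrm {tr}\,U(z)=0\).

```python
def psi(z):
    # Hypothetical stand-in for Psi_N(z): a polynomial 2x2 matrix with determinant 1.
    return ((1, z), (z * z, 1 + z ** 3))


def dpsi(z):
    # Entrywise derivative of psi(z).
    return ((0, 1), (2 * z, 3 * z * z))


def psi_inv(z):
    # Inverse of psi(z); the adjugate suffices because det psi(z) = 1.
    return ((1 + z ** 3, -z), (-z * z, 1))


def matmul(a, b):
    return tuple(
        tuple(sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2))
        for i in range(2)
    )


def trace(m):
    return m[0][0] + m[1][1]


E11 = ((1, 0), (0, 0))


def R(z):
    return matmul(matmul(psi(z), E11), psi_inv(z))


def U(z):
    # Coefficient matrix of the linear system Psi' = U Psi.
    return matmul(dpsi(z), psi_inv(z))


z, h = 0.3 + 0.2j, 1e-4
R_plus, R_minus = R(z + h), R(z - h)
UR, RU = matmul(U(z), R(z)), matmul(R(z), U(z))
# Largest entrywise gap between the finite-difference R' and the commutator [U, R].
lax_defect = max(
    abs((R_plus[i][j] - R_minus[i][j]) / (2 * h) - (UR[i][j] - RU[i][j]))
    for i in range(2)
    for j in range(2)
)
trace_U = trace(U(z))
```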

Proving Proposition 4.1 is equivalent to proving that \(\widetilde{R}(z)\sim R^{[p]}(z)\) for \(p=\infty ,0\) where

It follows from the previous proposition that \(\widetilde{R}(z)\) satisfies

where \(\widetilde{U}(z)=TU(z)T^{-1}=\widetilde{U}_0/z+\widetilde{U}_1/(1-z)\), with

Introduce the matrices

and write

where we used that \(\mathrm {tr}\,R(z)= 1, \mathrm {tr}\,U(z)=0\). For the sake of brevity we omit the dependence on z in the \(\mathfrak {sl}_2\) components. The Lax equation (4.10) yields the coupled first-order linear ODEs

which are equivalent to three decoupled third-order linear ODEs, one for \(\partial _zr_3\)

and for \(r_\pm \)

The following ansatz is quite natural in view of our previous work [31] about the Laguerre Unitary Ensemble (see also [21] for the Gaussian Unitary Ensemble); namely we write the expansions of the entries of R(z) at \(z=\infty \) as

for some coefficients \(A_\ell (N)=A_\ell (N,\alpha ,\beta )\) and \(B_\ell (N)=B_\ell (N,\alpha ,\beta )\). By substitution in (4.11) and (4.12) we see that the ansatz is consistent with them; in particular we get the following three term recurrence relations for \(A_\ell (N),B_\ell (N)\):

for \(\ell \ge 1\), together with the initial conditions

The initial conditions are obtained from (4.1) and (4.4). It can be checked that the recurrence relations for the coefficients of \(r_+(z)\) are actually those of \(r_-(z)\), modulo a shift in N, as claimed in (4.13).

The three term recurrence relations (4.14) and (4.15) can be solved in terms of Wilson polynomials (1.19).

Proposition 4.4

The coefficients \(A_\ell (N,\alpha ,\beta )\) and \(B_\ell (N,\alpha ,\beta )\) can be expressed in terms of Wilson Polynomials, defined in (1.19), as

This is equivalent to the hypergeometric representation

Proof

The identification with the Wilson polynomials is obtained by comparing the recurrence relations (4.14) and (4.15) with the difference equation for this family of orthogonal polynomials, which reads

where \(w(k)=W_n(k^2;a,b,c,d)\) and

The hypergeometric representation of \(A_\ell ,B_\ell \) then directly follows from that of the Wilson polynomials in (1.19). \(\square \)
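The difference equation invoked in this proof can be tested numerically from the hypergeometric series. The sketch below assumes the standard normalization of the Askey scheme, \(W_n(k^2;a,b,c,d)=(a+b)_n(a+c)_n(a+d)_n\,{}_4F_3\), together with the standard form of the second-order difference equation in the variable k with shifts \(k\mapsto k\pm \mathrm {i}\); whether this coincides exactly with the conventions of (1.19) is an assumption on our part, and the parameter values and function names are illustrative.

```python
from math import factorial


def pochhammer(x, n):
    # Rising factorial (x)_n = x (x + 1) ... (x + n - 1).
    result = 1
    for j in range(n):
        result *= x + j
    return result


def wilson(n, k, a, b, c, d):
    # W_n(k^2; a, b, c, d) via the terminating 4F3 series at argument 1.
    total = 0
    for j in range(n + 1):
        num = (
            pochhammer(-n, j)
            * pochhammer(n + a + b + c + d - 1, j)
            * pochhammer(a + 1j * k, j)
            * pochhammer(a - 1j * k, j)
        )
        den = (
            pochhammer(a + b, j)
            * pochhammer(a + c, j)
            * pochhammer(a + d, j)
            * factorial(j)
        )
        total += num / den
    return pochhammer(a + b, n) * pochhammer(a + c, n) * pochhammer(a + d, n) * total


def difference_equation_sides(n, k, a, b, c, d):
    # Both sides of n (n + a + b + c + d - 1) w(k) = B w(k + i) - (B + D) w(k) + D w(k - i).
    B = (a - 1j * k) * (b - 1j * k) * (c - 1j * k) * (d - 1j * k) / (2j * k * (2j * k - 1))
    D = (a + 1j * k) * (b + 1j * k) * (c + 1j * k) * (d + 1j * k) / (2j * k * (2j * k + 1))
    w = lambda x: wilson(n, x, a, b, c, d)
    lhs = n * (n + a + b + c + d - 1) * w(k)
    rhs = B * w(k + 1j) - (B + D) * w(k) + D * w(k - 1j)
    return lhs, rhs


params = (0.5, 0.7, 1.1, 1.3)  # illustrative values of a, b, c, d
sides = [difference_equation_sides(n, 0.4, *params) for n in range(4)]
```

For n = 1 the check reduces to the elementary identity \((a+b)(a+c)(a+d)-a^2(a+b+c+d)=abc+abd+acd+bcd\), which gives an independent confirmation of the coefficients B and D used here.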

The above proposition, together with the expansions (4.13), yields the first part of Proposition 4.1. The asymptotics of R(z) at \(z=0\) are obtained in a similar way. More precisely, we claim that the expansion at \(z=0\) of the entries of \(\widetilde{R}(z)\) reads as

This can be proven by checking that plugging the formulæ (4.16) in the equations (4.11), (4.12), one obtains the same recurrence relations (4.14) and (4.15). The associated initial conditions can again be computed from (4.1) and (4.5). This concludes the proof of Proposition 4.1.\(\square \)

4.2 Proof of Corollary 1.6

4.2.1 Case \(\ell =1\)

From Theorem 1.5 we write the formula for \(\mathscr {C}_1(z)\) by using the differential equation (4.6) as

where we denote \(E_{1,1}=\begin{pmatrix} 1 &{} 0 \\ 0 &{} 0 \end{pmatrix}\); in the first step we used that \(\mathrm {tr}\,(Y^{-1}_N(z)Y_N'(z))=\mathrm {tr}\,U(z)=0\) and in the second one we used the definition of \(R(z)=Y_N(z)E_{1,1}Y_N(z)^{-1}\), the cyclic property of the trace and the equation

which follows from (4.6).

Lemma 4.5

We have

Proof

We compute

The last term vanishes due to the Lax equation (4.7), because \(\mathrm {tr}\,(U(z)[U(z),R(z)])=0\) by the cyclic property of the trace. Then we use the identity

which can be checked directly from (4.8). The proof is complete. \(\square \)

By this lemma and (4.17) we obtain

where we use that \(\mathrm {tr}\,R(z)=1\) to compute \(\mathrm {tr}\,(R(z)\sigma _3)=2R_{1,1}(z)-1\), and we denote \(R_{1,1}\) the (1, 1)-entry of R. Integrating this identity implies that for any \(p\in {\mathbb {C}}\setminus [0,1]\) we have

Letting \(p\rightarrow 0\) in (4.18) we have \(p(1-p){\mathscr {C}}_1(p)\rightarrow 0\) and so

Expanding this identity at \(z=0\) we get at the left hand side

and using Proposition 4.1 (note that \((TR(z)T^{-1})_{1,1}=R_{1,1}(z)\) because T is diagonal) the formula for \({\mathscr {F}}_{1,0}(z)\) is proved.

Letting instead \(p\rightarrow \infty \) we have \(p(1-p){\mathscr {C}}_1(p)\sim (1-p)N-\left\langle \mathrm {tr}\,X\right\rangle +{\mathcal {O}}(1/p)\) and therefore from (4.18) we have (noting that \(R_{1,1}(w)=1+{\mathcal {O}}(w^{-2})\) so the integral is well defined)

We can compute

by expanding the general formula \(\mathscr {C}_1(z)=\mathrm {tr}\,\left( Y_N^{-1}(z)Y_N'(z)\sigma _3/2\right) \) at \(z=\infty \), using (4.1) and the first few terms in (4.4). We finally obtain

Expanding this identity at \(z=\infty \) we get at the left hand side

and using Proposition 4.1 (again note that \((TR(z)T^{-1})_{1,1}=R_{1,1}(z)\) because T is diagonal) the formula for \({\mathscr {F}}_{1,\infty }(z)\) is also proved.

4.2.2 Case \(\ell \ge 2\)

In this case we note that

where \(\widetilde{R}(z)=TR(z)T^{-1}\) as in (4.9). We now expand both sides of this identity at \(z=0,\infty \). The expansion of the right hand side follows from Proposition 4.1 which asserts that \(\widetilde{R}(z)\sim R^{[0]}(z),R^{[\infty ]}(z)\) as \(z\rightarrow 0,\infty \), respectively. For the left hand side instead, at \(z\rightarrow 0\) we have

while at \(z\rightarrow \infty \) we have

where in the last identity we use that terms with \(k_i=0\) for some i do not contribute to the sum; indeed the connected correlator \(\left\langle \mathrm {tr}\,X^{k_1}\cdots \mathrm {tr}\,X^{k_\ell }\right\rangle ^{\mathsf{c}}\) vanishes whenever \(k_i=0\) for some i. The proof is complete.\(\square \)

Change history

27 July 2023

A Correction to this paper has been published: https://doi.org/10.1007/s11005-023-01707-6

Notes

For convenience we adopt a normalization which differs from the one common in the literature by a transformation \(t_k\mapsto t_k/k\).

A formal power series in infinitely many variables \(u_1,u_2,\cdots \) can be rigorously treated by introducing the grading \(\deg u_k=k\) and working in the completion of the algebra of polynomials in \(u_1,u_2,\dots \), filtered by degree.

When \(I={\mathbb {R}}\), we take a disconnected contour \(\Gamma =-({\mathbb {R}}+\mathrm {i}\epsilon )\cup ({\mathbb {R}}-\mathrm {i}\epsilon )\) with \(0< \epsilon < \mathrm {Im}\,z\).

References

Adler, M., van Moerbeke, P.: Matrix integrals, Toda symmetries, Virasoro constraints and orthogonal polynomials. Duke Math. J. 80(3), 863–911 (1995)

Adler, M., van Moerbeke, P.: Integrals over classical groups, random permutations, Toda and Toeplitz lattices. Comm. Pure Appl. Math. 54(2), 153–205 (2001)

Alexandrov, A., Chapuy, G., Eynard, B., Harnad, J.: Weighted Hurwitz numbers and topological recursion. Comm. Math. Phys. 375(1), 237–305 (2020)

Alexandrov, A., Lewanski, D., Shadrin, S.: Ramifications of Hurwitz theory, KP integrability and quantum curves. J. High Energ. Phys. 2016, 124 (2016)

Bergère, M., Borot, G., Eynard, B.: Rational differential systems, loop equations and application to the q-th reduction of KP. Ann. Henri Poincaré 16(12), 2713–2782 (2015)

Bergère, M., Eynard, B.: Determinantal formulas and loop equations. arXiv:0901.3273

Bertola, M., Dubrovin, B., Yang, D.: Correlation functions of the KdV hierarchy and applications to intersection numbers over \(\overline{{\cal{M}}}_{g, n}\). Phys. D 327, 30–57 (2016)

Bertola, M., Dubrovin, B., Yang, D.: Simple Lie algebras and topological ODEs. Int. Math. Res. Not. IMRN 5, 1368–1410 (2018)

Bertola, M., Eynard, B., Harnad, J.: Semiclassical orthogonal polynomials, matrix models and isomonodromic tau functions. Comm. Math. Phys. 263(2), 401–437 (2006)

Bertola, M., Harnad, J., Runov, B.: Generating weighted Hurwitz numbers. J. Math. Phys. 61(1), 013506 (2020)

Bertola, M., Cafasso, M.: The Kontsevich matrix integral: convergence to the Painlevé hierarchy and Stokes’ phenomenon. Comm. Math. Phys. 352(2), 585–619 (2017)

Bertola, M., Ruzza, G.: The Kontsevich-Penner matrix integral, isomonodromic tau functions and open intersection numbers. Ann. Henri Poincaré 20(2), 393–443 (2019)

Bertola, M., Ruzza, G.: Brezin-Gross-Witten tau function and isomonodromic deformations. Commun. Number Theory Phys. 13(4), 827–883 (2019)

Bessis, D., Itzykson, C., Zuber, J.B.: Quantum field theory techniques in graphical enumeration. Adv. in Appl. Math. 1(2), 109–157 (1980)

Chekhov, L., Eynard, B.: Hermitian matrix model free energy: Feynman graph technique for all genera. J. High Energy Phys. 3(014), 18 (2006)

Claeys, T., Grava, T., McLaughlin, K.D.T.-R.: Asymptotics for the partition function in two-cut random matrix models. Comm. Math. Phys. 339(2), 513–587 (2015)

Cunden, F.D., Dahlqvist, A., O’Connell, N.: Integer moments of complex Wishart matrices and Hurwitz numbers. To appear in Ann. Inst. Henri Poincaré D. https://doi.org/10.4171/AIHPD/103

Cunden, F.D., Mezzadri, F., O’Connell, N., Simm, N.: Moments of random matrices and hypergeometric orthogonal polynomials. Comm. Math. Phys. 369(3), 1091–1145 (2019)

Deift, P.: Orthogonal polynomials and random matrices: a Riemann-Hilbert approach. Courant Lecture Notes in Mathematics, vol. 3. New York University, Courant Institute of Mathematical Sciences, New York; American Mathematical Society, Providence (1999)

Dubrovin, B., Liu, S.-Q., Yang, D., Zhang, Y.: Hodge-GUE correspondence and the discrete KdV equation. Comm. Math. Phys. 379(2), 461–490 (2020)

Dubrovin, B., Yang, D.: Generating series for GUE correlators. Lett. Math. Phys. 107(11), 1971–2012 (2017)

Dubrovin, B., Yang, D.: On cubic Hodge integrals and random matrices. Commun. Number Theory Phys. 11(2), 311–336 (2017)

Dubrovin, B., Yang, D., Zagier, D.: Gromov-Witten invariants of the Riemann sphere. Pure Appl. Math. Q. 16(1), 153–190 (2020)

Ekedahl, T., Lando, S., Shapiro, M., Vainshtein, A.: Hurwitz numbers and intersections on moduli spaces of curves. Invent. Math. 146(2), 297–327 (2001)

Ercolani, N.M., McLaughlin, K.D.T.-R.: Asymptotics of the partition function for random matrices via Riemann-Hilbert techniques and applications to graphical enumeration. Int. Math. Res. Not. 14, 755–820 (2003)

Ercolani, N.M., McLaughlin, K.D.T.-R., Pierce, U.V.: Random matrices, graphical enumeration and the continuum limit of Toda lattices. Comm. Math. Phys. 278(1), 31–81 (2008)

Eynard, B., Kimura, T., Ribault, S.: Random matrices. arXiv:1510.04430

Eynard, B., Orantin, N.: Invariants of algebraic curves and topological expansion. Commun. Number Theory Phys. 1(2), 347–452 (2007)

Fokas, A.S., Its, A.R., Kitaev, A.V.: The isomonodromy approach to matrix models in 2D quantum gravity. Comm. Math. Phys. 147(2), 395–430 (1992)

Forrester, P.J.: Log-gases and random matrices. London Mathematical Society Monographs Series. Princeton University Press, Princeton (2010)

Gisonni, M., Grava, T., Ruzza, G.: Laguerre Ensemble: Correlators, Hurwitz Numbers and Hodge Integrals. Ann. Henri Poincaré 21(10), 3285–3339 (2020)

Goulden, I.P., Guay-Paquet, M., Novak, J.: Monotone Hurwitz numbers and the HCIZ integral. Ann. Math. Blaise Pascal 21(1), 71–89 (2014)

Goulden, I.P., Guay-Paquet, M., Novak, J.: Toda equations and piecewise polynomiality for mixed double Hurwitz numbers. SIGMA Symmetry Integrability Geom. Methods Appl. 12 (2016), Paper No. 040, 10 pp

Goulden, I.P., Guay-Paquet, M., Novak, J.: On the convergence of monotone Hurwitz generating functions. Ann. Comb. 21(1), 73–81 (2017)

Guay-Paquet, M., Harnad, J.: 2D Toda \(\tau \)-functions as combinatorial generating functions. Lett. Math. Phys. 105(6), 827–852 (2015)

Harnad, J., Orlov, A.Y.: Hypergeometric \(\tau \)-functions, Hurwitz numbers and enumeration of paths. Comm. Math. Phys. 338(1), 267–284 (2015)

Hurwitz, A.: Ueber die Anzahl der Riemann’schen Flächen mit gegebenen Verzweigungspunkten. Math. Ann. 55(1), 53–66 (1901)

Jonnadula, B., Keating, J.P., Mezzadri, F.: Symmetric Function Theory and Unitary Invariant Ensembles. arXiv:2003.02620

Jucys, A.-A.A.: Symmetric polynomials and the center of the symmetric group ring. Rep. Math. Phys. 5(1), 107–112 (1974)

Koekoek, R., Swarttouw, R.F.: The Askey-scheme of hypergeometric orthogonal polynomials and its \(q\)-analogue. Delft University of Technology, Faculty of Technical Mathematics and Informatics, Report no. 94-05 (1994)

Kontsevich, M.: Intersection theory on the moduli space of curves and the matrix Airy function. Comm. Math. Phys. 147(1), 1–23 (1992)

Mezzadri, F., Simm, N.J.: Tau-function theory of chaotic quantum transport with \(\beta = 1,2,4\). Comm. Math. Phys. 324(2), 465–513 (2013)

Mezzadri, F., Simm, N.J.: Moments of the transmission eigenvalues, proper delay times and random matrix theory II. J. Math. Phys. 53(5), 053504 (2012)

Ismail, M.E.H.: Classical and quantum orthogonal polynomials in one variable. Cambridge University Press, Cambridge (2005)

Macdonald, I.G.: Symmetric functions and Hall polynomials, 2nd edn. The Clarendon Press, Oxford University Press, New York (2015)

Murphy, G.E.: A new construction of Young’s seminormal representation of the symmetric group. J. Algebra 69(2), 287–297 (1981)

Okounkov, A.: Toda equations for Hurwitz numbers. Math. Res. Lett. 7(4), 447–453 (2000)

Serre, J.-P.: Linear representations of finite groups. Graduate Texts in Mathematics, vol. 42. Springer, New York (1977)

Stanley, R.P.: Enumerative combinatorics, 1st edn. Cambridge University Press, Cambridge (2001)

Wilson, J.: Some hypergeometric orthogonal polynomials. SIAM J. Math. Anal. 11(4), 690–701 (1980)

Yang, D.: On tau-functions for the Toda lattice hierarchy. Lett. Math. Phys. 110(3), 555–583 (2020)

Acknowledgements

We thank M. Bertola and D. Yang for valuable conversations. This project has received funding from the European Union’s H2020 research and innovation programme under the Marie Skłodowska–Curie grant No. 778010 IPaDEGAN. The research of G.R. is supported by the Fonds de la Recherche Scientifique-FNRS under EOS project O013018F.

Funding

Open access funding provided by Scuola Internazionale Superiore di Studi Avanzati - SISSA within the CRUI-CARE Agreement.

Additional information

To the memory of Boris Dubrovin, our mentor, teacher, and a source of ever-lasting inspiration.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gisonni, M., Grava, T. & Ruzza, G. Jacobi Ensemble, Hurwitz Numbers and Wilson Polynomials. Lett Math Phys 111, 67 (2021). https://doi.org/10.1007/s11005-021-01396-z
