Abstract

In this study, a unique method for modelling the thermal conductivity of nanofluids is proposed using a "model of models" approach. Three distinct data streams are utilised to achieve this. The first stream uses experimental data to predict thermal conductivity, and this prediction is an input for the primary machine learning model. The second stream models correlations from previous studies and integrates them as an additional input. Lastly, theoretical data are modelled and included as the final stream. By training a model on these combined data streams, the study aims to overcome various challenges in modelling nanofluids' thermophysical properties. The research is significant because it can potentially reconcile and explain the errors that come with various modelling methods, which could result in model performance that more closely resembles experimental data. The model presented in the model of models approach achieves a coefficient of determination (R-squared) of 0.999 on the test data set, showing its accuracy and effectiveness in handling complex data, particularly data on the thermophysical properties of nanofluids. Furthermore, this implicit general model comprises data models incorporating material properties and physical phenomena, offering broad applicability. It is recommended that this approach be extended to viscosity, enhancing the understanding and prediction of nanofluid properties.


Introduction

This research utilises modelling techniques to estimate the thermal conductivity of nanofluids. It involves a unique approach where three types of data streams are employed.

The first stream is experimental data, used to predict thermal conductivity and fed as input to the main machine learning model. The second stream involves the theoretical model added as input to the main model. The third stream comprises correlation data as an added input stream to the main model.

A model is created and trained based on these three input streams. This particular modelling framework is anticipated to address numerous challenges encountered when modelling the thermophysical properties of nanofluids. The significance of this research lies in its ability to unify and comprehend the discrepancies present in the various modelling techniques, ultimately enhancing our model's performance and bringing us closer to replicating experimental data.

There have been many advances in numerical modelling and simulation [1,2,3,4,5,6,7,8,9,10,11]. Vajjha et al. [12] developed new correlations for the Nusselt number and the friction factor under turbulent flow of nanofluids in flat tubes. Correlations for density, viscosity, specific heat and thermal conductivity have also been developed from experimental data.

Nanofluids find application across thermal energy systems due to their tailored attributes and heat transfer enhancement capabilities. Key properties, such as thermal conductivity and dynamic viscosity, play pivotal roles in heat transfer behaviour and fluid flow. Support Vector Machines (SVMs) are among the advanced techniques favoured for their precision in prediction and modelling [13]. Alfaleh et al. [13] reviewed the use of SVMs to predict and model these nanofluid properties. They observed that SVM-based approaches display considerable proficiency in accurately modelling thermal conductivity and dynamic viscosity, and that the SVM methods performed better than other methods. They stated that the efficacy of these approaches hinges on factors such as the optimisation algorithm [13].

Predicting the thermophysical properties of nanofluids, which are known for their improved heat transfer, is vital because it spares the need for experimental investigations [14]. Sahin et al. [14] constructed two distinct artificial neural networks to forecast the thermal conductivity and zeta potential of Fe3O4/water nanofluid. They experimentally measured the nanofluid's thermal conductivity and zeta potential at three different concentrations. From the acquired experimental data, they introduced a novel mathematical correlation that computes thermal conductivity from temperature and concentration. Notably, the novelty lies in its divergence from conventional concentration-based correlations in existing literature. An extensive performance analysis involving different metrics was carried out. The neural network models yielded R-values above 0.99 and mean squared error values of 1.47E-05 and 1.58E-06 for thermal conductivity and zeta potential, respectively. Furthermore, they observed a mean deviation of 0.03% for the network's thermal conductivity and 0.05% for the new mathematical correlation. They concluded that artificial neural network (ANN) models have a high-precision predictive capacity for the thermal conductivity and zeta potential of Fe3O4/water nanofluid. The novel mathematical correlation was also highly accurate in thermal conductivity estimation, with an error comparable to that of the ANN [14].

Chiniforooshan Esfahani [15] developed an innovative method for predicting the viscosity of nanofluids in 2023. This method utilised a multi-fidelity neural network (MFNN) and was compared to various theoretical models to determine its accuracy. The MFNN combines two streams of predictors and minimises the sum of their errors to achieve high accuracy. The model's effectiveness was tested using MAPE, R2, and MSE. The researchers used a neural network with temperature, particle size, volume fraction, particle density, and fluid viscosity as inputs. They also tested various activation functions and found that the choice of neural network architecture did not impact the performance of the MFNN. The MFNN outperformed conventional ANN models and provided accurate predictions even with limited data. Unlike conventional models, this method can also predict viscosity for entirely new nanofluids. The researchers tested the model on training data and on unseen high-fidelity data. Moving forward, exploring other machine learning algorithms and analysing nanofluids' thermal conductivity would be beneficial.

Bhaumik et al. [16] developed a new model in 2023 for predicting the viscosity of nanofluids more efficiently and accurately. This physically guided deep neural network (PGDNN) model includes physical laws automatically, which the authors present as an advantage over other prediction methods. The authors used the mean–variance estimator method to create point-wise confidence intervals for the testing data, which were normalised. The PGDNN model was tested using 800 sets of data and 8200 additional simulated points, with inputs such as nanoparticle density, particle size, volume fraction, temperature, viscosity of the base fluid, and output from the neural network. The Bayesian optimisation provided by the Keras API was employed to obtain the optimal PGD neural network structure. The study found that this method was efficient, superior, and less costly than other machine learning models, especially where they failed. It is suggested that applying this method to the thermal conductivity of nanofluids could also be effective, and that other machine learning models could be optimised for predicting nanofluid properties.

This study presents a model of models approach to modelling the thermal conductivity of nanofluids. Related ideas have been pursued by some researchers, usually under the name of physics-informed methods. However, this study goes a step further by adding an extra data stream to the theoretical (physical) information and the experimental information, namely correlations, which supply modelling information and extra physical attributes not captured by the other models. It also applies the most suitable machine learning model to each data stream, which is not necessarily a neural network.

Methodology

For this research, the thermal conductivity of nanofluids was modelled using Gaussian process regression, ensemble, and neural network algorithms, which performed best on the different data streams. The best modelling algorithm and features for each data type were selected, and the resulting model was then used as an input to a final machine learning model.

Applied machine learning algorithm

Gaussian process regressor

Gaussian process regression is a nonparametric, Bayesian approach to regression that is widely used in machine learning and statistical modelling. It is based on the concept of Gaussian processes, which are probability distributions over functions [17, 18].

In Gaussian process regression, instead of assuming a specific functional form for the relationship between the input variables and the output variable, the model assumes that the data is generated from a Gaussian process. A Gaussian process is defined by its mean function and covariance function, also known as the kernel function [17, 18].

The key idea behind Gaussian process regression is that any finite subset of the data follows a multivariate Gaussian distribution. This allows predictions to be made and an estimation of uncertainty for new, unseen data points [17, 18].
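To make the idea concrete, the following minimal sketch computes the posterior mean and variance of a zero-mean Gaussian process at a single new point. It is illustrative only: the squared-exponential kernel, the noise level, the toy data, and the function names (`rbf_kernel`, `solve`, `gp_predict`) are assumptions made for this example and are not the configuration used in this study.

```python
import math

def rbf_kernel(a, b, length_scale=1.0, variance=1.0):
    """Squared-exponential covariance between two scalar inputs (assumed kernel)."""
    return variance * math.exp(-0.5 * ((a - b) / length_scale) ** 2)

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x

def gp_predict(x_train, y_train, x_new, noise=1e-6):
    """Posterior mean and variance of a zero-mean GP at x_new."""
    n = len(x_train)
    # Covariance of the training points, with a small noise term on the diagonal.
    K = [[rbf_kernel(x_train[i], x_train[j]) + (noise if i == j else 0.0)
          for j in range(n)] for i in range(n)]
    # Covariance between each training point and the new point.
    k_star = [rbf_kernel(x, x_new) for x in x_train]
    alpha = solve(K, y_train)   # K^{-1} y
    v = solve(K, k_star)        # K^{-1} k_*
    mean = sum(k * a for k, a in zip(k_star, alpha))
    var = rbf_kernel(x_new, x_new) - sum(k * w for k, w in zip(k_star, v))
    return mean, max(var, 0.0)

# Toy data (made up for illustration, not from the study).
x_train = [0.0, 1.0, 2.0]
y_train = [0.0, 1.0, 0.5]
mean_near, var_near = gp_predict(x_train, y_train, 1.0)
mean_far, var_far = gp_predict(x_train, y_train, 10.0)
```

In this sketch the predictive variance is small at a point the model has already seen and returns to the prior variance far from the training data, which is the uncertainty estimate referred to above.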

Ensembles

Ensemble learning is a machine learning technique that involves combining multiple models to produce improved results. Ensemble methods have several advantages over individual models, including higher predictive accuracy, improved robustness, and better generalisation. The most commonly used ensemble methods are [19]:

- Bagging: Bagging (Bootstrap Aggregating) involves training multiple models on different subsets of the training data and then combining their predictions. This is known to reduce overfitting and improve the stability of the model [19].

- Boosting: Boosting involves training multiple models sequentially, with each subsequent model focusing on the errors made by the previous model. This is known to improve the accuracy of the model by reducing bias [19].

- Stacking: Stacking involves training multiple models and then using another model to combine their predictions. This is known to improve the accuracy of the model by leveraging the strengths of different models [19].

- Voting: Voting involves combining the predictions of multiple models by taking the majority vote for classification problems or averaging the predictions for regression problems [19].

Ensemble methods are widely used in machine learning and have been shown to produce more accurate solutions than individual models in many cases. They are particularly useful when dealing with complex or noisy data sets, where individual models may struggle to capture all the relevant patterns and relationships [19].

However, it's important to note that ensemble methods can be computationally expensive and may require more resources than individual models. Additionally, the choice of ensemble method and the specific models used can have a significant impact on the performance of the ensemble. Therefore, it's important to carefully select and tune the models and ensemble method to achieve the best results [19].
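As a hedged illustration of bagging for regression, the sketch below fits a simple least-squares line to several bootstrap resamples and averages the predictions. The base learner, the number of models, and the names `fit_line` and `bagged_predict` are assumptions chosen for brevity; the ensembles used in this study are not specified at this level of detail.

```python
import random

def fit_line(points):
    """Ordinary least-squares fit y = a + b*x; returns (a, b)."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    b = sxy / sxx
    return mean_y - b * mean_x, b

def bagged_predict(points, x_new, n_models=25, seed=0):
    """Average the predictions of lines fitted to bootstrap resamples."""
    if len({x for x, _ in points}) < 2:
        raise ValueError("need at least two distinct x values")
    rng = random.Random(seed)
    preds = []
    while len(preds) < n_models:
        # Bootstrap: sample with replacement, same size as the original set.
        sample = [rng.choice(points) for _ in points]
        if len({x for x, _ in sample}) < 2:
            continue  # skip resamples where a slope cannot be estimated
        a, b = fit_line(sample)
        preds.append(a + b * x_new)
    return sum(preds) / len(preds)

# Toy data lying exactly on y = 2x + 1 (made up for illustration).
points = [(float(x), 2.0 * x + 1.0) for x in range(6)]
prediction = bagged_predict(points, 10.0)
```

Averaging over resamples is what reduces the variance of the combined prediction; with noisy data each resampled line would differ slightly and the average would be more stable than any single fit.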

Neural networks

A neural network, also known as an artificial neural network (ANN), is a computational model inspired by the structure and functioning of the human brain. It is a machine learning technique that aims to recognise patterns and relationships in data by simulating the behaviour of interconnected neurons [20, 21].

Key points about neural networks:

- Structure: Neural networks consist of interconnected nodes, also known as artificial neurons or units. These units are organised into layers, including an input layer, one or more hidden layers, and an output layer. The connections between the units are represented by weights, which determine the strength of the connection [20, 21].

- Activation function: Each unit in a neural network applies an activation function to the weighted sum of its inputs. The activation function introduces nonlinearity and allows the network to learn complex relationships in the data [20, 21].

- Training: Neural networks learn from data through a process called training. During training, the network adjusts the weights of its connections based on the input data and the desired output. This is typically done using optimisation algorithms such as gradient descent, which minimise the difference between the predicted output and the actual output [20, 21].

- Deep learning: Neural networks with multiple hidden layers are referred to as deep neural networks. Deep learning is a subfield of machine learning that focuses on training deep neural networks. Deep neural networks have shown remarkable success in various domains, including image recognition, natural language processing, and speech recognition [20, 21].

- Applications: Neural networks have a wide range of applications. They are used in image and speech recognition, natural language processing, recommendation systems, fraud detection, financial forecasting, and many other areas where pattern recognition and prediction are important [20, 21].

Neural networks have gained popularity due to their ability to learn complex patterns and relationships in data. However, they can be computationally expensive to train and require large amounts of labelled data. Additionally, the interpretability of neural networks can be challenging, as they are often considered black-box models [20, 21].

Overall, neural networks are powerful tools in machine learning and have revolutionised various fields by enabling the development of sophisticated models that can learn from data and make accurate predictions [20, 21].
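The structure, activation, and training points above can be sketched with a very small network. The example below is a minimal illustration under stated assumptions: one hidden layer with ReLU activation, a linear output, a squared-error loss, and a single gradient-descent step applied to the output layer only. The function names and all numerical values are hypothetical and do not describe the networks trained in this study.

```python
def relu(z):
    """Rectified linear activation."""
    return max(0.0, z)

def forward(x, W1, b1, w2, b2):
    """Forward pass: hidden layer (ReLU) followed by a linear output unit."""
    hidden = [relu(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(W1, b1)]
    output = sum(w * h for w, h in zip(w2, hidden)) + b2
    return hidden, output

def output_layer_step(hidden, output, target, w2, b2, lr=0.1):
    """One gradient-descent step on the output weights for loss 0.5*(output-target)^2."""
    error = output - target
    new_w2 = [w - lr * error * h for w, h in zip(w2, hidden)]
    new_b2 = b2 - lr * error
    return new_w2, new_b2

# Hypothetical weights and a single training example.
x = [1.0, 2.0]
W1 = [[1.0, 0.0], [0.0, -1.0]]
b1 = [0.0, 0.0]
w2, b2 = [1.0, 1.0], 0.5
target = 2.0

hidden, out_before = forward(x, W1, b1, w2, b2)
w2, b2 = output_layer_step(hidden, out_before, target, w2, b2)
_, out_after = forward(x, W1, b1, w2, b2)
```

After the update the output moves towards the target, which is the sense in which training "adjusts the weights" to reduce the difference between predicted and actual output. A full implementation would also propagate the gradient back to the hidden-layer weights.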

The data stream

The following describes each data stream and the specific information it carries, which is later used to model the thermal conductivity of nanofluids.

Experimental data stream

To prepare the experimental data model stream, various machine learning algorithms were trained on the experimental data. Seven (7) features from a previous study [22] were chosen, namely: temperature, particle size, volume fraction, nanoparticle thermal conductivity, melting point, base fluid kinematic viscosity, and base fluid viscosity. The response variable was the percentage enhancement of the thermal conductivity. The data used were those of [23]. This selection allowed for a generalised nanofluid thermal conductivity model.

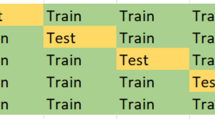

Several models were tested, including linear models, trees, support vector machines, Gaussian process regressors, kernels, ensembles, and neural networks. The best model was the Gaussian process regressor with the exponential kernel, which achieved a root mean squared error of 1.318 and an RSquared of 0.968 on validation data. On test data, it had a root mean squared error of 1.325 and an RSquared of 0.974, as shown in Table 1.

The experimental model's response plot is depicted in Fig. 1a. The x-axis denotes the record number of individual observations, while the y-axis represents the percentage increase in thermal conductivity. The response range is 0–40%, with 345 experimental observations recorded. The figure plots the predicted responses against the true responses. An ideal plot would have a perfect match between the predicted and true responses, indicating a complete fit of the model to the observations. This plot shows some mismatch in responses, although record numbers 190 to 230 exhibit a good match between the true and predicted responses.

In the graphs shown in Fig. 1b, c, the x-axis represents the true response, and the y-axis represents the predicted response. The figure demonstrates how well the predicted responses match the true responses. Ideally, a perfect model would have all the points on a straight line starting from the graph's origin. The points cluster around the line in this case, indicating a good fit. The model had an RSquared of 0.968 for the training data set and an RSquared of 0.974 for the testing data. In the plot, the vertical distance from any point to the line represents the prediction error. For higher response values, the points are further away from the line, indicating the model's difficulty in predicting such values accurately. However, the few data points at these higher values suggest that the model is still able to learn the pattern, which further shows that the model was a good fit. It is nevertheless worth noting that some points were more difficult to resolve. This difficulty is likely due to limited information to fully represent the thermal conductivity of the nanofluids. Figure 1b is for the training data, and Fig. 1c is for the test set.

In Fig. 2a, b, the residual plot of the experimental model is shown. The x-axis shows the true response, while the y-axis displays the residuals. The residuals are the differences between the true response and the predicted response, so this plot gives a clear idea of the margin of error between the two. To create this plot, the difference between each true response value and its corresponding predicted response was calculated and then plotted against the true response. The overall range of error in this plot is between −4 and +8. Most points fall between −2 and +2, indicating a low error range, especially for lower response values. This further supports the initial reasoning that limited data were likely the reason for the misfit on data points with higher values. Figure 2a is for the training data, while Fig. 2b is for the test data.

At this stage, however, the focus of the modelling was not on the model's accuracy but rather on preparing the input for the model of models approach.

Theoretical data stream

The theoretical model applied was Maxwell’s model as shown in Eqs. (1–2). The model assumes that the particle inclusions are much smaller than the spaces between them. Therefore, the thermal effects caused by each inclusion can be analysed separately. The model also considers the presence of multiple spherical inclusions, which may not be arranged regularly, and assumes that they have uniform conductivity. The model applies an external temperature gradient to drive heat flow through the medium [24]. It is from this model that data were generated. The generated data were converted into a model to form the second input to the final model.

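The enhancement in thermal conductivity was calculated from the effective thermal conductivity given by the model, relative to the thermal conductivity of the base fluid.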

The inputs were the same as the inputs to Maxwell’s model. The models selected for the modelling were linear models, trees, support vector machines, Gaussian process regressors, kernels, ensembles and neural networks.
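The equations themselves are not reproduced in this text, so the following is only a sketch of how theoretical data of this kind could be generated. It assumes the standard textbook form of Maxwell's effective-medium model for dilute spherical inclusions, k_eff / k_bf = (k_np + 2 k_bf + 2 φ (k_np − k_bf)) / (k_np + 2 k_bf − φ (k_np − k_bf)), and the usual definition of percentage enhancement. The function names and the example property values are hypothetical and may differ from what was used in the study.

```python
def maxwell_k_eff(k_bf, k_np, phi):
    """Effective thermal conductivity from the standard Maxwell model (assumed form).

    k_bf: base fluid conductivity, k_np: nanoparticle conductivity (same units),
    phi: particle volume fraction (dimensionless, 0 to 1).
    """
    num = k_np + 2.0 * k_bf + 2.0 * phi * (k_np - k_bf)
    den = k_np + 2.0 * k_bf - phi * (k_np - k_bf)
    return k_bf * num / den

def enhancement_percent(k_eff, k_bf):
    """Percentage enhancement of thermal conductivity relative to the base fluid."""
    return 100.0 * (k_eff - k_bf) / k_bf

# Illustrative values only: a water-like base fluid and an oxide-like particle.
k_bf, k_np = 0.6, 40.0
ent = enhancement_percent(maxwell_k_eff(k_bf, k_np, 0.01), k_bf)
```

With these illustrative inputs and a 1% volume fraction, the predicted enhancement is just under 3%. Note that this form of the model depends only on the two conductivities and the volume fraction, so it cannot respond to temperature or particle size.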

The best model was the Medium Neural Network, with a root mean squared error of 2.770 and an RSquared of 0.178 on validation data, and a root mean squared error of 3.155 and an RSquared of −0.192 on test data, as shown in Table 2.
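A negative RSquared can look surprising, so a short sketch of the two metrics may help. It uses the standard definitions of root mean squared error and the coefficient of determination; the function names and toy numbers are illustrative assumptions, not values from the study.

```python
import math

def rmse(y_true, y_pred):
    """Root mean squared error."""
    n = len(y_true)
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n)

def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_t = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot

# Toy responses (made up for illustration).
y_true = [1.0, 2.0, 3.0, 4.0]
r2_perfect = r_squared(y_true, [1.0, 2.0, 3.0, 4.0])   # predictions match exactly
r2_mean = r_squared(y_true, [2.5, 2.5, 2.5, 2.5])      # always predict the mean
r2_worse = r_squared(y_true, [4.0, 3.0, 2.0, 1.0])     # worse than the mean
```

RSquared equals 1 for a perfect fit and 0 for a model that always predicts the mean of the true responses. It becomes negative whenever the residual sum of squares exceeds the total sum of squares, meaning the model predicts the held-out data worse than that constant baseline.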

In Fig. 3, the response plot of the theoretical model is shown. The x-axis represents the record number, while the y-axis represents the response variable (the percentage enhancement of thermal conductivity). The plot overlays the predicted response and the true response, and there is a significant mismatch between the two. On average, the theoretical estimates appear to be about three times lower than the experimental results. This discrepancy may be attributed to the challenge faced by machine learning models in identifying patterns in the theoretical model. Additionally, several theoretical models and phenomena have been suggested to represent the thermal conductivity of nanofluids. For this study, a specific nanofluid phenomenon outlined by Maxwell was chosen. To reconcile the errors in this model, one may assume that the features selected were not enough to accurately capture the physics. However, adding new features would change the original assumptions of Maxwell’s model, so it is best to apply the model as it is, without feature engineering.

Figure 4a, b shows a plot comparing the predicted response to the true response. The model of the theoretical data did not fit the actual data well. It has an RSquared of 0.178 on training data and an RSquared of −0.192 on the test data. This is common, as theoretical models often rely on certain assumptions about the data generation process, which machine learning models may not capture accurately. This is demonstrated in Fig. 5a, b.

In Fig. 5a, b, the residuals of the theoretical model are displayed. The model was a poor fit. This is evident as the residuals are widely spaced from the zero line and are of the same magnitude as the true response (percentage enhancement in thermal conductivity of nanofluids). As mentioned above, the focus of the modelling at this stage was not the model's accuracy but the preparation of input for the model of models approach.

Correlation data stream

This data model stream was prepared by training various machine learning algorithms on the correlation data. The correlation of Rudyak et al. [4] was selected for thermal conductivity, as shown in Eq. (3).

Note that the thermal enhancement ratio was the response variable in this case, while the inputs were the same as the inputs to the correlation model.

The models selected were the linear models, trees, support vector machines, Gaussian process regressors, kernels, ensembles and neural networks.

The best model was the Gaussian process regressor with the Matern 5/2 kernel, with a root mean squared error of 0.056 and an RSquared of 0.157 on validation data, and a root mean squared error of 0.075 and an RSquared of 0.119 on test data, as shown in Table 3.

In Fig. 6a, the correlation model's response plot is presented. The predicted and true responses are noticeably different from each other, as previously seen in the theoretical model data stream. It is important to mention that the response variable is about ten times lower than that of the theoretical model and 30 times lower than that of the experimental model. The mismatch of the model is also highlighted in Fig. 6b, c.

In Fig. 6b, c, the predicted response is compared to the true response, revealing a clear misfit by the model with an RSquared of 0.157 for training data and an RSquared of 0.119 for test data. This suggests that the model has certain assumptions about the data generation process that machine learning models do not capture accurately, as shown in Fig. 6d, e.

In Fig. 6d, e, the residuals of the model are displayed. As in Fig. 6a–c, it can be observed that the model did not accurately fit the data, for the same reasons as the theoretical data set.

The model's response on both training and unseen test data, along with the residuals, can be seen in Fig. 6a–e. It is worth noting that, at this stage, the focus of the modelling was not on accuracy but rather on preparing input for the model of models approach, as mentioned earlier.

Model of model approach

The outputs generated by the previous models were used as inputs for other machine learning algorithms, with the experimental data’s enhancement in thermal conductivity as the response variable. The goal was to assess how adding nanoparticles affects the thermal conductivity of nanofluids. The robust linear model was found to be the most efficient algorithm, as shown in Table 4, with a root mean squared error of 0.7164 and an RSquared of 0.99 for validation data, and a root mean squared error of 0.2097 and an RSquared of 1.00 for test data. The model of models thus performed better on the test data than on the validation data, an indicator of good learning and generalisation.

As shown in Table 5, the experimental model data were given the highest weight, and lesser weights were assigned to the correlation and theoretical data, in that order. It is worth noting that the relationship between the experimental model and the correlations is inverse, whereas the theoretical data are positively correlated.

Table 5 shows the coefficients, or weightings, of the effect of each data stream on the overall prediction of the model of models approach.
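The combination step can be sketched as a small stacking example in which the three stream predictions are the features of a linear meta-model. This is a simplified illustration under stated assumptions: ordinary least squares is swapped in for the robust linear model used in the study, and the stream values, the "true" weights, and the function names are all made up so that the fit can be checked exactly.

```python
def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x

def fit_meta_model(stream_preds, y):
    """Least-squares weights [intercept, w_experimental, w_theoretical, w_correlation]."""
    X = [[1.0] + list(row) for row in stream_preds]
    p = len(X[0])
    # Normal equations: (X^T X) w = X^T y.
    XtX = [[sum(r[i] * r[j] for r in X) for j in range(p)] for i in range(p)]
    Xty = [sum(r[i] * t for r, t in zip(X, y)) for i in range(p)]
    return solve_linear(XtX, Xty)

def predict_meta(coef, row):
    """Weighted combination of the three stream predictions."""
    return coef[0] + sum(c * v for c, v in zip(coef[1:], row))

# Hypothetical stream outputs: (experimental, theoretical, correlation) per record.
stream_preds = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0),
                (1.0, 1.0, 0.0), (1.0, 0.0, 1.0), (0.0, 1.0, 1.0)]
true_coef = [0.5, 1.0, 0.2, -0.3]  # made-up weights, not those of Table 5
y = [predict_meta(true_coef, row) for row in stream_preds]

coef = fit_meta_model(stream_preds, y)
```

Because the toy responses are generated from known weights without noise, the fitted coefficients recover them, which makes the role of each weighting easy to see. A robust fit would additionally down-weight records with large residuals, which matters when some stream predictions are outliers.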

In Fig. 7a–e, a good fit by the robust linear model on the three data streams can be observed. Figure 7a shows the predicted response plotted over the true response. It can be observed that there are only a few blue dots, indicating that the model learned the parameters accurately, with an RSquared value of 0.991 for training data and 0.999 for test data. It can also be observed from Fig. 7b, c that the predicted versus true response points lie mostly along the line through the origin, again indicating an accurate model. Similarly, Fig. 7d, e shows lower residuals and hence a more accurate model.

Fig. 7 (a) Response plot of the model of models. (b) Predicted response versus true response plot of the model of models. (c) Predicted response versus true response plot of the model of models for the unseen test data. (d) Residuals plot of the model of models. (e) Residuals plot of the model of models for the unseen test data.

Figure 7a–e illustrates the model response to the training and unseen test data. Overall, incorporating additional data streams, such as theoretical and correlation data, alongside the experimental data stream resulted in a more precise model and supported its ability to generalise, as evidenced by its performance on the test set. This model can be regarded as a universal model for single-material nanofluids because it considers the behaviour of both the nanoparticle and base fluid materials. It also performed even better on the test data, implying its generalisation and its ability to represent single-material nanofluids. It is also worth noting that the model does better at higher values than the experimental model, which had difficulty resolving higher data values. This indicates that the model now captures the behaviour of the nanofluids' thermal conductivity.

Physical meaning of the data streams

The study applied three data streams: the first was the experimental stream, the second the theoretical stream, and the third the correlations. Each of these streams brings specific information into the final model and acts as a feature in it. Below is the physical meaning that each stream carries.

Experimental stream

The study uses an experimental data stream. This data stream supplies the real behaviour of nanofluids in terms of thermal conductivity. It carries the temperature, particle size, volume fraction, nanoparticle thermal conductivity, melting point, base fluid kinematic viscosity, and base fluid viscosity information. A model built from this data stream therefore carries this basic information about the nanofluid and its constituents.

Theoretical stream

The theoretical stream contains in it the assumptions and the physics of the nanofluids. This stream carries with it the information that the particle inclusions are much smaller than the spaces between them. It also assumes that they have uniform conductivity and an external temperature gradient to drive heat flow through the medium.

Correlation stream

The correlation stream models the molecular details of the nanofluids as this was the basic assumption made by the correlation model. It carries with it the modelling simplification and assumptions in the models as well.

The model of models represents these individual characteristics embedded in these model streams. The model shows that nanofluids can be fully represented by the above approach.

Conclusions

In this paper, “Modelling the thermal conductivity of nanofluids using a novel model of models approach”, a unique and comprehensive method for modelling the thermal conductivity of nanofluids is presented. The study’s objective was to accurately model the thermal conductivity of nanofluids by using a novel model of models approach containing three distinct data streams to enhance the accuracy of predictions.

(1) The first data stream includes experimental data, serving as input for the primary machine learning model, while the second data stream incorporates a theoretical model from previous studies. The third data stream comprises correlation model data. By training a model on the combined data streams, the study addresses the challenges associated with modelling the thermophysical properties of nanofluids.

(2) The proposed model achieves high accuracy, as evidenced by a coefficient of determination (R-squared) value of 0.999. This indicates its effectiveness in handling complex data related to the thermal conductivity of nanofluids.

(3) The paper also highlights other relevant studies in the field, showcasing the advancements in predicting nanofluids' viscosity using multi-fidelity neural networks (MFNN) and physically guided deep neural networks (PGDNN). These studies underscore the potential of machine learning approaches in accurately predicting nanofluid properties.

(4) It provides a detailed description of the various data streams and machine learning algorithms employed. Different models and algorithms are tested for each data stream, with the performance evaluated using established metrics such as root mean squared error (RMSE), mean squared error (MSE), coefficient of determination (RSquared), and mean absolute error (MAE). Response plots, predicted response vs. true response plots, and residual plots are also presented to assess the fit of the models.

(5) The model of models approach, where the output of previous models serves as input for subsequent algorithms, demonstrates its efficiency and effectiveness in predicting the enhancement in thermal conductivity of nanofluids. The robust linear model emerges as the most efficient algorithm, exhibiting high accuracy on validation and test data.

Overall, this paper contributes a comprehensive approach to modelling the thermal conductivity of nanofluids by integrating experimental, theoretical, and correlation data streams. The findings of this study can inform future research and facilitate the development of more accurate models for predicting the thermal conductivity of nanofluids. However, it should be noted that the model is purely computational and has not been tested beyond what is presented in this paper, since the data sets all have different sources and different scales. It would be good to see how this modelling strategy performs in experimental tests.

Data availability

All data are sourced from other works available in the literature and have been referenced in the manuscript body where they are used.

Abbreviations

- \(D\): Diameter (m)

- \(ENT\): Enhancement of thermal conductivity (%)

- \(k\): Thermal conductivity (W m−1 K−1)

- \(\phi\): Volume fraction (−)

- \(\rho\): Density (kg m−3)

- \(bf\): Base fluid

- \(eff\): Effective

- \(m\): Molecular

- \(np\): Nanoparticle

- \(r\): Ratio

References

Bejawada SG, et al. 2D mixed convection non-Darcy model with radiation effect in a nanofluid over an inclined wavy surface. Alex Eng J. 2022;61(12):9965–76. https://doi.org/10.1016/j.aej.2022.03.030.

Bejawada SG, et al. Radiation effect on MHD Casson fluid flow over an inclined non-linear surface with chemical reaction in a Forchheimer porous medium. Alex Eng J. 2022;61(10):8207–20. https://doi.org/10.1016/j.aej.2022.01.043.

Goud BS. Heat generation/absorption influence on steady stretched permeable surface on MHD flow of a micropolar fluid through a porous medium in the presence of variable suction/injection. Int J Thermofluids. 2020;7:100044. https://doi.org/10.1016/j.ijft.2020.100044.

Goud BS, Kumar PP, Malga BS. Effect of heat source on an unsteady MHD free convection flow of Casson fluid past a vertical oscillating plate in porous medium using finite element analysis. Part Differ Equs Appl Math. 2020;2:100015. https://doi.org/10.1016/j.padiff.2020.100015.

Goud BS, Nandeppanavar MM. Ohmic heating and chemical reaction effect on MHD flow of micropolar fluid past a stretching surface. Part Differ Equs Appl Math. 2021;4:100104. https://doi.org/10.1016/j.padiff.2021.100104.

Hussain SM, et al. Effectiveness of nonuniform heat generation (sink) and thermal characterization of a Carreau fluid flowing across a nonlinear elongating cylinder: a numerical study. ACS Omega. 2022;7(29):25309–20. https://doi.org/10.1021/acsomega.2c02207.

Kumar PP, Goud BS, Malga BS. Finite element study of Soret number effects on MHD flow of Jeffrey fluid through a vertical permeable moving plate. Part Differ Equs Appl Math. 2020;1:100005. https://doi.org/10.1016/j.padiff.2020.100005.

Reddy YD, et al. Heat absorption/generation effect on MHD heat transfer fluid flow along a stretching cylinder with a porous medium. Alex Eng J. 2023;64:659–66. https://doi.org/10.1016/j.aej.2022.08.049.

Shankar Goud B, Dharmendar Reddy Y, Mishra S. Joule heating and thermal radiation impact on MHD boundary layer Nanofluid flow along an exponentially stretching surface with thermal stratified medium. Proc Inst Mech Eng Part N: J Nanomater Nanoeng Nanosyst. 2022. https://doi.org/10.1177/23977914221100961.

Srinivasulu T, Goud BS. Effect of inclined magnetic field on flow, heat and mass transfer of Williamson nanofluid over a stretching sheet. Case Stud Thermal Eng. 2021;23:100819. https://doi.org/10.1016/j.csite.2020.100819.

Yanala DR, et al. Influence of slip condition on transient laminar flow over an infinite vertical plate with ramped temperature in the presence of chemical reaction and thermal radiation. Heat Transfer. 2021;50(8):7654–71. https://doi.org/10.1002/htj.22247.

Vajjha RS, Das DK, Ray DR. Development of new correlations for the Nusselt number and the friction factor under turbulent flow of nanofluids in flat tubes. Int J Heat Mass Transf. 2015;80:353–67. https://doi.org/10.1016/j.ijheatmasstransfer.2014.09.018.

Alfaleh A, et al. Predicting thermal conductivity and dynamic viscosity of nanofluid by employment of Support Vector Machines: a review. Energy Rep. 2023;10:1259–67. https://doi.org/10.1016/j.egyr.2023.08.001.

Sahin F, et al. An experimental and new study on thermal conductivity and zeta potential of Fe3O4/water nanofluid: Machine learning modeling and proposing a new correlation. Powder Technol. 2023;420:118388. https://doi.org/10.1016/j.powtec.2023.118388.

Chiniforooshan Esfahani I. A data-driven physics-informed neural network for predicting the viscosity of nanofluids. AIP Adv. 2023;13(2):025206. https://doi.org/10.1063/5.0132846.

Bhaumik B, et al. A unique physics-aided deep learning model for predicting viscosity of nanofluids. Int J Comput Methods Eng Sci Mech. 2023;24(3):167–81. https://doi.org/10.1080/15502287.2022.2120441.

Gramacy RB. Surrogates: Gaussian process modeling, design, and optimization for the applied sciences. CRC Press; 2020.

MathWorks. Statistics and Machine Learning Toolbox: Documentation (R2022a). 2022.

Alhamid M. Ensemble models: what are they and when should you use them? 2022 [cited 28 Aug 2023]. Available from: https://builtin.com/machine-learning/ensemble-model.

IBM. What are neural networks? [cited 28 Aug 2023]. Available from: https://www.ibm.com/topics/neural-networks.

Hardesty L. Explained: neural networks. 14 Apr 2017 [cited 28 Aug 2023]. Available from: https://news.mit.edu/2017/explained-neural-networks-deep-learning-0414.

Onyiriuka E. Predictive modelling of thermal conductivity in single-material nanofluids: a novel approach. Preprint (Version 1), Research Square. https://doi.org/10.21203/rs.3.rs-3113648/v1.

Patel HE, Sundararajan T, Das SK. An experimental investigation into the thermal conductivity enhancement in oxide and metallic nanofluids. J Nanopart Res. 2010;12(3):1015–31. https://doi.org/10.1007/s11051-009-9658-2.

Kiradjiev KB, et al. Maxwell-type models for the effective thermal conductivity of a porous material with radiative transfer in the voids. Int J Therm Sci. 2019;145:106009. https://doi.org/10.1016/j.ijthermalsci.2019.106009.

Acknowledgements

The author wishes to thank the Tertiary Education Trust Fund (TET Fund) for providing funding for the studies.

Author information

Contributions

EJO was the sole author and carried out all the work in the study.

Ethics declarations

Conflict of interest

The author states that there is no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Onyiriuka, E. Modelling the thermal conductivity of nanofluids using a novel model of models approach. J Therm Anal Calorim 148, 13569–13585 (2023). https://doi.org/10.1007/s10973-023-12642-y

DOI: https://doi.org/10.1007/s10973-023-12642-y