Abstract

The availability of digital tools aiming to promote adolescent mental health is rapidly increasing. However, the field lacks an up-to-date and focused review of current evidence. This study therefore examined the characteristics and efficacy of digital, evidence-based mental health programs for youth (11–18 years). The selection procedure followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines and yielded 27 eligible studies. The high heterogeneity of the results calls for careful interpretation. Nevertheless, small but promising effects of digital tools were found for promoting well-being, relieving anxiety, and enhancing protective factors. Important factors influencing overall efficacy include the setting, the level of guidance and support, and adherence to the intervention.

Introduction

Between 10 and 20% of children and adolescents worldwide are affected by mental health issues (Kieling et al., 2011). Digital technologies, such as mobile apps or web-delivered programs, are increasingly being used to meet the need for mental health promotion in this age group (Bergin et al., 2020). Consequently, the range of digital tools aiming to promote mental health is growing rapidly, and a growing number of researchers are reporting on their potential and value (Lucas-Thompson et al., 2019; Sommers-Spijkerman et al., 2021). However, an up-to-date and focused review of the current evidence is still lacking. The present study therefore aims to systematically review and analyze the availability and effectiveness of digital tools for mental health promotion among 11- to 18-year-olds.

Although prevention and intervention programs can be implemented at any point across the lifespan, they are most effective when provided early, or at the time a disorder emerges (Polanczyk et al., 2015; Solmi et al., 2022). For approximately half of all individuals with a mental disorder, onset occurs during adolescence, and for more than a third, the disorder emerges by the age of 14 (Solmi et al., 2022). This is especially relevant for neurodevelopmental disorders and for anxiety and fear-related disorders; for example, 51.8% of individuals with an anxiety/fear-related disorder developed it before the age of 18 (Solmi et al., 2022). During this transition from childhood to adulthood, young people face a number of social, physical and emotional challenges (e.g., academic expectations, physical changes, identity and role development) that make them highly vulnerable (Byrne et al., 2007).

The estimated worldwide prevalence of mental disorders in this population was reported to be 13.4% (95% CI 11.3–15.9; Polanczyk et al., 2015). Prevalence was highest for anxiety disorders (6.5%), followed by disruptive disorders (5.7%), attention-deficit hyperactivity disorder (3.4%), and depressive disorders (2.6%) (Polanczyk et al., 2015). In addition, mental disorders among adolescents have increased in recent years (Atladottir et al., 2015; Steffen et al., 2018), most notably developmental and mood disorders (Steffen et al., 2018). Mental illness not only affects the quality of daily life; it has also been found to reduce life expectancy by up to 10–15 years (Walker et al., 2015). This applies not only to severe mental health problems with low prevalence rates, such as psychosis, but also to milder mental disorders with higher prevalence rates, such as anxiety and depression (Walker et al., 2015).

Mental health encompasses not only one’s internal experience but also the way one connects and interacts with the external world. An understanding of mental health therefore needs to reflect the broad diversity of human experience (Galderisi et al., 2015). Mental health may be described as a “dynamic state of internal equilibrium” (Galderisi et al., 2015, pp. 231–232), i.e., as a malleable state that affects how we relate to ourselves and others. Factors such as cognitive and social skills, the ability to empathize, resilience, self-awareness, and the expression and regulation of emotions all contribute to mental health to varying degrees and interact dynamically (Galderisi et al., 2015). The complexity and multifaceted nature of the phenomenon is also mirrored in the wide range of methods and instruments used to measure and promote mental health. Although the concept remains difficult to circumscribe, not least because well-being and mental health are hard to distinguish conceptually (Galderisi et al., 2015), a preliminary literature search identified several domains closely connected to mental health and to efforts to enhance it: mental health literacy, well-being, resilience, mindfulness, stress management, relaxation, help-seeking behavior and positive psychology.

Since 2020, governmental measures taken in response to the COVID-19 pandemic, such as enforced isolation and school closures, have most likely increased the strain on young people’s well-being and raised their risk of developing mental health problems. Lower health-related quality of life and higher anxiety levels are now reported more frequently than before COVID-19, especially among young people with low socio-economic status, a migration background, or limited living space (Ravens-Sieberer et al., 2021). Given the alarming number of young people suffering from mental health issues (Atladottir et al., 2015) and the added stressors caused by the pandemic (Ravens-Sieberer et al., 2021), the need to provide support for this group is clearly urgent. Hence, preventing mental disorders and promoting mental health in youth remains a main concern of health policies and strategy reports at both the European (WHO, 2013) and the global level (WHO, 2004, 2017).

Ongoing advances in technology mean that more and more mental health prevention programs may be provided successfully, either partly or fully, through digital media (Kaess et al., 2021, 2021; Mrazek et al., 2019). As barriers to mental health services increased during the pandemic (due to lockdowns and restrictions), the many advantages of a digital mode of delivery, e.g., cost-effectiveness, anonymity, accessibility and adaptability, have gained in importance. All of these lower the threshold for seeking mental health support (Bauer et al., 2005; Mrazek et al., 2019). At the same time, the use of digital and/or online tools brings new challenges and limitations, e.g., confidentiality issues, low levels of engagement, or concerns regarding professionalism (Bauer et al., 2005). Nevertheless, accessible and adaptable digital programs lower usage barriers in schools and other institutions, as they require relatively little expertise or effort compared to face-to-face (F2F) interventions. Because they are self-directed, web-delivered interventions may also improve implementation fidelity (Calear et al., 2016).

In addition to their high accessibility and availability, digital tools have repeatedly been reported in recent meta-analyses and systematic reviews to have potential for promoting young people’s mental health. Harrer et al. (2019) found positive effects of such tools on depression, anxiety, stress, eating disorder symptoms and role functioning. The findings of Clarke et al. (2015) and Sevilla-Llewellyn-Jones et al. (2018) support the effectiveness of online interventions in the treatment of anxiety and depressive symptoms. Noh and Kim (2022) reported beneficial effects in preventing an increase in depressive symptoms, but not for anxiety or stress. Furthermore, well-tailored digital interventions are likely to increase engagement with a support tool and to aid the transfer of specific skills or strategies into young people’s daily lives (Lucas-Thompson et al., 2019). Indeed, web-based interventions have been reported to improve individuals’ quality of life and functioning (Sevilla-Llewellyn-Jones et al., 2018).

Extensive efforts have been made to provide systematic reviews on mental health provision. Previous reviews have focused on older populations (Brown et al., 2016; Harrer et al., 2019; Noh & Kim, 2022), clinical populations (Sevilla-Llewellyn-Jones et al., 2018), F2F interventions (Carsley et al., 2018; Dray et al., 2017; Sapthiang et al., 2019), or school-based interventions (Cilar et al., 2020), but none has had the specific focus of the present review, and some did not include a meta-analysis (Clarke et al., 2015; Sapthiang et al., 2019). While previous reviews have reported on the efficacy of mobile apps (Bakker et al., 2016; Grist et al., 2017), the present review includes studies on mobile apps as well as on other digital tools, thus expanding the range of intervention programs reviewed.

Current study

Although existing reviews are of substantial scientific value, the technological landscape is changing so rapidly that an update on the effects of digitally delivered interventions is clearly needed. In addition, past systematic reviews had a different focus. The present systematic review and meta-analysis aims to outline the current state of digital, evidence-based programs promoting mental health in young people and to provide insight into the characteristics and effectiveness of such programs. The domain of interest is the promotion of mental health supported by digital technologies, with a focus on mental health literacy, well-being, (mental health) help-seeking behavior, stress management, relaxation, mindfulness, resilience and positive psychology. The present study focused on three areas. First, it aimed to determine what digital-based interventions promoting mental health are available for children and adolescents aged 11 to 18. Second, the effectiveness of these interventions was analyzed. Third, the factors underlying their effectiveness were assessed.

Methods

This review follows the recommendations of the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) statement (Page et al., 2021).

Search Strategy

A comprehensive literature search was conducted in mid-May 2021 in the electronic databases PubMed, PsycInfo, and the Cochrane Library, using the search strings listed in Table 1. A second search was run at the end of October 2021, before the final analysis. Furthermore, registered trial protocols were checked for recently published studies, and the reference lists of identified studies were searched manually for potentially relevant literature.

Eligibility Criteria

Table 2 provides an overview of all criteria that determined whether a study was included.

Studies that focused on an age group outside the specified range were included if the mean age of participants fell between 11 and 18 years. The WHO (World Health Organization) recommends that individuals between 10 and 19 years be regarded as adolescents (WHO, 1986). However, as the transitions from childhood to adolescence and from adolescence to adulthood depend on several factors (genetic, nutritional, socioeconomic and demographic) and do not occur at the same age for everyone, some flexibility may be needed in practice (Canadian Paediatric Society, 2003). Nevertheless, the present study adopted the narrower age span of 11–18 years, as 11 is the age at which children in many countries transition out of primary school (e.g., Austria, UK, USA), and 18 is the legal age of majority in most countries around the world (Boyacıoğlu Doğru et al., 2018). The included studies focus on prevention rather than treatment and target the general population rather than participants with a self-reported or diagnosed mental illness.

The aim was to review interventions that promote mental health in general and that target well-being, mental health literacy, resilience, help-seeking behavior, mindfulness, stress management, relaxation and positive psychology. Of particular importance was the use of a digital component, with the mode of delivery being either fully or partly digital. Specifically, interventions needed to be strongly supported by a digital component, with at least half of the instructions delivered in a digital format. Whether delivery was online or offline (no internet connection necessary) was not relevant. Because digital technologies and media continue to develop rapidly, studies published before 2000 were excluded to avoid basing the review on outdated or unavailable technologies.

Quantitative and mixed-methods studies were eligible, as quantitative data were needed for the planned meta-analysis. Drawing evidence-based conclusions requires rigorous standards of quality and reliability; thus, only peer-reviewed articles were included. Only academic articles published in English were included: English is the dominant language of academic publishing worldwide, and even journals in non-English-speaking countries increasingly favor English contributions (Murray & Dingwall, 2001).

Study Selection Process

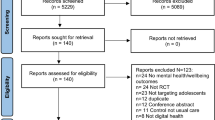

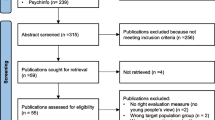

In total, 27 studies were included in the sample. Figure 1 illustrates the steps of the study selection process and the reasons for exclusion, modeled according to PRISMA recommendations (Page et al., 2021).

Fig. 1 PRISMA flow chart (Page et al., 2021) of the study selection process

Both searches, in May and in October, followed the same procedure. Four reviewers were involved in the study selection process and applied the eligibility criteria to identify the sample. First, the literature search was conducted in all three databases and duplicates were removed. Second, all records were screened against the inclusion/exclusion criteria. Third, doubtful cases were double-checked and rescreened to ensure that they qualified for inclusion. Reviewers were blinded to each other’s decisions in the first and second steps only. Discrepancies and disagreements between individual judgements were resolved in online team meetings. If screening at the title and abstract level did not suffice, each reviewer assessed the full text against the inclusion criteria. Decisions were recorded in Microsoft Excel; for the third step, Google Sheets was used to increase transparency.

Data Extraction

Microsoft Excel was used for duplicate removal and screening of studies. To facilitate collaboration, the free online tool SRDR+ (Systematic Review Data Repository-Plus; EPC, 2022) was used for data management. Data extraction covered design details, arm details, sample characteristics, outcome details, and results. In a first step, all data extraction items were specified. Relevant items included, but were not limited to: bibliographical data (e.g., author, publication year, country), theoretical background, medium/media of intervention, mode of intervention, intervention characteristics, study design, method, sample characteristics, setting, outcome data/results, potential moderators of intervention efficacy, effect sizes, and data on acceptance and engagement. In a third step, findings were checked and discussed.

Quality Assessment

To assess the risk of bias in the included primary studies, two independent reviewers applied Cochrane assessment tools. For randomized controlled trials (RCTs), the Cochrane Risk of Bias tool (RoB; Higgins et al., 2022) was applied. For non-randomized trials, the Risk Of Bias In Non-randomized Studies of Interventions tool (ROBINS-I; Sterne et al., 2016) was used. These tools help detect biases arising before the intervention (e.g., bias in the selection or randomization of participants), during the intervention (e.g., bias in the classification of interventions), and after the intervention (e.g., bias due to missing data) (Sterne et al., 2016). The RoB tool yields an overall rating of low risk, some concerns, or high risk. The overall rating scheme of ROBINS-I is more nuanced, using the categories low, moderate, serious and critical risk. Rating disagreements were resolved by consulting a third reviewer.
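As an illustration of the ROBINS-I overall rating, the sketch below encodes the tool's default worst-domain rule: the overall judgement equals the most severe domain-level judgement. Python is used for all examples in this section as a stand-in for the R workflow actually used. The seven domain labels follow ROBINS-I; the `Robins` enum, `robins_i_overall` and the example ratings are hypothetical, and neither the "no information" option nor any reviewer discretion beyond this default rule is modelled.

```python
from enum import IntEnum


class Robins(IntEnum):
    """ROBINS-I judgement levels, ordered from least to most severe."""
    LOW = 0
    MODERATE = 1
    SERIOUS = 2
    CRITICAL = 3


# The seven ROBINS-I bias domains (Sterne et al., 2016).
ROBINS_I_DOMAINS = (
    "confounding",
    "selection of participants",
    "classification of interventions",
    "deviations from intended interventions",
    "missing data",
    "measurement of outcomes",
    "selection of the reported result",
)


def robins_i_overall(judgements: dict) -> Robins:
    """Default worst-domain rule: overall risk = most severe domain rating."""
    missing = set(ROBINS_I_DOMAINS) - set(judgements)
    if missing:
        raise ValueError(f"missing domain judgements: {sorted(missing)}")
    return max(judgements[d] for d in ROBINS_I_DOMAINS)


# Hypothetical example: one domain rated moderate, the rest low.
example = {d: Robins.LOW for d in ROBINS_I_DOMAINS}
example["confounding"] = Robins.MODERATE
print(robins_i_overall(example).name)  # MODERATE
```

Because the enum is ordered, `max` directly returns the most severe rating; a study therefore only reaches "low" overall if every domain is rated low.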

Analyses

Narrative synthesis

In the narrative synthesis, the key features of the studies and their interventions were summarized, and potential barriers to and facilitators of the interventions were identified. An extensive summary table brings together relevant study characteristics, such as the underlying theoretical framework, reported findings and outcome data. Additionally, a detailed description was provided for the extracted variables identified as potential moderators of intervention efficacy. All eight moderators were operationalized as categorical variables (Table 5): level of interaction (none, some, considerable), level of professional support (none, some, considerable), level of guidance (none, some, considerable), level of digitization (fully digital, partly digital), duration of intervention (long [>3 months], medium [2–3 months], short [1 month], single session), level of adherence (consistent, inconsistent, not specified), level of attrition (no attrition, low [<20%], concerning [>20%]), and setting (homeschool, school, leisure, mixed).
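To make the categorical coding concrete, the following sketch shows how two of the moderators (duration and attrition) could be coded from extracted study data. The function names are hypothetical, and the boundary handling is an assumption: the text does not say where interventions of 1–2 months fall or how exactly 20% attrition is classified, so this sketch treats anything under 8 weeks as short and 20% or more as concerning.

```python
from typing import Optional


def code_duration(weeks: Optional[float], single_session: bool = False) -> str:
    """Map an intervention period onto the review's duration categories.

    Cut-offs are assumptions: >13 weeks (~3 months) = long,
    8-13 weeks (~2-3 months) = medium, under 8 weeks = short.
    """
    if single_session:
        return "single session"
    if weeks is None:
        return "not specified"  # assumption: e.g. study #25 reported no duration
    if weeks > 13:
        return "long"
    if weeks >= 8:
        return "medium"
    return "short"


def code_attrition(n_baseline: int, n_analysed: int) -> str:
    """Map attrition onto 'no attrition', 'low' (<20%) or 'concerning'.

    Exactly 20% is treated as concerning here, which is an assumption.
    """
    if n_baseline <= 0 or not 0 <= n_analysed <= n_baseline:
        raise ValueError("expected n_baseline > 0 and 0 <= n_analysed <= n_baseline")
    rate = 1 - n_analysed / n_baseline
    if rate == 0:
        return "no attrition"
    return "low" if rate < 0.20 else "concerning"


# Hypothetical study: 6-week program, 120 enrolled, 101 analysed (~16% attrition).
print(code_duration(6), code_attrition(120, 101))  # short low
```

Keeping the cut-offs inside small functions makes it easy to rerun a subgroup analysis under a different boundary convention if a study falls between categories.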

Meta-analytic procedure

Meta-analyses were carried out in R (version 4.2.1), using the meta package (Schwarzer, 2022) and the dmetar package (Harrer et al., 2019). In the event of missing data, the corresponding authors were contacted via email; if they did not respond, a reminder was sent 1 to 3 weeks later. Additional data received by August 7, 2022, before the final analysis was performed, were included.

As in previous research (Harrer et al., 2019), conceptually related clusters were created based on outcome frequency. Clusters were formed by examining which mental health (MH) domain each scale was designed to measure: scales measuring the same or a closely related MH domain (e.g., stress and school stress) were assigned to the same cluster. If a study used multiple measures for one construct, the measure with the highest Cronbach’s alpha or greatest relevance was chosen (Supplementary Material 1). Results from studies reporting the same outcome were pooled to obtain the average effect size for that outcome, regardless of whether it was a primary, secondary, tertiary, or exploratory outcome. If a study reported results applicable to more than one cluster, it was included in every analysis for which it provided suitable outcomes. Only clusters containing at least five studies were retained, and a separate meta-analysis was performed for each.
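The clustering rules above can be sketched as follows. The `Measure` record, the `CLUSTER_OF` mapping and the example data are hypothetical; only the alpha criterion is automated here, whereas the "greatest relevance" criterion in the text is a reviewer judgement that this sketch does not capture.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Measure:
    study: str    # study identifier, e.g. "#9"
    scale: str    # instrument name
    domain: str   # MH domain the scale was designed to measure
    alpha: float  # Cronbach's alpha reported for the sample


# Hypothetical mapping: closely related domains share one cluster.
CLUSTER_OF = {
    "stress": "stress",
    "school stress": "stress",
    "anxiety": "anxiety",
    "test anxiety": "anxiety",
}


def build_clusters(measures, min_studies=5):
    """Return {cluster: {study: Measure}}, one measure per study and cluster."""
    clusters = defaultdict(dict)
    for m in measures:
        cluster = CLUSTER_OF.get(m.domain)
        if cluster is None:
            continue  # domain not mapped to any cluster
        current = clusters[cluster].get(m.study)
        # Several measures of one construct: keep the one with the highest alpha.
        if current is None or m.alpha > current.alpha:
            clusters[cluster][m.study] = m
    # Only clusters with at least `min_studies` studies are meta-analysed.
    return {c: s for c, s in clusters.items() if len(s) >= min_studies}


demo = [Measure(f"#{i}", "PSS", "stress", 0.80) for i in range(1, 6)]
demo.append(Measure("#1", "SSI", "school stress", 0.88))
demo.append(Measure("#2", "GAD-7", "anxiety", 0.90))
kept = build_clusters(demo)
print(sorted(kept), kept["stress"]["#1"].scale)  # ['stress'] SSI
```

A study can appear in several clusters because the dictionary is keyed by cluster first, which mirrors the rule that a study enters every analysis for which it provides a suitable outcome.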

In line with common practice, the standardized mean difference (SMD) between the intervention and control groups was calculated for post-intervention measurements only (Cuijpers et al., 2017; Harrer et al., 2019). Pre-post effect sizes were deliberately not used, because they require an estimate of the within-group pre-post correlation, which can bias the results (Cuijpers et al., 2017). Following the Cochrane guidelines (Higgins et al., 2022), data from primary studies were arranged so that all scales pointed in the same direction. To correct for small-sample bias, the SMD was expressed as Hedges’ g (Hedges & Olkin, 2014). Effect sizes were interpreted according to Cohen’s conventions: 0.2 as small, 0.5 as medium, and 0.8 as large. As substantial differences between studies were expected, a random-effects pooling model was chosen, with the Hartung–Knapp–Sidik–Jonkman method used to adjust the confidence interval (IntHout et al., 2014; Sidik & Jonkman, 2022). The restricted maximum likelihood estimator (Viechtbauer, 2005) was used to calculate the heterogeneity variance τ². The 95% prediction interval was calculated around the pooled effect, indicating the range within which the true effects of similar future studies can be expected to fall, given the existing evidence (Borenstein et al., 2017). Finally, heterogeneity was assessed using the I² statistic (Higgins & Thompson, 2002), interpreted following Higgins et al. (2003): 25% low, 50% moderate, and 75% substantial heterogeneity.
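A compact, standard-library-only sketch of this pipeline follows: Hedges’ g from post-intervention means and SDs (with a sign flip for scales on which higher scores are worse), τ² estimated by REML using the fixed-point update given by Viechtbauer (2005), a Hartung–Knapp–Sidik–Jonkman confidence interval on k − 1 degrees of freedom, a prediction interval on k − 2 degrees of freedom, and I² from Cochran’s Q. The function names are hypothetical, the t quantile is obtained by numerical integration to avoid external dependencies, and the results may differ slightly from the meta package, e.g. in which standard error enters the prediction interval.

```python
import math


def hedges_g(m1, sd1, n1, m2, sd2, n2, higher_is_better=True):
    """Hedges' g (intervention minus control) and its sampling variance."""
    sp = math.sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2))
    d = (m1 - m2) / sp
    if not higher_is_better:  # e.g. symptom scales: lower scores are better
        d = -d
    j = 1 - 3 / (4 * (n1 + n2) - 9)  # small-sample correction factor
    var_d = (n1 + n2) / (n1 * n2) + d**2 / (2 * (n1 + n2))
    return j * d, j**2 * var_d


def _t_cdf(x, df, steps=2000):
    """Student-t CDF via Simpson's rule on the density over [0, |x|]."""
    c = math.exp(math.lgamma((df + 1) / 2) - math.lgamma(df / 2)) / math.sqrt(df * math.pi)

    def pdf(u):
        return c * (1 + u * u / df) ** (-(df + 1) / 2)

    a = abs(x)
    h = a / steps
    s = pdf(0.0) + pdf(a) + sum((4 if i % 2 else 2) * pdf(i * h) for i in range(1, steps))
    area = s * h / 3
    return 0.5 + area if x >= 0 else 0.5 - area


def t_quantile(p, df):
    """Quantile of the t distribution for 0.5 < p < 1, by bisection."""
    lo, hi = 0.0, 200.0
    for _ in range(50):
        mid = (lo + hi) / 2
        if _t_cdf(mid, df) < p:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def reml_tau2(y, v, tol=1e-10, max_iter=500):
    """REML between-study variance via Viechtbauer's (2005) fixed-point update."""
    tau2 = 0.0
    for _ in range(max_iter):
        w = [1 / (vi + tau2) for vi in v]
        mu = sum(wi * yi for wi, yi in zip(w, y)) / sum(w)
        num = sum(wi**2 * ((yi - mu) ** 2 - vi) for wi, yi, vi in zip(w, y, v))
        new = max(0.0, num / sum(wi**2 for wi in w) + 1 / sum(w))
        if abs(new - tau2) < tol:
            return new
        tau2 = new
    return tau2


def random_effects(y, v, level=0.95):
    """Random-effects pooling: REML tau^2, HKSJ CI, prediction interval, I^2."""
    k = len(y)
    if k < 3:
        raise ValueError("need at least 3 studies for a prediction interval")
    tau2 = reml_tau2(y, v)
    w = [1 / (vi + tau2) for vi in v]
    mu = sum(wi * yi for wi, yi in zip(w, y)) / sum(w)
    # HKSJ: scale the variance by the weighted residual variance, use t(k-1).
    q_hk = sum(wi * (yi - mu) ** 2 for wi, yi in zip(w, y)) / (k - 1)
    se = math.sqrt(q_hk / sum(w))
    p = 1 - (1 - level) / 2
    half_ci = t_quantile(p, k - 1) * se
    half_pi = t_quantile(p, k - 2) * math.sqrt(tau2 + se**2)
    # I^2 from Cochran's Q with inverse-variance (fixed-effect) weights.
    w0 = [1 / vi for vi in v]
    mu0 = sum(wi * yi for wi, yi in zip(w0, y)) / sum(w0)
    q = sum(wi * (yi - mu0) ** 2 for wi, yi in zip(w0, y))
    i2 = 100 * max(0.0, (q - (k - 1)) / q) if q > 0 else 0.0
    return {"g": mu, "ci": (mu - half_ci, mu + half_ci),
            "pi": (mu - half_pi, mu + half_pi), "tau2": tau2, "i2": i2}


# Hypothetical usage: one symptom-scale study, then pooling five effect sizes.
g, var_g = hedges_g(12.0, 4.0, 40, 13.5, 4.2, 38, higher_is_better=False)
result = random_effects([0.10, 0.35, 0.22, 0.05, 0.48], [0.02, 0.03, 0.025, 0.04, 0.03])
print(round(g, 3), result)
```

The prediction interval is always at least as wide as the confidence interval, since it adds τ² to the squared standard error and uses a t quantile with one fewer degree of freedom.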

A sensitivity analysis was carried out when heterogeneity between studies exceeded 50%. The first approach was to repeat the analysis without statistical outliers: studies were treated as outliers and removed if their 95% confidence interval (CI) lay completely outside the pooled effect. An additional leave-one-out influence analysis was carried out to assess the effect of individual studies on the overall effect; this method omits one study at a time when calculating the pooled effect size (Viechtbauer & Cheung, 2010). Further strategies were adopted to address unit-of-analysis problems, such as multi-arm studies in which several interventions were compared with the same control condition. Such comparisons are not independent and may artificially reduce heterogeneity and distort the size of the pooled effect (Borenstein et al., 2021). The solution adopted was to combine all intervention groups into a single comparison and then recalculate the results (Higgins et al., 2022).
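The three sensitivity steps can be sketched together. To keep the block self-contained, the pooling inside the leave-one-out loop uses the DerSimonian–Laird estimator with a normal-approximation CI, which is a simplification swapped in for this sketch and not the REML/HKSJ model described above. `find_outliers` accepts pooled CI bounds; passing the pooled point estimate as both bounds tests against the point estimate alone. `combine_arms` implements the Cochrane Handbook formula for merging two groups (Higgins et al., 2022). All function names are hypothetical.

```python
import math


def dl_pool(y, v, z=1.96):
    """DerSimonian-Laird random-effects estimate with a normal-approximation CI.

    Simplification for this sketch only; the review used REML with HKSJ.
    """
    w = [1 / vi for vi in v]
    sw = sum(w)
    mu_fe = sum(wi * yi for wi, yi in zip(w, y)) / sw
    q = sum(wi * (yi - mu_fe) ** 2 for wi, yi in zip(w, y))
    c = sw - sum(wi**2 for wi in w) / sw
    tau2 = max(0.0, (q - (len(y) - 1)) / c)
    ws = [1 / (vi + tau2) for vi in v]
    mu = sum(wi * yi for wi, yi in zip(ws, y)) / sum(ws)
    se = math.sqrt(1 / sum(ws))
    return mu, mu - z * se, mu + z * se


def find_outliers(y, v, pooled_lo, pooled_hi, z=1.96):
    """Indices of studies whose 95% CI lies entirely outside [pooled_lo, pooled_hi]."""
    out = []
    for i, (yi, vi) in enumerate(zip(y, v)):
        half = z * math.sqrt(vi)
        if yi + half < pooled_lo or yi - half > pooled_hi:
            out.append(i)
    return out


def leave_one_out(y, v):
    """Pooled estimate recomputed with each study omitted in turn."""
    return [dl_pool(y[:i] + y[i + 1:], v[:i] + v[i + 1:])[0] for i in range(len(y))]


def combine_arms(n1, m1, sd1, n2, m2, sd2):
    """Merge two intervention arms into one group (Cochrane Handbook formula)."""
    n = n1 + n2
    m = (n1 * m1 + n2 * m2) / n
    var = ((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2
           + n1 * n2 / n * (m1**2 + m2**2 - 2 * m1 * m2)) / (n - 1)
    return n, m, math.sqrt(var)


# Hypothetical data: three similar studies and one extreme one.
y, v = [0.20, 0.25, 0.30, 1.50], [0.01] * 4
mu, lo, hi = dl_pool(y, v)
print(find_outliers(y, v, lo, hi))  # [3]
print(leave_one_out(y, v))
print(combine_arms(25, 10.0, 3.0, 27, 11.0, 3.5))
```

Merging arms before computing the effect size means the shared control group is counted only once, which is the point of the unit-of-analysis correction.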

To investigate possible sources of heterogeneity, subgroup analyses were performed for outcome clusters with a sufficient number of studies (k > 10). Four clusters met this criterion: anxiety, depressive symptoms, internalizing symptoms, and protective factors. These clusters were analyzed against the eight previously identified moderators: setting, interaction, support, guidance, digitization, length/duration, adherence, and attrition.
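The text does not state which test was used to compare subgroups. A common choice, assumed here, is the Q-between test: the pooled effects of the subgroups (e.g., the levels of one moderator) are compared with inverse-variance weights, and Q is referred to a chi-square distribution with (number of subgroups − 1) degrees of freedom. The sketch treats subgroup estimates as independent; `chi2_sf` uses the closed-form chi-square tail for integer degrees of freedom, and all names and example values are hypothetical.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability P(X > x) for X ~ chi-square(df), integer df >= 1."""
    if x <= 0:
        return 1.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{k < df/2} (x/2)^k / k!
        term = total = 1.0
        for k in range(1, df // 2):
            term *= (x / 2) / k
            total += term
        return math.exp(-x / 2) * total
    # Odd df: start from the df = 1 tail and add the closed-form terms.
    total = math.erfc(math.sqrt(x / 2))
    term = math.sqrt(2 * x / math.pi) * math.exp(-x / 2)
    for k in range(1, (df - 1) // 2 + 1):
        total += term
        term *= x / (2 * k + 1)
    return total


def subgroup_difference(estimates, ses):
    """Q-between test on subgroup pooled effects (treated as independent)."""
    w = [1 / s**2 for s in ses]
    mean = sum(wi * e for wi, e in zip(w, estimates)) / sum(w)
    q = sum(wi * (e - mean) ** 2 for wi, e in zip(w, estimates))
    df = len(estimates) - 1
    return q, df, chi2_sf(q, df)


# Hypothetical pooled g and SE for school, leisure and mixed settings.
print(subgroup_difference([0.15, 0.32, 0.20], [0.06, 0.08, 0.07]))
```

With only a few studies per subgroup, a non-significant Q-between is weak evidence that a moderator is irrelevant, so such results are best read descriptively.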

Finally, to evaluate potential publication bias, funnel plots were inspected (Peters et al., 2008) and Egger’s test was performed (Egger et al., 1997) to assess funnel plot asymmetry. Where evidence of publication bias was found, the estimate was adjusted using the Duval and Tweedie trim-and-fill procedure (Duval & Tweedie, 2000).
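Egger’s test regresses each study’s standardized effect (g divided by its standard error) on its precision (the inverse of the standard error); an intercept that differs from zero indicates funnel-plot asymmetry. The sketch below computes the intercept, its standard error and the t statistic by ordinary least squares; the p-value would come from a t distribution with k − 2 degrees of freedom and is not computed here, and the trim-and-fill adjustment is not sketched. The function name and data are hypothetical.

```python
import math


def egger_test(y, se):
    """Egger's regression test for funnel-plot asymmetry.

    Regresses the standardized effect (y / se) on precision (1 / se) by
    ordinary least squares and returns the intercept, its standard error,
    the t statistic, and the degrees of freedom (k - 2).
    """
    k = len(y)
    if k < 3:
        raise ValueError("need at least 3 studies")
    x = [1 / s for s in se]
    z = [yi / s for yi, s in zip(y, se)]
    mx, mz = sum(x) / k, sum(z) / k
    sxx = sum((xi - mx) ** 2 for xi in x)
    slope = sum((xi - mx) * (zi - mz) for xi, zi in zip(x, z)) / sxx
    intercept = mz - slope * mx
    resid = [zi - (intercept + slope * xi) for xi, zi in zip(x, z)]
    s2 = sum(r * r for r in resid) / (k - 2)
    se_int = math.sqrt(s2 * (1 / k + mx**2 / sxx))
    t = intercept / se_int if se_int > 0 else float("nan")
    return intercept, se_int, t, k - 2


# Hypothetical data in which smaller studies (larger SE) report larger effects.
se = [0.1, 0.2, 0.3, 0.4, 0.5]
y = [0.3 + s + e for s, e in zip(se, [0.01, -0.01, 0.01, -0.01, 0.0])]
print(egger_test(y, se))
```

Because the test has little power with few studies, a non-significant intercept in a small cluster does not by itself rule out publication bias.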

Results

Study Characteristics

The final sample included 27 studies. Eleven studies (41%) were implemented in Europe, 2 (7%) in Asia, 1 (4%) in Africa, 6 (22%) in North America and 7 (26%) in Australia. More than half of the studies (15/56%) reported significant intervention effects favoring the intervention group; of these, one third were targeted (5/33%) and two thirds universal interventions (10/67%). The remaining studies found no significant between-group intervention effects, although three of them (11%) reported significant within-group effects (#16, #25 and #26). Table 3 and the following narrative synthesis summarize the studies’ key characteristics and specifically address the first research question: What digital-based interventions promoting mental health are available for children and adolescents aged 11 to 18?

General study design details

The RCT design was the most common (14/52%); about a fifth of the studies were cluster-RCTs (6/22%), and slightly more than a quarter were non-randomized controlled trials (CTs; 7/26%). A no-intervention control group, in which participants were not exposed to any intervention during the study period, was the preferred option (20/74%). About one quarter (7/26%) used an alternative program that matched the intervention in duration and extent. In three of these cases (#4, #15, #19), both conditions led to improvements in outcome measures, with non-significant between-group effects. For the great majority of studies (20/74%), intervention delivery was fully digital; the rest opted for partly digital delivery (7/26%). All but two studies delivered their interventions either fully (18/67%) or partly (7/26%) online (Fig. 2); offline refers to a mode of delivery for which no internet connection was necessary. The media used varied greatly, as shown in Fig. 3.

Participant characteristics

Participants’ mean age ranged from 10.9 to 17.9 years (M = 14.65). Study #4 was excluded from this calculation, as the authors reported only the age range (15–24 years). Sample sizes ranged from 22 to 1841 participants, giving a total of 13,857 participants at baseline and 13,216 in the final analyses. Studies #1, #22 and #25 did not report gender details. The remaining 24 studies (n = 9654) included 5313 (55%) female and 4176 (43%) male participants in their analyses. Only three of them (#4, #8, #14) reported additional gender identity options (e.g., nonbinary or transgender); these accounted for 165 (2%) of all analyzed participants.

Gender was not found to significantly moderate intervention effectiveness. A few gender differences were reported at baseline: females showed higher initial overall stress and lower academic buoyancy (#21), higher stress vulnerability and more frequent use of social support (#9), and more frequent and longer logons as well as higher program engagement (#22). Males, in contrast, reported more frequent use of avoidant coping (#9). Age affected intervention effectiveness in 4 (27%) of the studies that yielded significant results. On the one hand, younger adolescents were more likely to be absent at follow-up (#18) and showed smaller improvements in coping with stress (#24) than older adolescents. On the other hand, younger participants were more likely to log on or post questions (#22), and showed greater decreases in depressive symptoms and greater improvements in happiness and mental well-being (#19) than older adolescents.

One study (#4) focused on American Indian and Alaska Native youth. Three studies (11%) focused on specific gender or sexual identity groups, namely females (#23), males (#11) and sexual and gender minority youth (#8). Aside from gender, the most commonly measured sociodemographic characteristics were ethnicity (10/37%) and socio-economic status (9/33%); neither was reported to moderate the impact of the intervention. Participants were predominantly white and resided in the country where the intervention was implemented (as this was usually one of the inclusion criteria).

Easy access to, or possession of, a device (e.g., phone, tablet, computer) was an inclusion criterion in about one third of the studies (8/30%); not surprisingly, these were all non-school-based studies. Most studies opted for universal (22/81%) rather than targeted (5/19%) interventions. Studies with targeted interventions used (mental) health-related inclusion criteria, namely low levels of resilience (#26), mild or more severe emotional or mental health problems (#12), past experiences of cyberbullying (#8, #14), and a diagnosed chronic physical illness (#6).

Participants differed in their baseline levels of outcome measures. In line with previous research (Swain et al., 2015), participants with elevated baseline levels of clinical symptoms and those with low baseline levels of protective factors (e.g., resilience) seemed to benefit more from an intervention. In the current sample, this was true for elevated levels of depressive symptoms (#12, #19) and stress (#21), and for low levels of resilience (#26). In contrast, study #5 found that adolescents with low and medium anxiety levels experienced an improvement in self-esteem, whereas adolescents with high anxiety did not. In general, however, studies found that the higher the baseline scores (emotional intelligence, self-esteem, affect balance, and prosocial behavior), the smaller the change. Furthermore, some authors suggested that the low levels of stress (#1), anxiety (#3), and distress (#13, #25) reported by their participants might explain why no intervention efficacy was found.

Setting

The setting was classified as homeschool, school, leisure-based or mixed ([home-]school- and leisure-based), as shown in Table 5. The studies comprised a balanced mix of school-based (10/37%, of which 2/7% homeschooling), leisure-based (10/37%) and mixed (7/26%) interventions.

Risk of Bias

None of the 27 studies was rated at high (RoB) or critical (ROBINS-I) risk of bias. Most of the randomized trials were rated as low risk (15/75%), and one quarter raised some concerns (5/25%). For more than half (4/57%) of the non-randomized trials, the risk of bias was rated moderate, whereas the remaining studies were rated at serious risk (3/43%). The studies reviewed relied solely on self-reported measures, and blinding participants was close to impossible, as is the case in psychotherapy research in general (Edridge et al., 2020). Therefore, these criteria were not applied as strictly as they would be for clinical or medical trials. Figures 4 and 5 depict summary plots created with Robvis (McGuinness & Higgins, 2021) and provide more detail on the distribution of judgements.

Intervention Characteristics

Availability

The availability of the interventions was assessed based on their online accessibility. Three studies (11%; #6, #11, #15) used live sessions; these intervention programs are not available online. About half of the programs (13/48%) are available to students, either openly and free of charge (9/69%) or through an institution such as a school (4/31%), in which case they are provided free of charge (2/15.5%) or for a fee (2/15.5%). For the remaining studies (11/41%), the reported weblinks returned error messages (3/27%), or no links were reported and the programs could not be found (8/73%; Supplementary Material 2).

Domains and measures

As described above, defining mental health is challenging, and this is mirrored in the diversity of approaches to its promotion in the current sample. Only one study (#9) focused on a single MH domain, namely stress; all others targeted multiple areas of MH. Among these, some (8/30%) specifically reported focusing on two domains, while others (18/70%) aimed to promote mental health in a more general sense. This was reflected in the heterogeneity and number of outcome measures applied. The outcomes most frequently measured were anxiety (11/41%), depressive symptoms (10/37%), internalizing symptoms (8/30%), well-being (7/26%), stress (6/22%), and help-seeking behavior (6/22%). Externalizing symptoms (5/19%), resilience (3/11%), mindfulness (2/7%), and intrapersonal factors such as self-efficacy (4/15%) or self-esteem (3/11%) were measured less often.

The interventions likewise comprised a broad spectrum of activities. Psychoeducational elements (e.g., MH definitions, descriptions, symptoms, treatment options, information on MH domains, links to MH web content or external resources) were by far the most common components (23/85%), followed by elements encouraging help-seeking behavior (13/48%), mindfulness practices (8/30%), reflective questions and problem solving (8/30%), opportunities for peer exchange (8/30%), coping skills training (7/26%), and mood ratings or check-ins (5/19%). The most frequently used theoretical frameworks were cognitive behavioral therapy (CBT, 6/22%), mindfulness (5/19%), growth mindset theory (3/11%), and social(-emotional) learning theory (3/11%). Since intervention length, personal interaction, professional support and guidance are particularly relevant to intervention impact, these indicators were examined more closely.

Intervention length

Exposure to the intervention varied widely across studies. Some interventions consisted of a single session (4/15%), others included multiple sessions (17/63%), and some had no specified sessions (6/22%). The majority of the studies (14/54%) used an intervention period of 2 to 8 weeks. Four studies (15%) used single-session interventions (approx. 60 min), and one (#25) did not specify its duration. The remaining studies (8/30%) reported longer intervention periods ranging from 12 to 48 weeks. In summary, the studies analyzed and reported intervention duration differently, and some did not have structured sessions. To account for this variability, intervention periods were classified as long (>3 months), medium (2–3 months), short (1 month), or single-session interventions.
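Once week boundaries are fixed, the four duration categories can be applied as a simple rule. The sketch below is illustrative only: the section names the categories but not their exact boundaries in weeks, so `SHORT_MAX_WEEKS` and `MEDIUM_MAX_WEEKS` are hypothetical assumptions, and the helper `classify_duration` is not the review's actual coding procedure.

```python
from typing import Optional

# Hypothetical boundaries (assumptions, not taken from the review).
SHORT_MAX_WEEKS = 6     # "short": roughly one month of exposure
MEDIUM_MAX_WEEKS = 13   # "medium": roughly two to three months


def classify_duration(sessions: Optional[int], weeks: Optional[float]) -> str:
    """Assign a study to one of the duration categories used in the review."""
    if sessions == 1:
        return "single session"
    if weeks is None:
        # e.g. a study that did not specify its intervention period
        return "not specified"
    if weeks <= SHORT_MAX_WEEKS:
        return "short"
    if weeks <= MEDIUM_MAX_WEEKS:
        return "medium"
    return "long"  # more than about three months


print(classify_duration(1, None))   # single session
print(classify_duration(8, 4))      # short
print(classify_duration(None, 24))  # long
```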

Level of interaction

The level of interaction refers to the nature of the feedback received and to the opportunities for participant interaction within the intervention. Only a small minority (3/11%) of the described interventions used no means of engagement at all (level 0). More than half (15/56%) used some form of interaction, such as peer feedback, automated responses, and/or little (or no) exchange with professionals (level 1). One third (9/33%) exhibited a considerable level of interaction (level 2), meaning that the intervention involved adaptive, individually tailored, computerized responses or considerable interaction with peers or professionals.

Level of professional support

The level of support relates to the involvement of a professional in the intervention. One quarter (7/26%) of the studies made no mention of professional support (level 0). Some level of support by a professional, e.g. a teacher or research assistant, or supervision by a mental health professional was integrated into almost half the studies (13/48%, level 1). The remaining quarter (7/26%) reported on the use of more substantial support, whereby a mental health professional was present or actively involved throughout the intervention (level 2).

Level of guidance

The level of guidance refers to the amount of structure and direction given on how and when to practice or use a certain tool or program. Relatively few studies (3/11%) provided no structure or guidance (level 0). About half of the studies (14/52%) used interventions that were mostly self-directed, with only limited prompts or reminders to encourage participants’ adherence (level 1). Guidance was classified as considerable (level 2) when more than 50% of the protocol was guided, when modules and activities followed a set structure, or when the intervention was supervised at fixed times within an institution such as a school. This was the case for a little over a third of the studies (10/37%).
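Each level variable (interaction, professional support, guidance) is an ordinal code from 0 to 2 per study, and the text reports frequencies as "n/%" over the 27 studies, with percentages rounded to whole numbers. A minimal sketch of that tabulation, using a hypothetical helper `tabulate_levels` and the guidance counts given above (3, 14 and 10 studies), might look like this:

```python
from collections import Counter
from typing import Dict, List


def tabulate_levels(levels: List[int]) -> Dict[int, str]:
    """Return 'n/%' strings per level code, e.g. {1: '14/52%'}."""
    counts = Counter(levels)
    total = len(levels)
    return {
        level: f"{n}/{round(100 * n / total)}%"
        for level, n in sorted(counts.items())
    }


# Guidance codes for the 27 studies, using the counts reported above:
# 3 studies at level 0, 14 at level 1, 10 at level 2.
guidance = [0] * 3 + [1] * 14 + [2] * 10
print(tabulate_levels(guidance))  # {0: '3/11%', 1: '14/52%', 2: '10/37%'}
```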

Level of digitization

Regarding the level of digitization, a distinction was made between level 1 interventions, which had a face-to-face (F2F) component and were partly digital (7/26%), and level 2 interventions, which were fully digital (20/74%). No level 0 was defined, as some degree of digitization was a criterion for inclusion in the sample. Figure 6 shows how the levels of interaction, professional support, guidance and digitization coincided with significant intervention outcomes. As expected, more intensive interaction and professional support were associated with larger intervention effects. Fully digital interventions also reported larger effects. The results of the subgroup analysis are reported below (Table 5).

Adherence and Attrition

Attrition refers to participant dropout. Adherence pertains to user engagement, in other words, how well participants complied with the intervention protocol. Both adherence and attrition are major concerns in mental health intervention studies (Sousa et al., 2020). Higher engagement often leads to greater effectiveness; this has been reported in prior research and is also found in the current sample (studies #2, #11). Reporting on adherence differed greatly between studies, and some studies (5/19%) did not predefine an adherence criterion at all. Nevertheless, a distinction was drawn between rather consistent and rather inconsistent levels of adherence. Engagement was rated as rather inconsistent when participants completed less than 50% of tasks/days/modules/activities, or when the authors themselves reported app/program usage to be low. A little less than half of the studies (13/48%) reported consistent levels of adherence, one third (9/33%) inconsistent levels, and four (15%) did not report on adherence at all. Study #19 reported different adherence levels for its intervention groups, with inconsistent adherence in the leisure-based group and consistent adherence in the school-based group.
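The adherence rule described above can be written as a small decision function. This is a sketch under stated assumptions: `completion_share` (the proportion of tasks, days, modules or activities completed) and the flag `reported_low_by_authors` are hypothetical inputs that would have to be extracted from each study by hand.

```python
from typing import Optional


def classify_adherence(completion_share: Optional[float],
                       reported_low_by_authors: bool = False) -> str:
    """Code adherence as rather consistent, rather inconsistent, or not reported.

    completion_share: proportion (0-1) of tasks/days/modules/activities
    completed, or None if the study gives no usable adherence data.
    """
    if completion_share is None and not reported_low_by_authors:
        return "not reported"
    # Rule from the review: <50% completion, or usage described as low by the
    # study authors themselves, counts as rather inconsistent adherence.
    if reported_low_by_authors or completion_share < 0.5:
        return "rather inconsistent"
    return "rather consistent"


print(classify_adherence(0.7))  # rather consistent
print(classify_adherence(0.3))  # rather inconsistent
```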

Closely related to the concept of adherence is that of attrition. The level of attrition relates to the overall dropout rate of participants from baseline to post-test or follow-up. A dropout of 20% or less was considered low attrition (level 1), and a dropout of 21% and above was designated as concerning attrition (level 2). These cut-offs were chosen in accordance with previous findings suggesting that concern for bias is warranted when attrition rates exceed 20% (Marcellus, 2004). In the current sample, overall attrition rates ranged between 0 and 58.4% (M = 25.2%) and, owing to a lack of specific reporting, were computed by the reviewers in most cases. Two studies (#9, #25) could not be taken into account due to insufficient data, and two reported zero attrition (#15, #19). Generally speaking, mean attrition rates increased across time points (T2: M = 19.7%; T3: M = 26.2%; T4: M = 33.6%). At post-test (T2; n = 25 studies), low levels of attrition were found for more than half of the studies (15/60%) and concerning levels for the rest (10/40%). At follow-up (T3; n = 11), low levels were found for about a third (4/36%) and concerning levels for almost two thirds (7/64%). Of the studies with a second follow-up (T4; n = 3), two (67%) showed concerning levels.
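Because the reviewers computed attrition themselves for most studies, the calculation is worth making explicit. The sketch assumes attrition is the percentage of the baseline sample missing at a later time point, and it treats any value above 20% as concerning, which is how the "21% and above" cut-off is read here for non-integer rates. `attrition_rate` and `attrition_level` are hypothetical helper names, and the sample sizes in the usage line are made up.

```python
def attrition_rate(n_baseline: int, n_retained: int) -> float:
    """Percentage of participants lost between baseline and a later time point."""
    if n_baseline <= 0:
        raise ValueError("baseline sample size must be positive")
    return 100 * (n_baseline - n_retained) / n_baseline


def attrition_level(rate_percent: float) -> int:
    """Level 1 (low) for dropout of 20% or less, level 2 (concerning) above 20%."""
    return 1 if rate_percent <= 20 else 2


# Hypothetical study: 250 participants at baseline, 190 at post-test.
rate = attrition_rate(250, 190)
print(rate, attrition_level(rate))  # 24.0 2
```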

Meta- and Sensitivity Analysis

Separate analyses were conducted for each cluster to address the second research question, namely how effective these interventions are. As mentioned above, seven clusters were created: anxiety, depressive symptoms, externalizing symptoms, internalizing symptoms, protective factors, stress, and well-being (Supplementary Material 1). The outcome measures in the well-being, anxiety, depressive symptoms and stress clusters were largely homogeneous, meaning that they specifically measured the MH domain in question. The other clusters consisted of more heterogeneous measures that were combined because of their conceptual relatedness. The internalizing symptoms cluster included measures of emotional symptoms, internalizing behavioral problems or rumination. The externalizing symptoms cluster contained measures of hyperactivity, behavioral problems or difficulties. Lastly, the protective factors cluster contained measures of self-esteem, self-efficacy or help-seeking behavior.

Results were interpreted separately for each cluster. This entailed examining the observed pooled effects in forest plots (Figs. 7–13), potential publication bias as indicated by funnel plot asymmetry (Supplementary Material 3) and by Egger’s test (Supplementary Material 4), and heterogeneity together with the strategies adopted to correct for it in the sensitivity analysis (Table 4 and Supplementary Material 5). Additional data were needed from 15 studies, so their authors were contacted. Data were provided for studies #1, #5, #7, #8, #12 and #18. As no additional information was obtained for the remaining nine studies (either no data [#9, #14, #25] or no response [#2, #4, #13, #21, #22, #26]), they were not part of the following analyses.
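Effect sizes in the meta-analysis are expressed as Hedges’ g. As a sketch of how g is conventionally computed from group means and standard deviations (the standard small-sample-corrected formula, as given for example by Borenstein et al., 2021), the hypothetical function below returns g and its sampling variance. The `higher_is_better` flag is an assumption about how symptom scales were sign-aligned so that positive values favor the intervention; the section does not state this explicitly.

```python
import math


def hedges_g(m_int, sd_int, n_int, m_ctl, sd_ctl, n_ctl, higher_is_better=True):
    """Hedges' g (intervention vs. control) and its sampling variance."""
    df = n_int + n_ctl - 2
    # Pooled within-group standard deviation
    sd_pooled = math.sqrt(((n_int - 1) * sd_int**2 + (n_ctl - 1) * sd_ctl**2) / df)
    d = (m_int - m_ctl) / sd_pooled
    if not higher_is_better:
        # For symptom scales, lower scores are better: flip the sign so that
        # a positive g always favors the intervention (assumed convention).
        d = -d
    j = 1 - 3 / (4 * df - 1)  # small-sample correction factor J
    var_d = (n_int + n_ctl) / (n_int * n_ctl) + d**2 / (2 * (n_int + n_ctl))
    return j * d, j**2 * var_d


# Hypothetical group summaries; 95% CI from the normal approximation
g, var_g = hedges_g(10.0, 2.0, 20, 9.0, 2.0, 20)
ci = (g - 1.96 * math.sqrt(var_g), g + 1.96 * math.sqrt(var_g))
```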

Anxiety

For studies in the anxiety cluster (n = 11), the pooled effect size was significant and of small-to-medium magnitude, Hedges’ g = 0.37, 95% CI [0.04, 0.70], p = 0.031 (Fig. 7). Heterogeneity was high, with a significant I² of 91% (95% CI [87, 94], p < 0.001), which was also reflected in the wide prediction interval (95% PI [−0.73, 1.47]); negative intervention effects therefore cannot be ruled out for future studies. Because heterogeneity was substantial, a sensitivity analysis was conducted. The influence analysis identified one study as the most influential (#23) and one that increased heterogeneity (#27), which was also an outlier. After removing outliers and re-running the analysis, heterogeneity fell and was no longer significant (I² = 29%, 95% CI [0, 67], p = 0.183), while the pooled effect size decreased but remained significant, g = 0.16, 95% CI [0.02, 0.31]. No evidence of publication bias was found, either in the funnel plots or in Egger’s test.

Depressive symptoms

Studies in the depressive symptoms cluster (n = 11) revealed a small, non-significant pooled effect size, g = 0.19, 95% CI [−0.09, 0.46] (Fig. 8). Heterogeneity was high (I² = 87%, 95% CI [79, 92], p < 0.001), implying considerable uncertainty when predicting further results (95% PI [−0.71, 1.08]). Both approaches adopted in the sensitivity analysis pointed in the same direction, identifying the same highly influential outlier study as in the anxiety cluster (#23). Omitting this study reduced heterogeneity (I² = 46%, 95% CI [0, 74], p = 0.054), at the expense of an even smaller, non-significant effect size, g = 0.07, 95% CI [−0.05, 0.19]. Funnel plots and Egger’s test showed no indication of publication bias.
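The influence analysis can be sketched as a leave-one-out procedure: the cluster is re-pooled k times, each time omitting one study, and the omission that most reduces I² or shifts the pooled g points to an influential study such as #23. The helper names, the DerSimonian-Laird pooling and the example values are assumptions for illustration; at least three studies are needed so that each re-pooled set keeps two.

```python
def dl_pool(effects, variances):
    """Return (pooled g, I2 in %) from a DerSimonian-Laird random-effects model."""
    k = len(effects)
    w = [1 / v for v in variances]
    mu_f = sum(a * b for a, b in zip(w, effects)) / sum(w)
    q = sum(a * (b - mu_f) ** 2 for a, b in zip(w, effects))
    c = sum(w) - sum(a * a for a in w) / sum(w)
    tau2 = max(0.0, (q - (k - 1)) / c)
    w_star = [1 / (v + tau2) for v in variances]
    mu = sum(a * b for a, b in zip(w_star, effects)) / sum(w_star)
    i2 = 100 * max(0.0, (q - (k - 1)) / q) if q > 0 else 0.0
    return mu, i2


def leave_one_out(effects, variances, labels):
    """Re-pool the cluster once per study, omitting that study each time."""
    results = {}
    for i, label in enumerate(labels):
        eff = effects[:i] + effects[i + 1:]
        var = variances[:i] + variances[i + 1:]
        results[label] = dl_pool(eff, var)
    return results


effects = [0.10, 0.15, 0.12, 1.20]   # hypothetical g values
variances = [0.01] * 4
for label, (g, i2) in leave_one_out(effects, variances, ["S1", "S2", "S3", "S4"]).items():
    print(f"without {label}: g = {g:.2f}, I2 = {i2:.0f}%")
```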

Externalizing symptoms

The externalizing symptoms cluster included only six studies, so its results should be interpreted with caution. They diverge from those of the other clusters, since here the effect size favors the control rather than the intervention group, although it is not statistically significant, g = −0.22, 95% CI [−0.89, 0.45] (Fig. 9). Heterogeneity was also high within this cluster, I² = 93%, 95% CI [87, 96], 95% PI [−2.08, 1.64], p < 0.001. According to the sensitivity analysis, study #23 was again an outlier. Its removal brought the effect size to a positive value, although it remained non-significant, g = 0.03, 95% CI [−0.89, 0.45]. Notably, the removed study accounted for all of the heterogeneity in the cluster, since after its omission I² was zero and non-significant, 95% CI [87, 96], p = 0.535. Egger’s test detected no signs of publication bias.
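The section does not spell out its outlier criterion. A simple and widely used rule, assumed here, flags a study as an outlier when its own 95% CI lies entirely outside the 95% CI of the pooled effect. `find_outliers` is a hypothetical helper, and the numbers in the usage line are made up.

```python
import math


def find_outliers(effects, variances, pooled_ci, z=1.96):
    """Return indices of studies whose 95% CI does not overlap the pooled 95% CI."""
    lo_pool, hi_pool = pooled_ci
    flagged = []
    for i, (g, v) in enumerate(zip(effects, variances)):
        half = z * math.sqrt(v)
        lo, hi = g - half, g + half
        # Entirely below or entirely above the pooled interval
        if hi < lo_pool or lo > hi_pool:
            flagged.append(i)
    return flagged


print(find_outliers([0.2, 2.0, -1.0], [0.04, 0.01, 0.01], (0.04, 0.70)))  # [1, 2]
```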

Internalizing symptoms

Studies in the internalizing symptoms cluster (n = 10) revealed a small, non-significant pooled effect size, g = 0.03, 95% CI [−0.11, 0.18] (Fig. 10). Heterogeneity between studies was high and significant (I² = 72%, 95% CI [47, 85], p < 0.001), although the prediction interval was not as wide as in other clusters, 95% PI [−0.36, 0.42]. No outliers were detected in the sensitivity analysis. However, omitting one influential study (#18) identified in the influence analysis lowered heterogeneity to an I² of 40%, 95% CI [0, 73], p = 0.099. The pooled effect size remained small and non-significant, although its sign changed, g = −0.02, 95% CI [−0.16, 0.12]. Egger’s test revealed no indication of publication bias.

Protective factors

Studies in the protective factors cluster (n = 11) showed a medium-sized but non-significant pooled effect, g = 0.40, 95% CI [−0.07, 0.88] (Fig. 11). Heterogeneity was extremely high and significant (I² = 99%, 95% CI [98, 99], p < 0.001), giving low predictive power for further research, 95% PI [−1.26, 2.07]. Both adjustment strategies adopted in the sensitivity analysis pointed to the same study (#18), which was both an outlier and the most influential study. Removing this study reduced heterogeneity substantially, to an I² of 12%, 95% CI [0, 53]. Interestingly, removing the study also affected the pooled effect size considerably: it became smaller but significant, g = 0.13, 95% CI [0.05, 0.21], p = 0.006. Egger’s test revealed no indication of publication bias.

Stress

As the stress cluster contained only seven studies, its results require considerable caution. The pooled effect size was small and non-significant, g = 0.13, 95% CI [−0.04, 0.31] (Fig. 12). Heterogeneity was moderate-to-large, with an I² of 61% (95% CI [11, 83]; 95% PI [−0.26, 0.52]), so a sensitivity analysis was conducted in this case as well. While no outliers were detected, omitting one study (#10) led to a marked reduction in heterogeneity, to an I² of 42%, 95% CI [0, 77]. The effect size changed little and remained small and non-significant, g = 0.09, 95% CI [−0.09, 0.27]. This was the only cluster in which Egger’s test indicated a risk of publication bias, intercept = −2.89, 95% CI [−4.69, −1.10], p = 0.025. As an adjustment strategy, the Duval and Tweedie trim-and-fill procedure was applied, which imputed two additional studies. As a result, the effect size increased slightly and became significant, g = 0.20, 95% CI [0.00, 0.40], p = 0.049.
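Egger’s test regresses each study’s standardized effect (g divided by its standard error) on its precision (the inverse of the standard error); an intercept far from zero indicates funnel plot asymmetry. A minimal ordinary-least-squares sketch follows, with `eggers_test` as a hypothetical helper name. The two-sided p-value would come from a t distribution with k − 2 degrees of freedom (for example via `scipy.stats.t.sf`), which is omitted here to keep the example dependency-free; the trim-and-fill adjustment is not shown.

```python
import math


def eggers_test(effects, variances):
    """Egger's regression of g / SE on 1 / SE.

    Returns (intercept, standard error of the intercept, t statistic).
    """
    se = [math.sqrt(v) for v in variances]
    y = [g / s for g, s in zip(effects, se)]   # standardized effects
    x = [1 / s for s in se]                    # precision
    k = len(y)
    x_bar, y_bar = sum(x) / k, sum(y) / k
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    intercept = y_bar - slope * x_bar
    resid = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    sigma2 = sum(r * r for r in resid) / (k - 2)   # residual variance
    se_int = math.sqrt(sigma2 * (1 / k + x_bar ** 2 / sxx))
    return intercept, se_int, intercept / se_int
```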

Well-being

Studies in the well-being cluster (n = 8) revealed a small but significant pooled effect size, g = 0.12, 95% CI [0.06, 0.18], p = 0.003 (Fig. 13). No heterogeneity was detected among the included studies (95% CI for I² [0, 68]), and the prediction interval was narrow, 95% PI [0.02, 0.22]. This suggests that, in this case, a fixed-effect model would have been more appropriate than a random-effects model. Egger’s test revealed no indication of publication bias.
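Under the fixed-effect model suggested for this cluster, each study is weighted by the inverse of its sampling variance, as in the sketch below (a hypothetical helper `fixed_effect`, with made-up inputs). When the estimated τ² is zero, random-effects weights reduce to these same inverse-variance weights, so the two models then give the same pooled g.

```python
import math


def fixed_effect(effects, variances, z=1.96):
    """Inverse-variance fixed-effect pooled estimate and its 95% CI."""
    w = [1 / v for v in variances]
    mu = sum(wi * yi for wi, yi in zip(w, effects)) / sum(w)
    se = math.sqrt(1 / sum(w))
    return mu, (mu - z * se, mu + z * se)


mu, ci = fixed_effect([0.10, 0.30], [0.01, 0.01])  # hypothetical studies
```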

Subgroup Analysis: Facilitators and Barriers

To answer the third research question (What are the factors underlying their effectiveness?), eight moderators were identified and included in the subgroup analysis: level of interaction, level of professional support, level of guidance, level of digitization, duration of intervention, level of adherence, level of attrition, and setting. Only significant subgroup/moderator results are reported here; further detail is provided in Table 5.
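Each moderator analysis compares pooled effects across the levels of that moderator. One standard way to test whether subgroups differ, assumed here because the section reports only the resulting p-values, is the between-subgroups Q statistic: each subgroup’s pooled g is weighted by the inverse of its variance, and Q_between is compared against a chi-square distribution with (number of subgroups − 1) degrees of freedom. The helper `subgroup_q_between` and its input values are hypothetical.

```python
def subgroup_q_between(groups):
    """Between-subgroups heterogeneity statistic Q_between.

    groups: mapping of subgroup name -> (pooled g, variance of pooled g).
    Returns (Q_between, degrees of freedom = number of subgroups - 1).
    """
    weights = {name: 1 / var for name, (_, var) in groups.items()}
    total = sum(weights.values())
    g_overall = sum(weights[name] * g for name, (g, _) in groups.items()) / total
    q_between = sum(
        weights[name] * (g - g_overall) ** 2 for name, (g, _) in groups.items()
    )
    return q_between, len(groups) - 1


# Hypothetical subgroup estimates (not the review's data)
q, df = subgroup_q_between({"school": (0.5, 0.01), "leisure": (0.1, 0.01)})
```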

The setting moderated the effects in two clusters, anxiety (p < 0.001) and internalizing symptoms (p < 0.001). Effects were significantly higher when the intervention was school-based (anxiety: g = 0.51, 95% CI [−0.07, 1.09]; internalizing symptoms: g = 0.25, 95% CI [0.13, 0.37]). In both clusters, effects were lowest when the intervention was leisure-based (anxiety: g = 0.04, 95% CI [−0.17, 0.25]; internalizing symptoms: g = −0.06, 95% CI [−0.13, 0.02]). For anxiety, a homeschooling setting yielded an even higher effect size (g = 0.86, 95% CI [0.72, 0.99]), although only one study fell into this category. Finally, for internalizing symptoms, a mixed setting yielded a modest effect, g = 0.20, 95% CI [−0.36, 0.76].

As for professional support, moderating effects were found within the anxiety cluster (p = 0.019). Effects were significantly higher for studies that reported some level of support (g = 0.59, 95% CI [−0.02, 1.20]) than for studies providing considerable support (g = 0.31, 95% CI [0.19, 0.43]) or no support (g = 0.07, 95% CI [−0.18, 0.33]).

With regard to the level of guidance, effects within the protective factors cluster were significantly higher (p < 0.001) when the intervention was administered with some guidance (g = 0.66, 95% CI [−0.60, 1.93]) than with considerable guidance (g = 0.31, 95% CI [−0.29, 0.91]) or no guidance (g = 0.11, 95% CI [0.03, 0.18]).

The level of adherence significantly moderated the effects in the depressive symptoms cluster (p < 0.001). Studies with consistent adherence showed a greater effect size (g = 0.31, 95% CI [−0.23, 0.85]) than studies indicating inconsistent adherence (g = 0.18, 95% CI [0.07, 0.28]) and studies that did not report the level of adherence (g = −0.09, 95% CI [−0.52, 0.35]).

Lastly, the level of attrition significantly moderated effect size in the anxiety cluster, p = 0.007. Studies with a low level of attrition (≤20%) revealed a greater effect size (g = 1.17, 95% CI [−2.96, 5.30]) than studies with a concerning level of attrition (g = 0.14, 95% CI [−0.04, 0.33]) or studies showing no attrition at all (g = 0.28, 95% CI [−0.75, 1.30]).

Discussion

Digital tools are increasingly being used to counteract the declining mental health of adolescents (Bergin et al., 2020). The range and variety of these tools are growing rapidly, and more and more studies report on their potential and value (Lucas-Thompson et al., 2019; Sommers-Spijkerman et al., 2021). An updated overview of tools and programs is therefore essential. This systematic review examined digital and/or online evidence-based prevention programs for the promotion of mental health in young people aged 11 to 18 years. In total, 27 studies met the inclusion criteria. Half of these studies reported significant effects in improving mental health. A meta-analysis based on post-intervention measurements, with a total sample of 13,216 participants, was performed to estimate the effectiveness of the interventions and to examine the impact of underlying, predefined factors.

In line with prior research, the results of the meta-analysis partially support the low-to-medium effectiveness of digital mental health promotion programs. In particular, small effects were identified for a decrease in anxiety and an increase in well-being, a finding consistent with previous research (Clarke et al., 2015; Harrer et al., 2019; Sevilla-Llewellyn-Jones et al., 2018). This seems particularly relevant given that anxiety is one of the most prevalent mental disorders in childhood and adolescence (Polanczyk et al., 2015). Noh and Kim (2022) did not find beneficial effects with respect to anxiety prevention; they attribute this to the fact that they reviewed studies of general and at-risk populations, whereas Sevilla-Llewellyn-Jones et al. (2018), for example, reviewed studies of clinical populations, in which there is likely to be greater room for improvement in the relevant mental health domain (Noh & Kim, 2022).

After outlier removal, small effects were also detected for the promotion of protective individual factors, including self-esteem, self-compassion, and help-seeking behavior. Contrary to the results of previous research (Clarke et al., 2015; Harrer et al., 2019; Sevilla-Llewellyn-Jones et al., 2018), no significant effects were found for depressive symptoms, stress, externalizing symptoms (e.g., hyperactivity, behavioral problems), or internalizing symptoms (e.g., loneliness, rumination, emotional difficulties).

Regarding the impact of underlying predefined factors, school-based interventions with consistent adherence, low levels of attrition, and some level of professional support and guidance were found to be most effective. These findings confirm previous research showing that schools are an appropriate setting for promoting and supporting mental health in children and adolescents (e.g., Cilar et al., 2020). A school-based setting, some level of professional support, and low levels of attrition were the most beneficial for anxiety relief. A school-based setting was also the most effective for improving internalizing symptoms, alongside a mixed setting, which likewise generated modest beneficial effects. Consistent adherence was associated with the greatest effects on depressive symptoms, and some level of guidance, in contrast to considerable or no guidance, provided the most benefit for enhancing protective factors. This result is partially consistent with the findings of Sevilla-Llewellyn-Jones et al. (2018), who identified studies in which engagement increased and outcomes improved when adherence was augmented by, for example, a guided diagnostic procedure or feedback from a mental health professional (Kauer et al., 2012).

Even though individual attrition rates reached up to 58% in the current sample, the mean attrition rate at the most recent time point (M = 25.2%) remained below that reported in the literature for internet-based treatment programs (weighted M = 31%; Melville et al., 2010). However, dropout in the current sample was at a concerning level (>20%) for about half of the studies. This should not be neglected, as high attrition rates may lead to an underestimation of the impact of the intervention (Eysenbach, 2005). High attrition is nothing new in school-based research, and considerable attention and effort have to be devoted to obtaining teachers’ and students’ compliance in order to retain as many participants as possible (Calear et al., 2016). As mentioned above, one facilitator of efficacy is the level of guidance: some guidance, rather than none or considerable guidance, was found to be most beneficial. This finding might be related to participants’ choice and to higher motivation and engagement (Burckhardt et al., 2015), which are assumed to be present whenever there is a balance between sufficient instruction and the freedom to choose ways of engaging. Considerable guidance might even be a barrier to effective intervention implementation, especially when the guidance, and thus time and effort, must be provided by teachers (Fridrici & Lohaus, 2009). Guidance features therefore need to be designed so that no additional strain is placed on teachers during program implementation. At the same time, the age of the target group must be carefully considered when designing an intervention, as age has been found to significantly moderate intervention effects (e.g., Osborn et al., 2020; Sousa et al., 2020). It is advisable to focus on a narrow rather than a broad age range, as the needs of older and younger adolescents may differ considerably in terms of the pace or challenge of an activity (Egan et al., 2021).

Another potential barrier to detecting efficacy relates to the choice of an adequate control condition. In three of the seven studies in the current sample that used an alternative intervention program as the control condition, both conditions led to improvements in outcome measures, resulting in non-significant between-group effects and possibly making intervention effects harder to detect. Considerable discernment is therefore called for, particularly when opting for an alternative intervention in place of the more common (waitlist) control group.

Additionally, participants’ baseline levels can be a potential barrier to detecting efficacy. Participants reporting higher levels of distress showed greater improvements in targeted outcome measures (Kauer et al., 2012; Osborn et al., 2020; Puolakanaho et al., 2019), and it has been argued that low levels of distress may explain why some interventions were ineffective (Bohleber et al., 2016; Calear et al., 2016; Kenny et al., 2020; van Vliet & Andrews, 2009). In other words, higher baseline distress often led to greater benefit from the intervention, and low baseline distress may help explain why efficacy was not found in some studies. This is in line with previous studies that found greater benefits for targeted interventions, which use more specific inclusion criteria, than for universal interventions (Feiss et al., 2019; Werner-Seidler et al., 2017): Feiss et al. (2019) found greater stress reduction and Werner-Seidler et al. (2017) a greater reduction of depressive symptoms for targeted compared to universal interventions. Establishing appropriate inclusion criteria can therefore help target the part of the population that may benefit most from an intervention. Nevertheless, recent research shows that even individuals with mild, subclinical symptom levels may benefit from help (Ruscio, 2019). Universal interventions should therefore not be disregarded, especially since adolescence is a vulnerable period in which mental health problems often present themselves for the first time (Solmi et al., 2022). In addition to targeted interventions, it is thus important to provide universal programs, preferably adapted to the individual needs of young people, in order to prevent initial disorder development.

Given that classrooms, and society overall, are increasingly characterized by diversity awareness, it was surprising that only two studies focused on minorities, namely sexual and gender minority youth (Egan et al., 2021) and youth who identified as American Indian and Alaska Native (Craig Rushing et al., 2021), and that only three studies reported non-binary gender identification options. Craig Rushing et al. (2021) used a positive representation of Native youth in both conditions (alternative and intervention), and both groups showed improvements in mental health outcomes such as resilience, coping and self-esteem. This demonstrates the importance of culturally relevant content and minority representation in the interventions themselves, and clearly indicates that future interventions should be designed with greater diversity awareness in mind.

Several limitations were identified in the process of reviewing and analyzing the data, and these have to be considered when interpreting the reported findings. One important limitation, especially regarding the subgroup analysis, is the high level of heterogeneity among the studies, which probably relates back to the creation of outcome clusters that were not directly derived from the original authors’ intentions. However, such clustering was necessary for the meta-analysis and has also been found to be a sound approach in prior research (Harrer et al., 2019). Beyond the clustering issue, the heterogeneity could also be due to the wide variation in the content, setting and length of the interventions. Another limitation was that universal and targeted interventions were not compared, as has been done in other systematic reviews and meta-analyses (Feiss et al., 2019; Werner-Seidler et al., 2017). However, the relatively small number of targeted interventions included in the present study (5), and the high variability in the mental health domains they addressed, did not allow for adequate statistical analysis. The plethora of mental health domains targeted by the mental health promoting interventions can also be seen as a limitation, as it could result in some discrepancy in the outcome measurements used in the meta-analysis. This may be traced back to the nature of the concept itself, which entailed the application of a broad range of outcome measures in many of the studies reviewed. A more targeted focus on specific mental health domains is therefore advisable in future research. Two further limitations common in mental health research were also observed in the current sample, namely a sole reliance on self-reported measures and the non-blinding of participants. These were mainly responsible for the relatively unfavorable risk-of-bias results, with one quarter of the RCTs raising some concerns and a little less than half of the CTs raising serious concerns.

Based on the results of the review and meta-analysis, several important points should be taken into consideration in future studies. First, moderating variables must be considered in efficacy analyses, as they can have a significant impact on the success of an intervention. Setting, level of guidance and level of professional support were particularly influential in the current study. Providing adequate support and guidance could also greatly improve the outcomes of prevention studies. Support and guidance should preferably be offered adaptively, i.e., tailored to the needs of the participants, and such moderating variables deserve special attention in the design of future studies. Second, two other important factors influencing intervention effectiveness are adherence to the intervention protocol and the attrition rate. It is critical to provide adequate opportunities for engagement to keep participation high and dropout rates low, which requires considerable effort in study implementation. More attention should therefore be paid to implementation quality, and studies with smaller groups but higher implementation quality should be preferred over studies with large numbers of participants. Third, interventions with diversity-sensitive design and content are critical to meeting the needs of all youth. Fourth, interventions should address specific mental health domains in order to narrow the range of outcomes and obtain meaningful results concerning impact. Finally, as only 13 of the interventions studied here were available to students, and even fewer (9) were free and accessible to all, it is crucial that further research look just as closely at maintaining and promoting the availability of an intervention as at intervention development.

Conclusion

Given the alarming number of young people suffering from mental health issues, there is a great need for easily accessible mental health promoting tools. These tools are increasingly being made available digitally, and in line with rapid technological development, the field of digital mental health tools is growing fast. Thus, an up-to-date look at these programs and their efficacy is clearly needed. This systematic review and meta-analysis provides such an overview and offers insight into the effectiveness, barriers and facilitators relating to digital evidence-based mental health programs for youth aged 11 to 18 years. Even though results have to be interpreted with caution, the findings support previous research in that digital interventions have the potential to promote adolescent mental health. Small effects were found for well-being, anxiety and protective factors (e.g. help-seeking behavior). Important factors in tool efficacy include the setting, levels of guidance and support and intervention adherence. It was found that a school-based setting, some level of guidance and professional support, and consistent adherence to the intervention were most beneficial. Future research should pay particular attention to these moderating factors, to a diversity-sensitive design and content, as well as to a sustained availability of the tools developed.

References

Atladottir, H. O., Gyllenberg, D., Langridge, A., Sandin, S., Hansen, S. N., Leonard, H., Gissler, M., Reichenberg, A., Schendel, D. E., Bourke, J., Hultman, C. M., Grice, D. E., Buxbaum, J. D., & Parner, E. T. (2015). The increasing prevalence of reported diagnoses of childhood psychiatric disorders: A descriptive multinational comparison. European Child & Adolescent Psychiatry, 24(2), 173–183. https://doi.org/10.1007/s00787-014-0553-8.

Bakker, D., Kazantzis, N., Rickwood, D., & Rickard, N. (2016). Mental health smartphone apps: Review and evidence-based recommendations for future developments. JMIR Mental Health, 3(1), e7. https://doi.org/10.2196/mental.4984.

Bauer, S., Golkaramnay, V., & Kordy, H. (2005). E-mental-health. Psychotherapeut, 50(1), 7–15. https://doi.org/10.1007/s00278-004-0403-0.

Bergin, A. D., Vallejos, E. P., Davies, E. B., Daley, D., Ford, T., Harold, G., Hetrick, S., Kidner, M., Long, Y., Merry, S., Morriss, R., Sayal, K., Sonuga-Barke, E., Robinson, J., Torous, J., & Hollis, C. (2020). Preventive digital mental health interventions for children and young people: A review of the design and reporting of research. NPJ Digital Medicine, 3, 133. https://doi.org/10.1038/s41746-020-00339-7.

Bohleber, L., Crameri, A., Eich-Stierli, B., Telesko, R., & von Wyl, A. (2016). Can we foster a culture of peer support and promote mental health in adolescence using a web-based app? A control group study. JMIR Mental Health, 3(3), e45. https://doi.org/10.2196/mental.5597.

Borenstein, M., Hedges, L. V., & Rothstein, H. R. (2021). Introduction to meta-analysis. John Wiley & Sons.

Borenstein, M., Higgins, J. P. T., Hedges, L. V., & Rothstein, H. R. (2017). Basics of meta-analysis: I² is not an absolute measure of heterogeneity. Research Synthesis Methods, 8(1), 5–18. https://doi.org/10.1002/jrsm.1230.

Boyacıoğlu Doğru, H., Gulsahi, A., Çehreli, S. B., Galić, I., van der Stelt, P., & Cameriere, R. (2018). Age of majority assessment in Dutch individuals based on Cameriere’s third molar maturity index. Forensic Science International, 282, 231.e1–231.e6. https://doi.org/10.1016/j.forsciint.2017.11.009.

Brown, M., O’Neill, N., van Woerden, H., Eslambolchilar, P., Jones, M., & John, A. (2016). Gamification and Adherence to Web-Based Mental Health Interventions: A Systematic Review. JMIR Mental Health, 3(3), e39. https://doi.org/10.2196/mental.5710.

Burckhardt, R., Manicavasagar, V., Batterham, P. J., Miller, L. M., Talbot, E., & Lum, A. (2015). A web-based adolescent positive psychology program in schools: randomized controlled trial. Journal of Medical Internet Research, 17(7), e187. https://doi.org/10.2196/jmir.4329.

Byrne, D. G., Davenport, S. C., & Mazanov, J. (2007). Profiles of adolescent stress: The development of the adolescent stress questionnaire (ASQ). Journal of Adolescence, 30(3), 393–416. https://doi.org/10.1016/j.adolescence.2006.04.004.

Calear, A. L., Christensen, H., Brewer, J., Mackinnon, A. J., & Griffiths, K. M. (2016). A pilot randomized controlled trial of the e-couch anxiety and worry program in schools. Internet Interventions, 6, 1–5. https://doi.org/10.1016/j.invent.2016.08.003.

Canadian Paediatric Society. (2003). Age limits and adolescents. Paediatrics & Child Health, 8(9), 577–578. https://doi.org/10.1093/pch/8.9.577.

Carsley, D., Khoury, B., & Heath, N. L. (2018). Effectiveness of mindfulness interventions for mental health in schools: a comprehensive meta-analysis. Mindfulness, 9(3), 693–707. https://doi.org/10.1007/s12671-017-0839-2.

Cilar, L., Štiglic, G., Kmetec, S., Barr, O., & Pajnkihar, M. (2020). Effectiveness of school-based mental well-being interventions among adolescents: A systematic review. Journal of Advanced Nursing. https://doi.org/10.1111/jan.14408.

Clarke, A. M., Kuosmanen, T., & Barry, M. M. (2015). A systematic review of online youth mental health promotion and prevention interventions. Journal of Youth and Adolescence, 44(1), 90–113. https://doi.org/10.1007/s10964-014-0165-0.

Craig Rushing, S., Kelley, A., Bull, S., Stephens, D., Wrobel, J., Silvasstar, J., Peterson, R., Begay, C., Ghost Dog, T., McCray, C., Love Brown, D., Thomas, M., Caughlan, C., Singer, M., Smith, P., & Sumbundu, K. (2021). Efficacy of an mHealth Intervention (BRAVE) to promote mental wellness for American Indian and Alaska native teenagers and young adults: randomized controlled trial. JMIR Mental Health, 8(9), e26158. https://doi.org/10.2196/26158.

Cuijpers, P., Weitz, E., Cristea, I. A., & Twisk, J. (2017). Pre-post effect sizes should be avoided in meta-analyses. Epidemiology and Psychiatric Sciences, 26(4), 364–368. https://doi.org/10.1017/S2045796016000809.

De la Barrera, U., Mónaco, E., Postigo-Zegarra, S., Gil-Gómez, J.-A., & Montoya-Castilla, I. (2021). Emotic: Impact of a game-based social-emotional programme on adolescents. PLoS One, 16(4), e0250384. https://doi.org/10.1371/journal.pone.0250384.

Douma, M., Maurice-Stam, H., Gorter, B., Houtzager, B. A., Vreugdenhil, H. J. I., Waaldijk, M., Wiltink, L., Grootenhuis, M. A., & Scholten, L. (2021). Online psychosocial group intervention for adolescents with a chronic illness: A randomized controlled trial. Internet Interventions, 26, 100447. https://doi.org/10.1016/j.invent.2021.100447.

Dray, J., Bowman, J., Campbell, E., Freund, M., Wolfenden, L., Hodder, R. K., McElwaine, K., Tremain, D., Bartlem, K., Bailey, J., Small, T., Palazzi, K., Oldmeadow, C., & Wiggers, J. (2017). Systematic review of universal resilience-focused interventions targeting child and adolescent mental health in the school setting. Journal of the American Academy of Child and Adolescent Psychiatry, 56(10), 813–824. https://doi.org/10.1016/j.jaac.2017.07.780.

Duval, S., & Tweedie, R. (2000). Trim and fill: A simple funnel-plot-based method of testing and adjusting for publication bias in meta-analysis. Biometrics, 56(2), 455–463. https://doi.org/10.1111/j.0006-341x.2000.00455.x.

Edridge, C., Wolpert, M., Deighton, J., & Edbrooke-Childs, J. (2020). An mHealth Intervention (ReZone) to help young people self-manage overwhelming feelings: cluster-randomized controlled trial. JMIR Research Protocols, 6(11), e213. https://doi.org/10.2196/resprot.7019.

Egan, J. E., Corey, S. L., Henderson, E. R., Abebe, K. Z., Louth-Marquez, W., Espelage, D., Hunter, S. C., DeLucas, M., Miller, E., Morrill, B. A., Hieftje, K., Sang, J. M., Friedman, M. S., & Coulter, R. W. S. (2021). Feasibility of a web-accessible game-based intervention aimed at improving help seeking and coping among sexual and gender minority youth: results from a randomized controlled trial. The Journal of Adolescent Health: Official Publication of the Society for Adolescent Medicine, 69(4), 604–614. https://doi.org/10.1016/j.jadohealth.2021.03.027.

Egger, M., Smith, G. D., Schneider, M., & Minder, C. (1997). Bias in meta-analysis detected by a simple, graphical test. BMJ, 315, 629–634.

EPC. (2022). SRDR+ Systematic Review Data Repository Plus [Computer software]. AHRQ - Agency for Healthcare Research and Quality. Brown University Evidence-based Practice Center (EPC). https://srdrplus.ahrq.gov/.

Eysenbach, G. (2005). The law of attrition. Journal of Medical Internet Research, 7(1), e11. https://doi.org/10.2196/jmir.7.1.e11.

Feiss, R., Dolinger, S. B., Merritt, M., Reiche, E., Martin, K., Yanes, J. A., Thomas, C. M., & Pangelinan, M. (2019). A systematic review and meta-analysis of school-based stress, anxiety, and depression prevention programs for adolescents. Journal of Youth and Adolescence, 48(9), 1668–1685. https://doi.org/10.1007/s10964-019-01085-0.

Fridrici, M., & Lohaus, A. (2009). Stress‐prevention in secondary schools: online‐ versus face‐to‐face‐training. Health Education, 109(4), 299–313. https://doi.org/10.1108/09654280910970884.

Galderisi, S., Heinz, A., Kastrup, M., Beezhold, J., & Sartorius, N. (2015). Toward a new definition of mental health. World Psychiatry: Official Journal of the World Psychiatric Association (WPA), 14(2), 231–233. https://doi.org/10.1002/wps.20231.

Grist, R., Porter, J., & Stallard, P. (2017). Mental health mobile apps for preadolescents and adolescents: a systematic review. Journal of Medical Internet Research, 19(5), e176. https://doi.org/10.2196/jmir.7332.

Harrer, M., Adam, S. H., Baumeister, H., Cuijpers, P., Karyotaki, E., Auerbach, R. P., Kessler, R. C., Bruffaerts, R., Berking, M., & Ebert, D. D. (2019). Internet interventions for mental health in university students: A systematic review and meta-analysis. International Journal of Methods in Psychiatric Research, 28(2), e1759. https://doi.org/10.1002/mpr.1759.

Harrer, M., Cuijpers, P., Furukawa, T. A., & Ebert, D. D. (2019). dmetar: companion R package for the guide ‘doing meta-analysis in R’: R package version 0.0.9000. http://dmetar.protectlab.org/.

Haug, S., Paz Castro, R., Wenger, A., & Schaub, M. P. (2021). A mobile phone–based life-skills training program for substance use prevention among adolescents: cluster-randomized controlled trial. JMIR mHealth and uHealth, 9(7), e26951. https://doi.org/10.2196/26951.

Hedges, L. V., & Olkin, I. (2014). Statistical methods for meta-analysis. Academic Press.

Higgins, J. P. T., Thomas, J., Chandler, J., Cumpston, M., Li, T., Page, M. J., & Welch, V. A. (Eds.). (2022). Cochrane Handbook for Systematic Reviews of Interventions version 6.2. www.training.cochrane.org/handbook.

Higgins, J. P. T., & Thompson, S. G. (2002). Quantifying heterogeneity in a meta‐analysis. Statistics in Medicine, 21, 1539–1558.

Higgins, J. P. T., Thompson, S. G., Deeks, J. J., & Altman, D. G. (2003). Measuring inconsistency in meta-analyses. BMJ (Clinical Research Ed.), 327(7414), 557–560. https://doi.org/10.1136/bmj.327.7414.557.

Huppert, F. A., & Johnson, D. M. (2010). A controlled trial of mindfulness training in schools: The importance of practice for an impact on well-being. The Journal of Positive Psychology, 5(4), 264–274. https://doi.org/10.1080/17439761003794148.

IntHout, J., Ioannidis, J. P. A., & Borm, G. F. (2014). The Hartung-Knapp-Sidik-Jonkman method for random effects meta-analysis is straightforward and considerably outperforms the standard DerSimonian-Laird method. BMC Medical Research Methodology, 14, 25. https://doi.org/10.1186/1471-2288-14-25.

Kaess, M., Moessner, M., Koenig, J., Lustig, S., Bonnet, S., Becker, K., Eschenbeck, H., Rummel-Kluge, C., Thomasius, R., & Bauer, S. (2021). Editorial Perspective: A plea for the sustained implementation of digital interventions for young people with mental health problems in the light of the COVID-19 pandemic. Journal of Child Psychology and Psychiatry, and Allied Disciplines, 62(7), 916–918. https://doi.org/10.1111/jcpp.13317.

Kauer, S. D., Reid, S. C., Crooke, A. H. D., Khor, A. S., Hearps, S. J. C., Jorm, A. F., Sanci, L. A., & Patton, G. (2012). Self-monitoring using mobile phones in the early stages of adolescent depression: Randomized controlled trial. Journal of Medical Internet Research, 14(3), e67. https://doi.org/10.2196/jmir.1858.

Kenny, R., Fitzgerald, A., Segurado, R., & Dooley, B. (2020). Is there an app for that? A cluster randomised controlled trial of a mobile app-based mental health intervention. Health Informatics Journal, 26(3), 1538–1559. https://doi.org/10.1177/1460458219884195.

Kieling, C., Baker-Henningham, H., Belfer, M., Conti, G., Ertem, I., Omigbodun, O., Rohde, L. A., Srinath, S., Ulkuer, N., & Rahman, A. (2011). Child and adolescent mental health worldwide: Evidence for action. Lancet, 378(9801), 1515–1525. https://doi.org/10.1016/S0140-6736(11)60827-1.

Kutok, E. R., Dunsiger, S., Patena, J. V., Nugent, N. R., Riese, A., Rosen, R. K., & Ranney, M. L. (2021). A cyberbullying media-based prevention intervention for adolescents on Instagram: pilot randomized controlled trial. JMIR Mental Health, 8(9), e26029. https://doi.org/10.2196/26029.

Lucas-Thompson, R. G., Broderick, P. C., Coatsworth, J. D., & Smyth, J. M. (2019). New avenues for promoting mindfulness in adolescence using mHealth. Journal of Child and Family Studies, 28(1), 131–139. https://doi.org/10.1007/s10826-018-1256-4.

Malboeuf-Hurtubise, C., Léger-Goodes, T., Mageau, G. A., Taylor, G., Herba, C. M., Chadi, N., & Lefrançois, D. (2021). Online art therapy in elementary schools during COVID-19: Results from a randomized cluster pilot and feasibility study and impact on mental health. Child and Adolescent Psychiatry and Mental Health, 15(1), 15. https://doi.org/10.1186/s13034-021-00367-5.

Manicavasagar, V., Horswood, D., Burckhardt, R., Lum, A., Hadzi-Pavlovic, D., & Parker, G. (2014). Feasibility and effectiveness of a web-based positive psychology program for youth mental health: Randomized controlled trial. Journal of Medical Internet Research, 16(6), e140. https://doi.org/10.2196/jmir.3176.

Marcellus, L. (2004). Are we missing anything? Pursuing research on attrition. Canadian Journal of Nursing Research, 36(3), 82–98.

McGuinness, L. A., & Higgins, J. P. T. (2021). Risk-of-bias VISualization (robvis): An R package and Shiny web app for visualizing risk-of-bias assessments. Research Synthesis Methods, 12(1), 55–61. https://doi.org/10.1002/jrsm.1411.

Melville, K. M., Casey, L. M., & Kavanagh, D. J. (2010). Dropout from Internet-based treatment for psychological disorders. The British Journal of Clinical Psychology, 49(Pt 4), 455–471. https://doi.org/10.1348/014466509X472138.

Mrazek, A. J., Mrazek, M. D., Cherolini, C. M., Cloughesy, J. N., Cynman, D. J., Gougis, L. J., Landry, A. P., Reese, J. V., & Schooler, J. W. (2019). The future of mindfulness training is digital, and the future is now. Current Opinion in Psychology, 28, 81–86. https://doi.org/10.1016/j.copsyc.2018.11.012.

Murray, H., & Dingwall, S. (2001). The dominance of English at European Universities: Switzerland and Sweden compared. In U. Ammon (Ed.), The dominance of English as a language of science (pp. 85–112). De Gruyter Mouton.

Noh, D., & Kim, H. (2022). Effectiveness of online interventions for the universal and selective prevention of mental health problems among adolescents: a systematic review and meta-analysis. Prevention Science: The Official Journal of the Society for Prevention Research, 1–12. https://doi.org/10.1007/s11121-022-01443-8.

O’Dea, B., Han, J., Batterham, P. J., Achilles, M. R., Calear, A. L., Werner-Seidler, A., Parker, B., Shand, F., & Christensen, H. (2020). A randomised controlled trial of a relationship-focussed mobile phone application for improving adolescents’ mental health. Journal of Child Psychology and Psychiatry, and Allied Disciplines, 61(8), 899–913. https://doi.org/10.1111/jcpp.13294.

O’Dea, B., Subotic-Kerry, M., King, C., Mackinnon, A. J., Achilles, M. R., Anderson, M., Parker, B., Werner-Seidler, A., Torok, M., Cockayne, N., Baker, S. T. E., & Christensen, H. (2021). A cluster randomised controlled trial of a web-based youth mental health service in Australian schools. The Lancet Regional Health. Western Pacific, 12, 100178. https://doi.org/10.1016/j.lanwpc.2021.100178.

Osborn, T. L., Rodriguez, M., Wasil, A. R., Venturo-Conerly, K. E., Gan, J., Alemu, R. G., Roe, E., Arango G., S., Otieno, B. H., Wasanga, C. M., Shingleton, R., & Weisz, J. R. (2020). Single-session digital intervention for adolescent depression, anxiety, and well-being: Outcomes of a randomized controlled trial with Kenyan adolescents. Journal of Consulting and Clinical Psychology, 88(7), 657–668. https://doi.org/10.1037/ccp0000505.