Abstract

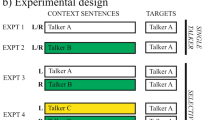

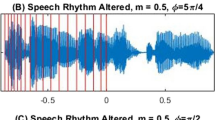

This study examined the temporal dynamics of spoken word recognition in noise and background speech. In two visual-world experiments, English participants listened to target words while looking at four pictures on the screen: a target (e.g. candle), an onset competitor (e.g. candy), a rhyme competitor (e.g. sandal), and an unrelated distractor (e.g. lemon). Target words were presented in quiet, mixed with broadband noise, or mixed with background speech. Results showed that lexical competition changes throughout the observation window as a function of what is presented in the background. These findings suggest that, rather than being strictly sequential, stream segregation and lexical competition interact during spoken word recognition.

Acknowledgments

Writing this article has been supported by Grant R01-DC005794 from NIH-NIDCD and the Hugh Knowles Center at Northwestern University. We thank Chun Liang Chan, Masaya Yoshida, Matt Goldrick, Lindsay Valentino, and Vanessa Dopker.

Cite this article

Brouwer, S., Bradlow, A.R. The Temporal Dynamics of Spoken Word Recognition in Adverse Listening Conditions. J Psycholinguist Res 45, 1151–1160 (2016). https://doi.org/10.1007/s10936-015-9396-9