Abstract

We introduce Neural Network (NN for short) approximation architectures for the numerical solution of Boundary Integral Equations (BIEs for short). We exemplify the proposed NN approach for the boundary reduction of the potential problem in two spatial dimensions. We adopt a Galerkin formulation-based method, in polygonal domains with a finite number of straight sides. Trial spaces used in the Galerkin discretization of the BIEs are built by using NNs that, in turn, employ the so-called Rectified Linear Units (ReLU) as the underlying activation function. The ReLU-NNs used to approximate the solutions to the BIEs depend nonlinearly on the parameters characterizing the NNs themselves. Consequently, the computation of a numerical solution to a BIE by means of ReLU-NNs boils down to a fine tuning of these parameters, in network training. We argue that ReLU-NNs of fixed depth and with a variable width allow us to recover well-known approximation rate results for the standard Galerkin Boundary Element Method (BEM). This observation hinges on existing well-known properties concerning the regularity of the solution of the BIEs on Lipschitz, polygonal boundaries, i.e. accounting for the effect of corner singularities, and the expressive power of ReLU-NNs over different classes of functions. We prove that shallow ReLU-NNs, i.e. networks having a fixed, moderate depth but with increasing width, can achieve optimal order algebraic convergence rates. We propose novel loss functions for NN training which are obtained using computable, local residual a posteriori error estimators with ReLU-NNs for the numerical approximation of BIEs. We find that weighted residual estimators, which are reliable without further assumptions on the quasi-uniformity of the underlying mesh, can be employed for the construction of computationally efficient loss functions for ReLU-NN training. The proposed framework allows us to leverage on state-of-the-art computational deep learning technologies such as TENSORFLOW and TPUs for the numerical solution of BIEs using ReLU-NNs. Exploratory numerical experiments validate our theoretical findings and indicate the viability of the proposed ReLU-NN Galerkin BEM approach.

Similar content being viewed by others

1 Introduction

Following fundamental mathematical developments in the 90’s that established density (resp. “universal approximation capacity”) of shallow NNs (see, e.g., the surveys [28] and the references therein), Deep Neural Networks (DNNs for short) based computations have seen, during the past five to ten years, an increasing development driven by the advent of data science and deep learning techniques. In these developments, in particular the quantitative advantages in expressive power furnished by DNNs has moved into the focus of interest.

Mounting computational and theoretical evidence points to significant advantages of the expressive power of DNNs when furnished by possibly large NN depth. We mention only [29, 36] for the use of DNNs in the numerical solution of PDEs, and the recent works [27, 32] that indicate a scope for the efficacy of DNNs in the approximation of maps between high-dimensional spaces.

In the present work, we propose the use of NNs for the approximation of variational BIEs, in particular of the first kind. We focus on NNs with ReLU activation, of fixed depth. As we shall show, mathematically and computationally, these ReLU-NNs allow for optimal convergence rates of the Galerkin BEM in polygons. We also comment in passing on advantages afforded by NNs with large depth. Here, approximation theory indicates that exponential convergence is possible, in principle.

Contributions We consider the Laplace equation with appropriate boundary conditions on \({\mathbb {R}}^2\setminus \Gamma \), where \(\Gamma \) corresponds to a one dimensional open arc, and on a polygonal Lipschitz domain \({\text {D}}\), and consider their boundary reduction by means of BIOs as described, for example, in [31]. We prove that by using shallow ReLU-NNs as trial spaces in the Galerkin discretization of the resulting BIEs one can recover well-known algebraic convergence rate bounds of the BEM, which are in turn traditionally obtained by means of other methods, such as graded meshes or the adaptive BEM [9, 15] (ABEM). Even though not thoroughly studied in the present work, we remark that by using Deep ReLU-NNs (and working under the assumption of analytic regularity hypotheses on the data) one can recover exponential convergence rates usually obtained using the so-called hp Galerkin BEM. This can be obtained by recalling recently obtained ReLU-NN emulation results from [27] together with analytic regularity results of the solution to BIEs in polygonal Lipschitz domain \({\text {D}}\), with a finite number of straight sides, in weighted function spaces [3]. However, we hasten to add that the main goal of the present work is to study the approximation of the solution to BIEs by means of shallow ReLU-NNs.

The insight behind the results present herein is the interpretation that shallow ReLU-NNs realize approximations in linear subspaces, whose basis elements are realized by the so-called “hidden layers” of the ReLU-NN and are, therefore, subject to optimization during ReLU-NN training. In the present paper, we partially leverage this flexibility and the ability of the hidden layers of ReLU-NNs to express: (a) low order boundary element spaces with “free-knots” (in the terminology of spline approximation), i.e., to adapt the partitions of the boundary to the structure of the unknown solution (which is reminiscent of adaptive meshing strategies in Galerkin BEM), and (b) to leverage adaptive mesh refinement methods in BEM for a rational procedure to “enlarge” the ReLU-NN through the insertion of nodes.

We propose two different algorithms to, computationally, perform the construction of the ReLU-NNs (thus, two different paths to train the network). The first one is based on the observation that the solution of symmetric, coercive problems, such as the ones arising from the boundary reduction of the Laplace problem, can be written as the minimization of a suitable energy functional.

The second algorithm makes use of well-known a posteriori error estimates, which are commonly used in the ABEM. The definition of an efficiently computable loss function which is based on computable, reliable a-posteriori error estimators for Galerkin discretizations differs from other widely established methods, and is not limited to Galerkin BEM. Numerical experiments show the computational feasibility of these algorithms and a detailed convergence analysis shows that shallow ReLU-NN Galerkin BEM can attain the optimal algebraic convergence rates. NN training can, in particular, compensate for reduced convergence rates due to, e.g., corner singularities of the physical domain.

Outline This work is structured as follows. In Sect. 2 we briefly review the boundary reduction of potential problems in two space dimensions. We also present a recapitulation of the direct method of boundary reduction and the strong ellipticity of boundary integral operators of the first-kind in fractional order Sobolev spaces. Additionally, we recall results concerning the regularity of the solution to BIEs both in Lipschitz polygons and on open arcs, which will be used ahead to obtain convergence rates for the ReLU-NN approximation of BIEs.

We begin Sect. 3 by introducing rigorous definitions of DNNs and describing, in detail, the connection existing between \({\mathbb {P}}_1\)-spline boundary element spaces and ReLU-NNs. A key observation in our analysis is that the low-order BEM spaces with adaptive mesh refinement is shown to admit a representation through ReLU-NNs architectures. This property, together with the regularity results of the solutions to BIEs, allows us to establish convergence results with optimal rates in the approximation of the described BIEs by means of ReLU-NNs. These theoretical results provide a benchmark to assess the performance of the different training algorithms proposed ahead in Sect. 4.

In Sect. 4, we propose two concrete training algorithms for the construction of ReLU-NNs approximating the solution of the analyzed BIEs. The first one leverages on the coercivity properties of the BIEs for the potential problem and the interpretation of its solution as the minimizer of a suitable energy functional. By performing a combination of the optimization of hidden parameters of the ReLU-NN and computation of the output layer by solving the corresponding Galerkin linear system, the proposed algorithm attains the (theoretically proven) optimal convergence rates for different numerical test cases. The second algorithm proposed in Sect. 4 hinges on a posteriori error estimates which, in turn, provide a computable upper bound for the error between the exact solution to the BIE and the ReLU-NN. Consequently, we can use this property to guide the training of the ReLU-NNs at each step of the iterative process. We mention that the proposed numerical methodology does not rely on automatic differentiation of the loss function with respect to the DNN parameters. Section 5 then presents concrete numerical experiments and is followed by Sect. 6, which recapitulates our principal findings and indicates several lines of investigation for their extension and possible further mathematical results.

2 Preliminaries

2.1 Sobolev Spaces in Bounded Domains

Let \({\text {D}}\subset {\mathbb {R}}^2\) be a bounded, connected Lipschitz domain with boundary \(\partial {\text {D}}\). For \(s\in {\mathbb {R}}\), we denote by \(H^s({\text {D}})\) the standard Sobolev spaces of order s defined in \({\text {D}}\) equipped with the norm \(\left\Vert \cdot \right\Vert _{H^s({\text {D}})}\). As it is customary, we identify \(H^0({\text {D}})\) with \(L^2({\text {D}})\). Sobolev spaces on the boundary \(\Gamma :=\partial {\text {D}}\) are denoted by \(H^s(\Gamma )\), where the range of \(s\in {\mathbb {R}}\) is restricted in accordance to the regularity of the domain \({\text {D}}\) (cf. [26, Sections 3.8 and 3.11]). For Lipschitz domains, for example, the space \(H^s(\Gamma )\) is well-defined for \(s\in {[-1,1]}\). Furthermore, we shall denote by \(H^s({\text {D}})/{\mathbb {R}}\) (resp. \(H^s(\Gamma )/{\mathbb {R}}\)) the quotient space of \(H^s({\text {D}})\) (resp. \(H^s(\Gamma )\)) by the subspace \(\text {span}\{1\}\). We denote by \(\gamma _0: H^1({\text {D}}) \rightarrow H^{\frac{1}{2}}(\Gamma )\) and \(\gamma _1: H^1(\Delta ,{\text {D}}) \rightarrow H^{-\frac{1}{2}}(\Gamma )\) the Dirichlet and Neumann trace operators on \(\Gamma \), respectively, with \(H^1(\Delta ,{\text {D}}){:=}\{u\in H^1({\text {D}}):\Delta u\in L^2({\text {D}})\}\). The duality between \(H^s(\Gamma )\) and \(H^{-s}(\Gamma )\) is denoted by \(\left\langle \psi ,\phi \right\rangle _{\Gamma }\) for \(\psi \in H^{-s}(\Gamma )\) and \(\phi \in H^{s}(\Gamma )\).

2.2 Sobolev Spaces on Open Arcs

Let \(\Lambda \subset {\mathbb {R}}^2\) be a Jordan arc (in the sense of [30, Definition 2.4.2]). For \(s\in (-1,1)\), we denote by \(H^s(\Lambda )\) the standard Sobolev space on \(\Lambda \) and, furthermore, introduce the spaces \({\widetilde{H}}^s(\Lambda )\) as in [26, Section 3.6]. Moreover, the following relations hold (cf. [26, Section 3.11])

where, for a general Banach space X, \(X'\) denotes its dual space. The duality between \(H^s(\Lambda )\) and \({\widetilde{H}}^{-s}(\Lambda )\) is denoted by \(\left\langle \phi ,\psi \right\rangle _{\Lambda }\) for \(\psi \in {\widetilde{H}}^{-s}({\Lambda })\) and \(\phi \in H^{s}({\Lambda })\).

2.3 Boundary Integral Operators in Lipschitz Domains

Again, let \({\text {D}}\subset {\mathbb {R}}^2\) be a bounded Lipschitz domain with boundary \(\Gamma {:=}\partial {\text {D}}\). In the following, we introduce the main concepts concerning BIOs and BIEs to be used throughout this manuscript. As we will only discuss boundary value problems in \({\mathbb {R}}^2\), we limit our presentation of the aforementioned tools to the two dimensional case. Let \(\text {G}(\textbf{x},\textbf{y})\) be the fundamental solution of the Laplacian in \({\mathbb {R}}^2\) (cf. [34, Chapter 5] or [31, Section 3.1]), given by

Let \({\mathcal {N}}:{\widetilde{H}}^{-1}({\text {D}}) \rightarrow H^1({\text {D}})\) denote the Newton potential, defined for \(\varphi \in {\mathscr {C}}^\infty _0({\text {D}})\) as

We define the single and double layer potentials, respectively, as follows:

The operators \({\mathcal {S}} : H^{-\frac{1}{2}}(\Gamma ) \rightarrow H^1({\text {D}})\) and \({\mathcal {D}} : H^{\frac{1}{2}}(\Gamma ) \rightarrow H^1({\text {D}})\) define continuous mappings (cf. [31, Theorem 3.1.12 & Theorem 3.1.16]). Equipped with these definitions, we introduce the boundary integral operators (BIOs) on \(\Gamma \) as:

referred to as the single layer, double layer, adjoint double layer and hypersingular BIOs, respectively, where \(\textsf{Id}\) signifies the identity operator. The mapping properties of the BIOs in (2.2) are stated in the following result.

Proposition 2.1

[10, Theorem 1] Let \({\text {D}}\subset {\mathbb {R}}^2\) be a bounded Lipschitz domain with boundary \(\Gamma {:=}\partial {\text {D}}\). For each \(\sigma \in (-\frac{1}{2},\frac{1}{2})\), the BIOs introduced in (2.2) define continuous maps according to:

Moreover, the single layer and hypersingular BIOs are coercive.

Proposition 2.2

[34, Theorems 6.23 & Corollary 6.25] Let \({\text {D}}\subset {\mathbb {R}}^2\) be a bounded Lipschitz domain with boundary \(\Gamma {:=}\partial {\text {D}}\). There exists \(c_{\textsf{W}}>0\) such that

Assume that \( \text {diam}({\text {D}})<1\), then there exists \(c_{\textsf{V}}>0\) such that

Remark 1

Let us define the bilinear form \(\check{{\textsf{a}}}: H^{\frac{1}{2}}(\Gamma ) \times H^{\frac{1}{2}}(\Gamma ) \rightarrow {\mathbb {R}}\) as

One can also prove (see, e.g., [37, Section 2] and the references therein) that there exists a constant \(\alpha > 0\) such that

Lemma 2.3

(Maue’s formula, [20, Lemma 1.2.2] & [34, Theorem 6.15]) Let \({\text {D}}\subset {\mathbb {R}}^2\) be a bounded Lipschitz polygon with boundary \(\Gamma :=\partial {\text {D}}\). Then, for all \(\varphi \in {\mathscr {C}}^0(\Gamma )\) with continuous derivative on each smooth segment of \(\Gamma \) it holds that

where \(\frac{d}{ds}\) denotes the arc-length derivative. The relation (2.4) remains valid for \(\varphi \in H^{\frac{1}{2}}(\Gamma )\).

2.3.1 Direct Boundary Integral Formulation of the Interior Dirichlet BVP

We consider the interior Laplace problem equipped with Dirichlet boundary conditions in a bounded Lipschitz domain \({\text {D}}\subset {\mathbb {R}}^2\) with boundary \(\Gamma {:=}\partial {\text {D}}\).

Problem 2.4

(Dirichlet Boundary Value Problem) Let \(f\in H^{\frac{1}{2}}(\Gamma )\) be given . We seek \(u\in H^1({\text {D}})\) satisfying

As is customary, one may recast Problem 2.4 as an equivalent BIE using the BIOs introduced in (2.2). The starting point is the so-called integral representation formula: we express the weak solution \(u\in H^1({\text {D}})\) to Problem 2.4 using the layer potentials introduced in (2.1) as follows:

By applying the Dirichlet trace operator \(\gamma _0: H^1({\text {D}}) \rightarrow H^{\frac{1}{2}}(\Gamma )\) to (2.5) and using the boundary condition on \(\Gamma \) stated in Problem 2.4, one obtains the following BIE for the unknown datum \(\gamma _1 u \in H^{-\frac{1}{2}}(\Gamma )\).

Problem 2.5

(Boundary Integral Formulation of Problem 2.4) Let \(f\in H^{\frac{1}{2}}(\Gamma )\) be given. We seek \(\psi {:=}\gamma _1 u \in H^{-\frac{1}{2}}(\Gamma )\) such that

The well-posedness of the BIE in Problem 2.5 follows from the mapping properties of the BIOs in Proposition 2.1, the ellipticity of the single layer BIO \({\textsf{V}}: H^{-\frac{1}{2}}(\Gamma )\rightarrow H^{\frac{1}{2}}(\Gamma )\) in Proposition 2.2, and the Lax-Milgram lemma.

2.3.2 Direct Boundary Integral Formulation of the Interior Neumann BVP

We consider the interior Laplace problem equipped with nonhomogeneous Neumann boundary conditions in a bounded, simply connected Lipschitz domain \({\text {D}}\subset {\mathbb {R}}^2\) with boundary \(\Gamma {:=}\partial {\text {D}}\), thus rendering the boundary \(\Gamma \) connected itself.

Problem 2.6

(Neumann BVP in \({\text {D}}\)) Let \(g\in H^{-\frac{1}{2}}(\Gamma )\) be given. We seek \(u\in H^1({\text {D}}) \) satisfying

It is well-established that there exists a unique \(u\in H^1({\text {D}}) / {\mathbb {R}}\) solution to Problem 2.6 provided that one further imposes \(g\in H^{-\frac{1}{2}}(\Gamma )/{\mathbb {R}}\) (see, e.g., [34, Theorem 4.9]). We reduce the boundary value problem stated in Problem 2.6 to an equivalent BIE using the BIOs introduced in (2.2) and the integral representation formula (2.5). The application of the Neumann trace operator \(\gamma _1: H^1(\Delta ,{\text {D}})\rightarrow H^{-\frac{1}{2}}(\Gamma )\) to (2.5) together with boundary condition stated in Problem 2.6 yields the following boundary integral formulation of Problem 2.6.

Problem 2.7

(Boundary Integral Formulation of Problem 2.6) Let \(g\in H^{-\frac{1}{2}}(\Gamma )/{\mathbb {R}}\) be given. We seek \(\phi {:=}\gamma _0 u \in H^{\frac{1}{2}}(\Gamma )/{\mathbb {R}}\) such that

The well-posedness of Problem 2.7 follows from the mapping properties of the BIOs stated in Proposition 2.1, Proposition 2.2, the fact that \({\textsf{K}}'\) preserves integral mean value zero for densities defined over \(\Gamma \) and from the Lax-Milgram lemma, as it is thoroughly explained in [34, Section 7.2].

2.4 Regularity of the Solution to the BIEs in Lipschitz Polygons

We recapitulate results concerning the regularity of solutions to the BIEs stated in Problems 2.5 and 2.7. In the following, we assume that \({\text {D}}\subset {\mathbb {R}}^2\) is a bounded Lipschitz polygon with boundary \(\Gamma {:=}\partial {\text {D}}\) characterized by a finite number \(J \ge 3\) of vertices \(\{ \textbf{x}_j\}_{j=1}^{J} \subset {\mathbb {R}}^2\). We enumerate cyclically mod J, i.e., \(\textbf{x}_{J+1} = \textbf{x}_1\). We denote \(\Gamma _j = \textrm{conv}(\textbf{x}_j, \textbf{x}_{j+1})\), for \(j=1,\dots ,J\), (with the convention \(\Gamma _0 = \Gamma _J\)) and let \(\omega _j\in (0,2\pi )\) be the internal angle at \(\textbf{x}_j\), i.e., that of the wedge formed by the edges \(\Gamma _{j-1}\) and \(\Gamma _{j}\), \(j=1\dots ,J\).

Let \(\{\chi _j\}_{j=1}^{J}\) be a partition of unity of \(\Gamma \), where each function \(\chi _j\), \(j=1,\dots ,J\), is constructed by considering the restriction of a function in \({\mathscr {C}}^2_0({\mathbb {R}}^2)\) to the boundary \(\Gamma \) such that \(\chi _j = 1\) in a neighborhood of \(\textbf{x}_j\) and \(\text {supp}\{\chi _j\} \subset \Gamma _{j-1} \cup \{\textbf{x}_j\} \cup \Gamma _j\). Let \(\varphi :\Gamma \rightarrow {\mathbb {R}}\) be a function defined on the boundary \(\Gamma \). Using the previously described partition of unity, we may write

where by \((\varphi _{-},\varphi _{+})\) we denote the restriction of \(\varphi :\Gamma \rightarrow {\mathbb {R}}\) to \(\Gamma _{j-1} \cup \{\textbf{x}_j\} \cup \Gamma _j\) with \(\varphi _{-}{:=}\left. \varphi \right| _{\Gamma _{j-1}}\) and \(\varphi _{+}{:=}\left. \varphi \right| _{\Gamma _{j}}\), for \(j=1,\dots ,J\).

The following result (from [16]) describes the regularity of the solution to Problems 2.5 and 2.7.

Proposition 2.8

[16, Proposition 2.1] Set \(\alpha _{jk}{:=}k \frac{\pi }{ \omega _j}\), for \(k\in {\mathbb {N}}\) and \(j=1,\dots ,J\). Let \(t\ge 1/2\) and \(n\in {\mathbb {N}}\) be such that \(n+1 \ge \frac{ \omega _j}{\pi }\left( t-\frac{1}{2}\right) \ge n\) for all \(j=1,\dots ,J\).

-

(i)

Assume that \(f\in H^{\frac{1}{2}}(\Gamma )\) in Problem 2.5 is additionally piecewise analytic. Then, there exists \(\psi _0\) satisfying \(\left. \psi _0\right| _{\Gamma _j} \in H^{t-1}(\Gamma _j)\) for all \(j=1,\dots ,J\), such that the solution \(\psi \in H^{-\frac{1}{2}}(\Gamma )\) to Problem 2.5 admits the following representation:

$$\begin{aligned} \psi = \sum _{j=1}^{J} \sum _{k=1}^{n} \left( (\psi _{jk})_{-}, (\psi _{jk})_{+} \right) \chi _j + \psi _0. \end{aligned}$$(2.6)In (2.6), if \(\alpha _{j,k} \notin {\mathbb {Z}}\)

$$\begin{aligned} (\psi _{jk})_{\pm } = c^{\pm }_{jk} \left\Vert \textbf{x}-\textbf{x}_j \right\Vert ^{\alpha _{jk}-1}, \end{aligned}$$and if \(\alpha _{j,k} \in {\mathbb {Z}}\)

$$\begin{aligned} (\psi _{jk})_{\pm } = c^{\pm }_{jk} \left\Vert \textbf{x}-\textbf{x}_j \right\Vert ^{\alpha _{jk}-1} + d^{\pm }_{jk} \left\Vert \textbf{x}-\textbf{x}_j \right\Vert ^{\alpha _{jk}-1} \log \left\Vert \textbf{x}-\textbf{x}_j \right\Vert , \end{aligned}$$where \(c^{\pm }_{jk}, \;d^{\pm }_{jk} \in {\mathbb {R}}\), for \(j=1,\dots ,J\) and \(k=1,\dots ,n\).

-

(ii)

Assume that \(g\in H^{-\frac{1}{2}}(\Gamma )/{\mathbb {R}}\) in Problem 2.7 is additionally piecewise analytic. Then, there exists \(\phi _0\) such that \(\left. \phi _0\right| _{\Gamma _j} \in H^{t}(\Gamma _j)\), for all \(j=1,\dots ,J\), such that the solution \(\phi \in H^{\frac{1}{2}}(\Gamma )\) to Problem 2.7 admits the following representation:

$$\begin{aligned} \phi = \sum _{j=1}^{J} \sum _{k=1}^{n} \left( (\phi _{jk})_{-}, (\phi _{jk})_{+} \right) \chi _j + \phi _0. \end{aligned}$$(2.7)In (2.7), if \(\alpha _{j,k} \notin {\mathbb {Z}}\)

$$\begin{aligned} (\phi _{jk})_{\pm } = c^{\pm }_{jk} \left\Vert \textbf{x}-\textbf{x}_j \right\Vert ^{\alpha _{jk}}, \end{aligned}$$and if \(\alpha _{j,k} \in {\mathbb {Z}}\)

$$\begin{aligned} (\phi _{jk})_{\pm } = c^{\pm }_{jk} \left\Vert \textbf{x}-\textbf{x}_j \right\Vert ^{\alpha _{jk}} + d^{\pm }_{jk} \left\Vert \textbf{x}-\textbf{x}_j \right\Vert ^{\alpha _{jk}} \log \left\Vert \textbf{x}-\textbf{x}_j \right\Vert , \end{aligned}$$where \(c^{\pm }_{jk}, \;d^{\pm }_{jk} \in {\mathbb {R}}\), for \(j=1,\dots ,J\) and \(k=1,\dots ,n\).

2.5 Boundary Integral Operators on Open Arcs

We proceed to extend the definitions and results introduced in Sect. 2.3 for BIOs in Lipschitz domains \({\text {D}}\) with closed boundary \(\Gamma = \partial {\text {D}}\), i.e., \(\partial \Gamma = \emptyset \), to open arcs \(\Lambda \) in \({\mathbb {R}}^2\) (in the sense of [30, Definition 2.4.2]), for which \(\partial \Lambda \ne \emptyset \). To this end, we consider a bounded Lipschitz \({\text {D}}\subset {\mathbb {R}}^2\) with boundary \(\Gamma {:=}\partial {\text {D}}\) and assume that \(\Lambda \subset {\mathbb {R}}^2\) is a connected open arc of \(\Gamma \) with positive measure and endpoints \(\textbf{x}_1\) and \(\textbf{x}_2\) in \(\Gamma \). By [10, Theorem 1], the potentials in (2.1) are well defined as elements on local Sobolev spaces over the unbounded domain \(\Lambda ^{{\text {c}}}{:=}{\mathbb {R}}^2\setminus \overline{\Lambda }\), so that the layer potentials

define continuous operators. The continuity properties of the layer potentials and trace operators [26, Theorem 3.38] together with the jump properties of the layer potentials [10, Lemma 4.1] allow us to define the BIOs on \(\Lambda \) as before:

We recall key properties of the single layer and hypersingular BIOs on a open arc \(\Lambda \).

Proposition 2.9

([9, Lemmas 1 & 3]) Let \(\Lambda \subsetneq \Gamma \) be an open Jordan arc. For \(\left|\sigma \right|\le \frac{1}{2}\), the maps

are continuous.

Theorem 2.10

([9, Section 2] and [35, Theorem 1.5]) Let \(\Lambda \subsetneq \Gamma \) be a Jordan arc. Then, there exist positive constants \(\mu , \eta \) (depending upon \(\Lambda \)) such that for all \(\psi \in {\widetilde{H}}^{-\frac{1}{2}}(\Lambda )\) and for all \( \phi \in {\widetilde{H}}^{\frac{1}{2}}(\Lambda )\) it holds

Problem 2.11

(Weakly singular BIE on \(\Lambda )\) Let \(f\in {H}^{\frac{1}{2}}(\Lambda )\) be given. We seek \(u \in {\widetilde{H}}^{-\frac{1}{2}}(\Lambda )\) satisfying

Problem 2.12

(Hypersingular BIE on \(\Lambda )\) Let \(g\in {H}^{-\frac{1}{2}}(\Lambda )\) be given. We seek \(\phi \in {\widetilde{H}}^{\frac{1}{2}}(\Lambda )\) satisfying

As with Problems 2.5 and 2.7, the well-posedness of Problems 2.11 and 2.12 follows from Proposition 2.9, Theorem 2.10 and the Lax-Milgram lemma. Moreover, the respective solutions \(\psi \in {\widetilde{H}}^{-\frac{1}{2}}(\Lambda )\) and \(\varphi \in {\widetilde{H}}^{\frac{1}{2}}(\Lambda )\) satisfy

with \(\mu >0\) as in Theorem 2.10.

2.6 Regularity of the Solution to the BIEs on Open Arcs

For the ensuing analysis of lower-order BEM, in particular on open arcs, we shall invoke a decomposition of solutions into regular and singular parts, from [16, 38]. For \(i=1,2\), let \(\varrho _i\) denote the Euclidean distance between \(\textbf{x}\in \Lambda \) and the endpoint \(\textbf{x}_i \in {\mathbb {R}}^2\) of \(\Lambda \) and let \(\xi _i\) be a \({\mathscr {C}}^\infty \) cut-off function on \(\Lambda \) with \(0\le \xi _i\le 1\), \(\xi _i=1\) near \(\textbf{x}_i\) and \(\xi =0\) at the opposite end. For \(\alpha _1,\alpha _2 \in {\mathbb {R}}\) and \(\psi _0 \in {\widetilde{H}}^s(\Lambda )\) for any \(s<2\), we set

and define

Proposition 2.13

[38, Theorem 1.8] For \(\sigma \in (-\frac{1}{2},\frac{1}{2})\) the operator \({\textsf{W}}: {\mathscr {T}}^{\frac{3}{2}+\sigma }(\Lambda ) \rightarrow H^{\frac{1}{2}+\sigma }(\Lambda )\)

is bijective and continuous and there exists \(C>0\) such that

where \(\psi \) is as in (2.8).

2.7 Galerkin Boundary Element Discretization

We proceed to detail the Galerkin discretization of Problems 2.5, 2.7, 2.11 and 2.12. We remark that other forms of numerical discretizations, such as collocation or Petrov-Galerkin formulations, may be considered as well.

In what follows, we assume that \({\text {D}}\subset {\mathbb {R}}^2\) is a bounded, Lipschitz polygon with boundary \(\Gamma {:=}\partial {\text {D}}\). As is customary in the h-Galerkin BEM, we decompose the boundary \(\Gamma \) into \(N \in {\mathbb {N}}\) straight, disjoint line segments \(\Gamma _j\), for \(j=1,\ldots ,N\), from now onwards referred to as elements. The vertices of the polygon \(\Gamma \) must match the endpoints of some of the elements and the mesh \({\mathcal {T}}_N{:=}\{\Gamma _i\}_{i=1}^N\) covers \(\Gamma \) itself. Equipped with these definitions, we introduce the usual boundary element spaces of piecewise polynomial functions on the mesh \({\mathcal {T}}_N\) of \(\Gamma \):

In the above, and for each \(j=1,\dots ,N\), \({\mathbb {P}}_p(\Gamma _j)\) denotes the space of polynomials of degree \(p\in {\mathbb {N}}\cup \{0\}\) on \(\Gamma _j\).

Problem 2.14

(BEM for Problem 2.5) Let \({\mathcal {T}}_N\) be a mesh of the boundary \(\Gamma \) and let \(f\in H^{\frac{1}{2}}(\Gamma )\) be given. We seek \(\psi _N \in S^{0}(\Gamma ,{\mathcal {T}}_N)\) satisfying

Problem 2.15

(BEM for Problem 2.7) Let \({\mathcal {T}}_N\) be a partition of the boundary \(\Gamma \) and let \(g\in H^{-\frac{1}{2}}(\Gamma )\) be given. We seek \(\phi _N \in S^{1}(\Gamma ,{\mathcal {T}}_N)\) satisfying

Remark 2

The continuity and ellipticity of \({\textsf{V}}\) and the bilinear form \(\check{{\textsf{a}}}(\cdot ,\cdot )\) (recall its definition in Remark 1) in \(H^{-\frac{1}{2}}(\Gamma )\) and \(H^{\frac{1}{2}}(\Gamma )\), respectively, will ensure the existence and uniqueness of solutions to Problems 2.14 and 2.15.

Remark 3

(Weakly Singular Operator \({{\textsf{V}}})\) For \(\Gamma := \partial {\text {D}}\) a closed curve of arclength \(L = |\Gamma |\), let \(\varvec{r}:[0,L)\rightarrow \Gamma \) be its arclength parametrization and consider the map I given for \(\phi \in {{\mathscr {C}}^0}(\Gamma )\) by

for all \(\textbf{x}\in \Gamma \). One verifies that the map I may be extended to \(I \in {{\mathcal {L}}}_{iso}(H^{-\frac{1}{2}}(\Gamma )/{\mathbb {R}}, H^\frac{1}{2}(\Gamma ))\). For \(\psi \in H^{-1/2}(\Gamma )\) define \(\breve{\psi }:= \psi - \frac{1}{L}\langle \psi ,1\rangle _\Gamma \in H^{-1/2}(\Gamma )/{\mathbb {R}}\). Then Maue’s formula in Lemma 2.3 implies, for every \(\psi _N,\varphi _N\in S^{0}(\Gamma ,{\mathcal {T}}_N)\), that

We continue with the Galerkin discretization of Problems 2.11 and 2.12. Recall \(\Lambda \subsetneq \Gamma \) to be a Jordan arc and let \(\widetilde{{\mathcal {T}}}_N :=\{\Lambda _i\}_{i=1}^N\) be a mesh on \(\Lambda \) consisting, as before, of straight, disjoint line segments \(\Lambda _j\), for \(j=1,\ldots ,N\). We introduce the following spaces of piecewise polynomials:

Problem 2.16

(Boundary element discretization of Problem 2.11) Let \(\widetilde{{\mathcal {T}}}_N\) be a mesh over the Jordan arc \(\Lambda \subsetneq \Gamma \) and let \(f\in H^{\frac{1}{2}}(\Lambda )\) be given. We seek \(\psi _N \in {S}^{0}(\Lambda ,\widetilde{{\mathcal {T}}}_N)\) satisfying

Problem 2.17

(Boundary element discretization of Problem 2.12) Let \(\widetilde{{\mathcal {T}}}_N\) be a mesh over the Jordan arc \(\Lambda \subsetneq \Gamma \) and let \(g\in H^{-\frac{1}{2}}(\Lambda )\) be given. We seek \(\varphi _N \in {S}^{1}(\Lambda ,\widetilde{{\mathcal {T}}}_N)\) satisfying

3 ReLU Neural Networks

We introduce a key ingredient in the present paper, namely the so-called DNNs. Even though in this work we use only the ReLU activation function, other (continuous) activation functions could be considered in the analysis and numerical construction of DNNs, such as sigmoidal, or \(\tanh \) activations. However, the approximation results presented herein hold with the simpler, and computationally more efficient, ReLU activation function.

Definition 3.1

(Deep Neural Network) Let \(L\ge 2\), \(N_0,N_1,\dots ,N_L\in {\mathbb {N}}\). A map \(\Phi :{\mathbb {R}}^{N_0}\rightarrow {\mathbb {R}}^{N_L}\) given by

with affine maps \(\varvec{W}_\ell :{\mathbb {R}}^{N_{\ell -1}}\rightarrow {\mathbb {R}}^{N_\ell }\), \(\varvec{W}_\ell ({\varvec{x}})=\varvec{A}_\ell {\varvec{x}}+\varvec{b}_\ell \), \(1\le \ell \le L\), where \(\varvec{A}_\ell \in {\mathbb {R}}^{N_\ell \times N_{\ell -1}}\), \(\varvec{b}_\ell \in {\mathbb {R}}^{N_\ell }\), and with activation function \(\varrho :{\mathbb {R}}\rightarrow {\mathbb {R}}\) (acting component-wise on vector inputs) is called Deep Neural Network. In the above definition, \(N_0\) is the dimension of the input layer, \(N_L\) denotes the dimension of the output layer, \(L= {\mathcal {L}}(\Phi )\) denotes the number of layers (excluding the input layer), \(N_1,\dots ,N_L\) correspond to the widths of each of the \(L-1\) hidden layers, and \(M = {\mathcal {M}}(\Phi ){:=}\max _{\ell }N_\ell \) corresponds to the width of the network. In addition, we denote by \({\mathcal{N}\mathcal{N}}^{\varrho }_{L,M,N_0,N_L}\) the set of all DNNs \(\Phi :{\mathbb {R}}^{N_0}\rightarrow {\mathbb {R}}^{N_L}\) with input dimension \(N_0\), output dimension \(N_L\), a depth of at most L layers, maximum width M, and activation function \(\varrho \).

For any \(\Phi \in {\mathcal{N}\mathcal{N}}^{\varrho }_{L,M,N_0,N_L}\) with \(L\ge 2\) as in (3.1), we introduce \(\Phi ^{ {\text {hid}}}:{\mathbb {R}}^{N_0}\rightarrow {\mathbb {R}}^{N_{L-1}}\) given by

ie., the subnetwork comprising all “responses from the hidden layers” of a DNN \(\Phi \in {\mathcal{N}\mathcal{N}}^{\varrho }_{L,M,N_0,N_L}\). Moreover, we denote the space of all the responses from the hidden layers of DNN’s in \({\mathcal{N}\mathcal{N}}^{\varrho }_{L,M,N_0,N_L}\) as

3.1 Structure of Galerkin Approximation Spaces generated by ReLU-NNs

It is an immediate consequence from Definition 3.1 that functions generated by DNNs (understood in the sense that \(L\ge 2\) as opposed to shallow NNs, where \(L=1\)) depend in a nonlinear fashion on the DNN parameters characterizing the hidden layers, i.e., the weights \(\varvec{A}_\ell \in {\mathbb {R}}^{N_\ell \times N_{\ell -1}}\) and biases \(\varvec{b}_\ell \in {\mathbb {R}}^{N_\ell }\). However, by setting the bias in the output layer of the DNN in Definition 3.1 to \(\textbf{0}\), i.e., \(\varvec{b}_L =\textbf{0}\), we have that DNN functions belong to the linear span of the space of functions generated by the “hidden layers” of the corresponding DNN.

Proposition 3.2

Assume given a DNN \(\Phi \in {\mathcal{N}\mathcal{N}}^{\varrho }_{L,M,N_0,N_L}\) with \(L\ge 2\) and such that \(N_L = 1\), \(\varvec{b}_L = {\varvec{0}}\). Then, for any activation function \(\varrho : {\mathbb {R}}\rightarrow {\mathbb {R}}\), it holds that

where \(\Phi ^{ {\text {hid}}}_i\) denotes the i-th component of any \(\Phi ^{ {\text {hid}}} \in {\mathcal{N}\mathcal{N}}^{ {\text {hid}},\varrho }_{L,M,N_0,N_L}\).

We see from this proposition that DNNs:

-

(i)

span particular linear subspaces of dimension \(N_{L-1}\) and

-

(ii)

span subspaces with basis elements that, in turn, can be chosen in a problem-adapted fashion by adjusting the parameters in the hidden layers of the DNN.

It is clear from this (trivial) observation that shallow ReLU-NNs, which we will consider exclusively in the remainder of this paper, can exactly reproduce spaces of continuous and piecewise linear functions on \(\Gamma \) with a DNN of depth \(L=2\). Mesh refinement on \(\Gamma \) can be accounted for by adjusting the widths of the hidden layers during training.

As was shown in [27, Sections 4 and 5], for \(L>2\), ReLU-DNNs can represent hp-boundary element spaces on geometric partitions, which are known to afford exponential convergence rates for piecewise analytic data. We hasten to add, however, that Proposition 3.2 has wider implications, e.g., training \({\mathcal{N}\mathcal{N}}^{{\text {hid}},\varrho }\) to emulate reduced bases by a greedy search, for example, will imply that the corresponding Galerkin BEM (with subspaces corresponding to the DNN \({\mathcal{N}\mathcal{N}}^\varrho = \textrm{span} \{\Phi ^{{\text {hid}}} \in {\mathcal{N}\mathcal{N}}^{{\text {hid}},\varrho }\}\)) will deliver performance corresponding to reduced bases BEM. This could be employed to accommodate, for example, Galerkin BEM with basis sets that feature additional properties which are tailored to particular problem classes.

3.2 \({\mathbb {P}}_1\)-Spline Boundary Element Spaces as ReLU-NNs

Recall that \(N\in {\mathbb {N}}\) corresponds to the number of elements in the meshes on \(\Gamma \) and \(\Lambda \) (\({\mathcal {T}}_N\) and \(\widetilde{{\mathcal {T}}}_N\), respectively), and let \(p\in {\mathbb {N}}\) denote the polynomial degree of the boundary element spaces \(S^p(\Gamma ,{\mathcal {T}}_N)\) and \(S^p(\Lambda ,\widetilde{{\mathcal {T}}}_N)\) of piecewise polynomials of degree p. Moreover, define \({ {\text {I}}{:=}(-1,1)}\) and let \({\varvec{r}}: \overline{{\text {I}}} \rightarrow {\mathbb {R}}^2\) be a Lipschitz continuous and piecewise affine parametrization of the closed curve \(\Gamma \) (where \(\Gamma \) is Lipschitz) satisfying \({{\varvec{r}}(-1)={\varvec{r}}(1)}\) and \({\varvec{r}}'(t) \ne \textbf{0}\) for almost every \(t \in {{\text {I}}}\). Define, for \(\phi \in {\mathscr {C}}^0(\Gamma )\), the so-called pullback operator as

where \({\mathscr {C}}^0_{{{\text {per}}}}(\overline{{\text {I}}})\) denotes the subspace of continuous, 2-periodic functions. This operator can be uniquely extended in such a way that for \(s\in [-1,1]\) the map \(\tau _{{\varvec{r}}}: H^{{s}}(\Gamma )\rightarrow H^{{s}}_{{{\text {per}}}}({\text {I}})\) defines a linear, continuous operators that admits a bounded inverse, thus inducing an isomorphism between \(H^{{s}}(\Gamma )\) and \(H^{{s}}({\text {I}})\), where, for \(s\ge 0\), \(H^{{s}}_{{{\text {per}}}}({\text {I}})\) denotes the Sobolev space of 2-periodic functions of order s and \(H^{{-s}}_{{{\text {per}}}}({\text {I}})\) signifies its dual in the \(L^2({\text {I}})\) duality pairing. The following result addresses the representability of the space \(S^{1}(\Gamma ,{\mathcal {T}}_N)\) by means of ReLU-NNs.

Proposition 3.3

Let \(N\in {\mathbb {N}}\). For each \(\phi _N \in S^{1}(\Gamma ,{\mathcal {T}}_N)\) there is a ReLU neural network \(\Phi _N\in \mathcal{N}\mathcal{N}_{{2},{N+1},1,1}\) such that \({\left( \tau _{\varvec{r}}\phi _N\right) }(t)\equiv \Phi _N(t)\) for all \(t\in {\text {I}}\).

Proof

Recall the setting described in Sect. 2.7: Given \(N\in {\mathbb {N}}\) we consider a mesh \({\mathcal {T}}_N\) of \(\Gamma \) consisting of \(N+1\) points \(\{\textbf{x}_n\}_{n=0}^{N}\subset \Gamma \) and where \(\textbf{x}_0=\textbf{x}_{N}\) (i.e. N distinct points). The set of nodes \(\{\textbf{x}_n\}_{n=0}^{N} \subset \Gamma \) may be uniquely identified with \(\{t_j\}_{j=0}^{N}\subset \overline{{\text {I}}}\) through \({\varvec{r}}(t_j)=\textbf{x}_j\), for \(j=0,\dots ,N\), and we assume that \(-1=t_0<t_1<\cdots<t_{N-1}<t_N=1\) set \(K_j = (t_{j},t_{j+1})\) for \(j=0,\dots ,N-1\), and define

where \(\hat{{\mathcal {T}}}_N = \displaystyle \cup _{j=0}^{N-1}{\overline{K}}_j\) is a partition of the interval \(\overline{{\text {I}}}\). Set \(h_j = t_{j+1}-t_{j}\). Let us define the so-called “hat functions”

for \(j={1,\dots ,N-1}\) and \({t}\in {{\text {I}}}\), together with

Recall that \(\text {span}\{{\zeta _0},\dots ,\zeta _{N} \} = S^{1}({\text {I}},{\hat{{\mathcal {T}}}}_N)\), whence for all \({\Phi }_N \in S^{1}({\text {I}},{\hat{{\mathcal {T}}}}_N)\) there are unique coefficients \({c_0}({\Phi }_N),\dots ,c_{N}({\Phi }_N) \in {\mathbb {R}}\), such that

Each \(\zeta _j\) can be represented (non-uniquely) using ReLU-NNs as follows

for \(j={1,\dots ,N-1}\), together with

Using (3.3) we obtain

where \(\varvec{A}_1{:=}(1,\dots ,1)^\top \in {\mathbb {R}}^{{(N+1)}\times 1}\), \(\varvec{b}_1{:=}({-t_0,\dots ,-t_{N}})^\top \in {\mathbb {R}}^{{N+1}}\), \(\varvec{b}_2{:=}({1,0,\dots ,0})^\top \in {\mathbb {R}}^{{N+1}}\) and \(\varvec{A}_{2}\in {\mathbb {R}}^{{(N+1)}\times {(N+1)}}\) is defined as follows

where \({\varvec{v}_1 {:=}(-\frac{1}{h_0},\frac{1}{h_0},0,\dots ,0) \in {\mathbb {R}}^{1\times (N+1)} }\), \({\varvec{v}_2{:=}(0,\dots ,0,\frac{1}{h_{N-1}},0) \in {\mathbb {R}}^{1\times (N+1)} }\), and \({\varvec{H} \in {\mathbb {R}}^{(N-1) \times (N+1)}}\)

for \(i=1,\dots ,{N+1}\), \(j=1,\dots ,{N+1}\). Finally, we construct the output layer by using (3.2). Let us define \({\varvec{C}}{:=}\left( c_{0}({\Phi }_N),\dots ,c_{N}({\Phi }_N)\right) \in {\mathbb {R}}^{{1\times (N+1)}}\). Then we have that

Observe that \(\Phi _N\in \mathcal{N}\mathcal{N}_{{2},{N+1},1,1}\) and that, due to the construction (3.4), the weights of \({\Phi _N}\) are bounded in absolute value by \(\max \{1+h_1,\left\Vert {\phi _N} \right\Vert _{{L^\infty (\Gamma )}},2\displaystyle \max _{j=0,\dots ,{N-1}}\tfrac{1}{h_j}\}\).

Now, set \(\nu _j = \tau ^{-1}_{{\varvec{r}}} \zeta _j\), for \(j=0,\dots ,N\). Therefore, for each \(\phi _N \in S^{1}(\Gamma ,{\mathcal {T}}_N)\) we have that

Observe that since \(\phi _N(\textbf{x}_0) = \phi _N(\textbf{x}_N)\) we have \(\phi _N \in S^1(\Gamma ,{\mathcal {T}}_N)\). The application of \(\tau _{{\varvec{r}}}\) to (3.5) yields

Observe that the right-hand side of (3.6) defines an element of \(\mathcal{N}\mathcal{N}_{{2},N+1,1,1}\), thus concluding the proof. \(\square \)

Remark 4

As pointed out in the proof of Proposition 3.3, the representation of the “hat functions” \(\zeta _j\in \phi _N \in S^{1}({{\text {I}}},{\mathcal {T}}_N)\) is not unique. One may also write

for \(j=0,\dots ,N\) and \({t}\in {\overline{{\text {I}}}}\). Then, there exists a neural network \(\widetilde{\Psi }_{j}\in \mathcal{N}\mathcal{N}_{4,2,1,1}\) such that \(\Psi _{j}({t}) = \zeta _j({t})\), for all \({t} \in {\overline{{\text {I}}}}\) and \(j=1,\dots ,N\). This representation leads to ReLU-NNs of width 2 and depth 3.

3.3 Approximation Properties of ReLU-NNs: h-Galerkin BEM

Based on the result stated in Proposition 3.3 (concerning the exact emulation of the standard \({\mathbb {P}}_1\)-BEM spaces) one may conclude that existing results on the convergence rates of Galerkin BEM are straightforwardly “transferred” to the ReLU Galerkin-BEM framework. In this section, we provide a clear result establishing this connection. Firstly, we recapitulate known approximation results of singular functions on graded partitions.

Proposition 3.4

Set \({\text {I}}= (0,1)\) and consider a function \( u \in {\widetilde{H}}^{\frac{1}{2}}({\text {I}})\) of the form

Assume \(\lambda _0 := \min \{ \textrm{Re}\{\lambda _j\} : j=1,...,J \} \ge s\) for some \(0 < s \le 1\). For a grading parameter \(\beta \ge 1\), denote by \(S^1({\text {I}},{{\mathcal {T}}}_{N,\beta })\) the space of continuous, piecewise linear functions in \({\text {I}}= (0,1)\) on the graded mesh \({{\mathcal {T}}}_{N,\beta }\) characterized by the nodes \(\{ x^{N,\beta }_k := (k/N)^\beta ,\; k=0,1,...,N\}\) in \({\text {I}}\). Then, for every \(\varepsilon > 0\) and \(s\ge 0\) such that \(s < \lambda _0+1/2\) and \(s\le 1\), and with \(I^\beta _N: {{\mathscr {C}}^0}(\overline{{\text {I}}})\rightarrow S^1({\text {I}},{{\mathcal {T}}}_{N,\beta })\) as the nodal interpolant, there holds that

as N grows to infinity. In (3.7), the implied constant depends on \(\beta \), \(\{\lambda _j \}_{j=1,...,J}\), \(\alpha _j\), \({u_0}\) and \(\varepsilon \).

Proof

A proof of this result is provided, for the convenience of the reader, in Appendix A. \(\square \)

We observe that Proposition 3.3 allows for arbitrary locations of the nodes characterizing the mesh \({\mathcal {T}}_{N}\) on \(\Gamma \), while ensuring exact ReLU-NN emulation of the spaces \(S^1(\Gamma ,{\mathcal {T}}_{N})\). The preceding result, therefore, also implies approximation rate bounds for ReLU-NNs. We describe these now.

Theorem 3.5

(Approximation of the Solution to BIEs by ReLU–NNs)

-

(i)

Let \(\phi \in H^{\frac{1}{2}}(\Gamma )\) be the solution of Problem 2.7 on a bounded Lipschitz polygon with boundary \(\Gamma \) characterized by a finite number \(J\ge 3\) of vertices. Assume that \(g\in H^{-\frac{1}{2}}(\Gamma )/{\mathbb {R}}\) in Problem 2.7 is additionally piecewise analytic and let \({\varvec{r}}: {\text {I}}\rightarrow {\mathbb {R}}^2\) be a Lipschitz continuous and piecewise linear parametrization of \(\Gamma \) satisfying \({\varvec{r}}(-1)={\varvec{r}}(1)\) and \({\varvec{r}}'(t) \ne \textbf{0}\) for almost every \(t\in \overline{{\text {I}}}\). Then, there exists \(C>0\) such that for each \(N\in {\mathbb {N}}\) there exists \(\upphi _N \in \mathcal{N}\mathcal{N}_{{2},M,1,1}\) satisfying

$$\begin{aligned} \left\Vert \tau _{{\varvec{r}}}\phi - \upphi _{N} \right\Vert _{{H}^{\frac{1}{2}}({\text {I}})} \le C N^{-\frac{3}{2}}, \end{aligned}$$(3.8)where \(\tau _{{\varvec{r}}}: {H}^{\frac{1}{2}}(\Gamma )\rightarrow {H}^{\frac{1}{2}}({\text {I}})\) denotes the pullback operator introduced in Sect. 3.2 and \(M = {\mathcal {O}}(N)\).

-

(ii)

Let \(\varphi \in {\widetilde{H}}^{\frac{1}{2}}(\Lambda )\) be the solution of Problem 2.12 for \(g \in H^{1}(\Lambda )\), and let \({\varvec{r}}: {\text {I}}\rightarrow {\mathbb {R}}^2\) be a regular parametrization, i.e. \({\varvec{r}}'(t) \ne \textbf{0}\) for \(t\in {\text {I}}\), of the open arc \(\Lambda \subset {\mathbb {R}}^2\). Then, for every \(\varepsilon >0\) there exists \(C(\varepsilon )>0\) (depending on \(\varepsilon >0)\) such that for each \(N\in {\mathbb {N}}\) there exists \(\upvarphi _N \in \mathcal{N}\mathcal{N}_{{2},N,1,1}\) satisfying

$$\begin{aligned} \left\Vert \tau _{{\varvec{r}}}\varphi - \upvarphi _N \right\Vert _{{\widetilde{H}}^{\frac{1}{2}}({\text {I}})} \le C(\varepsilon )N^{-\frac{3}{2}+\varepsilon }, \end{aligned}$$(3.9)where \(\tau _{{\varvec{r}}}: {\widetilde{H}}^{\frac{1}{2}}(\Lambda )\rightarrow {\widetilde{H}}^{\frac{1}{2}}({\text {I}})\) denotes the pullback operator introduced in Sect. 3.2.

Proof

-

(i)

Let \({\varvec{r}}: {\text {I}}\rightarrow \Gamma \) be a Lipschitz continuous and piecewise linear parametrization of \(\Gamma \). Being \(\Gamma \) a bounded Lipschitz polygon with straight sides and defined by \(J\ge 3\) vertices, there exist points \(-1 =t_0<t_1<\cdots <t_J := 1\) satisfying \({\varvec{r}}(t_j) = \textbf{x}_j\), for \(j=0,\cdots ,J\), where we set \(\textbf{x}_0=\textbf{x}_J\). Throughout this proof, we use \({\text {I}}_j {:=}[t_j,t_{j+1}] \subset {\text {I}}\). A piecewise affine parametrization of this polygon is, for instance, given by

$$\begin{aligned} {\varvec{r}}(t) = \frac{t_j-t}{t_{j}-t_{j+1}} \textbf{x}_j + \frac{t-t_{j+1}}{t_{j}-t_{j+1}} \textbf{x}_{j+1}, \quad t\in {\text {I}}_j, \quad j=0,...,J-1. \end{aligned}$$Additionally, for \(j=0,\dots ,J-1\), we define the extension by zero and restriction operators \({\mathcal {E}}_j: {\mathscr {C}}^0({\text {I}}_j) \rightarrow {\mathscr {C}}^0({\text {I}})\) and \({\mathcal {R}}_j: {\mathscr {C}}^0({\text {I}}) \rightarrow {\mathscr {C}}^0({\text {I}}_j)\) as

$$\begin{aligned} {\mathcal {E}}_j(u)(t) = \left\{ \begin{array}{cl} u &{} t\in {\text {I}}_j \\ 0 &{} t\notin {\text {I}}_j \end{array} \right. , \quad \text {a.e. } t \in {\text {I}}, \quad \text {and} \quad {\mathcal {R}}_j(v)(t) = v(t), \quad \text {a.e. } t\in {\text {I}}_j \end{aligned}$$Equipped with this, and according to Proposition 2.8 item (ii), we have that

$$\begin{aligned} \left( \tau _{{\varvec{r}}}\phi \right) (t) = \eta _{j,1}(t) + \eta _{j,2}(t) + \left( \tau _{{\varvec{r}}}\phi _0 \right) (t), \quad t\in {\text {I}}_j. \end{aligned}$$with

$$\begin{aligned} \eta _{j,1}(t) {:=}{\chi _{j,1}(t)} \sum _{k=1}^{n} \beta _{j,k} \frac{\left\Vert \textbf{x}_{j+1}-\textbf{x}_j \right\Vert ^{\alpha _{jk}}}{\left|t_{j+1}-t_j \right|^{\alpha _{j,k}}} \left|t-t_j \right|^{\alpha _{j,k}}, \end{aligned}$$(3.10)and

$$\begin{aligned} \eta _{j,2}(t) {:=}{\chi _{j,2}(t)} \sum _{k=1}^{n} \beta _{j+1,k} \frac{\left\Vert \textbf{x}_{j+1}-\textbf{x}_j \right\Vert ^{\alpha _{j+1,k}}}{\left|t_{j+1}-t_j \right|^{\alpha _{j+1,k}}} \left|t-t_{j+1} \right|^{\alpha _{j+1,k}}\;, \end{aligned}$$(3.11)where \({\chi _{j,1}}, {\chi _{j,2}}: {\text {I}}_j \rightarrow {\mathbb {R}}\) are infinitely differentiable functions, which, furthermore, are identically equal to 1 for \(|t-t_j|<L_j/4\) and \(|t-t_{j+1}|<L_j/4\) (with \(L_j:=|t_{j+1}-t_j|\)), respectively. In addition, \(\chi _{j,1}\) and \(\chi _{j,2}\) vanish for \(|t-t_j|>L_j/2\) and \(|t-t_{j+1}|>L_j/2\), respectively. In (3.10) and (3.11), for each \(k\in {\mathbb {N}}\) and \(j=1,\dots ,J\), we have that \(\frac{3}{2}\frac{\omega _j}{\pi }-1\le n \le \frac{3}{2}\frac{\omega _j}{\pi }\), \(\alpha _{jk}{:=}k \frac{\pi }{ \omega _j}\), \({\mathcal {R}}_j(\tau _{{\varvec{r}}} \phi _0) \in H^{2}({\text {I}}_j)\) and \(\beta _{j,k},\beta _{j+1,k} \in {\mathbb {R}}\), as stated in Proposition 2.8. Observe that in (3.10) and (3.11) we have singularities arising at \(t=t_j\) and \(t=t_{j+1}\). The strength of these singularities is dictated by the inner angles of the polygon at the corresponding vertices. It follows, from Proposition 3.4, with \(s= \frac{1}{2}\) and \(\beta _{j}>\max \left\{ \frac{3}{2\alpha _{j,1}},\frac{3}{2\alpha _{j+1,1}}\right\} \), that there exist \(\eta _{j,1,N}, \eta _{j,2,N} \in S^1({\text {I}}_j,{{\mathcal {T}}}_{N,\beta _j})\) and a constant \(C>0\) independent of N such that

$$\begin{aligned} \left\Vert \eta _{1,j} - \eta _{1,j,N} \right\Vert _{{\widetilde{H}}^\frac{1}{2}({\text {I}}_j)} \le C N^{-\frac{3}{2}}, \quad \text {and} \quad \left\Vert \eta _{2,j} - \eta _{2,j,N} \right\Vert _{{\widetilde{H}}^\frac{1}{2}({\text {I}}_j)} \le C N^{-\frac{3}{2}}, \end{aligned}$$and, in addition, there exists \(\phi _{j,N} \in S^1({\text {I}}_j,{{\mathcal {T}}}_{N,\beta _j})\) such that

$$\begin{aligned} \left\Vert {\mathcal {R}}_j(\tau _{{\varvec{r}}} \phi _0) - \phi _{j,N} \right\Vert _{{\widetilde{H}}^\frac{1}{2}({\text {I}}_j)} \le C N^{-\frac{3}{2}}. \end{aligned}$$Set \(\upphi _{j,N} {:=}\eta _{j,1,N}+ \eta _{j,2,N}+ \phi _{j,N} \in S^1({\text {I}},{{\mathcal {T}}}_{N,\beta _{j}})\) and we have that

$$\begin{aligned} \left\Vert {\mathcal {R}}_j(\tau _{{\varvec{r}}} \phi ) - \upphi _{j,N} \right\Vert _{{\widetilde{H}}^\frac{1}{2}({\text {I}}_j)} \le C N^{-\frac{3}{2}} \end{aligned}$$(3.12)for some positive constant C independent of N. At this point, we make the following observation: It holds that \({\mathcal {R}}_j(\tau _{{\varvec{r}}} \phi ) = \upphi _{j,N}\) on \(\partial {\text {I}}_j\), hence \({\mathcal {E}}_j ({\mathcal {R}}(\tau _{{\varvec{r}}} \phi ) - \upphi _{j,N}) \in H^{\frac{1}{2}}({\text {I}})\) and

$$\begin{aligned} \left\Vert {\mathcal {E}}_j ({\mathcal {R}}(\tau _{{\varvec{r}}} \phi ) - \upphi _{j,N}) \right\Vert _{H^{\frac{1}{2}}({\text {I}})} = \left\Vert {\mathcal {R}}(\tau _{{\varvec{r}}} \phi ) - \upphi _{j,N} \right\Vert _{{\widetilde{H}}^{\frac{1}{2}}({\text {I}}_j)}. \end{aligned}$$Following Proposition 3.3, on each side of the polygon we have constructed a ReLU-NN belonging to \(\mathcal{N}\mathcal{N}_{3,N+2,1,1}\) that approximates \({\mathcal {R}}_j(\tau _{{\varvec{r}}} \phi )\) according to (3.12). In addition, Proposition 3.4 implies that these ReLU-NNs interpolate exactly the value of the solution to Problem 2.7 at the vertices of the polygon. Hence, by defining \(\upphi _{N} {:=}\upphi _{j,N} \) in \({\text {I}}_j\), for \(j=1,\dots ,J\), namely we define \(\upphi _{N} \) to be equal to the previously constructed ReLU on each side of the polygon, we have constructed a ReLU-NN satisfying (3.8). Indeed, we have that

$$\begin{aligned} \left\Vert \tau _{{\varvec{r}}}\phi - \upphi _{N} \right\Vert _{{H}^{\frac{1}{2}}({\text {I}})}\le & {} \sum _{j=0}^{J-1} \left\Vert \left( {\mathcal {E}}_j \circ {\mathcal {R}}_j \right) \left( \tau _{{\varvec{r}}}\phi - \upphi _{N} \right) \right\Vert _{{H}^{\frac{1}{2}}({\text {I}})} \nonumber \\{} & {} = \sum _{j=0}^{J-1} \left\Vert {\mathcal {E}}_j \left( {\mathcal {R}}_j( \tau _{{\varvec{r}}}\phi ) - \upphi _{j,N} \right) \right\Vert _{{\widetilde{H}}^{\frac{1}{2}}({\text {I}}_j)}. \end{aligned}$$This ReLU-NN in particular belongs to \(\mathcal {N}\mathcal {N}_{2,J(N+1),1,1}\), thus concluding the proof of this statement.

-

(ii)

For the sake of simplicity and without loss of generality, we consider \(\Lambda {:=}(-1,1) \times \{0\} \subset {\mathbb {R}}^2\). It follows from Proposition 2.13 that

$$\begin{aligned} \left( \tau _{{\varvec{r}}}\phi \right) (t) {:=}\alpha _1 \left|1+t \right|^\frac{1}{2}\chi _1(t) + \alpha _2 \left|1-t \right|^\frac{1}{2}\chi _2(t) + \check{v}(t), \quad t \in {\text {I}}, \end{aligned}$$where we have used the parametrization \({\varvec{r}}(t) = (t,0)^\top \) of \(\Lambda \subset {\mathbb {R}}^2\), \(\alpha _1,\alpha _2 \in {\mathbb {R}}\), \(\chi _1, \chi _2 \in {\mathscr {C}}^\infty ({\text {I}})\) are fixed cut-off functions with \(\chi _1 = 1\), \(\chi _2 = 1\) in a neighborhood of \(t=-1\) and \(t=1\), respectively, and \(\check{v} \in H^{\frac{1}{2}+\sigma }({\text {I}})\) for \(\sigma \in \left( -\frac{1}{2},\frac{1}{2}\right) \). Set

$$\begin{aligned} \eta _1(t) {:=}\alpha _1 \left|1+t \right|^\frac{1}{2}\chi _1(t), \quad \eta _2(t) {:=}\alpha _2 \left|1-t \right|^\frac{1}{2}\chi _2(t), \quad t\in {\text {I}}. \end{aligned}$$(3.13)Observe that \([0,1] \ni t \mapsto \eta _1(2t-1)\) and \([0,1] \ni t \mapsto \eta _1(-2t+1)\) defined in (3.13) fit the framework of Proposition 3.4 with \(J=1\) and \(\lambda _1 = \frac{1}{2}\). It follows from Proposition 3.4 with \(s= \frac{1}{2}\) that for \(\beta >\frac{2-s}{\lambda _0-(s-1/2)} = 3\) that there exists \(\eta _{1,N}, \eta _{2,N} \in S^1({\text {I}},{{\mathcal {T}}}_{N,\beta })\) and a constant \(C>0\) such that

$$\begin{aligned} \left\Vert \eta _{1} - \eta _{1,N} \right\Vert _{{\widetilde{H}}^\frac{1}{2}({\text {I}})} \le C N^{-\frac{3}{2}}, \quad \text {and} \quad \left\Vert \eta _{2} - \eta _{2,N} \right\Vert _{{\widetilde{H}}^\frac{1}{2}({\text {I}})} \le C N^{-\frac{3}{2}}. \end{aligned}$$In addition, since \(\check{v} \in H^{\frac{1}{2}+\sigma }({\text {I}})\) for for \(\sigma \in \left( -\frac{1}{2},\frac{1}{2}\right) \), for every \(\varepsilon >0\) there exists \(C(\varepsilon )>0\) (depending on \(\varepsilon >0)\) such that for each \(N\in {\mathbb {N}}\) there exists \(\check{v}_N \in S^1({\text {I}},{{\mathcal {T}}}_{N,\beta })\) satisfying

$$\begin{aligned} \left\Vert \check{v} - \check{v}_N \right\Vert _{{\widetilde{H}}^{\frac{1}{2}}({\text {I}})} \le C(\varepsilon )N^{-\frac{3}{2}+\varepsilon }. \end{aligned}$$Observe that by adding \(\eta _{1,N}, \eta _{2,N}, \check{v}_N\in S^1({\text {I}},{{\mathcal {T}}}_{N,\beta })\) and recalling that \(S^1({\text {I}},{{\mathcal {T}}}_{N,\beta })\) is a vector space, we conclude that \(\upvarphi _N {:=}\eta _{1,N}+ \eta _{2,N}+ \check{v}_N \in S^1({\text {I}},{{\mathcal {T}}}_{N,\beta })\) fulfills the estimate in (3.9). According to Proposition 3.3, \(\upvarphi _N \in \mathcal{N}\mathcal{N}_{{2,N+1},1,1}\). However, recalling that \(\upvarphi _N(-1) = \upvarphi _N(1) = 0\), we can discard the basis functions associated to the endpoints of the interval and conclude that \(\upvarphi _N \in \mathcal {N}\mathcal {N}_{2,N,1,1}\).

\(\square \)

4 ReLU Neural Network Galerkin BEM

In this section, we propose two algorithms to construct the ReLU-NNs described in Sect. 3 for the approximation of the solution to BIEs in polygons and arcs, introduced in Sects. 2.3 and 2.5, respectively. Following the representation of the lower-order boundary element spaces as ReLU-NNs elaborated in Sect. 3, we focus only on shallow NNs. The first method, described herein in Sect. 4.1, aims to construct a ReLU-NN by considering as a loss function the total energy of the problem. Indeed, it is well-known that the solution to operators equations involving “elliptic” operators in Hilbert spaces may be cast as minimization problems. We rely on this observation to mathematically justify this algorithm for the construction of the corresponding ReLU-NN. This approach has been previously described for example in [19, Section 7.2] in the context of one-dimensional FEM for the Poisson problem with non-homogeneous Dirichlet boundary conditions, and actually is the key ingredient of the so-called Deep Ritz Method, described in [13, 14, 22, 24]. The second method proposed in this work consists in the construction of a computable loss function based on a posteriori error estimators for BIEs of the first kind. These tools come in different flavors, and we refer to [15] for an extensive review, including their application to the Adaptive BEM. In particular, here we make use of the so-called weighted residual estimators [7,8,9], which have been proven to be reliable, thus providing a computable upper bound for the error of the Galerkin BEM. To date, these are the only ones shown to deliver optimal convergence rates when used in the Adaptive BEM algorithm (ABEM for short). Finally, in Sect. 4.3, we describe precise algorithms for the computations of the ReLU-NNs for the approximation of the solutions to the hypersingular BIEs introduced in Problems 2.7 and 2.12.

4.1 Energy Minimization

Throughout this section, let X be a real Hilbert space equipped with the inner product \(\left( \cdot ,\cdot \right) _X\) and the induced norm \(\left\Vert \cdot \right\Vert _X=\sqrt{\left( \cdot ,\cdot \right) _X}\). In addition, let \(X'\) denote the dual space of X and let \(\left\langle \cdot ,\cdot \right\rangle _X\) represent the duality pairing between \(X'\) and X. As it is customary, we endow \(X'\) with the dual norm

In addition, we say that operator \({\textsf{A}}: X \rightarrow X'\) is X-elliptic if there exists a constant \(C_a>0\) such that

In the following, we recall a well-established result on continuous, self-adjoint and positive semi-definite operators. This property has been previously used in the construction of DNNs in the “Deep Ritz Method” framework introduced in [14].

Lemma 4.1

([34, Lemma 3.2]) Let \({\textsf{A}}: X \rightarrow X'\) be a continuous, self-adjoint and positive semi-definite operator, i.e.

and let \({\textsf{f}}\in X'\). Then, \(u\in X\) solves the variational problem

if and only if

Lemma 4.1 allows us to express Problems 2.7 and 2.12 (as well as their discrete counterparts) as minimization problems over the corresponding Hilbert spaces. Let us define,

where \(g_{\Gamma }\in H^{-\frac{1}{2}}(\Gamma )\) and \(g_{\Lambda }\in H^{-\frac{1}{2}}(\Lambda )\) represent the right-hand sides of the variational BIEs.

For each \(N\in {\mathbb {N}}\), we aim to find ReLU-NNs \(\upphi ^\star _{\Gamma ,N} \in \mathcal{N}\mathcal{N}_{{2},{N+1},1,1}\) and \(\upvarphi ^\star _{\Lambda ,N} \in \mathcal{N}\mathcal{N}_{{2},N,{1},1}\) minimizing the loss functions defined in (4.2) and (4.3), i.e.,

Remark 5

Observe that \(\upvarphi ^\star _{\Lambda ,N}\) must vanish at the boundary of \(\Lambda \). Hence, the width of the family of ReLU-NNs over which we search \(\upvarphi ^\star _{\Lambda ,N}\) in (4.4) is exactly N. In addition, recall that \(\mathcal{N}\mathcal{N}_{{2},{N+1},2,1} \cap H^{\frac{1}{2}}_{{\text {per}}}({\text {I}}) \subset {\mathscr {C}}^0_{{\text {per}}}({\text {I}})\), thus enforcing periodicity of the corresponding of the ReLU-NN in the approximation of the density over a closed curve \(\Gamma \).

Remark 6

While the loss functions (4.2) and (4.3) are applied to the operator \({\textsf{W}}\), similar loss functions can be derived for \({\textsf{V}}\). Consisting of piecewise constant discontinuous functions on \(\Gamma \), the spaces \(S^0(\Gamma ,{{\mathcal {T}}}_N)\) in the Galerkin BEM Problem 2.14 for Symm’s BIE can not be exactly realized via ReLU-NNs. However, with Remark 3, the corresponding loss functions for the Galerkin BEM Problem 2.14 can be realized also with ReLU-NNs on \(\Gamma \). All results on convergence rates for \({\textsf{W}}\) will have, via Remark 3, analogs for \({\textsf{V}}\). For reasons of length, we develop the ReLU Galerkin BEM algorithms only for \({\textsf{W}}\) and loss functions introduced in (4.2) and (4.3).

The following result and accompanying corollaries motivate our choice of loss functions. We use this result to address the construction of ReLU–NNs by solving the minimization problems listed in (4.4).

Lemma 4.2

Let \({\textsf{A}}:X \rightarrow X'\) be a continuous, self-adjoint and X–elliptic operator. Given \(f\in X'\), let \(u\in X\) be the unique solution to the following variational problem:

Define \(\ell : X \rightarrow {\mathbb {R}}\) as

Then, there exist positive constants \(C_1\) and \(C_2\), both independent of \(u\in X\), such that

Proof

Due to the continuity and X-ellipticity of the operator \({\textsf{A}}: X \rightarrow X'\), we may define an equivalent norm to \(\left\Vert \cdot \right\Vert _X\) in X as follows:

Indeed, if \(C_c>0\) denotes the continuity constant of \({\textsf{A}}: X \rightarrow X'\) and \(C_a > 0\) is as in (4.1), for all \(v\in X\) it holds

Let \(u\in X\) be the unique solution to the variational problem (4.5). Then, for all \(v\in X\), we have

For \(v\in X\), we calculate

In (4.8) we use (4.5) and the self-adjointness of the operator \({\textsf{A}}:X \rightarrow X'\). The bounds presented in (4.6), follow from (4.8) and (4.7) with \(C_1=C_a^{-1}>0\) and \(C_2=C_c^{-1}>0\). \(\square \)

The next results, relevant for DNN training, follow from Lemma 4.2 and Proposition 3.3.

Corollary 4.3

Let \(\phi \in {H}^{\frac{1}{2}}(\Gamma )\) be the unique solution to Problem 2.7 with \(g_\Gamma \in H^{-\frac{1}{2}}(\Gamma )/ {\mathbb {R}}\). Then, there exist positive constants \(C_1\) and \(C_2\) (independent of \(\phi \) and g) such that for all \(N\in {\mathbb {N}}\) such that \(N+1>J\) and for all \(\upphi \in \mathcal{N}\mathcal{N}_{{2},N+1,1,1}\), it holds

where \(\ell _\Gamma : H^{\frac{1}{2}}(\Gamma ) \rightarrow {\mathbb {R}}\) is as in (4.2), the ReLU-NN \(\upphi \) is identified with its restriction to \({\text {I}}\) and \(\tau _{{\varvec{r}}}: H^{\frac{1}{2}}(\Gamma )\rightarrow H^{\frac{1}{2}}({\text {I}})\) denotes the pullback operator.

Corollary 4.4

Let \(\varphi \in {\widetilde{H}}^{\frac{1}{2}}(\Lambda )\) be the unique solution to Problem 2.12 with right hand side \(g_\Lambda \in H^{-\frac{1}{2}}(\Lambda )\). Then, there exist positive constants \(C_1\) and \(C_2\) independent of \(\phi \) and g such that for all \(N\in {\mathbb {N}}\) and for all \(\upvarphi \in \mathcal{N}\mathcal{N}_{3,N,1,1} \cap {\widetilde{H}}^{\frac{1}{2}}(\Lambda )\), it holds

where \(\ell _\Lambda : {\widetilde{H}}^{\frac{1}{2}}(\Lambda ) \rightarrow {\mathbb {R}}\) is as in (4.3), the ReLU-NN \(\upvarphi \) is identified with its restriction to \({\text {I}}\) and \(\tau _{{\varvec{r}}}: {\widetilde{H}}^{\frac{1}{2}}(\Lambda )\rightarrow {\widetilde{H}}^{\frac{1}{2}}({\text {I}})\) denotes the pullback operator.

4.2 Weighted Residual Estimators

We shortly recall the so-called weighted residual estimators for the a-posteriori error estimation of the numerical solution to hypersingular BIEs in a bounded Lipchitz polygon and in an open arc in \({\mathbb {R}}^2\), namely Problems 2.7 and 2.12, respectively. We proceed to recapitulate the result of [7], in which reliable a-posteriori error estimates for first-kind integral equations are analyzed.

Proposition 4.5

([7, Theorem 2]) Let \(\Gamma \) be a closed or open arc in \({\mathbb {R}}^2\). If \(f\in L^2(\Gamma )\) is \(L^2(\Gamma )\)–orthogonal to \(S^{1}(\Gamma ,{\mathcal {T}}_N)\), then for \(s \in [0,1]\) it holds

where

\(C_s>0\) only depending on \(s\in [0,1]\), and \(h_{\mathcal {T}} \in L^\infty (\Gamma )\) is the piece-wise constant function defined element-wise as \(\left. h_{\mathcal {T}} \right| _{\Gamma _j} = h_j\).

Corollary 4.6

Let \({\text {D}}\subset {\mathbb {R}}^2\) be a bounded Lipschitz polygon with boundary \(\Gamma {:=}\partial {\text {D}}\), and let \(\Lambda \subset {\mathbb {R}}^2\) be a Jordan arc. Let \(c(\kappa )>0\) denote the constant in (4.9) with \(s= \frac{1}{2}\).

-

(i)

Let \(\phi \in H^{\frac{1}{2}}(\Gamma )\) and \(\phi _N \in S^{1}(\Gamma ,{\mathcal {T}}_N)\) be the solution to Problem 2.7 and Problem 2.17, respectively, with \(g_\Gamma \in L^2(\Gamma )\).

$$\begin{aligned} \left\Vert \phi -\phi _N \right\Vert _{{H}^{\frac{1}{2}}(\Gamma )} \le c(\kappa ) \left\Vert h^\frac{1}{2}_{\mathcal {T}} {\textsf{R}}_N \right\Vert _{L^2(\Gamma )}, \quad {\textsf{R}}_N{:=}{{\textsf{W}}} \phi _N - \left( \frac{1}{2}\textsf{Id}-{\textsf{K}}'\right) g_\Gamma . \end{aligned}$$ -

(ii)

Let \({\varphi } \in {\widetilde{H}}^{\frac{1}{2}}(\Lambda )\) and \(\varphi _N \in S^{1}(\Lambda ,{\mathcal {T}}_N)\) be the solution to Problems 2.12 and Problem 2.15, respectively, with \(g_\Lambda \in L^2(\Lambda )\).

$$\begin{aligned} \left\Vert \varphi -\varphi _N \right\Vert _{{\widetilde{H}}^{\frac{1}{2}}(\Lambda )} \le c(\kappa ) \left\Vert h^\frac{1}{2}_{\mathcal {T}} {\textsf{R}}_N \right\Vert _{L^2(\Lambda )}, \quad {\textsf{R}}_N{:=}{{\textsf{W}}} {\varphi _N}. \end{aligned}$$

Proof

We prove item (i). The Galerkin solution \(\phi _N \in S^{1}(\Gamma ,{\mathcal {T}}_N)\) to Problem 2.15 satisfies

as by construction it holds \(\left\langle \phi _N,1 \right\rangle _{\Gamma } = 0\). Recalling that for bounded Lipschitz polygons the maps \({\textsf{W}}:H^1(\Gamma ) \rightarrow L^2(\Gamma )\) and \({\textsf{K}}':L^2(\Gamma ) \rightarrow L^2(\Gamma )\) are continuous. Considering that \(g_\Lambda \in L^2(\Gamma )\), we have that the duality pairings appearing in (4.10) can be interpreted as \(L^2(\Gamma )\)-inner products. Hence, we have that

and we may conclude that the residual \({\textsf{R}}_N \in L^2(\Gamma )\) is orthogonal to \(S^{1}(\Gamma ,{\mathcal {T}}_N)\). Recall the bilinear form \(\check{{\textsf{a}}}: H^{\frac{1}{2}}(\Gamma ) \times H^{\frac{1}{2}}(\Gamma ) \rightarrow {\mathbb {R}}\) defined in (2.3). There exists an operator \(\check{{\textsf{W}}}: H^{\frac{1}{2}}(\Gamma ) \rightarrow H^{-\frac{1}{2}}(\Gamma )\) such that

Next, using the continuity and \(H^{\frac{1}{2}}(\Gamma )\)-ellipticity of the modified hypersingular BIO \(\check{{\textsf{W}}}:H^\frac{1}{2}(\Gamma ) \rightarrow H^{-\frac{1}{2}}(\Gamma )\), stated in Propositions 2.1 and 2.2, respectively, we conclude that there exists a constant \(C>0\) independent of \({\mathcal {T}}_N\) such that

In addition, \(\left\langle \phi _N,1 \right\rangle _{\Gamma } = 0\) yields \(\check{{\textsf{W}}}\phi _N = {{\textsf{W}}}\phi _N\). In view of this, together with (4.11) and using Theorem 4.5 with \(f=\left( \frac{1}{2}\textsf{Id}-{\textsf{K}}'\right) g-{{\textsf{W}}}\phi _N\), we get the desired result. The proof of item (ii) follows the exact same steps of item (i), hence we omit it for the sake of simplicity. \(\square \)

4.3 Training Algorithms

In this section, we describe two algorithms devised to construct ReLU-NNs for the approximation of the solution to Problems 2.7 and 2.12. These two approaches rely on the following observation: Each element space of piecewise linear polynomials defined on a suitable partition of the boundary can be exactly represented by a ReLU-NN according to Proposition 3.3. Moreover, the parameters of these ReLU-NNs, i.e. weights and biases, can be precisely described in terms of the parameters of the partition, namely the position of the nodes over the boundary. Therefore, we can replace the Galerkin solution to the discrete variational problem by a ReLU-NN as the ones described in Proposition 3.3 and proceed to find its parameters by minimizing a suitable loss function. The algorithms to be presented here are in the spirit of the “Deep Ritz Method” [14] and rely on the tools introduced in Sects. 4.1 and 4.2.

4.3.1 Training Using Minimization of the Total Energy

Corollaries 4.3 and 4.4 provide a justification to use the loss functions \(\ell _\Gamma (\cdot )\) and \(\ell _\Lambda (\cdot )\) in the construction of the sought ReLU-NNs. Indeed, these quantities can be used as surogates of the exact error in the minimization process. Equipped with this observation, we proceed to describe a two-step scheme to find a realization of ReLU-NN that approximates the solution to Problems 2.7 and 2.12 using the aforementioned loss functions.

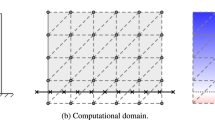

We begin by describing the algorithm for Problem 2.7 only. As per usual, we consider a bounded Lipschitz polygon \({\text {D}}\subset {\mathbb {R}}^2\) with boundary \(\Gamma {:=}\partial {\text {D}}\) characterized by a number \(J \in {\mathbb {N}}\) (\(J\ge 3\)) of vertices. Let \(N\in {\mathbb {N}}\) be fixed and such that \({N+1> J}\). We consider a mesh \({\mathcal {T}}_N\) of \(\Gamma \) as described in Sect. 2.7, with \(N+1\) points \(\{\textbf{x}_n\}_{n=0}^{N}\subset \Gamma \) and where \(\textbf{x}_0=\textbf{x}_{N}\) (i.e. N distinct points). Within the set \(\{\textbf{x}_n\}_{n=0}^{N} \subset \Gamma \) one may identify two kinds of nodes: (i) there is a first subset consisting in the vertices of the polygon \(\Gamma \), which in the the following are referred to as fixed nodes, and (ii) there is a second set of mesh nodes that are not vertices of the polygon, which in the following are referred to as free nodes. Moreover, with a Lipschitz continuous and piecewise linear parametrization of \(\Gamma \), \({\varvec{r}}:{\text {I}}\rightarrow \Gamma \) (as in Sect. 3.2), the set of nodes \(\{\textbf{x}_n\}_{n=0}^{N} \subset \Gamma \) may be identified with a set of biases \({\varvec{t}}:=\{t_n\}_{n=0}^{N}\subset {\text {I}}\) such that \({\varvec{r}}(t_n)=\textbf{x}_n\) for each \(n=0,\ldots ,N\) (see Proposition 3.3). We aim to find the position of the biases in an optimal fashion, while keeping the biases associated with fixed nodes unaltered. Figure 1 illustrates this setting for an open arc in \({\mathbb {R}}^2\).

Let \({\varvec{t}}_{\text {F}} \in {\mathbb {R}}^{{N+1-J}}\) be a vector containing the elements of the biases \(\{{\varvec{t}}_n\}^{N}_{n=0}\) that are associated to the free nodes only and let \(\upphi _N \in \mathcal{N}\mathcal{N}_{3,N+2,1,1}\). In view of Proposition 3.3, there holds

where \(\textbf{c}:=({c_0},\dots ,c_N)^\top \in {\mathbb {R}}^{{N+1}}\) and, for each \(n=1,\dots ,N\), \({\{\zeta _n\}_{n=0}^{N}}\) corresponds to the “hat” functions introduced in Sect. 3.2. Then, in view of Proposition 3.3, the task of finding a ReLU-NN \(\upphi _N\) to approximate the solution of Problem 2.7 may be stated in the following form:

where \(\ell _\Gamma (\cdot )\) has been introduced in (4.2) and by \(\upphi _N({\varvec{t}},\textbf{c})\) we denote the dependence of \(\upphi _N\) upon the biases \({\varvec{t}}\) and the set of weights \(\textbf{c}\). Note our slight abuse of notation by referring to both the vector of coefficients of the BEM solution in (4.12) and to the ReLU-NN weights as \(\textbf{c}\) (see Proposition 3.3).

In Algorithm 1, we propose a two-step scheme for the construction of optimal ReLU-NN in the approximation of the solution to Problem 2.7. This algorithm is based on the following observation: if we fix the free biases \({\varvec{t}}_{\text {F}}\), the minimization of (4.13) for the computation of \(\textbf{c}^\star \in {\mathbb {R}}^N\) boils down to the solution of the Galerkin discretization of Problem 2.7, namely Problem 2.15. Hence, rather than computing the gradient with respect to \({\varvec{t}}_{\text {F}} \in {\mathbb {R}}^{N+2-J}\) and \(\textbf{c} \in {\mathbb {R}}^{N+1}\), and executing a step of the gradient descent algorithm, we perform only a gradient descent step with respect to the vector of free nodes \({\varvec{t}}_{\text {F}} \in {\mathbb {R}}^{N+2-J}\) while keeping that of the coefficients \(\textbf{c} \in {\mathbb {R}}^{N+1}\) fixed. Then, in the second step of this algorithm, we fix the biases and compute \(\textbf{c}^\star \in {\mathbb {R}}^{N+1}\) in (4.13) by solving the discretized boundary integral equation in Problem 2.15.

For given initial set of biases (with a fixed number of biases), Algorithm 1 returns an optimal collection of biases in \(\Gamma \) (with the same cardinality) upon which the loss function \(\ell _\Gamma (\cdot )\) is minimized. This, in turn allows us to construct a ReLU–NN for the approximation of the solution to Problem 2.7 according to Proposition 3.3. To construct a sequence of ReLU–NN with increasing width (corresponding to a sequence of meshes with an increasing number of nodes according to Proposition 3.3) we propose Algorithm 2. Therein, we widen the network by including neurons with (initial) biases given as the midpoints between two contiguous biases, which are subsequently optimized through Algorithm 1.

Through Algorithm 2 we construct a sequence of biases \(\{{\varvec{t}}_{i}^\star \}_{i\in {\mathbb {N}}}\) from a given initial configuration \({\varvec{t}}\) such that the sequence \(\{\ell _{\Gamma }(\Phi ({\varvec{t}}_{i}^\star ,\textbf{c}_i))\}_{i\in {\mathbb {N}}}\) is monotonically decreasing, where \(\textbf{c}_i\) is obtained by solving Problem 2.15 on the mesh \({\mathcal {T}}^\star _{N_i}\) associated with the biases \({\varvec{t}}_{i}^\star \). Greedy algorithms aiming to construct shallow networks for function approximation characterized by a variety of activation function, e.g. the so-called \(\text {ReLU}^{k}\) and sigmoidal activation function, are studied in [33]. Improved convergence rates for the Orthogonal Greedy Algorithm (with respect to the well-known result presented in [4, 12]) depending on the smoothness properties of the activation function of choice are proven. Later in [18], these findings are used for the NN approximation of the solution to elliptic boundary value problem. We mention, however, that considering Algorithm 2 with a sufficiently high number of inner iterations \(M\in {\mathbb {N}}\) (i.e. iterations of Algorithm 1) yields, in our numerical examples, the same convergence rates proved in [18] and the references there. Furthermore, Algorithms 1 and 2 may be modified to tackle the minimization of \(\ell _\Lambda (\cdot )\) in (4.3) by simply replacing, in both algorithms, \(\Gamma \) by \(\Lambda \).

4.3.2 Training Using Weighted Residual Estimators

The result presented in Corollary 4.6 justifies the use of efficiently computable, weighted residual a-posteriori estimators in the construction of a suitable loss function to be used in the numerical implementation of the ReLU–NN BEM algorithms described herein. More precisely, the computable, local residual a-posteriori error estimates presented in Sect. 4.2 can be used as computable surrogates of the mismatch between the exact solution and its Galerkin approximation, in the corresponding norm.

Unlike the approach presented in Sect. 4.3.1, here we do not aim to construct a ReLU-NN to approximate the solution of the BIEs previously described by finding the optimal position of the mesh nodes, which in turn is driven by the minimization of a computable loss function. In turn, the algorithm presented herein aims to greedily select a set of basis functions to enrich the finite dimensional space upon which an approximation of the solution to the BIEs is built. We remark in passing that this approach is strongly related to the adaptive basis viewpoint elaborated in [11]. Due to Proposition 3.3, this amounts to increasing the width of the underlying ReLU-NN every time a new neuron, i.e. basis function, is added.

As in Sect. 4.2, we restrict our presentation to the case of a bounded Lipschitz polygon in \({\mathbb {R}}^2\) and, again, point out that the extension to an open arc is straightforward. The technique to be presented here is an adaptation of the orthogonal matching pursuit algorithm [6], and is also motivated by the recent results in [1, 18], which strongly leverage the variational structure of the discretization scheme.

Let \({\mathcal {S}} \subset H^{\frac{1}{2}}(\Gamma )\) denote a finite dimensional space of functions on \(\Gamma \) and let \(\{\zeta _n\}_{n=1}^{N}\) denote a basis of \(S^1(\Gamma ,{\mathcal {T}}_{N}) \). For each \(\xi \in {\mathcal {S}}\), \(\text{ span }\left( \{\zeta _n\}_{n=1}^{N} \cup \{\xi \}\right) \) is itself a valid finite dimensional space on which a solution to Problem 2.15 may be sought. For given \({\mathcal {S}} \subset H^{\frac{1}{2}}(\Gamma )\), we aim to determine the element \(\xi \in {\mathcal {S}}\) having the least angle with respect to the residual \(\varphi -\varphi _N\). We aim at finding \(\phi ^{\star } \in {\mathcal {S}}\) such that

However, in the computation of \(\phi ^{\star } \in {\mathcal {S}}\) in (4.14) we encounter the following difficulty: We can not directly compute the residual \(\varphi -\varphi _N \in H^{\frac{1}{2}}(\Gamma )\). In view of Corollary 4.6, we use

as a surrogate of the exact residual \(\varphi -\varphi _N\) in the \(H^{\frac{1}{2}}(\Gamma )\)-norm. We proceed to find an element in \({\mathcal {S}}\) such that its contribution to (4.15) has the least angle, in the \(L^2(\Gamma )\)-inner product, with the residual (4.15) itself, i.e., find \(\phi ^\star \in {\mathcal {S}}\) such that

To properly state an algorithm that allows us to construct a ReLU-NN, it remains to define how the set \({\mathcal {S}}\) is constructed at each step. As in Sect. 2.7, we consider a mesh \({\mathcal {T}}_N\) of \(\Gamma \) with \(N+1\) points \(\{\textbf{x}_n\}_{n=0}^{N} \subset {\mathbb {R}}^2\), and where \(\textbf{x}_0=\textbf{x}_{N}\) (i.e. N distinct points). Let \(\textbf{x}_n'\) denote the midpoint between \(\textbf{x}_n\) and \(\textbf{x}_{n+1}\) for each \(n=0,\dots ,N-1\), and consider the set of N piecewise linear functions \({\mathcal {S}}_N {:=}\{\xi _n\}_{n=0}^{N} \subset H^{\frac{1}{2}}(\Gamma )\), defined as

for \(n=0,\dots ,N-1\). In this case, the set \({\mathcal {S}}_N\) gathers the piecewise linear functions one would add to \(S^1(\Gamma ,{\mathcal {T}}_{N})\) if a uniform refinement of the mesh \({\mathcal {T}}_N\) of \(\Gamma \) were to be performed. We aim to select, among the candidates in \({\mathcal {S}}_N\), a function according to (4.16). Furthermore, since the incorporation of a single basis function of the set \({\mathcal {S}}_N\) at each step may result in a needlessly expensive procedure, we allow for the incorporation of a subset of \({\mathcal {S}}_N\) at each step in order to enhance the procedure (as in the ABEM algorithm). We do so by computing

and then selecting a number of elements of \({\mathcal {S}}_N\) having the largest values of \(q_n\). The number of elements of \({\mathcal {S}}_N\) incorporated at each iteration is controlled by an input a parameter \(\theta \in (0,1]\) specifying the fraction of elements of \({\mathcal {S}}_N\) to be included in our enhanced finite dimensional space at each iteration. The procedure is presented in Algorithm 3 for the setting of Problem 2.15 (recall the notation \({\varvec{t}}\) and \({\varvec{c}}\) denoting the biases and weights of the ReLU-NN). Note that Algorithm 3 may be implemented in tandem with Algorithm 1, as presented in Algorithm 4.

Finally, note that setting \(\theta =1\) on Algorithm 4 results in Algorithm 2, while setting \(M=0\) on Algorithm 4 results in Algorithm 3.

5 Numerical Results

In this section we present numerical results obtained using the algorithms described in Sect. 4.

5.1 Setting

For the sake of simplicity, we provide results only for the setting described in Problem 2.12 on the so-called slit \(\Lambda :=(-1,1)\times \{0\} \subset {\mathbb {R}}^2\). For the numerical implementation of the BEM and the evaluation of the weighted residual estimators we use the MATLAB based [25] BEM library HILBERT [2] and custom Fortran codes. We remark that, in the numerical examples presented in Sects. 5.2 and 5.3, we also compare the performance of Algorithm 4 to the built-in adaptive strategies implemented in HILBERT. These codes have been made available in https://gitlab.epfl.ch/fhenriqu/relugalerkinbem.

5.2 Example I

We consider Problem 2.12 with right-hand side \(g=1\) on \(\Lambda \). The exact solution to this problem in \(\Lambda \) is known and given by \(\phi (\textbf{x})={2\sqrt{1-x_1^2}},\; \textbf{x}=(x_1,x_2)^\top \in \Lambda \) (cf. [21, Section 4.2]). As a consequence of Lemma 4.1, \(\ell _\Lambda \) in (4.3) achieves its minimum at \(\phi \), with value