Abstract

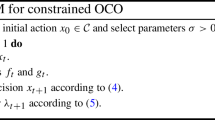

The online optimization problem with non-convex loss functions over a closed convex set, coupled with a set of (possibly non-convex) inequality constraints, is a challenging online learning problem. A proximal method of multipliers with quadratic approximations (named OPMM) is presented to solve this online non-convex optimization problem with long term constraints. Regrets of the violation of the Karush-Kuhn-Tucker conditions of OPMM for solving online non-convex optimization problems are analyzed. Under mild conditions, it is shown that this algorithm exhibits \({{\mathcal {O}}}(T^{-1/8})\) Lagrangian gradient violation regret, \({{\mathcal {O}}}(T^{-1/8})\) constraint violation regret and \({{\mathcal {O}}}(T^{-1/4})\) complementarity residual regret if the parameters in the algorithm are properly chosen, where \(T\) denotes the number of time periods. For the case in which the objective is a convex quadratic function, we demonstrate that the regret of the objective reduction can be established even when the feasible set is non-convex. For the case in which the constraint functions are convex, if the solution of the subproblem in OPMM is obtained by solving its dual, OPMM is proved to be an implementable projection method for solving the online non-convex optimization problem.

Acknowledgements

The authors would like to thank the two reviewers for their valuable suggestions. The research is supported by the National Natural Science Foundation of China (No. 11731013, No. 11971089 and No. 11871135).

Appendix A: Proof of Lemma 6

Proof

Let

then it yields that \( \rho =1- \frac{rt_0}{2}\zeta . \) Define \(\eta (t)=Z_{t+t_0}-Z_t\), then we have \( |\eta (t)|\le t_0 \delta _{\max } \) and hence

From (A1) and the following inequality

we obtain

Case 1: \(Z_t\ge \theta \). In this case, one has from (13) that \(\eta (t) \le -t_0 \zeta \) and hence

Case 2: \(Z_t< \theta \). In this case, one has from (13) that \(\eta (t) \le t_0 \delta _{\max }\) and hence

Combining (A2) and (A3), we obtain

We next prove the following inequality by induction,

We first consider the case \(t \in \{0,1,\ldots , t_0\}\). From \(|Z_{t+1}-Z_t|\le \delta _{\max }\) and \(Z_0=0\) we have \(Z_t \le t \delta _{\max }\). This, together with the fact that \(\frac{e^{r\theta }}{1-\rho }\ge 1\), implies

Hence, (A5) is satisfied for all \(t \in \{0,1,\ldots , t_0\}\). We now assume that (A5) holds true for all \(t \in \{t_0+1,\ldots , \tau \}\) with arbitrary \(\tau > t_0\). Consider \(t=\tau +1\). By (A4), we have

Therefore, the inequality (A5) holds for all \(t\in \{0,1,\ldots \}\). Taking logarithms of both sides of (A5) and dividing by \(r\) yields

The proof is completed. \(\square \)
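The chain of displayed estimates used in this argument can be sketched as follows. This is a reconstruction under stated assumptions, not the authors' verbatim formulas: it assumes the choice \(r=\zeta /(4t_0\delta _{\max }^2)\) (which is consistent with the stated identity \(\rho =1-\frac{rt_0}{2}\zeta \)), that \(0<\zeta \le \delta _{\max }\), and that condition (13) gives the two drift bounds quoted in Case 1 and Case 2. The tags (A1) to (A5) are matched to the way the text refers to them.

```latex
% Assumed (hypothetical) choice of r; rho then agrees with the identity stated in the proof.
\[
r=\frac{\zeta}{4t_0\delta_{\max}^2},\qquad
\rho=1-\frac{\zeta^2}{8\delta_{\max}^2}=1-\frac{rt_0}{2}\zeta .
\]
% Bound on r*eta(t), using |eta(t)| <= t_0*delta_max and zeta <= delta_max.
\begin{align}
|r\eta(t)| &\le rt_0\delta_{\max}=\frac{\zeta}{4\delta_{\max}}\le 1. \tag{A1}
\end{align}
% Elementary inequality e^x <= 1 + x + 2x^2 for |x| <= 1, applied with x = r*eta(t).
\[
e^{r\eta(t)}\le 1+r\eta(t)+2r^2t_0^2\delta_{\max}^2
           =1+r\eta(t)+\frac{rt_0}{2}\zeta .
\]
% Case 1 (Z_t >= theta): eta(t) <= -t_0*zeta.
\begin{align}
e^{rZ_{t+t_0}}=e^{rZ_t}e^{r\eta(t)}
  &\le \Bigl(1-rt_0\zeta+\frac{rt_0}{2}\zeta\Bigr)e^{rZ_t}=\rho\,e^{rZ_t}. \tag{A2}
\end{align}
% Case 2 (Z_t < theta): eta(t) <= t_0*delta_max.
\begin{align}
e^{rZ_{t+t_0}}=e^{rZ_t}e^{r\eta(t)} &\le e^{r\theta}e^{rt_0\delta_{\max}}. \tag{A3}
\end{align}
% Both right-hand sides are nonnegative, so their sum bounds either case.
\begin{align}
e^{rZ_{t+t_0}} &\le \rho\,e^{rZ_t}+e^{rt_0\delta_{\max}}e^{r\theta}. \tag{A4}
\end{align}
% Claim proved by induction.
\begin{align}
e^{rZ_t} &\le \frac{1}{1-\rho}\,e^{rt_0\delta_{\max}}e^{r\theta}. \tag{A5}
\end{align}
% Induction step at t = tau + 1, using (A4) and then (A5) at time tau + 1 - t_0.
\[
e^{rZ_{\tau+1}}\le \rho\,e^{rZ_{\tau+1-t_0}}+e^{rt_0\delta_{\max}}e^{r\theta}
\le \Bigl(\frac{\rho}{1-\rho}+1\Bigr)e^{rt_0\delta_{\max}}e^{r\theta}
=\frac{1}{1-\rho}\,e^{rt_0\delta_{\max}}e^{r\theta}.
\]
% Final bound after taking logarithms and dividing by r.
\[
Z_t\le \theta+t_0\delta_{\max}+\frac{1}{r}\log\frac{1}{1-\rho}
   =\theta+t_0\delta_{\max}+t_0\,\frac{4\delta_{\max}^2}{\zeta}\log\frac{8\delta_{\max}^2}{\zeta^2}.
\]
```

Under the assumed \(r\), the quadratic term satisfies \(2r^2t_0^2\delta _{\max }^2=\frac{rt_0}{2}\zeta \), which is what makes the Case 1 factor collapse to exactly \(\rho \).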

Cite this article

Zhang, L., Liu, H. & Xiao, X. Regrets of proximal method of multipliers for online non-convex optimization with long term constraints. J Glob Optim 85, 61–80 (2023). https://doi.org/10.1007/s10898-022-01196-2

DOI: https://doi.org/10.1007/s10898-022-01196-2