Abstract

Web search engines are increasingly deploying many features, combined using learning to rank techniques. However, various practical questions remain concerning the manner in which learning to rank should be deployed. For instance, a sample of documents with sufficient recall is used, such that re-ranking of the sample by the learned model brings the relevant documents to the top. However, the properties of the document sample, such as when to stop ranking (i.e. its minimum effective size), remain unstudied. Similarly, effective listwise learning to rank techniques minimise a loss function corresponding to a standard information retrieval evaluation measure. However, the appropriate choice of how to calculate the loss function, i.e. the choice of the learning evaluation measure and the rank depth at which this measure should be calculated, is as yet unclear. In this paper, we address all of these issues by formulating various hypotheses and research questions, before performing exhaustive experiments using multiple learning to rank techniques and different types of information needs on the ClueWeb09 and LETOR corpora. Among many conclusions, we find, for instance, that the smallest effective sample for a given query set depends on the type of information need of the queries, the document representation used during sampling and the test evaluation measure. As the sample size is varied, the selected features change markedly; for instance, we find that link analysis features are favoured for smaller document samples. Moreover, despite reflecting a more realistic user model, the recently proposed ERR measure is not as effective as the traditional NDCG as a learning loss function. Overall, our comprehensive experiments provide the first empirical derivation of best practices for learning to rank deployments.


1 Introduction

Learning to rank (Liu 2009) is gaining increasing attention in information retrieval (IR), with machine learning techniques being used to learn an appropriate combination of features into an effective ranking model. An increasing amount of research is devoted to developing efficient and effective learning techniques, while major search engines reportedly deploy ranking models consisting of hundreds of features (Pederson 2010; Segalovich 2010).

Nevertheless, the manner in which learning to rank is deployed within real settings has not been the subject of published discussion. For instance, learning to rank involves the use of a sample of top-ranked documents for a given query (Liu 2009), which are then re-ranked by the learned model before display to the user. However, in the literature, the properties of an effective sample are not clear. Indeed, despite his thorough treatment of existing learning to rank techniques, Liu (2009) does not address in detail how the sample should be made within an existing deployment, what document representation should be used when generating the sample (e.g. in addition to the body of the document, should anchor text be included or not?), nor how many documents it should contain.

Typically, a standard weighting model, such as BM25 (Robertson et al. 1992), is used to rank enough documents to obtain sufficient recall. Yet there is great variation in the literature and in existing test collections regarding how many documents should be re-ranked when learning models or deploying previously learned models: large sample sizes such as “tens of thousands” (Chapelle et al. 2011), 5,000 (Craswell et al. 2010) and 1,000 (Qin et al. 2009) are observed, as well as small samples such as 200 (Zhang et al. 2009) or even 20 (Chapelle and Chang 2011). However, as we will show in this paper, such small samples can result in learned models with significantly degraded effectiveness.

On the other hand, reducing the size of the sample has various efficiency benefits. In particular, if document-at-a-time (DAAT) matching techniques such as Wand (Broder et al. 2003) are used to identify the sample of K documents within the search engine, then using a smaller K (i.e. a smaller sample) can markedly increase efficiency compared to a larger K (Broder et al. 2003). Moreover, fewer feature computations are needed for smaller K. Lastly, for environments where learning time is critical, the use of smaller samples markedly reduces the learning time of many learning to rank techniques, such as AFS (Metzler 2007) and RankBoost (Freund et al. 2003).

Another issue concerns the loss function that learning to rank techniques deploy to produce effective models. In particular, listwise techniques, which directly use an IR evaluation measure as the loss function, are often the most effective (Liu 2009). However, the choice of this learning evaluation measure and the rank cutoff to which it is computed during learning may have a noticeable impact on the effectiveness of the learned model on unseen data. Nonetheless, there has not been much work on such issues. For instance, while Robertson (2008) provided theoretical speculations, Donmez et al. (2009) observed that matching the learning measure with the test measure results in the best test accuracy in the presence of “enough” training data. This was contradicted by Yilmaz and Robertson (2010), who observed that “enough” training data may not always be present. Overall, the lack of agreement in the existing literature suggests the necessity of a thorough empirical study.

Our work is the first study into best practices in a real deployment of learning to rank. In particular, the contributions of this work are threefold:

1. We propose an experimental methodology for investigating the size of the document sample as well as the choice and the rank cutoff of the evaluation measure deployed during learning;

2. We thoroughly experiment with multiple learning to rank techniques across several query sets covering both diverse information needs and corpora, namely the existing LETOR v3.0 GOV learning to rank test collection and the TREC Web track ClueWeb09 corpus;

3. From these experiments, we derive empirically identified recommendations on the sample size and the learning evaluation measure. In particular, three research themes are addressed in this paper, namely: the properties of the sample; the role of the learning evaluation measure; and the interaction between the learning evaluation measure cutoff and the sample size.

Through exhaustive experimentation across these three research themes, we investigate how the effectiveness of the learned model is affected by:

- the document representation used to generate the sample and the size of the sample;
- the learning to rank technique and the sample size;
- the type of information need and the sample size;
- the learning evaluation measure;
- the rank cutoff of the learning evaluation measure.

Among many conclusions, we find, for instance, that on the larger ClueWeb09 corpus the minimum effective sample can be as small as 10–20 documents for the TREC 2009 and 2010 Web track queries. However, surprisingly, a sample size of 1,500 documents is necessary to ensure effective retrieval for navigational information needs on the same corpus. Moreover, for the same navigational information needs, we also show the importance of including anchor text within the document representation used to generate the sample. Finally, the test evaluation measure is shown to be important, as evaluation by the ERR cascade measure (Chapelle et al. 2009) (which penalises redundancy) permits smaller effective samples than other measures such as NDCG (Järvelin and Kekäläinen 2002) and MAP (Buckley and Voorhees 2000). As for the choice of the learning loss function, despite reflecting a more realistic user model, ERR is not as effective as NDCG, even when ERR is the target test measure. Indeed, our results provide additional insights into the ongoing debate, mentioned above, on whether the test measure is actually the most suitable measure for learning.

The remainder of the paper is structured as follows: Sect. 2 discusses related work and elaborates on the research themes that we study in this article; Sect. 3 describes the proposed experimental methodology that allows hypotheses concerning our research themes for learning to rank techniques to be validated; Sect. 4 defines the experimental setup; experimental results and analysis follow in Sect. 5; and concluding remarks are made in Sect. 6.

2 Problem definitions

Learning to rank is the application of machine learning techniques to generate a learned model combining different document features in an information retrieval system (Liu 2009). For instance, learning to rank techniques are often applied by Web search engines to combine various document weighting models and other query-independent features (Pederson 2008). The form of the generated model differs across learning to rank techniques: for some, it is a vector of weights for linearly combining each feature (Metzler 2007; Xu and Li 2007); for others, it can represent a learned neural network (Burges et al. 2005) or a series of regression trees (Weinberger et al. 2010). Regardless of the applied technique, the general steps for obtaining a learned model using a learning to rank technique are the following (Liu 2009):

0. Pooling: For each query in a training set, documents for which human relevance assessments are obtained are identified using a pooling methodology. By combining a diverse set of retrieval systems for each query (Voorhees and Harman 2005), a high quality pool can be obtained.

1. Top K Retrieval: For a set of training queries, generate a sample of documents using an initial retrieval approach.

2. Feature Extraction: For each document in the sample, extract a vector of feature values. A feature is a binary or numerical indicator representing the quality of a document, or its relation to the query.

3. Learning: Learn a model by applying a learning to rank technique. Each technique deploys a different loss function to estimate the goodness of various combinations of features. Documents are labelled according to the relevance assessments identified in step (0).
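A minimal sketch of how the learning data of steps (1) to (3) might be assembled is given below. The names `build_training_data`, `sample`, `qrels` and `extract` are hypothetical, and we assume, as is common with TREC-style assessments, that documents in the sample without an assessment are labelled 0 (irrelevant), and that no relevant documents are added beyond those retrieved in step (1).

```python
from typing import Callable, Dict, List, Sequence, Tuple

Sample = Dict[str, List[str]]            # qid -> ranked docids from step (1)
Qrels = Dict[Tuple[str, str], int]       # (qid, docid) -> graded label from step (0)
Row = Tuple[str, str, List[float], int]  # (qid, docid, features, label)


def build_training_data(sample: Sample, qrels: Qrels,
                        extract: Callable[[str, str], Sequence[float]]) -> List[Row]:
    """Attach a feature vector (step 2) and a relevance label to every sampled document."""
    rows: List[Row] = []
    for qid, docids in sample.items():
        for docid in docids:
            # Assumption: unassessed documents in the sample are treated as irrelevant.
            label = qrels.get((qid, docid), 0)
            rows.append((qid, docid, list(extract(qid, docid)), label))
    return rows


# Toy usage: d9 is relevant but absent from the sample, so it is NOT added.
sample = {"q1": ["d3", "d1", "d7"]}
qrels = {("q1", "d1"): 2, ("q1", "d9"): 1}
rows = build_training_data(sample, qrels, lambda q, d: [float(len(d))])
print([(docid, label) for _, docid, _, label in rows])  # [('d3', 0), ('d1', 2), ('d7', 0)]
```

Only documents retrieved in step (1) enter the learning data, which mirrors how the sample will later be generated for unseen queries.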

In practice, step (0) may have been performed separately—for instance, by reusing relevance assessments created as part of the TREC evaluation forum—or can be integrated into the general learning process, using active learning approaches to select additional documents to be assessed (Donmez and Carbonell 2009; Long et al. 2010).

Once a learned model has been obtained from the above learning steps, it can be deployed within a search engine as follows:

4. Top K Retrieval: For an unseen test query, a sample of documents is generated in the same manner as in step (1).

5. Feature Extraction: As in step (2), a vector of feature values is extracted for each document in the sample. The set of features should be exactly the same as for step (2).

6. Learned Model Application: The final ranking of documents for the query is obtained by applying the learned model on every document in the sample, and sorting by descending predicted score.
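The deployment steps (4) to (6) can be sketched as follows, assuming a linear learned model (a weight vector, as produced by linear techniques such as AFS) and a hypothetical `first_stage` function returning the top K docids from a single weighting model such as BM25. All names and values are illustrative rather than part of any particular search engine.

```python
from typing import Callable, List, Sequence, Tuple


def rerank(query: str,
           first_stage: Callable[[str, int], List[str]],
           extract: Callable[[str, str], Sequence[float]],
           weights: Sequence[float],
           k: int) -> List[Tuple[str, float]]:
    """Steps (4)-(6) for a linear learned model."""
    sample = first_stage(query, k)                 # step (4): top-K sample
    scored = []
    for docid in sample:
        features = extract(query, docid)           # step (5): same features as in learning
        if len(features) != len(weights):
            raise ValueError("feature vector does not match the learned model")
        score = sum(w * x for w, x in zip(weights, features))  # step (6): dot product
        scored.append((docid, score))
    scored.sort(key=lambda pair: pair[1], reverse=True)        # descending predicted score
    return scored


# Toy usage with a hypothetical first-stage ranking and feature values.
ranking = ["a", "b", "c"]
feats = {"a": [1.0, 0.0], "b": [0.5, 1.0], "c": [0.2, 0.1]}
result = rerank("q", lambda q, k: ranking[:k], lambda q, d: feats[d], [1.0, 1.0], k=3)
print([d for d, _ in result])  # ['b', 'a', 'c']
```

Documents outside the top K can never be promoted by the learned model, which is why the recall of the sample matters.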

Figure 1 illustrates steps (4)–(6) of deploying a learned model in a search engine setting.

Of all seven steps, feature extraction [steps (2) and (5)] defines the features deployed by the search engine. As there are countless possible features in the IR literature, we assume a standard set of features; therefore, the efficient and effective calculation of these features is not considered in this discussion. On the other hand, step (6) is a straightforward application of the learned model to the vectors of feature values for each document in the sample (e.g. calculating the dot product of the document’s feature values with the feature weights of the learned model to obtain the final document score), and hence there is little that can be altered to vary efficiency or effectiveness. Therefore, in this paper, we concentrate on the generation of the sample [steps (1) and (4)] and on the actual learning process [step (3)]. In particular, the generation of a sample in step (1) is important since, for efficiency reasons, it is impractical to apply learning to rank to the entire corpus, or even to the union of all documents containing any query term. Moreover, efficiency benefits may be obtained by minimising the size of the sample, both during learning and application.

However, the appropriate properties of the sample have not been the subject of much investigation. Moreover, the effectiveness of the model generated in step (3) is of utmost importance. Indeed, learning to rank techniques that deploy standard IR evaluation measures as their loss functions are often among the most effective (Liu 2009). However, while the effectiveness of the learned model is reported to depend on the choice of the measure (Robertson 2008), this aspect has not seen much empirical treatment within the learning to rank literature and, as mentioned in Sect. 1, has even produced inconsistent observations (Donmez et al. 2009; Yilmaz and Robertson 2010). In the following, we expand on both the role of the sample (Sect. 2.1) and the role of the learning evaluation measure (Sect. 2.2), to form research themes around which hypotheses and research questions are postulated. The hypotheses and research questions of each research theme are later investigated in Sect. 5.

2.1 Sample

In this section, we define the motivations for sampling (Sect. 2.1.1) and review the manner in which sampling is performed in the literature (Sect. 2.1.2). We also review the tradeoff between the quality and the size of the sample (Sect. 2.1.3), as well as how the sample has been obtained in existing learning to rank test collections (Sect. 2.1.4). We use this discussion to motivate several hypotheses and research questions, which are defined in Sect. 2.1.4.

2.1.1 Why sample?

The sample is a set of documents collected for each query, before learning [step (1)] or before applying a learned model [step (4)]. The sample is primarily motivated by the efficient application of a learned model, but it also has particular uses during learning, as detailed below. In the following, we provide the motivations for the use of sampling, ordered by their importance, across steps (4), (1) and (0).

Sampling for Applying a Learned Model [step (4)]: As mentioned above, a sample is used during the application of a learned model to reduce the number of documents for which features are calculated (Liu 2009), by using an initial ranking approach to identify a set of documents that are likely to contain the relevant documents [Liu (2009) refers to the sample as a set of “possibly relevant documents”]. Minimising the number of documents for which features are calculated provides efficiency advantages, particularly if some features are expensive to compute [e.g. proximity features (Metzler and Croft 2005)]. Moreover, when a document-at-a-time (DAAT) retrieval strategy such as Wand (Broder et al. 2003) is used to identify the sample of documents in step (4), a smaller sample benefits efficiency, as it permits Wand to skip the scoring of more documents that are unlikely to make the final sample (Broder et al. 2003).
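Why a smaller K helps DAAT pruning can be illustrated with a simplified sketch. It is not Wand itself: we assume each document comes with a precomputed upper bound on its score (Wand instead derives bounds from per-term maxima and uses a pivoting step), and a document is only fully scored if its upper bound exceeds the score of the current K-th best document. The function name `daat_topk` and the posting data are invented for illustration.

```python
import heapq
from typing import List, Tuple


def daat_topk(postings: List[Tuple[int, float, float]],
              k: int) -> Tuple[List[Tuple[float, int]], int]:
    """postings: (docid, upper_bound, true_score) in docid order.
    Returns the top-k (score, docid) pairs and the number of full evaluations."""
    heap: List[Tuple[float, int]] = []   # min-heap holding the current top-k
    evaluated = 0
    for docid, upper, true_score in postings:
        threshold = heap[0][0] if len(heap) == k else float("-inf")
        if upper <= threshold:
            continue                     # cannot enter the top-k: skip expensive scoring
        evaluated += 1                   # full (expensive) scoring happens here
        if len(heap) < k:
            heapq.heappush(heap, (true_score, docid))
        elif true_score > heap[0][0]:
            heapq.heapreplace(heap, (true_score, docid))
    return sorted(heap, reverse=True), evaluated


# Invented scores; each upper bound is the true score plus 1.0.
scores = [5.0, 1.0, 7.0, 2.0, 9.0, 3.0, 4.0, 8.0, 0.0, 6.0]
postings = [(d, s + 1.0, s) for d, s in enumerate(scores)]
for k in (1, 5):
    top, evaluated = daat_topk(postings, k)
    print(k, evaluated)  # prints "1 3", then "5 9"
```

With K = 1 only 3 of the 10 documents are fully scored, whereas K = 5 requires 9, because the threshold to enter a larger top-K stays lower for longer.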

Sampling for Learning [step (1)]: The use of a sample in learning has some similar motivations to its use within a deployed search engine. Indeed, even during learning, it is impractical to extract feature vectors for all documents in the corpus (Liu 2009). Following other supervised learning tasks such as classification and regression, a sample constitutes representative training data from which an effective learned model can be obtained. However, in contrast to the other supervised tasks, in learning to rank, it is not the case that every example document in the training data is independent and identically distributed (i.i.d.). Indeed, the documents associated with each query in the sample form a group: the groups are i.i.d., but the documents within a group are not (Li 2011). Instead, the documents should be identified in a deterministic manner (Li 2011). Finally, the learning time of many learning to rank techniques [step (3)] increases as the number of documents in the sample increases. Indeed, Fig. 2 shows the learning time for several learning to rank techniques as the sample size increases. For instance, for the AFS learning to rank technique (Metzler 2007) using NDCG@10 as the learning evaluation measure, the learning time for a sample of 2,000 documents is twice that for 1,000 documents (1,208 seconds vs. 604 seconds). Hence, if the resources available for offline learning are limited, it may be desirable to reduce the learning time by constraining the size of the sample, provided that there is no resulting loss in effectiveness when using a smaller sample.
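Because the query groups, rather than individual documents, are the i.i.d. units, any split of the data into training and test portions (e.g. for cross-validation) should keep each query's sample intact. A minimal sketch, with a hypothetical `split_by_query` helper and toy rows:

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple

Row = Tuple[str, str, int]  # (qid, docid, label)


def split_by_query(rows: List[Row], test_fraction: float,
                   seed: int = 0) -> Tuple[List[Row], List[Row]]:
    """Split at the query level so no query's documents appear on both sides."""
    groups: Dict[str, List[Row]] = defaultdict(list)
    for row in rows:
        groups[row[0]].append(row)
    qids = sorted(groups)
    random.Random(seed).shuffle(qids)             # shuffle queries, not documents
    n_test = max(1, round(len(qids) * test_fraction))
    test_qids = set(qids[:n_test])
    train = [r for q in qids if q not in test_qids for r in groups[q]]
    test = [r for q in qids if q in test_qids for r in groups[q]]
    return train, test


# Toy data: 4 queries with 3 sampled documents each.
rows = [(f"q{q}", f"d{d}", int(d == 0)) for q in range(4) for d in range(3)]
train, test = split_by_query(rows, test_fraction=0.25)
print(len(train), len(test))  # 9 3
```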

Sampling for Pooling [step (0)]: Some works from the literature [e.g. Aslam et al. (2009)] merge the notion of sampling for learning [step (1)] with sampling for producing relevance assessments [step (0)]. In particular, the building of a high quality test collection involves the use of multiple different retrieval systems contributing documents to an assessment pool for each query. Each document in the pool is then assessed for its relevance. In this work, we assume that high quality relevance assessments are already available. In this way, we restrict the notion of sampling to refer only to the identification of documents during steps (1) and (4) above, and not to the identification of those that should be assessed for relevance before learning can occur.

2.1.2 How to sample?

Different strategies can be used to create the sample. For instance, in the LETOR v3.0 learning to rank test collection (Qin et al. 2009), the top 1,000 documents are sampled using BM25 computed on a document representation that includes the body of the documents and the anchor text of their incoming hyperlinks. Cambazoglu et al. (2010) also used BM25 to create the sample. Liu (2009) does not provide any more details on how the sample should be obtained, but simply states that while using BM25 alone to produce the sample is sufficient, it may not be the best approach. Hence, there are no empirically established guidelines on how to sample, nor on the properties of an effective sample for deploying learning to rank within a search engine. In this work, we investigate the use of anchor text in the document representation used to generate the sample, to determine its impact on the recall of the sample and on the effectiveness of the resulting learned models.
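To make the choice of document representation concrete, the following sketch generates a top-K sample with BM25 over either the body alone or the body concatenated with incoming anchor text. It is a simplification under stated assumptions: anchor text is simply appended to the body tokens (field-aware variants such as BM25F weight fields separately), the idf uses the non-negative form log((N - df + 0.5)/(df + 0.5) + 1), k1 = 1.2 and b = 0.75 are common defaults, and the helper names and toy documents are invented.

```python
import math
from collections import Counter
from typing import Dict, List, Tuple


def bm25_sample(query: List[str], docs: Dict[str, List[str]], k: int,
                k1: float = 1.2, b: float = 0.75) -> List[Tuple[str, float]]:
    """Top-k (docid, BM25 score) pairs; documents matching no query term are excluded."""
    n = len(docs)
    avgdl = sum(len(toks) for toks in docs.values()) / n
    df = Counter(t for toks in docs.values() for t in set(toks))
    scores = {}
    for docid, toks in docs.items():
        tf = Counter(toks)
        s = 0.0
        for t in query:
            if tf[t] == 0:
                continue
            idf = math.log((n - df[t] + 0.5) / (df[t] + 0.5) + 1.0)
            norm = k1 * (1 - b + b * len(toks) / avgdl)   # document length normalisation
            s += idf * tf[t] * (k1 + 1) / (tf[t] + norm)
        if s > 0:
            scores[docid] = s
    return sorted(scores.items(), key=lambda p: (-p[1], p[0]))[:k]


def representation(body: Dict[str, List[str]], anchors: Dict[str, List[str]],
                   use_anchor: bool) -> Dict[str, List[str]]:
    """Body tokens, optionally followed by the anchor text of incoming links."""
    return {d: toks + (anchors.get(d, []) if use_anchor else []) for d, toks in body.items()}


body = {"home": ["welcome", "students", "staff"],
        "wiki": ["university", "glasgow", "history", "scotland"],
        "news": ["glasgow", "weather", "today"],
        "misc": ["recipes", "cooking"]}
anchors = {"home": ["university", "glasgow", "university", "glasgow"]}
query = ["university", "glasgow"]
for use_anchor in (False, True):
    docs = representation(body, anchors, use_anchor)
    print(use_anchor, [d for d, _ in bm25_sample(query, docs, k=2)])
# False ['wiki', 'news']
# True ['home', 'wiki']
```

In this toy case the home page, whose body never mentions the query terms, only enters the sample when anchor text is part of the representation.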

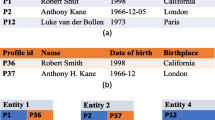

In the earlier LETOR v2.0 test collection, the sample included relevant documents from the pooled TREC relevance assessments, in addition to the top-ranked documents according to BM25. However, Minka and Robertson (2008) noted a bias in this document sampling strategy. In particular, they observed that a learning to rank technique found a negative feature weight for BM25 to be effective, as this allowed relevant documents not present in the BM25 sample to be easily identified. However, such a learned model would not be effective on a sample obtained using BM25 alone, as it would highly rank documents with low BM25 scores, even though these are likely to be irrelevant. To illustrate this sample selection bias, Table 1 provides an example learning sample with features, where a selection of top-ranked documents has been identified using the BM25 weighting model within step (1). Two relevant documents (docids 4 and 7) have been added to the sample, in the hope that their presence will permit the learning to rank technique to learn how to rank these documents highly. However, their presence can bias the obtained learned model, which may learn that these relevant documents can be ranked highly simply by favouring the documents with the lowest BM25 scores. As explained above, such a model would perform poorly on a sample obtained in step (4) using BM25 alone.
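The bias can be reproduced with a deliberately tiny, invented example (the values below are hypothetical and are not those of Table 1). Each document is reduced to its BM25 score and a binary label, and a toy "learner" merely picks the sign of the BM25 weight that maximises precision at rank 1 on the training sample; real learning to rank techniques are of course far richer.

```python
from typing import List, Tuple

Doc = Tuple[str, float, int]  # (docid, BM25 score, relevance label); invented values


def precision_at_1(docs: List[Doc], w_bm25: float) -> float:
    top = max(docs, key=lambda d: w_bm25 * d[1])   # document with the highest model score
    return float(top[2] > 0)


def learn_sign(train: List[Doc]) -> float:
    """Toy learner: choose the BM25 weight (+1 or -1) with the best training P@1."""
    return max((1.0, -1.0), key=lambda w: precision_at_1(train, w))


# Training sample: BM25 top-ranked (irrelevant) documents plus two injected relevant ones.
train = [("a", 12.1, 0), ("b", 11.4, 0), ("c", 10.8, 0),
         ("inj1", 2.3, 1), ("inj2", 1.7, 1)]
# Test sample as in step (4): BM25 top-ranked documents only.
test = [("t1", 13.0, 1), ("t2", 11.0, 0), ("t3", 9.5, 1), ("t4", 8.1, 0)]

w = learn_sign(train)
print(w, precision_at_1(test, w), precision_at_1(test, 1.0))  # -1.0 0.0 1.0
```

The negative weight looks perfect on the biased training sample, yet on the BM25-only test sample it places the lowest-scoring (here irrelevant) document first.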

Other works in the literature have made use of active learning to identify the sample during learning (Donmez and Carbonell 2009; Long et al. 2010). By adapting approaches previously used for sampling examples for learning classifiers, they showed that effective models can be obtained with fewer documents considered during learning. However, neither of these works examines how the properties of the sample are affected, i.e. whether the distribution of documents in the training samples remains comparable to that observed in the sample on which the learned model will be applied, so that sample selection bias does not occur. In this work, our realistic setting always ensures that we obtain learned models from training samples that are identified in the same manner as the samples for test queries. For this reason, we do not consider it advantageous to sub-sample the learning samples, e.g. using active learning approaches, as their impact on the generality of the models remains unclear.

Aslam et al. (2009) compared different strategies for sampling documents, by combining sampling for pooling and sampling for learning [steps (0) and (1)]. In particular, strategies that pooled documents from multiple different retrieval systems (in this case, participating systems in TREC 6–8) were found to generate high quality document samples. However, we argue that using multiple retrieval systems to generate the sample for learning may incur a similar bias to that reported by Minka and Robertson (2008) when the learned model is deployed using features obtained from a single system. Moreover, such strategies are not re-usable in a deployed search engine setting, as multiple different systems are not necessarily available with which to generate the sample. In this respect, Chapelle et al. (2011) noted that such approaches from the learning to rank literature only consider “an offline reranking scenario”. Naturally, the approach of Aslam et al. (2009) could potentially be emulated using multiple retrieval models, instead of multiple systems, to generate the sample. However, in practice, it is the diversity of entirely different retrieval systems, rather than of different retrieval models, that contributes to the quality of a test collection pool (Beitzel et al. 2004; Zobel 1998).

In this work, we aim to learn an effective model for a given search engine. For this reason, we assume a realistic scenario, as identified from the literature, which we summarise as follows: the sample for applying the learned model [step (4)] should be generated by only a single feature (e.g. BM25) from the single system at hand (Chapelle et al. 2011); the sample for learning [step (1)] should have the same number of documents as for applying the learned model and be generated using the same method (Liu 2009), to ensure a similar distribution of documents in the training and test samples; and sampling for pooling [step (0)] has occurred independently of the learning to rank process, such that relevance assessments are already available for the documents in the sample, while, to avoid sample selection bias, no additional relevant documents are added to the sample (Minka and Robertson 2008).

2.1.3 Quality versus quantity

It is intuitive that the strategy used to create the sample of documents to re-rank will impact on the effectiveness of the learned model. In particular, to maximise the effectiveness of the learned model, the recall of the sample should be as high as possible, as this maximises the potential for the learned model to promote the relevant documents to the top of the ranking. To achieve this objective, two alternative strategies are possible: either the approach used to generate the sample should be as effective as possible, or the sample should be large enough to achieve sufficient recall.

The natural way to improve the effectiveness of the sample would be to introduce more features into the ranking process, such that a sample with higher recall for the same number of documents is obtained. However, this induces a recursive application of learning to rank, i.e. learning to rank would be required to generate a sample on which to apply learning to rank.

Instead, as discussed in Sect. 2.1.2, the normal practice is to select many documents ranked by a standard weighting model (e.g. BM25) (Cambazoglu et al. 2010; Qin et al. 2009). By considering a large number of documents for the sample, the recall of the sample is increased, and it is hoped that the learned model can rank highly those relevant documents that would not have been present in a smaller sample. However, larger samples typically contain more irrelevant documents, with the proportion of newly identified relevant documents to irrelevant ones diminishing as the size of the sample increases. For some learning to rank techniques, we postulate that the high imbalance between relevant and irrelevant documents brought by a large sample may hinder the learning of an effective model.
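The trade-off between the recall of the sample and the proportion of relevant documents within it can be quantified per query with a small helper. The function name `sample_stats`, the ranking and the relevant set below are invented for illustration.

```python
from typing import Dict, List, Set


def sample_stats(ranking: List[str], relevant: Set[str],
                 cutoffs: List[int]) -> Dict[int, Dict[str, float]]:
    """Recall of the top-k sample and the fraction of it that is relevant, per cutoff k."""
    stats = {}
    for k in cutoffs:
        top = ranking[:k]
        hits = sum(1 for d in top if d in relevant)
        stats[k] = {"recall": hits / len(relevant) if relevant else 0.0,
                    "relevant_fraction": hits / len(top) if top else 0.0}
    return stats


ranking = [f"d{i}" for i in range(100)]
relevant = {"d1", "d4", "d9", "d30", "d75"}
for k, s in sample_stats(ranking, relevant, [10, 50, 100]).items():
    print(k, s["recall"], s["relevant_fraction"])
# 10 0.6 0.3
# 50 0.8 0.08
# 100 1.0 0.05
```

Growing the sample from 10 to 100 documents raises recall from 0.6 to 1.0, while the relevant fraction falls from 0.3 to 0.05.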

In terms of the quality of the document sample, we consider that the choice of the document representation used for obtaining the sample may have an impact upon effectiveness. In particular, adding anchor text to the document representation can alleviate the vocabulary mismatch problem (Plachouras 2006), while also permitting easier identification of the relevant documents for navigational queries (Hawking et al. 2004). However, adding anchor text to the document representation used to generate the sample can significantly change the documents retrieved in the sample, and may not lead to similar improvements for non-navigational queries (Plachouras 2006).

In summary, a smaller, more precise sample could be used, but obtaining one boils down to iterative applications of learning to rank. Instead, a larger, less precise sample can contain the same relevant documents as a smaller, more precise sample. However, a larger sample comes at the expense of additional irrelevant documents and decreased efficiency, in terms of deployment and learning times (as illustrated by Fig. 2). Hence, in the next section, we discuss how the size of the sample has been addressed in previous works.

2.1.4 How much quantity?

Assuming that the sample should be generated by a single system using a standard weighting model—as per Sect. 2.1.2—we aim to determine how many documents should be considered in the sample to obtain sufficient recall to allow effective learned models to be obtained.

In general, recall has received less attention within IR, primarily because precision is deemed the most important aspect in many search applications. Notable exceptions are the types of information needs present within the patent (Piroi and Zenz 2011) and legal search (Tomlinson and Hedin 2011) domains, where recall is an overriding concern. To this end, models are being developed to determine when to terminate a ranked list while ensuring recall (Arampatzis et al. 2009).

To provide an overview of the sample size used in the literature for various types of information needs, Table 2 quantifies the samples in existing learning to rank test collections. It also reports the type of information needs addressed in each query set, in terms of informational (denoted Inf.), or navigational (Nav.). For some test collections, the particular information need addressed is not specified in the associated literature (Unspec.).

From Table 2 and the literature, we observe that two ranges of sample size are commonly used:

- Large Samples (≥ 1,000 documents): Samples of 1,000 documents are used by the early LETOR test collections (v3.0 and before) (Qin et al. 2009). Indeed, for the TREC GOV collection, it was previously claimed that 1,000 documents are sufficient for combining BM25 and PageRank, without loss of effectiveness (Craswell et al. 2005). Craswell et al. (2010) used a sample of 5,000 documents for retrieval from the 500 million documents of the ClueWeb09 collection, but without justification. Chapelle et al. (2011) anecdotally report sample sizes of “tens of thousands of documents”.

- Small Samples (≤ 200 documents): From Table 2, we observe that various learning to rank test collections have mean sample sizes of 120 documents or less (e.g. LETOR v4.0, MSLR), with some as few as 10 documents per query (Yandex), even when sampled from the entire Web. Similarly, Zhang et al. (2009) used a sample of 200 documents ranked by BM25 and PageRank from a Web search engine.

Notably, while the type of information need addressed is not specified for many of the query sets, we expect these to include both informational and navigational needs, perhaps for the same query. Indeed, similar to recent TREC Web track test collections (Clarke et al. 2010, 2011), there is a trend towards using multiple relevance label grades to address different possible information needs for the same query. For example, for the query ‘University of Glasgow’, the University’s home page would be labelled 4 (‘perfect’), representing the best answer for the navigational information need of a typical user, while the Wikipedia entry about the University would be labelled 3 (‘excellent’). Documents not discussing the University would be judged irrelevant (labelled 0). The high mean numbers of relevant documents for query sets such as MSLR, Yandex and Yahoo! LTR Challenge in Table 2 indicate that labelling with multiple relevance grades is used for these datasets. However, we observe no relationship between the type of information need and the size of the sample used within the existing learning to rank test collections.

Overall, there is clearly a lack of evidence on the appropriate size of the document sample for effective learning. Hence, in this work, as the first of three research themes, we formulate and validate several hypotheses relating to the sample, allowing us to empirically determine the properties of an effective sample. Firstly, we expect that, in general, sample size does have an impact on effectiveness:

Hypothesis 1

The observed effectiveness of learned models can be affected by different sample sizes.

Next, depending on the presence of anchor text within the document representation used for the sample, the few relevant documents for navigational information needs may be easier to find than the larger number of relevant documents for informational information needs. In particular, the choice of document representation to use when sampling will likely impact on the documents identified in the sample. Moreover, the most suitable document representation for sampling may vary across different types of information needs. For instance, if the document representation used by the weighting model for obtaining the sample does consider anchor text, then the navigational pages with quality anchor text in their incoming hyperlinks are more likely to be retrieved in the sample (Hawking et al. 2004; Plachouras and Ounis 2004). However, we postulate that using anchor text may reduce the number of relevant documents identified for more informational queries. Hence, we hypothesise that:

Hypothesis 2

The observed effectiveness of learned models can be affected by the type of information need observed in the queries and by the document representation used for generating the samples, regardless of the size of these samples.

Moreover, the choice of learning to rank technique may also have an impact on the effective choice of the sample size. For instance, pairwise learning to rank techniques aim to reduce the number of incorrectly ranked pairs of documents. However, as larger samples exhibit less balance between relevant and irrelevant documents, there is a larger number of document pairs for which no preference relation exists, which may degrade the effectiveness of these learning to rank techniques. Hence, we hypothesise that:
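The growth of pairs without a preference relation can be checked by simple counting: among n sampled documents there are n(n-1)/2 pairs, of which those with equal labels carry no preference. The helper `pair_counts` and the labels below are invented for illustration: three relevant documents near the top of a 100-document sample.

```python
from collections import Counter
from typing import List, Tuple


def pair_counts(labels: List[int]) -> Tuple[int, int]:
    """Return (all document pairs, pairs with a preference, i.e. different labels)."""
    n = len(labels)
    total = n * (n - 1) // 2
    tied = sum(c * (c - 1) // 2 for c in Counter(labels).values())  # same-label pairs
    return total, total - tied


labels = [1 if i in (0, 2, 5) else 0 for i in range(100)]
for k in (10, 100):
    total, preference = pair_counts(labels[:k])
    print(k, total, preference)
# 10 45 21
# 100 4950 291
```

With 10 documents, 21 of the 45 pairs (about 47%) carry a preference; with 100 documents, only 291 of 4,950 (about 6%) do. The same counting applies unchanged to graded labels.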

Hypothesis 3

The observed effectiveness of learned models depends on the deployed learning to rank technique and the sample size.

Finally, as discussed above in Sect. 2.1.1, minimising the size of the sample without significant degradations compared to an effective sample size has marked efficiency benefits. In particular, when applying the learned model, minimising the number of sample documents to be identified during matching reduces the response time, while also reducing the time required to calculate features for all documents in the sample. Moreover, smaller sample sizes reduce the learning time for most learning to rank techniques (see Fig. 2). For these reasons, it is desirable to identify the smallest sample size that obtains effective retrieval performance, which we address in the following research question:

Research Question 1

What are the aspects that define the smallest sample size for an effective learned model?

2.2 Learning evaluation measure

Besides investigating the properties of an effective sample, we are also interested in the effective configuration of learning to rank techniques. Indeed, for listwise learning to rank techniques, the evaluation measure deployed during learning can impact on the effectiveness of the learned models (Xu and Li 2007). In the following, we review and discuss the use (Sect. 2.2.1), properties (Sect. 2.2.2) and choice of evaluation measures during learning (Sect. 2.2.3). This discussion is used to motivate hypotheses concerning our second and third research themes, which address the role of the learning evaluation measure, and the effect of the interaction between the learning evaluation measure cutoff and the sample size.

2.2.1 Need for evaluation measures in learning

In the process of learning a model, a learning to rank technique will attempt many different combinations of features, and evaluate them as per the defined loss function. Listwise learning to rank techniques, which directly deploy IR evaluation measures as their loss function [e.g. AFS (Metzler 2007) and AdaRank (Xu and Li 2007)] are reported to be particularly effective (Liu 2009).

Many evaluation measures have been defined in the literature, with different properties. However, their role within learning has not been the subject of an in-depth investigation. Indeed, in a keynote presentation, Croft (2008) speculated that different optimisations might be needed for different measures. In the following, we discuss the attributes of evaluation measures, and their impact when a measure is used for learning.

2.2.2 Properties of evaluation measures

In general, IR evaluation measures, such as Mean Average Precision (MAP) (Buckley and Voorhees 2000) and normalised Discounted Cumulative Gain (NDCG) (Järvelin and Kekäläinen 2002), are not continuous. This is because each measure may or may not ‘react’ to a swap in the positions of two documents, depending on the relevance of the two documents and their respective positions in the ranked list. Moreover, measures are computed to a pre-determined cutoff rank, after which they do not consider the retrieved documents. For instance, precision only considers the number of relevant documents occurring before the cutoff, but not the order of the documents before the cutoff, nor the order after.

The responsiveness of a measure to swaps in the ranking is known as its informativeness (Robertson 2008). For example, consider a ranking of four documents for a query, of which one is highly relevant, two relevant, and one irrelevant. Of the 4! = 24 possible rankings of these four documents, precision can only give one possible value (0.75), and mean reciprocal rank (MRR) can give two (1 or 0.5). In contrast, MAP and NDCG are more informative measures, as they discriminate between the effectiveness of more of the 24 possible rankings, producing four and nine different values, respectively.
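The counts above can be checked by brute force. The Python sketch below enumerates all 24 orderings of the example (grades 2, 1, 1 and 0) and counts the distinct values that each measure produces, treating any non-zero grade as relevant for precision, reciprocal rank and average precision. The NDCG variant (gain 2^g - 1, discount 1/log2(rank + 1)) is an assumption: the number of distinct NDCG values depends on which gain and discount formulation is chosen, so the count printed here need not coincide with the figure quoted above.

```python
from itertools import permutations
from math import log2

# Relevance grades of the four documents in the example:
# one highly relevant (2), two relevant (1) and one irrelevant (0).
GRADES = (2, 1, 1, 0)


def precision(ranking):
    return sum(g > 0 for g in ranking) / len(ranking)


def reciprocal_rank(ranking):
    return next((1 / (i + 1) for i, g in enumerate(ranking) if g > 0), 0.0)


def average_precision(ranking):
    num_rel = sum(g > 0 for g in ranking)
    hits, total = 0, 0.0
    for i, g in enumerate(ranking):
        if g > 0:
            hits += 1
            total += hits / (i + 1)
    return total / num_rel if num_rel else 0.0


def ndcg(ranking):
    # Assumed variant: gain 2^g - 1, discount 1 / log2(rank + 1).
    def dcg(r):
        return sum((2 ** g - 1) / log2(i + 2) for i, g in enumerate(r))
    return dcg(ranking) / dcg(sorted(ranking, reverse=True))


def distinct_values(measure):
    # Round to suppress floating-point noise before counting distinct scores.
    return {round(measure(p), 10) for p in permutations(GRADES)}


for name, m in [("P@4", precision), ("RR", reciprocal_rank),
                ("AP", average_precision), ("NDCG@4", ndcg)]:
    print(name, len(distinct_values(m)))
```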

2.2.3 Evaluation measures for effective learning

Given a measure on the test queries that we wish to improve, it is not necessarily the case that we should also aim to maximise this measure during the learning process (He et al. 2008; Robertson 2008). Indeed, the informativeness of the learning measure will have a direct impact on the learned model, and hence a measure used for testing may not be sufficiently informative to guide the learning process. For instance, as precision at k does not react to many swaps in the document ranking during learning, it may not be able to differentiate between two possible models whose performance characteristics are actually quite different (e.g. consider two models that place a highly relevant document at rank 1 and at rank k, respectively). In contrast, when graded relevance assessments are available, NDCG and ERR are more informative measures, as they can differentiate between relevant documents of different relevance grades (e.g. consider the ordering of a pair of documents, one highly relevant, one marginally relevant).

Next, the rank cutoff also affects the usefulness of the measure. For instance, by only considering documents to rank k, a measure cannot differentiate between ranking models that place a relevant document at rank k + 1 or rank k + 100, even if the former is more likely to be an effective model on unseen data (Robertson 2008). However, for a given sample of documents, using larger cutoffs during learning also degrades the efficiency of the learning to rank technique. For example, in Fig. 2, it takes AFS using NDCG@1,000 approximately three times as long to learn a model as AFS using NDCG@10 (e.g. 6,097 vs. 2,097 s for 5,000 document samples). Moreover, as most IR evaluation measures are ‘top-heavy’, they react less to changes deeper in the ranking, meaning that we expect diminishing returns in the effectiveness of the resulting model as the evaluation cutoff is increased.
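The insensitivity of a cutoff measure below rank k can be illustrated with a small Python sketch. It assumes a common NDCG@k formulation (gain 2^g - 1, discount 1/log2(rank + 1)) and hypothetical rankings that each contain a single relevant document: with k = 10, a model placing that document at rank 11 scores the same as one placing it at rank 110, whereas a cutoff of 1,000 separates the two.

```python
from math import log2


def ndcg_at_k(grades, k):
    """NDCG@k with gain 2^g - 1 and discount 1/log2(rank + 1) (assumed variant)."""
    def dcg(gs):
        return sum((2 ** g - 1) / log2(i + 2) for i, g in enumerate(gs[:k]))
    ideal = dcg(sorted(grades, reverse=True))
    return dcg(grades) / ideal if ideal > 0 else 0.0


def ranking_with_relevant_at(rank, length=200):
    """A hypothetical ranking with one relevant document (grade 1) at `rank`."""
    grades = [0] * length
    grades[rank - 1] = 1
    return grades


model_a = ranking_with_relevant_at(11)    # relevant document at rank k + 1
model_b = ranking_with_relevant_at(110)   # relevant document at rank k + 100

print(ndcg_at_k(model_a, 10), ndcg_at_k(model_b, 10))      # 0.0 0.0
print(ndcg_at_k(model_a, 1000), ndcg_at_k(model_b, 1000))  # model_a scores higher
```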

The impact of the choice of measure and cutoff in listwise learning to rank techniques has not been the subject of much literature. An exception is the work of He et al. (2008), who compared the sensitivity of different measures for training under different levels of incompleteness. However, the training was conducted in the setting of hyper-parameters of retrieval models (e.g. the b hyper-parameter of BM25), rather than the now more typical learning to rank environment. Robertson (2008) speculated on the effect of the informativeness of the training measure, without however providing empirical evidence. Donmez et al. (2009) found that given enough training queries, for a given test measure, the same measure should be the most suitable for learning. Later, Yilmaz and Robertson (2010) challenged this finding, noting that sufficient queries are not always available. Our work further aids the understanding of this area, by investigating the choice of the learning evaluation measure within a learning to rank setting, across multiple search tasks, and with even sparser amounts of training queries.

As our second research theme, we formulate several hypotheses relating to the choice of the learning evaluation measure within a listwise learning to rank technique [step (3)]. Firstly, based on the different informativeness of various evaluation measures, we hypothesise the following:

Hypothesis 4

The observed effectiveness of the learned model obtained from a listwise learning to rank technique can be affected by the choice of the learning evaluation measure.

For a large sample, increasing the rank cutoff of the learning evaluation measure increases the informativeness of the measure by allowing it to potentially respond to more swaps between relevant and irrelevant documents. It follows that by using a measure with improved informativeness, a learning to rank technique may identify a yet unseen feature combination that works well for some pairs of documents in the sample, which is not observed for the smaller rank cutoff. This may later improve the effectiveness for an unseen query, where the relevant documents are pushed to the top ranks due to the additional feature combination. Hence, we hypothesise that:

Hypothesis 5

The observed effectiveness of the learned model obtained from a listwise learning to rank technique can be affected by the choice of the rank cutoff of the learning evaluation measure.

Lastly, as our third and final research theme, we combine the two investigations within this work concerning the sample size [steps (1) and (4)] and the rank cutoff of the selected learning evaluation measure [step (3)], to investigate the dependence between the two. Consider the evaluation of 4 documents discussed above. If the sample is also of 4 documents, then there are only 4! = 24 possible rankings that can be produced. However, if the sample size is larger, say 5, then there are 5! = 120 possible rankings, even though the evaluation measure will only consider the ordering of the top-ranked 4 documents. This larger space of possible re-rankings should increase the chances that an effective learned model can be identified. For this reason, our last hypothesis is the following:
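Since the measure only inspects the top k positions, the relevant quantity is the number of distinct top-k orderings that a re-ranker can produce from a sample of n documents, namely n!/(n - k)!. A minimal Python sketch (the helper name is ours) reproduces the numbers in the example above; a cutoff larger than the sample is clipped to the sample size.

```python
from math import perm


def distinct_top_k_orderings(sample_size: int, cutoff: int) -> int:
    """Orderings of the top `cutoff` positions obtainable by re-ranking a sample
    of `sample_size` documents; a cutoff beyond the sample size is clipped."""
    return perm(sample_size, min(cutoff, sample_size))


print(distinct_top_k_orderings(4, 4))   # 24, i.e. 4!
print(distinct_top_k_orderings(5, 4))   # 120, as many as the 5! full rankings
print(distinct_top_k_orderings(10, 4))  # 5040
```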

Hypothesis 6

The observed effectiveness of the learned model obtained from a listwise learning to rank technique can be affected by both the choice of the rank cutoff of the learning evaluation measure and the size of the samples.

In the following, we define the experimental methodology (Sect. 3), as well as the experimental setup (Sect. 4) permitting the investigation of our three research themes. Recall that these three research themes address the role of the sample, the role of the learning evaluation measure, and how the sample size and the learning evaluation measure interact, respectively. Experimental results are reported and discussed in Sect. 5.

3 Methodology

In this section, we define the methodology that permits the identification of best practices for the three research themes mentioned above. As discussed in Sect. 2.1.2, we assume that the learned model will be deployed on a single retrieval system, with the sample generated by using a standard weighting model. For learning, documents in the sample are labelled using high quality relevance assessments (e.g. from TREC) that are already available.

We investigate the research questions and hypotheses of our three research themes across different scenarios, including different types of information needs, learning to rank techniques, and corpora. To investigate each of our research questions, we perform empirical experiments using multiple query sets on several learning to rank test collections. In particular, query sets are grouped into training, validation and testing sets with no overlap of queries. For instance, for a given query set (HP04, NP04 etc.), LETOR v3.0 GOV prescribes five folds, each with separate training, validation and testing query sets. On a given testing query set, the test evaluation measure and its rank cutoff are pre-defined—to use the experimental design terminology, this is the dependent variable. Then, on the corresponding training query set, the “factor of interest” (independent variable) for a particular learning to rank technique is varied (namely sample size, or evaluation measure and cutoff). For each setting of a learning to rank technique (e.g. sample size, learning evaluation measure and cutoff), a model is learned. The effectiveness of this learned model is then evaluated on the test query set using the test evaluation measure. By comparing across different learning settings, the impact of the factor being varied can be assessed.

Within this framework, the columns of Table 3 detail the experimental design for each of our three research themes on a given pair of training/testing query sets. In particular, to investigate the role of the sample size, the original samples for the train and test query sets are both cropped to various different sizes, while maintaining their ordering of documents. Moreover, as discussed in Sect. 2.1.2, we ensure that a learned model is not deployed on a sample that has a different size from that with which it was learned. Using this methodology, we can vary the sample size as the primary factor in the experiment, thereby simulating the smaller samples used by other learning to rank test collections. However, the learning evaluation measure and cutoff (only applicable for listwise learning to rank techniques) as well as the test evaluation measure are held fixed. In this way, we can observe the impact of sample size on the effectiveness of the learned model. Moreover, as the conclusion may change for different learning to rank techniques or depending on the document representation used by the sampling strategy (i.e. anchor text or not), we vary these as additional “second” or “other” factors of interest.
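The cropping step can be sketched in Python as follows. As an assumption, a sample is represented as a mapping from query identifiers to ranked lists of document identifiers; cropping keeps the top documents in their original order, and the hypothetical `paired_samples` helper ensures that the training and test samples are always cropped to the same size, so that no model is applied to a sample of a different size from the one it was learned on.

```python
from typing import Dict, Iterator, List, Tuple

Sample = Dict[str, List[str]]  # query id -> ranked list of document ids


def crop_sample(sample: Sample, size: int) -> Sample:
    """Keep the top `size` documents per query, preserving the original order."""
    return {qid: docs[:size] for qid, docs in sample.items()}


def paired_samples(train: Sample, test: Sample,
                   sizes: List[int]) -> Iterator[Tuple[int, Sample, Sample]]:
    """Yield (size, cropped train sample, cropped test sample) for each size,
    so that learning and testing always use samples of the same size."""
    for size in sizes:
        yield size, crop_sample(train, size), crop_sample(test, size)


# Hypothetical toy samples.
train = {"q1": ["d3", "d7", "d1", "d9"], "q2": ["d2", "d5", "d8"]}
test = {"q3": ["d4", "d6", "d0"]}
for size, train_cropped, test_cropped in paired_samples(train, test, [2, 3]):
    print(size, train_cropped, test_cropped)
```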

To investigate our second research theme concerning the role of the learning evaluation measure for listwise learning to rank techniques, following Table 3, we firstly fix the sample size, as well as the evaluation measure for testing. The primary factors in this experiment are the learning evaluation measure and the rank cutoff—we vary both of these concurrently, to allow any dependent effects between the choice of the learning evaluation measure and the rank cutoff to be examined. Furthermore, as a second experimental factor, this experiment is conducted for different listwise techniques, to examine if the conclusions change for different techniques.

Lastly, for our third research theme, we vary both the sample size and the learning measure cutoff within a single experiment, to determine if there is any dependence between these factors. Hence, as shown in Table 3, both the sample size and the learning evaluation measure rank cutoff form the (1st factor) independent variables of interest.

For each set of experiments, to determine whether there is a significant dependence between effectiveness and the first or second factors, we use within-subject ANOVAs (Coolican 1999), which estimate, for each factor, the probability of observing differences at least as large as those measured if the null hypothesis (i.e. that the factor has no effect) were true. In particular, each query is a subject, where the dependent variable (the test evaluation measure) is observed after each variation of the independent variables. Hence, by using ANOVAs, we can assess whether the variance observed across the different settings of the independent variables is unlikely to be due to chance, while controlling the risk of a type I error.
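For a single factor, the within-subject F statistic can be computed directly from a matrix of per-query scores. The Python sketch below is a textbook one-way repeated-measures ANOVA, assuming complete data (every query is evaluated under every setting); it does not cover the two-factor designs of Table 3 or sphericity corrections. If SciPy is available, the corresponding p-value is `scipy.stats.f.sf(F, df_factor, df_error)`.

```python
from typing import List, Tuple


def repeated_measures_anova(scores: List[List[float]]) -> Tuple[float, int, int]:
    """One-way within-subject ANOVA.

    scores[i][j] is the test measure of query (subject) i under level j of the
    varied factor, e.g. the j-th sample size. Returns (F, df_factor, df_error).
    """
    n, k = len(scores), len(scores[0])
    grand = sum(map(sum, scores)) / (n * k)
    level_means = [sum(row[j] for row in scores) / n for j in range(k)]
    query_means = [sum(row) / k for row in scores]

    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_factor = n * sum((m - grand) ** 2 for m in level_means)
    # Between-query variation is removed from the error term: this is what
    # makes the design within-subject rather than between-subject.
    ss_queries = k * sum((m - grand) ** 2 for m in query_means)
    ss_error = ss_total - ss_factor - ss_queries
    if ss_error <= 0:
        raise ValueError("no residual variance: F is undefined")

    df_factor, df_error = k - 1, (n - 1) * (k - 1)
    f_stat = (ss_factor / df_factor) / (ss_error / df_error)
    return f_stat, df_factor, df_error


# Hypothetical scores: 3 queries, each evaluated under 3 settings of a factor.
print(repeated_measures_anova([[1, 3, 2], [2, 4, 6], [3, 5, 1]]))  # (1.0, 2, 4)
```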

4 Experimental setup

Our experiments across the three research themes are conducted using the methodology defined in Sect. 3, and are reported in Sect. 5. In this section, we describe the setting of these experiments, structured in three subsections: Sect. 4.1 describes the learning to rank test collections used in our experiments, Sect. 4.2 provides an overview of the used learning to rank techniques, and Sect. 4.3 describes how we vary the experimental setup for the various factors identified in Sect. 3.

4.1 Learning to rank test collections

As detailed in Sect. 3, we experiment with document samples of various sizes. To this end, the learning to rank test collections that we apply must have sufficiently large original samples to allow cropping into smaller samples.

Of all learning to rank test collections in Table 2, we consider that only the LETOR v3.0 GOV query sets have sufficiently large samples [∼1,000 documents per query (Qin et al. 2009)] to allow smaller sample sizes to be investigated. Each of the six query sets is split into five folds for the purposes of cross validation (train, validation and test), and has binary relevance assessments as well as a common set of 64 features. We select three query sets from TREC 2004, covering different types of information needs with the same number of queries: HP04 (home page) and NP04 (named page) both represent navigational information needs with single relevant documents, while TD04 (topic distillation) is an informational query set.

However, as discussed in Sect. 2.1.4, the LETOR v3.0 GOV query sets do not exhibit the largest sample sizes seen in the literature [5,000 documents per query sampled from the ClueWeb09 collection (Craswell et al. 2010)]. To investigate whether such large samples are necessary, and to strengthen our conclusions, we create a new, larger and more modern learning to rank test collection. In particular, the TREC 2009 Million query track has various queries sampled from the logs of a Web search engine, while the TREC 2009 & 2010 Web tracks addressed queries that are faceted or ambiguous in nature (which can mix informational and navigational interpretations for each query), also sampled from the logs of a Web search engine. We select three such query sets from these recent TREC tracks, all of which include graded relevance assessments:

-

MQ09: 70 queries from the TREC 2009 Million query track (Carterette et al. 2010).

-

WT09: 50 queries from the TREC 2009 Web track (Clarke et al. 2010).

-

WT10: 48 queries from the TREC 2010 Web track (Clarke et al. 2011).

Lastly, we use a query log to create a purely navigational query set, so that we can directly compare and contrast results with the purely navigational HP04 and NP04 query sets from LETOR v3.0:

-

NAV06: 150 ‘head’ queries with the highest clickthrough into ClueWeb09 documents as suggested by Macdonald and Ounis (2009), obtained from the MSN 2006 query log (Craswell et al. 2009).

The underlying document corpus for the MQ09, WT09, WT10 and NAV06 query sets is the TREC ‘category B’ ClueWeb09 Web crawl (CW09B). This corpus comprises 50 million English documents, aimed at representing the first tier of a commercial search engine index. We index these documents using the Terrier retrieval system (Ounis et al. 2006), including anchor text from incoming hyperlinks. For each query, we use a light version of Porter’s English stemmer and the DPH (Amati et al. 2008) Divergence from Randomness weighting model to extract large original document samples for each query set. As we are not aware of the particular parameter settings used for BM25 when sampling in the LETOR v3.0 query sets, using DPH permits comparable sample effectiveness to BM25, without the need to train any parameter, as DPH is parameter free (Amati et al. 2008).

To cover a wide range of sample sizes, we aim to sample as many documents as possible from CW09B for each query. However, as noted in Sect. 2.1.1, the learning time of various learning to rank techniques increases with sample size. To keep our experiments feasible, we investigate the number of relevant documents identified for the query sets when the number of retrieved documents is unconstrained. Figure 3 reports the precision@k, for k up to the size of the corpus (50 million documents). We note that after rank k = 5,000, precision falls below 0.01, meaning that, on average, for every additional 100 documents retrieved, at most 1 more relevant document will be retrieved. Moreover, from Fig. 2, running AFS for the WT09 query set using 5,000 documents can take over 1.5 hours. For these reasons, we deem an original sample of 5,000 documents to be sufficient for these experiments. Indeed, on a detailed inspection of the experiments conducted for Fig. 3, we find that all three query sets have 80–90 % recall at 5,000 documents compared to an exhaustive sampling comprising the union of all documents containing any query term.
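The per-query quantities behind this choice can be computed as in the following Python sketch. The function names and toy data are hypothetical: `relative_recall_at` measures recall against the relevant documents present in an exhaustive sample (all documents containing any query term), and `new_relevant_between` counts the relevant documents gained by extending a sample from one depth to another. Averaging over the queries of a query set gives curves such as those in Fig. 3.

```python
from typing import List, Set


def precision_at(ranking: List[str], relevant: Set[str], k: int) -> float:
    """Fraction of the top k documents that are relevant."""
    return sum(d in relevant for d in ranking[:k]) / k


def new_relevant_between(ranking: List[str], relevant: Set[str], lo: int, hi: int) -> int:
    """Relevant documents gained by growing the sample from depth lo to depth hi."""
    return sum(d in relevant for d in ranking[lo:hi])


def relative_recall_at(ranking: List[str], relevant: Set[str],
                       exhaustive: Set[str], k: int) -> float:
    """Recall at k, relative to the relevant documents found by an exhaustive sample."""
    reachable = relevant & exhaustive
    if not reachable:
        return 0.0
    return len(reachable & set(ranking[:k])) / len(reachable)


# Hypothetical toy query: a ranked sample, its judged-relevant documents, and the
# exhaustive candidate set (every document containing any query term).
ranking = ["d1", "d2", "d3", "d4", "d5"]
relevant = {"d2", "d5", "d9"}
exhaustive = {"d1", "d2", "d3", "d4", "d5", "d9"}
print(precision_at(ranking, relevant, 2))                    # 0.5
print(new_relevant_between(ranking, relevant, 2, 5))         # 1 (d5)
print(relative_recall_at(ranking, relevant, exhaustive, 5))  # two of three; d9 is missed
```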

Next, recall that our experiments consider the role of anchor text in the document representation used to identify the sample and its impact on retrieval effectiveness for different types of information need. We firstly highlight the document representations that we consider. Figure 4 illustrates the fields of an example document, in terms of the title, body, URL and anchor text. In the GOV query sets, the documents in the sample are obtained by computing BM25 on all fields, including anchor text. To facilitate analysing the impact of the document representation used in the sampling, the document samples identified using DPH on the CW09B query sets are created using two different document representations, without and with the presence of anchor text. When sampling with anchor text, the ranking of documents in the sample changes, making it more likely that homepage documents with much anchor text will be highly ranked for a corresponding navigational query, such as the query ‘Bing’ for the example document in Fig. 4.

For the CW09B query sets, we calculate a total of 75 document features for all documents in the samples, covering most of the features used in the learning to rank literature (Qin et al. 2009). These features are summarised in Table 4 and organised into the following five classes:

-

Standard weighting models (WM) computed on each field of each document, namely title, body, anchor-text and URL. We use four weighting models, namely BM25 (Robertson et al. 1992), PL2 (Amati 2003), DPH (Amati et al. 2008) and LM with Dirichlet smoothing (Zhai and Lafferty 2001). We note that the chosen document representation for generating the sample does not have an impact on the availability of the four fields—for instance, we may use a document representation without anchor text for generating the sample, but we can still calculate WM features based on the anchor text.

-

Link analysis-based features (LA) typically identify authoritative documents. We deploy PageRank and incoming and outgoing link counts.

-

Proximity weighting models (WMP) boost documents where the query terms occur in close proximity. For each field, we deploy proximity language models (Metzler and Croft 2005) and DFR models (Peng et al. 2007) based on Markov Random Fields.

-

URL features (URL), e.g. those favouring short URLs, are often useful for identifying homepages (Kraaij et al. 2002).

-

Spam documents are present in the realistic CW09B corpus. We include the fusion spam score of Cormack et al. (2011) as a feature.

All parameters of the document features (e.g. b in BM25, c in PL2, μ in LM with Dirichlet smoothing) remain at their recommended settings, as implemented by the Terrier retrieval platform, namely b = 0.75, c = 1, and μ = 2500. Finally, as different features are measured on different scales, we follow the common practice (Liu 2009) [and in line with LETOR (Qin et al. 2009)] to normalise all features to lie between 0 and 1 for each query.
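The per-query normalisation can be sketched in Python as a min-max rescaling of each feature over the documents sampled for a query. The nested-list layout is our assumption, as is the handling of a feature that is constant for a query (mapped to 0 here).

```python
from typing import Dict, List

Features = Dict[str, List[List[float]]]  # qid -> one feature vector per sampled doc


def normalise_per_query(features: Features) -> Features:
    """Min-max normalise every feature to [0, 1] within each query.

    features[qid][d][f] is the value of feature f for the d-th sampled document
    of query qid. A feature that is constant for a query is mapped to 0.
    """
    normalised = {}
    for qid, rows in features.items():
        columns = list(zip(*rows))
        lows = [min(c) for c in columns]
        highs = [max(c) for c in columns]
        normalised[qid] = [
            [(x - lo) / (hi - lo) if hi > lo else 0.0
             for x, lo, hi in zip(row, lows, highs)]
            for row in rows
        ]
    return normalised


# Hypothetical query with three documents and two features (e.g. a BM25 score
# and a feature that happens to be constant for this query).
print(normalise_per_query({"q1": [[1.0, 5.0], [3.0, 5.0], [2.0, 5.0]]}))
```

Normalising within each query, rather than across the whole collection, keeps features comparable across queries whose raw scores lie on very different scales.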

4.2 Learning to rank techniques

Various learning to rank approaches in the literature fall into one of three categories, namely pointwise, pairwise and listwise (Liu 2009). In this work, we deploy learning to rank techniques representative of each of the three categories, all of which are either freely available as open source or widely implemented:

-

GBRT (Pointwise), also known as Gradient Boosted Regression Trees, produces a set of regression trees that aim to predict the label of documents in the training data (Friedman 2000). The tree learned at each iteration only needs to fit the difference between the target label and the prediction of the previous tree(s). We use the RT-Rank implementation (Weinberger et al. 2010). However, going further than the open source implementation, we choose the number of trees that performs best on the validation data as the final learned model.

-

RankBoost (Pairwise) constructs a linear combination of weak rankers (in our case the various document features), based on a loss function defined as the exponential difference between the labels of pairs of documents (Freund et al. 2003).

-

RankNet (Pairwise) constructs a neural network, based on a loss function encapsulating the cross entropy of pairs of objects being correctly ranked (Burges et al. 2005).

-

LambdaMART (Pairwise/Listwise) also deploys boosted regression trees internally, but the training of the trees considers NDCG to obtain the gradient of the surrogate loss function between pairs of documents (Wu et al. 2008). We use the implementation of the Jforests open source package (Ganjisaffar et al. 2011). A LambdaMART approach was the winning entry in the Yahoo! learning to rank challenge (Chapelle and Chang 2011). The model of the highest performing iteration on the validation data is chosen.

-

AFS (Listwise), also known as Automatic Feature Selection, identifies at each iteration the feature that is most effective when added to the linear combination, together with its combination weight; this feature is then added to the set of features selected in the previous iteration(s) (Metzler 2007). In our implementation, we use simulated annealing (Kirkpatrick et al. 1983) to find the combination weight for each feature that maximises NDCG@1,000. Note that such weights are obtained one by one, without retraining the weights of the features already selected. When validation data is used, the model of the highest performing iteration as measured using the same evaluation measure on the validation data is chosen [in this manner, the validation data is used to determine the correct number of AFS iterations, as suggested by Liu (2009)].

-

AdaRank (Listwise) optimises feature weights by applying boosting (Xu and Li 2007). In particular, at each iteration, a distribution of the importance of each query is updated, and the weight of the feature that improves the overall performance of those queries, after weighting by the importance distribution, is added to the model. A feature can be selected multiple times and its weight consequently updated. As suggested by Liu (2009), we use validation data to set the number of iterations, so as to prevent the overfitting of models on the training set.
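The AdaRank loop described above can be sketched in Python as follows. It follows the update rules of Xu and Li (2007) under several assumptions of ours: single features act as weak rankers, reciprocal rank stands in for the learning evaluation measure E (any measure in [0, 1], such as NDCG@k, could be passed instead), the number of rounds is fixed rather than chosen on validation data, and a small `eps` is added to avoid division by zero. It is an illustration, not the implementation used in these experiments.

```python
from math import exp, log
from typing import Callable, Dict, List, Sequence, Tuple

# One query's sample: (feature vectors of its documents, binary relevance labels).
Query = Tuple[List[List[float]], List[int]]
Measure = Callable[[Sequence[float], Sequence[int]], float]


def reciprocal_rank(scores: Sequence[float], labels: Sequence[int]) -> float:
    """E(q) in [0, 1]: reciprocal rank of the first relevant document.
    Ties in score keep the original sample order (Python's sort is stable)."""
    order = sorted(range(len(scores)), key=lambda d: -scores[d])
    for rank, d in enumerate(order, start=1):
        if labels[d] > 0:
            return 1.0 / rank
    return 0.0


def adarank(queries: List[Query], rounds: int, measure: Measure = reciprocal_rank,
            eps: float = 1e-10) -> Dict[int, float]:
    """AdaRank-style boosting with single features as weak rankers.
    Returns {feature index: accumulated weight} of a linear model."""
    m = len(queries)
    num_features = len(queries[0][0][0])
    importance = [1.0 / m] * m  # P_1(q): uniform over training queries
    model: Dict[int, float] = {}

    def scores(feats: List[List[float]], weights: Dict[int, float]) -> List[float]:
        return [sum(w * doc[f] for f, w in weights.items()) for doc in feats]

    def feature_perf(f: int) -> List[float]:
        # E(q) for every query when ranking by feature f alone.
        return [measure([doc[f] for doc in feats], labels) for feats, labels in queries]

    for _ in range(rounds):
        # Weak ranker: the feature with the best importance-weighted measure.
        best = max(range(num_features),
                   key=lambda f: sum(p * e for p, e in zip(importance, feature_perf(f))))
        perf = feature_perf(best)
        num = sum(p * (1 + e) for p, e in zip(importance, perf))
        den = sum(p * (1 - e) for p, e in zip(importance, perf))
        # eps (our addition) avoids log(x / 0) when the feature is perfect everywhere.
        alpha = 0.5 * log((num + eps) / (den + eps))
        # A feature may be selected repeatedly; its weight then accumulates.
        model[best] = model.get(best, 0.0) + alpha
        # Queries on which the combined model performs poorly gain importance.
        current = [measure(scores(feats, model), labels) for feats, labels in queries]
        z = sum(exp(-e) for e in current)
        importance = [exp(-e) / z for e in current]
    return model


# Hypothetical training data: two documents per query, two features.
training = [
    ([[0.9, 0.1], [0.1, 0.9]], [1, 0]),  # feature 0 ranks the relevant doc first
    ([[0.8, 0.2], [0.3, 0.7]], [1, 0]),  # feature 0 ranks the relevant doc first
    ([[0.2, 0.6], [0.4, 0.3]], [1, 0]),  # feature 1 ranks the relevant doc first
]
print(adarank(training, rounds=3))
```

With this toy data, feature 0 is selected in every round: after the first round the third query gains importance, but not enough for feature 1 to overtake feature 0.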

4.3 Experimental factors

In order to address the hypotheses and research questions identified in Sects. 2.1.4 and 2.2.3, we analyse the outcome of various learning to rank settings on a corresponding test query set. Table 5 details how the query sets are used for training, validation and testing for the learning to rank test collections based on the GOV and CW09B corpora. In particular, for the GOV query sets, we use the five folds prescribed in LETOR v3.0, including the splits of the topics into separate training, validation and testing sets for each fold; we report the mean over all of the test topics from all folds. For the CW09B corpus, we retain a setting as close to a TREC deployment as possible. In particular, for WT10, we use the query set from the preceding TREC year (i.e. WT09) for training (60 %) and validation (40 %). As no training queries were available for WT09, we use queries from the TREC 2009 Million Query track (MQ09) for training (60 %) and validation (40 %). Similarly, we split the 150 NAV06 queries into three equal sets, to form a single fold with separate training, validation and testing query subsets.
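The text does not state how individual queries are assigned to each subset; the Python sketch below assumes a seeded random shuffle followed by a split into disjoint subsets of the stated proportions (60/40 for training and validation, or three equal thirds for NAV06). The query identifiers are hypothetical.

```python
import random
from typing import List, Sequence


def split_queries(queries: Sequence[str], fractions: Sequence[float],
                  seed: int = 0) -> List[List[str]]:
    """Split query ids into disjoint subsets with the given fractions."""
    if abs(sum(fractions) - 1.0) > 1e-9:
        raise ValueError("fractions must sum to 1")
    shuffled = list(queries)
    random.Random(seed).shuffle(shuffled)  # seeded shuffle: an assumption
    parts, start = [], 0
    for i, frac in enumerate(fractions):
        # The last subset takes whatever remains, so no query is lost to rounding.
        end = len(shuffled) if i == len(fractions) - 1 else start + round(frac * len(shuffled))
        parts.append(shuffled[start:end])
        start = end
    return parts


wt09 = [f"wt09-{i}" for i in range(1, 51)]     # hypothetical query ids
nav06 = [f"nav06-{i}" for i in range(1, 151)]
train, valid = split_queries(wt09, [0.6, 0.4])                          # 30 / 20
nav_train, nav_valid, nav_test = split_queries(nav06, [1/3, 1/3, 1/3])  # 50 each
print(len(train), len(valid), len(nav_train), len(nav_valid), len(nav_test))
```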

Table 5 also records the test evaluation measure used to test the effectiveness of the learned models on each query set. Indeed, for the GOV query sets, the measure used for each query set matches the official measure used by the corresponding TREC Web track (Craswell and Hawking 2004). For the CW09B query sets, NDCG@20 was used for the evaluation measure in TREC 2009 (Clarke et al. 2010), while for TREC 2010, ERR@20 was used (Clarke et al. 2011). In the following experiments, we use both measures, and for completeness, we additionally use MAP for both WT09 and WT10 query sets. For the NAV06 navigational query set, we report mean reciprocal rank (MRR).

Aside from the deployed learning to rank technique, there are four factors in our experiments that we defined in Sect. 3, namely: the size of the sample; the document representation used to generate the sample; the learning evaluation measure used within the loss function of the AFS and AdaRank listwise learning to rank techniques; and the rank cutoff of the learning evaluation measure. Indeed, as per the methodology prescribed in Sect. 3, we differentiate between the test evaluation measure, which remains fixed, and the learning evaluation measure used by a listwise learning to rank technique, which we vary. The settings for each of the factors in our experiments are as follows:

-

Sample Document Representation—For GOV, BM25 with anchor text, as provided by LETOR v3.0. For CW09B, DPH with or without anchor text, as illustrated in Fig. 4.

-

Sample Size—We experiment with different sample sizes, up to the maximum permitted by the original samples of size 1,000 for GOV and 5,000 for CW09B:

$$ \begin{aligned} \hbox{GOV} &= \{10,20,50,100,200,300,400,500,600,700,800,900,1{,}000\};\\ \hbox{CW09B} &= \{10,20,50,100,500,1{,}000,1{,}500,2{,}000,3{,}000,4{,}000,5{,}000\}. \end{aligned} $$

-

Learning Evaluation Measures—We experiment with a selection of standard measures for use within the loss functions of the listwise learning to rank techniques, which may be different from the test evaluation measure used to assess the retrieval performance of the resulting learned model: Precision (P), Mean Average Precision (MAP), Mean Reciprocal Rank (MRR), normalised Discounted Cumulative Gain (NDCG) (Järvelin and Kekäläinen 2002) and Expected Reciprocal Rank (ERR) (Chapelle et al. 2009).

-

Learning Evaluation Measure Cutoffs—We also vary the rank cutoff of the learning evaluation measures. The cutoff values used for both GOV and CW09B are the same values used for the sample size, as follows:

$$ \begin{aligned} \hbox{GOV} &= \{10,20,50,100,200,300,400,500,600,700,800,900,1{,}000\};\\ \hbox{CW09B} &= \{10,20,50,100,500,1{,}000,1{,}500,2{,}000,3{,}000,4{,}000,5{,}000\}. \end{aligned} $$

Our chosen sample sizes (and cutoffs) cover small and large sizes observed in existing learning to rank test collections and in the literature. For the learning evaluation measures, we include standard measures such as precision and MAP, as well as measures that consider graded relevance judgements (NDCG and ERR). Moreover, due to the interaction of the sample size with the learning evaluation measure cutoff, the learning settings that would produce identical results are omitted. For example, if the sample size is 500, MAP to rank 1,000 (i.e. MAP@1,000) is identical to MAP@500. All experimental factors, along with the deployed learners, are summarised for both GOV and CW09B in Table 6.
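The pruning of redundant settings can be sketched in Python by enumerating (sample size, learning measure, cutoff) triples and dropping any cutoff that exceeds the sample size, following the MAP example above. This counts the full grid implied by the factor settings; the individual experiments of Sect. 5 each vary only a subset of these factors at a time, so the counts are illustrative rather than the number of runs actually reported.

```python
from typing import Dict, List, Tuple

SAMPLE_SIZES: Dict[str, List[int]] = {
    "GOV": [10, 20, 50, 100, 200, 300, 400, 500, 600, 700, 800, 900, 1000],
    "CW09B": [10, 20, 50, 100, 500, 1000, 1500, 2000, 3000, 4000, 5000],
}
MEASURES = ["P", "MAP", "MRR", "NDCG", "ERR"]


def learning_settings(corpus: str) -> List[Tuple[int, str, int]]:
    """(sample size, learning measure, cutoff) triples, omitting any cutoff that
    exceeds the sample size (e.g. MAP@1000 on a 500-document sample equals MAP@500)."""
    sizes = SAMPLE_SIZES[corpus]
    return [(size, measure, cutoff)
            for size in sizes
            for measure in MEASURES
            for cutoff in sizes if cutoff <= size]


print(len(learning_settings("GOV")), len(learning_settings("CW09B")))  # 455 330
```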

5 Results

In the following, we experiment to address each of our three research themes: Sect. 5.1 addresses the theme concerning the properties of the sample; Sect. 5.2 addresses the learning evaluation measure and cutoff research theme; Sect. 5.3 analyses the final research theme concerning the dependence between the sample size and the learning evaluation measure cutoff, as well as the features selected in the obtained learned models.

5.1 Sample size

Figures 5, 6 and 7 show the impact of different sample sizes on the test performance, for the GOV (Fig. 5), as well as the CW09B query sets, without and with the use of anchor text when generating the sample (Figs. 6 & 7, respectively). In each figure, the test performance of a learning to rank technique is represented by a line. For the listwise techniques—based on insights that we will discuss in Sect. 5.2—in this section, we fix the learning measure and rank cutoff to NDCG@10.

We firstly make some general observations from Figs. 5, 6 and 7. With respect to sample size, a common trend can be observed across all figures: retrieval performance generally increases as the sample size increases, but stabilises after a sufficiently large sample. However, the size at which effectiveness stabilises can vary widely: for some query sets, all but the smallest sample sizes appear to be effective; indeed, for the HP04 query set (Fig. 5a), even a sample of 10 documents appears to be very effective. Yet for other query sets, effectiveness continues to rise until much larger samples (e.g. 1,000 in Fig. 6d). At the extreme end, we note that for the NAV06 query set without anchor text (Fig. 6g), a marked rise in effectiveness occurs when sample size reaches 5,000. Moreover, across all query sets, the trends exhibited by the RankNet learning to rank technique represent outliers, which we discuss further below.

In the following, we expand upon this analysis, by examining each of the query sets and learning to rank techniques in turn (Sects. 5.1.1 and 5.1.2), before concluding on each of our defined hypotheses in Sect. 5.1.3.

5.1.1 Query set analysis

Taking each query set in turn, we make observations about the relationship between sample size and effectiveness across these different query sets. Firstly, for the navigational query sets HP04 and NP04 (Figs. 5a, b), we note that reasonable performance is obtained at small sample sizes, suggesting that most of the relevant documents that can be ranked highly by the learned models are already present within a sample of that size. In particular, the LambdaMART learning to rank technique achieves its highest HP04 MRR at sample size 10, while the other techniques are all competitive from size 50. On the NP04 query set, sample sizes of 50 or larger are most effective. In contrast, for the topic distillation TD04 query set (Fig. 5c), samples of 400–600 documents are required before the most effective models are obtained. This need for a larger sample is expected, as topic distillation queries represent information needs that are more informational in nature; it also suggests that relevant documents may appear at lower ranks in the samples for this query set.

The results for the ClueWeb09 query sets (WT09, WT10 and NAV06) are reported twice: in Fig. 6, documents are sampled using a document representation that does not consider anchor text; in Fig. 7, the sampling document representation includes anchor text in addition to the title, URL and body fields. Moreover, the results for the WT09 and WT10 query sets are reported for each of the three test evaluation measures, namely ERR@20, NDCG@20 and MAP. Indeed, while the official evaluation measure changed from NDCG@20 to ERR@20 between the TREC 2009 and TREC 2010 Web tracks (see Table 5), we find that the choice of test measure affects the conclusions obtained; hence we provide figures for both, while adding the classical MAP measure permits observations about how sample size impacts effectiveness at deeper ranks.

Overall, we note that smaller samples result in lower effectiveness for the ClueWeb09 query sets. However, the degradation observed depends on the test evaluation measure being used. Indeed, for ERR@20, an effective model is obtainable with a sample size of 20–50 documents. However, for NDCG@20, maximal effectiveness is generally not achieved for samples smaller than 100 (WT09) or 1,000 (WT10). For MAP, 1,000–3,000 documents are necessary for maximal effectiveness. These results show that the choice of test measure is important when deciding on the sample size. Indeed, the ERR measure discounts the contributions of documents that appear after highly relevant documents, hence relevant documents retrieved further down the ranking matter less for ERR than, for example, for NDCG. This explains why smaller sample sizes are more effective for ERR than for NDCG. In contrast, MAP needs the largest samples, which is explained by its recall component.
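The different sensitivity of ERR and NDCG to deep relevant documents can be checked numerically. The sketch below uses the standard cascade definition of ERR (stopping probability $(2^g - 1)/2^{g_{\max}}$) and NDCG with exponential gain and a $\log_2(r+1)$ discount. The toy query (one highly relevant and one relevant document, maximum grade 2) is an assumption for illustration, not one of the TREC queries.

```python
import math


def err_at_k(grades, k, max_grade):
    """Expected Reciprocal Rank: the user stops at rank r with probability
    R_r = (2^g - 1) / 2^max_grade, having not stopped at earlier ranks."""
    err, p_continue = 0.0, 1.0
    for r, g in enumerate(grades[:k], start=1):
        stop = (2 ** g - 1) / 2 ** max_grade
        err += p_continue * stop / r
        p_continue *= 1 - stop
    return err


def ndcg_at_k(grades, k, judged_grades):
    """NDCG with gain 2^g - 1 and discount log2(r + 1); the ideal ranking is
    built from all judged grades for the query."""
    def dcg(gs):
        return sum((2 ** g - 1) / math.log2(r + 1) for r, g in enumerate(gs[:k], start=1))
    ideal = dcg(sorted(judged_grades, reverse=True))
    return dcg(grades) / ideal if ideal > 0 else 0.0


judged = [2, 1]                             # toy query: grades of its relevant documents
top_only = [2] + [0] * 19                   # the grade-1 document is missing from the top 20
deep_hit = [2] + [0] * 13 + [1] + [0] * 5   # the grade-1 document is found at rank 15

err_gain = err_at_k(deep_hit, 20, 2) - err_at_k(top_only, 20, 2)
ndcg_gain = ndcg_at_k(deep_hit, 20, judged) - ndcg_at_k(top_only, 20, judged)
print(f"ERR@20 gain:  {err_gain:.4f}")
print(f"NDCG@20 gain: {ndcg_gain:.4f}")
```

Finding the second relevant document at rank 15 raises NDCG@20 by roughly 0.069 but ERR@20 by only about 0.004, because ERR has already discounted everything after the highly relevant document at rank 1. A sample that misses such deep documents therefore costs less under ERR than under NDCG.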

For the purely navigational NAV06 query set, the trend is markedly different from the WT09 and WT10 sets, in that large improvements are obtained for very large sample sizes. In particular, when anchor text is not used (Fig. 6g), a sample size of 5,000 markedly improves over smaller sample sizes, as many of the relevant documents are not found in these smaller samples. When the anchor text document representation is used for sampling (Fig. 7g), more relevant documents are found in the smaller samples, due to the ability of anchor text to identify homepages (Hawking et al. 2004), resulting in increased effectiveness for smaller samples. However, the trends for NAV06 do not mirror those of the HP04 and NP04 query sets on the GOV corpus, in that a sample size of 50 is still insufficient for fully effective retrieval, even with the inclusion of anchor text in the document representation used for generating the sample.

The need for larger samples on CW09B than on GOV suggests that relevant documents occur at deeper ranks in the CW09B samples, and hence that the sample must be larger to compensate, even for navigational information needs. Indeed, we note that CW09B is approximately 50 times larger than the GOV corpus. It also appears to be a more difficult corpus, i.e. identifying relevant documents is comparatively more challenging. For instance, the presence of spam documents in CW09B, as discussed by Cormack et al. (2011), will likely cause relevant documents to be ranked after spam in the sample. On the other hand, in Fig. 7g, the benefit of extending the sample past 2,000 documents is less pronounced, suggesting that the sample size of 5,000 used by Craswell et al. (2010) is perhaps unnecessary for information needs that are purely navigational in nature.

5.1.2 Learning to rank technique analysis

Taking each learning to rank technique in turn, we can make observations about their suitability for different sample sizes. To aid in this analysis, Table 7 shows the mean and standard deviation of the effectiveness of each learning to rank technique, for each query set and test evaluation measure.

In general, all techniques behave similarly, with the exception of RankNet. Indeed, in Figs. 5, 6 and 7, RankNet generally does not perform as well as the other techniques on the GOV query sets, while for WT09 and WT10 it degrades retrieval performance for all sample sizes larger than 20 when evaluated using the NDCG@20 or ERR@20 measures, and larger than 100 when evaluated using MAP. This suggests that it is unable to resolve the additional pairwise constraints that are introduced as the sample size increases. For the navigational query sets, which have fewer relevant documents, there are fewer pairwise constraints, and hence RankNet exhibits less sensitivity. In contrast to RankNet, RankBoost (also a pairwise technique) performs similarly to the other learning to rank techniques, showing somewhat more robustness to larger sample sizes. Nevertheless, from Figs. 5, 6 and 7, the performances of both RankBoost and RankNet are relatively better for smaller sample sizes on the CW09B query sets than on the GOV query sets. This is likely due to the use of graded relevance assessments, which allow more pairwise preferences to be expressed than binary relevance assessments for the same sample size.
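The growth in pairwise constraints, and the extra preferences offered by graded judgements, can be illustrated by counting the document pairs with different relevance labels in a sample. The sketch assumes that every pair with a strictly higher label on one side yields one training constraint and that tied pairs are ignored; the labels (five relevant documents per query) are hypothetical.

```python
from collections import Counter


def count_preference_pairs(labels):
    """Count document pairs (i, j) in a sample with label[i] > label[j].

    Each such pair is one preference constraint for a pairwise learner;
    pairs with equal labels express no preference and are not counted.
    """
    counts = Counter(labels)
    grades = sorted(counts)
    pairs = 0
    for idx, higher in enumerate(grades):
        for lower in grades[:idx]:
            pairs += counts[higher] * counts[lower]
    return pairs


# Five relevant documents per query; only the sample size changes.
for size in (50, 1000):
    binary = [1] * 5 + [0] * (size - 5)
    graded = [2, 2, 1, 1, 1] + [0] * (size - 5)
    print(size, count_preference_pairs(binary), count_preference_pairs(graded))
```

With binary labels the count is simply (relevant) × (non-relevant), so it rises from 225 pairs at size 50 to 4,975 at size 1,000, almost all of them relevant-versus-non-relevant pairs drawn from ever deeper in the sample. Grading the same five documents adds 6 pairs that distinguish the highly relevant documents from the relevant ones, whatever the sample size.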

GBRT produces a robust retrieval performance, giving reasonable effectiveness at small sample sizes. However, for WT09, its performance is not comparable to that of the other effective techniques for larger sample sizes. On the other hand, the state-of-the-art LambdaMART exhibits effective performance across all sample sizes and query sets.

Lastly, the AFS and AdaRank listwise techniques also produce robust and effective overall performances. On the GOV query sets, AdaRank is generally more effective than AFS: the difference is not marked on HP04 and TD04, but is more pronounced on NP04. However, for WT09, WT10 and NAV06, AdaRank is less effective for very large samples (≥1,000), while AFS is more stable.

To conclude, while RankNet exhibits the worst overall performance in Table 7, LambdaMART is the most effective technique across all sample sizes, followed by AFS and AdaRank. These performances are in line with LambdaMART’s top performance in the Yahoo! Learning to Rank Challenge (Chapelle and Chang 2011), and with the observations concerning the effectiveness of listwise techniques reported by Liu (2009).

5.1.3 Hypotheses analysis

To address each of the hypotheses and research questions defined in Sect. 2.1.4, we deploy ANOVAs. In particular, Table 8 presents the p values of the within-subject ANOVAs computed over all queries within a given query set, while varying the sample size and the learning to rank technique. The suffix a denotes that the sample for a query set was obtained using anchor text. For example, WT09 a MAP represents the ANOVA calculated across all WT09 queries, learning to rank techniques and sample sizes, where the sampling document representation includes anchor text and the queries are evaluated using the MAP test evaluation measure. We exclude RankNet from the ANOVAs for the reasons stated in Sect. 5.1.2: we found that its high variance under large samples introduced sensitivities to sample size that were not present for the other learning to rank techniques.
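The core of a within-subject ANOVA is sketched below in a simplified form: a single within-subject factor (e.g. sample size), with queries as subjects. The ANOVAs in Table 8 also vary the learning to rank technique, so this one-factor version is an illustrative reduction rather than the exact analysis performed; the score matrix is a toy example.

```python
def rm_anova_oneway(scores):
    """One-way within-subject (repeated-measures) ANOVA.

    scores[i][j] is the effectiveness of query i (the subject) under
    condition j (e.g. the j-th sample size). Returns (F, df_effect, df_error).
    """
    n, k = len(scores), len(scores[0])
    grand = sum(map(sum, scores)) / (n * k)
    cond_means = [sum(row[j] for row in scores) / n for j in range(k)]
    subj_means = [sum(row) / k for row in scores]
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    # Removing between-query variability from the error term is what makes
    # the design "within-subject": each query acts as its own control.
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_error = ss_total - ss_cond - ss_subj
    df_cond, df_error = k - 1, (n - 1) * (k - 1)
    f_stat = (ss_cond / df_cond) / (ss_error / df_error)
    return f_stat, df_cond, df_error


# Toy data: 3 queries evaluated under 3 sample sizes.
toy = [[1, 2, 3],
       [2, 3, 5],
       [3, 4, 4]]
print(rm_anova_oneway(toy))
```

For this toy matrix the statistic is F = 9.0 with (2, 4) degrees of freedom. A p value, comparable in kind to those in Table 8, could then be obtained from the upper tail of the F distribution, e.g. with `scipy.stats.f.sf(F, 2, 4)`.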

In Hypothesis 1, we postulated that sample size should affect retrieval performance. On consideration of the Sample Size column of Table 8, we find that sample size significantly impacts the NP04, TD04 and NAV06 query sets, as well as WT09 and WT10 for the MAP test evaluation measure only (a single outlier is WT09 a evaluated by ERR@20, which is explained below). Indeed, MAP requires deeper samples than the other measures. In general, the results in Table 8 confirm the importance of the sample size for effective learning, and mirror our general observations across Figs. 5, 6 and 7. Overall, we conclude that Hypothesis 1 is generally validated.

Hypothesis 2 is concerned with the types of information needs. In particular, we argued that the impact of the sample size will vary for different information needs, while also postulating that the use of anchor text in the sampling document representation could affect the results. From Fig. 5a, we observed that for the navigational HP04 query set, a deep sample is not required for effective results. This is mirrored in Table 8, where we find that sample size has no significant impact on HP04 effectiveness. This contrasts with, for example, the informational TD04 query set, where a significant dependence (p = 8.101e−11) is observed, as would be expected from Fig. 5c. However, for the NAV06 query set, a significant dependence on sample size is observed, regardless of whether anchor text is present in the sampling document representation. Indeed, anchor text markedly improves the effectiveness of smaller samples for navigational queries: while the trend for HP04 in Fig. 5a is nearly flat, NAV06 (Fig. 7g) shows markedly improved effectiveness for sample sizes 100–4,000 when anchor text is used. However, even with anchor text, NAV06 does not exhibit the flat trend observed for HP04, suggesting that large samples are still necessary on the larger ClueWeb09 corpus, as illustrated by the significant p values for NAV06 and NAV06a in the Sample Size column of Table 8 (p = 4.429e−05 and 1.797e−06, respectively). To illustrate the effect of anchor text on the samples, Table 9 reports the recall of samples of sizes 1,000 and 5,000, with and without anchor text. For the entirely navigational NAV06 query set, more relevant documents are ranked in the top 1,000 documents of the sample when using anchor text, explaining the improved effectiveness.
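The recall of a sample, as reported in Table 9, can be computed as the fraction of a query's relevant documents that appear within the top k documents of the sample, averaged over queries. The sketch below assumes this definition and assumes that queries without any relevant documents score 0; the function names and the toy ranking are hypothetical.

```python
def sample_recall(ranked_doc_ids, relevant_ids, k):
    """Fraction of a query's relevant documents found in the top k of the
    sample. Queries without relevant documents score 0 (assumed convention)."""
    relevant = set(relevant_ids)
    if not relevant:
        return 0.0
    return len(relevant & set(ranked_doc_ids[:k])) / len(relevant)


def mean_sample_recall(samples, qrels, k):
    """Average sample recall over queries; `samples` maps a query id to its
    ranked document ids and `qrels` maps a query id to its relevant ids."""
    return sum(sample_recall(samples[q], qrels.get(q, ()), k) for q in samples) / len(samples)


# Toy example: one query with a 6,000-document sample and 4 relevant documents.
ranking = [f"d{i}" for i in range(6000)]
qrels = {"q1": {"d10", "d1500", "d4000", "d7000"}}  # d7000 is never sampled
print(sample_recall(ranking, qrels["q1"], 1000), sample_recall(ranking, qrels["q1"], 5000))
```

Here recall rises from 0.25 at depth 1,000 to 0.75 at depth 5,000, and the document outside the sample caps it below 1 at any depth. This cap is the same limitation that a better sampling representation, such as one including anchor text for navigational queries, can relax.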

On the other hand, for the mixed queries of WT09 and WT10, comparing across Figs. 6 and 7, the general magnitude of the effectiveness values is mostly unchanged by the addition of anchor text. We note a greater variation between the performances of the different learning to rank techniques on WT09 when anchor text is deployed, suggesting that for these queries, anchor text produces a noisier sample, in which some learning to rank techniques struggle to properly rank relevant documents. This is manifested by significant values for WT09 a in Table 8 (e.g. p = 0.03584). This may be due to the contrasting nature of the WT09 query set and its corresponding MQ09 training queries. However, in general, from Table 9, we see that sampling with anchor text reduces the recall for the mixed Web track query sets. The likely cause is that anchor text is often spammed to improperly manipulate Web search results (Castillo et al. 2006), which may explain the adverse effect of the anchor text samples on recall. On the other hand, for the GOV corpus, which only contains documents and links administered by various US government departments, there are fewer adversarial issues relating to anchor text.

In summary, we find that Hypothesis 2 is partially validated: the impact of the sample size can depend on the type of information need, while the presence of anchor text is important for assuring the effectiveness of smaller sample sizes for navigational queries.