Abstract

Identifying groups of variables that may be large simultaneously amounts to finding out which joint tail dependence coefficients of a multivariate distribution are positive. The asymptotic distribution of a vector of nonparametric, rank-based estimators of these coefficients justifies a stopping criterion in an algorithm that searches the collection of all possible groups of variables in a systematic way, from smaller groups to larger ones. The issue that the tolerance level in the stopping criterion should depend on the size of the groups is circumvented by the use of a conditional tail dependence coefficient. Alternatively, such stopping criteria can be based on limit distributions of rank-based estimators of the coefficient of tail dependence, quantifying the speed of decay of joint survival functions. A performance score calculated by ten-fold cross-validation allows the user to select one among the various algorithms and set its tuning parameters in a data-driven way.


References

Agrawal, R., Srikant, R., et al.: Fast algorithms for mining association rules. In: Proceedings of the 20th International Conference on Very Large Data Bases, VLDB, vol. 1215, pp. 487–499 (1994)

Bacro, J.N., Toulemonde, G.: Measuring and modelling multivariate and spatial dependence of extremes. J. Soc. Française Stat. 154(2), 139–155 (2013)

Bücher, A., Dette, H.: Multiplier bootstrap of tail copulas with applications. Bernoulli 19(5A), 1655–1687 (2013)

Chiapino, M., Sabourin, A.: Feature clustering for extreme events analysis, with application to extreme stream-flow data. In: ECML-PKDD 2016, Workshop NFmcp2016 (2016)

Coles, S.G.: Regional modelling of extreme storms via max-stable processes. J. R. Stat. Soc. Ser. B (Methodol.) 55(4), 797–816 (1993)

Coles, S., Heffernan, J., Tawn, J.: Dependence measures for extreme value analyses. Extremes 2(4), 339–365 (1999)

De Haan, L., Zhou, C.: Extreme residual dependence for random vectors and processes. Adv. Appl. Probab. 43(01), 217–242 (2011)

Draisma, G., Drees, H., Ferreira, A., de Haan, L.: Tail dependence in independence. Eurandom preprint (2001)

Draisma, G., Drees, H., Ferreira, A., de Haan, L.: Bivariate tail estimation: dependence in asymptotic independence. Bernoulli 10(2), 251–280 (2004)

Drees, H.: A general class of estimators of the extreme value index. J. Stat. Plan. Inference 66(1), 95–112 (1998a)

Drees, H.: On smooth statistical tail functionals. Scand. J. Stat. 25(1), 187–210 (1998b)

Eastoe, E.F., Tawn, J.A.: Modelling the distribution of the cluster maxima of exceedances of subasymptotic thresholds. Biometrika 99(1), 43–55 (2012)

Einmahl, J.H.: Poisson and Gaussian approximation of weighted local empirical processes. Stoch. Process. Appl. 70(1), 31–58 (1997)

Einmahl, J.H., Krajina, A., Segers, J., et al.: An M-estimator for tail dependence in arbitrary dimensions. Ann. Stat. 40(3), 1764–1793 (2012)

Goix, N., Sabourin, A., Clémençon, S.: Sparse representation of multivariate extremes with applications to anomaly ranking. In: Proceedings of the 19th AISTAT Conference, pp. 287–295 (2016)

Goix, N., Sabourin, A., Clémençon, S.: Sparse representation of multivariate extremes with applications to anomaly detection. J. Multivar. Anal. 161, 12–31 (2017)

Ledford, A.W., Tawn, J.A.: Statistics for near independence in multivariate extreme values. Biometrika 83(1), 169–187 (1996)

Peng, L.: Estimation of the coefficient of tail dependence in bivariate extremes. Stat. Probab. Lett. 43(4), 399–409 (1999)

Pickands, J. III: Statistical inference using extreme order statistics. Ann. Stat. 3(1), 119–131 (1975)

Qi, Y.: Almost sure convergence of the stable tail empirical dependence function in multivariate extreme statistics. Acta Math. Appl. Sin. (English series) 13(2), 167–175 (1997)

Ramos, A., Ledford, A.: A new class of models for bivariate joint tails. J. R. Stat. Soc. Ser. B 71(1), 219–241 (2009)

Resnick, S.I.: Heavy-Tail phenomena. In: Springer Series in Operations Research and Financial Engineering. Springer, New York (2007)

Resnick, S.I.: Extreme Values, Regular Variation and Point Processes. Springer Series in Operations Research and Financial Engineering. Springer, New York, reprint of the 1987 original (2008)

Rockafellar, R.T.: Convex Analysis. Princeton Mathematical Series, No. 28. Princeton University Press, Princeton (1970)

Schlather, M., Tawn, J.A.: Inequalities for the extremal coefficients of multivariate extreme value distributions. Extremes 5(1), 87–102 (2002)

Schlather, M., Tawn, J.A.: A dependence measure for multivariate and spatial extreme values: Properties and inference. Biometrika 90(1), 139–156 (2003)

Shorack, G.R., Wellner, J.A.: Empirical Processes with Applications to Statistics. SIAM, Philadelphia (2009)

Smith, R.: Max-stable processes and spatial extremes. Unpublished manuscript (1990)

Stephenson, A.: Simulating multivariate extreme value distributions of logistic type. Extremes 6(1), 49–59 (2003)

Tawn, J.A.: Modelling multivariate extreme value distributions. Biometrika 77(2), 245–253 (1990)

van der Vaart, A.W.: Asymptotic Statistics, Cambridge Series in Statistical and Probabilistic Mathematics, vol. 3. Cambridge University Press, Cambridge (1998)

van der Vaart, A.W., Wellner, J.A.: Weak Convergence and Empirical Processes. Springer, New York (1996)


Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This work was supported by a public grant as part of the Investissement d’avenir project, reference ANR-11-LABX-0056-LMH, LabEx LMH.

Appendices

Appendix A: Proofs

Proof of Proposition 1

For \(\emptyset \ne a \subset \{1,\ldots ,d\}\) and \(x \in [0, \infty )^{a}\), put

If a = {1,…, d}, then just write L rather than L{1,…, d}. Note that La(x) = L(xea) with  and that L{j}(xj) = R{j}(xj) and thus W(L{j}(xj)) = W{j}(xj). Einmahl et al. (2012, Theorem 4.6) show that, in the space ℓ∞([0, T]d) and under Conditions 1, 2 and 3, we have weak convergence


as n →∞. Here, we have taken a version of the Gaussian process W such that the trajectories x↦W(L(x)) are continuous almost surely.

As in Eq. 7, we have, for \(\emptyset \ne a \subset \{1,\ldots ,d\}\) and \(x \in [0, \infty )^{a}\), the identity

where \(x_{b} = (x_{j})_{j \in b}\). Hence, we can view the vector \((\sqrt {k}(\widehat {r}_{{a}} - r_{{a}} ))_{\emptyset \ne {a} \subset \{1,\ldots ,d\}}\) as the result of the application to \(\sqrt {k}(\widehat {\ell }-\ell )\) of a bounded linear map from \(\ell ^{\infty }([0, T]^{d})\) to \({\prod }_{\emptyset \ne {a} \subset \{1,\ldots ,d\}} \ell ^{\infty }([0, T]^{{a}})\). By the continuous mapping theorem, we obtain, in the latter space, the weak convergence

Here we used \(\ell (x_{b} e_{b}) = \ell _{b}(x_{b})\).

The set-indexed process \(W\) satisfies the remarkable property that \(W(A \cup B) = W(A) + W(B)\) almost surely whenever \(A\) and \(B\) are disjoint Borel sets of \([0, \infty ]^{d} \setminus \{\infty \}\) that are bounded away from \(\infty \): indeed, Eq. 9 implies \({\mathbb {E}}[\{W(A \cup B) - W(A) - W(B)\}^{2}] = 0\). It follows that the trajectories of \(W\) obey the inclusion-exclusion formula, so that, for \(\emptyset \ne a \subset \{1,\ldots ,d\}\) and \(x \in [0, \infty )^{a}\), we have, almost surely,

We can make this hold true almost surely jointly for all such \(a\) and \(x\): first, consider points \(x\) with rational coordinates only and then consider a version of \(W\) by extending \(W_{a}\) to points \(x\) with general coordinates via continuity. Similarly, since \(W_{\{j\}}(0) = W(\emptyset ) = 0\) almost surely, we have

We have thus shown weak convergence as stated in Eq. 10. □

Proof of Corollary 1

The weak convergence statement (13) is a special case of Eq. 10: set \(x = \boldsymbol {1}_{a}\). The covariance formula (14) follows from the fact that

the first equality follows from Eq. 9 and the last one from Eq. 3. We obtain Eq. 14 by expanding \(G_{a} = Z_{a}(\boldsymbol {1}_{a})\) using Eq. 10 and working out \({\mathbb {E}}[ G_{{a}} G_{{a}^{\prime }} ]\) with the above identity. □

Proof of Proposition 2

Let \(a = \{a_{1},\ldots ,a_{S}\} \subset \{1,\ldots ,d\}\) with \(S = |a| \ge 2\) and such that \(\mu ({\Delta }_{a}) > 0\). In view of Eq. 12, we have \(\kappa _{a} = g_{a}(\tau _{a})\) and \(\widehat {\kappa }_{{a}} = g_{{a}}(\widehat {\tau }_{{a}} )\) where \(\tau _{a} = (\chi _{a}, \chi _{a \setminus a_{1}}, \ldots , \chi _{a \setminus a_{S}})\), \(\widehat \tau _{{a}} = (\widehat \chi _{{a}}, \widehat \chi _{{a}\setminus {a}_{1}}, \ldots , \widehat \chi _{{a}\setminus {a}_{S}})\), and

Let \(\nabla g_{a}(x)\) denote the gradient vector of \(g_{a}\) evaluated at \(x\) and let 〈⋅,⋅〉 denote the scalar product in Euclidean space. Proposition 1 combined with the delta method as in van der Vaart (1998, Theorem 3.1) gives, as \(n \to \infty \),

the weak convergence holding jointly in a by Slutsky’s lemma and Proposition 1. The partial derivatives of ga are

Evaluating these at \(x = \tau _{a}\) and using \({\sum }_{j \in {a}} \chi _{{a} \setminus j} - (S-1) \chi _{{a}} = \mu ({\Delta }_{{a}})\) as in Eqs. 11 and 12, we find that

in accordance with the right-hand side in Eq. 16.

To calculate the asymptotic variance \(\sigma _{\kappa ,{a}}^{2}\), we introduce a few abbreviations: we write \(R_{b} = R_{b}(\boldsymbol {1}_{b})\) and \(W_{b}^{\cap } = W_{b}(\boldsymbol {1}_{b}) = W(R_{b})\) for \(\emptyset \ne b \subset \{1,\ldots ,d\}\) and we put \(W_{j} = W_{\{j\}}(1)\) for \(j = 1,\ldots ,d\), so that \(G_{{a}} = W_{{a}}^{\cap } - {\sum }_{j\in {a}}\dot {\chi }_{j,{a}} W_{j}\). We find

From the proof of Proposition 1, recall that \(W(A \cup B) = W(A) + W(B)\) almost surely for disjoint Borel sets \(A\) and \(B\) of \([0, \infty ]^{d} \setminus \{\infty \}\) bounded away from \(\infty \); moreover, for such \(A\) and \(B\), the variables \(W(A)\) and \(W(B)\) are uncorrelated. Since \(R_{a \setminus i}\) is the disjoint union of \(R_{a}\) and \(R_{a \setminus i} \setminus R_{a}\), we therefore have \(W_{{a}\setminus i}^{\cap } = W_{{a}}^{\cap } + W(R_{{a}\setminus i} \setminus R_{{a}})\) almost surely. In addition, \({\sum }_{i \in {a}} \chi _{{a}\setminus i} = \mu ({\Delta }_{{a}}) + (S-1)\chi _{{a}}\) by Eq. 11 applied to \(\nu = \mu \). As a consequence,

where

The \(S + 1\) variables \(W_{{a}}^{\cap } = W(R_{{a}})\) and \(W(R_{a \setminus j} \setminus R_{a})\), \(j \in a\), are all uncorrelated, since they involve evaluating \(W\) at disjoint sets; \(W_{j} = W(R_{\{j\}})\) is uncorrelated with \(W(R_{a \setminus j} \setminus R_{a})\), for the same reason. Moreover, \({\mathbb {E}}[ W_{{a}}^{\cap } W_{j} ] = {\Lambda } (R_{{a}} \cap R_{\{j\}}) = {\Lambda } (R_{{a}}) = \chi _{{a}}\) and similarly \({\mathbb {E}}[ W(R_{{a}\setminus i} \setminus R_{{a}}) W_{j} ] = {\Lambda } (R_{{a}\setminus i} \setminus R_{{a}}) = \chi _{{a} \setminus i} - \chi _{{a}}\) if \(i, j \in a\) and \(i \ne j\). Hence

As \({\sum }_{j \in {a}} (\chi _{{a} \setminus j} - \chi _{{a}}) = \mu ({\Delta }_{{a}})- \chi _{{a}}\) and \({\sum }_{i \in {a} \setminus j}(\chi _{{a} \setminus i} - \chi _{{a}}) = \mu ({\Delta }_{{a}}) - \chi _{{a} \setminus j}\), we obtain

Recall \(\kappa _{a}(x)\) in Eq. 15. We have

It follows that \(\dot {\kappa }_{j,{a}} = - \chi _{{a}}^{-2} K_{{a}, j} / (1/\kappa _{{a}})^{2} = - K_{{a},j} / \mu ({\Delta }_{{a}})^{2}\). By Eq. 30, we find that \(\sigma _{\kappa ,{a}}^{2} = \mu ({\Delta }_{{a}})^{-4} {\mathbb {V}\text {ar}}(H_{{a}} )\) is equal to the right-hand side of Eq. 17. □
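As a numerical illustration of the identities used in this proof, the following Python sketch evaluates \(\kappa _{a} = \chi _{a} / \mu ({\Delta }_{a})\) with \(\mu ({\Delta }_{a}) = {\sum }_{j \in a} \chi _{a \setminus j} - (S-1)\chi _{a}\). The function name `kappa_from_chi` and the input values are hypothetical and serve only as an example; we also assume the usual standardization under which \(\chi \) of a singleton equals 1.

```python
def kappa_from_chi(chi_a, chi_minus):
    """Conditional tail dependence coefficient from joint tail coefficients.

    chi_a     : value of chi for the full group a (assumed given)
    chi_minus : list of chi values for the groups a minus one element j, one per j in a
    """
    S = len(chi_minus)  # group size |a|
    # Identity used in the proof: sum_j chi_{a \ j} - (S - 1) * chi_a = mu(Delta_a)
    mu_delta = sum(chi_minus) - (S - 1) * chi_a
    if mu_delta <= 0:
        raise ValueError("mu(Delta_a) must be positive for kappa_a to be defined")
    return chi_a / mu_delta


# Pair a = {1, 2}: the sub-groups are singletons, for which chi = 1 is assumed.
print(kappa_from_chi(0.5, [1.0, 1.0]))

# Triplet with illustrative (made-up) pairwise coefficients.
print(kappa_from_chi(0.2, [0.4, 0.5, 0.3]))
```

For a pair, the formula reduces to \(\kappa _{a} = \chi _{a} / (2 - \chi _{a})\), which makes the dependence of \(\kappa _{a}\) on \(\chi _{a}\) explicit in the simplest case.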

Proof of Proposition 3

We only need to prove that \(\widehat {\sigma }^{2}_{\kappa ,{a}} = \sigma _{\kappa , {a}}^{2} + \mathrm {o}_{{\mathbb {P}}}(1)\) as n →∞. In view of the expressions (17) and (19) for \(\sigma _{\kappa , {a}}^{2}\) and \(\widehat {\sigma }_{\kappa ,{a}}\), it is enough to show that \(\dot {\kappa }_{j,{a}, n} = \dot {\kappa }_{{a},j} + \mathrm {o}_{{\mathbb {P}}}(1)\), with \(\dot {\kappa }_{j,{a}, n}\) in Eq. 18; indeed, Corollary 1 already gives consistency of \(\widehat {\mu }({\Delta }_{{a}})\) and \(\widehat {\chi }_{b}\). Now since \(2^{-1} k^{1/4} \{ \kappa _{{a}}(\boldsymbol {1}_{{a}} + k^{-1/4}\boldsymbol {e}_{j}) - \kappa _{{a}}(\boldsymbol {1}_{{a}} - k^{-1/4}\boldsymbol {e}_{j} ) \} \to \dot {\kappa }_{{a},j}\) as n →∞, a sufficient condition is that for some ε > 0,

In turn, Eq. 31 follows from weak convergence of \(k^{1/2}(\widehat {\kappa }_{{a}} - \kappa _{{a}} )\) as \(n \to \infty \) in the space \(\ell ^{\infty }([1 - \varepsilon , 1 + \varepsilon ]^{a})\). In light of the expressions of \(\widehat {\kappa }_{{a}}\) and \(\kappa _{a}\) in terms of the (empirical) joint tail dependence functions \(\widehat {r}_{b}\) and \(r_{b}\), respectively, weak convergence of \(k^{1/2}(\widehat {\kappa }_{{a}} - \kappa _{{a}} )\) follows from Proposition 1 and the functional delta method (van der Vaart 1998, Theorem 20.8). The calculations are similar to the ones for the Euclidean case in the proof of Proposition 2; an extra point to be noted is that if \(a\) is such that \(\mu ({\Delta }_{a}) > 0\), then the denominator in the definition of \(\kappa _{a}(x)\) in Eq. 15 is positive for all \(x\) in a neighbourhood of \(\boldsymbol {1}_{a}\). □

Proof of Proposition 4

Proposition 1 implies, as n →∞, the weak convergence

Now \(\widehat \eta _{{a}}^{P} = g(\widehat r_{{a}}(\boldsymbol {2}_{{a}}), \widehat r_{{a}} (\boldsymbol {1}_{{a}}))\) and \(\eta _{a} = 1 = g(r_{a}(\boldsymbol {2}_{a}), r_{a}(\boldsymbol {1}_{a})) = g(2\chi _{a}, \chi _{a})\), with \(g(x, y) = \log (2)/ \log (x/y)\); note that the function \(r_{a}\) is homogeneous. The gradient of \(g\) is \(\nabla g(x, y) = \log (2)\, (\log (x/y))^{-2}\, (-x^{-1}, y^{-1})\), so that the delta method gives

The first part of the assertion follows. As for the variance,

The function \(r_{a}\) is homogeneous of order 1, so that \(\partial _{j} r_{a}\) is constant along rays, that is, the function \(0 < t \mapsto \partial _{j} r_{a}(t \boldsymbol {x})\) is constant. Moreover, the measure \(\Lambda \) is homogeneous of order 1 too. In view of Eqs. 9 and 10, it follows that \({\mathbb {V}\text {ar}}(Z_{{a}}(t \boldsymbol {x}) ) = t {\mathbb {V}\text {ar}}(Z_{{a}}(\boldsymbol {x} ) )\) for \(t > 0\); in particular \({\mathbb {V}\text {ar}}(Z_{{a}}(\boldsymbol {2}_{{a}})) = 2 {\mathbb {V}\text {ar}}(Z_{{a}}(\boldsymbol {1}_{{a}} ))\). Further, \(\chi _{{a}} = (\mathrm {d} r_{{a}}(t, \ldots , t) / \mathrm {d}t)_{t = 1} = {\sum }_{j \in {a}} \dot {\chi }_{j,{a}}\) and thus

The covariance term is

with \(\boldsymbol {2}_{a} \wedge \iota _{j}\) as explained in the statement of the proposition. Since \({\sum }_{j\in {a}} \dot {\chi }_{j,{a}} = \chi _{{a}}\), we can simplify and find

Divide the right-hand side by \((2\chi _{a} \log 2)^{2}\) to obtain Eq. 22. □
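To make the estimator analysed in this proof concrete, here is a minimal Python sketch of \(\widehat \eta _{a}^{P} = \log (2) / \log \{\widehat r_{a}(\boldsymbol {2}_{a}) / \widehat r_{a}(\boldsymbol {1}_{a})\}\). The rank-based form of \(\widehat r_{a}\) used below (counting observations whose ranks exceed \(n - kx\) in every coordinate of \(a\)) is an assumption on our part; the definition in the main text may differ, for instance by a continuity correction. The function names `r_hat` and `eta_peng` are hypothetical.

```python
import numpy as np


def r_hat(X, a, k, x):
    """Assumed rank-based estimate of r_a at the point (x, ..., x).

    X : (n, d) data array, a : tuple of column indices, k : number of tail observations.
    """
    n = X.shape[0]
    # Column-wise ranks 1..n (ties within a column are assumed absent).
    ranks = X[:, list(a)].argsort(axis=0).argsort(axis=0) + 1
    # Count rows that lie in the upper tail of every selected column.
    return np.all(ranks > n - k * x, axis=1).sum() / k


def eta_peng(X, a, k):
    """Peng-type estimator log(2) / log(r_hat(2) / r_hat(1)); NaN if undefined."""
    r2 = r_hat(X, a, k, 2.0)
    r1 = r_hat(X, a, k, 1.0)
    if r1 == 0 or r2 <= r1:
        return float("nan")
    return np.log(2.0) / np.log(r2 / r1)


rng = np.random.default_rng(0)
u = rng.random(2000)
# Two identical columns: complete dependence, for which the estimate should equal 1.
print(eta_peng(np.column_stack([u, u]), (0, 1), k=100))
# Two independent columns: the estimate should be roughly 1/2.
print(eta_peng(rng.random((20000, 2)), (0, 1), k=1000))
```

The first example reflects the case \(\eta _{a} = 1\) treated in the proposition, since under complete dependence \(\widehat r_{a}(\boldsymbol {2}_{a}) = 2 \widehat r_{a}(\boldsymbol {1}_{a})\) holds exactly with this form of \(\widehat r_{a}\).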

Proof of Proposition 6

To lighten the notation, \(\emptyset \ne a \subset \{1,\ldots ,d\}\) is fixed and the subscript \(a\) is omitted throughout the proof. Introduce the tail empirical process \(Q_{n}(t) = \widehat T_{(n - \lfloor kt \rfloor )}\) for \(0 < t < n/k\). The key is to represent the Hill estimator as a statistical tail functional (Drees 1998a, Example 3.1) of \(Q_{n}\), i.e., \(\widehat \eta ^{H} = {\Theta }(Q_{n})\), where \(\Theta \) is the map defined for any measurable function \( z: (0,1]\to \mathbb {R}\) as \({\Theta }(z) = {{\int }_{0}^{1}} \log ^{+} \{ z(t) / z(1) \} \mathrm {d} t\) when the integral is finite and \(\Theta (z) = 0\) otherwise. Let \(z_{\eta } : t \in (0, 1] \mapsto t^{-\eta }\) denote the quantile function of a standard Pareto distribution with index \(1/\eta \); it holds that \(\Theta (z_{\eta }) = \eta \). The map \(\Theta \) is scale invariant, i.e., \(\Theta (tz) = \Theta (z)\), \(t > 0\).
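The representation \(\widehat \eta ^{H} = \Theta (Q_{n})\) can be checked numerically. Since \(Q_{n}\) is piecewise constant, equal to \(\widehat T_{(n-i)}\) on \([i/k, (i+1)/k)\), the integral defining \(\Theta (Q_{n})\) reduces to the familiar average of log-excesses over the \(k\) largest order statistics. The Python sketch below implements this reduction; the function name `hill_estimator` and the deterministic Pareto-quantile test sample are our own illustrative choices, not part of the paper.

```python
import numpy as np


def hill_estimator(T, k):
    """Theta(Q_n) for Q_n(t) = T_(n - floor(k t)): mean log-excess of the top k values.

    T : 1-d array of positive values, k : number of upper order statistics (k < len(T)).
    """
    T_sorted = np.sort(np.asarray(T, dtype=float))
    n = len(T_sorted)
    threshold = T_sorted[n - k - 1]  # T_(n-k), i.e. Q_n(1)
    top = T_sorted[n - k:]           # T_(n-k+1), ..., T_(n)
    # Each top value is >= threshold, so log^+ coincides with log here.
    return np.mean(np.log(top / threshold))


# Deterministic sample placed at Pareto quantiles z_eta(t) = t^(-eta) (illustrative only).
eta, n, k = 0.7, 5000, 1000
j = np.arange(1, n + 1)
T = ((n + 1 - j) / (n + 1)) ** (-eta)

print(hill_estimator(T, k))        # close to eta
print(hill_estimator(3.0 * T, k))  # scale invariance: same value up to rounding
```

The second call illustrates the scale invariance \(\Theta (tz) = \Theta (z)\) stated above, which is what allows \(Q_{n}\) to be replaced by a rescaled version in the sequel.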

The proof consists of three steps:

1. Introduce a function space \(D_{\eta , h}\) making it possible to control \(Q_{n}(t)\) and \(z_{\eta }(t)\) as \(t \to 0\). In this space and up to rescaling, \(Q_{n} - z_{\eta }\) converges weakly to a Gaussian process.

2. Show that the map \(\Theta \) is Hadamard differentiable at \(z_{\eta }\) tangentially to some well chosen subspace of \(D_{\eta , h}\).

3. Apply the functional delta method to show that \(\widehat \eta ^{H} = \Theta (Q_{n})\) is asymptotically normal and compute its asymptotic variance via the Hadamard derivative of \(\Theta \).

Step 1.

Let \(\varepsilon > 0\) and \(h(t) = t^{1/2 + \varepsilon }\), \(t \in [0, 1]\). Then \(h\in \mathcal {H}\), where

$$\mathcal{H} = \{z: [0,1]\to \mathbb{R} \mid z \text{ continuous, } \lim_{t\to 0} z(t) t^{-1/2} (\log\log(1/t))^{1/2} = 0 \}.$$

Introduce the function space

$$D_{\eta, h} = \{z: [0,1]\to \mathbb{R} \mid \lim_{t\to 0} t^{\eta} h(t) z(t) = 0 ; t\mapsto t^{\eta}h(t) z(t) \in D[0,1] \}, $$

where \(D[0, 1]\) is the space of càdlàg functions. Notice that \(z_{\eta } \in D_{\eta , h}\). Equip \(D_{\eta , h}\) with the seminorm \(\| z \|_{\eta , h} = \sup _{t \in (0, 1]} |t^{\eta } h(t) z(t)|\). Let \(m = \lceil n q^{\leftarrow }(k/n) \rceil \), with ⌈⋅⌉ the ceiling function, so that \(k/m \to \chi \); for self-consistency of the present paper, the roles of \(k\) and \(m\) are reversed compared to the notation in Draisma et al. (2004). From Draisma et al. (2004, Lemma 6.2), we have, for all \(t_{0} > 0\), in the space \(D_{\eta , h}\), the weak convergence

$$ \sqrt{k} \left( \frac{m}{n}Q_{n} - z_{\eta} \right) \rightsquigarrow \left( \eta t^{-(\eta + 1)} \bar W(t)\right)_{t \in [0,t_{0}]} $$(33)

where \(\bar W (t) = \tilde W(\boldsymbol {t}_{{a}})\), and \(\tilde W\) is defined as in the statement of Proposition 6. Indeed, the process \(\bar W\) in the statement from Draisma et al. (2004, Lemmata 6.1 and 6.2) has the same distribution as W1(ta) in the case \(\chi = 0\); recall that our \(\chi \) is denoted by \(l\) in Draisma et al. (2004). Put \(U_{i,j} = 1 - F_{j}(X_{i,j})\), and let \(U_{(1),j} \le \ldots \le U_{(n),j}\) be the order statistics of \(U_{1,j},\ldots ,U_{n,j}\). In the case \(\chi > 0\), \(\bar W\) equals in distribution Wdra(ta) where Wdra appears in Lemma 6.1 in the cited reference as the limit in distribution (for \(a = \{1, 2\}\)), for x ∈ Ea, of

From Proposition 1 and Slutsky’s Lemma, we have \({\Delta }_{n,k,m} \rightsquigarrow \chi ^{-1/2} Z_{{a}}\) in \(\ell ^{\infty }([0, 1]^{a})\). Therefore, Wdra \(= \chi ^{-1/2} Z_{a}\), as claimed.

Step 2.

The right-hand side of Eq. 33 belongs to \(\mathcal {C}_{\eta ,h} = \{ z \in D_{\eta ,h} \mid z \text { is continuous}\}\). To apply the functional delta method (van der Vaart 1998, Theorem 20.8), we must verify that the restriction of \(\Theta \) to \(\bar D_{\eta , h}\) is Hadamard differentiable tangentially to \(\mathcal {C}_{\eta ,h}\), with derivative \(\Theta ^{\prime }\), where \(\bar D_{\eta , h}\) is a subspace of \(D_{\eta , h}\) such that \({\mathbb {P}}(Q_{n} \in \bar D_{\eta ,h})\to 1\) as \(n \to \infty \); see the remark following Condition 3 in Drees (1998a). Then it will follow from the scale invariance of \(\Theta \), the identities \({\Theta }(Q_{n}) = \widehat \eta ^{H}\) and \(\Theta (z_{\eta }) = \eta \), and the weak convergence in Eq. 33 that

$$ \sqrt{k} \left( \widehat\eta^{H} - \eta \right) = \sqrt{k} \left( {\Theta}\left( \frac{m}{n}Q_{n}\right) - {\Theta}(z_{\eta}) \right) \rightsquigarrow {\Theta}^{\prime}\left[\left( \eta t^{-(\eta + 1)} \bar W(t)\right)_{t \in [0,1]}\right] $$(34)

as \(n \to \infty \). From Drees (1998a, Example 3.1), the restriction of \(\Theta \) to \(\bar D_{\eta ,h}\), the subset of functions in \(D_{\eta , h}\) that are positive and nonincreasing, is indeed Hadamard differentiable; letting \(\nu \) denote the signed measure \(\mathrm {d}\nu (t) = t^{\eta } \mathrm {d}t - \mathrm {d}\varepsilon _{1}(t)\), with \(\varepsilon _{1}\) a point mass at 1, the derivative is

$${\Theta}^{\prime}(z) = {{\int}_{0}^{1}} t^{\eta} z(t) \mathrm{d} t - z(1) = {\int}_{[0,1]} z(t) \mathrm{d} \nu (t). $$

Step 3.

The weak limit in Eq. 34 is thus equal to \({\int }_{[0,1]} \eta t^{-(\eta + 1)} \bar W(t) \mathrm {d} \nu (t)\). From Shorack and Wellner (2009, Proposition 2.2.1), the latter random variable is centered Gaussian with variance

$$\sigma^{2} = \int\!\!\! {\int}_{[0,1]^{2}} \eta^{2} (st)^{-(\eta + 1)} {\mathbb{C}\text{ov}}(\bar W(s), \bar W(t)) \mathrm{d} \nu(s) \mathrm{d}\nu(t). $$

By definition of \(\nu \) and by symmetry of the covariance,

$$\begin{array}{@{}rcl@{}} \sigma^{2} / \eta^{2} &=& 2 \underbrace{{\int}_{s = 0}^{1}{\int}_{t = 0}^{s} (st)^{-1} {\mathbb{C}\text{ov}}(\bar W(s), \bar W(t)) \mathrm{d} t \mathrm{d} s}_{A}\\ &&- 2 \underbrace{{\int}_{s = 0}^{1} {\mathbb{C}\text{ov}}(\bar W(s), \bar W(1)) s^{-1} \mathrm{d} s}_{B} + {\mathbb{V}\text{ar}}(\bar W(1)). \end{array} $$

For any \(s \in (0, 1)\),

$$\begin{array}{@{}rcl@{}} {\int}_{t = 0}^{s} {\mathbb{C}\text{ov}}(\bar W(s), \bar W(t))(st)^{-1} \mathrm{d} t & =&{\int}_{u = 0}^{1} {\mathbb{C}\text{ov}}(\bar W(s), \bar W(us))(su)^{-1} \mathrm{d} u \\ &=& {\int}_{u = 0}^{1} {\mathbb{C}\text{ov}}(\bar W(1), \bar W(u))(u)^{-1} \mathrm{d} u = B. \end{array} $$

The penultimate equality follows from \({\mathbb {C}\text {ov}}(\bar W(\lambda s),\bar W(\lambda t)) = \lambda {\mathbb {C}\text {ov}}(\bar W(s),\bar W(t))\) for \(\lambda > 0\) and \(s, t \in (0, 1]\). Integrating over \(s \in (0, 1)\) gives \(A = B\), so that the first two terms cancel and \(\sigma ^{2} = \eta ^{2} {\mathbb {V}\text {ar}}(\bar W(1))\), as required.

□

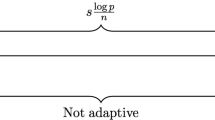

Appendix B: CLEF algorithm and variants

The CLEF algorithm is described at length in Chiapino and Sabourin (2016). For completeness, its pseudo-code is provided below. The underlying idea is to iteratively construct pairs, triplets, quadruplets… of features that are declared ‘dependent’ whenever \(\widehat \kappa _{{a}} \ge C\) for some user-defined tolerance level C > 0. Varying this criterion produces three variants of the original algorithm, namely CLEF-Asymptotic, CLEF-Peng, and CLEF-Hill. The pruning stage of the algorithm is the same for all three variants.
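To convey the bottom-up search described above, the following Python sketch grows candidate groups in the style of the Apriori algorithm (Agrawal and Srikant 1994) and accepts a group \(a\) whenever \(\widehat \kappa _{a} \ge C\). It is a simplified illustration under stated assumptions, not the pseudo-code of Chiapino and Sabourin (2016): the rank-based form of \(\widehat \chi _{a}\), the candidate-generation rule (all sub-groups of size \(|a| - 1\) must have been accepted), and the pruning step that keeps only maximal groups are our assumptions, and all function names are hypothetical.

```python
from itertools import combinations

import numpy as np


def tail_indicators(X, k):
    """Boolean (n, d) array: True where X[i, j] is among the k largest of column j."""
    n = X.shape[0]
    ranks = X.argsort(axis=0).argsort(axis=0) + 1  # ranks 1..n, no ties assumed
    return ranks > n - k


def chi_hat(E, a, k):
    """Assumed estimator: number of rows extreme in every column of a, divided by k."""
    return E[:, list(a)].all(axis=1).sum() / k


def kappa_hat(E, a, k):
    """kappa = chi_a / mu(Delta_a), mu(Delta_a) = sum_j chi_{a minus j} - (|a| - 1) chi_a."""
    chi_a = chi_hat(E, a, k)
    mu = sum(chi_hat(E, b, k) for b in combinations(a, len(a) - 1)) - (len(a) - 1) * chi_a
    return chi_a / mu if mu > 0 else 0.0


def clef_sketch(X, k, C):
    """Return all accepted groups, from pairs upwards, as sorted tuples of column indices."""
    E, d = tail_indicators(X, k), X.shape[1]
    level = [a for a in combinations(range(d), 2) if kappa_hat(E, a, k) >= C]
    accepted = list(level)
    while level:
        current, size = set(level), len(level[0]) + 1
        candidates = set()
        for a in level:
            for j in range(d):
                if j not in a:
                    b = tuple(sorted(a + (j,)))
                    # Keep b only if every sub-group of size |b| - 1 was accepted.
                    if all(s in current for s in combinations(b, size - 1)):
                        candidates.add(b)
        level = sorted(b for b in candidates if kappa_hat(E, b, k) >= C)
        accepted.extend(level)
    return accepted


def maximal_groups(groups):
    """Assumed pruning stage: drop every group strictly contained in another one."""
    return [a for a in groups if not any(set(a) < set(b) for b in groups)]


rng = np.random.default_rng(1)
u, v = rng.random(2000), rng.random(2000)
X = np.column_stack([u, u, u, v])  # columns 0-2 fully dependent, column 3 independent
print(maximal_groups(clef_sketch(X, k=100, C=0.3)))
```

Swapping `kappa_hat(E, b, k) >= C` for a test based on an asymptotic variance estimate or on an estimator of the coefficient of tail dependence would correspond, in spirit, to the variants named above; only the acceptance criterion changes, while the search and pruning logic stay the same.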


Cite this article

Chiapino, M., Sabourin, A. & Segers, J. Identifying groups of variables with the potential of being large simultaneously. Extremes 22, 193–222 (2019). https://doi.org/10.1007/s10687-018-0339-3
