Abstract

This paper presents a new methodology for analyzing the spatiotemporal variability of water table levels and redesigning a groundwater level monitoring network (GLMN) using the Bayesian Maximum Entropy (BME) technique and a multi-criteria decision-making approach based on ordered weighted averaging (OWA). The spatial sampling is determined using a hexagonal gridding pattern and a new method, which is proposed to assign a removal priority number to each pre-existing station. To design temporal sampling, a new approach is also applied to consider uncertainty caused by lack of information. In this approach, different time lag values are tested by regarding another source of information, which is simulation result of a numerical groundwater flow model. Furthermore, to incorporate the existing uncertainties in available monitoring data, the flexibility of the BME interpolation technique is taken into account in applying soft data and improving the accuracy of the calculations. To examine the methodology, it is applied to the Dehgolan plain in northwestern Iran. Based on the results, a configuration of 33 monitoring stations for a regular hexagonal grid of side length 3600 m is proposed, in which the time lag between samples is equal to 5 weeks. Since the variance estimation errors of the BME method are almost identical for redesigned and existing networks, the redesigned monitoring network is more cost-effective and efficient than the existing monitoring network with 52 stations and monthly sampling frequency.

Similar content being viewed by others

Introduction

Spatiotemporal variability analysis of water table levels is of great importance for managers of groundwater resources. In this regard, geostatistical interpolation techniques have been mostly used for spatial groundwater level monitoring network (GLMN) design. However, less direct consideration is given to determine sampling frequencies (temporal variability analysis). Some examples of spatial and temporal sampling in GLMN design have been reviewed in subsections 1.1 and 1.2. In addition, due to the importance of spatial sampling patterns in GLMN design, various sampling schemes used in different studies are introduced in subsection 1.3.

Overview of classical geostatistics used in GLMN design

The theoretical basis of geostatistics has been described in detail by several authors (Goovaerts 1997; Isaaks and Srivastava 1989; Kitanidis 1997). This branch of statistics focuses on spatial or spatiotemporal (S-T) datasets and originally developed to predict probability distribution of ore grades at locations where no observed data points are available (Krige 1951). There are several studies which have been conducted to assess or design GLMN from a geostatistical viewpoint. A number of recent researches on this issue are reviewed here. Fasbender et al. (2008) applied a Bayesian data fusion technique to integrate the results of a spatial Kriging interpolation method with information obtained from a drainage network and a digital elevation model (DEM). Peeters et al. (2010) extended the approach presented by Fasbender et al. (2008) to consider the results of a numerical groundwater model in the data fusion technique. Nourani et al. (2011) applied a hybrid, an artificial neural network-geostatistics method for the prediction of water table level in time and space. In their work, a calibrated geostatistical model is used to produce a spatial pattern of water table level. Manzione et al. (2012) presented an integration of time series analysis and geostatistics to estimate water table level for land-use planning and groundwater management. In their research, the kriging method has been utilized for spatial interpolation of the simulated water levels. Varouchakis and Hristopulos (2013) compared a number of deterministic and stochastic methods to investigate water table level spatial variability in the Mires Basin of Mesara Valley in Greece. The results showed that ordinary and universal kriging techniques outperform deterministic counterparts (i.e., Inverse Distance Weighting (IDW) and Minimum Curvature (MC)). Recently, Barca et al. (2015) and Ran et al. (2015) also used the kriging method for designing GLMN. Chikodzi and Mutowo (2016) also utilized linear regression analysis to obtain a relationship between groundwater levels and river stages. In their study, the kriging interpolation method was used to estimate the river stage in several random points. The results showed the good performance of applying the river stage for groundwater level estimation. The main shortcoming of these studies is that the correlations that exist between temporal and spatial measured data are not considered in the kriging interpolation method. In other words, several studies, which have been carried out to design GLMN through geostatistical methods, are believed to be in the category of spatiotemporal analysis. But in fact, these studies only represent a number of spatial analyses performed at different times and do not consider the correlations existed between temporal and spatial data obtained from monitoring stations. For example, Delbari et al. (2013) investigated groundwater depth variations over 13 years during wet and dry periods using the simple kriging (SK), ordinary kriging (OK), and inverse distance weighting (IDW) methods. The results of the experiment showed that OK can outperform other techniques. The variogram modeling was spatially conducted by using the GS+ software and was utilized during interpolation methods in different years. As another example, Barca et al. (2013) employed the evolutionary polynomial regressions (EPR) technique to calibrate and validate a groundwater level predictive tool. A nested variogram model was utilized to construct S-T maps of groundwater level. They applied the kriging standard deviation as a criterion to optimize sampling sites and frequencies. Recently, Hosseini and Kerachian (2017) utilized a spatiotemporal kriging interpolation method which considered the spatiotemporal correlations existed between samples.

Researchers have often employed different criteria (i.e., the kriging variance or standard deviation of the estimation error) to identify the regions of highest priority to set up new monitoring stations. Sometimes, these criteria have been applied in optimization problems. For example, Sophocleous et al. (1982) utilized error analysis of universal kriging to examine a GLMN located in northwest Kansas, USA. Olea (1984) applied universal kriging to minimize the sampling requirements in Equus Beds of Kansas, USA. The average and maximum standard error of estimation were used as global criteria of sampling efficiency. Kumar et al. (2005) examined the accuracy of the existing observation wells in a GLMN using universal kriging. They proposed some additional observation wells to improve the present level of accuracy in the areas having a high estimation error. Recently, Zhou et al. (2013) proposed a methodology to design a regional GLMN based on groundwater regime zone mapping in the Beijing plain, China. They applied a linear variogram model to calculate the standard deviation of interpolation error between the designed and existing GLMN. Triki et al. (2013) also utilized the universal kriging variances to optimize the existing GLMN in an aquifer in Tunisia. This optimization problem has been performed through a cross-validation test. Their findings demonstrated that by setting up 33 new monitoring wells, the average standard kriging deviation would be reduced from 26 to 11 m. Bhat et al. (2015) applied a geostatistical method to optimize numbers and locations of monitoring stations situated in the Upper Florida aquifer based on a predefined groundwater level prediction error. They recommended a hexagonal grid network to obtain a uniform level of information about GLMN as well as the minimum required accuracy. Barca et al. (2015) and Ran et al. (2015) also utilized the kriging variance in their research. The above reviews show that geostatistical estimation errors have been widely used in previous studies. However, these criteria alone do not seem enough for designing GLMNs and should be considered in combination with other criteria. In this paper, in addition to the BME estimation error, some new criteria are proposed to provide a more comprehensive approach for GLMN design.

Overview of Bayesian maximum entropy approach used in GLMN design

Bayesian maximum entropy (BME), as a powerful member of modern geostatistics, was initially proposed by Christakos (1990, 1991). In the previous studies, BME has been utilized to integrate general knowledge (obtained from general laws and principles) and specific knowledge (obtained through experiences and the specific situation) into S-T analysis (Serre and Christakos 1999). The ability of the BME paradigm to provide more accurate estimation than classical geostatistics has been shown in previous studies (Christakos and Li 1998; Christakos 1998).

Based on the authors’ knowledge, there are only a few scientific studies that have been carried out by considering the BME approach in estimation of water depth in a GLMN. For example, Yu and Chu (2009); Yu and Lin (2012, 2015) applied the BME technique to evaluate S-T variations of piezometric heads in some observation wells. Yu and Chu (2009) also utilized some rotated empirical orthogonal functions (REOF) to analyze the spatiotemporal groundwater level variations. To minimize systematic biases resulted from sampling, the BME technique was used to generate evenly distributed spatiotemporal estimations. In addition, the REOF method was applied to examine the spatiotemporal groundwater level data estimated using the BME technique.

The flexibility of the BME approach in the combination of uncertain (soft) data and measured (hard) data as well as its spatiotemporal mapping ability, which considers the S-T dependencies, was the motivation to apply this method in the proposed methodology. It should be noted that soft data refers to data not obtained through field measurements and can be of interval or probabilistic type (Christakos et al. 2002). Recently, Alizadeh and Mahjouri (2017), applied soft data to effectively improve BME estimations for redesigning the groundwater quality monitoring network of the Tehran aquifer in Iran. Based on the authors’ knowledge, in previous applications of BME in designing GLMNs, only observed data have been considered in S-T analysis, while one of the main features of the BME method is the use of soft data to improve the accuracy of the estimations. In the current paper, both hard and soft data are used for the spatiotemporal design of GLMNs.

Spatial sampling patterns (strategies) of groundwater networks

Spatial sampling patterns can affect the accuracy of geostatistical analysis and should be taken into consideration before any measurement. In monitoring networks, various sampling schemes have been used by different researchers. In the following sections, several papers, which deal with the application of hexagonal sampling patterns in GLMN, are reviewed.

Olea (1984) examined the efficiency of different sampling patterns, such as uniform hexagonal, square, triangular, and cluster data points used for analyzing data obtained by a GLMN in the Equus Beds aquifer of Kansas, USA. Based on the findings, a regular hexagonal pattern, in which monitoring stations are placed in cell centers, has been the most efficient pattern in terms of predefined indices of sampling accuracy (i.e., average standard error). Davis and Olea (1998) also proposed a stratified hexagonal sampling network, in which the region is divided into regular hexagonal cells. In this structure, observation wells are randomly located within hexagonal cells. Recently, Bhat et al. (2015) adopted a hexagonal gridding to achieve a uniform level of data. Three different stratified hexagonal grids were proposed in their research. Furthermore, the relation among prediction standard error, the number of required monitoring stations, and well spacing were investigated. Hosseini and Kerachian (2017) also proposed a new methodology for spatiotemporal redesigning of a groundwater level monitoring network in Iran using a hexagonal gridding pattern. The concept of Value of Information (VOI) and a data fusion technique were considered in their research. These studies show that hexagonal gridding patterns are very efficient; however, their applications in the optimal design of monitoring systems have been very limited.

In this paper, a combination of hexagonal gridding pattern and the BME technique is utilized in a new methodology to analyze the S-T variability of water table level and redesign a GLMN. Review of the literature shows that previous works on GLMN design have been based on only observed data obtained from monitoring wells, but the methodology proposed here integrates soft (uncertain) information available at unmeasured points from a numerical groundwater model as well as hard (measured) information at observation wells. Furthermore, some new design criteria are defined to have a more comprehensive GLMN design. The ordered weighted averaging (OWA) operator is also utilized for aggregating the opinion of several experts about the multiple criteria considered in a decision-making process for selecting the best S-T configuration. The methodology has been applied to designing optimal S-T sampling schemes for the Dehgolan plain in Kurdistan Province located in northwestern Iran.

The rest of this paper is arranged as follows. In Section 2, a flowchart of the proposed methodology is presented. Then, each step is described separately in more detail to provide a better understanding of the process. The study area of this paper is introduced in Section 3. Finally, the results and main findings are presented in Section 4.

Methodology

Figure 1 illustrates a flowchart of the proposed methodology. In the following sections, different parts of the flowchart are described in detail.

Data collection

Groundwater table level observations, which are considered as hard data, are required in the BME estimation process. On the other hand, outputs of a numerical groundwater simulation model can be taken into account as a valuable source of information available throughout the system. In general, spatial and temporal groundwater level estimations obtained using geostatistical methods are more accurate than the results of numerical simulation models, except in areas where monitoring wells are limited but large variations can be seen in water table level. Considering the existing uncertainty, in this paper, the outputs of a calibrated MODFLOW simulation model are taken into account as soft data (uncertain data). So, the BME method can be utilized due to its flexibility considering uncertain kind of information (soft data) in combination with measured information (hard data). In previous studies, the capability of the BME method to improve the accuracy of the estimations when additional information is available has been proved.

According to the above explanations, all required information including observed water level data and groundwater level values (calculated using a calibrated numerical simulation model) should be collected.

Data analysis

Geostatistical techniques are more appropriate for normally distributed and no trend variables (Finkenstadt et al. 2006). So, in the presence of spatial or temporal trends, it is necessary to determine the trends and subtract them from the original non-stationary and non-homogeneous random field. In addition, parametric tests, such as variance analysis, are based on the assumption that the data set follows a Gaussian distribution. In this paper, trend analysis is performed and then different normality tests are utilized to the residual being spatially homogeneous and temporally stationary using the SPSS software. If the residuals fail the assumption of normality, they are transformed to be normal using a suitable transformation function.

Identifying potential monitoring locations

Determining potential locations for monitoring wells are of great importance in monitoring network design. In this paper, these candidate points are identified using a regular hexagonal gridding pattern. Since application of different hexagons’ side lengths results in various configurations of monitoring wells, the regular hexagonal grids of different size obtained using the Geographical Information System (GIS) are considered to cover different situations.

Figure 2 shows an example of monitoring wells configuration obtained based on a hexagonal gridding pattern. For each of the hexagonal grids, new monitoring wells (new stations) are proposed to add to the center of hexagonal cells not containing any pre-existing monitoring stations in the area within a defined threshold being a circle with a radius equal to half of the hexagon’s side length. If the new stations are located outside the boundary of the study area (i.e., point 'A' in Fig. 2), they are shifted from the cell centers to points near the boundary of the region (i.e., point 'B' in Fig. 2). The pre-existing stations being outside the defined threshold value are not considered in the calculations and are proposed to remove from the monitoring network (removed stations). On the other hand, all the pre-existing stations located in the area within a defined threshold value are retained in the network (retained stations). By this way, there may be more than one retained station within the threshold per grid cell. Finally, at the end of this step, spatial sampling patterns in which new, retained, and removed stations are known will be identified for each hexagon’s side length.

Spatiotemporal simulation using Bayesian maximum entropy

In this paper, the Bayesian maximum entropy (BME) approach is implemented for estimating the S-T distribution of water table level in the study area.

The BME approach seeks to provide an efficient framework for organizing and incorporating one’s scientific experience in terms of the general (G) knowledge base, which refers to background knowledge, and specific (S) knowledge base, which is obtained through one’s experiences with the specific situation. Naturally, the union of the G and S knowledge bases is physical knowledge (K) (Christakos et al. 2002). This nonlinear estimator, BME, is a much more accurate and powerful method than any type of classical kriging technique, because it can provide an efficient way to incorporate different sources of physical knowledge bases (KBs), uncertain information, higher statistical moments, etc. into S-T analysis (Christakos et al. 2001). There are three main stages in BME analysis including prior stage, pre-posterior stage, and posterior stage, which are briefly summarized below (Christakos et al. (2002)):

-

(i)

Prior stage: This stage includes G knowledge and a basic set of assumptions. The consideration of the G knowledge base at this stage expresses the fact that physical applications are not started with complete ignorance. The goal of this stage is to maximize the prior information given G knowledge.

-

(ii)

Pre-posterior stage: This stage corresponds to the S knowledge base including hard data, which are accurate measurements obtained from the field observations, and soft data, which are uncertain information that can be represented as interval values or probability statements.

-

(iii)

Posterior stage: This stage is also called the integration stage in which the former two stages are integrated. This integration leads to the final outcome of the BME analysis, which is the posterior probability distribution function (PDF).

A more detailed description of the mentioned stages is discussed in Christakos et al. (2002).

Calculation of the space-time covariance for the water-level random field

Variogram/covariance function, which indicates the relation between the data points and their distances, is a kind of statistical correlation function. Making a good variogram is of great importance prior to any estimation process of a random variable. After calculation of experimental variogram/covariance obtained based on the all observed data, a number of theoretical variogram/covariance functions, such as the linear, the spherical, the Gaussian, and the exponential models, are needed to fit the experimental variogram/covariance. The shape of a theoretical variogram/covariance model is characterized in terms of its particular parameters, which are the sill, the range, and the nugget effect. A more detailed description of variogram parameters can be found in any textbook of geostatistics.

Since the BME method uses covariance model parameters for its estimation, a S-T covariance model has been developed in this paper using hard data. It is also possible to make a theoretical variogram model because parameters of this model can easily be transformed to those of theoretical covariance model.

There are different structures of separable variogram/covariance models such as sum, product, and product-sum that have been used in previous studies (De Cesare et al. 1997; De Cesare et al. 2001). Furthermore, several non-separable S-T variogram/covariance models have also been proposed in the literature (Cressie and Huang 1999). In this paper, several separable spatiotemporal covariance models are made and the best-fitted model is selected based on the most common measure of goodness of fit, which is the coefficient of determination (R 2). Then, the parameters of the selected model are used in the estimation process.

Performing a spatiotemporal analysis using hard data and defining priorities for reducing a number of pre-existing stations

In this paper, a kriging method, which is a special case of limited application of BME, is used to do a S-T analysis based on the S-T covariance model prepared in the previous section. In this kind of BME method, only hard data of desired stations are considered in calculations. To this end, a square grid with cells of size 2000 × 2000 square-meter is considered in the estimation process. The outcomes of each S-T analysis are the mean value of the posterior pdf and the related estimation error variance on the grid of points covering the study area. These outputs are extracted for each month during which ground truth data are available.

In this paper, a new methodology is proposed for a reduction in the number of pre-existing stations located in the plain. A goal of this methodology is to assign a reduction priority number to each station. Given that N is the number of pre-existing stations located in the region, the procedures of the method are described as follows:

-

Step 1:

Choose one of the pre-existing stations

-

Step 2:

Do a S-T analysis using the rest of the stations

-

Step 3:

Calculate the value of S1 expressed as Eq. 1 and substitute it into Eq. 2:

In the above equations, S1 and S2 are, respectively, average value of the estimation error variances and the standard deviation of S1, which is obtained based on 95.4% of the data being within ±2× standard deviations from the mean. V xt , denotes the value of the estimation error variance at location x and time t; Tt is total number of time steps; Tx is total number of grid points located inside the study area; and n is the total number of the estimation points at time and space.

-

Step 4:

Return the removed station, select another station for reduction and repeat steps 2 and 3

-

Step 5:

Repeat step 4 until all pre-existing stations have been selected

-

Step 6:

Remove the station associated with the minimum amount calculated in step 3 and assign its reduction priority number (RPN)

-

Step 7:

Repeat steps 1 through 6 for the rest of the pre-existing stations, which their numbers are equal to N-RPN until the priority number of each pre-existing station in the region has been assigned

It should be noted that the priority number of the first removed station is equal to 1. For the rest of the stations, this approach will also be continued in the same way. In addition, reduction of a station associated with the minimum amount of S2 reflects the fact that such station has the least impact on the total variance estimation error of the system. So, it has the first priority over other stations for removal.

Computing the mean and variance estimation error for different hexagons’ side lengths and sampling frequencies

In this part, the flexibility of BME in applying soft data is taken into consideration in the estimation process to improve the accuracy of the calculations and incorporate uncertainty of other sources of information. To define the spatial sampling location, the approach described in section 2.3 is used. As mentioned before, for several hexagonal grids, the locations of new stations, removed stations, and retained stations have been determined. So, for any hexagonal grids, spatial sampling is specified using the geographical locations of new stations and retained stations. To design temporal sampling also called sampling frequency, a new approach is proposed to consider uncertainty caused by limited information. In this approach, different water table level data (a time lag value of 4 weeks) are available from the existing monitoring system. The weeks containing these data are shown in Fig. 3a, which displays ellipses drawn around their names. It is clear that the simulation of the system at each time lag requires having the complete access to the information. For example, if a time lag value of 2 weeks is chosen, information should be available every 2 weeks as shown in Fig. 3a. This figure displays temporal sampling which should be taken at weeks which their related pictures contain stations inside (red points). Since this information does not always exist, another source of information is required for data gap filling. In this paper, simulation results of a numerical groundwater flow model are used as another source of information and soft data are generated using the calibrated simulation model. These soft data can be used to fill data gap of 2nd, 6th and 10th weeks, and so on. Another important point taken into account in this approach is to incorporate the uncertainty resulting from considering different starting points for system analysis. For example, by choosing a time lag value of 2 weeks, the first sample can be taken during the second week as shown in Fig. 3a or first week as shown in Fig. 3b. In the former case, measured data of fourth week, eighth week and so on are considered in the calculation process. In the later one, these data are not used; therefore, only soft data can be considered in BME estimation. By this way, this kind of uncertainty can be incorporated. As another example, for a time lag value of 3 weeks, the starting point for sampling can be during the first week, second week, or third week that use of any one of them can lead to different results.

In addition to the application of soft data mentioned above, there are similar cases that need to be considered. In new stations, there are not any data even during weeks in which observed data are available. Therefore, in such cases, soft data are also used in the estimation process.

In this paper, a large number of scenarios are defined to examine the use of different hexagonal grids and time lag values and incorporate the uncertainty caused by considering different starting points for temporal sampling. In each scenario, estimation error variances are calculated on the grid of points covering the study area. Details of this gridding were presented in section 2.4.2. Furthermore, from the perspective of time, the estimations are calculated during each week. For each hexagonal grid, due to the use of various starting points, different values of estimation error variances are obtained for each time lag value. The average of these variances is considered in the calculation process. It should also be noted that, in this paper, the estimation at a given time step is only calculated by considering the data from previous time steps. Finally, in each scenario, 2× standard deviation of the average value of the estimation error variances, S2 in Eq. 2, is calculated and is later considered in the decision-making process. It should also be noted that the Eq. 1 in which different starting points are considered, can be represented as Eq. 3, where, j denotes different starting points and ∆t indicates time lag between samples:

Redesigning a monitoring network using different criteria and engineering judgments

Defining different criteria

In this paper, a multi-criteria decision-making technique is utilized to decide upon the optimum alternative. Therefore, different criteria are established to be used for the evaluation of an available set of alternatives introduced in the previous section as different scenarios. These criteria are listed in Table 1 and described in detail in the following sections:

Maximizing the number of pre-existing stations retained in the system

As mentioned in section 2.3, for each of the hexagonal grids, some of the pre-existing stations located in the area within a defined threshold value are retained in the network and are used in the BME estimation process. Because of the importance of preserving historical records of the pre-existing stations, it is necessary to keep these stations in the system as much as possible. Therefore, in this paper, maximizing the number of pre-existing stations retained in the study area is defined as one of the criteria involved in decision-making process.

Minimizing the number of new stations proposed based on the regular hexagonal gridding

Application of hexagonal sampling patterns in GLMN requires that several new stations be added to the center of hexagonal cells which not contain any pre-existing monitoring stations in the area within a defined threshold. Because of the high cost of drilling and maintaining new stations, minimizing the number of new proposed stations is considered as another criterion.

Minimizing the standard deviation of the average value of the estimation error variances

The main feature of geostatistical techniques is to provide the estimation error variances being the measure of the accuracy of estimates at data points with no observations. Minimizing these variances is also defined as a criterion. These variances are calculated in a manner previously described in section 2.4.3.

Minimizing the sum of the priority rankings related to removed stations

In this paper, removal priority number of each pre-existing station located in the study area is assigned as described in section 2.4.2. On the other hand, as mentioned in section 2.3, pre-existing stations being outside the defined threshold are proposed to be removed from the monitoring network. The location of these stations may be different depending on the hexagonal grid taken into consideration. The priority rankings of these removed stations are also assigned based on the proposed removal priority number. The lower value of the sum of the priority rankings can reflect that removed stations based on the hexagonal gridding pattern are more consistent with the stations resulted from the methodology proposed for assigning the removal priority numbers in section 2.4.2. So, the other criterion designed to involve in the decision-making process is to minimize the sum of the priority rankings of removed stations for each scenario.

Minimizing the mean absolute error calculated using removed stations

Mean absolute error (MAE) is a common quantity used to measure the accuracy of estimations by calculating the average magnitude of a set of forecasts errors. In this section, removed stations, which are not considered in the estimation process, are applied to examine the accuracy of the BME method. To this end, the estimation values at removed stations are compared to the corresponding ground truth values via MAE index, which its minimization is considered as another criterion.

Maximizing time lag between samples

Sampling can be quite a time-consuming and expensive process. Therefore, the maximizing time lag between samples can be one of the criteria of decision makers in designing a GLMN.

It should be noted that the cost factor is implicitly considered in both criteria introduced in sections 2.5.1.2 and 2.5.1.6.

Gathering experts’ opinions on each criterion and using fuzzy linguistic quantifiers to identify the weight of each criterion based on each expert’s relative power

To enhance the quality of decision-making, the opinions of some experts about each criterion are gathered using fuzzy linguistic quantifiers and quantified by using triangular fuzzy numbers shown in Fig. 4. The maximum membership principle is applied here as the defuzzification method.

In addition, decision-making powers of experts are different from each other. Therefore, different weights are considered for experts to obtain a group opinion on each criterion. In this paper, the group weight of each criterion is calculated as follows:

where Gw(i) is the group weight of criterion i; w j is the weight assigned to decision maker j; and Cn ij denotes expert j’s opinion on criterion i quantified by using triangular fuzzy numbers. N e , the upper limit of summation, indicates the number of experts involved in decision-making.

The Gw(i) obtained from Eq. 4 is multiplied by the calculated values of criterion i normalized using the following equation:

where X n , i is the data point x i normalized between 0 and 1. x b , i and x u , i are, respectively, the ideal and unfavorable values among all alternatives for criterion i.

Application of the OWA operator for decision-making

In this paper, the ordered weighted averaging (OWA) is utilized as an aggregation operator (Yager 1988). The framework of this operator is briefly introduced below:

An OWA operator of dimension M is a mapping fof I M to I (f : I M → I , I = [0, 1]). Mapping f has an associated weighting vector w (w = [w 1 w 2 w 3 … . w M ]T, \( \sum_k{w}_k=1 \); w k ∈ [0, 1], where w i s are ordered weights of OWA operator). The main equation of this operator is presented as follows (Yager (1988)):

where b k is the kth largest member of the data set a (a = a 1 , a 2 , … , a M )). In this equation, the members of data set a are ranked in descending order. M is the number of data considered in the aggregation process. In this paper, the data set a of each scenario is arranged according to the numerical values of each criterion.

It should also be noted that the ordered weights of OWA operator, w k , are determined based on optimism degree of the decision maker. An optimistic decision maker assigns the largest weight to the first rank and the smallest weight to the last rank. In contrast, a pessimistic decision maker does the reverse action. In this paper, the method proposed by Fullér and Majlender (2003) is used to derive the ordered weights. This method is a nonlinear optimization problem exploring weights based on the entropy model proposed by O’Hagan (1990). By solving this optimization problem for different optimism degrees, each time a unique vector of ordered weights will be obtained. Fullér and Majlender (2003) have solved this problem by applying the Kuhn-Tucker second order condition. Their results are presented in Eqs. 7–9:

where θ is the optimism degree of the decision maker. In this paper, different optimism degrees varying from zero to one are considered to solve the decision-making problem and, therefore, different ordered weights are obtained.

Analysis of the selected alternative as final decision

In this section, for a certain optimism degree, the best scenario is selected and examined in detail to determine the final configuration for sampling locations and frequencies. It should be noted that the configuration of the final GLMN may be partially revised based on engineering judgments.

It is worth mentioning that the data obtained from the revised monitoring network should also present the variations of actual hydrogeological characteristics of the aquifer. As a calibrated groundwater flow simulation model is used in the proposed methodology, it can consider the spatial and temporal variations of the groundwater conditions. In addition, due to the nature of the hexagonal sampling patterns, the stations of the redesigned monitoring network are distributed over the entire area. Therefore, the changes in hydrogeological characteristics of the aquifer can be adequately covered by applying the final configuration.

Case study

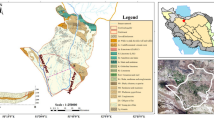

The study area of this paper is the Dehgolan plain, which contains an unconfined aquifer, in Kurdistan Province located in northwestern Iran. Groundwater is a major natural resource in this region and proper use of it can lead to the agricultural development. On the other hand, uncontrolled exploitation of groundwater resources in some parts of this plain has caused a significant drop in water table levels. The Dehgolan plain has an arid to semi-arid climate in flat areas and a very cold climate in the upper elevations. The average annual rainfall of this plain is about 380 mm. The boundaries of the groundwater balance area utilized in this paper are shown in Fig. 5. These boundaries cover an area of 632.91 km2. The locations of 52 observation wells used in this paper are also indicated in Fig. 5. Monthly water table level data for the period of observations from 1987 to 2009 are considered in this paper.

An MODFLOW-based groundwater flow simulation model has been calibrated and verified (validated) for the Dehgolan plain by the Mahab Ghods Consulting Engineering Company (2014). The model consisted of a square grid with a cell size of 500 × 500 m2, which contains 2603 active cells in a single layer. A steady-state model has been calibrated using the monitoring data obtained in 2006 in order to estimate aquifer hydraulic conductivities in different cells. In addition, due to the need for long-term simulation, a transient model has also been calibrated to obtain a specific yield of the aquifer media in different zones. The root mean square error (RMSE), mean error (ME), and mean absolute error (MAE) of simulated water level fluctuations for the verification period from September 2001 to September 2006 are 1.82, 0.29, and 1.33 m, respectively, which represent the good performance of the model. Except in areas where the large variations can be seen in water level fluctuations, the kriging methods outperform the MODFLOW-based model. So, the simulated water level values of the numerical model can be utilized as uncertain kind of data (i.e., soft data). In this paper, the MODFLOW results are used for generating soft data as interval values. The soft data are used in the BME model to improve the accuracy of estimations.

Results

In this section, the proposed methodology described in detail in section 2 has been applied for designing optimal spatiotemporal sampling schemes for the Dehgolan plain. The results obtained in different steps of the flowchart have been presented in the following sections:

Initial data analysis

In this paper, the monthly water table level values of 52 pre-existing monitoring wells have been utilized as hard data during 1987–2009. Fortunately, there are no missing data over an entire period of observations. In addition, water table level values obtained based on a numerical simulation model are also available, which have been used for generating soft data. It should also be noted that, in this paper, a probabilistic soft data type is considered. In the probabilistic type, it is possible to derive the probability density function (pdf) by using available evidence. Before generating soft data, a seasonal difference-based trend analysis is performed on model outputs as well as ground truth values. Figure 6a, b displays point scatter plots of ground truth data before and after the removal of linear trend along different directions, in which the x-axes represent longitude, latitude, and time, respectively. According to Fig. 6a, linear spatial and temporal trends can be detected. In addition, the time series displays seasonal components that have been estimated and subtracted from the original data as shown in Fig. 6b. The same point scatter plots are drawn for the groundwater flow model outputs being available since October 2001 through September 2006 (Fig. 7a, b).

After trend analysis, different normality tests have been utilized to the residual being spatially homogeneous and temporally stationary using the SPSS software. Since the residual random fields of both sources of data fail the assumption of normality, they have been transformed into a normally distributed variable.

Potential monitoring location

In this paper, for defining potential monitoring locations based on the hexagonal gridding pattern, different hexagons’ side lengths obtained using GIS have been considered to cover various configurations of monitoring wells. Figure 8a, b, c indicates examples of regular hexagonal grids of side lengths 2000, 3600, and 5000 m in the Dehgolan plain under an ideal condition in which there is a potential monitoring station at each hexagon center. Some of these stations have been shifted to points near the boundary of the study area by considering a threshold value as described in section 2.3.

Due to the importance of preserving historical records of the pre-existing stations, potential monitoring locations differ from those existing under ideal condition. Figure 9 shows two configurations of potential monitoring stations derived by a regular hexagonal grid of side length 4000 m obtained based on both the ideal condition and proposed methodology described in subsection 2.3. According to this figure, nine new monitoring wells have been added to the center of hexagonal cells not containing any pre-existing monitoring stations in the area within a defined threshold (a circle with a radius equal to half of the hexagon’s side length) and a limited number of them have been shifted to points near the boundary of the region. As noted before, the procedures mentioned above have been implemented for different hexagons’ side lengths. In addition, spatial sampling patterns in which new, retained, and removed stations are known to have been identified for each hexagon’s side length. Furthermore, it is also possible to remove some of the pre-existing stations from cells, which contains more than one pre-existing station, based on engineering judgments.

Space-time covariance model

In this paper, several different separable covariance models have been tested and finally, an exponential/Gaussian model, which contains an exponential spatial covariance model and a Gaussian temporal covariance model, has been selected as the best theoretical model obtained by fitting its parameters to experimental data. Figure 10 indicates the best S-T covariance model obtained here using the water table level observations of 52 pre-existing monitoring wells. This model can describe important characteristics of the S-T random field. The mathematical structure of the S-T separable covariance function developed here is as follows:

where C(r, τ) represents the S-T covariance function in spatial lag r and time lag τ. S, SR, and TR are, respectively, the sill, spatial range, and temporal range parameters. The values of these parameters are calculated as 1.37, 5.75e + 004, and 7.42, respectively. Later, these parameters are used in the estimation process.

Removal priority numbers assigned to each pre-existing station

Table 2 shows the removal priority numbers calculated using the methodology proposed in subsection 2.4.2. The location of each station is shown in Fig. 5. The priority numbers presented in Table 2 are obtained using the S-T covariance model shown in Fig. 10. These results have been utilized for assigning a priority ranking to each removed station. According to Table 2, for stations numbers 5, 27, 36, and 46, the priority numbers cannot be obtained. The reason is that it is impossible to do a spatiotemporal analysis because the number of stations involved in the estimation process is not enough. To make better use of the results shown in Table 2, relative changes in total variance estimation error have been plotted against the number of removed stations in Fig. 11. It is clear that increasing the number of removed stations results in more changes in total variance estimation error compared to the existing situation in which there are 52 observation wells in the region. As can be seen in Fig. 11, removal of eight stations having the eight higher priorities leads to a 3.6% increase in the total variance estimation error, which is not a significant increase.

Application of BME

The methodology proposed in subsection 2.4.3 has been implemented here to compute the mean and variance estimation error for different hexagons’ side lengths and sampling frequencies. Figure 12a displays 2× standard deviation of the average value of the estimation error variances, S2 in Eq. 2, plotted against time lag for a regular hexagonal grid of side length 2000 m. It should be noted that the same plot for a regular hexagonal grid of side length 4000 m is slightly different from that given in Fig. 12a. In this paper, different time lag values of 1 to 16 weeks (4 months) are tested. As shown in Fig. 12a, an increase in time lag value results in a higher S2. A greater magnification of Fig. 12a has been displayed in Fig. 12b to illustrate the differences among S2 values related to different starting points. The average of these differences has been considered in the decision-making process.

As noted before, a large number of scenarios have been defined to examine the use of different hexagonal grids as well as consider various time lag values. Figure 13 displays S2 values plotted against the hexagon’s side length considering different time lags. Indeed, this figure shows the S2 values calculated for all scenarios defined in this paper.

Redesign of the water table level monitoring network

Application and comparison of different criteria

In this paper, different scenarios have been established, each of which has been evaluated based on multiple conflicting criteria. In Figs. 14 through 18, the calculated values of different criteria have been plotted against the hexagon’s side length by considering a specific time lag value of 2 weeks in criteria 3 and 5. The calculated values of criteria 1, 2, and 6, which were explained in subsection 2.5.1, are the same for all time lag values.

Figure 14 displays the relationship between relative numbers of retained stations and the hexagon’s side length. This figure does not show a consistent trend but indicates an upward trend in the first part of the chart up to about 0.37 and a downward trend afterward. Also, it can be replaced by a linear trend. As noted before, maximizing this criterion is one of the objectives considered in this paper. In Fig. 15, the calculated values of the second criterion have been plotted against the hexagon’s side length and a downward trend can be easily recognized. Figure 16 indicates the relationship between 2× standard deviation of the average value of the estimation error variances, criterion 3, and the hexagon’s side length. Unlike the previous figure, this figure displays an upward trend. Figure 16 is the same as Fig. 13 but has only been shown for a time lag value of 2 weeks. In Fig. 17, the criterion 4, the sum of the priority rankings related to the removed stations has been considered. According to this figure, a downward trend can be seen. Finally, the fifth criterion being mean absolute error (MAE) calculated using removed stations has been plotted against the hexagon’s side length for all time lag values (Fig. 18). This figure does not display any particular trend, but it is the same for different time lag values. As mentioned in section 2.5.1, in this paper, the minimization of the criteria 2, 3, 4, and 5 have been taken into consideration. Furthermore, the maximization of the time lag between samples described in section 2.5.1.6 is the last objective considered in the decision-making problem. There is not any need to plot the values of this criterion against the hexagon’s side length; this is because, for a specific time lag, it will be constant over the hexagon’s side length.

It is necessary to mention that the relative number of stations in criteria 1 and 2 are utilized here, which are, respectively, the number of retained stations divided by the number of pre-existing stations and the number of new stations divided by the total number of hexagonal cells. The results obtained from this section have been utilized in the decision-making process.

Multiple criteria decision-making

In this paper, the opinions of three experts have been gathered for decision-making. Since decision-making powers of experts are different from each other, the weight values of the first, second, and third expert have been considered as 0.5, 0.3, and 0.2, respectively. Table 3 presents group weights of the criteria calculated using Eq. 4 based on opinions of the three experts. According to this table, the maximum group weight has been assigned to criterion 3 being the standard deviation of the average value of the estimation error variances. The group weight of each criterion has been multiplied by the respective normalized value obtained from Eq. 5.

Application of OWA operator requires that the ordered weights be properly determined based on different optimism degrees of the decision maker(s). These weights have been obtained through Eqs. 7 to 9 and have been shown in Table 4 for different optimism degree. In this table, the elements b 1 to b 6 , in which b kis the kth largest member of the data set,have been ranked in descending order for each scenario. Finally, the OWA operator has been utilized to aggregate the calculated value of each criterion for all scenarios defined in this paper.

After obtaining the aggregated results for each scenario, both the hexagon’s side length and time lag have been plotted against the ranking order for different optimism degree (Fig. 19). It should be noted that due to administrative problems, it is not possible to conduct sampling every day. Therefore, time lag values of 2 to 16 weeks have been considered for decision-making. As shown in Fig. 19, for each value on the horizontal axis, two values have been obtained. One of the values represents the selected length and the other is related to the selected time lag. Due to space limitation, only the 20 better ranking have been displayed for each optimism degree. The results presented in Fig. 19 can be effectively used for designing a water table level monitoring network in the Dehgolan plain. Based on the optimism degree and preferences of a decision maker, one of the scenarios can be easily chosen using Fig. 19.

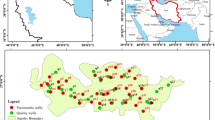

In the following, the first ranking order of a specific optimism degree (i.e., 0.1) is examined. The characteristics of this scenario have been listed in Table 5. Furthermore, Fig. 20a displays the configuration of monitoring stations for a regular hexagonal grid of side length 3600 m obtained based on proposed methodology. According to this figure, 16 new monitoring stations have been added to the center of hexagonal cells not containing any pre-existing monitoring stations in the area within a defined threshold and a limited number of them have been shifted to points near the boundary of the region. In addition, the number of pre-existing stations retained in the system is equal to 17 in this configuration. For better comparison, the existing situation of GLMN is also represented in Table 5 and Fig. 20b. Considering the hexagonal gridding pattern, Fig. 20b shows an inappropriate distribution of the stations in the current GLMN of the Dehgolan plain.

According to Fig. 20a, the stations of the redesigned monitoring network are distributed over the entire area. This suggests that the changes in hydrogeological characteristics of the aquifer can be adequately covered by applying the final configuration.

Since it is necessary to examine the performance of the methodology proposed here, the 2× standard deviation of the average value of the estimation error variances obtained based on the new designed monitoring network has been compared with those of the existing situation. According to Table 6, this value has increased by about 2.1%, which is not significant. It should be noted that this small increase in 2× standard deviation could be due to using soft data for filling data gaps. It is necessary to mention that the number of stations suggested in the selected scenario is equal to 33, representing a significant decrease compared with the existing situation (52 stations). So, it can be concluded that the selected scenario can significantly reduce maintenance and operational costs of the monitoring stations. It is also possible to remove one of the pre-existing stations from cell A shown in Fig. 20 based on engineering judgments. It should also be noted that the results presented in Table 5 and Fig. 20 can also be obtained for other cases shown in Fig. 19 by considering the optimism degree and preferences of a decision maker. Finally, due to the good performance of the proposed methodology, its application for revision of the GLMN of the Dehgolan plain is suggested.

Summary and conclusion

In this paper, a new methodology was proposed and applied for redesigning an optimal spatiotemporal sampling network for the Dehgolan plain in the Kurdistan Province located in northwestern Iran. At first, a new method, which can accurately be used for removing stations based on kriging estimation variances, was suggested to assign a removal priority number to each pre-existing station. Then, a new hexagonal gridding pattern was used to determine the spatial sampling. Despite the good performance of this pattern, especially when using geostatistical techniques, it has rarely been used in previous studies. In this paper, to design temporal sampling, a new approach was proposed to consider uncertainty caused by lack of information in areas with no monitoring station. In this approach, different time lag values of 1 to 16 weeks were tested by considering another source of information (i.e., results of the numerical groundwater flow model) for data gap filling. In addition, the uncertainty resulted from considering different starting times for modeling was regarded. The flexibility of the BME method was taken into consideration to incorporate the uncertainties mentioned above under a large number of scenarios (i.e., different hexagons’ side lengths and time lags). To improve the accuracy of the BME estimations, the results of a calibrated MODFLOW-based groundwater flow simulation model were used for generating soft data as interval values. Using this new idea, estimations of the groundwater flow model have been considered as a new source of information in the designing process. In addition, a multi-criteria decision-making technique (i.e., the ordered weighted averaging (OWA)) considering six main criteria was applied for aggregating the group opinions of some experts about the criteria by regarding their relative powers. By this way, the final monitoring network can be chosen based on the different criteria, rather than using only the kriging estimation variance, which has widely been used in literature. After obtaining the aggregated results for each scenario, both the hexagon’s side length and time lag were plotted against the ranking order of scenarios considering different optimism degrees in the OWA technique. The results provided the required information for choosing a GLMN for the Dehgolan plain under different construction and operational costs constraints. In the selected scenario, a configuration of 33 monitoring stations for a regular hexagonal grid of side length 3600 m was proposed, in which the time lag between samples is equal to 5 weeks. The results showed that the new monitoring network is more cost-effective and efficient than the existing network, which includes 52 stations with the monthly sampling frequency. It should be noted that the methodology proposed here is general and can be applied for redesigning any kind of monitoring network. To simplify the proposed methodology, the number of criteria in section 5 of the flowchart (Fig. 1) can be decreased. In the spatial and temporal analysis, the hexagons’ side lengths and time lags between samples can be also increased to reduce the computational cost of the methodology.

In this paper, a regular hexagonal gridding pattern with fixed side length was considered for redesigning GLMNs. As the density of potential monitoring stations can vary in different parts of the network, application of hexagonal gridding patterns with varying side lengths is suggested for future works. Furthermore, some other criteria related to time series characteristics of observed data (e.g., power of statistical trends) can be considered in temporal sampling design. The irregular temporal sampling can be also investigated.

References

Alizadeh, Z., and Mahjouri, N. (2017). A spatiotemporal Bayesian maximum entropy-based methodology for dealing with sparse data in revising groundwater quality monitoring networks: the Tehran region experience. Environmental Earth Sciences 76:436.

Barca, E., Calzolari, M. C., Passarella, G., & Ungaro, F. (2013). Predicting shallow water table depth at regional scale: optimizing monitoring network in space and time. Water Resources Management, 27(15), 5171–5190.

Barca, E., Passarella, G., Vurro, M., & Morea, A. (2015). MSANOS: data-driven, multi-approach software for optimal redesign of environmental monitoring networks. Water Resources Management, 29(2), 619–644.

Bhat, S., Motz, L. H., & Pathak, C. (2015). Geostatistics-based groundwater-level monitoring network design and its application to the Upper Floridan aquifer, USA. Environmental Monitoring and Assessment, 187, 4183. doi:10.1007/s10661-014-4183-x.

Chikodzi, D., & Mutowo, G. (2016). Using river altitude determined from a SRTM DEM to estimate groundwater levels of the Tokwe and Mutirikwi watersheds in Zimbabwe. Journal of Geographic Information System, 8(1), 65–72. doi:10.4236/jgis.2016.81007.

Christakos, G. (1990). A Bayesian/maximum-entropy view to the spatial estimation problem. Mathematical Geology, 22(7), 763–777.

Christakos, G. (1991). Some applications of the BME concepts in geostatistics. In W. T. Grandy & L. H. Schick (Eds.), Maximum entropy and Bayesian methods (pp. 215–229). Dordrecht: Kluwer Acad. Publ.

Christakos, G. (1998). Spatiotemporal information systems in soil and environmental sciences. Geoderma, 85(2–3), 141–179.

Christakos, G., & Li, X. (1998). Bayesian maximum entropy analysis and mapping: a farewell to kriging estimators. Mathematical Geology, 30(4), 435–462.

Christakos, G., Serre, M. L., & Kovitz, J. (2001). BME representation of particulate matter distributions in the state of California on the basis of uncertain measurements. Journal of Geophysical Research., 106(D9), 9717–9731.

Christakos, G., Bogaert, P., & Serre, M. L. (2002). Temporal GIS. New York: Springer-Verlag Press.

Cressie, N., & Huang, H. C. (1999). Classes of nonseparable, spatio-temporal stationary covariance functions. Journal of the American Statistical Association, 94, 1330–1340.

Davis, J. C., & Olea, R. A. (1998). Hexagonal basis of the observation well network. In: R. D. Miller, J. C. Davis, and R. A. Olea (Eds.), 1998 Annual water level raw data report for Kansas. Open-File Report No. 98–7 (electronic version): Kansas Geological Survey.

De Cesare, L., Myers, D., Posa, D. (1997). Spatial-temporal modeling of SO2 in milan district. In: E. Y. Baafi, N. A. Schofield (eds) Geostatistics Wollongong Vol. 96(2), (pp. 1031–1042). Dordrecht: Kluwer Academic.

De Cesare, L., Myers, D., & Posa, D. (2001). Product-sum covariance for space-time modeling. Environmetrics, 12, 11–23.

Delbari, M., Motlagh, M. B., & Amiri, M. (2013). Spatio-temporal variability of groundwater depth in the Eghlid aquifer in southern Iran. Earth Sciences Research Journal, 17(2), 105–114.

Fasbender, D., Peeters, L., Bogaert, P., and Dassargues, A. (2008). Bayesian data fusion applied to water table spatial mapping. Water Resources Research, 44(12). doi:10.1029/2008WR006921.

Finkenstadt, B., Held, L., & Isham, V. (2006). Statistical methods for spatio-temporal systems. USA: CRC Press, Chapmann and hall.

Fullér, R., & Majlender, P. (2003). On obtaining minimal variability OWA operator weights. Fuzzy Sets and Systems, 136, 203–215.

Goovaerts, P. (1997). Geostatistics for natural resources evaluation. New York: Oxford University Press.

Hosseini, M., and Kerachian, R. (2017). A data fusion-based methodology for optimal redesign of groundwater monitoring networks. Journal of Hydrology 552:267–282.

Isaaks, E., & Srivastava, R. M. (1989). An introduction to applied geostatistics. New York: Oxford University Press.

Kitanidis, P. K. (1997). Introduction to geostatistics: application to hydrogeology. Cambridge: Cambridge University Press.

Krige, D. G. (1951). A statistical approach to some basic mine valuation problems on the Witwatersrand. Journal of the Chemical, Metallurgical and Mining Society of South Africa, 52(6), 119–139.

Kumar, S., Sondhi, S. K., & Phogat, V. (2005). Network design for groundwater level monitoring in upper Bari Doab canal tract, Punjab, India. Irrigation and Drainage, 54(4), 431–442.

Mahab Ghods Consulting Engineering Company (2014). Integrated studies and mathematical groundwater modeling of the Ghorveh and Dehgolan plains. Technical Report (in Persian).

Manzione, R. L., Wendland, E., & Tanikawa, D. H. (2012). Stochastic simulation of time-series models combined with geostatistics to predict water-table scenarios in a Guarani Aquifer System outcrop area, Brazil. Hydrogeology Journal, 20(7), 1239–1249.

Nourani, V., Ejlali, R. G., & Alami, M. T. (2011). Spatiotemporal groundwater level forecasting in coastal aquifers by hybrid neural networks-geostatistics model: a case study. Environmental Engineering Science, 28(3), 217–228. doi:10.1089/ees.2010.0174.

O’Hagan, M. (1990). Using maximum entropy-ordered weighted averaging to construct a fuzzy neuron (pp. 618–623). Proc., 24th Annual IEEE Asilomar Conf. on Signals, Systems and Computers, Pacific Grove, Calif.

Olea, R. A. (1984). Sampling design optimization for spatial functions. Mathematical Geology, 16(4), 369–392.

Peeters, L., Fasbender, D., Batelaan, O., and Dassargues, A. (2010). Bayesian data fusion for water table interpolation: incorporating a hydrogeological conceptual model in kriging. Water Resources Research, 46(8). doi:10.1029/2009WR008353.

Ran, Y., Li, X., Lu, X., & Lian, Y. (2015). Optimal selection of groundwater-level monitoring sites in the Zhangye Basin, Northwest China. Journal of Hydrology, 525, 209–215.

Serre, M. L., & Christakos, G. (1999). Modern geostatistics: Computational BME analysis in the light of uncertain physical knowledge—the Equus Beds study. Stochastic Environmental Research and Risk Assessment, 13(1), 1–26.

Sophocleous, M., Paschetto, J. E., & Olea, R. A. (1982). Groundwater network design for Northwest Kansas, using the theory of regionalized variables. Ground Water, 20(1), 48–58.

Triki, I., Zairi, M., & Dhia, H. B. (2013). A geostatistical approach for groundwater head monitoring network optimization: case of the Sfax superficial aquifer (Tunisia). Water and Environment Journal, 27(3), 362–372.

Varouchakis, E. A., & Hristopulos, D. T. (2013). Comparison of stochastic and deterministic methods for mapping groundwater level spatial variability in sparsely monitored basins. Environmental Monitoring and Assessment, 185(1), 1–19.

Yager, R. R. (1988). On ordered weighted averaging aggregation operators in multi-criteria decision making. IEEE Transactions on Systems, Man, and Cybernetics, 18(1), 183–190.

Yu, H. L., & Chu, H. J. (2009). Understanding space-time patterns of groundwater system by empirical orthogonal functions: a case study in the Choshui River alluvial fan, Taiwan. Journal of Hydrology, 381(3–4), 239–247. doi:10.1016/j.jhydrol.2009.11.046.

Yu, H. L., & Lin, Y. C. (2012). Recharge signal identification based on groundwater level observations. Environmental Monitoring and Assessment, 184(10), 5971–5982.

Yu, H. L., & Lin, Y. C. (2015). Analysis of space–time non-stationary patterns of rainfall–groundwater interactions by integrating empirical orthogonal function and cross wavelet transform methods. Journal of Hydrology, 525, 585–597.

Zhou, Y., Dong, D., Liu, J., & Li, W. (2013). Upgrading a regional groundwater level monitoring network for Beijing Plain, China. Geoscience Frontiers, 4(1), 127–138.

Acknowledgments

This research has been financially supported by Iran National Science Foundation (INSF) under grant number no. 95849284. The authors would like to thank Dr. Mark Serre from the University of North Carolina for his invaluable clarification of some aspects related to the BME. Also, we would like to acknowledge the Mahab Ghods Consulting Engineering Company for sharing the MODFLOW-based groundwater flow simulation model of the Dehgolan plain.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Hosseini, M., Kerachian, R. A Bayesian maximum entropy-based methodology for optimal spatiotemporal design of groundwater monitoring networks. Environ Monit Assess 189, 433 (2017). https://doi.org/10.1007/s10661-017-6129-6

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10661-017-6129-6