Abstract

Detecting communities on graphs has received significant interest in recent literature. Current state-of-the-art approaches tackle this problem by coupling Euclidean graph embedding with community detection. Considering the success of hyperbolic representations of graph-structured data in recent years, an ongoing challenge is to set up a hyperbolic approach to the community detection problem. The present paper meets this challenge by introducing a Riemannian geometry based framework for learning communities on graphs. The proposed methodology combines graph embedding on hyperbolic spaces with Riemannian K-means or Riemannian mixture models to perform community detection. The usefulness of this framework is illustrated through several experiments on generated community graphs and real-world social networks, as well as comparisons with the most powerful baselines. The code implementing hyperbolic community embedding is available online at https://www.github.com/tgeral68/HyperbolicGraphAndGMM.

Notes

For the Flickr dataset, we run only one experiment for each parameter setting; moreover, the number of parameters tested is lower than for the other datasets.

ComE code repository is available at https://github.com/vwz/ComE.

References

Afsari B (2011) Riemannian \(L^p\) center of mass: existence, uniqueness and convexity. Proc Am Math Soc 139(2):655–673

Alekseevskij D, Vinberg EB, Solodovnikov A (1993) Geometry of spaces of constant curvature. In: Geometry II. Springer, pp 1–138

Annamalai N, Mahinthan C, Rajasekar V, Lihui C, Yang L, Shantanu J (2017) graph2vec: learning distributed representations of graphs. In: Proceedings of the 13th international workshop on mining and learning with graphs (MLG)

Arnaudon M, Barbaresco F, Yang L (2013) Riemannian medians and means with applications to radar signal processing. J Sel Top Signal Process 7(4):595–604

Arnaudon M, Dombry C, Phan A, Yang L (2012) Stochastic algorithms for computing means of probability measures. Stoch Process Appl 122(4):1455–1473

Arnaudon M, Miclo L (2014) Means in complete manifolds: uniqueness and approximation. ESAIM Probab Stat 18:185–206

Arnaudon M, Yang L, Barbaresco F (2011) Stochastic algorithms for computing p-means of probability measures, geometry of Radar Toeplitz covariance matrices and applications to HR Doppler processing. In: International radar symposium (IRS), pp 651–656

Barachant A, Bonnet S, Congedo M, Jutten C (2012) Multiclass brain-computer interface classification by Riemannian geometry. IEEE Trans Biomed Eng 59(4):920–928

Becigneul G, Ganea O-E (2019) Riemannian adaptive optimization methods. In: International conference on learning representations (ICLR)

Boguñá M, Papadopoulos F, Krioukov D (2010) Sustaining the internet with hyperbolic mapping. Nat Commun 1(1):1–8

Bonnabel S (2013) Stochastic gradient descent on Riemannian manifolds. IEEE Trans Autom Control 58(9):2217–2229

Cavallari S, Cambria E, Cai H, Chang KC, Zheng VW (2019) Embedding both finite and infinite communities on graphs [application notes]. IEEE Comput Intell Mag 14(3):39–50

Cavallari S, Zheng VW, Cai H, Chang KC-C, Cambria E (2017) Learning community embedding with community detection and node embedding on graphs. In: Proceedings of the 2017 ACM on conference on information and knowledge management (CIKM). ACM, pp 377–386

Chamberlain B, Deisenroth M, Clough J (2017) Neural embeddings of graphs in hyperbolic space. In: Proceedings of the 13th international workshop on mining and learning with graphs (MLG)

Chami I, Ying Z, Ré C, Leskovec J (2019) Hyperbolic graph convolutional neural networks. In: Wallach H, Larochelle H, Beygelzimer A, Alché-Buc FD, Fox E, Garnett R (eds) Advances in neural information processing systems, vol 32. Curran Associates Inc, pp 4868–4879

Cho H, DeMeo B, Peng J, Berger B (2019) Large-margin classification in hyperbolic space. In: Proceedings of Machine Learning Research, vol 89. PMLR, pp 1832–1840

Cui P, Wang X, Pei J, Zhu W (2019) A survey on network embedding. IEEE Trans Knowl Data Eng 31(5):833–852

Ganea O, Becigneul G, Hofmann T (2018) Hyperbolic neural networks. In: Advances in neural information processing systems 31 (NIPS). Curran Associates, Inc., pp 5345–5355

Gromov M (1987) Hyperbolic Groups. Springer, New York, pp 75–263

Grover A, Leskovec J (2016) Node2vec: scalable feature learning for networks. In: Proceedings of the 22nd ACM international conference on knowledge discovery & data mining (SIGKDD), pp 855–864

Helgason S (2001) Differential geometry, Lie groups, and symmetric spaces. American Mathematical Society

Heuveline S, Said S, Mostajeran C (2021) Gaussian distributions on Riemannian symmetric spaces, random matrices, and planar Feynman diagrams. Preprint arXiv:2106.08953

Krioukov D, Papadopoulos F, Kitsak M, Vahdat A, Boguñá M (2010) Hyperbolic geometry of complex networks. Phys Rev E 82:036106

Lancichinetti A, Fortunato S, Radicchi F (2008) Benchmark graphs for testing community detection algorithms. Phys Rev E 78(4):046110

Lin F, Cohen WW (2010) Power iteration clustering. In: Proceedings of the 27th international conference on machine learning (ICML)

Liu Q, Nickel M, Kiela D (2019) Hyperbolic graph neural networks. In: Wallach H, Larochelle H, Beygelzimer A, Alché-Buc FD, Fox E, Garnett R (eds) Advances in neural information processing systems, vol 32. Curran Associates Inc, pp 8230–8241

Mathieu E, Le Lan C, Maddison CJ, Tomioka R, Teh YW (2019) Continuous hierarchical representations with Poincaré variational auto-encoders. In: Wallach H, Larochelle H, Beygelzimer A, Alché-Buc FD, Fox E, Garnett R (eds) Advances in neural information processing systems, vol 32. Curran Associates Inc, pp 12565–12576

Mikolov T, Sutskever I, Chen K, Corrado GS, Dean J (2013) Distributed representations of words and phrases and their compositionality. In: Advances in neural information processing systems 26 (NIPS). Curran Associates, Inc., pp 3111–3119

Miller GA (1995) WordNet: a lexical database for English. Commun ACM 38(11):39–41

Nickel M, Kiela D (2017) Poincaré embeddings for learning hierarchical representations. In: Advances in neural information processing systems 30 (NIPS). Curran Associates, Inc., pp 6338–6347

Ovinnikov I (2018) Poincaré Wasserstein autoencoder. In: Bayesian deep learning workshop of advances in neural information processing systems (NIPS)

Pennec X (2006) Intrinsic statistics on Riemannian manifolds: basic tools for geometric measurements. J Math Imaging Vis 25(1):127

Pennington J, Socher R, Manning CD (2014) Glove: global vectors for word representation. In: Proceedings of the 2014 conference on empirical methods in natural language processing (EMNLP)

Perozzi B, Al-Rfou R, Skiena S (2014) Deepwalk: online learning of social representations. In: Proceedings of the 20th ACM international conference on knowledge discovery and data mining (SIGKDD), KDD ’14, pp 701–710

Said S, Bombrun L, Berthoumieu Y, Manton JH (2017) Riemannian Gaussian distributions on the space of symmetric positive definite matrices. IEEE Trans Inf Theory 63(4):2153–2170

Said S, Hajri H, Bombrun L, Vemuri BC (2018) Gaussian distributions on Riemannian symmetric spaces: statistical learning with structured covariance matrices. IEEE Trans Inf Theory 64(2):752–772

Sala F, Sa CD, Gu A, Ré C (2018) Representation tradeoffs for hyperbolic embeddings. In: Proceedings of the 35th international conference on machine learning (ICML), pp 4457–4466

Skovgaard LT (1984) A Riemannian geometry of the multivariate normal model. Scand J Stat:211–223

Spielman DA (1996) Spectral partitioning works: planar graphs and finite element meshes. In: Proceedings of the 37th annual symposium on foundations of computer science, FOCS ’96. IEEE Computer Society, p 96

Tang J, Qu M, Wang M, Zhang M, Yan J, Mei Q (2015) Line: large-scale information network embedding. In: Proceedings of the 24th international conference on world wide web, pp 1067–1077

Tu C, Zeng X, Wang H, Zhang Z, Liu Z, Sun M, Zhang B, Lin L (2019) A unified framework for community detection and network representation learning. IEEE Trans Knowl Data Eng 31(6):1051–1065

Ungar AA (2008) A gyrovector space approach to hyperbolic geometry. Synth Lect Math Stat 1(1):1–194

Vulić I, Gerz D, Kiela D, Hill F, Korhonen A (2017) HyperLex: a large-scale evaluation of graded lexical entailment. Comput Linguist 43(4):781–835

Wang D, Cui P, Zhu W (2016) Structural deep network embedding. In: Proceedings of the 22nd ACM international conference on knowledge discovery and data mining (SIGKDD). ACM, pp 1225–1234

Zhu X, Ghahramani Z (2002) Learning from labeled and unlabeled data with label propagation. Technical report

Additional information

Responsible editor: Jingrui He.

Implementation

The package available at https://github.com/tgeral68/HyperbolicGraphAndGMM (soon to be released to the public) provides Python code for performing graph embedding in the Poincaré ball \(\mathbb {B}^m\) for all dimensions \(m\le 10\) and for applying the Riemannian versions of the Expectation-Maximisation (EM) and K-Means algorithms detailed in the paper. The Readme describes the dependencies required to run the code, how to reproduce the results of the paper, and effective ways to run experiments (performing a grid search, evaluating performance and so on). The code uses a PyTorch backend with 64-bit floating-point precision (set by default) to learn embeddings. This section presents further details of the procedures, in addition to those given in the paper and the Readme.

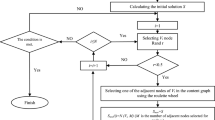

1.1 Centroids initialisation

To initialise both the centroids of K-Means and the means of the Gaussian mixture models, one can use either smart or random initialisations. A common smart initialisation for means or centroids is the K-Means++ algorithm. Its main principle is to select centroids that are far away from each other, which improves the initialisation. To this end, before running the K-Means or Expectation-Maximisation algorithms, the means or centroids are selected as follows:

1. For the first iteration, choose the first centroid \(c_1\) uniformly at random from the embedded points.

2. At a later iteration t, sample a new centroid \(c_t\) according to \(p(x|c_1, c_2,\ldots ,c_{t-1})\), which assigns a low probability to x if it is close to one of the existing centroids \(\{c_1,\ldots ,c_{t-1}\}\). Technically, the distribution is implemented by finding, for each point, its closest centroid \(f(x) = \mathop {\mathrm {arg\,min}}\limits _{c_i \in \{c_1, c_2, \dots , c_{t-1}\}} d_h(x, c_i)\), then computing for each point \(p(x|c_{1}, c_2,\ldots ,c_{t-1}) = \frac{d_h(x, f(x))^2}{\sum \limits _{z \in \mathcal {D}} d_h(z, f(z))^2}\), where \(\mathcal {D}\) denotes the set of embedded points.

3. Repeat step 2 until K centroids have been chosen.

K-Means++ initialisation is available in the framework as a hyper-parameter of K-Means.
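The three steps above can be sketched in a few lines of plain Python. This is a minimal illustration and not the code of the repository: the function names `poincare_distance` and `kmeanspp_init` are hypothetical, points are assumed to be tuples lying strictly inside the unit ball, and \(d_h\) is assumed to be the usual Poincaré ball distance.

```python
import math
import random


def poincare_distance(x, y):
    """Poincaré ball distance d_h between two points of the open unit ball."""
    sq_diff = sum((a - b) ** 2 for a, b in zip(x, y))
    sq_x = sum(a * a for a in x)
    sq_y = sum(b * b for b in y)
    arg = 1.0 + 2.0 * sq_diff / ((1.0 - sq_x) * (1.0 - sq_y))
    # Clamp to 1 to guard against rounding slightly below the acosh domain.
    return math.acosh(max(arg, 1.0))


def kmeanspp_init(points, k, rng=None):
    """Pick k initial centroids among `points` (hypothetical helper)."""
    rng = rng or random.Random()
    # Step 1: the first centroid is drawn uniformly from the data.
    centroids = [rng.choice(points)]
    # Squared distance of every point to its closest centroid so far.
    closest_sq = [poincare_distance(p, centroids[0]) ** 2 for p in points]
    # Steps 2 and 3: draw the remaining centroids one at a time.
    while len(centroids) < k:
        if sum(closest_sq) == 0.0:
            # Degenerate case: every point coincides with a centroid.
            new = rng.choice(points)
        else:
            # Probability proportional to d_h(x, f(x))^2.
            new = rng.choices(points, weights=closest_sq, k=1)[0]
        centroids.append(new)
        closest_sq = [
            min(old, poincare_distance(p, new) ** 2)
            for old, p in zip(closest_sq, points)
        ]
    return centroids
```

Because an already selected point has weight zero, it cannot be drawn again unless all remaining weights are zero, so the returned centroids are distinct whenever the data contain at least K distinct points.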

1.2 EM Algorithm

- Weighted Barycentre. In Algorithm 1 of the paper, we set the variables \(\lambda \) (learning rate) and \(\epsilon \) (convergence rate) to \(\lambda =5e-2\) and \(\epsilon =1e-4\).

- Normalisation coefficient. We compute the normalisation factor of the Gaussian distribution for \(\sigma \) in the interval [1e-3, 2] with a step size of 1e-3. This range is quite important: if the minimum value of \(\sigma \) is too high, unsupervised precision is better on datasets for which it is difficult to separate clusters in small dimensions (mainly Wikipedia and BlogCatalog), as discussed in the previous MCC subsection (in Sect. 4.4.1, the most common community labels \(\approx 47\%\) of the nodes for Wikipedia and \(\approx 17\%\) for BlogCatalog).

- EM convergence. In the provided implementation, EM is considered to have converged when the values of \(w_{ik}\) change on average by less than 1e-4 w.r.t. the previous iteration, more formally when:

$$\begin{aligned} \frac{1}{N}\sum \limits _{i=1}^N\frac{1}{K}\sum \limits _{k=1}^K|w_{ik}^t-w_{ik}^{t+1}|<1e-4 \end{aligned}$$

For instance, on Flickr, the first update of the GMM distribution converged in approximately 50 to 100 iterations.
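The convergence test on the responsibilities \(w_{ik}\) can be written as a short helper. The sketch below is illustrative only: `em_has_converged` is a hypothetical name, and the responsibilities are assumed to be stored as N rows of K floats.

```python
def em_has_converged(w_prev, w_next, tol=1e-4):
    """Return True when the mean absolute change of w_ik is below `tol`.

    w_prev, w_next: lists of N rows, each holding K responsibilities,
    taken at two consecutive EM iterations.
    """
    n = len(w_prev)
    k = len(w_prev[0])
    total_change = sum(
        abs(a - b)
        for row_prev, row_next in zip(w_prev, w_next)
        for a, b in zip(row_prev, row_next)
    )
    # Average over the N points and the K components.
    return total_change / (n * k) < tol
```

In an EM loop, this check would typically be called right after each E-step, with the previous responsibilities kept from the preceding iteration.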

1.3 Learning embeddings

- Optimisation. In some cases, because of floating-point precision in the Poincaré ball distance formula, updates of the form \(\text {Exp}_u(\eta \nabla _uf(d(u,v)))\) can reach a norm of 1, i.e. land on the boundary of the ball. In this special case, we discard the current gradient update. If this occurs too frequently, we recommend lowering the learning rate.

- Moving context size. Similarly to ComE, we use a moving window size on the context instead of a fixed one: the window size is sampled uniformly between 1 and the maximum size given as input argument.

1.4 Limitations for going in high dimensions

Numerical instabilities arise when computing the normalisation coefficient \(\zeta _m(\sigma )\) in hyperbolic space. Recall that in Euclidean space, the normalisation coefficient of the multivariate isotropic Gaussian distribution is \((2\pi \sigma ^2)^{m/2}\), whose logarithm is a linear function of the dimension m. As for the Poincaré space used in the paper, the expression of the normalisation factor is:

Referring to Fig. 15, we can observe that \(\zeta _m(\sigma )\) increases exponentially as a function of m. Therefore, when the dimension increases from 1 to 128, the computed probabilities \(f(x|\mu ,\sigma )\), where

$$\begin{aligned} f(x|\mu ,\sigma ) = \frac{1}{\zeta _m(\sigma )}\exp \left( -\frac{d_h(x,\mu )^2}{2\sigma ^2}\right) \end{aligned}$$

become the result of a division by an ever-increasing number, causing out-of-range values and numerical instabilities. This problem seems to be faced as well by Mathieu et al. (2019), who adapted variational auto-encoders to the Poincaré space. Their experiments are performed using 20 dimensions at most.

The ComE approach, however, can reach high dimensions without running into numerical instability issues.

A possible solution to this problem could be to reconsider the mathematical model at higher dimensions, and to provide computationally efficient formulas that deal with floating-point representations. The very recent paper by Heuveline et al. (2021) seems to have found new asymptotic formulas for the normalising factor on typical manifolds when the dimension grows, and is definitely worth investigating to overcome the current difficulty.
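As a complementary and purely illustrative workaround, not the remedy proposed by the authors, the division by a very large \(\zeta _m(\sigma )\) can be avoided by keeping every quantity in the log domain and normalising with a log-sum-exp. The sketch below assumes that the Gaussian density has the form \(\exp (-d_h(x,\mu )^2/(2\sigma ^2))/\zeta _m(\sigma )\) and that the values \(\log \zeta _m(\sigma _k)\) are available from some numerically stable computation; `log_zetas` is therefore a hypothetical input, and the function names are not part of the repository.

```python
import math


def log_gaussian_density(sq_dist, sigma, log_zeta):
    """log f(x | mu, sigma), given d_h(x, mu)^2 and log zeta_m(sigma)."""
    return -sq_dist / (2.0 * sigma * sigma) - log_zeta


def responsibilities(sq_dists, sigmas, log_zetas, mix_weights):
    """Posterior weights of one point over K components, computed in logs.

    sq_dists[k]   : squared hyperbolic distance to the k-th mean
    sigmas[k]     : dispersion of the k-th component
    log_zetas[k]  : log of the normalisation coefficient (assumed given)
    mix_weights[k]: strictly positive mixture weight of the k-th component
    """
    log_terms = [
        math.log(w) + log_gaussian_density(d2, s, lz)
        for d2, s, lz, w in zip(sq_dists, sigmas, log_zetas, mix_weights)
    ]
    # Log-sum-exp: subtract the maximum before exponentiating.
    top = max(log_terms)
    log_norm = top + math.log(sum(math.exp(t - top) for t in log_terms))
    return [math.exp(t - log_norm) for t in log_terms]
```

With this formulation, only differences of log-densities are exponentiated, so the responsibilities remain well defined even when \(\zeta _m(\sigma )\) itself would exceed the range of a 64-bit float. It does not, however, address how to obtain \(\log \zeta _m(\sigma )\) stably in high dimension, which is the harder part of the problem.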

About this article

Cite this article

Gerald, T., Zaatiti, H., Hajri, H. et al. A hyperbolic approach for learning communities on graphs. Data Min Knowl Disc 37, 1090–1124 (2023). https://doi.org/10.1007/s10618-022-00902-8

DOI: https://doi.org/10.1007/s10618-022-00902-8