Abstract

We study a PDE-constrained optimal control problem that involves functions of bounded variation as controls and includes the TV seminorm of the control in the objective. We apply a path-following inexact Newton method to the problems that arise from smoothing the TV seminorm and adding an \(H^1\) regularization. We prove in an infinite-dimensional setting that, first, the solutions of these auxiliary problems converge to the solution of the original problem and, second, that an inexact Newton method enjoys fast local convergence when applied to a reformulation of the auxiliary optimality systems in which the control appears as implicit function of the adjoint state. We show convergence of a Finite Element approximation, provide a globalized preconditioned inexact Newton method as solver for the discretized auxiliary problems, and embed it into an inexact path-following scheme. We construct a two-dimensional test problem with fully explicit solution and present numerical results to illustrate the accuracy and robustness of the approach.

Similar content being viewed by others

1 Problem setting and introduction

This work is concerned with the optimal control problem

where throughout \(\Omega \subset {\mathbb {R}}^N\) is a bounded \(C^{1,1}\) domain and \(N\in \{1,2,3\}\). The control u belongs to the space of functions of bounded variation \(\text {BV}(\Omega )\), the state y lives in \(Y:=H^1_0(\Omega )\), the parameter \(\beta\) is positive, and \(Ay=u\) is a partial differential equation of the form

with a non-negative function \(c_0\in L^\infty (\Omega )\) and a linear and uniformly elliptic operator of second order in divergence form \(\mathcal{{A}} : H^1_0(\Omega ) \rightarrow H^{-1}(\Omega )\), \(\mathcal{{A}} y (\varphi ) = \int _{\Omega } \sum _{i,j=1}^N a_{ij}\partial _{i} y\partial _{j} \varphi \,\text{d}x\) whose coefficients satisfy \(a_{ij}=a_{ji}\in C^{0,1}(\Omega )\) for all \(i,j\in \{1,\ldots ,N\}\). The specific feature of (OC) is the appearance of the BV seminorm \(|u|_{\text {BV}(\Omega ) }\) in the cost functional, which favors piecewise constant controls and has recently attracted considerable interest in PDE-constrained optimal control, cf. [7, 13, 14, 16, 23, 25, 26, 29, 30, 33] and the earlier works [17, 18]. The majority of these contributions focuses on deriving optimality conditions and studying Finite Element approximations. In contrast, the main focus of this work is on a path-following method. Specifically,

-

we propose to smooth the TV seminorm in J and add an \(H^1\) regularization, and show in an infinite-dimensional setting that the solutions of the resulting family of auxiliary problems converge to the solution of (OC);

-

for any given auxiliary problem we prove that an infinite-dimensional inexact Newton method converges locally;

-

we derive a practical path-following method that yields accurate solutions for (OC) and illustrate its capabilities in numerical examples for \(\Omega \subset {\mathbb {R}}^2\).

To the best of our knowledge, these aspects have only been investigated partially for optimal control problems that involve the TV seminorm in the objective. In particular, there are few works that address the numerical solution when the measure \(\nabla u\) is supported in a two-dimensional set. In fact, we are only aware of [23], where a doubly-regularized version of the Fenchel predual of (OC) is solved for fixed regularization parameters, but path-following is not applied. We stress that in our numerical experience the two-dimensional case is significantly more challenging than the one-dimensional case. A FeNiCs implementation of our path-following method is available at https://arxiv.org/abs/2010.11628. It includes all the features that we discuss in Sect. 6, e.g., a preconditioner for the Newton systems, a non-monotone line search globalization, and inexact path-following.

A further contribution of this work is that

-

we provide an example of (OC) for \(N=2\) with fully explicit solution.

For the case that \(\nabla u\) is defined in an interval (\(N=1\)) such examples are available, e.g. [14, 33], but for \(N=2\) this is new.

Let us briefly address three difficulties associated with (OC).

First, the fact that (OC) is posed in the non-reflexive space \(\text {BV}(\Omega )\) complicates the proof of existence of optimal solutions. By now it is, however, well understood how to deal with this issue also in more complicated situations, cf. e.g. [14, 16].

Second, we notice that \(u\mapsto |u |_{\text {BV}(\Omega ) }\) is not differentiable. We will cope with this by replacing \(|u |_{\text {BV}(\Omega ) }\) with the smooth functional \(\psi _\delta (u)=\int _\Omega \sqrt{|\nabla u|^2+\delta ^2}\), \(\delta \ge 0\), that satisfies \(\psi _0(\cdot )=|\cdot |_{\text {BV}(\Omega ) }\). The functional \(\psi _\delta\) is well-known, particularly in the imaging community, e.g. [1, 21]. However, in most of the existing works the smoothing parameter \(\delta >0\) is fixed, whereas we are interested in driving \(\delta\) to zero. We will also add the regularizer \(\gamma \Vert u\Vert _{H^1(\Omega )}^2\), \(\gamma \ge 0\), to J and drive \(\gamma\) to zero. This allows us to prove that for \({\gamma ,\delta } >0\) the optimal control \({\bar{u}}_{{\gamma ,\delta } }\) of the smoothed and regularized auxiliary problem is \(C^{1,\alpha }\), which is crucial to show, for instance, that the adjoint-to-control mapping is differentiable; cf. Theorem 6. In contrast, for \(\gamma =0\) only \({\bar{u}}_{0,\delta }\in \text {BV}(\Omega )\) can be expected.

Third, our numerical experience with PDE-constrained optimal control involving the TV seminorm [14, 22, 29, 30, 33] suggests that path-following Newton methods work significantly better if the optimality systems of the auxiliary problems do not contain the control as independent variable. Therefore, we express the auxiliary optimality conditions in terms of state and adjoint state by regarding the control as an implicit function of the adjoint state.

Let us set our work in perspective with the available literature. As one of the main contributions we show that the solutions of the auxiliary problems converge to the solution of (OC), cf. Sect. 2.3. The asymptotic convergence for vanishing \(H^1\) seminorm regularization is analyzed in [16, Section 6] for a more general problem than (OC), but the fact that our setting is less general allows us to prove convergence in stronger norms than the corresponding [16, Theorem 10]. The asymptotic convergence for a doubly-regularized version of the predual of (OC) is established in [23, Appendix A], but one of the regularizations is left untouched, so convergence is towards the solution of a regularized problem, not towards the solution of (OC). Next, we demonstrate local convergence of an infinite-dimensional inexact Newton method applied to the optimality system of the auxiliary problem. Because the control and the adjoint state are coupled by a quasilinear PDE, this convergence analysis is non-trivial; among others, it relies on Hölder estimates for the gradient of the control that are rather technical to derive. A related result is [23, Theorem 3.5], where local q-superlinear convergence of a semismooth Newton method is shown for the doubly-regularized Fenchel predual for fixed regularization parameters. Yet, since we work with a different optimality system, the overlap is small. Nonetheless, [23] is closely related to the present paper, and it would be interesting to embed the semismooth Newton method [23, Algorithm 2] in a path-following scheme and compare it to our algorithm. The concept to view the control as an implicit function of the adjoint state or to eliminate it, is well-known in optimal control, cf., e.g., [14, 23, 35,36,37,38, 48, 49, 53, 56].

Turning to the discrete level we provide a Finite Element approximation and demonstrate that the Finite Element solutions of the auxiliary problems converge to their non-discrete counterparts. Finite Element approximations for optimal control in BV involving the TV seminorm have also been studied in [7, 13, 14, 16, 25, 26, 29, 30, 33], but in our assessment the regularization of (OC) that we propose is not covered by these studies. The papers [24, 43] study the linear systems that arise in split Bregman methods when applied to a discretization of (OC) with homogeneous Neumann boundary conditions.

The BV-term in (OC) favors sparsity in the gradient of the control. Other sparsity promoting control terms that have recently been studied are measure norms and \(L^1\)-type functionals, e.g., [2, 11, 12, 15, 19, 20, 34, 41, 49, 54].

TV-regularization is also of significant importance in imaging problems and its usefulness for, e.g., noise removal has long been known [50]. However, we take the point of view that imaging problems are different in nature from optimal control problems, for instance because their forward operator is usually cheap to evaluate and non-compact.

This paper is organized as follows. After preliminaries in Sect. 1, we consider existence, optimality conditions and convergence of solutions in Sect. 2. In Sect. 3 we study differentiability of the adjoint-to-control mapping, which paves the way for proving local convergence of an inexact Newton method in Sect. 4. Section 5 addresses the Finite Element approximation and its convergence, while Sect. 6 provides the path-following method. Numerical experiments are presented in Sect. 7, including for the test problem with explicit solution. In Sect. 8 we summarize. Several technical results such as Hölder continuity of solutions to quasilinear PDEs are deferred to the “Appendix”.

2 Preliminaries

We recall facts about the space \(\text {BV}(\Omega )\), introduce the smoothed BV seminorm that we use in this work, and collect properties of the solution operator associated to the PDE in (OC).

2.1 Functions of bounded variation

The following statements about \(\text {BV}(\Omega )\) can be found in [4, Chapter 3] unless stated otherwise. For \(u\in L^1(\Omega )\) we let

where here and throughout, \(|\cdot |\) denotes the Euclidean norm. The space of functions of bounded variation is defined as

and \(|u|_{\text {BV}(\Omega ) }\) is called the BV seminorm (also TV seminorm) of \(u\in \text {BV}(\Omega )\). We endow \(\text {BV}(\Omega )\) with the norm \(\Vert \cdot \Vert _{\text {BV}(\Omega ) } := \Vert \cdot \Vert _{L^1(\Omega )} + |\cdot |_{\text {BV}(\Omega ) }\) and recall from [5, Thm. 10.1.1] that this makes \(\text {BV}(\Omega )\) a Banach space. It can be shown that \(u\in \text {BV}(\Omega )\) iff there exists a vector measure \((\partial _{x_1} u, \dots , \partial _{x_N} u )^T = \nabla u \in \mathcal{M}(\Omega ) ^N\) such that for all \(i\in \{1,\ldots ,n\}\) there holds

where \(\mathcal{M}(\Omega )\) denotes the linear space of regular Borel measures, e.g. [51, Chapter 2]. Also, for \(u\in \text {BV}(\Omega )\) we have \(|u|_{\text {BV}(\Omega ) }=\Vert |\nabla u|\Vert _{\mathcal{M}(\Omega ) }\), i.e., \(|u|_{\text {BV}(\Omega ) }\) is the total variation of the vector measure \(\nabla u\). The space \(\text {BV}(\Omega )\) embeds continuously (compactly) into \(L^r(\Omega )\) for \(r\in [1,\frac{N}{N-1}]\) (\(r \in [1, \frac{N}{N-1})\)), see, e.g., [4, Cor. 3.49 and Prop. 3.21]. We use the convention that \(\frac{N}{N-1}=\infty\) for \(N=1\). Also important is the notion of strict convergence, e.g. [4, 5].

Definition 1

For \(r \in [1, \frac{N}{N-1} ]\) the metric \(d_{{\text {BV}},r}\) is given by

Convergence with respect to \(d_{{\text {BV}},1}\) is called strict convergence.

Remark 1

The embedding \(\text {BV}(\Omega ) \hookrightarrow L^r(\Omega )\), for \(r \in [1, \frac{N}{N-1} ]\), implies that \(d_{BV, r}\) is well-defined and continuous with respect to \(\Vert \cdot \Vert _{\text {BV}(\Omega ) }\).

We will also use the following density property.

Lemma 1

\(C^\infty ({\bar{\Omega }})\) is dense in \((\text {BV}(\Omega ) \cap L^r(\Omega ),\, d_{{\text {BV}},r} )\) for \(r \in [1, \frac{N}{N-1} ]\).

Proof

By straightforward modifications the proof for the special case \(r=1\) in [5, Thm. 10.1.2] can be extended, using that the sequence of mollifiers constructed in the proof converges in \(L^r\), see [5, Prop. 2.2.4].□

For the remainder of this work we fix a number \(s=s(N) \in (1,\frac{N}{N-1})\) with

where the first embedding is compact and the second is continuous. For \(N=1\) we interpret \(\frac{N}{N-1}\) as \(\infty\).

Remark 2

Consider, for instance, \(N=2\) and any \(r\in (1,2)\). Then we have \(\text {BV}(\Omega ) \hookrightarrow \hookrightarrow L^r(\Omega )\) and \(H^1(\Omega )\hookrightarrow L^\frac{r}{r-1}(\Omega )\) so that any \(s \in (1,2)\) can be used.

2.2 The smoothed BV seminorm

We will replace the BV seminorm in (OC) by the function \(\psi _\delta :\text {BV}(\Omega ) \rightarrow {\mathbb {R}}\),

where \(\delta \ge 0\). We stress that \(\psi _\delta\) is frequently employed in imaging problems for the same purpose, for instance in [1, 21]. It has the following properties.

Lemma 2

The following statements are true for all \(\delta \ge 0\).

-

1.

For any \(u\in \text {BV}(\Omega )\) there holds

$$\begin{aligned} |u|_{\text {BV}(\Omega ) } = \psi _0 (u) \le \psi _\delta (u) \le |u|_{\text {BV}(\Omega ) } + \sqrt{\delta } |\Omega |. \end{aligned}$$ -

2.

\(\psi _\delta\) is lower semi-continuous with respect to the \(L^1(\Omega )\) norm.

-

3.

\(\psi _\delta\) is convex.

-

4.

For all \(u\in W^{1,1}(\Omega )\) we have

$$\begin{aligned} \psi _\delta (u) = \int _\Omega \sqrt{ \delta + |\nabla u|^2 } \,\text{d}x. \end{aligned}$$ -

5.

The function \(\psi _\delta |_{H^1(\Omega )}\) is Lipschitz with respect to \(\Vert \cdot \Vert _{H^1(\Omega ) }\).

Proof

The first four statements are from [1, Section 2] and the last one follows from \(H^1(\Omega )\hookrightarrow W^{1,1}(\Omega )\), 4. and the Lipschitz continuity of \(r\mapsto \sqrt{\delta +r^2}\).□

2.3 The solution operator of the state equation

Lemma 3

For every \(u\in H^{-1}(\Omega )\) the operator equation \(Ay=u\) in (OC) has a unique solution \(y=y(u)\in Y\). The solution operator

is linear, continuous, and bijective. In particular, S is \(L^s\)-\(L^2\) continuous. Moreover, for given \(q\in (1,\infty )\) there is a constant \(C>0\) such that

is satisfied for all \(u\in L^q(\Omega )\).

Proof

Except for the estimate all statements follow from the Lax-Milgram theorem. The estimate is a consequence of [28, Lemma 2.4.2.1, Theorem 2.4.2.5].□

Remark 3

From \(\text {BV}(\Omega ) \hookrightarrow L^s(\Omega )\hookrightarrow H^{-1}(\Omega )\) and Lemma 3 we obtain that (OC) has a nonempty feasible set.

3 The solutions of original and regularized problems

In this section we prove the existence of solutions for (OC) and the associated regularized problems, characterize the solutions by optimality conditions, and show their convergence in appropriate function spaces.

3.1 The original problem: existence of solutions and optimality conditions

To establish the existence of a solution for (OC) we use the reduced problem

Lemma 4

The function \(j:\text {BV}(\Omega ) \rightarrow {\mathbb {R}}\) is well-defined, strictly convex, continuous with respect to \(d_{BV,s}\), and coercive with respect to \(\Vert \cdot \Vert _{\text {BV}(\Omega ) }\).

Proof

The term \(\frac{1}{2} \Vert Su-y_\Omega \Vert ^2_{L^2(\Omega )}\) is well-defined by Remark 3 and strictly convex in u due to the injectivity of S. Since \(|\cdot |_{\text {BV}(\Omega ) }\) is convex, the strict convexity of j follows. The continuity holds because S is \(L^s\)-\(L^2\) continuous. The coercivity follows by virtue of [1, Lemma 4.1] using again that S is injective and \(L^s\)-\(L^2\) continuous.□

The strict convexity implies that j has at most one (local=global) minimizer.

Theorem 1

The problem (ROC) has a unique solution \({\bar{u}}\in \text {BV}(\Omega )\).

Proof

The proof is included in the proof of Theorem 2.□

As usual, the optimal state \({\bar{y}}\) and the optimal adjoint state \({\bar{p}}\) are given by

where, due to \(\text {BV}(\Omega ) \hookrightarrow L^{\frac{N}{N-1}}(\Omega )\) and Lemma 3, we have \(r_N=\frac{N}{N-1}\) for \(N\in \{2,3\}\), respectively, \(r_N \ge 1\) arbitrarily large for \(N=1\). Moreover, \(S^*:H^{-1}(\Omega ) \rightarrow Y\) is the dual operator of S, where we have identified the dual space of \(H^{-1}(\Omega )\) with \(Y\) using reflexivity. Since \(S^*=S\) and \({\bar{y}}-y_\Omega \in L^2(\Omega )\), Lemma 3 yields \({\bar{p}}\in P\) for

It is standard to show that \({\bar{p}}\) is the unique weak solution of

Remark 4

The optimality conditions of (ROC) are only needed for the construction of the test problem with explicit solution in “Appendix 4”, but not for the following analysis. They are deferred to “Appendix 3”. We stress, however, that they allow to discuss the sparsity of \(\nabla {\bar{u}}\), cf. Remark 9.

3.2 The regularized problems: existence of solutions and optimality conditions

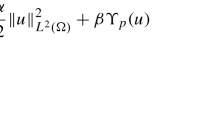

Smoothing the BV seminorm and adding an \(H^1\) regularization to j yields

where we set \(j_{\gamma ,\delta } (u):=+\infty\) for \(u\in \text {BV}(\Omega ) \setminus H^1(\Omega )\) if \(\gamma >0\).

Lemma 5

For any \({\gamma ,\delta } \ge 0\) the function \(j_{\gamma ,\delta } :\text {BV}(\Omega ) \rightarrow {\mathbb {R}}\cup \{+\infty \}\) is well-defined, strictly convex, and coercive with respect to \(\Vert \cdot \Vert _{\text {BV}(\Omega ) }\). Moreover, the function \(j_{\gamma ,\delta } \vert _{H^1(\Omega ) }\) is \(H^1(\Omega )\) continuous for any \({\gamma ,\delta } \ge 0\).

Proof

The well-definition and strict convexity of \(j_{\gamma ,\delta }\) follow similarly as for j in Lemma 4. Using Lemma 2 1. we find \(j\le j_{\gamma ,\delta }\), so \(j_{\gamma ,\delta }\) inherits the coercivity from j. The continuity follows term by term. For the first term it is enough to recall from Lemma 3 the \(L^2\)-\(L^2\) continuity of S. The second term is Lipschitz in \(H^1\) by Lemma 2. The continuity of the third term is obvious.□

We obtain existence of unique and global solutions.

Theorem 2

For any \({\gamma ,\delta } \ge 0\), (\(\hbox {ROC}_{\gamma ,\delta }\)) has a unique solution \({\bar{u}}_{\gamma ,\delta } \in \text {BV}(\Omega )\). For \(\gamma >0\) we have \({\bar{u}}_{\gamma ,\delta } \in H^1(\Omega )\).

Proof

Since \(j_{\gamma ,\delta }\) is strictly convex by Lemma 5, there is at most one minimizer. For \(\gamma >0\) the existence of \({\bar{u}}_{{\gamma ,\delta } }\in H^1(\Omega )\) follows from standard arguments since \(j_{\gamma ,\delta } \vert _{H^1(\Omega )}\) is strongly convex and \(H^1\) continuous by Lemma 5. For \(\gamma =0\) and any \(\delta \ge 0\), the existence of a minimizer follows from [1, Theorem 4.1] by use of the injectivity and boundedness of \(S:H^{-1}(\Omega )\rightarrow Y\hookrightarrow L^2(\Omega )\). While \(\Omega\) is convex in [1, Theorem 4.1] remains true without this assumption.□

Optimal state \({\bar{y}}_{\gamma ,\delta }\) and optimal adjoint state \({\bar{p}}_{\gamma ,\delta }\) for (\(\hbox {ROC}_{\gamma ,\delta }\)) are given by

where \(r_N=\frac{N}{N-1}\) for \(N\in \{2,3\}\), respectively, \(r_N\ge 1\) arbitrarily large for \(N=1\). In particular, \({\bar{p}}_{\gamma ,\delta }\) is the unique weak solution of

The optimality conditions of (\(\hbox {ROC}_{\gamma ,\delta }\)) are based on differentiability of \(j_{\gamma ,\delta }\).

Lemma 6

For \({\gamma ,\delta } >0\) the functional \(j_{{\gamma ,\delta } }: H^1(\Omega ) \rightarrow {\mathbb {R}}\) is Fréchet differentiable. Its first derivative is given by

where

Proof

It suffices to argue for \(\psi _\delta\), which we do in Lemma 15 in “Appendix 1”. The other terms are standard.□

For differentiable convex functions a vanishing derivative is necessary and sufficient for a global minimizer. This yields the following optimality conditions.

Theorem 3

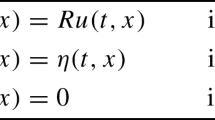

For \({\gamma ,\delta } > 0\) the control \({\bar{u}}_{\gamma ,\delta } \in H^1(\Omega )\) is the solution of (\(\hbox {ROC}_{\gamma ,\delta }\)) iff

which is the nonlinear Neumann problem

where \({\bar{p}}_{\gamma ,\delta } = S^*( S {\bar{u}}_{\gamma ,\delta } - y_\Omega )\).

3.3 Convergence of the path of solutions

We prove that \(({\bar{u}}_{\gamma ,\delta }\), \({\bar{y}}_{\gamma ,\delta }\),\({\bar{p}}_{\gamma ,\delta } )\) converges to \(({\bar{u}},{\bar{y}},{\bar{p}})\) for \({\gamma ,\delta } \rightarrow 0\). As a first step we show convergence of the objective values.

Lemma 7

We have

Proof

Let \(\epsilon >0\) and let \(({(\gamma _k,\delta _k)} )_{k\in \mathbb {N}} \subset {\mathbb {R}}_{\ge 0}^2\) converge to (0, 0). There holds

where we used \(j({\bar{u}}) \le j({\bar{u}}_{\gamma _k,\delta _k} ) \le j_{\gamma _k,\delta _k} ({\bar{u}}_{\gamma _k,\delta _k} )\). The first term in brackets satisfies

where the last inequality follows from Lemma 2. For the second term in brackets we deduce from Lemma 1 and the \(d_{BV,s}\) continuity of j established in Lemma 4 that there is \(u_\epsilon \in C^\infty ({\bar{\Omega }})\) such that \(|j({\bar{u}})-j(u_\epsilon )| < \epsilon\). This yields

Putting the estimates for the two terms together shows

For \(k\rightarrow \infty\) this implies the claim since \(\epsilon >0\) was arbitrary and

□

We infer that the optimal controls \({\bar{u}}_{{\gamma ,\delta } }\) converge to \({\bar{u}}\) in \(L^r\) for suitable r.

Lemma 8

For any \(r\in [1,\frac{N}{N-1})\) we have \(|| {\bar{u}}_{\gamma ,\delta } - {\bar{u}}||_{L^r(\Omega )} \xrightarrow {{(\gamma ,\delta )} \rightarrow (0,0)} 0\).

Proof

Let \(({(\gamma _k,\delta _k)} )_{k\in \mathbb {N}} \subset {\mathbb {R}}_{\ge 0}^2\) converge to (0, 0). Let \(C > 0\) be so large that \(\gamma _k,\delta _k\le C\) for all k. The optimality of \({\bar{u}}_{\gamma _k,\delta _k}\) and Lemma 2 yield for each \(k\in \mathbb {N}\)

As \((j({\bar{u}}_{\gamma _k,\delta _k} ))_{k\in \mathbb {N}}\) is bounded, we obtain from Lemma 4 that \((\Vert {\bar{u}}_{\gamma _k,\delta _k} \Vert _{\text {BV}(\Omega ) })_{k\in \mathbb {N}}\) is bounded, too. The compact embedding of \(\text {BV}(\Omega )\) into \(L^{r}(\Omega )\), \(r\in [1,\frac{N}{N-1})\) thus implies that there exists \({\tilde{u}}\in L^r(\Omega )\) such that a subsequence of \(\left( {\bar{u}}_{\gamma _k,\delta _k} \right) _{k\in \mathbb {N}}\), denoted in the same way, converges to \({\tilde{u}}\) in \(L^r(\Omega )\). It is therefore enough to show \({\tilde{u}}={\bar{u}}\). Since j is lower semi-continuous in the \(L^s\) topology, cf. Lemma 2, we have

where we used Lemma 7 to derive the equality. This shows \({\tilde{u}}\in \text {BV}(\Omega )\), hence Theorem 1 implies \({\tilde{u}} = {\bar{u}}\).□

In fact, the convergence of \({\bar{u}}_{{\gamma ,\delta } }\) to \({\bar{u}}\) is stronger.

Theorem 4

For any \(r\in [1,\frac{N}{N-1})\) we have \(d_{BV,r}({\bar{u}}_{\gamma ,\delta }, {\bar{u}}) \xrightarrow {{(\gamma ,\delta )} \rightarrow (0,0)} 0.\)

Proof

For any \({\gamma ,\delta } \ge 0\) we have \(j({\bar{u}}) \le j({\bar{u}}_{\gamma ,\delta } ) \le j_{\gamma ,\delta } ({\bar{u}}_{\gamma ,\delta } )\), so Lemma 7 yields \(\lim _{{(\gamma ,\delta )} \rightarrow (0,0)} j({\bar{u}}_{\gamma ,\delta } ) = j({\bar{u}})\). Furthermore, there holds

By Lemma 8 and the continuity of S from \(L^s(\Omega )\) to \(L^2(\Omega )\) we thus find

Together with Lemma 8 this proves the claim.□

We conclude this section with the convergence of \(({\bar{y}}_{{\gamma ,\delta } }, {\bar{p}}_{\gamma ,\delta } )\) to \(({\bar{y}},{\bar{p}})\).

Theorem 5

For any \(r\in [1,\frac{N}{N-1})\) and any \(r^\prime \in [1,\infty )\) we have

Proof

The continuity of S from \(L^q\) to \(W^{2,q}\) for any \(q\in (1,\infty )\), see Lemma 3, implies with Lemma 8 that \(\lim _{{(\gamma ,\delta )} \rightarrow (0,0)} \Vert {\bar{y}}_{\gamma ,\delta } - {\bar{y}} \Vert _{W^{2,r}(\Omega )} = 0\) for any \(r\in [1,\frac{N}{N-1})\). Since for any \(r^\prime \in (1,\infty )\) there is \(r\in [1,\frac{N}{N-1})\) such that \(W^{2,r}(\Omega )\hookrightarrow L^{r^\prime }(\Omega )\) is satisfied, we can use the \(L^{r^\prime }\)-\(W^{2,r^\prime }\) continuity of \(S^*= S\) to find \(\lim _{{(\gamma ,\delta )} \rightarrow (0,0)} \Vert {\bar{p}}_{\gamma ,\delta } - {\bar{p}} \Vert _{W^{2,r^\prime }(\Omega )}=\lim _{{(\gamma ,\delta )} \rightarrow (0,0)} \Vert S^*({\bar{y}}_{\gamma ,\delta } -{\bar{y}}) \Vert _{W^{2,r^\prime }(\Omega )}=0\).□

Remark 5

The results of Sect. 2 can also be established for nonsmooth domains \(\Omega\), but \({\bar{y}},{\bar{p}},{\bar{y}}_{\gamma ,\delta } ,{\bar{p}}_{\gamma ,\delta }\) may be less regular since S may not provide the regularity stated in Lemma 3. A careful inspection reveals that only Theorem 5 has to be modified. If, for instance, \(\Omega \subset {\mathbb {R}}^N\), \(N\in \{2,3\}\), is a bounded Lipschitz domain, then [52, Theorem 3] implies that Theorem 5 holds if \(W^{2,r}\) and \(W^{2,r^\prime }\) are both replaced by \(H^r\), where \(r\in [1,\frac{3}{2})\) is arbitrary. If \(\Omega\) is convex, then [28, Theorem 3.2.1.2] further yields that \(W^{2,r^\prime }\) can be replaced by \(H^2\).

4 Differentiability of the adjoint-to-control mapping

The main goal of this section is to show that the PDE

has a unique weak solution \(u=u(p)\in C^{1,\alpha }(\Omega )\) for every right-hand side \(p\in L^\infty (\Omega )\), and that \(p\mapsto u(p)\) is Lipschitz continuously Fréchet differentiable in any open ball, where the Lipschitz constant is independent of \(\gamma\) and \(\delta\) provided \(\gamma >0\) and \(\delta >0\) are bounded away from zero. This is accomplished in Theorem 7. Note that we suppress the dependency on \({\gamma ,\delta }\) in \(u=u(p;{\gamma ,\delta } )\).

Assumption 1

We are given constants \(0 < \gamma _0 \le \gamma ^0\), \(0 < \delta _0 \le \delta ^0\) and \(b^0 > 0\). We denote \(I:=[\gamma _0,\gamma ^0]\times [\delta _0, \delta ^0]\) and write \(\mathbb {B} \subset L^\infty (\Omega )\) for the open ball of radius \(b^0>0\) centered at the origin in \(L^\infty (\Omega )\).

We first show that \(p\mapsto u(p)\) is well-defined and satisfies a Lipschitz estimate.

Lemma 9

Let Assumption 1 hold. Then there exist \(L>0\) and \(\alpha \in (0,1)\) such that for each \((\gamma ,\delta )\in I\) and all \(p_1, p_2 \in \mathbb {B}\) the PDE (2) has unique weak solutions \(u_1=u_1(p_1)\in C^{1,\alpha }(\Omega )\) and \(u_2 = u_2(p_2)\in C^{1,\alpha }(\Omega )\) that satisfy

In particular, we have the stability estimate

Proof

Unique existence and the first estimate are established in Theorem 13 in “Appendix 2”. The second estimate follows from the first for \(p_2=0\).□

We introduce the function

so that (2) becomes

The following two results prove that the adjoint-to-control mapping is differentiable and has a locally Lipschitz continuous derivative whose Lipschitz constant is bounded on bounded sets uniformly in I.

Theorem 6

Let Assumption 1hold and let \(\alpha \in (0,1)\) be the constant from Lemma 9. For each \({(\gamma ,\delta )} \in I\) the mapping \(\mathbb {B} \ni p\mapsto u(p) \in C^{1,\alpha }(\Omega )\) is Fréchet differentiable. Its derivative \(z=u'(p)d\in C^{1,\alpha }(\Omega )\) in direction \(d \in L^\infty (\Omega )\) is the unique weak solution of the linear PDE

and there exists \(C>0\) such that for all \({(\gamma ,\delta )} \in I\), all \(p\in \mathbb {B}\), and all \(d\in L^\infty (\Omega )\) we have

Proof

Let \(p\in \mathbb {B}\) and \(d\in L^\infty (\Omega )\) be such that \(p+d \in \mathbb {B}\). From Lemma 9 we obtain \(u(p) \in C^{1,\alpha }(\Omega )\) and \(\Vert u(p)\Vert _{C^{1,\alpha }(\Omega )}\le C\Vert p\Vert _{L^\infty (\Omega )}\), where C is independent of \(\gamma ,\delta ,p\). Combining this with Lemma 16 implies

and the estimate \(\Vert A\Vert _{C^{0,\alpha }(\Omega )}\le a^0\) for \(A:=\gamma I+f^\prime (\nabla u(p))\) with a constant \(a^0\) that does not depend on \(\gamma ,\delta ,p\). Since \(f'(v)\), \(v\in {\mathbb {R}}^N\), is the Hessian of the convex function \(v\mapsto \sqrt{\delta +|v|^2}\), it is positive semi-definite. It follows that Theorem 11 is applicable. Thus, the PDE (4) has a unique weak solution \(z \in C^{1,\alpha }(\Omega )\) that satisfies the claimed estimate. Concerning the Fréchet differentiability we obtain for \(r := u(p+d)-u(p)-z \in C^{1,\alpha }(\Omega )\)

where we set \(w:=w(p,d):=\nabla u(p+d)-\nabla u(p)\). Theorem 11 implies that there is \(C>0\), independent of d, such that

The expression in the norm on the right-hand side satisfies the following identity pointwise in \(\Omega\)

Lemma 16 yields

As \(f \in C^3({\mathbb {R}}^N,{\mathbb {R}}^N)\) with bounded derivatives we have that \(f^{\prime \prime }\) is Lipschitz continuous and bounded. We infer from Lemma 16 and Lemma 9 that

which shows \(\Vert r\Vert _{C^{1,\alpha }(\Omega )} = o(\Vert d\Vert _{L^\infty (\Omega )})\) since C is independent of d.□

Theorem 7

Let Assumption 1hold and let \(\alpha \in (0,1)\) be the constant from Lemma 9. Then the mapping \(u^\prime : \mathbb {B} \rightarrow \mathcal{L} (L^\infty (\Omega ), C^{1,\alpha }(\Omega ))\) is Lipschitz continuous and the Lipschitz constant does not depend on \({(\gamma ,\delta )}\), but only on \(\Omega\), N, \(\gamma _0\), \(\gamma ^0\), \(\delta _0\), \(\delta ^0\) and \(b^0\).

Proof

Let \(p, q \in \mathbb {B}\) and \(d \in L^\infty (\Omega )\). Set \(z_p:=\nabla \bigl (u'(p)d\bigr )\) and \(z_q:=\nabla \bigl (u'(q)d\bigr )\). Then

holds in \(H^1(\Omega )^*\). Thus, the difference \(r := u^\prime (p)d - u^\prime (q) d\) satisfies

from which we infer that

in \(H^1(\Omega )^*\). By the same arguments as below (5), \(A := \gamma I + f^\prime \bigl ( \nabla u(p) \bigr )\) satisfies \(\Vert A\Vert _{C^{0,\alpha }(\Omega )} \le a^0\) with a constant \(a^0\) that does not depend on \(\gamma ,\delta ,p,q\). Moreover, A is elliptic with constant \(\gamma _0\). By Theorem 11 this yields

Here, \(C>0\) does not depend on p, q, but only on the desired quantities. From Lemma 16 and Theorem 6 we infer that

Lemma 16 and Lemma 9 therefore imply

The first factor is bounded since \(f''\) is bounded and Lipschitz. This demonstrates the asserted Lipschitz continuity.□

Remark 6

Theorem 7 stays valid, for some different \(\alpha\), if \(\Omega\) is of class \(C^{1,\alpha ^\prime }\) for some \(\alpha ^\prime >0\).

5 An inexact Newton method for the regularized problems

In this section we introduce the formulation of the optimality system of (\(\hbox {ROC}_{\gamma ,\delta }\)) on which our numerical method is based, and we show that the application of an inexact Newton method to this formulation is globally well-defined and locally convergent. We use the following assumption.

Assumption 2

We are given constants \(0 < \gamma _0 \le \gamma ^0\), \(0 < \delta _0 \le \delta ^0\) and \(b^0 \ge 0\). We denote \(I:=[\gamma _0,\gamma ^0]\times [\delta _0, \delta ^0]\) and fix \({(\gamma ,\delta )} \in I\).

The optimality conditions from Theorem 3 can be cast as \(F({\bar{y}}_{\gamma ,\delta } ,{\bar{p}}_{\gamma ,\delta } )=0\) for

and the pair \(({\bar{y}}_{\gamma ,\delta } ,{\bar{p}}_{\gamma ,\delta } )\) is the unique root of F. Note that we suppress the dependency of \(u=u(p;{\gamma ,\delta } )\) and \(F=F(y,p; {\gamma ,\delta } )\) on \({\gamma ,\delta }\). By standard Sobolev embeddings we have \(P\subset H^2(\Omega )\hookrightarrow L^\infty (\Omega )\), hence \(u(-p) \in C^{1,\alpha }(\Omega )\) for some \(\alpha >0\) by Lemma 9, so F is well-defined. A Newton system with a somewhat similar structure is considered in [53].

To find the root of F we apply the inexact Newton method Algorithm 1.

The remainder of this section is devoted to the convergence analysis for Algorithm 1. A similar analysis can be carried out if the optimality system of (\(\hbox {ROC}_{\gamma ,\delta }\)) and the inexact Newton method are based on u instead of (y, p). However, in our numerical experiments the path-following method based on (6), cf. Sect. 6, was clearly superior to its counterpart based on u: The former could reduce \({\gamma ,\delta }\) to much smaller values than the latter and was also significantly more robust. Both observations are well in line with our previous experience [14, 22, 29, 30, 33] on PDE-constrained optimal control problems involving the TV seminorm.

Since the homotopy path \({(\gamma ,\delta )} \mapsto ({\bar{u}}_{{\gamma ,\delta } },{\bar{y}}_{{\gamma ,\delta } },{\bar{p}}_{{\gamma ,\delta } })\) is not affected by the formulation of the optimality system, we conjecture that the superior performance of path-following based on (6) is related to the fact that \(({\bar{y}}_{\gamma ,\delta } ,{\bar{p}}_{\gamma ,\delta } )\) converges to \(({\bar{y}},{\bar{p}})\) in stronger norms than \({\bar{u}}_{\gamma ,\delta }\) to \({\bar{u}}\), cf. Theorem 5, respectively, Theorem 4.

There are many works in which the control is considered as an implicit variable of some sort or avoided altogether, e.g., [14, 23, 35,36,37,38, 48, 49, 53, 56]. Concerning the optimal triple \(({\bar{y}},{\bar{p}},{\bar{u}})\) for (OC) we share with those works the idea to base the optimality system on the smoother variables (y, p). In contrast to those works, however, (6) does neither improve the regularity of the controls that appear as iterates (in comparison to a formulation based on u) nor does it circumvent their use.

The next two lemmas yield convergence of Algorithm 1.

Lemma 10

Let Assumption 2 hold. Then F defined in (6) is locally Lipschitz continuously Fréchet differentiable. Its derivative at \((y,p)\in Y\times P\) is given by

Proof

Only \(p\mapsto u(-p)\) is nonlinear, so the claims follow from Theorem 7.□

Lemma 11

Let Assumption 2 hold. Then \(F^\prime (y,p)\) is invertible for all \((y,p) \in Y \times P\).

Proof

The proof consists of two parts. First we show that \(F^\prime (y, p)\) is injective and second that it is a Fredholm operator of index 0, see [39, Chapter IV, Section 5]. These two facts imply the bijectivity of \(F^\prime (y, p)\). For the injectivity let \((\delta y, \delta p) \in Y \times P\) with \(F^\prime (y,p)(\delta y, \delta p) = 0 \in Y ^*\times L^2(\Omega )\), i.e.

and therefore

The representation of \(z:=u^\prime (-p) \delta p\) from Theorem 6 yields

Since \(f^{\prime }\) is positive semi-definite, we find \(\Vert \delta y\Vert _{L^2(\Omega )}^2 \le 0\). This shows \(\delta y = 0\). By (7) this yields \(A^*\delta p = 0\) in \(L^2(\Omega )\), hence \(\delta p = 0\), which proves the injectivity.

To apply Fredholm theory we decompose \(F^\prime (y,p)\) into the two operators

We want to use [39, Chapter IV, Theorem 5.26], which states: If the first operator is a Fredholm operator of index 0 and the second operator is compact with respect to the first operator (see [39, Chapter IV, Introduction to Section 3]), then their sum \(F^\prime (y,p)\) is also a Fredholm operator of index 0. By the injectivity of \(F^\prime (y,p)\) this implies its bijectivity.

The operators \(A: Y \rightarrow Y ^*\) and \(A^*: P \rightarrow L^2(\Omega )\) are invertible by Lemma 3, and thus

is invertible and in particular a Fredholm operator of index 0. It remains to show that

is compact with respect to the first operator. Thus, we have to establish that for any sequence \(( (\delta y_n, \delta p_n) )_{n\in \mathbb {N}} \subset Y \times P\) such that there exists a \(C>0\) with

the sequence \(\bigl ( ( u^\prime (-p) \delta p_n, \delta y_n ) \bigr )_{n\in \mathbb {N}} \subset Y ^*\times L^2(\Omega )\) contains a convergent subsequence. By (9) we have that \(( \Vert \delta y_n\Vert _{ Y } )_{n\in \mathbb {N}}\) is bounded. The compact embedding \(Y \hookrightarrow \hookrightarrow L^2(\Omega )\) therefore implies the existence of a point \({\hat{y}} \in L^2(\Omega )\) and a subsequence, denoted in the same way, such that \(\Vert \delta y_n - {\hat{y}}\Vert _{L^2(\Omega )}\rightarrow 0\). We also have that \(( \Vert \delta p_n\Vert _{P} )_{n\in \mathbb {N}}\) is bounded. In particular \(\Vert \delta p_n\Vert _{L^\infty (\Omega )} \le b^0\) for all \(n\in \mathbb {N}\) for some \(b^0>0\). By Theorem 6 this implies that \(( u^\prime (-p) \delta p_n )_{n\in \mathbb {N}}\) is bounded in \(C^{1,\alpha }(\Omega )\). Since \(C^{1,\alpha }(\Omega )\hookrightarrow \hookrightarrow Y ^*\), the proof is complete.□

Remark 7

Lemma 11 implies that Algorithm 1 is globally well-defined.

It is well-known that the properties established in Lemmas 10 and 11 are sufficient for local linear/q-superlinear/q-quadratic convergence of the inexact Newton method if the residual in iteration k is of appropriate order, e.g. [40, Theorem 6.1.4]. Thus, we obtain the following result.

Theorem 8

Let Assumption 2hold. If \((y_0,p_0)\in Y \times P\) is sufficiently close to \(({\bar{y}}_{{\gamma ,\delta } },{\bar{p}}_{{\gamma ,\delta } })\), then Algorithm 1 either terminates after finitely many iterations with output \((y^*,p^*)=({\bar{y}}_{{\gamma ,\delta } },{\bar{p}}_{{\gamma ,\delta } })\) or it generates a sequence \(((y_k,p_k))_{k\in \mathbb {N}}\) that converges to \(({\bar{y}}_{{\gamma ,\delta } },{\bar{p}}_{{\gamma ,\delta } })\). The convergence rate is r-linear if \(\eta <1\), q-linear if \(\eta\) is sufficiently small, q-superlinear if \(\eta _k\rightarrow 0\), and of q-order \(1+\omega\) if \(\eta _k=O(\Vert F(y_k,p_k)\Vert _{}^\omega )\). Here, \(\omega \in (0,1]\) is arbitrary; for \(\omega =1\) this means q-quadratic convergence.

Remark 8

Lemma 9 shows that convergence of \((p_k)_{k\in \mathbb {N}}\) (with a certain rate) implies convergence of \((u(p_k))_{k\in \mathbb {N}}\) in \(C^{1,\alpha }(\Omega )\) (with a related rate).

6 Finite element approximation

In this section we provide a discretization scheme for (\(\hbox {ROC}_{\gamma ,\delta }\)) and prove its convergence. Throughout, we work with a fixed pair \({(\gamma ,\delta )} \in {\mathbb {R}}_{>0}^2\).

6.1 Discretization

We use Finite Elements for the discretization of (\(\hbox {ROC}_{\gamma ,\delta }\)). Control, state and adjoint state are discretized by piecewise linear and globally continuous elements on a triangular grid. We point out that discretizing the control by piecewise constant Finite Elements will not ensure convergence to the optimal control \({\bar{u}}_{{\gamma ,\delta } }\), in general; cf. [6, Section 4].

For all \(h\in (0,h_0]\) and a suitable \(h_0>0\) let \(\mathcal {T}_h\) denote a collection of open triangular cells \(T \subset \Omega\) with \(h = \max _{T\in \mathcal {T}_h} {\text {diam}}(T)\). We write \(\Omega _h := {\text {int}}( \cup _{T\in \mathcal {T}_h} {\bar{T}} )\). We assume that there are constants \(C>0\) and \(c > \frac{1}{2}\) such that

for all \(h\in (0,h_0]\). We further assume \((\mathcal {T}_h)_{h\in (0,h_0]}\) to be quasi-uniform and \(\Omega _h \subset \Omega _{h^\prime }\) for \(h^\prime \le h\). The assumptions in (10) are rather mild and in part implied if, for example, \(\Omega\) and \((\Omega _h)_{h>0}\) are a family of uniform Lipschitz domains, cf. [31, Sections 4.1.2 & 4.1.3]. We utilize the function spaces

Because \(V_h\hookrightarrow H^1(\Omega _h)\) it follows that \(Y_h\) contains precisely those functions of \(V_h\) that vanish on \(\partial \Omega _h\). We use the standard nodal basis \(\varphi _1, \varphi _2, \dots , \varphi _{\dim ( V_h )}\) in \(V_h\) and assume that it is ordered in such a way that \(\varphi _1, \varphi _2, \dots , \varphi _{\dim ( Y_h )}\) is a basis of \(Y_h\). For every \(u\in L^2(\Omega _h)\) there is a unique \(y_h\in Y_h\) that satisfies

and by defining \(S_h u:=y_h\) we obtain the discrete solution operator \(S_h: L^2(\Omega _h) \rightarrow Y_h\) to the PDE in (OC). The discretized version of (\(\hbox {ROC}_{\gamma ,\delta }\)) is given by

where \(\psi _{\delta ,h}:H^1(\Omega _h)\rightarrow {\mathbb {R}}\) is defined in the same way as \(\psi _\delta\), but with \(\Omega\) replaced by \(\Omega _h\). By standard arguments this problem has a unique optimal solution \({\bar{u}}_{\gamma ,\delta ,h}\). Based on \({\bar{u}}_{\gamma ,\delta ,h}\) we define \({\bar{y}}_{\gamma ,\delta ,h} :=S_h{\bar{u}}_{\gamma ,\delta ,h}\) and \({\bar{p}}_{\gamma ,\delta ,h} :=S_h^*(S_h{\bar{u}}_{\gamma ,\delta ,h} -{y_{\Omega }})\). For \(h\rightarrow 0\) the triple \(({\bar{u}}_{\gamma ,\delta ,h} ,{\bar{y}}_{\gamma ,\delta ,h} , {\bar{p}}_{\gamma ,\delta ,h} )\) converges to the continuous optimal triple \(({\bar{u}}_{\gamma ,\delta } ,{\bar{y}}_{\gamma ,\delta } ,{\bar{p}}_{\gamma ,\delta } )\) in an appropriate sense, as we show next.

6.2 Convergence

In this section we prove convergence of the Finite Element approximation. We will tacitly use that extension-by-zero yields for each \(v\in Y_h \subset H^1_0(\Omega _h)\) a function in \(H^1_0(\Omega )\). Also, we need the following density result.

Lemma 12

Let (10) hold. For each \(\varphi \in C_0^\infty (\Omega )\) there exists a sequence \((\varphi _h)_{h > 0}\) with \(\varphi _h\in Y_h\) for all h such that \(\lim _{h\rightarrow 0^+} \Vert \varphi _h-\varphi \Vert _{H^1(\Omega _h)} = 0\).

Proof

Let \(\varphi \in C_0^\infty (\Omega )\). Due to (10) we have \({\text {supp}}(\varphi )\subset \Omega _h\) for all sufficiently small h. The claim then follows by choosing for \(\varphi _h\) the nodal interpolant of \(\varphi\) since \(\lim _{h\rightarrow 0^+}\Vert \varphi _h-\varphi \Vert _{H^1(\Omega _h)}=0\) for this choice, see [27, Theorem 1.103].□

Theorem 9

Let (10) hold. We have

where \({\bar{u}}_{\gamma ,\delta ,h}\), \({\bar{y}}_{\gamma ,\delta ,h}\) and \({\bar{p}}_{\gamma ,\delta ,h}\) are extended by zero to \(\Omega\).

Proof

For ease of notation we do not change indices in this proof when passing to subsequences. Let \((h_n)_{n\in \mathbb {N}}\) be a zero sequence, without loss of generality monotonically decreasing. From \(j_{\gamma ,\delta ,{h_n}} ({\bar{u}}_{\gamma ,\delta ,{h_n}} )\le j_{\gamma ,\delta ,{h_n}} (0)\le j_{\gamma ,\delta } (0)\) it follows that there is a constant \(C>0\), independent of n, such that \(\Vert {\bar{u}}_{\gamma ,\delta ,{h_n}} \Vert _{H^1(\Omega _{h_n})} \le C\). This implies \(\Vert {\bar{y}}_{\gamma ,\delta ,{h_n}} \Vert _{H^1(\Omega _{h_n})} \le C\). Using extension by zero we find that \(\Vert {\bar{y}}_{\gamma ,\delta ,{h_n}} \Vert _{H^1(\Omega )} \le C\) for some C that is still independent of n. From the compact embedding of \(H^1_0(\Omega )\) into \(L^2(\Omega )\) and the reflexivity of \(H^1_0(\Omega )\) we obtain a subsequence and a \({\hat{y}} \in H^1_0(\Omega )\) such that \({\bar{y}}_{\gamma ,\delta ,{h_n}} \xrightarrow {n\rightarrow \infty } {\hat{y}}\) strongly in \(L^2(\Omega )\) and weakly in \(H_0^1(\Omega )\). Extending \({\bar{u}}_{\gamma ,\delta ,{h_n}}\) by 0 to \(\Omega\) and using the reflexivity of \(L^2(\Omega )\) we obtain on a subsequence that \({\bar{u}}_{\gamma ,\delta ,{h_n}} \xrightarrow {n\rightarrow \infty } {\hat{u}}\) weakly in \(L^2(\Omega )\) for some \({\hat{u}}\in L^2(\Omega )\). Let \(\varphi \in C_0^\infty (\Omega )\) and \((\varphi _{h_n})_{n\in \mathbb {N}}\) be defined as in Lemma 12. Extending \(\varphi _{h_n}\) by zero we have

Thus \({\hat{y}} = S{\hat{u}}\) by the density of \(C_0^\infty (\Omega )\) in \(H^1_0(\Omega )\). Analogous arguments show that the adjoints \({\bar{p}}_{\gamma ,\delta ,{h_n}}\) converge in the same way to some \({\hat{p}}\in H_0^1(\Omega )\) with \({\hat{p}} = S^*({\hat{y}}-y_\Omega )\). It therefore suffices to prove that \({\hat{u}}={\bar{u}}_{\gamma ,\delta }\), i.e., that \({\hat{u}}\) minimizes \(j_{\gamma ,\delta }\), and that \({\bar{u}}_{\gamma ,\delta ,{h_n}} \xrightarrow {n\rightarrow \infty } {\hat{u}}\) strongly in \(L^2(\Omega )\). Let \(u\in H^1(\Omega ) \cap C^\infty (\Omega )\) and denote by \(I_h u \in H^1(\Omega _h)\) the usual nodal interpolant. Then it is well-known, e.g. [27, Theorem 1.103], that \(\Vert u-I_{h_n}u \Vert _{H^1(\Omega _{h_n})} \xrightarrow {n\rightarrow \infty } 0\). Let \({\hat{n}}\in \mathbb {N}\) and \(n\ge {\hat{n}}\). Using \(\Omega _{h_{{\hat{n}}}} \subset \Omega _{h_n}\) and the optimality of \({\bar{u}}_{\gamma ,\delta ,{h_n}}\) we find

where \(J_{{\gamma ,\delta ,h_{{\hat{n}}}} }:L^2(\Omega _{h_{{\hat{n}}}})\times H^1(\Omega _{h_{{\hat{n}}}})\rightarrow {\mathbb {R}}\) is given by

By \(\Vert u-I_{h_n}u \Vert _{H^1(\Omega _{h_n})} \xrightarrow {n\rightarrow \infty } 0\) we obtain

From \(\Vert {\bar{u}}_{\gamma ,\delta ,{h_n}} \Vert _{H^1(\Omega _{h_{{\hat{n}}}})} \le \Vert {\bar{u}}_{\gamma ,\delta ,{h_n}} \Vert _{H^1(\Omega _{h_n})} \le C\) we infer that there exists \({\tilde{u}}\in H^1(\Omega _{h_{{\hat{n}}}})\) such that \({\bar{u}}_{\gamma ,\delta ,{h_n}} \xrightarrow {n\rightarrow \infty } {\tilde{u}}\) weakly in \(H^1(\Omega _{h_{{\hat{n}}}})\) and strongly in \(L^2(\Omega _{h_{{\hat{n}}}})\). On the other hand, we have \({\bar{u}}_{\gamma ,\delta ,{h_n}} \xrightarrow {n\rightarrow \infty } {\hat{u}}\) weakly in \(L^2(\Omega _{h_{{\hat{n}}}})\), so \({\hat{u}}\in H^1(\Omega _{h_{{\hat{n}}}})\) and the convergence \({\bar{u}}_{\gamma ,\delta ,{h_n}} \xrightarrow {n\rightarrow \infty } {\hat{u}}\) holds strongly in \(L^2(\Omega _{h_{{\hat{n}}}})\) and weakly in \(H^1(\Omega _{h_{{\hat{n}}}})\). The semi-continuity properties of \(\psi _{\delta ,h_{{\hat{n}}}}\) and \(\Vert \cdot \Vert _{H^1(\Omega _{h_{{\hat{n}}}})}\) together with the fact that \({\bar{y}}_{\gamma ,\delta ,{h_n}} \xrightarrow {n\rightarrow \infty } {\hat{y}}\) strongly in \(L^2(\Omega _{h_{{\hat{n}}}})\) imply that

Because of \(|\Omega \setminus \Omega _{h_{{\hat{n}}}}| \xrightarrow {{\hat{n}}\rightarrow \infty } 0\) we can infer by dominated convergence for \({\hat{n}}\rightarrow \infty\) that \({\bar{u}}_{\gamma ,\delta ,{h_n}} \xrightarrow {n\rightarrow \infty } {\hat{u}}\) strongly in \(L^2(\Omega )\) and that \(j_{\gamma ,\delta } ({\hat{u}})\le j_{\gamma ,\delta } (u)\) for all \(u\in H^1(\Omega ) \cap C^\infty (\Omega )\). By density, this implies that \({\hat{u}}\) is the minimizer of \(j_{\gamma ,\delta }\), thereby concluding the proof.□

Corollary 1

Let (10) hold. We have

where \({\bar{y}}_{\gamma ,\delta ,h}\) and \({\bar{p}}_{\gamma ,\delta ,h}\) are extended by zero to \(\Omega\).

Proof

Let \(R_h {\bar{y}}_{\gamma ,\delta } \in Y_h\) denote the Ritz projection with respect to A. Extending \({\bar{y}}_{\gamma ,\delta ,h} \in Y_h\) and \(R_h {\bar{y}}_{\gamma ,\delta }\) by zero to \(\Omega\) we clearly have

By definition, \({\bar{y}}_{\gamma ,\delta ,h} -R_h {\bar{y}}_{\gamma ,\delta }\) satisfies

Thus, choosing \(\varphi _h = {\bar{y}}_{\gamma ,\delta ,h} -R_h {\bar{y}}_{\gamma ,\delta }\) and using the ellipticity of \(\mathcal{{A}}\) and \(c_0\ge 0\) in \(\Omega\) together with the Poincaré inequality in \(\Omega\) yields a constant \(C>0\), independent of h, such that \(\Vert {\bar{y}}_{\gamma ,\delta ,h} -R_h {\bar{y}}_{\gamma ,\delta } \Vert _{H^1(\Omega )} \le C \Vert {\bar{u}}_{\gamma ,\delta ,h} -{\bar{u}}_{\gamma ,\delta } \Vert _{L^2(\Omega )} \xrightarrow {h\rightarrow 0^+} 0\), where we also used extension by zero and Theorem 9. Since \(R_h {\bar{y}}_{\gamma ,\delta } \xrightarrow {h\rightarrow 0^+} {\bar{y}}_{\gamma ,\delta }\) in \(Y\), the \(H^1(\Omega )\) convergence \({\bar{y}}_{\gamma ,\delta ,h} \xrightarrow {h\rightarrow 0^+}{\bar{y}}_{\gamma ,\delta }\) follows. The proof for \({\bar{p}}_{\gamma ,\delta ,h} -{\bar{p}}_{\gamma ,\delta }\) is analogous.□

7 Numerical solution

Based on the Finite Element approximation from Sect. 5 we now study an inexact Newton method to compute the discrete solution \(({\bar{y}}_{{\gamma ,\delta ,h} },{\bar{p}}_{{\gamma ,\delta ,h} },{\bar{u}}_{{\gamma ,\delta ,h} })\) and we embed it into a path-following method.

7.1 A preconditioned inexact Newton method for the discrete problems

We prove local convergence of an inexact Newton method when applied to a discretized version of (6) for fixed \({(\gamma ,\delta )} \in {\mathbb {R}}_{>0}^2\). To this end, let us introduce the discrete adjoint-to-control mapping \(u_h\). We recall that the constant \(h_0>0\) is introduced at the beginning of Sect. 5.1. The proof of the following result is similar to the continuous case in Theorems 6, 7 and 13, so we omit it.

Lemma 13

Let \(h\in (0,h_0]\). For every \(p\in L^2(\Omega _h)\) there exists a unique \(u_h=u_h(p)\in V_h\) that satisfies the following discrete version of (2)

The associated solution operator \(u_h: L^2(\Omega _h) \rightarrow V_h\) is Lipschitz continuously Fréchet differentiable. Its derivative \(u_h^\prime (p)\in \mathcal{L} (L^2(\Omega _h), V_h )\) at \(p\in L^2(\Omega _h)\) in direction \(d\in L^2(\Omega _h)\) is given by \(z_h = u_h^\prime (p) d \in V_h\), where \(z_h\) is the unique solution to

With \(u_h\) at hand we can discretize (6) by

The same \(F_h\) is obtained if we consider the optimality conditions of (\(\hbox {ROC}_{\gamma ,\delta ,h}\)) and express them in terms of (y, p). Moreover, \(({\bar{y}}_{\gamma ,\delta ,h} ,{\bar{p}}_{\gamma ,\delta ,h} )\) is the unique root of \(F_h\) and the properties of F from Lemmas 10 and 11 carry over to \(F_h\).

Lemma 14

Let \(h\in (0,h_0]\). The map \(F_h: Y_h \times Y_h \rightarrow Y_h^* \times Y_h^*\) is Lipschitz continuously Fréchet differentiable. Its derivative at \((y,p) \in Y_h \times Y_h\) is given by

Moreover, \(F_h^\prime (y, p)\) is invertible for every \((y,p)\in Y_h \times Y_h\).

Proof

The regularity follows from Lemma 13. Since \(\dim ( Y_h \times Y_h ) = \dim ( Y_h^* \times Y_h^* )\), it is sufficient to show that \(F_h^\prime (y,p)\) is injective. This can be done exactly as in Lemma 11.□

Similar to Theorem 8 we have the following result.

Theorem 10

Let \(h\in (0,h_0]\) and \(\eta \in [0,\infty )\). Then there is a neighborhood \(N\subset Y_h \times Y_h\) of \(({\bar{y}}_{{\gamma ,\delta ,h} },{\bar{p}}_{{\gamma ,\delta ,h} })\) such that for any \((y_0,p_0)\in N\) any sequence \(((y_k,p_k))_{k\in \mathbb {N}}\) that is generated according to \((y_{k+1},p_{k+1})=(y_k,p_k) + (\delta y_k,\delta p_k)\), where \((\delta y_k,\delta p_k)\in Y_h \times Y_h\) satisfies for all \(k\ge 0\)

with \((\eta _k)\subset [0,\eta ]\), converges to \(({\bar{y}}_{{\gamma ,\delta ,h} },{\bar{p}}_{{\gamma ,\delta ,h} })\). The convergence is r-linear if \(\eta <1\), q-linear if \(\eta\) is sufficiently small, q-superlinear if \(\eta _k\rightarrow 0\), and of q-order \(1+\omega\) if \(\eta _k=O(\Vert F_h(y_k,p_k)\Vert _{}^\omega )\). Here, \(\omega \in (0,1]\) is arbitrary.

As a preconditioner for the fully discrete Newton system based on

where \(\mathbf {B}=\mathbf {A}^{-1}\) is the inverse of the stiffness matrix \(\mathbf {A}\), and \(\mathbf {M}\) is the mass matrix. The preconditioner would agree with \(F_h^\prime (y,p)^{-1}\) if \({\mathbf{u}}_{\mathbf{h}}^\prime {\mathbf{(-p)}}\) were zero.

7.2 A practical path-following method

The following Algorithm 2 is a practical path-following inexact Newton method to solve (\(\hbox {ROC}_{\gamma ,\delta ,h}\)). We expect that its global convergence can be shown if \(\rho (\gamma _i,\delta _i)\) and \(\eta _k\) are chosen sufficiently small, but this topic is left for future research. For the choices detailed below, global convergence holds in practice.

The inner loop in lines 3–11 of Algorithm 2 uses an inexact Newton method to compute an approximation \(({\hat{y}}_{i+1}, {\hat{p}}_{i+1})\) of the root of \(F_h\) for fixed \((\gamma _i,\delta _i)\) satisfying \(\Vert F_h({\hat{y}}_{i+1},{\hat{p}}_{i+1})\Vert _{}\le \rho (\gamma _i,\delta _i)\), where \(\rho :{\mathbb {R}}_{>0}^2\rightarrow {\mathbb {R}}_{>0}\). In the implementation we use \(\rho (\gamma ,\delta ) = \max \{10^{-6},\gamma \}\), which may be viewed as inexact path-following. For the forcing term \(\eta _k\) we use the two choices \(\eta _k={\bar{\eta }}_k:=10^{-6}\) and \(\eta _k={\hat{\eta }}_k:=\max \{10^{-6},\min \{10^{-k-1},\sqrt{\delta _i}\}\}\), where \(k=k(i)\). For \({\bar{\eta }}_k\) we have \({\bar{\eta }}_k\le \Vert F_h(y_k,p_k)\Vert _{}\) since we terminate the inner loop if \(\Vert F_h(y_k,p_k)\Vert _{} < 10^{-6}\). The choice \(\eta _k={\bar{\eta }}_k\) is related to quadratic convergence, while \(\eta _k={\hat{\eta }}_k\) corresponds to superlinear convergence, cf. Theorem 10. We also terminate gmres if the Euclidean norm of \(F_h(y_k, p_k) + F_h^\prime ( y_k, p_k ) (\delta y_k, \delta p_k)\) drops below \(\eta _k\) since this seemed beneficial in the numerical experiments.

To compute the control \(u_h(-p_k)\) that satisfies (11) we use a globalized Newton method that can be shown to converge q-quadratically for arbitrary starting points \(u^0\in V_h\). In fact, since (11) is equivalent to \(u_h(p)\) being the minimizer of the smooth and strongly convex problem

standard globalization techniques for Newton’s method will ensure these convergence properties (e.g., Newton’s method combined with an Armijo line search [8, Section 9.5.3]). The method terminates when the Newton residual falls below a threshold that decreases with \((\gamma _i,\delta _i)\). The linear systems are solved using SciPy’s sparse direct solver spsolve. As an alternative we tested a preconditioned conjugate gradients method (PCG). The results were mixed: The use of PCG diminished the total runtime of Algorithm 2 if all went well, but broke down on several occasions for smaller values of \((\gamma _i,\delta _i)\).

In lines 12–14 it is decided whether to accept \(({\hat{y}}_{i+1},{\hat{p}}_{i+1})\) as a solution and terminate the algorithm; if not, then we continue the path-following by updating \((\gamma _i,\delta _i)\) with the factor \(\sigma _i\). We select \(\sigma _i\) based on the number of Newton steps that are needed to compute the implicit controls \(\{u_h(-p_k)\}_k\) in outer iteration i. If this number surpasses a predefined \(m\in \mathbb {N}\), then we choose \(\sigma _i>\sigma _{i-1}\). If it belongs to [0, 0.75m], then we choose \(\sigma _i<\sigma _{i-1}\). Otherwise, we let \(\sigma _i=\sigma _{i-1}\). In addition, we enforce \(\sigma _i\ge 0.25\) for all i since we found in the numerical experiments that choosing \(\sigma _i\) too small can slow down or prevent convergence in some cases once \((\gamma _i,\delta _i)\) is very small, cf. Table 3 below. The weighing \(1/\beta\) in the termination criterion is made since the amplitude of the adjoint state is roughly of order \(\beta\) in comparison to the state. In all experiments we use \(\kappa =10^{-3}\).

Algorithm 3 augments the inexact Newton method in lines 3–11 of Algorithm 2 by a non-monotone line search globalization introduced in [42] for Broyden’s method. The non-monotonicity allows to always accept the inexact Newton step and yields potentially larger step sizes than descent-based strategies. The intention is to keep the number of trial step sizes low since every trial step size requires the evaluation of \(F_h\) and hence a recomputation of \(u_h(-p_k)\). In the numerical experiments we use \(\tau =10^{-4}\) and we observe that in the vast majority of iterations full steps are taken, i.e., \(\lambda _k=1\). To briefly discuss convergence properties of the globalized inexact Newton method, let us assume for simplicity that \(u_h(-p_k)\) is determined exactly for each k. By following the arguments of [42] we can show that for sufficiently small \(\eta _k\) the sequence \(((y_k,p_k))_{k\in \mathbb {N}}\) obtained by ignoring lines 4–7 must either be unbounded or converge to the unique root of \(F_h\); a key ingredient in the corresponding proof is that \(F_h'(y,p)\) is invertible for all (y, p), which we have demonstrated in Lemma 14. In particular, if \(((y_k,p_k))_{k\in \mathbb {N}}\) is bounded, then the globalized inexact Newton method in lines 3–11 terminates finitely. While we always observed this termination in practice, the question whether \(((y_k,p_k))_{k\in \mathbb {N}}\) can be unbounded remains open. Furthermore, as in [42] it follows that if \(((y_k,p_k))_{k\in \mathbb {N}}\) converges, then eventually step size 1 is always accepted, in turn ensuring that the convergence rates of Theorem 10 apply.

All norms without index in Algorithm 2 and 3 are \(L^2(\Omega _h)\) norms.

8 Numerical results

We provide numerical results for two examples. Our main goal is to illustrate that Algorithm 2 can robustly compute accurate solutions of (OC). The results are obtained from a Python implementation of Algorithm 2 using DOLFIN [46, 47], which is part of FEniCS [3, 45]. The code for the second example is available at https://arxiv.org/abs/2010.11628.

8.1 Example 1: an example with explicit solution

The first example has an explicit solution and satisfies the assumptions used in this work. We consider (OC) for an arbitrary \(\beta >0\) with non-convex \(C^\infty\) domain \(\Omega = B_{4\pi }(0) \setminus \overline{B_{2\pi }(0)}\) in \({\mathbb {R}}^2\), \(\mathcal{{A}} =-\Delta\) and \(c_0\equiv 0\). The desired state is

where \(r(x,y)=\sqrt{x^2+y^2}\), and the optimal state \({\bar{y}}\) is

with constants A, B, C whose values are contained in “Appendix 4”. The optimal control is

i.e., \({\bar{u}}\) has value 1 on the disc \(B_{3\pi }(0) \setminus \overline{B_{2\pi }(0)}\) and value 0 on the disc \(B_{4\pi }(0) \setminus {B_{3\pi }(0)}\). The optimal value is \(j({\bar{u}})\approx 24.85 \beta ^2 + 59.22 \beta\). In “Appendix 4” we provide details on the construction of this example and verify that \(({\bar{y}},{\bar{u}})\) is indeed the optimal solution of (OC). If not stated otherwise, we use \(\beta =10^{-3}\).

We use unstructured triangulations that approximate \(\partial \Omega\) increasingly better as the meshes become finer, cf. (10). Figure 1 depicts the optimal control \({\bar{u}}_h\), optimal state \({\bar{y}}_h\) and negative optimal adjoint state \(-{\bar{p}}_h\), which were computed by Algorithm 2 on a grid with 1553207 degrees of freedom (DOF).

We begin by studying convergence on several grids. We use the fixed ratio \((\gamma _i/\delta _i)\equiv 10^2\) and apply Algorithm 2 with \((\gamma _0,\delta _0)=(1,0.01)\) and \(({\hat{y}}_0,{\hat{p}}_0)=(0,0)\). Table 1 shows #it, which represents the total number of inexact Newton steps for (y, p), and #it\(_u\), which is the total number of Newton steps used to compute the implicit function u. Table 1 also contains the errors

as well as

where \(\Omega _*\) represents a reference grid with \(\text{DOF}=1553207\). To evaluate the errors, \({\hat{u}}_{\text{final}}\), \({\hat{y}}_{\text{final}}\) and \({\hat{p}}_{\text{final}}\) are extended to \(\Omega _*\) using extrapolation. Table 2 provides details for the run from Table 1 with \(\text{DOF}=97643\) and \(\eta _k={\hat{\eta }}_k\). Table 2 includes \(\tau ^i:=\Vert ({\hat{y}}_{i+1},\beta ^{-1}{\hat{p}}_{i+1}) -({\hat{y}}_i,\beta ^{-1}{\hat{p}}_i)\Vert _{H^1(\Omega _h)}\), which appears in the termination criterion of Algorithm 2, and \(\tau _u^i:=\Vert u_h({\hat{p}}_{i+1})-u_h({\hat{p}}_i)\Vert _{L^2(\Omega _h)}\).

Table 1 indicates convergence of the computed solutions \(({\hat{u}}_{\text{final}},{\hat{y}}_{\text{final}},{\hat{p}}_{\text{final}})\) to \(({\bar{u}},{\bar{y}},{\bar{p}})\) and of the objective value \(j_{\gamma _{\text{final}},\delta _{\text{final}},h}\) to \({\bar{j}}\). It also suggests that convergence takes place at certain rates with respect to h.

Moreover, the total number of Newton steps both for (y, p) and for u stays bounded as DOF increases, which suggests mesh independence. The choice \(\eta _k={\bar{\eta }}_k\) frequently yields lower numbers of Newton steps for (y, p) and for u, yet the runtime (not depicted) is consistently higher than for \(\eta _k={\hat{\eta }}_k\) since more iterations of gmres are required to compute the step for (y, p). Specifically, using \({\hat{\eta }}_k\) saves between 5% and 36% of runtime, with \(36\%\) being the saving on the finest grid. (Since the runtime depends on many factors, these numbers are intended as reference points rather than exact values.) In the vast majority of iterations, step size 1 is accepted for \((y_k,p_k)\). For instance, all of the 52 iterations required for \(\text{DOF}=97643\) and \(\eta _k={\hat{\eta }}_k\) use full steps; for \(\text{DOF}=6251\) and \(\eta _k={\bar{\eta }}_k\), 86 of the 87 iterations use step size 1.

Table 3 displays the effect of fixing \((\sigma _i)\equiv \sigma\) in Algorithm 2. The mesh uses \(\text{DOF}=24443\) and is the same as in Table 1.

For \(\sigma =0.1\) the iterates failed to converge for both forcing terms once \(\gamma _{12}=10^{-12}\) is reached because \(u_h(-p_k)\) could not be computed to sufficient accuracy within the 200 iterations that we allow for this process. Together with the case \(\sigma =0.2\) in Table 3 this shows that small values of \(\sigma _i\) can increase the number of steps required for u and even prevent convergence. We therefore enforce \(\sigma _i\ge 0.25\) for all i in all other experiments, although this diminishes the efficacy of Algorithm 2 in some cases.

We now turn to the robustness of Algorithm 2. We emphasize that in our numerical experience the robustness of algorithms for optimal control problems involving the TV seminorm in the objective is a delicate issue. Table 4 displays the iteration numbers required by Algorithm 2 for different values of \(\beta\) on the mesh with \(\text{DOF}=24443\) along with the error \(\mathcal{{E}} _u\) for \(\eta _k={\hat{\eta }}_k\) for the two choices \((\gamma _i/\delta _i) \equiv 10^2\) and \((\gamma _i/\delta _i)\equiv 1\). The omitted values for \(\beta =10^{-3}\) and \((\gamma _i/\delta _i) \equiv 10^2\) are identical to those from Table 1 for \(\text{DOF}=24443\) and \(\eta _k={\hat{\eta }}_k\). Table 5 provides iteration numbers and errors for various fixed choices of \((\gamma _i/\delta _i)\) on the mesh with \(\text{DOF}=24443\) for \(\beta =10^{-3}\), \(\eta _k={\bar{\eta }}_k\) and \((\sigma _i)\equiv 0.5\). For the ratios \(10^{-1}\) and \(10^{-2}\) we increased \(\kappa\) from \(10^{-3}\) to \(5\cdot 10^{-3}\) to obtain convergence. Since our goal is to demonstrate robustness, no further changes are made although this would lower the iteration numbers.

Tables 4 and 5 suggest that Algorithm 2 is able to handle a range of parameter values without modification of its internal parameters.

8.2 Example 2

From Sect. 3 onward we have used that \(\Omega\) is of class \(C^{1,1}\). To show that Algorithm 2 can still solve (OC) if \(\Omega\) is only Lipschitz, we consider an example from [23, section 4.2] on the square \(\Omega = [-1,1]^2\). We have \(\mathcal{{A}} =-\Delta\), \(c_0\equiv 0\), \(\beta =10^{-4}\) and \(y_\Omega = 1_D\), where \(1_D:\Omega \rightarrow \{0,1\}\) is the characteristic function of the square \(D=(-0.5,0.5)^2\). We use uniform triangulations and denote by \(n+1\) the number of nodes in coordinate direction. Figure 2 depicts the optimal control \({\bar{u}}_h\), optimal state \({\bar{y}}_h\) and negative optimal adjoint state \(-{\bar{p}}_h\), which were computed with \(n=1024\). Apparently, \({\bar{u}}_h\) is piecewise constant.

Throughout, we use the fixed ratio \((\gamma _i/\delta _i)\equiv 10^{-2}\) and apply Algorithm 2 with \((\gamma _0,\delta _0)=(0.01,1)\) and \(({\hat{y}}_0,{\hat{p}}_0)=(0,0)\). As in example 1, cf. Table 5, other ratios for \(\gamma _i/\delta _i\) can be employed as well. We only provide results for \({\bar{\eta }}_k\) since the forcing term \({\hat{\eta }}_k\) does not yield lower runtimes in this example; both forcing terms produce the same errors, though. Table 6 displays iteration numbers and errors for different grids, while Table 7 shows details for \(n=256\).

Table 6 hints at possible mesh independence for (y, p), but indicates that the number of Newton steps for u increases with n. The depicted errors are computed by use of a reference solution that is obtained by Algorithm 2 with \(\eta _k={\bar{\eta }}_k\) on the mesh with \(n=1024\). As in the first example it seems that convergence with respect to h takes place at certain rates. The majority of iterations use full Newton steps for (y, p). For instance, all but one of the 50 iterations for \(n=256\) use step length one. We also repeated the experiments from Table 6 with \(y_\Omega\) rotated by 30, respectively, 45 degree. The omitted results are similar to those in Table 6, which further illustrates the robustness of our approach.

Table 8 shows the outcome of Algorithm 2 if a sequence of nested grids is used, where the grids are refined once \(\gamma _i<10^{-4}\), \(\gamma _i < 10^{-6}\) and \(\gamma _i<10^{-8}\), respectively. In this example, nesting reduces the runtime by about \(57\%\) while providing the same accuracy as a run for \(n=512\), cf. the last line of Table 6.

Table 9 addresses the robustness of Algorithm 2 with respect to \(\beta\). The computations are carried out on nested grids and the displayed iteration numbers are those for the finest grid, which has \(n=128\). The reference solution is computed for \(n=256\). The final grid change happens once \(\gamma _i<10^{-8}\).

Table 9 indicates that Algorithm 2 is robust with respect to \(\beta\). As in example 1 it is possible to achieve lower iteration numbers through manipulation of the algorithmic parameters. For instance, if the final grid change for \(\beta =10^{-5}\) happens once \(\gamma _i<10^{-9}\) instead of \(\gamma _i<10^{-8}\), then only (41, 638) iterations are needed on the final grid instead of (70, 958).

9 Summary

We have studied an optimal control problem with controls from BV in which the control costs are given by the TV seminorm, favoring piecewise constant controls. By smoothing the TV seminorm and adding the \(H^1\) norm we obtained a family of auxiliary problems whose solutions converge to the optimal solution of the original problem in appropriate function spaces. For fixed smoothing and regularization parameter we showed local convergence of an infinite-dimensional inexact Newton method applied to a reformulation of the optimality system that involves the control as an implicit function of the adjoint state. Based on a convergent Finite Element approximation a practical path-following algorithm was derived, and it was demonstrated that the algorithm is able to robustly compute the optimal solution of the control problem with considerable accuracy. To verify this, a two-dimensional test problem with known solution was constructed.

Data Availability

Data sharing not applicable to this article as no datasets were generated or analyzed during the current study.

References

Acar, R., Vogel, C.R.: Analysis of bounded variation penalty methods for ill-posed problems. Inverse Probl. 10(6), 1217–1229 (1994). https://doi.org/10.1088/0266-5611/10/6/003

Allendes, A., Fuica, F., Otárola, E.: Adaptive finite element methods for sparse PDE-constrained optimization. IMA J. Numer. Anal. 40(3), 2106–2142 (2019). https://doi.org/10.1093/imanum/drz025

Alnæs, M.S., Blechta, J., Hake, J., Johansson, A., Kehlet, B., Logg, A., Richardson, C., Ring, J., Rognes, M.E., Wells, G.N.: The FEniCS project version 1.5. Arch. Numer. Softw. 3(100), 9–23 (2015). https://doi.org/10.11588/ans.2015.100.20553

Ambrosio, L., Fusco, N., Pallara, D.: Functions of Bounded Variation and Free Discontinuity Problems. Oxford Mathematical Monographs. The Clarendon Press, Oxford University Press, Oxford (2000)

Attouch, H., Buttazzo, G., Michaille, G.: Variational analysis in Sobolev and BV spaces. Applications to PDEs and optimization. 2nd revised edn. MPS/SIAM Series on Optimization, vol. 6. SIAM (2014). https://doi.org/10.1137/1.9781611973488

Bartels, S.: Total variation minimization with finite elements: convergence and iterative solution. SIAM J. Numer. Anal. 50(3), 1162–1180 (2012). https://doi.org/10.1137/11083277X

Bergounioux, M., Bonnefond, X., Haberkorn, T., Privat, Y.: An optimal control problem in photoacoustic tomography. Math. Models Methods Appl. Sci. 24(12), 2525–2548 (2014). https://doi.org/10.1142/S0218202514500286

Boyd, S., Vandenberghe, L.: Convex Optimization. Cambridge University Press, Cambridge (2004)

Bredies, K., Holler, M.: A pointwise characterization of the subdifferential of the total variation functional (2012). Preprint IGDK1754

Brokate, M., Kersting, G.: Measure and Integral. Birkhäuser/Springer, Cham (2015). https://doi.org/10.1007/978-3-319-15365-0

Casas, E., Clason, C., Kunisch, K.: Approximation of elliptic control problems in measure spaces with sparse solutions. SIAM J. Control. Optim. 50(4), 1735–1752 (2012). https://doi.org/10.1137/110843216

Casas, E., Clason, C., Kunisch, K.: Parabolic control problems in measure spaces with sparse solutions. SIAM J. Control. Optim. 51(1), 28–63 (2013)

Casas, E., Kogut, P.I., Leugering, G.: Approximation of optimal control problems in the coefficient for the \(p\)-Laplace equation. I: convergence result. SIAM J. Control Optim. 54(3), 1406–1422 (2016). https://doi.org/10.1137/15M1028108

Casas, E., Kruse, F., Kunisch, K.: Optimal control of semilinear parabolic equations by BV-functions. SIAM J. Control. Optim. 55(3), 1752–1788 (2017). https://doi.org/10.1137/16M1056511

Casas, E., Kunisch, K.: Optimal control of semilinear elliptic equations in measure spaces. SIAM J. Control. Optim. 52(1), 339–364 (2014). https://doi.org/10.1137/13092188X

Casas, E., Kunisch, K.: Analysis of optimal control problems of semilinear elliptic equations by BV-functions. Set-Valued Var. Anal. 27(2), 355–379 (2019). https://doi.org/10.1007/s11228-018-0482-7

Casas, E., Kunisch, K., Pola, C.: Some applications of BV functions in optimal control and calculus of variations. ESAIM Proc. 4, 83–96 (1998). https://doi.org/10.1051/proc:1998022

Casas, E., Kunisch, K., Pola, C.: Regularization by functions of bounded variation and applications to image enhancement. Appl. Math. Optim. 40(2), 229–257 (1999). https://doi.org/10.1007/s002459900124

Casas, E., Ryll, C., Tröltzsch, F.: Sparse optimal control of the Schlögl and Fitzhugh-Nagumo systems. Comput. Methods Appl. Math. 13(4), 415–442 (2013). https://doi.org/10.1515/cmam-2013-0016

Casas, E., Vexler, B., Zuazua, E.: Sparse initial data identification for parabolic PDE and its finite element approximations. Math. Control Relat. Fields 5(3), 377–399 (2015). https://doi.org/10.3934/mcrf.2015.5.377

Chan, T.F., Zhou, H.M., Chan, R.H.: Continuation method for total variation denoising problems. In: Luk, F.T. (ed.) Advanced Signal Processing Algorithms. International Society for Optics and Photonics, vol. 2563, pp. 314–325. SPIE, New York (1995). https://doi.org/10.1117/12.211408

Clason, C., Kruse, F., Kunisch, K.: Total variation regularization of multi-material topology optimization. ESAIM Math. Model. Numer. Anal. 52(1), 275–303 (2018). https://doi.org/10.1051/m2an/2017061

Clason, C., Kunisch, K.: A duality-based approach to elliptic control problems in non-reflexive Banach spaces. ESAIM Control Optim. Calc. Var. 17(1), 243–266 (2011). https://doi.org/10.1051/cocv/2010003

Elvetun, O.L., Nielsen, B.F.: The split Bregman algorithm applied to PDE-constrained optimization problems with total variation regularization. Comput. Optim. Appl. 64(3), 699–724 (2016). https://doi.org/10.1007/s10589-016-9823-3

Engel, S., Kunisch, K.: Optimal control of the linear wave equation by time-depending BV-controls: A semi-smooth Newton approach. Math. Control Relat. Fields 10(3), 591–622 (2020). https://doi.org/10.3934/mcrf.2020012

Engel, S., Vexler, B., Trautmann, P.: Optimal finite element error estimates for an optimal control problem governed by the wave equation with controls of bounded variation. IMA J. Numer. Anal. (2020). https://doi.org/10.1093/imanum/draa032

Ern, A., Guermond, J.L.: Theory and practice of finite elements. In: Applied Mathematical Sciences, vol. 159. Springer (2004). https://doi.org/10.1007/978-1-4757-4355-5

Grisvard, P.: Elliptic problems in nonsmooth domains, vol. 69, reprint of the 1985 hardback edn. SIAM (2011). https://doi.org/10.1137/1.9781611972030

Hafemeyer, D.: Optimale Steuerung von Differentialgleichungen mit BV-Funktionen. Bachelor’s thesis, Technische Universität München, Munich (2016)

Hafemeyer, D.: Regularization and discretization of a BV-controlled elliptic problem: a completely adaptive approach. Master’s thesis, Technische Universität München, Munich (2017)

Hafemeyer, D.: Optimal control of parabolic obstacle problems—optimality conditions and numerical analysis. Ph.D. thesis, Technische Universität München, Munich (2020). http://nbn-resolving.de/urn/resolver.pl?urn:nbn:de:bvb:91-diss-20200508-1524287-1-1

Hafemeyer, D., Mannel, F.: A path-following inexact Newton method for optimal control in BV (extended version) (2022). arXiv:2010.11628

Hafemeyer, D., Mannel, F., Neitzel, I., Vexler, B.: Finite element error estimates for one-dimensional elliptic optimal control by BV functions. Math. Control Relat. Fields 10(2), 333–363 (2020). https://doi.org/10.3934/mcrf.2019041

Herzog, R., Stadler, G., Wachsmuth, G.: Directional sparsity in optimal control of partial differential equations. SIAM J. Control. Optim. 50(2), 943–963 (2012). https://doi.org/10.1137/100815037

Hintermüller, M., Kunisch, K.: Total bounded variation regularization as a bilaterally constrained optimization problem. SIAM J. Appl. Math. 64(4), 1311–1333 (2004). https://doi.org/10.1137/S0036139903422784

Hintermüller, M., Stadler, G.: An infeasible primal-dual algorithm for total bounded variation-based Inf-convolution-type image restoration. SIAM J. Sci. Comput. 28(1), 1–23 (2006). https://doi.org/10.1137/040613263

Hinze, M.: A variational discretization concept in control constrained optimization: the linear-quadratic case. Comput. Optim. Appl. 30(1), 45–61 (2005). https://doi.org/10.1007/s10589-005-4559-5

Hinze, M., Tröltzsch, F.: Discrete concepts versus error analysis in PDE-constrained optimization. GAMM-Mitt 33(2), 148–162 (2010). https://doi.org/10.1002/gamm.201010012

Kato, T.: Perturbation theory for linear operators. Die Grundlehren der mathematischen Wissenschaften, Band 132. Springer (1966). https://doi.org/10.1007/978-3-662-12678-3

Kelley, C.T.: Iterative Methods for Linear and Nonlinear Equations, vol. 16. SIAM (1995). https://doi.org/10.1137/1.9781611970944

Li, C., Stadler, G.: Sparse solutions in optimal control of PDEs with uncertain parameters: the linear case. SIAM J. Control. Optim. 57(1), 633–658 (2019). https://doi.org/10.1137/18M1181419

Li, D., Fukushima, M.: A derivative-free line search and global convergence of Broyden-like method for nonlinear equations. Optim. Methods Softw. 13(3), 181–201 (2000). https://doi.org/10.1080/10556780008805782

Li, H., Wang, C., Zhao, D.: Preconditioning for PDE-constrained optimization with total variation regularization. Appl. Math. Comput. 386, 125470 (2020). https://doi.org/10.1016/j.amc.2020.125470

Lieberman, G.M.: Boundary regularity for solutions of degenerate elliptic equations. Nonlinear Anal. 12(11), 1203–1219 (1988). https://doi.org/10.1016/0362-546X(88)90053-3

Logg, A., Mardal, K.A., Wells, G.N., et al.: Automated Solution of Differential Equations by the Finite Element Method. Springer, New York (2012). https://doi.org/10.1007/978-3-642-23099-8

Logg, A., Wells, G.N.: Dolfin: automated finite element computing. ACM Trans. Math. Softw. 37(2), 25 (2010). https://doi.org/10.1145/1731022.1731030

Logg, A., Wells, G.N., Hake, J.: DOLFIN: A C++/Python Finite Element Library, Chapter 10. Springer, New York (2012). https://doi.org/10.1007/978-3-642-23099-8_10

Neitzel, I., Prüfert, U., Slawig, T.: Strategies for time-dependent PDE control with inequality constraints using an integrated modeling and simulation environment. Numer. Algorithms 50(3), 241–269 (2009). https://doi.org/10.1007/s11075-008-9225-4

Pieper, K.: Finite element discretization and efficient numerical solution of elliptic and parabolic sparse control problems. Ph.D. thesis, Technische Universität München, Munich (2015). https://nbn-resolving.de/urn/resolver.pl?nbn:de:bvb:91-diss-20150420-1241413-1-4

Rudin, L.I., Osher, S., Fatemi, E.: Nonlinear total variation based noise removal algorithms. Physica D 60, 259–268 (1992). https://doi.org/10.1016/0167-2789(92)90242-F

Rudin, W.: Real and Complex Analysis, 3rd edn. McGraw-Hill, New York (1987)

Savaré, G.: Regularity results for elliptic equations in Lipschitz domains. J. Funct. Anal. 152(1), 176–201 (1998). https://doi.org/10.1006/jfan.1997.3158

Schiela, A.: An interior point method in function space for the efficient solution of state constrained optimal control problems. Math. Program. 138(1–2 (A)), 83–114 (2013). https://doi.org/10.1007/s10107-012-0595-y

Stadler, G.: Elliptic optimal control problems with \(L^1\)-control cost and applications for the placement of control devices. Comput. Optim. Appl. 44(2), 159–181 (2009). https://doi.org/10.1007/s10589-007-9150-9

Troianiello, G.M.: Elliptic Differential Equations and Obstacle Problems. The University Series in Mathematics. Plenum Press, New York (1987). https://doi.org/10.1007/978-1-4899-3614-1

Weiser, M., Gänzler, T., Schiela, A.: A control reduced primal interior point method for a class of control constrained optimal control problems. Comput. Optim. Appl. 41(1), 127–145 (2008). https://doi.org/10.1007/s10589-007-9088-y

Acknowledgements

Dominik Hafemeyer acknowledges support from the graduate program TopMath of the Elite Network of Bavaria and the TopMath Graduate Center of TUM Graduate School at Technische Universität München. He received a scholarship from the Studienstiftung des deutschen Volkes. He receives support from the IGDK Munich-Graz. Funded by the Deutsche Forschungsgemeinschaft, grant no 188264188/GRK1754.

Funding

Open access funding provided by University of Graz.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note