Abstract

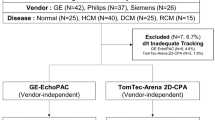

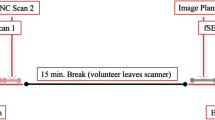

Despite standardization efforts, vendors still use proprietary software algorithms for echocardiographic strain measurements, which results in high inter-vendor variability. Using vendor-independent software could be one solution. Little is known, however, about how vendor-specific image characteristics influence the tracking results of such software. We therefore investigated the reproducibility, accuracy, and scar detection ability of strain measurements on images from different vendors using vendor-independent software. Vendor-independent software (TomTec Image Arena) was used to analyse datasets from 63 patients, acquired on machines from four ultrasound vendors (GE, Philips, Siemens, Toshiba). We measured the tracking feasibility, inter-vendor bias, relative test-re-test variability, and scar discrimination ability of strain measurements. Cardiac magnetic resonance delayed enhancement images were used as the reference standard for scar definition. Tracking feasibility differed significantly among the vendor datasets (p < 0.001). Variability of global longitudinal strain (GLS) measurements was similar among the vendors, whereas variability of segmental longitudinal strain (SLS) showed modest differences. Relative test-re-test variability of GLS and SLS showed no relevant differences. No significant difference in scar detection capability was observed. Average GLS and SLS values were similar among vendors. Reproducibility of GLS measurements showed no difference among vendors and was within an acceptable range. SLS reproducibility was high but similar for all vendors. No relevant difference was found for identifying regional dysfunction. Tracking feasibility showed a substantial difference among images from different vendors. Our findings demonstrate that tracking results depend mainly on the software used and show little influence from vendor-specific image characteristics.

Data availability

Data available on request due to privacy/ethical restrictions.

Acknowledgements

SU, OM and EP received research grants from the European Association of Cardiovascular Imaging. The study was further supported by a dedicated grant from the American Society of Echocardiography. JUV holds a personal research mandate from the Flemish Research Foundation (FWO) and received a research grant from the University Hospitals Leuven.

The following representatives of the participating companies were involved: Jamie Hamilton (Epsilon), Stefano Pedri (ESAOTE), Peter Lysyansky (GE), Gunnar Hansen (GE), Yasuhiro Ito (Hitachi), Tomoaki Chono (Hitachi), Jane Vogel (Philips), David Prater (Philips), Joo Hyun Song (Samsung), Jin Yong Lee (Samsung), Helene Houle (Siemens), Bogdan Georgescu (Siemens), Rolf Baumann (TOMTEC), Bernhard Mumm (TOMTEC), Yashuhiko Abe (Canon), Willem Gorissen (Canon).

Funding

The study was supported by a dedicated grant from the American Society of Echocardiography.

Author information

Contributions

The listed authors contributed to the manuscript in the following manner: conception and design or analysis and interpretation of data, or both (SÜ, OM, SB and JUV); manuscript drafting or critical revision for important intellectual content (SÜ, OM, EDP, JD, SB, JB, JT, LB and JUV); final approval of the manuscript submitted (LB, JT, JUV).

Ethics declarations

Conflicts of interest

All authors report no relationships that could be construed as a conflict of interest.

Ethics approval

The study was approved by the University Hospitals Leuven ethics committee.

Consent to participate

All subjects gave written informed consent prior to inclusion.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Below is the link to the electronic supplementary material.

10554_2020_2155_MOESM1_ESM.pdf

Supplementary Figure 1: Percentage of apical views with acceptable (green) tracking quality and rejected views (red) per vendor and apical view. Tracking was performed by a vendor-independent software on images acquired with high-end machines from each vendor.

Supplementary Figure 2: Percentage of excluded segments (red) per image source. Tracking was performed by a vendor-independent software on images acquired with high-end machines from each vendor.

Supplementary Figure 3: Pair-wise vendor-vs.-vendor comparisons of average global longitudinal strain measurements of the same multi-vendor software depending on the image source. The correlation coefficient (R²) is indicated. p value is < 0.001 for each correlation plot. The line of identity is shown in red.

Supplementary Figure 4: Bland-Altman plots for the pair-wise vendor-vs.-vendor comparisons of average global longitudinal strain measurements using the same multi-vendor software, but images from different vendors. Difference of the global longitudinal strain measurement of vendors (y-axis) and the average global longitudinal strain measurements of vendors (x-axis). The bias is shown by the dotted green lines and the limits of agreement (1.95*SD) by solid red lines.

Supplementary Figure 5: Comparison between average segmental end systolic (ES) and post systolic (PSS) longitudinal strain measurements in images from one vendor (y-axis) and the mean of all vendors (x-axis) using the same multi-vendor software. The correlation coefficient (R²) is indicated. p value is < 0.001 for each correlation plot. The line of identity is shown in red.

Supplementary Figure 6: Pair-wise vendor-vs.-vendor comparisons of average segmental peak (PS), end systolic (ES) and post systolic (PSS) longitudinal strain measurements when applying the same multi-vendor software to images of the respective vendor. The correlation coefficient (R²) is indicated. p value is < 0.001 for each correlation plot. The line of identity is shown in red.

Supplementary Figure 7: Bland-Altman plots for the pair-wise vendor-vs.-vendor comparisons of segmental peak (PS), end systolic (ES) and post systolic (PSS) longitudinal strain measurements when applying the same multi-vendor software to images of the respective vendor. Difference of the segmental longitudinal strain measurement of vendors (y-axis) and the average global longitudinal strain measurements of vendors (x-axis) are presented. The bias is shown by the dotted green lines and the limits of agreement (1.95*SD) by solid red lines.

Supplementary Figure 8: Average left ventricular segmental longitudinal end systolic and post-systolic strain measurements with standard deviations for normal segments and segments with scar. All measurements were performed using the same multi-vendor software. Dashed marks are used for scar. Student t test analysis for differences between normal segments (solid bars) and segments with scar (hatched bars). p value is < 0.001 for each comparison for all vendors. For the post-hoc (Bonferroni) analysis of the repeated measures ANOVA, results are shown as: * different from GE, ¥ different from Toshiba, # different from Siemens.

Supplementary Figure 9: Receiver operating characteristic curves, area under the curve (AUC) and 95% CI for end systolic strain (ES), post systolic strain (PSS) and post systolic index (PSI) indicating the ability to discriminate between normal segments and segments with subendocardial scars when applying the same multi-vendor software to images of the respective vendor. Significant pairwise comparisons (p < 0.05) are shown as: * different from GE, ¥ different from Toshiba, # different from Siemens.

(pdf 3357 kb)
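For readers unfamiliar with the Bland-Altman quantities named in the captions of Supplementary Figures 4 and 7, the following is a minimal sketch of how a bias and its agreement limits are typically computed from paired measurements. It is not the authors' analysis code: the function name `bland_altman`, the parameter `k`, and the strain values are hypothetical and chosen only for illustration. The captions state a multiplier of 1.95·SD, whereas 1.96 is the conventional value, so the multiplier is left as a parameter.

```python
from statistics import mean, stdev


def bland_altman(a, b, k=1.96):
    """Return (bias, lower limit, upper limit) for paired measurements.

    a, b: equal-length sequences of paired values, e.g. GLS in % measured
          on images from two vendors in the same patients (hypothetical).
    k:    multiplier for the SD of the differences (assumed 1.96 here).
    """
    if len(a) != len(b):
        raise ValueError("a and b must contain paired measurements")
    if len(a) < 2:
        raise ValueError("at least two pairs are needed")
    diffs = [x - y for x, y in zip(a, b)]  # pairwise differences
    bias = mean(diffs)                     # mean difference
    sd = stdev(diffs)                      # sample SD of the differences
    return bias, bias - k * sd, bias + k * sd


if __name__ == "__main__":
    # Made-up GLS values (%) for four patients on two image sources.
    vendor_a = [-20.0, -18.0, -16.0, -22.0]
    vendor_b = [-19.0, -18.0, -17.0, -20.0]
    bias, lower, upper = bland_altman(vendor_a, vendor_b)
    print(f"bias={bias:.2f}, limits=({lower:.2f}, {upper:.2f})")
```

Passing `k=1.95` would reproduce the multiplier stated in the captions.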

About this article

Cite this article

Ünlü, S., Mirea, O., Bézy, S. et al. Inter-vendor variability in strain measurements depends on software rather than image characteristics. Int J Cardiovasc Imaging 37, 1689–1697 (2021). https://doi.org/10.1007/s10554-020-02155-2

DOI: https://doi.org/10.1007/s10554-020-02155-2