Abstract

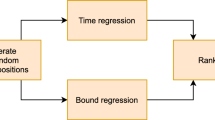

Motivated by its implications for the development of general-purpose solvers for decomposable Mixed Integer Programs (MIPs), we address a fundamental research question, namely how to exploit data-driven techniques to obtain automatic decomposition methods. As a preliminary step, we investigate the link between static properties of MIP input instances and good decomposition patterns. We devise a random sampling algorithm that, starting from a set of generic MIP base instances, generates a large, balanced and well-diversified set of decomposition patterns, which we analyze with machine learning tools. We also propose and test a minimal proof-of-concept framework that performs data-driven automatic decomposition. The use of supervised techniques highlights interesting structures of random decompositions and demonstrates (under certain conditions) that data-driven methods are fruitful in our context, while opening perspectives for future research.

References

Abdi, H., & Williams, L. J. (2010). Principal component analysis. Wiley Interdisciplinary Reviews: Computational Statistics, 2(4), 433–459.

Achterberg, T. (2009). SCIP: solving constraint integer programs. Mathematical Programming Computation, 1(1), 1–41.

Achterberg, T., Koch, T., & Martin, A. (2006). MIPLIB 2003. Operations Research Letters, 34(4), 361–372.

Basso, S., & Ceselli, A. (2017). Asynchronous column generation. In Proceedings of the nineteenth workshop on algorithm engineering and experiments (ALENEX) (pp. 197–206).

Basso, S., Ceselli, A., & Tettamanzi, A. (2018). Understanding good decompositions: An exploratory data analysis. Technical report, Università degli Studi di Milano. http://hdl.handle.net/2434/487931.

Bergner, M., Caprara, A., Ceselli, A., Furini, F., Lübbecke, M., Malaguti, E., et al. (2015). Automatic Dantzig–Wolfe reformulation of mixed integer programs. Mathematical Programming A, 149(1–2), 391–424.

Bettinelli, A., Ceselli, A., & Righini, G. (2010). A branch-and-price algorithm for the variable size bin packing problem with minimum filling constraint. Annals of Operations Research, 179, 221–241.

Brooks, J. P., & Lee, E. K. (2010). Analysis of the consistency of a mixed integer programming-based multi-category constrained discriminant model. Annals of Operations Research, 174(1), 147–168.

Burges, C. (1998). A tutorial on support vector machines for pattern recognition. Data Mining and Knowledge Discovery, 2(2), 121–167.

Ceselli, A., Liberatore, F., & Righini, G. (2009). A computational evaluation of a general branch-and-price framework for capacitated network location problems. Annals of Operations Research, 167, 209–251.

Delorme, M., Iori, M., & Martello, S. (2016). Bin packing and cutting stock problems: Mathematical models and exact algorithms. European Journal of Operational Research, 255(1), 1–20.

Desaulniers, G., Desrosiers, J., & Solomon, M. M. (Eds.). (2005). Column generation. Berlin: Springer.

FICO Xpress webpage. (2017). http://www.fico.com/en/products/fico-xpress-optimization-suite. Last accessed March, 2017.

Fisher, R. A. (1992). Statistical methods for research workers. In S. Kotz & N. L. Johnson (Eds.), Breakthroughs in statistics. Springer series in statistics (perspectives in statistics). New York, NY: Springer.

Gamrath, G., & Lübbecke, M. E. (2010). Experiments with a generic Dantzig–Wolfe decomposition for integer programs. LNCS 6049 (pp. 239–252).

GUROBI webpage. (2017). http://www.gurobi.com. Last accessed March, 2017.

He, H., & Garcia, E. A. (2009). Learning from imbalanced data. IEEE Transactions on Knowledge and Data Engineering, 21(9), 1263–1284.

Hutter, F., Xu, L., Hoos, H. H., & Leyton-Brown, K. (2014). Algorithm runtime prediction: Methods & evaluation. Artificial Intelligence, 206(1), 79–111.

IBM CPLEX webpage. (2016). http://www-01.ibm.com/software/commerce/optimization/cplex-optimizer/index.html. Last accessed August, 2016.

Khalil, E. B. (2016). Machine learning for integer programming. In Proceedings of the twenty-fifth international joint conference on artificial intelligence.

Koch, T., Achterberg, T., Andersen, E., Bastert, O., Berthold, T., Bixby, R. E., et al. (2011). MIPLIB 2010. Mathematical Programming Computation, 3(2), 103–163.

Kruber, M., Lübbecke, M. E., & Parmentier, A. (2016). Learning when to use a decomposition. RWTH technical report 2016-037.

Larose, D. T., & Larose, C. D. (2015). Data mining and predictive analytics. Hoboken: Wiley.

Mitzenmacher, M., & Upfal, E. (2005). Probability and computing: Randomized algorithms and probabilistic analysis. New York, NY: Cambridge University Press.

Puchinger, J., Stuckey, P. J., Wallace, M. G., & Brand, S. (2011). Dantzig–Wolfe decomposition and branch-and-price solving in G12. Constraints, 16(1), 77–99.

R Core Team. (2016). R: A language and environment for statistical computing. R Foundation for Statistical Computing. https://www.R-project.org/.

Ralphs, T. K., & Galati, M. V. (2017). DIP—decomposition for integer programming. https://projects.coin-or.org/Dip. Last accessed March, 2017.

Schrijver, A. (1998). Theory of linear and integer programming. Hoboken: Wiley.

Smola, A. J., & Schölkopf, B. (2004). A tutorial on support vector regression. Statistics and Computing, 14, 199–222.

Vanderbeck, F. (2017). BaPCod—A generic branch-and-price code. https://wiki.bordeaux.inria.fr/realopt/pmwiki.php/Project/BaPCod. Last accessed March, 2017.

Vanderbeck, F., & Wolsey, L. (2010). Reformulation and decomposition of integer programs. In M. Jünger, T. M. Liebling, D. Naddef, G. L. Nemhauser, W. R. Pulleyblank, G. Reinelt, G. Rinaldi, & L. A. Wolsey (Eds.), 50 years of integer programming 1958–2008. Berlin: Springer.

Wang, J., & Ralphs, T. (2013). Computational experience with hypergraph-based methods for automatic decomposition in discrete optimization. In C. Gomes & M. Sellmann (Eds.), Integration of AI and OR techniques in constraint programming for combinatorial optimization problems. LNCS 7874 (pp. 394–402).

Wolsey, L. (1998). Integer programming. Hoboken: Wiley.

Acknowledgements

The authors wish to thank the guest editors and three anonymous reviewers, whose insightful comments allowed us to substantially improve the manuscript.

Additional information

A. Ceselli: This work has been partially funded by Regione Lombardia—Fondazione Cariplo, Grant No. 2015-0717, project REDNEAT, and was partially undertaken while the second author was visiting INRIA Sophia Antipolis—I3S CNRS Université Côte d’Azur.

A Appendix

A.1 Base instances of the dataset

For each instance of the dataset, Table 4 reports its name, its source (MIPLIB), the overall number of variables (Var.), the number of integer (Int.) and binary (Bin.) variables, the overall number of constraints (Constr.) and the percentage of nonzeros in the constraint matrix (Nzs). We also report the average time required to solve a decomposition (Time) and the index of dispersion (variance over mean) of both the average time (D(Time)) and the average bound (D(Bound)). Statistics for the few decompositions that could not be solved within a reasonable time (weeks), or could not be solved at all, are excluded.
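The index of dispersion used for D(Time) and D(Bound) is simply the variance divided by the mean of a set of observations. The following is a minimal sketch of that formula, not the authors' code; whether the paper uses the sample variance (denominator n − 1, as R's `var()` does) or the population variance is not stated, so the choice of the sample variance here is an assumption.

```python
from statistics import mean, variance


def index_of_dispersion(values):
    """Variance over mean of a list of observations (e.g. solution times
    of the decompositions of one base instance).

    Assumption: sample variance (n - 1 denominator); the text only says
    "variance over mean".
    """
    if len(values) < 2:
        raise ValueError("need at least two observations")
    m = mean(values)
    if m == 0:
        raise ValueError("index of dispersion is undefined for a zero mean")
    return variance(values) / m
```

For example, three hypothetical solution times of 10, 20 and 30 seconds have mean 20 and sample variance 100, giving an index of dispersion of 5; identical times give 0.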

Three further base instances (macrophage, harp2, opt1217) were initially considered, but were discarded during preprocessing.

A.2 Features of the dataset

For each base optimization problem instance the following features were measured:

number of variables

number of generic integer variables

number of binary variables

number of continuous variables

total number of constraints

number of equality constraints

number of inequality constraints
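The instance-level features above are plain counts over variable types and constraint senses. The sketch below illustrates them on a hypothetical in-memory representation (`MipInstance`, `var_type` and the type/sense labels are assumptions for illustration, not the paper's data structures); in particular, treating an integer variable with bounds exactly [0, 1] as binary, and every other integer variable as generic integer, is an assumed convention.

```python
from dataclasses import dataclass
from typing import List


def var_type(is_integer: bool, lb: float, ub: float) -> str:
    """Hypothetical classifier: 'B' binary, 'I' generic integer, 'C' continuous.
    Assumption: an integer variable with bounds exactly [0, 1] is binary."""
    if not is_integer:
        return "C"
    return "B" if lb == 0 and ub == 1 else "I"


@dataclass
class MipInstance:
    # Hypothetical minimal representation of a base instance:
    var_types: List[str]   # one of 'B', 'I', 'C' per variable
    con_senses: List[str]  # '=' for equalities, '<=' or '>=' for inequalities


def instance_features(m: MipInstance) -> dict:
    """Counts corresponding to the seven per-instance features listed above."""
    return {
        "n_vars": len(m.var_types),
        "n_int": m.var_types.count("I"),
        "n_bin": m.var_types.count("B"),
        "n_cont": m.var_types.count("C"),
        "n_cons": len(m.con_senses),
        "n_eq": m.con_senses.count("="),
        "n_ineq": sum(1 for s in m.con_senses if s != "="),
    }
```

Keeping binary and generic integer variables in separate counts mirrors the list above, where they are distinct features even though binaries are a special case of integers.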

For each decomposition we instead measured:

number of blocks

average, min, max, standard deviation of the number of variables in blocks

average, min, max, standard deviation of the number of generic integer variables in blocks

average, min, max, standard deviation of the number of binary variables in blocks

average, min, max, standard deviation of the number of continuous variables in blocks

average, min, max, standard deviation of the number of constraints in blocks

average, min, max, standard deviation of the density of blocks (fraction of nonzero coefficients)

average, min, max number of equality constraints in blocks

average, min, max number of inequality constraints in blocks

average, min, max, standard deviation of the mean right-hand side coefficients (rhs) of the constraints in blocks

average, min, max, standard deviation of rhs ranges (max rhs − min rhs) in blocks

average, min, max, standard deviation of block “shape” (number of variables divided by the number of constraints in each block)

average, min, max, standard deviation of “Total Unimodularity Coefficient” of blocks

average, min, max, standard deviation of mean objective function coefficients in each block

average, min, max, standard deviation of the objective function coefficients range (maximum coefficient − minimum coefficient) in each block

number of blocks with both positive and negative coefficients in the objective function

number of variables in the border

number of generic integer variables in the border

number of binary variables in the border

number of continuous variables in the border

number of constraints in the border

density of the border (fraction of nonzero coefficients)

number of equality constraints in the border

number of inequality constraints in the border

average, standard deviation and range of rhs in the border
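Several of the per-decomposition features are summary statistics over blocks plus simple counts on the border. The sketch below computes a subset of them (number of blocks; average, min, max and standard deviation of block variables, constraints, density and shape; border counts and density). The input format is hypothetical: each constraint and each variable is assumed to be labeled with a block index, or `None` for the border. The use of the population standard deviation, and measuring border density as nonzeros in the border rows over (border rows × all variables), are also assumptions rather than definitions taken from the paper.

```python
from dataclasses import dataclass
from statistics import mean, pstdev
from typing import Dict, List, Optional


@dataclass
class Decomposition:
    # Hypothetical input format (an assumption, not the paper's data structure):
    rows: List[Dict[int, float]]           # sparse matrix: row i -> {variable index: coefficient}
    constraint_block: List[Optional[int]]  # block of each constraint; None = border constraint
    var_block: List[Optional[int]]         # block of each variable; None = border variable


def summary(values: List[float]) -> Dict[str, float]:
    """Average, min, max and population standard deviation of a non-empty list."""
    return {"avg": mean(values), "min": min(values), "max": max(values), "std": pstdev(values)}


def decomposition_features(d: Decomposition) -> dict:
    """A subset of the per-decomposition features; assumes at least one block."""
    blocks = sorted({b for b in d.constraint_block if b is not None})
    n_vars, n_cons, density, shape = [], [], [], []
    for b in blocks:
        cons = [i for i, cb in enumerate(d.constraint_block) if cb == b]
        block_vars = {j for j, vb in enumerate(d.var_block) if vb == b}
        # Nonzeros of the block submatrix: block constraints restricted to block variables.
        nnz = sum(1 for i in cons for j, a in d.rows[i].items() if j in block_vars and a != 0)
        n_vars.append(len(block_vars))
        n_cons.append(len(cons))
        density.append(nnz / (len(cons) * len(block_vars)) if block_vars else 0.0)
        shape.append(len(block_vars) / len(cons))  # "shape": variables per constraint
    border_cons = [i for i, cb in enumerate(d.constraint_block) if cb is None]
    border_nnz = sum(1 for i in border_cons for a in d.rows[i].values() if a != 0)
    n_total_vars = len(d.var_block)
    return {
        "n_blocks": len(blocks),
        "vars": summary(n_vars),
        "constraints": summary(n_cons),
        "density": summary(density),
        "shape": summary(shape),
        "border_vars": sum(1 for vb in d.var_block if vb is None),
        "border_constraints": len(border_cons),
        "border_density": border_nnz / (len(border_cons) * n_total_vars) if border_cons else 0.0,
    }
```

The sketch does not check that the labels are consistent (e.g. that a block variable never appears in another block's constraints); a real pipeline would validate the decomposition before extracting features.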

About this article

Cite this article

Basso, S., Ceselli, A. & Tettamanzi, A. Random sampling and machine learning to understand good decompositions. Ann Oper Res 284, 501–526 (2020). https://doi.org/10.1007/s10479-018-3067-9

DOI: https://doi.org/10.1007/s10479-018-3067-9