Abstract

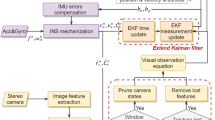

In a GPS-denied environment, even the combination of GPS and an inertial navigation system (INS) cannot provide location reliably and accurately. We propose a new denoised stereo visual odometry (VO)/INS/GPS integration system for autonomous navigation based on tightly coupled fusion. The system can estimate the vehicle's location in either GPS-denied or low-texture environments. Because the drift error of the inertial measurement unit (IMU) has random-walk characteristics, the state errors grow with time, and correcting them requires continuous observation updates. For this purpose, the system state vector is augmented with features extracted from a stereo camera, and the updates use measurements of these features from consecutive frames together with GPS-derived information. Moreover, we apply the discrete wavelet transform (DWT) before data fusion to improve the signal-to-noise ratio (SNR) of the inertial sensor measurements, attenuating high-frequency noise while preserving significant information such as vehicle motion. To verify the performance of the proposed method, we use four flight benchmark datasets with top speeds of 5 m/s, 10 m/s, 15 m/s, and 17.5 m/s, collected over an airport runway by a quadrotor. The results demonstrate that the proposed VO/INS/GPS navigation system performs better and is more stable than the VO/INS and GPS/INS methods in either GPS-denied or low-texture environments, outperforming them by approximately 66% and 54%, respectively.
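To make the DWT pre-filtering step concrete, the following is a minimal sketch of wavelet denoising applied to a synthetic inertial-like signal. The abstract does not state the wavelet family, the decomposition depth, or the thresholding rule, so the Haar wavelet, three levels, and the universal soft threshold used here are assumptions chosen for illustration, and all function names are hypothetical.

```python
import math
import random
import statistics

_S = 1.0 / math.sqrt(2.0)  # orthonormal Haar scaling factor


def haar_dwt(signal):
    """One level of the orthonormal Haar DWT (input length must be even)."""
    pairs = range(0, len(signal), 2)
    approx = [(signal[i] + signal[i + 1]) * _S for i in pairs]
    detail = [(signal[i] - signal[i + 1]) * _S for i in pairs]
    return approx, detail


def haar_idwt(approx, detail):
    """Inverse of haar_dwt: interleave the reconstructed sample pairs."""
    out = []
    for a, d in zip(approx, detail):
        out.append((a + d) * _S)
        out.append((a - d) * _S)
    return out


def soft_threshold(coeffs, thr):
    """Shrink each coefficient toward zero by thr (soft thresholding)."""
    return [math.copysign(max(abs(c) - thr, 0.0), c) for c in coeffs]


def dwt_denoise(signal, levels=3):
    """Denoise by thresholding Haar detail coefficients.

    Assumption: len(signal) is divisible by 2**levels.
    """
    if len(signal) % (2 ** levels) != 0:
        raise ValueError("signal length must be divisible by 2**levels")
    approx, details = list(signal), []
    for _ in range(levels):
        approx, detail = haar_dwt(approx)
        details.append(detail)
    # Noise level estimated from the finest details (median absolute deviation).
    sigma = statistics.median(abs(d) for d in details[0]) / 0.6745
    thr = sigma * math.sqrt(2.0 * math.log(len(signal)))  # universal threshold
    for detail in reversed(details):
        approx = haar_idwt(approx, soft_threshold(detail, thr))
    return approx


def rmse(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


# Synthetic example: slow "vehicle motion" plus high-frequency sensor noise.
random.seed(0)
n = 256
clean = [math.sin(2.0 * math.pi * i / n) for i in range(n)]
noisy = [c + random.gauss(0.0, 0.2) for c in clean]
denoised = dwt_denoise(noisy, levels=3)
rmse_noisy = rmse(noisy, clean)
rmse_denoised = rmse(denoised, clean)
print(f"RMSE before: {rmse_noisy:.3f}, after: {rmse_denoised:.3f}")
```

The slow component survives in the approximation coefficients, while most of the noise sits in the detail coefficients and is shrunk away. A smoother wavelet would track the motion with less blockiness than Haar, at the cost of a longer filter.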

Data availability

The datasets are publicly available at: https://github.com/KumarRobotics/msckf_vio/wiki/Dataset.

Appendices

Appendix 1

Rotational matrices

This appendix gives the rotation matrices used in the proposed system process model. The matrices \({C}_{B}^{W}\left({\lambda }_{\mathrm{WB}}\left(t\right)\right)\) and \(R({\lambda }_{\mathrm{WB}}\left(t\right))\) in (6) and (7) are as follows:

where \({\varphi },\uptheta ,\) and \(\uppsi\) are the roll, pitch, and yaw angles, respectively.
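For reference, under the widely used Z-Y-X (yaw-pitch-roll) Euler convention, the body-to-world rotation matrix and the matrix mapping body angular rates to Euler-angle rates take the standard forms below. Both the convention and the ordering \({\lambda }_{\mathrm{WB}}={[{\varphi },\uptheta ,\uppsi ]}^{T}\) are assumptions made here for illustration, and the notation may differ in detail from that of (6) and (7).

\[
{C}_{B}^{W}\left({\lambda }_{\mathrm{WB}}\right)=
\begin{bmatrix}
\cos\uptheta\cos\uppsi & \sin{\varphi}\sin\uptheta\cos\uppsi-\cos{\varphi}\sin\uppsi & \cos{\varphi}\sin\uptheta\cos\uppsi+\sin{\varphi}\sin\uppsi\\
\cos\uptheta\sin\uppsi & \sin{\varphi}\sin\uptheta\sin\uppsi+\cos{\varphi}\cos\uppsi & \cos{\varphi}\sin\uptheta\sin\uppsi-\sin{\varphi}\cos\uppsi\\
-\sin\uptheta & \sin{\varphi}\cos\uptheta & \cos{\varphi}\cos\uptheta
\end{bmatrix}
\]

\[
R\left({\lambda }_{\mathrm{WB}}\right)=
\begin{bmatrix}
1 & \sin{\varphi}\tan\uptheta & \cos{\varphi}\tan\uptheta\\
0 & \cos{\varphi} & -\sin{\varphi}\\
0 & \sin{\varphi}/\cos\uptheta & \cos{\varphi}/\cos\uptheta
\end{bmatrix}
\]

In this form \(R\) is singular at \(\uptheta =\pm 90^{\circ }\), where \(\cos\uptheta =0\).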

Appendix 2

Jacobian matrices

This appendix gives the Jacobian matrices of the proposed continuous-time system model. The matrix \({F}_{sys}\left(t\right)\) in (16) is given by:

Meanwhile,

and

The \({B}_{sys}\left(t\right)\) matrix in (16) can be calculated by:

Finally, the process noise matrix \({G}_{sys}\left(t\right)\) in (16) is given by:

and

where the value of \(i\) depends on the number of features (\(\mathrm{N}\)) detected during the initialization phase. Also, \(\times\) in (41) denotes the cross product.
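Analytic Jacobians such as \({F}_{sys}\left(t\right)\) are easy to get wrong by a sign or an index, and a common sanity check is to compare them against central finite differences. The sketch below is illustrative only. The helper names are hypothetical, and a toy function stands in for the paper's process model: a cross product, whose Jacobian with respect to its second argument is the skew-symmetric matrix of the first.

```python
def skew(v):
    """Skew-symmetric matrix S such that S @ w equals the cross product v x w."""
    x, y, z = v
    return [[0.0, -z, y],
            [z, 0.0, -x],
            [-y, x, 0.0]]


def cross(a, b):
    """Cross product of two 3-vectors."""
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def numerical_jacobian(f, x, eps=1e-6):
    """Central-difference Jacobian of f: R^n -> R^m at x (m x n nested list)."""
    m, n = len(f(x)), len(x)
    jac = [[0.0] * n for _ in range(m)]
    for j in range(n):
        x_plus, x_minus = list(x), list(x)
        x_plus[j] += eps
        x_minus[j] -= eps
        f_plus, f_minus = f(x_plus), f(x_minus)
        for i in range(m):
            jac[i][j] = (f_plus[i] - f_minus[i]) / (2.0 * eps)
    return jac


# Toy check: the Jacobian of w -> a x w should equal skew(a).
a = [0.3, -1.2, 2.0]
J = numerical_jacobian(lambda w: cross(a, w), [0.5, 0.1, -0.7])
S = skew(a)
max_err = max(abs(J[i][j] - S[i][j]) for i in range(3) for j in range(3))
print(f"max |numerical - analytic| = {max_err:.2e}")
```

The same comparison can be applied block by block to a hand-derived state-transition Jacobian by passing the continuous-time dynamics as `f`.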

About this article

Cite this article

Nezhadshahbodaghi, M., Mosavi, M.R. & Hajialinajar, M.T. Fusing denoised stereo visual odometry, INS and GPS measurements for autonomous navigation in a tightly coupled approach. GPS Solut 25, 47 (2021). https://doi.org/10.1007/s10291-021-01084-4

DOI: https://doi.org/10.1007/s10291-021-01084-4