Abstract

Uncertainty has been the perceived Achilles heel of the radiology report since the inception of the free-text report. As a measure of diagnostic confidence (or lack thereof), uncertainty in reporting has the potential to lead to diagnostic errors, delayed clinical decision making, increased cost of healthcare delivery, and adverse outcomes. Recent developments in data mining technologies, such as natural language processing (NLP), have provided the medical informatics community with an opportunity to quantify report concepts, such as uncertainty. The challenge ahead lies in taking the next step from quantification to understanding, which requires combining standardized report content, data mining, and artificial intelligence, thereby creating Knowledge Discovery Databases (KDD). The development of this database technology will expand our ability to record, track, and analyze report data, along with the potential to create data-driven and automated decision support technologies at the point of care. For the radiologist community, this could improve report content by providing an objective and thorough understanding of uncertainty, identifying its causative factors, and supplying data-driven analysis for enhanced diagnosis and clinical outcomes.

Introduction

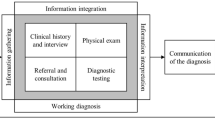

A number of deficiencies exist in current reporting strategies, which were discussed in the first article of this reporting series.1 These reporting deficiencies can be characterized according to content, communication, analysis, and organization. Content refers to the facts and observations the radiologist has identified in accordance with the imaging data acquired. Communication refers to the manner in which this contextual data is presented, so that accurate and timely clinical action can be initiated to optimize clinical outcomes. Analysis is the method by which the data is interpreted, and encompasses the combination of imaging, technical, and clinical data. The goal is to consider these disparate data elements in combination, so as to arrive at a reproducible and factual conclusion. Organization/structure refers to the manner in which this report content is created, with the hope that a clear and reproducible message will be gleaned by the reader, regardless of educational, training, and occupational variability.

As medical practice has become increasingly dependent upon imaging for clinical diagnosis, surveillance, and treatment, optimizing imaging report data has become increasingly critical.2,3 Primary care physicians at the point of care rely on accurate, timely, and unequivocal imaging reports to guide clinical decision making, and expect report deliverables to conform to established best practice standards. The reality, however, is far from the ideal, leaving many clinical practitioners with some degree of uncertainty and lack of confidence in their subsequent clinical actions. This has the potential to produce a number of negative “downstream” ramifications including delayed diagnosis and treatment, increased cost of care, and adverse clinical actions. The challenge ahead for medical imaging providers is to critically and objectively analyze report deficiencies, develop community-wide standards in conjunction with evidence-based medicine (EBM), utilize technology to automate and refine data assimilation and analysis, and integrate clinical and imaging data into reporting. The ultimate goal is the creation of a dynamic and intuitive system which can provide real-time decision support, education and training, and outcomes analysis. The only practical way to accomplish these goals is through report data mining, which is the subject of this manuscript.

Understanding Uncertainty in Reporting

In addition to the four aforementioned categories of report deficiencies, we must consider the underlying report requirements of compliance with standards, diagnostic confidence, clarity, and reproducibility. In effect, a report should be able to withstand the test of time. If one were to review imaging reports retrospectively, one should be able to clearly and unequivocally understand the data being presented and clinical recommendations being made. If this report data is presented in a structured manner, the ability to analyze this data (both retrospectively and prospectively) provides a mechanism for research, education, and new technology development.

One of the longstanding and well-reported deficiencies of radiology reporting is equivocation and uncertainty, with the “hedge” often described as the official plant of the radiology community.4,5 Uncertainty has been described as “a state of having limited knowledge where it is impossible to exactly describe existing state or future outcome”.6

This definition brings to light two critical concepts within medical reporting: knowledge and outcomes. While it is often stated that knowledge is power (Sir Francis Bacon, Religious Meditations, Of Heresies, 1597), knowledge in a vacuum is of limited practical value in medicine. Knowledge in the context of improving clinical outcomes is, however, of extreme value, and can be thought of as the ultimate goal of all medical practitioners and the reports they create. How, then, do we go about accomplishing this task?

In order to better understand knowledge, we must first understand uncertainty, which we will equate to a lack of diagnostic confidence. There are a number of causes for uncertainty (or lack of diagnostic confidence) in medicine, as listed in Table 1. These can be illustrated by taking a practical example from the radiology reporting repertoire: the abdominal CT performed in the evaluation of appendicitis. Technical, medico-legal, psychological, anatomical, and clinical factors can all come into play when analyzing the introduction of uncertainty into such a report. From a technical standpoint, the acquisition device, acquisition parameters, protocol selection, image processing, and contrast administration all have the potential to adversely affect contrast and spatial resolution, which in turn limits the diagnostic confidence of the interpreting radiologist. From an anatomic standpoint, anatomic variation, patient body habitus, and volume averaging artifact may adversely affect the radiologist’s confidence in providing a definitive diagnosis. Clinical data related to medical/surgical history, laboratory data, and physical exam findings also play an important role in diagnostic confidence and often lead to the infamous (and ubiquitous) statement, “clinical correlation recommended”. Psychological and medico-legal factors often relate to the personality and prior experience of the radiologist. A radiologist who has passive personality traits or a prior history of medical malpractice, or who is anxious or tired at the time of interpretation, may opt to “hedge” his/her bets through equivocal report language (e.g., cannot exclude, should be considered). Regardless of the factors at play in creating report uncertainty, it is often a source of contention between the report author (e.g., radiologist) and reader (e.g., clinician). Clinicians often regard radiology reports devoid of uncertainty as superior to those with equivocation. But does report uncertainty adversely affect clinical outcomes? Are there situations where the introduction of uncertainty actually improves outcomes? To answer these questions, we should look outside of radiology and better understand the potential pros and cons of report uncertainty.

Uncertainty has been well studied in medical laboratory testing, in which uncertainty of measurement has been described as the doubt associated with the trueness of a medical laboratory result.7 Uncertainty of measurement ultimately gives a clinician a better understanding of the clinical significance of the reported value. By looking at uncertainty in this manner, one could theorize that uncertainty can be both “positive” and “negative” in perception. The negative perception of uncertainty relates to the idea that uncertainty reflects a lack of conviction or understanding on the part of the reporting individual. The positive perception of uncertainty conveys the idea that not all reported observations are real (or true), but in actuality may represent an erroneous observation. As such, the uncertainty alerts the reader that additional data is required before acceptance of the data and initiation of clinical action. In the context of laboratory reporting, this can take the form of a technical error, resulting in the creation of a spurious finding (i.e., abnormal test result). Before committing to clinical action (e.g., pharmaceutical administration), the physician must validate the abnormal test result, in order to avoid misappropriated clinical therapy and a potential adverse action.

If one applies this concept of uncertainty to the medical imaging domain (i.e., doubt associated with the trueness of a medical imaging finding), both positive and negative perceptions of uncertainty can be realized. Negative perceptions are commonly derived from the radiologist who introduces language aimed at transferring responsibility to the referring physician (e.g., “clinical correlation recommended”, “cannot exclude malignancy”). Positive perceptions may be derived when a radiologist attempts to alert the reader that an observed imaging finding may not necessarily be pathologic in nature. Examples include anatomic variations, technical deficiencies, and artifacts, which can be equated to the errant laboratory test result due to technical limitations. The end result is that uncertainty is inevitable in medical reporting. The key to the successful management of uncertainty is to reliably differentiate between “good” and “bad” uncertainty, which can be facilitated through data mining.

Quantifying Uncertainty through Data Mining

An example of how report uncertainty can be objectively analyzed through data mining can be illustrated in the analysis of mammography reports.8 A multi-institutional mammography database consisting of 141,787 reports was analyzed, using natural language processing (NLP) software, which was externally validated through a manual report audit. The frequency of uncertainty in mammography reporting was subsequently analyzed in order to better understand the context in which uncertainty is reported.

Uncertainty was recorded in 19.2% of diagnostic mammography reports, versus 2.8% of screening mammography reports. When correlating with BIRADS codes (which are an indirect measure of report clinical significance), terms of uncertainty were identified in the following order of frequency (p < 0.001):

1. 11.0% of all reports containing findings of indeterminate clinical significance (BIRADS 0 and 3).
2. 10.5% of all reports containing findings of high clinical significance (BIRADS 4 and 5).
3. 4.3% of all reports containing findings of low clinical significance (BIRADS 2).

When analyzing the context in which uncertainty is recorded, it was determined that the type of finding plays an important role in reporting uncertainty (p < 0.01). Those findings with the highest frequency of associated uncertainty included mass (46.0% uncertainty), calcifications (37.1% uncertainty), and asymmetric density (13.4% uncertainty).
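As a rough illustration of how the frequency of uncertainty might be tallied per report category, the sketch below flags reports containing hedge phrases and computes the fraction flagged in each group. It is not the NLP software used in the cited study; the phrase list, the function names, and the sample reports are all hypothetical, and a validated lexicon would be far larger.

```python
import re
from collections import Counter

# Hypothetical hedge phrases, chosen for illustration only.
HEDGE_PATTERNS = [
    r"cannot (?:be )?excluded?",
    r"should be considered",
    r"clinical correlation (?:is )?recommended",
    r"possibl[ey]",
    r"may represent",
]
HEDGE_RE = re.compile("|".join(HEDGE_PATTERNS), re.IGNORECASE)


def contains_uncertainty(report_text):
    """Return True if any hedge phrase appears in the report text."""
    return HEDGE_RE.search(report_text) is not None


def uncertainty_rate_by_group(reports):
    """reports: iterable of (group_label, report_text) pairs.

    Returns {group_label: fraction of reports containing a hedge phrase}.
    """
    totals, hedged = Counter(), Counter()
    for group, text in reports:
        totals[group] += 1
        if contains_uncertainty(text):
            hedged[group] += 1
    return {group: hedged[group] / totals[group] for group in totals}


# Made-up sample reports, grouped by BIRADS category.
sample = [
    ("BIRADS 0/3", "Focal asymmetry, cannot exclude underlying mass."),
    ("BIRADS 0/3", "Probably benign calcifications."),
    ("BIRADS 2", "Benign-appearing calcifications."),
    ("BIRADS 2", "Stable benign findings."),
]
rates = uncertainty_rate_by_group(sample)
```

Simple phrase matching of this kind only counts occurrences; it does not establish whether a given hedge is warranted.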

While these data provide several interesting insights as to how and when uncertainty is introduced in radiology reporting, they open the door to many unanswered questions, which are currently the subject of ongoing analyses:

1. What impact does individual radiologist education and training have on report uncertainty?
2. To what extent is uncertainty dependent upon institutional and/or geographic variations?
3. What role does technology play in predicting (and counteracting) report uncertainty?
4. How does uncertainty translate into clinical outcomes (e.g., positive and negative predictive values)?
5. What role do specific imaging characteristics (e.g., size, morphology) play in report uncertainty?

Data Mining Today and Knowledge Discovery Tomorrow

The only reliable manner in which these questions can be accurately addressed is through large-scale data mining. Unfortunately, NLP is limited in its ability to analyze conventional free-text radiology reports, which are replete with real world ambiguity, language complexity, and inferencing. The same uncertainty which has long been the anathema of radiology reporting is also one of the primary detractors for functional data mining using NLP. In short, NLP is capable of processing report data, but not necessarily understanding it.9

There is an urgent need for development of a new generation of computational tools to assist end-users in the extraction of useful data from the rapidly expanding volumes of digital data in medicine (which is textual, numerical, and graphical). Historically, the concept of identifying useful patterns within large datasets has been referred to as data mining, which has been largely restricted to statistical analysis. The preferred concept is that of knowledge discovery in databases (KDD), which refers to the use of artificial intelligence techniques to discover useful knowledge from data, through a multi-step process (Table 2).10 Data mining represents one step in the overall KDD process, using specific algorithms for extracting patterns from data. KDD, on the other hand, provides additional and more comprehensive steps in the analytical process including data preparation, data selection, data cleaning, incorporation of context-specific pre-existing knowledge, and proper interpretation of the results derived through data mining.

One of the most important derived benefits of KDD (as opposed to statistical data mining alone) is the mapping of low-level data into other forms which may be more compact, more abstract, or more useful. The unifying goal of KDD is the extraction of high-level knowledge from low-level data, in the context of large datasets.11
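The multi-step character of KDD can be sketched as a chain of small functions, with data mining as only one link. The step names follow the description above, but the record fields (`modality`, `finding`, `birads`, `hedged`), the "other" category, and the `min_support` threshold are assumptions made for illustration, not part of any published KDD specification.

```python
from collections import Counter


def select(records, modality):
    """Data selection: keep only records for the modality of interest."""
    return [r for r in records if r.get("modality") == modality]


def clean(records):
    """Data cleaning: drop records missing a finding or a BIRADS code."""
    return [r for r in records if r.get("finding") and r.get("birads") is not None]


def transform(records):
    """Data preparation: map each BIRADS code to a more abstract category."""
    def significance(birads):
        if birads in (0, 3):
            return "indeterminate"
        if birads in (4, 5):
            return "high"
        if birads == 2:
            return "low"
        return "other"  # codes the text does not classify (assumption)
    return [{**r, "significance": significance(r["birads"])} for r in records]


def mine(records):
    """Data mining: count hedged reports per (finding, significance) pair."""
    return Counter(
        (r["finding"], r["significance"]) for r in records if r.get("hedged")
    )


def interpret(pattern_counts, min_support=2):
    """Interpretation: keep only patterns seen at least min_support times."""
    return {p: n for p, n in pattern_counts.items() if n >= min_support}


def run_pipeline(records, modality="mammography", min_support=2):
    """Run selection, cleaning, preparation, mining, and interpretation in order."""
    prepared = transform(clean(select(records, modality)))
    return interpret(mine(prepared), min_support)
```

The `transform` step is where low-level data (a numeric code) is mapped into a more abstract form (a significance category), which is the benefit of KDD highlighted above.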

In order to illustrate the potential utility of data mining and KDD within medicine, we will again use the example of mammography data analysis in the diagnosis of breast cancer. The multitude of potential data points for analysis is listed in Table 3, in conjunction with the various stakeholders, technologies, and clinical parameters of interest.

On the most superficial level, we can evaluate radiologist interpretation accuracy, as currently mandated by the Mammography Quality Standards Act (MQSA). In these analyses, each radiologist interpreting mammograms must have periodic calculation of positive and negative predictive values, which are analyzed and cross-referenced with local and national peer statistics. Suppose, for example, one or more radiologists within an imaging department are shown to have interpretation scores outside of the norm (e.g., higher than normal number of “missed” breast cancer cases). In order to ascertain the potential contributing factors, a number of radiologist-specific data are selected for analysis. These may include the radiologist’s education (e.g., general versus sub-specialty trained), continuing education, and exam volume. The working hypothesis would presume that radiologists with advanced training, higher mammography exam volumes, and frequent continuing medical education (CME) would tend to demonstrate the highest performance metrics (as determined by positive and negative predictive values).
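The predictive values referred to here follow the standard definitions: positive predictive value is true positives divided by all positive calls, and negative predictive value is true negatives divided by all negative calls. The sketch below computes both per reader and flags readers whose negative predictive value falls below a peer benchmark; the `ReaderCounts` structure, the `below_peer_npv` helper, and the tolerance value are hypothetical and are not drawn from MQSA.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class ReaderCounts:
    true_pos: int
    false_pos: int
    true_neg: int
    false_neg: int


def ppv(c: ReaderCounts) -> Optional[float]:
    """Positive predictive value: TP / (TP + FP), or None if no positive calls."""
    called_positive = c.true_pos + c.false_pos
    return c.true_pos / called_positive if called_positive else None


def npv(c: ReaderCounts) -> Optional[float]:
    """Negative predictive value: TN / (TN + FN), or None if no negative calls."""
    called_negative = c.true_neg + c.false_neg
    return c.true_neg / called_negative if called_negative else None


def below_peer_npv(readers: Dict[str, ReaderCounts], peer_npv: float,
                   tolerance: float = 0.01) -> List[str]:
    """Names of readers whose NPV is more than `tolerance` below the peer value."""
    flagged = []
    for name, counts in readers.items():
        value = npv(counts)
        if value is not None and value < peer_npv - tolerance:
            flagged.append(name)
    return sorted(flagged)
```

A reader with an unusually high number of “missed” cancers has a high false-negative count, which lowers the negative predictive value and would surface in `below_peer_npv`.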

These analyses could readily be performed using data mining techniques, assuming the requisite data is available in a reliable and standardized format. While this data would not be expected to be included in a traditional radiology report database, it could be available in an administrative radiology database, which could in turn be cross-referenced with the pertinent radiology report database.

An additional analysis aimed at analyzing radiologist report accuracy could utilize standardized data within the report database to ascertain which specific report findings have the greatest impact on individual radiologist report accuracy. As an example, a radiologist may have low diagnostic accuracy measures for one finding (e.g., micro-calcifications), as opposed to another finding (e.g., mass), in which his/her accuracy measures exceed those of his/her peers. In this scenario, analysis of the report database can be used to identify technology and educational interventions to improve performance. In addition to focusing CME programs on evaluation of micro-calcifications, the radiologist may also elect to integrate computer-aided detection (CAD) software into his/her mammography interpretation workflow. An additional level of data-driven analysis can further target improved performance by identifying specific morphologic and distribution characteristics (e.g., pleomorphic) of micro-calcifications which tend to pose the greatest interpretation challenge for the radiologist. If the CAD raw data were made available, the radiologist could evaluate competing CAD vendors to assess the sensitivity/specificity of each software program, as it specifically relates to diagnostic assessment of pleomorphic micro-calcifications. The report database can go one step further and provide a pathology-proven image database, which can be queried according to finding (e.g., micro-calcifications), pathology (e.g., ductal carcinoma in situ [DCIS]), morphology (e.g., pleomorphic), and BIRADS code (e.g., BIRADS 4). In this manner, the report database has the potential to serve several complementary roles: as a facilitator of targeted education and training, data-driven technology selection, and user-specific outcomes analysis.
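A query of the kind described, filtering a pathology-proven case collection by finding, pathology, morphology, and BIRADS code, might look like the sketch below. It uses an in-memory SQLite table purely for illustration; the `cases` schema, the `find_cases` helper, and the four demo rows are invented here and do not describe any existing database.

```python
import sqlite3


def build_demo_db():
    """Create an in-memory table holding a few made-up, pathology-proven cases."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE cases ("
        "case_id INTEGER PRIMARY KEY, finding TEXT, morphology TEXT, "
        "pathology TEXT, birads INTEGER)"
    )
    conn.executemany(
        "INSERT INTO cases VALUES (?, ?, ?, ?, ?)",
        [
            (1, "micro-calcifications", "pleomorphic", "DCIS", 4),
            (2, "micro-calcifications", "punctate", "benign", 2),
            (3, "mass", "spiculated", "invasive ductal carcinoma", 5),
            (4, "micro-calcifications", "pleomorphic", "DCIS", 4),
        ],
    )
    return conn


def find_cases(conn, finding, pathology, morphology, birads):
    """Return case IDs matching all four criteria, in ascending order."""
    rows = conn.execute(
        "SELECT case_id FROM cases "
        "WHERE finding = ? AND pathology = ? AND morphology = ? AND birads = ? "
        "ORDER BY case_id",
        (finding, pathology, morphology, birads),
    ).fetchall()
    return [row[0] for row in rows]
```

Such a query depends on findings, morphology, and pathology being recorded as standardized fields rather than free text.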

As previously mentioned, many of the data contained within Table 3 would not be expected to be contained within the report database, but nonetheless have the potential to play an important role in data analysis. As an example, if one were to try to identify contributing factors to explain one imaging department’s clinical performance in breast cancer detection through screening mammography, one would undoubtedly want to know what role image quality may play. In order to assess image quality, a number of disparate data elements must be considered relating to quality assurance, image acquisition, and image processing. One could not reliably analyze these data without analyzing the impact technology and individual stakeholders have in contributing to overall image quality. Suppose, for example, one of the mammography acquisition devices provides lower contrast and/or spatial resolution than a competing unit within the same department. Alternatively, different levels of technologist education and training could have an effect on QA assessment, where departmental workflow calls for the technologist performing each study to also provide QA assessment of the images obtained. Lastly, we may identify that image quality variability is most affected in patients with large and dense breasts. One can easily see that the potential number of confounding variables grows exponentially as we increase the breadth and depth of data collection and analysis.

Creating Knowledge Infrastructure

In order to optimize uncertainty in reporting, we must accomplish the following:

1. Understand its use (when, where, why, and by whom).
2. Categorize in an objective and hierarchical method (degree, good vs. bad).
3. Develop data mining technology to record, track, and analyze.
4. Correlate with clinical outcomes.
5. Provide point-of-care feedback for real-time decision support.

Uncertainty in radiology reporting can be categorized according to the clinical context in which it is manifested. Uncertainty can arise during visual inspection of the imaging dataset, in determining the validity of an observation. In viewing a mammographic image, is the perceived abnormality real or artifactual (e.g., summation artifact)? The next item for consideration is whether the observed finding is normal or pathologic (e.g., anatomic variant). The third consideration is determination of the clinical significance of the finding (e.g., diagnosis).

In order to reliably answer these questions, the radiologist can turn to the knowledge database for assistance. This database would consist of technical, imaging, and clinical data directly attributable to the examination being interpreted.

The clinical database would include the following:

1. Historical patient medical and surgical data.
2. Clinical indication prompting the ordered exam.
3. Supporting laboratory and pathology data.
4. Molecular data, providing genetic predisposition to disease states.
5. Physical exam findings.
6. Clinical test data.
7. Pharmacology.

The next database available for decision support would be the imaging database, which would include the following:

1. Anatomic–pathologic correlation (e.g., specific disease entities based upon anatomic localization).
2. Image-centric decision support (e.g., morphologic/textural analysis, computer-aided diagnosis).
3. Atlas of anatomic variants.
4. Historical imaging data/reports.
5. Pathology-specific electronic teaching files.
6. Appropriateness criteria.

The third database would be technical and include the following data:

1. Image quality assessment (e.g., motion, positioning, contrast/spatial resolution)
2. Anatomy and disease specific image processing
3. Protocol optimization (e.g., acquisition parameters, collimation)
4. Noise reduction filters
5. Quality control (e.g., monitors, acquisition device)
6. Contrast optimization

By leveraging these databases, the interpreting radiologist could objectively determine whether the clinical and imaging data submitted for interpretation are sufficient, what specific limiting factors exist (and to what degree), and what specific tools can be used to improve diagnostic confidence. The database might even have the capability of mathematically calculating a diagnostic confidence score by correlating the observed metrics from the study being interpreted with reference exam data contained in the database. In this manner, a radiologist could objectively determine the degree of uncertainty (i.e., diagnostic confidence) based upon available data and incorporate this into the report. In the event that the recorded uncertainty differs from the calculated uncertainty, the radiologist would be alerted to the discrepancy and could elect to modify the report accordingly.
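One way such a score and discrepancy alert could work is sketched below, under strong simplifying assumptions: each contributing factor is already normalized to a value between 0 and 1, the score is a fixed weighted mean, and the radiologist's recorded confidence is on the same scale. The factor names, weights, and threshold are hypothetical; the text does not specify how the calculation would be performed.

```python
# Hypothetical factor weights (they sum to 1.0); a real system would have to
# derive these from outcome data rather than fix them by hand.
WEIGHTS = {"image_quality": 0.4, "clinical_data": 0.4, "prior_imaging": 0.2}


def calculated_confidence(metrics):
    """Weighted mean of factor scores, each expected to lie in [0, 1]."""
    return sum(WEIGHTS[name] * metrics[name] for name in WEIGHTS)


def discrepancy_alert(recorded_confidence, metrics, threshold=0.25):
    """Compare the recorded confidence with the calculated one.

    Returns (alert, calculated), where alert is True when the two values
    differ by more than `threshold`.
    """
    calculated = calculated_confidence(metrics)
    return abs(recorded_confidence - calculated) > threshold, calculated
```

For a study with moderate image quality, no supporting clinical data, and complete prior imaging, the calculated score would be low, so a report worded with high confidence would trigger the alert.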

Here is a hypothetical example as to how the Knowledge Database would be of use in the interpretation of a radiology exam, determination of uncertainty, and clinical care decision making. The case involves a patient seen in the emergency room for an acute neurologic deficit, suspicious for stroke. The sequence of events is as follows:

1. The emergency room (ER) physician places an order for a non-contrast head CT, with the clinical indication “rule out stroke”.
2. The patient is transported to the radiology department, where he/she undergoes the requested head CT, with the images compromised by motion artifact.
3. The radiologist interpreting the CT imaging study notices a subtle poorly defined area of decreased attenuation in the left parietal lobe, and reports as follows: “subtle, poorly defined hypodensity left parietal lobe, cannot exclude acute ischemic infarct….follow-up MRI is recommended, as clinically indicated”
4. The ER physician reads the report and elects to consult a neurologist before ordering additional imaging or clinical tests.
5. Because the neurologist is in his/her office and unable to examine the patient immediately, he/she asks the ER physician to order the recommended MRI.
6. The patient is subsequently transported back to the radiology department and undergoes an MRI of the brain with diffusion imaging, to evaluate for stroke.
7. The radiologist reviews the MRI images in conjunction with the earlier CT exam and determines that the area of prior concern (left parietal lobe) is not reproduced on MRI. He/she does, however, identify two abnormal areas within the right frontal and left occipital lobes on the diffusion images and reports as follows: “two areas of abnormal signal intensity are identified within the right frontal and left occipital lobes, suggestive of acute ischemic events. The multi-vascular distribution of these infarcts would be suspicious for embolic disease, correlation with MR or CT angiography is recommended.”
8. Upon return to the ER, the nurse notes that the patient’s clinical status has deteriorated, and places the patient on cardiac monitoring.
9. The neurologist arrives and conducts a bedside neurologic exam, discovering that the patient has a profound weakness on his/her left side along with a visual field deficit. While examining the patient he/she also sees that the cardiac rate and rhythm are abnormal and suggests a cardiology consultation. His/her additional request for MR angiography is placed on hold, due to the patient’s declining status.
10. When the cardiologist arrives, he/she learns (from an old hospital discharge summary) that the patient has a pre-existing diagnosis of atrial fibrillation, which was treated with medication to control the heart rate. This is confirmed by the cardiologist on physical exam and review of the cardiac monitor tracing.
11. The cardiologist performs a portable bedside echocardiogram and learns that the patient has a thrombus in the left atrium, in association with the atrial fibrillation.
12. Definitive diagnosis is now established (i.e., embolic stroke), and appropriate treatment is begun.

In reviewing the sequence of events, several observations can be made with regards to the deficient access, ordering, communication, interpretation, and analysis of data.

1. There was deficient clinical data provided to facilitate CT and MRI interpretation. Physical exam findings, focality and duration of symptoms, and the pre-existing cardiac history would have significantly altered report content.
2. The CT exam initially ordered by the ER physician was not the optimum imaging study to evaluate acute stroke, and resulted in delayed diagnosis.
3. The equivocation of the radiologist in both the CT and MRI reports was in part due to the lack of supporting clinical data and limitations in image quality (i.e., motion).
4. The failure to engage in direct communication between the radiologist and clinician further exacerbated the errors and delays in diagnosis and treatment.
5. Multiple consultations were ordered prior to a complete and thorough review of the data, which led to a diffusion of clinical responsibility and lack of accountability.

In the interpretation of any imaging exam, supporting clinical data is of great importance in determining whether a given imaging abnormality has clinical relevance. For stroke evaluation, the following data may be of value to the interpreting radiologist:

1. Clinical indication: the exact nature and duration of symptoms.
2. Physical exam findings: deficits on neurologic exam which assist in anatomic localization.
3. Clinical test data: ancillary test data to improve diagnostic confidence (e.g., transcranial Doppler, echocardiography).
4. Laboratory data: lab tests which help define stroke risk (e.g., cholesterol profile).
5. Molecular data: genetic/proteomic data defining patient’s genetic predisposition to disease.
6. Pharmacology: current drugs which may influence stroke risk or alter therapeutic options.
7. Past medical/surgical history: prior history of ischemic events (e.g., TIAs).
8. Lifestyle: factors related to lifestyle altering stroke risk (e.g., exercise, obesity, smoking, stress).

The greater the availability of supporting clinical data at the time of image interpretation, the greater the degree of diagnostic confidence (i.e., certainty), which will in turn impact the success and timeliness of treatment. Multiple studies have shown that diagnostic accuracy for stroke improves in the presence of supportive clinical data.12–14 At the same time, optimization of the imaging data also plays a significant role in report optimization and improved diagnostic confidence.15,16 Exam selection, image quality, protocol refinement, image processing, and use of decision support technologies all play a potential role. The key is to develop supporting technologies which can record, track, and analyze image data in order to provide assistance to the technologist and radiologist in real time.

Existing computerized physician order entry (CPOE) systems can assist clinicians in order entry, define the optimal imaging exam based upon clinical context, and track utilization data.17,18 This can in effect create an important accountability measure at the first step in the imaging process. At the time of order entry, the clinician can be required to provide context-specific clinical data before an order can be placed. Those clinicians who attempt to “game the system” by entering insufficient or erroneous data in order to complete the order will ultimately be exposed through data mining and outcome analysis. The system could in turn place heightened surveillance on the physician in question and/or mandate additional education and training prior to continued use. In the example cited, the ER physician ordering the head CT to “rule out stroke” could have been presented with data as to the comparative diagnostic efficacy of CT and MRI, along with the recommendation for MRI. Based upon the supporting clinical data entered, the database could assist the technologist and radiologist in protocol optimization. Had the prior history of atrial fibrillation been reported, additional MR or CT angiographic sequences may have been selected to better delineate the intracranial arterial anatomy and potential embolus.
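The idea of requiring context-specific clinical data before an order can be placed can be sketched as a simple completeness check. The field names below are hypothetical and loosely follow the stroke-related data listed earlier; they are not taken from any actual CPOE product.

```python
# Hypothetical required fields for a stroke-evaluation imaging order.
REQUIRED_FIELDS = (
    "symptoms",
    "symptom_duration_hours",
    "exam_findings",
    "relevant_history",
)


def missing_fields(order):
    """Return the required fields that are absent or empty in the order."""
    return [f for f in REQUIRED_FIELDS if order.get(f) in (None, "", [])]


def can_submit(order):
    """An order may be placed only when every required field is filled in."""
    return not missing_fields(order)


# The order from the example case: an indication only, no supporting data.
bare_order = {"indication": "rule out stroke"}

# The same order with the supporting data that was available in hindsight.
complete_order = {
    "indication": "rule out stroke",
    "symptoms": "left-sided weakness, visual field deficit",
    "symptom_duration_hours": 2,
    "exam_findings": "irregular cardiac rhythm",
    "relevant_history": "atrial fibrillation",
}
```

A check of this kind only verifies that fields are populated; detecting erroneous entries would still depend on the retrospective data mining and outcome analysis described above.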

One of the cardinal rules in radiology is that anatomy often predicts the diagnosis. If one were to localize an imaging abnormality on an anatomic reference map (i.e., brain mapping), a differential diagnosis could in turn be generated. This is the concept behind the probabilistic brain atlas.19–21 If the radiologist interpreting the CT or MRI exam had mapped the imaging abnormality onto a reference image, the computer could provide anatomic and pathologic data to assist in the interpretation process. In the cited example of the CT scan, if the radiologist marked the area of suspicion (left parietal lobe), the computer could in turn provide the expected neurologic deficit. In this particular case, the neurologic deficit did not match this area, and the radiologist would have realized the imaging finding was inconsistent with the clinical presentation. Alternatively, if the clinical data were input into the brain map, it would visually display which region of the brain would account for such a deficit, which in effect would outline the expected stroke territory. When the imaging data “matches” the clinical data, the radiologist would be able to render an interpretation with a high degree of confidence. An imaging finding which does not match the clinical data (e.g., neurologic deficit) would suggest one of two possibilities: either the clinical data is incorrect or the imaging finding is incidental in nature. This ability to visually cross-reference clinical and imaging data provides the radiologist and clinician with an interesting and informative decision support tool, which can in turn improve diagnostic confidence.
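The cross-referencing described here can be reduced, for illustration, to a lookup from brain region to expected deficits and a check for overlap with the observed deficits. The table below is a deliberately simplified, hypothetical stand-in for a probabilistic atlas and is not a clinical reference; the region and deficit labels were chosen to mirror the example case.

```python
# Simplified, hypothetical region-to-deficit table (illustration only).
EXPECTED_DEFICITS = {
    "left parietal": {"right-sided sensory loss"},
    "right frontal": {"left-sided weakness"},
    "left occipital": {"right visual field deficit"},
}


def finding_matches(region, observed_deficits):
    """True if any deficit expected for the region was actually observed."""
    return bool(EXPECTED_DEFICITS.get(region, set()) & set(observed_deficits))


def regions_explaining(observed_deficits):
    """Regions whose expected deficits overlap the observed ones, sorted by name."""
    observed = set(observed_deficits)
    return sorted(
        region for region, expected in EXPECTED_DEFICITS.items()
        if expected & observed
    )


# Deficits resembling those found on the bedside exam in the example case.
observed = ["left-sided weakness", "right visual field deficit"]
```

With these inputs, the left parietal CT finding does not match the observed deficits, while the right frontal and left occipital regions identified on MRI do.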

Another opportunity for imaging technology development lies in the assessment and characterization of image quality. A poor quality image (e.g., motion artifact) leads to uncertainty (i.e., lack of diagnostic confidence) and potential for misdiagnosis. If computer-based algorithms were developed to automatically record image quality parameters at the point of image capture, both qualitatively and quantitatively, an objective image quality database could be derived.22 This would create an educational resource for technologists, identify optimal exposure parameters, provide immediate feedback for repeating poor quality images, and create an objective measure which radiologists can use in reporting. Instead of the subjective and nonspecific terms used in current reporting (e.g., interpretation accuracy is limited by technical deficiencies), the report can contain objective quality-centric data derived from the image quality database. This also provides a measure of accountability on the part of the technologist and radiologist, who are expected to continuously monitor and intervene in poor quality imaging studies.

In the end, the goal of the Knowledge Discovery Database is to create an automated mechanism for tracking clinical, technical, and imaging data in order to optimize clinical outcomes. This clinical improvement is derived from the ability to improve diagnostic confidence and to hold stakeholders and technologies accountable.

Conclusion

Data mining represents the future of medical innovation, providing data-driven analysis for optimizing clinical diagnosis and treatment and for developing evidence-based medicine (EBM) standards and guidelines. In their present free-text form, radiology reports limit large-scale data mining, although search technologies such as natural language processing (NLP) offer some utility in the statistical analysis of report data. If and when structured reporting solutions become widely adopted by the radiologist community, data mining capabilities will be greatly expanded and will eventually lead to the creation of KDD. These technologies have the potential to transform medical practice through the creation of automated decision support at the point of care. The data-driven analysis can provide customized feedback based upon the individual end user's occupational status and training, the technologies being utilized, and the specific context (e.g., disease) in which they are being utilized. One of the most important areas in which this data architecture can improve overall report quality and clinical outcomes is the characterization of uncertainty (i.e., diagnostic confidence), which is a complex and multi-factorial problem in the current reporting paradigm. By converting uncertainty into a quantifiable metric, we can begin to elucidate the contributions and interaction effects of technology, stakeholders, and imaging datasets on radiologists' ability to render accurate and reproducible interpretations.

The same KDD technology used to analyze report uncertainty can also be applied to other components of the radiology report, including pathologic findings, descriptive data (e.g., size, morphology, focality), follow-up recommendations, temporal change, and differential diagnosis. The medical community as a whole (and the radiology community in particular) should begin to understand the opportunities available through large-scale data mining and proactively assist in the development, testing, and implementation of knowledge discovery. The establishment of a broad medical data infrastructure predicated on objective data analysis creates new and exciting educational, research, and clinical opportunities in which the medical informatics community can play a major role. Rather than dismiss the opportunity out of fear, we must remember these words of Marie Curie: "nothing in life is to be feared, it is only to be understood". This technology offers the medical community improved understanding and knowledge, along with the potential of minimizing its own uncertainty.

References

Reiner BI: The challenges, opportunities, and imperative of structured reporting in medical imaging. J Digit Imaging 22:562–568, 2009

Bhargavan M, Sunshine JH: Utilization of radiology services in the United States: levels and trends in modalities, regions, and populations. Radiology 234:824–832, 2005

Reiner B, Siegel E, Flagle C, et al: Impact of filmless imaging on utilization of radiology services. Radiology 215:163–167, 2000

Hall FM: Language of the radiology report: primer for residents and wayward radiologists. AJR 175:1239–1242, 2000

Hall FM, Mouson JS: The radiologic hedge (letter). AJR 154:903–904, 1990

Uncertainty http://en.wikipedia.org/wiki/uncertainty

The American Association for Laboratory Accreditation http://www.a2la.org/policies/P603e_Annex.pdf

Reiner B, Goel R, Siegel E, et al: Quantifying diagnostic confidence in free-text mammography reporting. Radiological Society of North America, Chicago, 2008

Natural language processing http://www.cnlp.org/publications/03NLP.LIS.Encyclopedia.pdf.

Brachman R, Anand T: The process of knowledge discovery in databases: a human-centered approach. In: Fayyad U, Piatetsky-Shapiro G, Smyth P, Uthurusamy R Eds. Advances in knowledge discovery and data mining. AAAI, Menlo Park, 1996, pp 37–58

AAAI Symposium https://www.aaai.org/aitopics/assets/PDF/AIMag17

Goldstein LB, Jones MR, Matchar DB, et al: Improving the reliability of stroke subgroup classification using the trial of ORG 10172 in acute stroke treatment (TOAST) criteria. Stroke 32:1091–1097, 2001

Adams Jr, HP, Bendixen BH, Kapelle LJ, et al: Classifications of subtype of acute ischemic stroke. Definitions for use in a multi-center trial. TOAST. Trial of ORG 10172 in acute stroke treatment. Stroke 24:35–41, 1993

Allen CMC: Clinical diagnosis of the acute stroke syndrome. QJ Med 52:515–523, 1983

Gonzalez RG, Schaefer PW, Buonanno FS, et al: Diffusion weighted MR imaging; diagnostic accuracy in patients imaged within 6 hours of stroke symptom onset. Radiology 210:155–162, 1999

Chalela JA, Kidwell CS, Nentwich LM, et al: MRI and CT in emergency assessment of patients with suspected acute stroke: a prospective comparison. Lancet 369:293–298, 2007

Rosenthal DI, Weilburg JB, Schultz T, et al: Radiology order entry with decision support: initial clinical experience. J Am Coll Radiol 10:799–806, 2006

Khorasani R: Clinical decision support in radiology: what is it, why do we need it, and what key features make it effective? J Am Coll Radiol 2:142–143, 2006

Mazziotta J, Toga A, Evans A, et al: A probabilistic atlas and reference system for the human brain: International Consortium for Brain Mapping (ICBM). Philos Trans R Soc Lond B Biol Sci 356:1293–1327, 2001

van der Kouwe AJ, Benner T, Fischl B, et al: On-line anatomic slice positioning for brain MR imaging. Neuroimage 27:222–230, 2005

Thompson PM, MacDonald D, Mega MS, et al: Detection and mapping of abnormal brain structure with a probabilistic atlas of cortical surfaces. J Comput Assist Tomogr 4:567–581, 1997

Reiner BI: Automating quality assurance for digital radiography. J Am Coll Radiol 7:486–490, 2009

Acknowledgment

This work was supported by an educational/research grant from Fuji Medical Systems, USA.

Rights and permissions

Open Access This is an open access article distributed under the terms of the Creative Commons Attribution Noncommercial License ( https://creativecommons.org/licenses/by-nc/2.0 ), which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

About this article

Cite this article

Reiner, B. Uncovering and Improving Upon the Inherent Deficiencies of Radiology Reporting through Data Mining. J Digit Imaging 23, 109–118 (2010). https://doi.org/10.1007/s10278-010-9279-4

DOI: https://doi.org/10.1007/s10278-010-9279-4