Abstract

In Ciaramella et al. (2020) we defined a new partition of unity for the Bank–Jimack domain decomposition method in 1D and proved that with the new partition of unity, the Bank–Jimack method is an optimal Schwarz method in 1D and thus converges in two iterations for two subdomains: it becomes a direct solver, and this independently of the outer coarse mesh one uses! In this paper, we show that the Bank–Jimack method in 2D is an optimized Schwarz method and its convergence behavior depends on the structure of the outer coarse mesh each subdomain is using. For an equally spaced coarse mesh its convergence behavior is not as good as the convergence behavior of optimized Schwarz. However, if a stretched coarse mesh is used, then the Bank–Jimack method becomes faster then optimized Schwarz with Robin or Ventcell transmission conditions. Our analysis leads to a conjecture stating that the convergence factor of the Bank–Jimack method with overlap L and m geometrically stretched outer coarse mesh cells is \(1-O(L^{\frac {1}{2 m}})\).

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In 2001, Randolph E. Bank and Peter K. Jimack [3] introduced a new domain decomposition solver for the Bank–Holst adaptive meshing paradigm [2] for the adaptive solution of elliptic partial differential equations. The novel feature of the Bank–Jimack method (BJM) is that each of the subproblems is defined over the entire domain, but outside of the subdomain, a coarse mesh is used. A variant of this new domain decomposition solver using an augmented Lagrange multiplier technique is analyzed in [5] in the context of the abstract Schwarz framework. The BJM in [3] is formulated as a residual correction method, and it is not easy to interpret how and what information is transmitted between subdomains through the outer coarse mesh each subdomain has. A similar difficulty of interpretation existed as well for Additive Schwarz and Restricted Additive Schwarz [11, 15, 25]. This is very different compared to classical domain decomposition methods where this is well understood: classical Schwarz methods [24] exchange information through Dirichlet transmission conditions and use overlap, FETI [12, 13] and Neumann–Neumann methods [6, 22, 23] use Dirichlet and Neumann conditions without overlap, and optimized Schwarz methods (OSMs), which go back to Lions, [20] use Robin or higher order transmission conditions and work with or without overlap, see [14, 15] for an introduction and historic perspective of OSMs. In [10], we showed for a one-dimensional Poisson problem and two subdomains that if one introduces a more general partition of unity, then the BJM becomes an optimal Schwarz method, i.e. a direct solver for the problem converging in two iterations, and this independently of how coarse the outer mesh is. The BJM thus faithfully constructs a Robin type transmission condition involving the Dirichlet to Neumann map in 1D. We analyze here the BJM for the Poisson equation in 2 dimension and two subdomains, and show that with the modified partition of unity, the method can be interpreted as an OSM. Its convergence now depends on the structure of the outer coarse mesh each subdomain uses. In case of equally spaced coarse meshes, we prove that the asymptotic convergence factor is not as good as for an OSM. If one uses however a stretched coarse mesh, i.e. a mesh which becomes gradually more and more coarse in a specific way as one gets further away from the subdomain boundary, the method converges faster than the classical zeroth and second-order OSMs. Based on extensive numerical and asymptotic studies of the analytical convergence factor and the position of coarse points, we conjecture an asymptotic formula for the contraction factor of the BJM. Our analysis also indicates a close relation of the BJM to the class of sweeping type preconditioners [19], since the outer coarse mesh can be interpreted as an implementation of a PML transmission condition, but the BJM is not restricted to sequential decompositions without cross points.

Our paper is organized as follows: in Section 2, the BJM is recalled for a general PDE problem and its generalization by a partition of unity function is introduced (for the influence of partitions of unity on overlapping domain decomposition methods, see [16]). Moreover, for the Laplace problem in two dimensions the BJM is described in detail. The convergence analysis of the BJM is carried out in Section 3, where it is proved to be equivalent to an OSM. This important relation allows us to obtain sharp convergence results. Section 4 is devoted to extensive numerical experiments leading to our conjecture. Finally, our conclusions are presented in Section 5.

2 The Bank–Jimack Domain Decomposition Method

In this section, we give a precise description of the BJM, and introduce our model problem and the Fourier techniques that we will use.

2.1 Description of the Method

Let us consider a general self-adjointFootnote 1 linear elliptic PDE \({\mathscr{L}}u=f\) in a domain Ω with homogeneous Dirichlet boundary conditions on ∂Ω. Discretizing the problem on a global fine mesh leads to a linear system Au = f, where the matrix A is the discrete counterpart of \({\mathscr{L}}\), u is the vector of unknown nodal values on the global fine mesh, and f is the load vector.

To describe the BJM, we decompose Ω into two overlapping subdomains, Ω = Ω1 ∪Ω2. The unknown vector u is partitioned accordingly as \(\boldsymbol {u}=\begin {bmatrix} \boldsymbol {u}_{1}^{\top }, \boldsymbol {u}_{s}^{\top }, \boldsymbol {u}_{2}^{\top } \end {bmatrix}^{\top }\), where u1 is the vector of unknowns on the nodes in Ω1 ∖Ω2, us is the vector of unknowns on the nodes in the overlap Ω1 ∩Ω2, and u2 is the vector of unknowns on the nodes in Ω2 ∖Ω1. We can then write the linear system Au = f in block-matrix form,

The idea of the BJM is to consider two further meshes on Ω, one identical to the original fine mesh in Ω1, but coarse on Ω ∖Ω1, and one identical to the original fine mesh in Ω2, but coarse on Ω ∖Ω2. This leads to the two further linear systems

with

where we introduced the restriction matrices Mj, j = 1,2, to map the fine-mesh vectors fj to the corresponding coarse meshes, and I1 and I2 are identities of appropriate sizes. Notice that, depending on the chosen discretization scheme, one could get \(C_{1} = \widetilde {B}_{1}^{\top }\) and \(C_{2} = \widetilde {B}_{2}^{\top }\), which leads to symmetric matrices \(A_{{\varOmega }_{1}}\) and \(A_{{\varOmega }_{2}}\). However, this symmetry is not generally guaranteed, as we are going to see in the next sections. The BJM as a stationary iteration is then described by Algorithm 1.

In [10] we studied the BJM for a one-dimensional problem and showed that, in general, it does not lead to a convergent stationary iteration.Footnote 2 To correct this behavior we introduced a discrete partition of unity D1 + D2 = I, where I is the identity matrix and D1 and D2 are two matrices that for a one-dimensional problem must have the form (× denote arbitrary entries satisfying the sum condition)

Using these matrices, we modified the BJM by replacing Step 2.3 in Algorithm 1 with

This leads to an iterative method that we proved to be convergent and equivalent to an optimal Schwarz method [18] for the one-dimensional Poisson problem [10]. In [10] we also showed, by direct numerical experiments, that this equivalence does not hold for the two-dimensional Poisson problem. Our goal here is to analyze the convergence of the BJM for two-dimensional problems. Notice that, in what follows, we always refer to BJM as the method obtained by using (2.4) in Algorithm 1.

2.2 The BJM for the Poisson Equation in 2D

Let us consider the problem

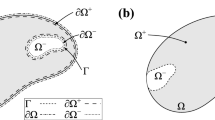

where Δ is the Laplace operator and f is a sufficiently regular right-hand side function. We consider a uniform grid in Ω = (0,1) × (0,1) of N interior points in each direction and mesh size \(h:=\frac {1}{N+1}\); see, e.g., Fig. 1a. We then discretize (2.5) by a second-order finite-difference scheme, which leads to a linear system Au = f, where \(A \in \mathbb {R}^{N^{2} \times N^{2}}\) is the classical (pentadiagonal) discrete Laplace operator. This system can be easily partitioned as in (2.1). We assume that the vector of unknowns u is obtained as \({\boldsymbol u}=[{\boldsymbol u}_{1}^{\top },\dots ,{\boldsymbol u}_{N}^{\top }]^{\top }\), where \({\boldsymbol u}_{j} \in \mathbb {R}^{N}\) contains the unknown values on the j th column of the grid. In this case, the matrix A can be expressed in the Kronecker format A = Iy ⊗ Ax + Ay ⊗ Ix, where Ax and Ay are N × N one-dimensional discrete Laplace matrices in directions x and y, and Ix and Iy are N × N identity matrices.

(a) A global fine mesh on Ω = (0,1) × (0,1) and the decomposition Ω = Ω1 ∪Ω2. (b) Global fine mesh in direction x (top row), and two partially coarse meshes corresponding to the left subdomain Ω1 (middle row) and to the right subdomain Ω2 (bottom row). The black dots represent the x-coordinates of the interfaces, namely mh and ℓh

The BJM requires two partially-coarse grids. We assume that, in direction x our decomposition Ω = Ω1 ∪Ω2 has n1 interior points Ω1 ∖Ω2, n2 interior points in Ω2 ∖Ω1, and ns points in Ω1 ∩Ω2; see Fig. 1a. For our analysis, the coarsening is performed only in x-direction,Footnote 3 as shown in Fig. 1b, while the grid in direction y is maintained fine. The partially coarse-grids have m2 coarse points in Ω2 ∖Ω1 (Fig. 1b, middle row) and m1 coarse points in Ω1 ∖Ω2 (Fig. 1b, bottom row) and the corresponding mesh sizes are h1 and h2.Footnote 4 If we denote by Ax,1 and Ax,2 the one-dimensional finite-difference Laplace matrices in x-direction, then the partially-coarse matrices \(A_{{\varOmega }_{1}}\) and \(A_{{\varOmega }_{2}}\) are

where Ix,1 and Ix,2 are identities of sizes n1 + ns + m2 and m1 + ns + n2, respectively. Notice that, the matrices Ax,1 and Ax,2 are classical second-order finite difference matrices in 1D, defined on the union of two uniform grids. Therefore, the only entries that differ from a standard finite-difference formula are the ones corresponding to the stencil across the mesh changes. For example, the five-point formulas for Ax,1 on fine and coarse meshes are \((A_{x,1} v)_{j} = \frac {-v_{j-1}+2v_{j} -v_{j+1}}{h^{2}}\), for j ≤ n1 + n2, and \((A_{x,1} {\boldsymbol v})_{j} = \frac {-v_{j-1}+2v_{j} -v_{j+1}}{{h_{1}^{2}}}\), for j ≥ n1 + n2 + 2, while at the point across the mesh change (see also Fig. 1), we have

The matrices \(A_{{\varOmega }_{1}}\) and \(A_{{\varOmega }_{2}}\) can be partitioned exactly as in (2.2), and the restriction matrices T1 and T2 have now the forms

where \(\widehat {M}_{1} \in \mathbb {R}^{m_{1} \times n_{1}}\) and \(\widehat {M}_{2} \in \mathbb {R}^{m_{2} \times n_{2}}\) are one-dimensional restriction matrices and \(I_{n_{s}+n_{2}}\) and \(I_{n_{1}+n_{s}}\) are identity matrices of sizes ns + n2 and n1 + ns. It remains to describe the matrices \(D_{1} \in \mathbb {R}^{Nn_{s} \times Nn_{s}}\) and \(D_{2} \in \mathbb {R}^{Nn_{s} \times Nn_{s}}\) used in (2.4). These form a partition of unity, that is \(D_{1}+D_{2}=I_{Nn_{s}}\), where \(I_{Nn_{s}}\) is an identity of size Nns, and have the forms

We have then described all the components that allow us to use the BJM (namely Algorithm 1) for the two-dimensional Poisson problem (2.5).

The choice of discretization by the finite-difference method allows us to perform a detailed convergence analysis based on the diagonalization obtained in Section 2.3.

2.3 A Discrete Fourier Expansion and the η −Δ Equation in 1D

The finite-difference matrices A, \(A_{{\varOmega }_{1}}\) and \(A_{{\varOmega }_{2}}\) have similar structures based on Kronecker-product expansions: the matrix components in direction y are the same and are not coarsened. Hence, the one-dimensional discrete Laplace matrix Ay appears unchanged in A, \(A_{{\varOmega }_{1}}\) and \(A_{{\varOmega }_{2}}\), while the matrix Ax appearing in A is replaced in \(A_{{\varOmega }_{1}}\) by Ax,1 and in \(A_{{\varOmega }_{2}}\) by Ax,2.

It is important to notice that Ay is a tridiagonal Toeplitz matrix having values 2/h2 on the main diagonal and values − 1/h2 on the first upper and lower diagonals. It is well-known that Ay can be diagonalized as U⊤AyU = Λ, where \({\Lambda }=\text {diag}(\lambda _{1},\dots ,\lambda _{N})\) with λj > 0, and the columns of the orthogonal matrix \(U\in \mathbb {R}^{N\times N}\) are normalized discrete Fourier sine modes. If one now defines \(\widehat {U}:= U \otimes I_{x}\), then it is possible to block-diagonalize A,

where we used the property (C1 ⊗ C2)(C3 ⊗ C4) = (C1C3) ⊗ (C2C4), for any matrices C1, C2, C3, and C4 such that the matrix products C1C3 and C2C4 can be formed. Defining the vectors \(\widehat {{\boldsymbol u}} := \widehat {U}^{\top } {\boldsymbol u}\) and \(\widehat {{\boldsymbol f}} := \widehat {U}^{\top } {\boldsymbol f}\) and decomposing them as \(\widehat {{\boldsymbol u}} = [ \widehat {{\boldsymbol u}}_{1}^{\top },\dots ,\widehat {{\boldsymbol u}}_{N}^{\top }]^{\top }\) and \(\widehat {{\boldsymbol f}} = [\widehat {{\boldsymbol f}}_{1}^{\top } ,\dots ,\widehat {{\boldsymbol f}}_{N}^{\top } ]^{\top }\), we obtain that the linear system Au = f can be equivalently written as

This is the discrete version of a Fourier sine diagonalization of the continuous problem (2.5); see, e.g, [8]. Notice that each component \(\widehat {{\boldsymbol u}}_{j} \in \mathbb {R}^{N}\) still represents a vector of nodal values on the j th row of the discretization grid. Hence, we can decompose it as \(\widehat {{\boldsymbol u}}_{j} = [\widehat {{\boldsymbol u}}_{j,1}^{\top }, \widehat {{\boldsymbol u}}_{j,s}^{\top }, \widehat {{\boldsymbol u}}_{j,2}^{\top }]^{\top }\), where \(\widehat {{\boldsymbol u}}_{j,1} \in \mathbb {R}^{n_{1}}\) has values on the nodes in Ω1 ∖Ω2, \(\widehat {{\boldsymbol u}}_{j,2} \in \mathbb {R}^{n_{2}}\) has values on the nodes in Ω2 ∖Ω1, and \(\widehat {{\boldsymbol u}}_{j,s} \in \mathbb {R}^{n_{s}}\) has values on the nodes in Ω1 ∩Ω2.

Now, using the block-diagonalized form (2.9)–(2.10), we will rewrite the BJM algorithm for each component j of \(\widehat {{\boldsymbol u}}\). Given an approximation uk obtained at the k th iteration of Algorithm 1, one can compute \(\widehat {{\boldsymbol u}}^{k} = U^{\top } {\boldsymbol u}^{k}\) and \(\widehat {{\boldsymbol r}}^{k} = U^{\top } {\boldsymbol r}^{k}\) and rewrite Step 2.1 as

Similarly as for the system Au = f, we can transform the residual subsystems of Step 2.2. To do so, we define \(\widehat {U}_{i}:= U \otimes I_{x,i}\), for i = 1,2, such that \(\widehat {{\boldsymbol v}}^{k} = \widehat {U}_{1}^{\top } {\boldsymbol v}^{k}\) and \(\widehat {{\boldsymbol w}}^{k} = \widehat {U}_{2}^{\top } {\boldsymbol w}^{k}\), and write the subsystems \(A_{{\varOmega }_{1}} \boldsymbol {v}^{k} = T_{2} \boldsymbol {r}^{k}\) and \(A_{{\varOmega }_{2}} \boldsymbol {w}^{k} = T_{1} \boldsymbol {r}^{k}\) as

which allows us to obtain

Now, using the structures of \(A_{{\varOmega }_{i}}\) given in (2.6), we obtain

for i = 1,2, and recalling the matrices Ti, defined in (2.7), we get

and

Replacing (2.13), (2.14) and (2.15) into (2.12), we rewrite the residual systems in Step 2.2 as

for \(j=1,\dots ,N\). It remains to study (2.4) (with the matrices Di defined in (2.8)) that represents Step 2.3. This equation can be written in the compact form

where \({D_{1}^{e}} \in \mathbb {R}^{N \times (n_{1}+n_{s}+m_{2})}\) and \({D_{2}^{e}} \in \mathbb {R}^{N \times (m_{1}+n_{s}+n_{2})}\) are given by

Now, using (2.17) we get

and recalling the structures of \({D_{1}^{e}}\) and \({D_{2}^{e}}\), we obtain

Equations (2.11), (2.16) and (2.18) represent the BJM for each discrete Fourier component \(\widehat {\boldsymbol {u}}_{j}^{k}\). Clearly the iterative process for each component does not depend on the others, and it suffices to study the convergence of each component separately.

A closer inspection of the matrices in (2.11) and (2.16) reveals that the BJM for one component \(\widehat {\boldsymbol {u}}_{j}^{k}\) is exactly the BJM for the solution of a discretized one-dimensional η −Δ problem of the form

where \(\widehat {u}_{j}\) is the j th coefficient of the Fourier sine expansion of u, \(\widehat {f}_{j}\) is the j th Fourier coefficient of f, and ηj = (πj)2. Hence, if we would know a continuous representation of the BJM for the solution to (2.19), then we could perform a Fourier convergence analysis similarly as it is often done at the continuous level for other one-level domain decomposition methods; see, e.g., [7, 8, 14]. This is exactly the focus of Section 3, where we will show that the BJM for the one-dimensional η −Δ boundary value problem is an OSM. This equivalence will allow us to perform a detailed Fourier convergence analysis of the BJM.

3 Convergence Analysis of the BJM

Motivated by the results in Section 2.3, we study now the BJM for the solution of a one-dimensional discrete η −Δ problem and prove that this is equivalent to a discrete OSM, see for example [26]. Our analysis will reveal that the BJM produces implicitly some particular Robin parameters, dependent on η, in the equivalent OSM. Since the chosen discretization for the OSM is consistent and convergent, one can pass to the limit from the discrete to the continuous level. Therefore, we will obtain that the continuous limit of the BJM is an OSM, where the Robin parameters are the continuous limits of the discrete Robin parameters of the BJM. Once this equivalence interpretation is established, we will study the dependence of the continuous convergence factor of the BJM with respect to η (hence the Fourier frequency), to the size of the overlap, to the number of coarse points and their location.

The main steps of the described analysis are organized in four subsections. In Section 3.1 we recall the OSM, derive its convergence factor at the continuous level and then obtain a discretization based on the finite-difference method for non-uniform grids; see, e.g., [27]. In Section 3.2, we show the equivalence between the BJM and the discrete OSM and discuss the BJM convergence factor in the continuous limit. Sections 3.3 and 3.4 focus on the analysis of the BJM convergence factor for uniform and non-uniform coarse grids.

3.1 The OSM for the One-Dimensional η −Δ Equation

To recall the OSM for

we consider an overlapping domain decomposition (0,1) = (0,β := xℓ) ∪ (α := xm,1); see Fig. 1b, top row. Given an appropriate initialization pair \(({u_{1}^{0}},{u_{2}^{0}})\), the OSM for (3.1) is

for \(k=1,2,\dots \), where p12 and p21 are two positive parameters that can be optimized to improve the convergence of the iteration; see, e.g., [14]. This optimization process gives the name Optimized Schwarz Method to the scheme (3.2). In fact, the convergence factor of the method depends heavily on p12 and p21. To compute this convergence factor, we can assume that f = 0 (working by linearity on the error equations). The general solution of the first subproblem in (3.2) with f = 0 is of the form \(u_{1}(x)=A_{1}e^{\sqrt {\eta }x}+B_{1}e^{-\sqrt {\eta }x}\). Using the boundary condition u1(0) = 0, we find that A1 = −B1 and we thus have \(u_{1}(x)=2A_{1}\sinh (\sqrt {\eta }x)\). Similarly, \(u_{2}(x)=A_{2}e^{\sqrt {\eta }x}+B_{2}e^{-\sqrt {\eta }x}\), and since u2(1) = 0, we find that \(B_{2}=-A_{2} e^{2\sqrt {\eta }}\) and we thus have \(u_{2}(x)=2A_{2}e^{\sqrt {\eta }}\sinh (\sqrt {\eta }(x-1))\). Using the Robin transmission condition at x = α in the second subproblem of (3.2), we find

which leads to

Similarly, using the Robin condition at the point x = β in the first subproblem of (3.2) we find

Replacing \({A_{1}^{k}}\) from (3.4) at iteration k − 1 into (3.3) shows that the convergence factor over a double iteration of the OSM is

Notice that the convergence factor ρ depends on η, the two Robin parameters p12 and p21, and on the positions of the interfaces α and β (hence the length of the overlap L := β − α).

To obtain a discrete formulation of the OSM, we consider two uniform grids of size h in the subdomains (0,β) and (α,1) as the ones shown in Fig. 1b, top row. Using the finite-difference method applied to these grids, we discretize the two subproblems in (3.2) and obtain the linear systems

where \(A_{\text {OSM},j} \in \mathbb {R}^{(n_{j}+n_{s})\times (n_{j}+n_{s})}\) and \(\boldsymbol {f}_{j} \in \mathbb {R}^{n_{j}+n_{s}}\), j = 1,2, are

and the matrices \(F_{1} \in \mathbb {R}^{n_{1}+n_{s} \times n_{2}+n_{s}}\) and \(F_{2} \in \mathbb {R}^{n_{2}+n_{s} \times n_{1}+n_{s}}\) are such that

for any \(\boldsymbol {g} \in \mathbb {R}^{n_{2}+n_{s}}\) and \(\boldsymbol {h} \in \mathbb {R}^{n_{1}+n_{s}}\). Notice that, since η,p12,p21 > 0 for any h > 0 the matrices AOSM,1 and AOSM,2 are strictly diagonally dominant, hence invertible. Therefore, the OSM (3.6) is a stationary method whose standard form (see, e.g., [9]) is

where \(M_{\text {OSM}} = \begin {small} \begin {bmatrix} A_{\text {OSM,1}} & 0 \\ 0 & A_{\text {OSM,2}} \\ \end {bmatrix} \end {small}\) and \(N_{\text {OSM}} = \begin {small} \begin {bmatrix} 0 & F_{1} \\ F_{2} & 0 \\ \end {bmatrix} \end {small}\). This is sometimes also called an optimized block Jacobi algorithm; see, e.g., [26]. If convergent, this iterative procedure generates a sequence that converges to the solution of the augmented problem

In our analysis, another equivalent form of the the discrete OSM (3.7) will play a crucial role. This is the so-called optimized restricted additive Schwarz (ORAS) method, which is defined as

where \(\widehat {\boldsymbol {r}}^{k} = \boldsymbol {f} - A \widehat {\boldsymbol {u}}^{k}\), \(R_{1} \in \mathbb {R}^{(n_{1}+n_{s}) \times N}\), and \(R_{2} \in \mathbb {R}^{(n_{2}+n_{s}) \times N}\) are restriction matrices of the form

while \(\widetilde {R}_{1} \in \mathbb {R}^{(n_{1}+n_{s}) \times N}\) and \(\widetilde {R}_{2} \in \mathbb {R}^{(n_{2}+n_{s}) \times N}\) are similar restriction matrices, but corresponding to a non-overlapping decomposition satisfying \(\widetilde {R}_{1}^{\top } \widetilde {R}_{1} + \widetilde {R}_{2}^{\top } \widetilde {R}_{2} = I_{N}\); see [26] for more details. It is proved in [26] that (3.8) and (3.7) are equivalent for any R1 and R2, as the ones considered in this section, that induce a consistent matrix splitting.

3.2 The BJM as an OSM for the One-Dimensional η −Δ Equation

Let us first recall the BJM for the one-dimensional problem (3.1) and state explicitly all the matrices that we need for our analysis. We consider the grids shown in Fig. 1b and the finite-difference method for non-uniform grids; see, e.g., [27]. The full problem on the global fine mesh (Fig. 1b, top row) is

where \(A \in \mathbb {R}^{N \times N}\) is a tridiagonal symmetric matrix that we decompose as

The matrices \(A_{1} \in \mathbb {R}^{n_{1} \times n_{1}}\), \(A_{s} \in \mathbb {R}^{n_{s} \times n_{s}}\), and \(A_{2} \in \mathbb {R}^{n_{2} \times n_{2}}\), are tridiagonal and have the form

while \(B_{1} \in \mathbb {R}^{n_{1} \times n_{s}}\) and \(B_{2} \in \mathbb {R}^{n_{2} \times n_{s}}\) are zero except for one corner entry:

Hence for a given approximation \(\boldsymbol {u}^{k}=[(\boldsymbol {u}_{1}^{k})^{\top },(\boldsymbol {u}_{s}^{k})^{\top },(\boldsymbol {u}_{s}^{k})^{\top }]^{\top }\), the residual rk is

The correction problems on the two partially coarse grids (Fig. 1b, middle and bottom rows), are

where \(A_{{\varOmega }_{1}} \in \mathbb {R}^{(n_{1} + n_{s} + m_{2}) \times (n_{1} + n_{s}+ m_{2})}\), \(A_{{\varOmega }_{2}}\in \mathbb {R}^{(n_{2} + n_{s} + m_{1}) \times (n_{2} + n_{s} + m_{1})}\), \(T_{1} \in \mathbb {R}^{(n_{2} + n_{s} + m_{1}) \times N}\), and \(T_{2} \in \mathbb {R}^{(n_{1} + n_{s} + m_{2}) \times N}\) have the forms given in (2.2), with A1, As, A2, B1, and B2 as above. The matrices \(C_{1} \in \mathbb {R}^{n_{s} \times m_{1}}\) and \(C_{2} \in \mathbb {R}^{n_{s} \times m_{2}}\) are

The matrices \(\widetilde {A}_{1} \in \mathbb {R}^{m_{1} \times m_{1}}\) and \(\widetilde {B}_{1} \in \mathbb {R}^{m_{1} \times n_{s}}\) in the BJM method in Algorithm 1 are

while \(\widetilde {A}_{2} \in \mathbb {R}^{m_{2} \times m_{2}}\) and \(\widetilde {B}_{2} \in \mathbb {R}^{m_{2} \times n_{s}}\) are

We do not need to specify the restriction matrices M1 and M2, because they multiply the residual components r1 and r2, which are zero as shown in the upcoming Lemma 3.1. The matrices Mj do not play any role in the convergence of the method if our new partition of unity is used. However, if the original partition of unity proposed in [3] is considered, then they contributes to the convergence behavior. Finally, the partition of unity diagonal matrices \(D_{1} \in \mathbb {R}^{n_{s} \times n_{s}}\) and \(D_{2} \in \mathbb {R}^{n_{s} \times n_{s}}\) have the structures given in (2.3). Notice that, since η > 0, the tridiagonal matrices \(\widetilde {A}_{{\varOmega }_{1}}\) and \(\widetilde {A}_{{\varOmega }_{1}}\) are strictly diagonally dominant for any h,h1,h2 > 0, hence invertible.

The BJM in Algorithm 1 consists of iteratively computing the residual (3.11), solving the two correction problems (3.12) and then computing the new approximation using (2.4). We are now ready to prove the equivalence between the BJM and the discrete OSM. To do so, we need an important property of the BJM proved in the next lemma.

Lemma 3.1

The BJM for the solution of (3.10) (and based on (3.11), (3.12), and (2.4) with all the matrices described above) produces for any initial guess u0 and arbitrary partitions of unity satisfying (2.3) zero residual components outside the overlap, \(\boldsymbol {r}_{1}^{k}=\boldsymbol {r}_{2}^{k}=0\), for k = 1,2,…

Proof

We only sketch the proof here, since the result is proved in detail in [10]. Moreover, we consider only \(\boldsymbol {r}_{1}^{k}\), because the proof for \(\boldsymbol {r}_{2}^{k}\) is similar. Using equations (3.11) and (2.4), we compute

since \(\boldsymbol {r}_{1}^{k-1}-A_{1}\boldsymbol {v}_{1}^{k-1}=B_{1}\boldsymbol {v}_{s}^{k-1}\) because of (3.12) at k − 1. Now using the structures of B1, D1 and D2 we get

independently of the middle elements of D1,Footnote 5 and thus \(B_{1}\boldsymbol {v}_{s}^{k-1}-B_{1}D_{1}\boldsymbol {v}_{s}^{k-1}=0\). By a similarly calculation, one can show that \(B_{1}D_{2}\boldsymbol {w}_{s}^{k-1}=0\), also independently of the middle elements of D2, which proves that \(\boldsymbol {r}_{1}^{k}=0\) for k = 1,2,… □

Since \(\widetilde {A}_{1}\) and \(\widetilde {A}_{2}\) are invertible, the Schur-complement matrices \(A_{s} - C_{2} \widetilde {A}_{2}^{-1} \widetilde {B}_{2}\) (of \(A_{{\varOmega }_{1}}\)) and \(A_{s} - C_{1} \widetilde {A}_{1}^{-1} \widetilde {B}_{1}\) (of \(A_{{\varOmega }_{2}}\)) are well-defined and we can compute the entries we need for our analysis using the following lemma.Footnote 6

Lemma 3.2

The first element of the inverse of the n × n tridiagonal matrix

is given by \(\mu (n):=(T^{-1})_{1,1} = \frac {{\lambda _{2}^{n}}-{\lambda _{1}^{n}}}{{\lambda _{2}^{n}}(a_{1}+b_{1}\lambda _{1})-{\lambda _{1}^{n}}(a_{1}+b_{1}\lambda _{2})}\), \(\lambda _{1,2}:=\frac {a}{2}\pm \sqrt {\frac {a^{2}}{4}-1}\).

Proof

The first element of the inverse of T is the first component u1 of the solution of the linear system

The solution satisfies the recurrence relation − uj+ 1 + auj − uj− 1 = 0, j = 2,3,…,n − 1, whose general solution is \(u_{j}=C_{1}{\lambda _{1}^{j}}+C_{2}{\lambda _{2}^{j}}\) with λ1,2 the characteristic roots of λ2 − aλ + 1 = 0 given in the statement of the lemma. The two boundary conditions to determine the constants C1,2 are

Solving this linear system for C1,2 gives (using that 3 − i = 2 if i = 1 and 3 − i = 1 if i = 2)

Inserting these constants into uj and evaluating at j = 1 gives

which upon simplification, using the Vieta relations satisfied by the roots, i.e. λ1λ2 = 1 and λ1 + λ2 = a, leads to the result. □

Lemma 3.3

The matrices \(C_{2}\widetilde {A}_{2}^{-1}\widetilde {B}_{2}\) and \(C_{1}\widetilde {A}_{1}^{-1}\widetilde {B}_{1}\) are given by

with the function μ(n) from Lemma 3.2.

Proof

For the first result, using the sparsity patterns of C2 and \(\widetilde {B}_{2}\), we obtain

and we thus need to find the first entry of \(\widetilde {A}_{2}^{-1}\). Defining \(a_{1}:=\frac {2h_{1}}{h}+\eta {h_{1}^{2}}\), \(b_{1}:=\frac {-2h_{1}}{h+h_{1}}\), and \(a:=2+\eta {h_{1}^{2}}\), and multiplying by \({h_{1}^{2}}\), we obtain precisely a matrix like in Lemma 3.2,

and therefore \((({h_{1}^{2}}\widetilde {A}_{2})^{-1})_{11}=\mu (m_{2})\), which shows the first claim. For the second one, it suffices to notice that Lemma 3.2 also holds if the matrix is reordered from top left to bottom right, and can thus be used again. □

Notice that the matrices \(C_{2}\widetilde {A}_{2}^{-1}\widetilde {B}_{2}\) and \(C_{1}\widetilde {A}_{1}^{-1}\widetilde {B}_{1}\) are operators which are non-zero only on the last column of the overlap. Therefore, as we are going to see in the rest of this section, they can be interpreted as generalized Robin boundary conditions. Now, using the Schur-complements \(A_{s} - C_{2} \widetilde {A}_{2}^{-1} \widetilde {B}_{2}\) (of \(A_{{\varOmega }_{1}}\)) and \(A_{s} - C_{1} \widetilde {A}_{1}^{-1} \widetilde {B}_{1}\) (of \(A_{{\varOmega }_{2}}\)), we can introduce the matrices \(\widehat {A}_{1}\) and \(\widehat {A}_{2}\):

which allow us to prove the following result.

Lemma 3.4

The matrices \(\widehat {A}_{1}\) and \(\widehat {A}_{2}\) are invertible and the inverses of \(A_{{\varOmega }_{1}}\) and \(A_{{\varOmega }_{2}}\) have the forms

and

where \(\overline {B}_{1} = [ 0 , \widetilde {A}_{2}^{-1}\widetilde {B}_{2} ]\) and \(\overline {B}_{2} = [ \widetilde {A}_{1}^{-1}\widetilde {B}_{1} , 0 ]\).

Proof

We prove the result for \(\widehat {A}_{1}\). The proof for \(\widehat {A}_{2}\) can be obtained exactly by the same arguments. Recalling that η > 0, a direct inspection of the matrix \(A_{{\varOmega }_{1}}\) reveals that it is strictly diagonally dominant. Hence, \(\det (A_{{\varOmega }_{1}})\neq 0\). Now, consider the block structure of \(A_{{\varOmega }_{1}}\) given in (2.2). Since \(\widetilde {A}_{2}\) is invertible, we factorize \(A_{{\varOmega }_{1}}\) as

where \(I_{n_{1}}\), \(I_{n_{s}}\), and \(I_{m_{2}}\) are identity matrices of sizes n1, ns, and m2. This factorization allows us to write \(0 \neq \det (A_{{\varOmega }_{1}}) = \det (\widetilde {A}_{2})\det (\widehat {A}_{1})\), which implies that \(\det (\widehat {A}_{1})\neq 0\). Now, a straightforward calculation using the previous factorization allows us to get (3.13). □

Now, we notice that the BJM can be written (using (3.12) and (2.4)) in the compact form

where the block-diagonal matrices \(\widetilde {T}_{1} \in \mathbb {R}^{(n_{1}+ n_{s}+m_{2})\times N}\) and \(\widetilde {T}_{2} \in \mathbb {R}^{(m_{1}+ n_{s}+n_{2})\times N}\) are

A direct calculation using Lemma 3.1 (hence that \(\boldsymbol {r}_{1}^{k}=0\) and \(\boldsymbol {r}_{2}^{k}=0\)) and Lemma 3.4 (hence the formulas (3.13) and (3.14)) allows us to obtain

where the matrices R1 and R2 are the ones given in (3.9). Since the results proved in Lemma 3.1 are independent of the middle diagonal entries of D1 and D2, we can choose them such that the equalities

are fulfilled. Therefore, the BJM (3.15) becomes

which is already very similar to the ORAS method (3.8). Now, a direct comparison of \(\widehat {A}_{1}\) and AOSM,1, which uses the results of Lemma 3.3, reveals that they are equal except for the bottom-right corner elements, which are

Similarly, \(\widehat {A}_{2}\) and AOSM,2 are equal except for the top-left corner elements, which are

Therefore, if one chooses

then \(\widehat {A}_{j} = A_{\text {OSM},j}\) for j = 1,2. Replacing this equality into (3.17), we obtain that the BJM is equivalent to the ORAS method (3.8), and hence to the discrete OSM (3.6). We summarize our findings in the following theorem.

Theorem 3.5

If the partition of unity matrices D1 and D2 have the forms (2.3) and are chosen such that the equalities (3.16) hold, and if the Robin parameters of the discrete OSM (3.6) are chosen as in (3.18), then the BJM is equivalent to the ORAS method (3.8) and to the discrete OSM (3.6).

Notice that Theorem 3.5 has the following important consequence. Since the discrete OSM (3.6) is obtained by a consistent and convergent discretization of the continuous OSM (3.2), we find that, in the limit for \(h\rightarrow 0\), the continuous counterpart of the BJM is the OSM (3.2). This will allow us to study in Sections 3.3 and 3.4 the convergence factor of the BJM at the continuous level. For this purpose, from now on, we denote by p12(h,η,h1) and p21(h,η,h2) the two Robin parameters of (3.18) to stress their dependence on the discretization size h, the (Fourier) parameter η and the coarse mesh sizes h1 and h2. Notice that μ(m2) and μ(m1) in (3.18) depend on h, h1, h2 and η (see Lemma 3.3). Recalling the results obtained in Section 3.1, the continuous BJM convergence factor is given by (3.5), where p12 and p21 are the limits for \(h\rightarrow 0\) (with m1 and m2 fixed) of the parameters chosen in Theorem 3.5.

It is important to remark at this point that the first coarse points, namely the point n1 + ns + 1 for the first mesh and the point m1 for the second mesh, are located at distance h from the interfaces. With this choice we were able to define discrete finite-difference derivatives across these points and in Sections 3.3 and 3.4 we will take limits for \(h\rightarrow 0\), while keeping the numbers m1 and m2 of the coarse points fixed.

Finally, we wish to remark that all the calculations performed in this section, except for the precise formulas for μ(m2) and μ(m1) in Lemma 3.3, remain valid if, instead of uniform coarse grids, one considers two coarse grids which are non-uniform, in the sense that the m1 points in Ω1 ∖Ω2 and the m2 points in Ω2 ∖Ω1 are not uniformly distributed, leading to invertible matrices \(\widetilde {A}_{1}\) and \(\widetilde {A}_{2}\). Therefore, the equivalence between BJM and OSM remains valid also in the case of non-uniform coarse grids.

3.3 Uniform Coarse Grid

The goal of this section is to study the contribution of uniform coarse grids to the convergence of the BJM for the solution to (2.5). For simplicity, we assume that the two partially coarse grids have the same number of coarse points m := m1 = m2. To satisfy this condition, we fix the size of the overlap L and choose \(\alpha =\frac {1-L}{2}\) and \(\beta =\frac {1+L}{2}\). In this case, we also have that \(h_{1}=h_{2}=\frac {1-\beta -h}{m}\). We consider the cases of m = 2, m = 3, and m = 4 coarse points.

For the sake of clarity, we first summarize the structure of our analysis. For each given m ∈{2,3,4}, we first consider the corresponding BJM Robin parameters, whose explicit formulas can be obtained as in Lemma 3.3, and then pass to the limit for \(h \rightarrow 0\) to get their continuous counterparts. These continuous parameters will be replaced into the formula (3.5), which will give us the continuous convergence factor of the BJM corresponding to the given m, to a fixed (Fourier) parameter η, and to the size of the overlap L. For fixed m and given values of L we will numerically compute the maximum of the convergence factor with respect to the (Fourier) parameter η. This will allow us to study the deterioration of the contraction factor for decreasing size L of the overlap. While performing this analysis, we compare the convergence of the BJM to the one of the OSM with optimized parameter.

From the convergence factor ρ of the OSM in (3.5), we see that choosing

gives ρ = 0 for the frequency η. These are thus the optimal parameters for this frequency, and make the OSM a direct solver for the corresponding error component.

For m = 2 coarse points, proceeding as in the proof of Lemma 3.2 to compute the corresponding μ(m2) = μ(m1) and using (3.18), we get the (discrete) BJM Robin parameters

Recalling that \(h_{1}=h_{2}=\frac {1-\beta -h}{2}\) and taking the limit for \(h \rightarrow 0\), we obtain

We see that the Robin parameters \(\hat {p}_{12}\) and \(\hat {p}_{21}\) are rational functions of the Fourier parameter η with coefficients depending on the outer subdomain sizes 1 − β and α. In Fig. 2, we compare the Robin parameter \(\hat {p}_{12}\) of the BJM for m = 2 (blue line) with the optimal Robin parameter \(p_{12}^{\ast }\) of the OSM (black dashed line) for three different values of the overlap L. We observe that for small η the Robin parameters of both methods are quite close, which indicates that the BJM method performs well for low-frequency error components. This is clearly visible in Fig. 3, where we plot the corresponding convergence factors (as functions of η) inserting \(\hat {p}_{12}\) and \(\hat {p}_{12}\) into (3.5)Footnote 7 for two different overlaps L, using \(\alpha =\frac {1-L}{2}\) and \(\beta =\frac {1+L}{2}\). We also see that the convergence factor clearly has a maximum at some η2(L), whose corresponding error mode converges most slowly, and convergence deteriorates when L becomes small. In Fig. 4 (left), we present the value η2(L) as functions of L and observe that it grows like O(L− 1). The corresponding contraction factor, namely \(\bar {\rho }_{2}(L) := \max \limits _{\eta } \rho _{2}(\eta ,L) := \max \limits _{\eta }\rho \big (\eta ,\hat {p}_{12}(\eta ,L),\hat {p}_{21}(\eta ,L),\alpha =\frac {1-L}{2},\beta =\frac {1+L}{2}\big )\), is shown as function of L in Fig. 4 (right-dashed blue line, represented as 1 − ρ2(L)). Here, one can observe clearly that as L gets smaller the convergence deteriorates with an order O(L1/2).

Let us now discuss the behavior \(\bar {\rho }_{2}(L)=1-O(L^{\frac {1}{2}})\) shown in Fig. 4 (right): it was proved in [14] that the convergence factor of the OSM with overlap L behaves like \(\rho _{OSM}^{\star }=1- O(L^{\frac {1}{3}})\) with Robin transmission conditions, and \(\rho _{OSM}^{\star }=1- O(L^{\frac {1}{5}})\) with second-order (Ventcell) transmission conditions. Hence, the OSM performs better than the BJM with a uniform coarse grid with m = 2 uniformly distributed coarse points,Footnote 8 since convergence deteriorates more slowly when the overlap L goes to zero.

We have seen that, for only two points the BJM is already a good method for low frequencies, since the parameters \(\hat {p}_{12}\) and \(\hat {p}_{21}\) are very close to the optimal ones \(p_{12}^{\ast }\) and \(p_{21}^{\ast }\) for relatively small η. However, the convergence factor deteriorates with L faster than for the OSM. It is natural to ask: does the behavior of the BJM improve if more coarse points are used? The answer is surprisingly negative! In fact, the convergence factor remains of order \(1-O(L^{\frac {1}{2}})\). To see this, we now repeat the analysis for uniform coarse grids with m = 3 and m = 4 points. For m = 3, we find the analog of (3.19) with \(E_{2}(\tilde {h})\) replaced by

and for the corresponding optimized parameters when h goes to zero the analog of (3.20) with the rational function \(R_{2}(\tilde {L})\) replaced by

In Fig. 2 (red lines) we show the Robin parameters of the BJM with m = 3 coarse points as a function of η and we compare it to the optimal Robin parameters of the OSM. We observe that they are closer compared to the m = 2 point case. This seems to suggest an improvement of the convergence factor, but the plots of the convergence factor in Fig. 3 show that this improvement is only minor compared to the case of m = 2 coarse mesh points. This is also confirmed by the results in Fig. 4 (right): we see that \(\bar {\rho }_{3}=1-O(L^{\frac {1}{2}})\), similar to the m = 2 coarse point case. The same happens for the m = 4 coarse mesh point case, where

and we show the corresponding contraction factor in Figs. 3 (black lines) and 4 (right). Again we see that \(\bar {\rho }_{4}(L) = 1 - O(L^{1/2})\).

We thus conclude that the convergence factor of the BJM with a uniform coarse grid always behaves as \(1-O(L^{\frac {1}{2}})\) independently of the number of coarse points of the grids. This shows that the OSM has a better convergence factor compared to the BJM with uniform coarse grids since its convergence factor behaves as \(1-O(L^{\frac {1}{3}})\), but BJM with uniform coarse grids converges better than classical Schwarz, which has a convergence factor 1 − O(L), see [14]. Is the uniformity of the coarse grids the limiting factor for BJM? We address this in the next section.

3.4 Stretched Coarse Grid

We now consider stretched coarse grids, and start with m = 2 non-uniformly distributed coarse points with grid sizes \({h_{1}^{1}}\), \({h_{1}^{2}}\), \({h_{2}^{1}}\), and \({h_{2}^{2}}\), see Fig. 5 (second and third rows). Using the finite-difference method, we discretize our problem and obtain the two linear systems \(A_{{\varOmega }_{1}}\boldsymbol {v}=T_{2} \boldsymbol {f}\) and \(A_{{\varOmega }_{2}}\boldsymbol {w}=T_{1} \boldsymbol {f}\), where \(A_{{\varOmega }_{1}}\) and \(A_{{\varOmega }_{2}}\) have the block-structures given in (2.2) with the blocks corresponding to the coarse parts of the grids that are

Proceeding as in Section 3.3 we find after some calculations discrete BJM parameters of the form (3.19), but with \(E_{2}(\tilde {h})\) replaced by

with

We now use the relations \({h_{1}^{2}}=1-\beta -{h_{1}^{1}}-h\) and \({h_{2}^{2}}=\alpha -{h_{2}^{1}}-h\), and take the limit for \(h \rightarrow 0\) to get the continuous Robin parameters of the BJM (3.20) with the rational function \(R_{2}(\tilde {L})\) replaced by

which shows that the coefficients in the rational function in η can now be controlled by the mesh parameter \(\tilde {h}^{1}\)! To understand the impact of this new degree of freedom from the coarse mesh, we assume for simplicity that \(\alpha =\frac {1-L}{2}\) and \(\beta =\frac {1+L}{2}\), and \({h_{1}^{1}}={h_{2}^{1}}\) and \({h_{1}^{2}}={h_{2}^{2}}\). Inserting \(\hat {p}_{12}\) and \(\hat {p}_{21}\) into (3.5) and minimizing the maximum of the resulting convergence factor (3.5) over all frequencies η (using the MATLAB function fminunc), we find the best choice for the mesh stretching \(h_{1}^{1\star }(L)\) that makes the convergence factor as small as possible. We show in Fig. 6 the behavior of the Robin parameter \(\hat {p}_{12}(\eta )\) (blue lines) compared to the OSM parameter \(p^{\star }_{12}(\eta )\) (black dashed lines) for different overlaps L. Clearly, the curves are very different from the ones corresponding to the uniform mesh (Fig. 2) which are very stable with respect to the overlap L. In the stretched case, the coarse mesh is strongly influenced by the overlap: the smaller the overlap, the more work needs/can be done in the optimization of the coarse points. The corresponding convergence factors are shown in Fig. 7 (blue lines), where one can now observe how they have two maxima. Hence, the optimization of the coarse points is solved when an equioscillation of the two maxima is obtained. If one compares these plots to the ones presented in Fig. 3, the enormous improvement obtained by optimizing the position of the m = 2 coarse points is clearly visible. This behavior is even more evident if one compares the deterioration of \(\bar {\rho }_{2}\) of Fig. 4 (right) with the corresponding one of Fig. 8 (right - blue line): we observe that now the deterioration of the contraction factors with respect of the overlap is \(\bar {\rho }_{2}(L)=1-O(L^{\frac {1}{4}})\). In Fig. 8 (left - blue line) we show the dependence of the optimized mesh position \(h_{1}^{1\star }\) on L. We observe that

Finally, in Fig. 9 (left) we show the dependence of the frequencies η1 and η2 (the maximum points) on L and we observe that

We prove these numerical observations in the next theorem.

Theorem 3.6

(Optimized stretched grid for m = 2) The Bank–Jimack Algorithm 1 with partition of unity (2.8), overlap L, and two equal subdomains \(\alpha =\frac {1-L}{2}\) and \(\beta =\frac {1+L}{2}\) has for m = 2 and overlap L small the optimized stretched grid points and associated contraction factor

Proof

The system of equations satisfied when the maxima of ρ2(η,L) equioscillate as shown at the optimum in Fig. 3 is

To solve this non-linear system asymptotically, we insert the ansatz \(h_{1}^{1\star }=h_{2}^{1\star }:=C_{h^{1}}\sqrt {L}\) and \(\eta _{1}:=C_{\eta _{1}}L^{-\frac 12}\) and \(\eta _{2}:=C_{\eta _{2}}L^{-\frac 32}\) into the system (3.22), expand for overlap L small and find the relations

The solution is \( C_{\eta _{1}}= C_{\eta _{2}}=8\) and \(C_{h^{1}}=\frac 12\), which leads when inserted with the ansatz into ρ2(η1,L) to (3.21) after a further expansion for L small. □

We thus conclude that the convergence factor of the BJM with an optimized stretched coarse mesh with m = 2 points behaves better than the convergence factor of the OSM with Robin transmission conditions which is \(\rho _{OSM}= 1-O(L^{\frac {1}{3}})\), but worse than OSM with second order (Ventcell) transmission conditions, which is \(\rho _{OSM}= 1-O(L^{\frac {1}{5}})\); see [14].

Let us now consider the case of m = 3 non-uniformly distributed coarse points with sizes \({h_{1}^{1}}\), \({h_{1}^{2}}\), and \({h_{1}^{3}}\), see Fig. 5 (fourth and fifth rows). Notice also the geometric relations \({h_{2}^{3}}=\alpha -(h+{h_{2}^{1}}+{h_{2}^{2}})\) and \({h_{1}^{3}}=1-\beta -(h+{h_{1}^{1}}+{h_{1}^{2}})\). Similar calculations as before (see also [21]) lead after expanding for h going to zero to the continuous Robin parameters of the BJM (3.20) with the rational function \(R_{2}(\tilde {L})\) replaced by

We thus have now two parameters from the stretched mesh from each side to optimize the convergence factor! We set again \(\alpha =\frac {1-L}{2}\) and \(\beta =\frac {1+L}{2}\), and \({h_{1}^{j}}={h_{2}^{j}}\), j = 1,2,3, and inserting \(\hat {p}_{12}\) and \(\hat {p}_{21}\) into the convergence factor (3.5) and minimizing the maximum of the resulting convergence factor over all frequencies η, we find the best choice for the mesh stretching \(h_{1}^{1\star }(L)\), \(h_{1}^{2\star }(L)\) that makes the convergence factor as small as possible, shown in Fig. 7 for a typical example in red. We notice that now three local maxima are present and equioscillate. In Fig. 8 (left), we show how the optimized choice of the stretched mesh parameters \(h_{1}^{1\star }(L)\), \(h_{1}^{2\star }(L)\) decay when the overlap L becomes small, and observe that

Similarly, in Fig. 9 (middle) we find for the maximum points η1, η2, and η3 the asymptotic behavior

Theorem 3.7

(Optimized stretched grid for m = 3) Under the same assumptions as in Theorem 3.6, the Bank–Jimack Algorithm 1 has for m = 3 and overlap L small the optimized stretched grid points and associated contraction factor

Proof

The system of equations satisfied when the maxima of ρ2(η,L) equioscillate as shown at the optimum in Fig. 3 is

Inserting the ansatz \(h_{1}^{1\star }=h_{2}^{1\star }:=C_{h^{1}}L^{\frac 23}\), \(h_{1}^{2\star }=h_{2}^{2\star }:=C_{h^{2}}L^{\frac 13}\), and \(\eta _{1}:=C_{\eta _{1}}L^{-\frac 13}\), \(\eta _{2}:=C_{\eta _{2}}L^{-\frac 33}\), \(\eta _{2}:=C_{\eta _{2}}L^{-\frac 53}\) into the system (3.24), we can solve the system asymptotically for the constants when the overlap L becomes small, which leads to (3.23). □

The analysis for m = 4 stretched coarse points follows the same lines, and we find after a longer computation for the continuous Robin parameters of the BJM (3.20) with the rational function \(R_{2}(\tilde {L})\) replaced by (see also [21] for details)

with the numerator and denominator given by

which leads to the results shown in Figs. 7, 8 (middle), 9 (right), which show that

and for the maximum points we find

Theorem 3.8

(Optimized stretched grid for m = 4) Under the same assumptions of Theorem 3.6, the Bank–Jimack Algorithm 1 has for m = 4 and overlap L small the optimized stretched grid points and associated contraction factor

Proof

We proceed as in the proof of Theorems 3.6 and 3.7. □

These results for optimized stretched coarse grids with m = 2, m = 3, and m = 4 points lead us to formulate the following conjecture:

Conjecture 3.9

The Bank–Jimack Algorithm 1 with partition of unity (2.8), overlap L, and two equal subdomains \(\alpha =\frac {1-L}{2}\) and \(\beta =\frac {1+L}{2}\), has for overlap L small the optimized stretched grid point locations and associated contraction factor

This result shows that one should choose a geometric coarsening related to the overlap to form the outer coarse grid leading to the best performance for the Bank–Jimack domain decomposition algorithm. A practical approach is to just take a geometrically stretched grid with respect to the overlap size,

and then to sum the step sizes hj and scale the result to the size of the outer remaining domain, say \(\hat {L}\), to get the actual mesh sizes \(\tilde {h}_{j}\) to use,

This direct geometric stretching including the last grid cell is preasymptotically even a bit better, as one can see in Fig. 10.

Asymptotic stretching from Conjecture 3.9 (red) compared to the direct geometric stretching in (3.26) (blue) for overlap sizes \(L=\frac {1}{10^{j}}\), j = 2,3,4,5

4 Numerical Experiments

In this section, we present numerical experiments to illustrate our theoretical results. We start with experiments for equally spaced coarse meshes, and compare their performance with the optimized geometrically stretched ones. We consider both a case of constant overlap L and a case where the overlap is proportional to the mesh size. We then also explore numerically the influence of coarsening the meshes in the direction tangential to the interface. In all these cases, we study the performance of the BJM as a stationary method and as a preconditioner for GMRES. We discretize the Poisson equation (2.5) (defined on a unit square Ω = (0,1)2) using n2 (interior) mesh points where n = 2ℓ − 1, for ℓ = 5,6,7, is the number of interior points on the global fine mesh in each direction (Fig. 1). The results corresponding to a uniform coarsening in direction x are presented in Section 4.1. Section 4.2 focuses on optimized stretched coarsening in direction x. Finally, in Section 4.3 we study the effect of the coarsening in both directions x and y.

4.1 Uniform Coarsening in Direction x

We start with the equally spaced coarse mesh case, coarsened only along the x axis. At first, we consider the case with a constant overlap \(L=\frac {1}{16}\), which corresponds to ns = 3,5,9 for ℓ = 5,6,7, respectively. Moreover, to test the methods in the cases studied by our theoretical analysis, we consider m = 2,3,4 coarse mesh points. The results of the numerical experiments are shown in Figs. 11 and 12. The former shows the decay of the error corresponding to the BJM as a stationary iteration, while the latter presents the decay of the GMRES residuals along the iterations. All the plots show that the effect of the number of coarse points on the convergence is very mild. This corresponds to the results discussed in Section 3.3 and shown in Fig. 4 (right): if the overlap L is constant, the contraction factor does not improve significantly if more (uniformly distributed) coarse points are considered. The same effect can be observed in the GMRES convergence.

Decay of the error of the BJM (stationary) iteration for m = 2 (left), m = 3 (middle) and m = 4 (right) uniformly distributed coarse points (in direction x) and constant overlap \(L=\frac {1}{16}\). Notice that, in each plot, the solid curves representing the theoretical convergence estimates coincide since they correspond to the same overlap L

Now, we wish to study the effect of the new partition of unity proposed in [10] and constructed using (2.3). This was used in all the experiments discussed above. If we use the original partition of unity, we already know from [10] that the BJM does not converge as a stationary method. Therefore, we use it only as a preconditioner for GMRES and obtain the results depicted in Fig. 13. By comparing the results of this figure with the ones of Fig. 12, we see that the effect of the new partition of unity is tremendous: GMRES converges much faster and is very robust against mesh refinements. For further information on the influence of the partition of unity on Schwarz methods, see [16].

Decay of the residual of the GMRES iteration preconditioned by BJM with the original partition of unity used in [3] for m = 2 (left), m = 3 (middle) and m = 4 (right) uniformly distributed coarse points (in direction x) and constant overlap \(L=\frac {1}{16}\)

Now, let us now consider an overlap proportional to the mesh size, namely L = 2h, and repeat the experiments already described. The corresponding results are shown in Figs. 14, 15 and 16. As before, we observe that the BJM method (as stationary iteration and as preconditioner) is robust against the number of coarse mesh points. In this case, the convergence deteriorates with mesh refinement since the overlap L gets smaller proportionally to h. Finally, we observe again the great impact of the new partition of unity by comparing Figs. 15 and 16.

Decay of the residual of the GMRES iteration preconditioned by BJM with the original partition of unity used in [3] for m = 2 (left), m = 3 (middle) and m = 4 (right) uniformly distributed coarse points (in direction x) and overlap L = 2h

4.2 Stretched Coarsening in Direction x

In this section, we repeat the experiments presented in Section 4.1, but we optimize the position of the coarse mesh points by minimizing numerically the contraction factor (as in Section 3.4). We begin with the case of constant overlap \(L=\frac {1}{16}\). The corresponding numerical results are shown in Figs. 17 and 18. These results show that optimizing the coarse mesh leads to a faster method which is robust against the mesh refinement. However, due to the constant overlap, there is only little improvement with respect to the constant coarsening case. To better appreciate the effect of the mesh optimization, we consider the case with overlap L = 2h. The corresponding results are shown in Figs. 19 and 20. By comparing these results with the ones of Figs. 14 and 15, one can see clearly the improvement of the BJM convergence: the number of iterations (for both stationary and preconditioned GMRES methods) are essentially halved in the case of finer meshes.

4.3 Coarsening in Direction x and y

We conclude our numerical experiments by studying the effect of a (uniform) coarsening in both x and y directions. As before, we consider both cases \(L=\frac {1}{16}\) and L = 2h. The results shown in Figs. 21, 22, 23 and 24 indicate that a coarsening in direction y does not have a significant impact on the convergence of the BJM method.

5 Conclusions

We provided a detailed convergence analysis of the Bank–Jimack domain decomposition method for the Laplace problem and two subdomains. Our analysis reveals that one should coarsen the outer mesh each subdomain uses in a geometric progression related to the size of the overlap if one wants to get good convergence, and arbitrarily weak dependence on the overlap size is possible (see also [17] for a different technique reaching this). In order for these results to hold one has to use a slightly modified partition of unity in the Bank–Jimack algorithm, without which the convergence of the method is much worse. We obtained our results by an asymptotic process as the subdomain mesh size goes to zero, and thus the results hold at the continuous level.

A possibility for further optimization at the discrete level is the observation that the maxima in the optimized method, shown in Fig. 7, occur for very high values of η which represent a Fourier frequency, and thus may lie outside of the frequencies representable on the mesh used. This can be seen quantitatively for example from the stretched case for m = 4, where the largest \(\eta _{4}=O(L^{-\frac 74})\), and the highest Fourier frequency can be estimated as η = O(h− 1), see [14]. Hence, if the overlap is of the order of the mesh size, L = h, η4 would be already much larger than what the grid can represent, and we see in fact from (3.25) that only half the number of bumps would need to be taken in consideration for the optimization.

Notes

BJM is also defined for non-self-adjoint problems. We assume this here only to simplify the notation.

This problem does not appear in the formulation based on Lagrange multipliers proposed in [5].

In our numerical experiments, we will test also coarsening in both directions.

Notice that the first coarse points, namely the point number n1 + ns + 1 for the first mesh and the point number m1 for the second mesh, are located at distance h from the interfaces. This choice is motivated by the fact that we will define discrete (finite-difference) derivatives across these points and then in Section 3 take limits for \(h\rightarrow 0\), while keeping the numbers m1 and m2 of coarse points fixed.

To evaluate the convergence factor numerically one needs to factor out an exponential in the hyperbolic trigonometric functions to avoid overflow.

Note that one of these grid points was merged into the interface when taking the limit as h goes to zero, so the grid has m = 2 mesh cells of the same size, with only m − 1 = 1 grid point in the middle left. The same also happens for other values of m, there are m mesh cells, but only m − 1 grid points separating them in the outer grid.

References

Bank, R.E.: Marching algorithms for elliptic boundary value problems. ii: the variable coefficient case. SIAM J. Numer. Anal. 14, 950–970 (1977)

Bank, R.E., Holst, M.: A new paradigm for parallel adaptive meshing algorithms. SIAM J. Sci. Comput. 22, 1411–1443 (2000)

Bank, R.E., Jimack, P.K.: A new parallel domain decomposition method for the adaptive finite element solution of elliptic partial differential equations. Concurr. Comput. Pract. Exp. 13, 327–350 (2001)

Bank, R.E., Rose, D.J.: Marching algorithms for elliptic boundary value problems. i: the constant coefficient case. SIAM J. Numer. Anal. 14, 792–829 (1977)

Bank, R.E., Vassilevski, P.S.: Convergence analysis of a domain decomposition paradigm. Comput. Vis. Sci. 11, 333–350 (2008)

Bourgat, J.F., Glowinski, R., Tallec, P.L., Vidrascu, M.: Variational formulation and algorithm for trace operator in domain decomposition calculations. In: Domain Decomposition Methods, pp 3–16. SIAM, Philadelphia, PA (1989)

Chaouqui, F., Ciaramella, G., Gander, M.J., Vanzan, T.: On the scalability of classical one-level domain-decomposition methods. Vietnam J. Math. 46, 1053–1088 (2018)

Ciaramella, G., Gander, M.J.: Analysis of the parallel Schwarz method for growing chains of fixed-sized subdomains: Part I. SIAM J. Numer. Anal. 55, 1330–1356 (2017)

Ciaramella, G., Gander, M.J.: Iterative Methods and Preconditioners for Systems of Linear Equations. Fundamentals of Algorithms Series. SIAM, Philadelphia, PA (2022)

Ciaramella, G., Gander, M.J., Mamooler, P.: The domain decomposition method of Bank and Jimack as an optimized schwarz method. In: Haynes, R. et al. (eds.) Domain Decomposition Methods in Science and Engineering XXV. Lecture Notes in Computational Science and Engineering, vol. 138, pp 285–293. Springer, Cham (2020)

Efstathiou, E., Gander, M.J.: Why restricted additive Schwarz converges faster than additive Schwarz. BIT Numer. Math. 43, 945–959 (2003)

Farhat, C., Mandel, J., Roux, F.X.: Optimal convergence properties of the FETI domain decomposition method. Comput. Methods Appl. Mech. Eng. 115, 365–385 (1994)

Farhat, C., Roux, F.-X.: A method of finite element tearing and interconnecting and its parallel solution algorithm. Int. J. Numer. Methods Eng. 32, 1205–1227 (1991)

Gander, M.J.: Optimized Schwarz methods. SIAM J. Numer. Anal. 44, 699–731 (2006)

Gander, M.J.: Schwarz methods over the course of time. Electron. Trans. Numer. Anal. 31, 228–255 (2008)

Gander, M.J.: Does the partition of unity influence the convergence of schwarz methods?. In: Haynes, R. et al. (eds.) Domain Decomposition Methods in Science and Engineering XXV. Lecture Notes in Computational Science and Engineering, vol. 138, pp 3–15. Springer, Cham (2020)

Gander, M.J., Golub, G.H.: A non-overlapping optimized schwarz method which converges with an arbitrarily weak dependence on H. In: Fourteenth International Conference on Domain Decomposition Methods (2002)

Gander, M.J., Halpern, L., Nataf, F.: Optimal convergence for overlapping and non-overlapping schwarz waveform relaxation. In: Lai, C.-H., Bjørstad, P., Cross, M., Widlund, O. (eds.) Eleventh International Conference of Domain Decomposition Methods (1999)

Gander, M.J., Zhang, H.: A class of iterative solvers for the Helmholtz equation: Factorizations, sweeping preconditioners, source transfer, single layer potentials, polarized traces, and optimized Schwarz methods. SIAM Rev. 61, 3–76 (2019)

Lions, P.-L.: On the schwarz alternating method III: A variant for nonoverlapping subdomains. In: Third International Symposium on Domain Decomposition Methods for Partial Differential Equations, vol. 6, pp 202–223. SIAM, Philadelphia, PA (1990)

Mamooler, P.: The Domain Decomposition Method of Bank and Jimack as an Optimized Schwarz Method. PhD thesis, University of Geneva (2019)

Mandel, J.: Balancing domain decomposition. Int. J. Numer. Methods Biomed. Eng. 9, 233–241 (1993)

Mandel, J., Brezina, M.: Balancing domain decomposition for problems with large jumps in coefficffients. Math. Comput. 65, 1387–1401 (1996)

Schwarz, H.A.: ÜBer einen grenzübergang durch alternierendes Verfahren. Vierteljahrsschrift der Naturforschenden Gesellschaft in Zürich 15, 272–286 (1870)

St-Cyr, A., Gander, M.J., Thomas, S.J.: Optimized multiplicative, additive and restricted additive Schwarz preconditioning. SIAM J. Sci. Comput. 29, 2402–2425 (2007)

St-Cyr, A., Gander, M.J., Thomas, S.J.: Optimized restricted additive schwarz methods. In: Widlund, O.B., Keyes, D.E. (eds.) Domain Decomposition Methods in Science and Engineering XVI. Lecture Notes in Computational Science and Engineering, vol. 55, pp 213–220. Springer, Berlin, Heidelberg (2007)

Süli, E.: An Introduction to the Numerical Analysis of Partial Differential Equations, vol. February. Lecture notes, University of Oxford (2005)

Funding

Open access funding provided by University of Geneva.

Author information

Authors and Affiliations

Corresponding author

Additional information

Dedicated to the 70th birthday of Alfio Quarteroni.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ciaramella, G., Gander, M.J. & Mamooler, P. How to Best Choose the Outer Coarse Mesh in the Domain Decomposition Method of Bank and Jimack. Vietnam J. Math. 50, 867–899 (2022). https://doi.org/10.1007/s10013-022-00571-6

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10013-022-00571-6