Abstract

HTTP Adaptive Streaming (HAS) is a streaming video technique widely used over the Internet. However, it has many drawbacks that degrade its user Quality of Experience (QoE). Our investigation involves several HAS clients competing for bandwidth inside the same home network. Studies have shown that managing the bandwidth between HAS clients using traffic-shaping methods improves the QoE. Additionally, the TCP congestion control algorithm in the HAS server may also impact the QoE, because every congestion control variant has its own method to control the congestion window size. Based on previous work, we describe two traffic-shaping methods, the Hierarchical Token Bucket shaping Method (HTBM) and the Receive Window Tuning Method (RWTM), as well as four popular congestion control variants: NewReno, Vegas, Illinois, and Cubic. In this paper, our objective is to provide a detailed comparative evaluation of combining these four congestion control variants with these two shaping methods. The main result indicates that Illinois with RWTM offers the best QoE without causing congestion. Results were validated through experimentation and objective QoE analytical criteria.

Similar content being viewed by others

1 Introduction

HTTP Adaptive Streaming (HAS) is a streaming video technique based on downloading video segments of short duration. These segments are called chunks, and they are streamed from a HAS server to a HAS client through the network. Each chunk is encoded at multiple quality levels. After requesting a chunk by an HTTP GET request message, when the chunk is received, the player on the client side stores it in a playback buffer. The HAS player operates in one of two states: a buffering state and a steady state. During the first state, the player requests a new chunk as soon as a previous chunk has been downloaded, until the playback buffer is filled. However, during the steady state, the player requests chunks periodically in order to maintain a constant playback buffer size. The steady state includes periods of activity (ON periods) followed by periods of inactivity (OFF periods) [9, 11].

The Quality of Experience (QoE) of an HTTP-adaptive stream depends primarily on three criteria:

-

1.

Video quality level stability [1, 11]: a frequent change of video quality level bothers the user. Therefore, quality level fluctuation should be avoided to improve the QoE.

-

2.

Fidelity to optimal quality level selection: the user prefers to watch the best video quality level, when possible. Therefore, the HAS player should select the optimal quality level, which is the highest feasible quality level allowed by the available bandwidth.

-

3.

Convergence speed: the user prefers to view the optimal quality level as soon as possible. Accordingly, the HAS player should rapidly select the optimal quality level. The delay that the player requires before the optimal quality level has been attained is called the convergence speed [1].

We studied a general use case in which several HAS clients are located in the same home network. In this use case, QoE degradations can be grouped into two main causes:

-

Congestion events: video packets sent from the server to the client pass through many network devices. Each device has one or many queues that use a queuing discipline to schedule network packets. The implemented algorithm decides whether to route or drop incoming packets in order to avoid network congestion. The main bottleneck occurs near the home gateway, and more precisely, in the link between DSLAM and home gateway [12]. In fact, the DSLAM may considerably reduce the bandwidth offered to the home network, and it is more likely to drop packets than any other network device. To minimize network effects on the delivery, the TCP protocol implements a “congestion control algorithm” on the sender side, which reduces the bitrate of packets sent to the receiver when a packet is lost. However, this bitrate reduction may degrade QoE. In addition, there are many congestion control variants with different methods of managing the congestion window size, cwnd, and detecting congestion events. These differences may change the QoE between variants.

-

Concurrence with other streams—OFF periods issue: the HAS player estimates the available bandwidth by computing the download bitrate for each chunk when it has finished downloading; this is done by dividing the chunk size by its download duration. As a consequence, the player cannot estimate the available bandwidth during OFF periods, because no data are being received. When a HAS stream concurs with other streams in the same home network, accurate bandwidth estimation becomes more difficult. For example, when two HAS streams are competing for bandwidth and the ON period of the first player coincides with the OFF period of the second player, the first player will overestimate its available bandwidth. This overestimation may lead the player to select a higher quality level for the next chunk. This selection may lead to a congestion event and a resulting fluctuation of quality levels between the two players. Research has demonstrated that traffic shaping can considerably limit this problem [1, 2, 11, 21, 25]. Traffic shaping consists of selecting a target bitrate for each HAS session in the home network based on bitrates of the available quality levels and the available bandwidth. It then shapes the outgoing traffic to each HAS client based on the selected target bitrate.

The objective of our study is to combine two solution categories, TCP congestion control variants to reduce the negative effects of congestion events, and traffic-shaping methods, to restrict the drawbacks of the concurrence between HAS streams in the home gateway. The optimal combination will have the highest grade of QoE, i.e., the best possible video quality level stability, best fidelity to optimal quality level selection, and best convergence speed.

We note that there are many implementations of HAS that are currently deployed, such as Dynamic Adaptive Streaming over HTTP (MPEG-DASH), Microsoft Smooth Streaming (MSS), Apple HTTP Live Streaming (HLS), and Adobe HTTP Dynamic Streaming (HDS). For this reason, we wish to emphasize that in this paper we only choose HAS traffic-shaping methods that do not change the HAS implementation either in the player or in the server. This choice provides adaptability to any HAS client or server implementations and thus offers a larger scope of application of our presented work.

The remainder of this paper is organized as follows. In Sect. 2, we describe the background and related works. In Sect. 3, we detail our methodology and experimental implementation. Section 4 presents the results and discussion. In Sect. 5, we conclude the paper and suggest future directions for our work.

2 Background and related work

In this section, we describe the TCP congestion control variants and the HAS traffic-shaping methods used in this work, and explain the distinctions between them.

2.1 TCP congestion control variants

All TCP congestion control variants have two common phases: a slow start phase and a congestion avoidance phase. The slow start phase consists of increasing cwnd rapidly by one maximum segment size (MSS) for each received acknowledgment (ACK), i.e., the cwnd value is doubled for each round trip time (RTT). This rapidity has an objective of reaching a high bitrate within a short duration. When the cwnd size exceeds a threshold called ssthresh, the TCP congestion control algorithm switches to the second phase: the congestion avoidance phase. This phase slowly increases the cwnd until a congestion event is detected.

TCP congestion control variants are classified according to two main criteria [13]:

-

1.

The first criterion is the increase of cwnd during the congestion avoidance phase and the decrease of cwnd immediately following congestion detection. Generally, the increase is additive, and the cwnd size increases by one MSS for each RTT. For decreasing cwnd, the standard variants employ multiplicative decreasing, i.e., the cwnd size is weighted by a multiplicative decrease factor (1−β), where 0 < β < 1. This category is called the Additive Increase Multiplicative Decrease (AIMD) approach. Other variants using different techniques are classified as non-AIMD approaches.

-

2.

The second criterion is the method by which the algorithm detects congestion. We distinguish three modes: loss-based, delay-based, and loss–delay-based modes. The loss-based mode considers any detection of packet loss as a congestion event. A majority of TCP congestion control variants that use the loss-based mode consider receiving three duplicated ACKs from the receiver as an indication of a packet loss and, as a consequence, as an indication of a congestion event. However, the delay-based mode considers a significant increase in the RTT value as the only indication of a congestion event. The third mode, the hybrid mode, combines the delay-based and loss-based modes to improve congestion detection.

In order to facilitate our study, we chose four well-known congestion control variants, and we classify them according to the two criteria cited above:

-

NewReno [3]: this variant is designed as the standard TCP congestion control approach. It uses the AIMD approach with the loss-based mode. Two mechanisms are employed immediately following congestion detection: fast retransmit and fast recovery [14]. Fast retransmit consists of performing a retransmission of what appears to be the missing packet (i.e., when receiving three duplicate ACKs), without waiting for the retransmission timer to expire. After the fast retransmit algorithm sends this packet, the fast recovery algorithm governs the transmission of new data until a non-duplicate ACK arrives. The reason for using fast recovery is to allow the continual sending of packets when the receiver is still receiving packets, even if some packets are lost.

-

Vegas [4]: this non-AIMD variant is an Additive Increase Additive Decrease (AIAD) variant. It is a delay-based variant that accurately estimates RTT for every sent packet and adjusts cwnd size based on actual throughput and expected throughput. If RTT increases, cwnd decreases by one MSS, and vice versa. Vegas is the smoothest TCP congestion control variant [15]; it is able to allocate a fair share of bandwidth with minimal packet loss events.

-

Illinois [5]: This is a TCP loss-delay-based congestion variant that employs a particular classification of the AIMD approach, C-AIMD, which involves a concave window size curve. Packet loss is used for primary congestion inference to determine the direction (increase or decrease) of cwnd, with a delay for secondary congestion inference to adjust the value of the window size change. More precisely, when the average queueing delay is small (small increase of RTT), the sender supposes that the congestion is not imminent and specifies a large additive increase α and small multiplicative decrease β. In the opposite case, when the average queuing delay is large (large increase of RTT), the sender supposes that the congestion is imminent and selects a small α and large β. Illinois measures RTT for each received ACK to update α and β. Moreover, it retains the same fast recovery and fast retransmit phases as NewReno. Illinois was designed for high-speed and high-latency networks, where the bandwidth-delay product is relatively high. Consequently, it enables higher throughput than NewReno.

-

Cubic [6]: this variant is loss-based, but it uses a non-AIMD approach. A cubic function is used to increase the cwnd in the congestion avoidance phase immediately after the fast recovery phase, and a multiplicative decrease approach is used to update the cwnd after congestion event detection. The cubic function has a concave region followed by a convex region. The plateau between the two regions, or the inflection point (denoted by W max), corresponds to the window size just before the last congestion event. The cubic function enables a slow growth around W max to enhance the stability of the bandwidth, and enables a fast growth away from W max to improve scalability of the protocol. Upon receiving an ACK during the congestion avoidance phase at time t, Cubic computes the new value of cwnd corresponding to the cubic function at time t. As a consequence, Cubic uses the time instead of the RTT to increase the cwnd. Cubic employs a new slow-start algorithm called HyStart [8] (hybrid slow start), which finds a safe exit point to the slow start, the ssthresh value, at which the slow start can finish and safely move to congestion avoidance before cwnd overshoot occurs. HyStart employs the RTT delay increase and the inter-arrival time between consecutive ACKs to identify the safe exit point, and to modify the ssthresh value [8]. This variant does not make any change to the fast recovery and fast retransmit of standard NewReno. Cubic is the smoothest loss-based congestion control variant [15]: it is characterized by a congestion window that falls less abruptly and that remains constant over a wide range of elapsed time. It is also designed for high-speed and high-latency networks. Cubic is implemented and used by default in Linux kernels since version 2.6.19.

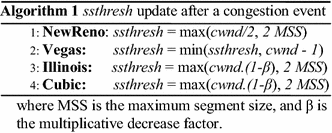

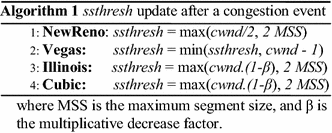

For precise analysis, based on the descriptions of congestion control algorithm variants and their source code, we describe below the update of the congestion window size, cwnd, and the slow start threshold value, ssthresh, for different events:

-

Congestion events: there are two cases

-

When a congestion event is detected, the Fast Recovery/Fast Retransmit (FR/FR) phase reduces the ssthresh value and sets the cwnd value to ssthresh+3, for the purpose of remaining in the congestion avoidance phase. The ssthresh value after a congestion event is updated as follows:

-

When the retransmission timeout expires before receiving any ACK of the retransmitted packet, ssthresh is reduced as indicated in Algorithm 1, and cwnd is set to a small value and restarts from the slow start phase.

-

-

Idle period: when the server sends a packet after an idle period that exceeds the retransmission timeout (RTO), cwnd and ssthresh are computed for the four congestion control variants as in Algorithm 2:

In the HAS context, an idle period coincides with an OFF period between two consecutive chunks. An OFF period whose duration exceeds RTO is denoted by OFF*.

Furthermore, we additionally want to emphasize that the rate of a TCP connection can be approximated, if we assume that transients due to slow start and fast recovery can be neglected, by \(\hbox{min} \,(cwnd,\,\,rwnd)/RTT\) [26], where rwnd is the TCP receive window indicated by the receiver and RTT is the round trip time between the sender and the receiver. Obviously, this approximation is valid for the four TCP congestion control variants described above. As we have shown, these variants employ different algorithms to modify the congestion window, cwnd, during the congestion avoidance phase and after congestion detection. Accordingly, the generated rate as well as its variation over time is different from one variant to another.

2.2 Traffic-shaping methods

Many studies have been conducted to improve HAS performance for cases in which several HAS clients are located in the same home network. The ON–OFF periods characterizing the HAS player in its steady state involve three substantial problems when HAS players are competing: player instability, unfairness between players, and bandwidth underutilization [9]. The cause of these problems is the inability to estimate the available bandwidth during the OFF period, because no data are being received.

Three types of solutions are proposed to improve HAS user experience: client-based, server-based, and gateway-based solutions. They differ with respect to the device in which the shaping solution is implemented. Below, we cite relevant methods for each type of solution:

-

The client-based solution involves only the HAS client in order to reduce its OFF period durations. One of the recent client-based methods is proposed in the FESTIVE method [7]. It randomizes the events of chunk requests inside the player in order to reduce the periodicity of ON periods. Consequently, most of the incorrect estimations of bandwidth could be avoided when several HAS clients compete for bandwidth. However, this method is not efficient enough to prevent all incorrect estimations. In addition, it modifies the HAS player implementation, which is contradictory to our specifications described in Sect. 1. Moreover, the client-based solution does not provide the coordination between HAS clients that is required to further improve bandwidth estimations and QoE.

-

The server-based solution involves only the HAS server. It proceeds according to two steps: first, finding the optimal quality level for each provided HAS flow, and second, shaping the sending rate of the HAS server according to the encoding rate of this level. In [25], the authors propose a server-based method: it consists in detecting the oscillations between quality levels on the server side and deciding which optimal quality level must be selected. Although this method improves the QoE, it cannot conveniently respond to the typical use cases of several concurrent HAS clients that do not share the same HAS server: the shared link is on the HAS client side. Moreover, this server-based solution requires an additional processing task, which becomes burdensome and costly when many HAS clients are demanding video contents from the same HAS server. In addition, the server-based solution is unable to acquire information about the other competing flows with their corresponding HAS clients. Hence, the selection of the optimal quality level at the server is a vague estimation. This estimation is less accurate than a quality level selection based on a sufficient knowledge about the access network of the corresponding HAS client(s).

-

The gateway-based solution that consists of applying the HAS traffic shaping in the gateway is more convenient than client-based and server-based solutions; in fact, the gateway can acquire information about the HAS traffic of all clients of the same home network, which is not possible either at the server or at the client. In addition, the gateway-based solution is able to perform traffic shaping without inducing any modification of HAS implementation code either in the server or the client. Hence, in this paper, our evaluations only consider the gateway-based shaping solution. For the gateway-based solution, the authors assumed that the home gateway can intercept the manifest file during the HAS session initialization and can obtain the characteristics of the available video quality levels of every session. This solution introduces a bandwidth manager in the gateway that defines a shaping rate for each connected active HAS client in the home network. The bandwidth manager should be able to update the number of active connected HAS clients in the home gateway by sniffing the SYN and FIN flags in TCP packets. Therefore, the difference between the gateway-based methods is the manner in which they shape the bandwidth for each HAS session. The two main gateway-based methods found in the literature and used in our comparative study are HTBM [1] and RWTM [2]. They are briefly described in the following.

2.2.1 HTBM

HTBM uses the Hierarchical Token Bucket (HTB) queuing discipline to shape the HTTP-adaptive streams. HTB is integrated in Linux with the traffic controller tool of the iproutes2 utility package. It uses one link, designated as the parent class, to emulate several slower links, designated as the children classes. Different types of traffic may be served by the emulated links. HTB is exploited by the bandwidth manager of HTBM in order to define a child class for each HAS session. HTB also employs the tokens and buckets concept, combined with the class-based system for better control over traffic and for shaping in particular [16]. A fundamental component of the HTB queuing discipline is the borrowing mechanism: children classes borrow tokens from their parent once they have exceeded the shaping rate. A child class will continue to try to borrow until it reaches a defined threshold of shaping, at which point it will begin to queue packets that will be transmitted when more tokens become available.

Accordingly, using HTBM enables the shaping of the HTTP-adaptive streams for each HAS session in the gateway, as indicated by the bandwidth manager, by merely delaying packets that are received from the HAS server. The authors of [1] indicate that HTBM improves the user’s QoE; it improves the stability of video quality level, the fidelity to optimal quality level, and the convergence speed.

2.2.2 RWTM

The second shaping method, Receive Window Tuning Method (RWTM), is a gateway-based shaping method that was proposed in [2]. It is implemented in the TCP layer and is based on TCP flow control at the receiver side. Indeed, during a TCP session, each receiver specifies the maximum number of bytes that it is able to buffer. This value is called the receiver’s advertised window, denoted by rwnd, and its size is specified in the rwnd field in the header of each TCP packet sent from the receiver to the sender. The sender receives the rwnd size from the receiver and limits its sending window, W, so that the number of packets sent in each RTT does not exceed rwnd; W = min(rwnd, cwnd).

The RWTM method consists of modifying, in the gateway, the rwnd field of each TCP ACK packet sent from a HAS client C to its HAS server S in order to limit the sending rate of the HAS server to rwnd/RTT C–S . RWTM uses the defined shaping rate of the bandwidth manager for each connected active HAS client, and estimates the RTT C–S value to compute the next value of rwnd. We note that the estimation of RTT C–S is accomplished using only TCP ACK packets sent from HAS clients to the HAS server by using passive estimation. The rwnd is computed once for each ON period. RWTM was tested in [2] and [21], and results indicated that RWTM enhances the user’s QoE: it improves the stability, the fidelity, and the convergence speed.

In [21], we showed that RWTM outperforms HTBM when using the Cubic variant as congestion control on the server side. However, due to the dissimilarity of TCP variants, an extended evaluation using other variants and additional scenarios will give us a better understanding of the interaction between shaping methods and TCP variants.

3 Methodology and experimental implementation

In this section, we provide a description of the metrics used to measure performance, the scenarios that cover many operating conditions, and the framework that has been developed to emulate our use case.

3.1 Performance metrics

We define three metrics in this section that we use to evaluate the QoE and to understand how each combination behaves. To do so, we present in Table 1 the main parameters that are used to define the metrics.

We note that the optimal quality level value, L C,opt(i), corresponds to the quality level that the client C should select at time i under the shaping rate defined by the bandwidth manager. This shaping rate is chosen in a manner that ensures the fairest share of the available home bandwidth between clients with prioritization to achieve the maximum use of the available home bandwidth. This entails that some clients could have a higher quality level than others when their fair share of available home bandwidth is not sufficient to maximize the use of the available home bandwidth. Below, we define analytically three performance metrics that describe the three criteria of QoE mentioned in Sect. 1.

3.1.1 Video quality-level stability

Many research studies indicate that HAS users are likely to be sensitive to frequent and significant quality-level switches [22, 23]. We use the instability metric, \(IS_{C} (K)\), which measures the instability for client C for a K-second test duration in conformity with its description in [7] as the following equation:

where \(IS_{C} (K)\) is the weighted sum of all encoding bitrate switching steps observed within the last K seconds divided by the weighted sum of the encoding bitrates selected in the last K seconds. The lower the \(IS_{C} (K)\) value is, the higher the stability of the video quality level is.

More precisely, this formula uses the encoding bitrates of the selected quality levels over time, \(Q_{C} (i)\), instead of the quality level index over time, \(L_{C} (i)\). In fact, the absolute difference between two encoding bitrates that are displayed on the client side during two successive seconds, \(K - i - 1\) and \(K - i\), and denoted by \(\left| {Q_{C} (K - i) - Q_{C} (K - i - 1)} \right|\), gives more significant indication of the observed video quality change than when using the absolute difference between the quality level indexes. Hence, we can offer an adequate representation of the user expectation.

Moreover, in this formula, the authors of [7] use the weight function \(w\left( i \right) = K - i\) in order to add a linear penalty to more recent quality level switches. In fact, their justification is that the switching of quality level is becoming more disturbing for users’ experience when the video playback position is far from the beginning of the video stream.

3.1.2 Fidelity to optimal quality level

In [7], the authors define two additional goals to achieve within our use case: (1) fairness between players: players should be able to converge to an equitable allocation of network resources; (2) efficiency among players: players should choose the highest feasible quality levels to maximize the user’s experience. Furthermore, in [9], the authors address the bandwidth underutilization issue that may prevent the possible improvement of QoE. So, maximizing the use of bandwidth can be considered as a QoE criterion. Accordingly, in order to provide one formula that satisfies these three criteria, we define our metric called infidelity to optimal quality level.

The infidelity metric, \({\text{IF}}_{\text{C}} (K)\), of client C for a K-second test duration, measures the duration of time over which the HAS client C requests optimal quality:

The lower the \({\text{IF}}_{\text{C}} (K)\) value is, the higher the fidelity to optimal quality is.

Here, we note that the theoretical optimal quality level \(L_{C,opt} (i)\) aims to resolve the dilemma between the two criteria of maximum use and fair share of bandwidth between HAS players. In fact, considering that only the fair share of bandwidth may cause bandwidth underutilization, in some cases it may leave some residual bandwidth allocated to nobody. Hence, based on the optimal quality level, the value of the infidelity metric is representative of user expectation.

3.1.3 Convergence speed

The convergence speed metric was previously defined in [1]. We provide an analytical definition as follows:

This metric is the time that the player of HAS client C takes to reach and remain at the optimal quality level for at least T seconds during a K-second test duration. The reason of selecting this criterion for evaluating the QoE in our use case is observations made in [1, 2, 9, 21]: they show that when HAS players compete for bandwidth, the convergence to optimal quality level may take several seconds or may be very difficult to be achieved. Accordingly, the speed of this convergence is a valuable QoE criterion for our evaluations. The lower the \(V_{C,T} (K)\) value is, the faster the convergence to the optimal quality level is.

Additionally, we define two other metrics (CNG and fr OFF* , described below) that enable us to measure the reaction of home gateway and HAS players.

3.1.4 Congestion rate

The congestion detection events influence to an extreme degree both the QoS and QoE of HAS because the server decreases its sending rate after each congestion detection. Hence, by analyzing the code description of the four TCP congestion control algorithms (NewReno, Vegas, Illinois, and Cubic), we found that the congestion event appears when the value of parameter slow start threshold (ssthresh) decreases (see Algorithm 1). Hence, we define a metric called congestion rate, denoted by \(CNG_{C - S} (K)\), that computes the rate of congestion events that are detected on the server side, corresponding to the HAS flow between client C and server S during a K-second test duration as shown in Eq. (4):

where \(D_{C - S}^{ssthresh} (K)\) is the number of times the ssthresh has been decreased for the C–S HAS session during the K-second test duration.

3.1.5 Frequency of OFF* periods per chunk

This metric is important to measure the frequency of OFF* periods. An OFF period whose duration exceeds RTO is denoted by OFF* (as indicated in Sect. 2.1). This frequency is equal to the total number of OFF* periods divided by the total number of downloaded chunks. This metric is denoted by fr OFF* .

For result analysis, we use the QoE metrics to quantitatively discuss the user’s experience, and use CNG and fr OFF* metrics to explain the performance of each combination of traffic method and congestion variant.

3.2 Scenarios

We define five scenarios that are typical of concurrence between HAS clients in the same home network (scenarios 1, 2, and 3), and how the HAS client reacts when some changes occur (scenarios 4 and 5):

-

1.

Both clients start to play simultaneously and continue for 3 min. This scenario illustrates how clients compete.

-

2.

Client 1 starts to play, the second client starts after 30 s, and both continue together for 150 s. This scenario shows how the transition from one client to two clients occurs.

-

3.

Both clients start to play simultaneously, client 2 stops after 30 s, and client 1 continues alone for 150 s. This scenario shows how a transition from two clients to one takes place.

-

4.

Only one client starts to play and continues for 3 min. At 30 s, we simulate a heavy congestion event with a provoked packet loss of 50 % of the received packets at the server over a 1-s period. This scenario shows the robustness of each combination against the congestions that are induced by external factors, such as by other concurrent flows in the home network.

-

5.

Only one client is playing alone for 3 min. We vary the standard deviation value of RTT C–S (round trip time between the client and the server) for each set of tests. This scenario investigates the robustness against RTT C–S instability.

The test duration was selected to be 3 min to offer sufficient delay for players to stabilize.

3.3 General framework

We propose a testbed architecture presented in Fig. 1 that emulates our use case described in Sect. 1. The choice of only two clients is sufficient to show the behavior of concurrence between many HAS flows in the same home network.

In this section, we describe the configurations of each component presented in Fig. 1.

3.3.1 HAS clients

We used two Linux machines as HAS clients. We developed an emulated player in each client that reproduces the behavior of the HAS player without decoding and displaying a video stream. The playback buffer size is specified to be 15 chunks, and the chunk duration is 2 s. In [18], the authors indicate that the bitrate adaptation algorithm depends on bandwidth estimation and playback buffer occupancy. Furthermore, players also define an aggressiveness level, as described in a previous study [19]. For example, the Netflix player is more aggressive than the Smooth Streaming player [19]. An aggressive player enables the user to ask for a video quality level that is slightly higher than the estimated available bandwidth. Moreover, aggressiveness is important for minimizing the “downward spiral effect” phenomenon [20]. This phenomenon consists of underestimating the available bandwidth, which leads to a lower video quality level selection. Accordingly, taking into consideration [18, 19], and [20], we used a bitrate adaptation algorithm based on bandwidth estimation in which we define an aggressiveness ρ C (t) at time t that depends on playback buffer occupancy as follows:

where \(R_{\text{C}} (t)\) is the filling level of the playback buffer at time t, B C is the size of the playback buffer of client C, and σ is the aggressiveness constant. The fuller the playback buffer is, the closer to σ the aggressiveness is.

All tests use a HAS player with an aggressiveness constant of σ = 0.2. This enables the HAS player to add a maximum of 20 % to its available BW estimation.

3.3.2 Home network

In the modeled home network, the clients are connected directly to the gateway. The total download bitrate, or home available bandwidth, is limited to 8 Mbps. We choose this value because it is lower than twice the video encoding bitrate of the highest quality level. Accordingly, two clients in the home network cannot select the highest quality level at the same time. In this case, one client should select quality level no 4 and the other should select the quality level no 3 as optimal qualities. We do not test a use case in which two clients have the same optimal quality level, because this is a very specific case, and dissimilarity between optimal quality levels is more general.

3.3.3 Home gateway

The emulated home gateway consists of a Linux machine configured as a network bridge to forward packets between the home network and the best effort network.

We emulate the queuing discipline of the home gateway by using the Stochastic Fairness Queueing discipline (SFQ) [24]. SFQ is a classless queuing discipline that we configured using the Traffic Controller emulation tool (tc). SFQ schedules packets based on flow identification (the source and destination IP addresses and the source port) and injects them into hash buckets during the enqueuing process. Each bucket represents a unique flow. Additionally, SFQ employs Round Robin fashion for dequeuing packets by taking into consideration the bucket classification. The goal of using buckets for enqueuing and Round Robin for dequeuing is to ensure fairness between flows so that the queue is able to forward data in turn and prevents any single flow from drowning out the remaining flows. We also configured SFQ in order to support the Drop Tail queue management algorithm when the queue becomes full. Hence, this configuration of the queuing discipline is classified as a Drop Tail class. The queue length of SFQ, which is indicated by parameter limit within the tc tool, is set to the bandwidth-delay product.

In the gateway, we implemented a bandwidth manager that selects a shaping rate for each connected active HAS client in a manner such that each client should attain its optimal quality level described in Sect. 3.1. The shaping rate for each client was chosen as indicated in [1] and [2]; it is 10 % higher than the encoding bitrate of the optimal quality level for each client. The two shaping methods HTBM and RWTM are implemented in the gateway, and they shape bandwidth in accordance with the decisions of the bandwidth manager.

3.3.4 Best effort network

The best effort network is characterized by the presence of network devices to route packets. The round trip time RTT C–S (t) in a best effort network is modeled as follows [10]:

where a C–S is a fixed propagation delay between client C and server S, q(t) is the queue length of a single congested router (the home gateway in our use case), and ς is the transmission capacity of the router. q(t)/ς models the queuing processing delay. To comply with Eq. (6), we used the normal distribution with a mean value a C–S and a standard deviation equal to 0.07.a C–S . The standard deviation emulates the queuing processing delay q(t)/ς. This emulation is accomplished by using the “netem delay” parameter of the traffic controller tool in the gateway machine interface.

3.3.5 HAS server

The HAS server is modeled by an HTTP Apache Server installed on a Linux machine operating on Debian version 3.2. We can change the congestion control variant of the server by varying the parameter net.ipv4.tcp_congestion_control. All tests use five video quality levels denoted by 0, 1, 2, 3, and 4. Their encoding bitrates are constant and equal to 248, 456, 928, 1632, and 4256 kbps, respectively. HTTP version 1.1 is used to enable a persistent connection.

4 Results

In this section, we compare the different combinations of TCP congestion control variants in the server and shaping methods in the gateway in the five scenarios. Altogether, we evaluate eight combinations: four TCP congestion control variants combined with two shaping methods. We evaluate QoE by discussing the QoE metrics IS, IF, and V. We also use the CNG and fr OFF* metrics to observe how each combination reacts. For each scenario, we repeated each test 60 times and we computed an average value of each metric. The number of 60 runs is justified by the fact that the difference of the average results obtained after 40 and 60 runs are lower than 6 %. This observation was verified for all scenarios. Accordingly, 60 runs are sufficient to achieve statistically significant results.

This section is organized as follows. First, we begin by evaluating performance in scenario 1, and we analyze the variation of cwnd for each combination. Second, we evaluate the performance of scenarios 2 and 3 to study the effect of transition from one to two clients (and vice versa) on the performance of each studied combination. Third, we present the performance of scenario 4 to measure the robustness of the combinations against induced congestions. Fourth, we study scenario 5 to measure the robustness against the instability of RTT C–S for each combination. Finally, we discuss all results by presenting a summary of observations and defining the combination that is suitable for each particular case.

4.1 Scenario 1

In this scenario, two clients are competing for BW and are playing simultaneously. The available home bandwidth permits only one client to have the highest quality level, no 4. We make the assumption that the client who gets the highest quality level no 4 is identified as client 1. Optimally, the first player in our use case should obtain quality level no 4 with an encoding bitrate of 4256 kbps, and the second player should have quality level no 3 with an encoding bitrate of 1632 kbps.

In this section, we present our evaluation results and discuss them. Then, we analyze the cwnd variation for each combination in order to understand the reason for the observed results.

4.1.1 Measurements of performance metrics

The average values of QoE metric measurements for client 1 and client 2 are listed in Tables 2, 3, respectively.

Our first overall observation is the large dissimilarity between QoE measurements of the different combinations. This observation is a valuable result that confirms that each combination induces a change of HAS player behavior. Consequently, using HAS traffic shaping without taking into consideration the TCP congestion control employed in the HAS server cannot guarantee a good user experience; hence, the prominence of our proposed work.

The results show that traffic shaping considerably improves the QoE metric measurements for a majority of cases, especially for instability, which is largely reduced (e.g., a reduction of instability rate by a factor of 2.6 from 4.95 to 1.89 % when employing HTBM with NewReno, and a reduction by a factor of 4.5 from 7.47 to 1.63 % when employing RWTM with Cubic, as shown in Table 2). Furthermore, RWTM shows better performance than HTBM in the majority of cases. Moreover, client 2 always has better performance than client 1 with both shaping methods: the reason is that the optimal quality level of client 2 (i.e., quality level no 3) is lower than that of client 1 (i.e., quality level no 4): obviously, the quality level no 3 is easier to achieve. In addition, the gap between the QoE metric measurements of the two shaping methods is higher for client 1 than client 2: for example, when considering the Cubic variant, the gap of infidelity rate of client 1 between RWTM and HTBM is 15.43 % (5.02 vs 20.45 %); this is higher than that of client 2, which is equal to 1.86 % (2.61 vs 4.47 %). Consequently, the dissimilarity of performance between different combinations is more visible for client 1. For this reason, we limit our observation to client 1 in the remaining text of this subsection.

Concerning the QoE measurements, based on Table 2, we present the most important observations related to client 1:

-

Combining NewReno or Vegas variants with HTBM or RWTM does not improve the QoE. Additionally, these four combinations have high infidelity value (near 50 %) and very high congestion speed value (around 90–100 ms), but a low value of instability. These values indicate that the player was stable at a low quality level during the first half of the test duration and has difficulties converging to its optimal quality level.

-

HTBM has better QoE with Illinois than with Cubic: it is slightly more stable, 16 % more faithful to optimal quality, and converges 2.4 times faster.

-

RWTM has better QoE with Cubic than with Illinois: it is slightly more stable, slightly more faithful to optimal quality level, and converges 1.24 times faster.

In order to be more accurate in our analysis, we use the two defined metrics: the frequency of OFF* periods per chunk, fr OFF* , and the congestion rate, CNG. In Table 4, we present the average value over 60 runs for each metric and for each combination, related to client 1 and scenario 1.

RWTM presents a negligible congestion rate, while HTBM has a very high rate of congestion, especially when the Cubic variant is used. Moreover, HTBM reduces the frequency fr OFF* better than RWTM, mainly with Illinois and Cubic. These results have a direct relationship to the shaping methods described in Sect. 2.2:

-

HTBM was designed to delay incoming packets, which causes an additional queuing delay. In all of the tests, we verified that HTBM induces a queueing delay of around 100 ms in scenario 1 for client 1. On one hand, this delay causes an increase of congestion rate because it increases the risks of queue overflow in the gateway, even when the QoE is good, such as with Cubic or Illinois variants. The dissimilarity of congestion rate between congestion controls variants is investigated in the next Sect. 4.1.2. On the other hand, the RTT C–S value also jumps from 100 to 200 ms, which increases the retransmission timeout value, RTO, to approximately 400 ms, hence reducing OFF* periods. The fr OFF* of HTBM is noticeably lower than RWTM and the case without shaping (W/o). In addition, the assertion “the higher the QoE metric measurement, the lower the fr OFF* value” seems to be valid; for example, HTBM presents better QoE with Illinois than with Cubic, and fr OFF* is lower with Illinois than with Cubic.

-

Nevertheless, RWTM was designed to limit the value of the receiver’s advertised window, rwnd, of each client. Therefore, no additional queuing delay is induced by RWTM. Hence, the congestion rate is very low. Additionally, the RTT C–S estimation is performed only once per chunk. So, the cwnd value is constant during the ON period, even if RTT C–S varies. In our configuration, the standard deviation of RTT C–S is equal to 7 ms, i.e., 0.07.a C–S , as described in Sect. 3.3. Consequently, eliminating OFF* periods will not be possible. Instead, the fr OFF* value will be bounded to a minimum value that characterizes RWTM when the QoE measurements are the most favorable. When testing with the four congestion control variants, this fr OFF* value is equal to 0.24 for the selected standard deviation. This means that RWTM can guarantee, in the best case, one OFF* period every 4.17 chunks. This frequency is useful, and will be discussed in the next subsection and in further detail in scenario 5.

4.1.2 Analysis of cwnd variation

To explain the results of scenario 1, we used the tcp_probe module in the HAS server. This module shows the evolution of the congestion window, cwnd, and the slow start threshold, ssthresh, during each run. For each combination, we selected a run the performance values of which are the nearest to its average values of Tables 2, 4, i.e., instability IS, infidelity IF, convergence speed V, frequency of OFF* periods per chunk fr OFF* , and congestion rate CNG. Then, we present their cwnd and ssthresh evolution in Figs. 2, 3, 4, 5, 6, 7, 8, 9. We also indicate the moment of convergence by a vertical bold dotted line. We observed that this moment corresponds to the second from which the TCP congestion control is often processing under the congestion avoidance phase; i.e., when cwnd > ssthresh. In addition, from the moment of convergence, we observe that ssthresh becomes more stable and is practically close to a constant value.

Figure 2 shows that the combination NewReno with HTBM cannot guarantee convergence to the optimal quality level. The congestion rate is not very high compared with other TCP congestion variants. After 50 s, cwnd was able to reach the congestion avoidance phase for short durations, but the continuous increase of cwnd with the additive increase approach caused the detection of congestion. Moreover, the multiplicative decrease approach after congestions employed by NewReno was very aggressive; in effect, as described in Sect. 2.2, the new cwnd value will be reduced by half (more precisely, to cwnd/2 + 3 MSS following the FR/FR phase) and ssthresh will also be reduced to cwnd/2. This aggressive decrease prevents the server from rapidly reaching a desirable cwnd value and, as a consequence, prevents the player from correctly estimating the available bandwidth and causes a lower quality level selection. Furthermore, the fr OFF* value was relatively high (around 0.3 OFF* period per chunk), which is more than twice that of the Illinois and Cubic variants. This value is also caused by the multiplicative decrease approach that generates a lower quality level selection. Due to the shaping rate that adapts the download bitrate of the client to its optimal quality level, the chunk with a lower quality level will be downloaded more rapidly, which results in causing more frequent OFF* periods. For this reason, the player was not able to stabilize on the optimal quality level, resulting in a poor QoE.

When combining NewReno with RWTM, we observed that test results diverged and could be classified into two categories: those with an infidelity value of 100 % and that do not converge (Fig. 3a), and those with a low value of infidelity and that converge rapidly (Fig. 3b). In both figures, ssthresh is always invariable. Both figures have no congestion events, which is due to the use of RWTM.

The OFF* periods are more frequent in Fig. 3a (fr OFF* = 0.68) than in Fig. 3b (fr OFF* = 0.23). Although both figures present a constant value of ssthresh, we observe that the only difference between them is the initial value of ssthresh. Figure 3a has a lower value of ssthresh than Fig. 3b: 27 MSS vs 69 MSS. The additive increase approach of NewReno during the congestion avoidance phase prevents the server from rapidly increasing the cwnd value during ON periods. Therefore, the player was not able to reach the optimal quality level no 4 at any time. The cause of the dissimilarity between the initial values of ssthresh in the two figures is explained in [17]. Some implementations of NewReno use the size of the receiver’s advertised window, rwnd, to define the initial value of ssthresh, but in fact, this value may be arbitrarily chosen. Accordingly, the combination of NewReno with RWTM could have high QoE if the initial value of ssthresh is well-chosen.

When combining Vegas with HTBM, we obtain a cwnd variation, as shown in Fig. 4. The convergence moment (at 87 s in Fig. 4) occurs when cwnd becomes often set higher than ssthresh (i.e., TCP congestion control is often processing under the congestion avoidance phase) and ssthresh is often set at the same value. We can observe the additive increase and additive decrease aspect of cwnd in the congestion avoidance phase after convergence. The additive decrease of cwnd involved in Vegas is caused by the queuing delay increases resulting from HTBM. This additive decrease has the advantage of maintaining a high throughput and reducing the dropping of packets in the gateway. Therefore, the congestion rate, CNG, is relatively low because it is reduced in Fig. 4 from around 75 congestion events per 100 s to only 15. The additive decrease also has the advantage of promoting convergence to the optimal quality level, unlike multiplicative decrease. As a result, the delay-based aspect with the additive decrease approach improves the stability of the HAS player after convergence. In contrast, Vegas uses a slightly low value of ssthresh (60 MSS) and employs the additive increase approach for cwnd updates during the congestion avoidance phase. As a consequence, the server cannot rapidly increase the cwnd value during the ON period, which results in slow convergence. Therefore, the player was not able to reach the optimal quality level no 4 at any time before the moment of convergence. Consequently, the frequency of the OFF* period increases before the convergence moment; hence, the high value of fr OFF* .

The performance worsens when Vegas is combined with RWTM. As presented in Fig. 5, the player was not able to converge. Instead, we observed many timeout retransmissions characterized by ssthresh reduction and cwnd restarting from slow start. The timeout retransmissions are generated by Vegas when only a duplicate ACK is received and the timeout period of the oldest unacknowledged packet has expired [4]. Because of that, Vegas generates more timeout retransmissions than NewReno. Hence, the CNG value is worse than in the other combinations of RWTM. Moreover, OFF* periods are frequent during the first 45 s, because the player requests quality level no 3. Subsequently, OFF* periods become less frequent (they occur only at 79, 125, 138, 150, 165, and 175 s) because the player was able to switch to an optimal quality level (no 4). Hence fr OFF* related to the whole test duration is equal to an acceptable value (0.29 OFF* period per chunk). The player becomes able to request the optimal quality level no 4 predominantly in the second period (after 45 s), but it is incapable of being stable for more than 60 s because of the retransmission timeout events.

When we use the loss-delay-based variant Illinois, significant improvement of performance is observed with the two shaping methods.

In Fig. 6, despite the rapid convergence, a high rate of congestions (that reduces the ssthresh and cwnd values but maintains the cwnd higher than ssthresh, as described in Algorithm 1) and timeout retransmissions (that reduces ssthresh, drops cwnd, and begins from the slow start phase) was recorded. Consequently, the frequent reduction of ssthresh was the cause of the high rate of CNG: in this example, CNG is equal to 51.11. CNG is higher than that recorded for NewReno. The cause is the high value of ssthresh of approximately 115 MSS. The variable ssthresh was able to rapidly return to a fixed value after retransmissions, due to the update of α and β using accurate RTT C–S estimation (see Sect. 2.1). As a consequence, cwnd restarts from the slow start phase after timeout detection and rapidly reaches the high value of ssthresh. Hence, the HAS player converges despite high congestion. In addition, OFF* periods were negligible, with only two periods after congestion. This is why fr OFF* was very low (0.03). In the congestion avoidance phase, cwnd was able to increase and reach high values, even during short timeslots. This was due to the concave curve of cwnd generated by Illinois, which is more aggressive than NewReno. As a consequence, the player could be stabilized with optimal quality level no 4.

When using RWTM with Illinois, the player converges, as presented in Fig. 7. The congestion rate is very low (CNG = 0.55), but congestions are caused by the aggressiveness of Illinois (the concave curve of cwnd in the C-AIMD approach) and its high ssthresh value (120 MSS). Congestions slow down the convergence speed and slightly reduce the QoE due to the multiplicative decrease approach of Illinois. As shown in Fig. 7, one congestion event delayed the convergence time to 27 s. In addition, Illinois has the ability to select the suitable ssthresh value (110 MSS in Fig. 7) that minimizes congestion events in the future, in spite of the sensitivity of RWTM to congestions. OFF* periods still exist, but with low frequency (fr OFF* = 0.22).

The Cubic variant yielded good performances with both shaping methods. The variations of cwnd when Cubic is combined with HTBM and RWTM are presented in Figs. 8, 9, respectively.

In Fig. 8, the player converges tardily after a delay of 33 s. The cause is mainly the low value of ssthresh that is selected by the Cubic algorithm. As explained in Sect. 2.1, the HyStart algorithm, implemented in Cubic, defines this ssthresh in order to have a less aggressive increase of cwnd. The ssthresh becomes lower when the RTT C–S increases. Knowing that HTBM increases RTT C–S by introducing an additional queuing delay, HyStart decreases ssthresh to be approximately 57 MSS. This is why the player cannot upgrade to its optimal quality level no. 4 before convergence. The second cause is the multiplicative decrease approach of Cubic and the high rate of congestions caused by HTBM. This second cause makes the convergence to optimal quality level more difficult because the server is not able to increase its reduced congestion window cwnd during the ON period, as it should be increased.

After convergence, many congestions were recorded, and OFF* periods were negligible. The ssthresh becomes more stable around 75 MSS: this is well-set by the HyStart algorithm. This enhances stability in the congestion avoidance phase with a more uniform increase of cwnd, as shown between 60 and 80 s in Fig. 8. Furthermore, there is a set of large cubic curves with inflection points close to the ssthresh value. The variable cwnd is more present in the convex region, which is more aggressive when moving away from the inflection point.

In Fig. 9, the player converges rapidly in only 8 s. The ssthresh begins with a low value (60 MSS) for a few seconds during the buffering state, and then the HyStart algorithm implemented in Cubic rapidly adjusts the ssthresh value and enables the server to be more aggressive. Comparing with Fig. 7, selecting a lower initial value of ssthresh is better for accelerating convergence, because otherwise there are more risks of congestion that slow down the convergence speed.

Congestions are infrequent: only two congestions are visible in Fig. 9 at seconds 70 and 130, and they are resolved by fast retransmission in accordance with Algorithm 1 and by using Hystart. As a consequence, separated congestion events do not dramatically affect the performance, as when Illinois is used with RWTM (Fig. 9). The Cubic algorithm chooses the inflection point to be around 140 MSS, which is much higher than the ssthresh value, so that the concave region becomes more aggressive than the convex region. The OFF* periods persist, even with Cubic, but with a low frequency: fr OFF* = 0.22.

Accordingly, the Cubic variant is able to adjust its congestion window curve in different situations. When many congestions occur, the cubic curve becomes rather convex to carefully increase cwnd. When many OFF* periods occur, the cubic curve becomes rather concave, and is thus more aggressive than the concave curve of Illinois in order to rapidly achieve the desired send bitrate and compensate for the reduction of the cwnd value. However, Cubic begins by estimating a low value of ssthresh that is adjusted over time by the HyStart algorithm, which is beneficial only when using RWTM as a shaping method. Using HTBM slows down convergence considerably and affects the infidelity metric.

4.2 Scenarios 2 and 3

In this section, we present the five performance measurements of client 1 for the first three scenarios described in Sect. 3.2. We make the assumption that the optimal quality level of client 1 is no. 4. We do not present NewReno and Vegas variants because they demonstrated low performance. The average values of QoE metrics for client 1 in the first three scenarios are listed in Table 5, and the average values of CNG and fr OFF* in the first three scenarios are listed in Table 6. Both tables show the total mean values (denoted by MV) over the three scenarios. MVs are the global performance values proposed for consideration to compare between different combinations.

Both tables indicate two valuable points:

On one hand, RWTM has better QoE metric measurements than HTBM with both Cubic and Illinois variants. Moreover, RWTM not only has a lower congestion rate, CNG, than HTBM, but it also has a negligible CNG with the two TCP variants for all three scenarios. RWTM also preserves a constant value of fr OFF* . Consequently, even the transition from one to two clients and vice versa (i.e., scenarios 2 and 3, respectively) does not disturb RWTM, which preserves its inherit characteristics of negligible congestion rate and its fr OFF* rate around 0.25. This preservation has positive consequences for the user’s QoE. Although the gap between the QoE metrics measurements of {Cubic RWTM} and {Illinois RWTM} is not very significant, {Cubic RWTM} yields better values. Accordingly, we can say that the use of Cubic or even Illinois is beneficial for improving the user’s experience, with a slight preference for Illinois.

On the other hand, HTBM presents better QoE with Illinois than with the Cubic variant. In conjunction, it has a fivefold lower congestion rate (49.35 vs 246.92) and lower OFF* period frequency fr OFF* . This observation is valid not only for total mean values, MV, but also with every scenario (1, 2, and 3). Therefore, Illinois is distinctly better than Cubic for the HTBM shaping method, even when the number of active HAS clients in the home gateway changes between one and two clients. Accordingly, the loss-delay-based variant with the C-AIMD approach used by Illinois has more favorable impacts on QoE, CNG, and fr OFF* than the loss-based variant with the AIAD approach using the HyStart algorithm employed by Cubic.

4.3 Scenario 4

The objective of this section is to evaluate the robustness of each combination against the congestions that are induced by other flows. Therefore, we employed scenario 4, as described in Sect. 3.2, in which a heavy congestion is induced. To be able to compare performances correctly, a reference scenario, denoted by WL, consisting of a HAS client working alone in the home network, is used. No loss is observed in the reference scenario. We do not present the NewReno and Vegas variants because they showed poor performance. Altogether, we have four combinations to evaluate: Cubic and Illinois combined with two shaping methods, HTBM and RWTM. The average values of the QoE metrics of the client in scenario 4 are provided in Table 7, and the average values of CNG and fr OFF* are listed in Table 8.

The measurements in the two tables indicate three major observations:

-

The lowest QoE metric measurements are recorded for the Cubic variant for both shaping methods: their instability is around 5–6 %, their infidelity is near 50 %, and their convergence speed is approximately 125 ms. We also notice that the congestion rate, CNG, is very high, between 120 and 220, and the frequency fr OFF* is important around 0.4 (i.e., one OFF* period occurs for every 2.5 chunks, on average). We observe not only lower measurements, but also a higher degradation rate in performance: the gap of QoE metric measurements, CNG and fr OFF* , is clearly large between scenario WL and scenario 4. Accordingly, Cubic is not suitable as a TCP congestion control variant of the HAS server for both shaping methods when heavy congestion occurs. This result can be verified by examining Figs. 8 and 9 in Sect. 4.1.2, where Cubic has difficulties with rapidly defining the suitable ssthresh value before convergence and after congestion, respectively.

-

The RWTM shaping method presents higher degradation in QoE metric measurements than HTBM when we compare scenario WL to scenario 4 for both TCP congestion variants, Cubic and Illinois. The cause is mainly related to the fact that HTBM is used to generate congestion events and maintain high QoE under normal circumstances, which is not the case with RWTM. Accordingly, we can say that RWTM is more sensitive to induced congestions than HTBM. This result can be verified when examining Figs. 7 and 9 in Sect. 4.1.2, in which a single congestion event instantaneously degrades performance.

From the first and the second observations, we can deduce that the best combination that maintains optimal QoE metric measurements with low degradation and has the lowest frequency of OFF* periods, fr OFF* , is ensured by the combination {Illinois HTBM}.

-

The second-best combination is {Illinois RWTM}. Here, the QoE metric measurements are acceptable, but the degradation rate is higher than the best combination {Illinois HTBM}. This degradation indicates that {Illinois RWTM} cannot adequately resist against induced congestions, especially when we have a highly congested link between client and server. However, this combination could successfully be used with a link under less frequent congestions.

4.4 Scenario 5

In this section, we present the performance metric measurements when the standard variation of RTT C–S varies. The behavior of the resulting curves will indicate how performance degrades when the standard deviation of RTT C–S increases. The variation of QoE (instability, infidelity, and convergence speed) is presented in Fig. 10, and the variation of CNG and fr OFF* is listed in Fig. 11. We have two major observations:

On the one hand, the {Illinois RWTM} (purple cross) and the {Cubic RWTM} (red square) curves are convex and close to each other and are predominantly below the other curves. The three QoE metric values are good until an RTT C–S standard deviation of around 40 ms, where performance degradation begins to be visible. Moreover, the combination {Illinois RWTM} preserves its performance better and has a less aggressive degradation rate for higher RTT C–S standard deviation, especially from 35 ms. Accordingly, we can say that using RWTM with Illinois is safer when RTT C–S is very unstable. Otherwise, {RWTM Cubic} can also be used, most usefully when the standard deviation of RTT C–S is lower than 35 ms. We also observe in Fig. 11 that both combinations have a similarly low congestion rate, CNG, and similar frequency of OFF* periods, fr OFF* . Based on this observation, we can deduce that RWTM preserves its inherent characteristics with Cubic and Illinois variants, and that the degradation of QoE metric measurements for highly unstable RTT C-S is mainly caused by the congestion control algorithms used by Cubic and Illinois variants. Since RWTM seems to be more adequate with Illinois, we can say that the loss-delay-based and C-AIMD approach of Illinois helps more than the loss-based and cubic RTT-independent approach of Cubic to preserve good performance for highly unstable RTT C–S values.

On the other hand, HTBM is less robust against RTT C–S instability. The green and the blue curves that present the combination of HTBM with Illinois and Cubic, respectively, show a significant degradation of QoE metric measurements when the standard deviation of RTT C–S is above 14 ms. However, {HTBM Illinois} is more sensitive to RTT C–S instability than {HTBM Cubic}. This means that combining the loss-delay-based congestion control variant Illinois with the HTBM shaping method that increases the queuing delay, entails harmful drawbacks for QoE when the RTT C-S is unstable. We can also validate this observation in Fig. 11: the congestion rate CNG of {HTBM Illinois} and {HTBM Cubic} are predominantly close to each other, but the frequency of OFF* periods explodes with {HTBM Illinois} for RTT C–S standard deviation higher than 20 ms. This implies that the additional delay caused by HTBM is practically the same for both congestion control variants Cubic and Illinois, but the effects on fr OFF* and QoE are quite different and involve more drawbacks for the Illinois variant. In contrast, HTBM with Cubic has fewer drawbacks and presents QoE metrics measurements that are relatively constant for instability and infidelity from RTT C–S standard deviations around 20 ms. This results can be explained by the fact that the Cubic variant does not use RTT C–S to compute its congestion window cwnd during the congestion avoidance phase, as explained in Sect. 2.1.

5 Discussion

After comparing the results of five scenarios, we have made numerous observations, but in this subsection, we want to summarize the most important observations. First, NewReno and Vegas variants do not provide good performance in the HAS context, excepting the combination {NewReno RWTM} that could perform well if the initial value of ssthresh is well-chosen. Second, we summarize the observations of the five scenarios for the four combinations in Table 9. Thus, we assign a score for each combination that ranges between “–” and “++”: – (bad), - (insufficient), ± (average), + (good), and ++ (excellent). This score is based on the analysis of results for each scenario.

The best combination is {Illinois RWTM}: it yields good performance when two clients compete for bandwidth and is robust against high RTT C–S variation, but it is somewhat vulnerable to heavy congestions that could be caused by external factors. In the second position, we have two combinations:

-

{Cubic RWTM}: Unfortunately, it is very vulnerable to congestions and slightly sensitive to high RTT C–S variation.

-

{Illinois HTBM}: It has the advantage of being robust against heavy congestions. However, it is very sensitive to RTT C–S variation. Furthermore, it causes a high rate of congestion in the gateway that could disturb other sessions in concurrence with HAS sessions.

6 Conclusion and future work

A comparative evaluation has been developed in order to study the effect of combining two well-known traffic-shaping methods (HTBM and RWTM) in the gateway with four very common TCP congestion algorithms (NewReno, Vegas, Illinois, and Cubic) in the server in the context of HTTP adaptive streaming technique. We examined the user’s QoE by applying objective metrics. Furthermore, we observed the evolution of the congestion window on the server side in order to explain the behavior of each combination and its relationship with QoE metrics. We also used the congestion rate and the frequency of OFF periods that exceeds retransmission timeout as indicators. We have addressed many scenarios: two HAS clients competing for the home bandwidth simultaneously, adding or removing a HAS client, inducing a heavy congestion in the gateway, and increasing the instability of the round trip time, RTT, between the HAS server and the HAS client. The results show that there is a significant discordance in performance between combinations. The best combination that improves the QoE, reduces the congestion rate, and reduces the OFF periods in the majority of scenarios is when combining the loss-delay-based congestion control variant, Illinois, which uses the C-AIMD approach, with the TCP flow control-based method, RWTM. The characteristics of Illinois and RWTM seem to be similarly robust against high RTT instability. This combination does not disturb other real-time streams in the home network because it does not induce additional queueing delay and it considerably reduces the congestion rate. However, this combination is slightly vulnerable to heavy congestions that could be caused by external factors such as other concurrent streams.

Having extended our knowledge about the combination of TCP congestion control variants with shaping methods in this work, we intend as future work to design a new TCP congestion control variant that is compatible with all specifications of HAS and shaping methods.

References

Houdaille, R., Gouache, S.: Shaping HTTP adaptive streams for a better user experience. In: 3rd Multimedia Systems Conference, pp. 1–9. ACM (2012)

Ameur, C.B., Mory, E., Cousin, B.: Shaping HTTP adaptive streams using receive window tuning method in home gateway. In: 33rd Performance Computing and Communications Conference, pp. 1–2. IEEE (2014)

Floyd, S., Henderson, T., et al.: The NewReno modification to TCP fast recovery algorithm. RFC 3782 (2012)

Brakmo, L.S., O’Malley, S.W., Peterson, L.L.: TCP Vegas: new techniques for congestion detection and avoidance. ACM SIGCOMM Computer Commun. Rev. 24(4), 24–35 (1994)

Liu, S., Başar, T., Srikant, R.: TCP-Illinois: a loss-and delay-based congestion control algorithm for high-speed networks. Perform. Eval. J. 65(6–7), 417–440 (2008). (ScienceDirect)

Liu, S., Başar, T., Srikant, R.: CUBIC: a new TCP-friendly high-speed TCP variant. ACM SIGOPS Oper. Syst. Rev. 42(5), 64–74 (2008). (ACM)

Jiang, J., Sekar, V., Zhang, H.: Improving fairness, efficiency, and stability in HTTP-based adaptive video streaming with festive. In: 8th International Conference on Emerging Networking Experiments and Technologies, pp. 97–108. ACM (2012)

Ha, S., Rhee, I.: Taming the elephants: new TCP slow start. Computer. Netw. J. 55(9), 2092–2110 (2011). (ScienceDirect)

Akhshabi, S., Anantakrishnan, L., et al.: What happens when HTTP adaptive streaming players compete for bandwidth? In: 22nd International Workshop on Network and Operating System Support for Digital Audio and Video, pp. 9–14. ACM (2012)

Misra, V., Gong, W.B., Towsley, D.: Fluid-based analysis of a network of AQM routers supporting TCP flows with an application to RED. ACM SIGCOMM Computer. Commun. Rev. 30(4), 151–160 (2000). (ACM)

Villa, B.J., Heegaard, P.E.: Group based traffic shaping for adaptive HTTP video streaming by segment duration control. In: 27th International Conference on Advanced Information Networking and Applications, pp. 830–837. IEEE (2013)

Stewart, L., Hayes, D., et al.: Multimedia-unfriendly TCP congestion control and home gateway queue management. In: 2nd ACM Conference on Multimedia Systems, pp. 35–44. ACM (2011)

David, H., Armitage, G.: Improved coexistence and loss tolerance for delay based TCP congestion control. In 35th Conference on Local Computer Networks, pp. 24–31. IEEE (2010)

Ho, C.Y., Chen, Y.C., et al.: Fast retransmit and fast recovery schemes of transport protocols: a survey and taxonomy. Computer. Netw. J. 52(6), 1308–1327 (2008). (ScienceDirect)

Jamal, H., Sultan, K.: Performance analysis of TCP congestion control algorithms. Int. J. Computer. Commun. 2(1), 18–24 (2008)

Brown, M.A.: Traffic Control HOWTO. Guide to IP Layer Network (2006)

Allam, M., Paxson, V., Stevens, W.R.: TCP congestion control. RFC 2581 (1999)

Yin, X., Sekar, V., Sinopoli, B.: Toward a principled framework to design dynamic adaptive streaming algorithms over HTTP. In: 13th ACM Workshop on Hot Topics in Networks, pp. 1–9. ACM (2014)

Akhshabi, S., Narayanaswamy, S., et al.: An experimental evaluation of rate-adaptive video players over HTTP. Signal. Process. Image. Commun. 27(4), 271–287 (2012)

Huang, T.Y., Handigol, N., et al.: Confused, timid, and unstable: picking a video streaming rate is hard. In: The Internet Measurement Conference, pp. 225–238. ACM (2012)

Ameur, C.B., Mory, E., Cousin, B.: Evaluation of gateway-based shaping methods for HTTP adaptive streaming. In: Quality of Experience-based Management for Future Internet Applications and Services Workshop (QoE-FI), pp. 1777–1782. IEEE International Conference on Communications (2015)

Cranley, N., Perry, P., Murphy, L.: User perception of adapting video quality. In: International Journal of Human-Computer Studies, pp. 637–647 (2006)

Mok, R.K.P., Chan, E., et al.: Inferring the QoE of HTTP video streaming from user-viewing activities. In: 1st ACM SIGCOMM Workshop on Measurements Up the Stack, pp. 31–36. ACM (2011)

Dumazet, E.: Stochastic Fairness Queuing Discipline. http://manpages.ubuntu.com/manpages/trusty/man8/tc-sfq.8.html. Ubuntu Manuals (2010)

Akhshabi, S., Anantakrishnan, L., et al.: Server-based traffic shaping for stabilizing oscillating adaptive streaming players. In: 23rd ACM Workshop on Network and Operating Systems Support for Digital Audio and Video, pp. 19–24. ACM (2013)

Le Boudec, J.Y.: Rate Adaptation, Congestion Control and Fairness: A Tutorial, pp. 1–44. Ecole Polytechnique Federale de Lausanne (EPFL) (2014)

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by L. Zhou.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Ben Ameur, C., Mory, E. & Cousin, B. Combining traffic-shaping methods with congestion control variants for HTTP adaptive streaming. Multimedia Systems 24, 1–18 (2018). https://doi.org/10.1007/s00530-016-0522-9

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00530-016-0522-9