Abstract

We show that in analytic sub-Riemannian manifolds of rank 2 satisfying a commutativity condition, spiral-like curves are not length-minimizing near the center of the spiral. The proof relies on the delicate construction of a competing curve.


1 Introduction

The regularity of geodesics (length-minimizing curves) in sub-Riemannian geometry has been an open problem for forty years. Its difficulty is due to the presence of singular (or abnormal) extremals, i.e., curves along which the differential of the end-point map is singular (not surjective). Some singular curves are, in fact, length-minimizing. The first example was discovered in [12], and other classes of examples (regular abnormal extremals) are studied in [11]. All such examples are smooth curves.

When the end-point map is singular, it is not possible to deduce the Euler–Lagrange equations, with their regularizing effect, for minimizers constrained on a nonsmooth set. On the other hand, for singular extremals the necessary conditions given by Optimal Control Theory (the Pontryagin Maximum Principle) do not, in general, provide any regularity beyond the initial one, namely absolute continuity or Lipschitz continuity of the curve.

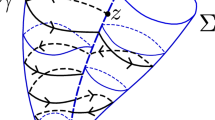

The most elementary kind of singularity for a Lipschitz curve is the corner type: at a given point, the curve has left and right tangents that are linearly independent. In [10] and [4] it was proved that length minimizers cannot have singular points of this kind. These results were improved in [14]: at any point, the tangent cone to a length-minimizing curve contains at least one line (a half-line, at the end-points), see also [5]. Whether this tangent line is unique for length minimizers is an open problem. Indeed, there exist other types of singularities related to the non-uniqueness of the tangent. In particular, there exist spiral-like curves whose tangent cone at the center contains many, and in fact all, tangent lines, see Example 2.6 below. These curves may appear as Goh extremals in Carnot groups, see [8] and [9, Section 5]. For these reasons, the results of [14] are not enough to prove the nonminimality of spiral-like extremals. The goal of this paper is to show that curves with this kind of singularity are not length-minimizing.

Let M be an n-dimensional, \(n\ge 3\), analytic manifold endowed with a rank 2 analytic distribution \({\mathcal {D}} \subset TM\) that is bracket generating (Hörmander condition). An absolutely continuous curve \(\gamma \in AC([0,1];M)\) is horizontal if \({\dot{\gamma }} \in {\mathcal {D}}(\gamma )\) almost everywhere. The length of \(\gamma \) is defined by fixing a metric tensor g on \({\mathcal {D}}\) and letting

$$\begin{aligned} L(\gamma )=\int _0^1 g_{\gamma (t)}\big ({\dot{\gamma }}(t),{\dot{\gamma }}(t)\big )^{1/2}\,dt. \end{aligned}$$(1.1)

The curve \(\gamma \) is a length-minimizer between its end-points if for any other horizontal curve \({\bar{\gamma }}\in AC([0,1];M)\) such that \({\bar{\gamma }} (0) = \gamma (0)\) and \({\bar{\gamma }} (1) = \gamma (1)\) we have \(L(\gamma )\le L({\bar{\gamma }})\).

Our notion of horizontal spiral in a sub-Riemannian manifold of rank 2 is given in Definition 1.1. We will show that spirals are not length-minimizing when the horizontal distribution \({\mathcal {D}}\) satisfies the following commutativity condition. Fix two vector fields \(X_1,X_{2}\in \mathcal {D}\) that are linearly independent at some point \(p\in M\). For \(k\in \mathbb {N}\) and for a multi-index \(J=(j_1,\dots ,j_k)\), with \( j_i\in \{1,2\} \), we denote by \( X_J=[X_{j_1},[ \dots ,[ X_{j_{k-1} }, X_{j_k}]\cdots ]]\) the iterated commutator associated with J. We define its length as the length of the multi-index J, i.e., \(\mathrm {len}(X_J)= \mathrm {len}(J) = k\). Then our commutativity assumption is that, in a neighborhood of the point p,

$$\begin{aligned} [X_I,X_J]=0\quad \text {for all multi-indices } I,J \text { with } \mathrm {len}(I),\,\mathrm {len}(J)\ge 2. \end{aligned}$$(1.2)

This condition is not intrinsic and depends on the basis \(X_1,X_2\) of the distribution \(\mathcal {D}\). After introducing exponential coordinates of the second type, the vector fields \(X_1,X_2\) can be assumed to be of the form (2.3) below, and the point p will be the center of the spiral.

A curve \(\gamma \in AC([0,1];M)\) is horizontal if \({\dot{\gamma }}(t) \in \mathcal {D}(\gamma (t) )\) for a.e. \(t\in [0,1]\). In coordinates we have \(\gamma = (\gamma _1,\ldots ,\gamma _n)\) and, by (2.3), for \(j=3,\ldots , n\) the coordinates \(\gamma _j\) satisfy the integral identities

$$\begin{aligned} \gamma _j(t)=\gamma _j(0)+\int _0^t a_j(\gamma (s))\,{\dot{\gamma }}_2(s)\,ds,\qquad t\in [0,1]. \end{aligned}$$(1.3)

When \(\gamma (0)\) and \(\gamma _1,\gamma _2\) are given, these formulas determine in a unique way the whole horizontal curve \(\gamma \). We call \(\kappa \in AC([0,1];\mathbb {R}^2)\), \(\kappa =(\gamma _1,\gamma _2)\), the horizontal coordinates of \(\gamma \).
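The lifting rule (1.3) can be illustrated numerically. The Python sketch below is only an illustration under stated assumptions: it takes the hypothetical coefficient \(a_3(x_1,x_2)=x_1\) (a Heisenberg-type case with \(n=3\), \(w_3=2\)), which depends only on the horizontal coordinates as will be the case under (1.2) by Theorem 2.2, and approximates \(\gamma _3(t)-\gamma _3(0)\) from samples of \(\kappa \) with a trapezoid-type rule for \(\int a_3\, d\kappa _2\). The helper name `lift_coordinate` is ours.

```python
import math


def lift_coordinate(a, kappa, t_grid):
    """Approximate gamma_j(t) - gamma_j(0) = int_0^t a(kappa(s)) kappa_2'(s) ds.

    Assumes (hypothetically) that the coefficient `a` depends only on the
    horizontal coordinates (x_1, x_2). The integral is treated as the
    Stieltjes integral int a d(kappa_2): on each subinterval we multiply the
    average of `a` at the two end-points by the increment of kappa_2.
    Returns the approximate values at the points of t_grid.
    """
    pts = [kappa(t) for t in t_grid]
    values = [0.0]
    total = 0.0
    for p, q in zip(pts, pts[1:]):
        dx2 = q[1] - p[1]               # increment of kappa_2
        a_avg = 0.5 * (a(*p) + a(*q))   # trapezoid average of a
        total += a_avg * dx2            # no contribution where kappa_2 is constant
        values.append(total)
    return values


N = 10_000
grid = [m / N for m in range(N + 1)]
circle = lambda s: (math.cos(2 * math.pi * s), math.sin(2 * math.pi * s))
gamma3 = lift_coordinate(lambda x1, x2: x1, circle, grid)
print(gamma3[-1])  # approximately pi, the signed area enclosed by the loop

# A horizontal segment (kappa_2 constant) leaves gamma_3 unchanged.
segment = lambda s: (s, 0.0)
print(lift_coordinate(lambda x1, x2: x1, segment, grid)[-1])  # prints 0.0
```

With \(a_3=x_1\), the final value of \(\gamma _3\) along a closed loop is the signed area it encloses; this is the mechanism behind the end-point errors studied in Sects. 3 and 4.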

Definition 1.1

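(Spiral) We say that a horizontal curve \(\gamma \in AC([0,1];M)\) is a spiral if, in exponential coordinates of the second type centered at \(\gamma (0)\), the horizontal coordinates \(\kappa \in AC([0,1];\mathbb {R}^2)\) are of the form

$$\begin{aligned} \kappa (t)=t\,\mathrm {e}^{i\varphi (t)}=\big (t\cos \varphi (t),\,t\sin \varphi (t)\big ),\qquad t\in (0,1], \end{aligned}$$(1.4)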

where \(\varphi \in C^1( ]0,1]; \mathbb {R}^+)\) is a function, called the phase of the spiral, such that \(|\varphi (t)|\rightarrow \infty \) and \(|{\dot{\varphi }}(t)| \rightarrow \infty \) as \(t\rightarrow 0^+\). The point \(\gamma (0)\) is called the center of the spiral.

A priori, Definition 1.1 depends on the basis \(X_1,X_2\) of \(\mathcal {D}\), see however our comments about its intrinsic nature in Remark 2.5. Without loss of generality, we shall focus our attention on spirals that are oriented clockwise, i.e., with a phase satisfying \(\varphi (t)\rightarrow \infty \) and \({\dot{\varphi }}(t) \rightarrow -\infty \) as \(t\rightarrow 0^+\). Such a phase is decreasing near 0. Notice that if \(\varphi (t)\rightarrow \infty \) and \({\dot{\varphi }}(t)\) has a limit as \(t\rightarrow 0^+\), then this limit must be \(-\infty \).

Our main result is the following.

Theorem 1.2

Let \((M,{\mathcal {D}},g)\) be an analytic sub-Riemannian manifold of rank 2 satisfying (1.2). Any horizontal spiral \(\gamma \in AC( [0,1];M)\) is not length-minimizing near its center.

Unlike [4, 5, 10, 14], and as in [13], the proof of this theorem cannot be reduced to the case of Carnot groups, the infinitesimal models of equiregular sub-Riemannian manifolds. This is because the blow-up of the spiral could be a horizontal line, which is indeed length-minimizing.

The nonminimality of spirals combined with the necessary conditions given by Pontryagin Maximum Principle is likely to give new regularity results on classes of sub-Riemannian manifolds, in the spirit of [1]. We think, however, that the main interest of Theorem 1.2 is in the deeper understanding that it provides on the loss of minimality caused by singularities.

The proof of Theorem 1.2 consists of constructing a competing curve shorter than the spiral. The construction uses exponential coordinates of the second type, and our first step is a review of Hermes’ theorem on the structure of vector fields in such coordinates. In this setting, the commutativity condition (1.2) has a clear meaning, explained in Theorem 2.2, which may be of independent interest.

In Sect. 3, we start the construction of the competing curve. Here we use the specific structure of a spiral. The curve obtained by cutting one spire near the center is shorter. The error this produces at the end-point will be corrected by modifying the spiral at a certain number of locations with “devices” depending on a set of parameters. The horizontal coordinates of the spiral form a planar curve that intersects the positive \(x_1\)-axis infinitely many times. The possibility of adding devices at such locations arbitrarily close to the origin will be crucial.

In Sect. 4, we develop an integral calculus on monomials that is used to estimate the effect of cut and devices on the end-point of the modified spiral. Then, in Sect. 5, we fix the parameters of the devices in such a way that the end-point of the modified curve coincides with the end-point of the spiral. This is done in Theorem 5.1 by a linearization argument. Sections 3–5 contain the technical core of the paper.

We use the specific structure of the length-functional in Sect. 6, where we prove that the modified curve is shorter than the spiral, provided that the cut is sufficiently close to the origin. This will be the conclusion of the proof of Theorem 1.2.

We briefly comment on the assumptions made in Theorem 1.2. The analyticity of M and \({\mathcal {D}}\) is needed only in Sect. 2. In the analytic case, it is known that length-minimizers are smooth in an open and dense set, see [15]. See also [3] for a \(C^1\)-regularity result when M is an analytic manifold of dimension 3.

The assumption that the distribution \({\mathcal {D}}\) has rank 2 is natural when considering horizontal spirals. When the rank is higher there is room for more complicated singularities in the horizontal coordinates, raising challenging questions about the regularity problem.

Dropping the commutativity assumption (1.2) is a major technical problem: getting sharp estimates from below for the effect produced by cut and devices on the end-point seems extremely difficult when the coefficients of the horizontal vector fields depend also on nonhorizontal coordinates, see Remark 4.3.

We thank Marco F. Sica for his help with the pictures and the referee for his comments that improved the exposition of the paper.

2 Exponential coordinates at the center of the spiral

In this section, we introduce in M exponential coordinates of the second type centered at a point \(p \in M\), that will be the center of the spiral.

Let \(X_1, X_2\in {\mathcal {D}}\) be linearly independent at p. Since the distribution \(\mathcal {D}\) is bracket-generating we can find vector-fields \(X_3,\ldots , X_n\), with \(n = \mathrm {dim}(M)\), such that each \(X_i\) is an iterated commutator of \(X_1,X_2\) with length \(w_i =\mathrm {len}(X_i)\), \(i=3,\ldots ,n\), and such that \(X_1,\ldots ,X_n\) at p are a basis for \(T_pM\). By continuity, there exists an open neighborhood U of p such that \(X_1(q),\dots ,X_n(q)\) form a basis for \(T_qM\), for any \(q\in U\). We call \(X_1,\ldots ,X_n\) a stratified basis of vector-fields in M.

Let \(\varphi \in C^\infty (U;\mathbb {R}^n)\) be a chart such that \(\varphi (p)=0\) and \(\varphi (U)=V\), with \(V\subset \mathbb {R}^n \) open neighborhood of \(0\in \mathbb {R}^n\). Then \({\widetilde{X}}_1=\varphi _*X_1,\ldots , {\widetilde{X}}_n=\varphi _*X_n\) is a system of point-wise linearly independent vector fields in \(V\subset \mathbb {R}^n\). Since our problem has a local nature, we can without loss of generality assume that \(M=V= \mathbb {R}^n\) and \(p=0\).

After these identifications, we have a stratified basis of vector-fields \(X_1,\dots ,X_n\) in \(\mathbb {R}^n\). We say that \(x=(x_1,\dots ,x_n)\in \mathbb {R}^n\) are exponential coordinates of the second type associated with the vector fields \(X_1,\dots ,X_n\) if we have

We are using the notation \(\Phi _s^{X}=\exp (sX)\), \(s\in \mathbb {R}\), to denote the flow of a vector-field X. From now on, we assume without loss of generality that \(X_1,\ldots ,X_n\) are complete and induce exponential coordinates of the second type.

We define the homogeneous degree of the coordinate \(x_i\) of \(\mathbb {R}^n\) as \( w_i=\mathrm {len}(X_i)\). We introduce the 1-parameter group of dilations \(\delta _\lambda :\mathbb {R}^n\rightarrow \mathbb {R}^n\), \(\lambda >0\),

$$\begin{aligned} \delta _\lambda (x)=(\lambda ^{w_1}x_1,\ldots ,\lambda ^{w_n}x_n), \end{aligned}$$

and we say that a function \(f:\mathbb {R}^n\rightarrow \mathbb {R}\) is \(\delta \)-homogeneous of degree \(w\in \mathbb {N}\) if \( f(\delta _\lambda (x))=\lambda ^wf(x)\) for all \(x\in \mathbb {R}^n\) and \(\lambda >0\). An example of a \(\delta \)-homogeneous function of degree 1 is the pseudo-norm

$$\begin{aligned} \Vert x\Vert =\max _{i=1,\ldots ,n}|x_i|^{1/w_i}. \end{aligned}$$
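As a sanity check on these definitions, the sketch below verifies at random points that a max-type pseudo-norm \(\Vert x\Vert =\max _i |x_i|^{1/w_i}\) (an assumed form) is \(\delta \)-homogeneous of degree 1, and that \(|x^\alpha |\le \Vert x\Vert ^\ell \) whenever \(\alpha _1w_1+\cdots +\alpha _nw_n=\ell \), the inequality used in the proof of Theorem 2.1 below. The weights (1, 1, 2, 3), as for the Engel structure, are a hypothetical choice for illustration.

```python
import itertools
import math
import random

# Hypothetical weights w_1, ..., w_n (for instance the Engel structure: 1, 1, 2, 3).
WEIGHTS = (1, 1, 2, 3)


def dilate(lam, x, weights=WEIGHTS):
    # delta_lambda(x) = (lam**w_1 * x_1, ..., lam**w_n * x_n)
    return tuple(lam ** w * xi for w, xi in zip(weights, x))


def pseudo_norm(x, weights=WEIGHTS):
    # assumed max form: ||x|| = max_i |x_i|**(1/w_i)
    return max(abs(xi) ** (1.0 / w) for w, xi in zip(weights, x))


def weighted_degree(alpha, weights=WEIGHTS):
    # the ell such that alpha belongs to A_ell
    return sum(a * w for a, w in zip(alpha, weights))


random.seed(0)
for _ in range(1000):
    x = tuple(random.uniform(-1.0, 1.0) for _ in WEIGHTS)
    lam = random.uniform(0.1, 3.0)
    # degree-1 homogeneity: ||delta_lambda(x)|| = lambda * ||x||
    assert math.isclose(pseudo_norm(dilate(lam, x)), lam * pseudo_norm(x), rel_tol=1e-9)
    # |x^alpha| <= ||x||**ell for alpha in A_ell (small exponents only)
    for alpha in itertools.product(range(3), repeat=len(WEIGHTS)):
        monomial = math.prod(xi ** a for xi, a in zip(x, alpha))
        assert abs(monomial) <= pseudo_norm(x) ** weighted_degree(alpha) * (1 + 1e-12)
print("homogeneity and monomial bound hold at all sampled points")
```

The monomial bound follows from \(|x_i|\le \Vert x\Vert ^{w_i}\) for each i; the loop only samples it, it does not prove it.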

The following theorem is proved in [6] in the case of general rank. A more modern approach to nilpotentization can be found in [2] and [7].

Theorem 2.1

Let \(\mathcal {D}= \mathrm {span}\{X_1, X_2\}\subset TM \) be an analytic distribution of rank 2. In exponential coordinates of the second type around a point \(p\in M\) identified with \(0\in \mathbb {R}^n\), the vector fields \(X_1\) and \(X_2\) have the form

$$\begin{aligned} X_1=\partial _{x_1},\qquad X_2=\partial _{x_2}+\sum _{j=3}^n a_j(x)\,\partial _{x_j}, \end{aligned}$$(2.3)

for \(x\in U\), where U is a neighborhood of 0. The analytic functions \(a_{j}\in C^\infty (U)\), \(j=3,\ldots ,n\), have the structure \(a_{j}=p_{j}+r_{j}\), where:

(i) \(p_{j}\) are \(\delta \)-homogeneous polynomials of degree \(w_j-1\) such that \(p_j(0,x_2,\dots ,x_n)=0\);

(ii) \(r_{j}\in C^\infty (U)\) are analytic functions such that, for some constants \(C_1,C_2>0\) and for \(x\in U\),

$$\begin{aligned} |r_{j}(x)|\le C_1\Vert x\Vert ^{w_j} \quad \text {and}\quad |\partial _{x_i } r_{j}(x)|\le C_2\Vert x\Vert ^{w_j-w_i}. \end{aligned}$$(2.4)

Proof

The proof that \(a_{j}=p_{j}+r_{j}\), where \(p_{j}\) are polynomials as in (i) and the remainders \(r_{j}\) are real-analytic functions such that \(r_{j}(0)=0\), can be found in [6]. The proof of (ii) is also implicitly contained in [6]. Here, we add some details. The Taylor series of \(r_j\) has the form

$$\begin{aligned} r_j(x)=\sum _{\ell = w_j}^{\infty }\sum _{\alpha \in \mathcal {A}_\ell } c_{\alpha \ell }\,x^\alpha , \end{aligned}$$

where \(\mathcal {A}_\ell = \{\alpha \in \mathbb {N}^n: \alpha _1 w_1 + \cdots +\alpha _n w_n =\ell \}\), \(x^\alpha = x_1^{\alpha _1}\cdots x_n^{\alpha _n}\) and \(c_{\alpha \ell }\in \mathbb {R}\) are constants. Here and in the following, \(\mathbb {N}=\{0,1,2,\ldots \}\). The series converges absolutely in a small homogeneous cube \(Q_\delta =\{ x\in \mathbb {R}^n: \Vert x\Vert \le \delta \}\) for some \(\delta >0\), and in particular

Using the inequality \(|x^\alpha |\le \Vert x\Vert ^\ell \) for \(\alpha \in \mathcal {A}_\ell \), for \(x\in Q_\delta \) we get

The estimate for the derivatives of \(r_j\) is analogous. Indeed, we have

where \(\alpha -\text {e}_i \in \mathcal {A}_{\ell -w_i} \) whenever \(\alpha \in \mathcal {A}_\ell \) and \(\alpha _i\ge 1\) (the terms with \(\alpha _i=0\) vanish after differentiation). Above, \(\text {e}_i=(0,\ldots ,1,\ldots ,0)\), with 1 at position i, is the ith vector of the canonical basis of \(\mathbb {R}^n\). Thus the leading term in the series has homogeneous degree \(w_j-w_i\) and, repeating the argument above, we get the estimate \(|\partial _{x_i } r_{j}(x)|\le C_2\Vert x\Vert ^{w_j-w_i}\) for \(x\in Q_\delta \). \(\square \)

When the distribution \({\mathcal {D}}\) satisfies the commutativity assumption (1.2) the coefficients \(a_j\) appearing in the vector-field \(X_2\) in (2.3) enjoy additional properties. In the next theorem, the specific structure of exponential coordinates of the second type will be very helpful in the computation of various derivatives. In particular, in Lemma 2.3 we need a nontrivial formula from [6, Appendix A], given in such coordinates.

Theorem 2.2

If \({\mathcal {D}}\subset T M\) is an analytic distribution of rank 2 satisfying (1.2) then the functions \(a_3,\ldots ,a_n\) of Theorem 2.1 depend only on the variables \(x_1\) and \(x_2\).

Proof

Let \(\Gamma :\mathbb {R}\times \mathbb {R}^n\rightarrow \mathbb {R}^n\) be the map \( \Gamma (t,x) =\Phi _{t}^{X_2}(x), \) where \( x\in \mathbb {R}^n\) and \( t\in \mathbb {R}\). Here, we are using the exponential coordinates (2.1). In the following we omit the composition sign \(\circ \). Defining \(\Theta :\mathbb {R}^3\times \mathbb {R}^n\rightarrow \mathbb {R}^n\) as the map \(\Theta _{t,x_1,x_2}(p)=\Phi _{-(x_2+t)}^{X_2} \Phi _{-x_1}^{X_1}\Phi _{t}^{X_2}\Phi _{x_1}^{X_1}\Phi _{x_2}^{X_2}(p), \) we have

We claim that there exists a \(C>0\) independent of t such that, for \(t\rightarrow 0\),

We will prove claim (2.5) in Lemma 2.3 below. From (2.5) it follows that there exist mappings \(R_{t}\in C^\infty (\mathbb {R}^n,\mathbb {R}^n)\) such that

and such that \(|R_t|\le C t^2\) for \(t\rightarrow 0\).

By the structure (2.3) of the vector fields \(X_1\) and \(X_2\) and since \(\Theta _{t,x_1,x_2}\) is the composition of \(C^\infty \) maps, there exist \(C^\infty \) functions \(f_j=f_j(t,x_1,x_2)\) such that

By (1.2), from (2.6) and (2.7) we obtain

and we conclude that

Thus the coefficients \(a_j(x_1,x_2)=\frac{d}{dt}f_j (t,x_1,x_2)|_{t=0}\), \(j=3,\ldots ,n\), depend only on the first two variables, completing the proof. \(\square \)

In the following lemma, we prove our claim (2.5).

Lemma 2.3

Let \({\mathcal {D}} \subset T M\) be an analytic distribution satisfying (1.2). Then for any \(j=3,\ldots ,n\) the claim in (2.5) holds.

Proof

Let \(X=X_j\) for any \(j=3,\ldots ,n\) and define the map \(T_{t,x_1,x_2;s} ^X =\Theta _{t,x_1,x_2}\Phi _s^X-\Phi _s^X\Theta _{t,x_1,x_2}\). For \(t=0\) the map \(\Theta _{0,x_1,x_2}\) is the identity and thus \(T_{0,x_1,x_2;s} ^X=0\). So, claim (2.5) follows as soon as we show that

for any \(s\in \mathbb {R}\) and for all \(x_1,x_2\in \mathbb {R}\).

We first compute the derivative of \(\Theta _{t,x_1,x_2}\) with respect to t. Letting \(\Psi _{t,x_1}=\Phi _{-x_1}^{X_1}\Phi _{t}^{X_2}\Phi _{x_1}^{X_1}\) we have \( \Theta _{t,x_1,x_2}=\Phi _{-(x_2+t)}^{X_2}\Psi _{t,x_1}\Phi _{x_2}^{X_2}, \) and, thanks to [6, Appendix A], the derivative of \(\Psi _{t,x_1}\) at \(t=0\) is

where \(W_\nu = [X_1,[ \cdots ,[X_1,X_2] \cdots ]]\) with \(X_1\) appearing \(\nu \) times and \(c_{\nu ,x_1}=(-1)^\nu x_1^\nu /\nu !\). In particular, we have \(c_{0,x_1}=1\). Then the derivative of \(\Theta _{t,x_1,x_2}\) at \(t=0\) is

because the term in the sum with \(\nu =0\) is \(d \Phi _{-x_2} ^{X_2} \big (X_2(\Phi _{x_2}^{X_2}) \big )= X_2\). Inserting this formula for \( {\dot{\Theta }}_{0,x_1,x_2}\) into

we obtain

In order to prove that \( \dot{T}_{0,x_1,x_2; s} ^{X}\) vanishes for all \(x_1,x_2\) and s, we have to show that

for any \(\nu \ge 1\) and for any \(x_2\) and s. From \(\Phi _0^{X}=\mathrm {id}\) it follows that \( g(x_2,0)=0\). Then, our claim (2.9) is implied by

Actually, this is a Lie derivative and, namely,

Notice that \(h(0,s) = - d \Phi _{-s}^X [X, W_\nu ] =0\) by our assumption (1.2). In a similar way, for any \(k\in \mathbb {N}\) we have

with \(X_2\) appearing k times. Since the function \(x_2\mapsto h(x_2,s)\) is analytic our claim (2.10) follows. \(\square \)
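The expansion of the derivative of \(\Psi _{t,x_1}\) at \(t=0\), with coefficients \(c_{\nu ,x_1}=(-1)^\nu x_1^\nu /\nu !\), has a familiar finite-dimensional counterpart: for square matrices A and B one has \(\mathrm {e}^{-xA}B\,\mathrm {e}^{xA}=\sum _\nu \frac{(-x)^\nu }{\nu !}\mathrm {ad}_A^\nu (B)\), with \(\mathrm {ad}_A(B)=AB-BA\). The sketch below checks this identity for strictly upper triangular (hence nilpotent) \(4\times 4\) matrices, where both sides are finite sums. It is only a matrix analogue chosen for illustration, not the vector-field formula of [6, Appendix A]; sign conventions for brackets of vector fields and of matrices may differ. All helper names are hypothetical.

```python
from math import factorial


def matmul(P, Q):
    n = len(P)
    return [[sum(P[i][k] * Q[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def lincomb(P, Q, c):
    # entrywise P + c * Q
    return [[p + c * q for p, q in zip(rp, rq)] for rp, rq in zip(P, Q)]


def scaled(P, c):
    return [[c * p for p in row] for row in P]


def expm_nilpotent(A):
    # exp(A) = sum_{m=0}^{n-1} A^m / m!, exact when A^n = 0
    n = len(A)
    result = [[float(i == j) for j in range(n)] for i in range(n)]
    power = [row[:] for row in result]
    for m in range(1, n):
        power = matmul(power, A)
        result = lincomb(result, power, 1.0 / factorial(m))
    return result


def commutator(P, Q):
    return lincomb(matmul(P, Q), matmul(Q, P), -1.0)


def conjugate(A, B, x):
    # left-hand side: exp(-x A) B exp(x A)
    return matmul(matmul(expm_nilpotent(scaled(A, -x)), B), expm_nilpotent(scaled(A, x)))


def bracket_series(A, B, x):
    # right-hand side: sum_nu c_nu W_nu with c_nu = (-x)^nu / nu!,
    # W_0 = B and W_{nu+1} = [A, W_nu]; here ad_A^nu(B) = 0 for nu >= n - 1
    n = len(A)
    total, W = scaled(B, 0.0), B
    for nu in range(n):
        total = lincomb(total, W, (-x) ** nu / factorial(nu))
        W = commutator(A, W)
    return total


A = [[0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1], [0, 0, 0, 0]]
B = [[0, 0.5, 2, 0], [0, 0, 0, -1], [0, 0, 0, 3], [0, 0, 0, 0]]
lhs, rhs = conjugate(A, B, 0.7), bracket_series(A, B, 0.7)
print(max(abs(u - v) for ru, rv in zip(lhs, rhs) for u, v in zip(ru, rv)))  # rounding-level
```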

We conclude this section with some general remarks.

Remark 2.4

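By Theorem 2.2, we can assume that \(a_ j (x) = a_j(x_1,x_2)\) are functions of the variables \(x_1,x_2\) only. In this case, formula (1.3) for the coordinates of a horizontal curve \(\gamma \in AC([0,1];M)\) with horizontal coordinates \(\kappa =(\gamma _1,\gamma _2)\) reads, for \(j=3,\ldots ,n\),

$$\begin{aligned} \gamma _j(t)=\gamma _j(0)+\int _0^t a_j(\kappa (s))\,{\dot{\kappa }}_2(s)\,ds,\qquad t\in [0,1]. \end{aligned}$$(2.11)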

Remark 2.5

Definition 1.1 of horizontal spiral is stable with respect to change of coordinates in the following sense.

After fixing exponential coordinates, we have that \(0\in {\mathbb {R}}^n\) is the center of the spiral \(\gamma :[0,1]\rightarrow \mathbb {R}^n\), with horizontal projection \(\kappa (t)\) as in Definition 1.1.

We consider a diffeomorphism \({\widetilde{F}}\in C^\infty (\mathbb {R}^n;\mathbb {R}^n)\) such that \({\widetilde{F}}(0)=0\). In the new coordinates, our spiral \(\gamma \) is \(\zeta (t) ={\widetilde{F}}(\gamma (t))\). We define the horizontal coordinates of \(\zeta \) in the following way: the set \(d_0{\widetilde{F}}( {\mathcal {D}}(0)) \), where \(d_0{\widetilde{F}}\) is the differential of \({\widetilde{F}}\) at 0, is a 2-dimensional subspace of \(\mathbb {R}^n= \mathrm {Im}(d_0{\widetilde{F}})\); denoting by \(\pi :\mathbb {R}^n \rightarrow d_0{\widetilde{F}}( {\mathcal {D}}(0)) \) the orthogonal projection, we define the horizontal coordinates of \(\zeta \) as \(\xi (t) = \pi ({\widetilde{F}}(\gamma (t)))\).

We claim that \(\xi \), in the plane \(d_0{\widetilde{F}}( {\mathcal {D}}(0))\), is of the form (1.4), with a phase \(\omega \) satisfying \(|\omega |\rightarrow \infty \) and \( |\dot{\omega }|\rightarrow \infty \). In particular, these properties of \(\xi \) are stable up to isometries of the plane. Then, we can assume that \(\xi (t)=(F_1(\gamma (t)),F_2(\gamma (t)))\), with \(F_i:\mathbb {R}^n\rightarrow \mathbb {R}\) of class \(C^\infty \), for \(i=1,2\). In this setting, we will show that \(|{\dot{\omega }} |\rightarrow \infty \).

The function \(s(t) = |\xi (t) | = |(F_1(\gamma (t)), F_2(\gamma (t)))|\) satisfies

Define the function \(\omega \in C^1((0,1])\) by letting \(\xi (t) = s(t) \mathrm {e} ^{i \omega (s(t))}\). Then, differentiating the identity obtained by inverting

we obtain

where the function \(\Phi (x) = F_1 (x) \nabla F_2(x) -F_2 (x) \nabla F_1( x) \) has the following Taylor expansion as \(x\rightarrow 0\)

Observe that from (2.11) it follows that \(|{\dot{\gamma }}_j(t)| = O(t)\) for \(j\ge 3\). Denoting by \({\bar{\nabla }}\) the gradient in the first two variables, we deduce that as \(t\rightarrow 0^+\) we have

with

Inserting the last identity and \({\dot{\kappa }} = \mathrm {e} ^{i\varphi } + i t {\dot{\varphi }} \mathrm {e} ^{i\varphi }\) into (2.14), after some computations we obtain

where \( \det (d_0 {\bar{F}} (0))\ne 0\) is the Jacobian determinant at \(x_1=x_2=0\) of the mapping \((x_1,x_2)\mapsto (F_1(x_1,x_2,0), F_2(x_1,x_2,0))\). Now the claim \(|\dot{\omega }(s)|\rightarrow \infty \) as \(s\rightarrow 0^+\) easily follows from (2.12), (2.13) and \(|{\dot{\varphi }}(t)|\rightarrow \infty \) as \(t\rightarrow 0^+\).

Example 2.6

An interesting example of horizontal spiral is the double-logarithm spiral, the horizontal lift of the planar curve \(\kappa \) of the form (1.4) with phase \(\varphi (t)=\log (-\log t)\), \(t\in (0,1/2]\). In this case, we have

$$\begin{aligned} {\dot{\varphi }}(t)=\frac{1}{t\log t},\qquad t\in (0,1/2], \end{aligned}$$

and clearly \(\varphi (t)\rightarrow \infty \) and \({\dot{\varphi }}(t)\rightarrow -\infty \) as \(t\rightarrow 0^+\). In fact, we also have \(t{\dot{\varphi }}(t)=1/\log t\), so that \(t{\dot{\varphi }} \in L^\infty (0,1/2)\); since \(|{\dot{\kappa }}|^2=1+t^2{\dot{\varphi }}^2\), this implies that \(\kappa \), and thus \(\gamma \), is Lipschitz continuous. This spiral has the following additional properties:

(i) for any \(v\in \mathbb {R}^2\) with \(|v|=1\) there exists a sequence of positive real numbers \((\lambda _n)_{ n\in \mathbb {N}}\) with \(\lambda _n\rightarrow 0\) such that \(\kappa (\lambda _n t)/\lambda _n \rightarrow tv \) locally uniformly, as \(n\rightarrow \infty \);

(ii) for any sequence of positive real numbers \((\lambda _n)_{ n\in \mathbb {N}} \) with \(\lambda _n\rightarrow 0\) there exist a subsequence \((\lambda _{n_k})_{k\in \mathbb {N}}\) and a \(v\in \mathbb {R}^2\) with \(|v|=1\) such that \(\kappa (\lambda _{n_k} t)/\lambda _{n_k} \rightarrow tv \) locally uniformly, as \(k\rightarrow \infty \).

This means that the tangent cone of \(\kappa \) at \(t=0\) consists of all half-lines in \(\mathbb {R}^2\) emanating from 0.
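Property (i) can be observed numerically. Solving \(\varphi (\lambda )=\theta +2\pi n\) gives \(\lambda _n=\psi (\theta +2\pi n)=\exp (-\mathrm {e}^{\theta +2\pi n})\), and then \(\kappa (\lambda _n t)/\lambda _n=t\,\mathrm {e}^{i\log (\mathrm {e}^{\theta +2\pi n}-\log t)}\), which approaches \(t\,\mathrm {e}^{i\theta }\) as \(n\rightarrow \infty \). The sketch below (our own illustration, with hypothetical names) works with \(L=-\log \lambda \), because \(\lambda _n\) itself underflows in floating point almost immediately; it also checks the bound \(|t{\dot{\varphi }}(t)|\le 1/\log 2\) on \((0,1/2]\).

```python
import cmath
import math


def phase(t):
    # phi(t) = log(-log t) for t in (0, 1/2]
    return math.log(-math.log(t))


def phase_derivative(t):
    # phi'(t) = 1 / (t log t), which tends to -infinity as t -> 0+
    return 1.0 / (t * math.log(t))


def rescaled_kappa(t, minus_log_lambda):
    # kappa(lambda t) / lambda = t exp(i phi(lambda t)), with phi(lambda t) = log(L - log t)
    return t * cmath.exp(1j * math.log(minus_log_lambda - math.log(t)))


def blow_up_error(theta, n, t):
    # distance between kappa(lambda_n t) / lambda_n and t v, where v = e^{i theta}
    minus_log_lambda = math.exp(theta + 2 * math.pi * n)   # L_n = -log(lambda_n)
    v = cmath.exp(1j * theta)
    return abs(rescaled_kappa(t, minus_log_lambda) - t * v)


for n in (1, 2, 3):
    print(n, blow_up_error(theta=1.0, n=n, t=0.5))  # decreases rapidly with n

grid = [k / 10_000 * 0.5 for k in range(1, 10_001)]
print(max(abs(t * phase_derivative(t)) for t in grid), 1 / math.log(2))
```

Choosing a different angle \(\theta \) selects a different half-line, which is the numerical counterpart of the statement that every half-line belongs to the tangent cone.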

3 Cut and correction devices

In this section, we begin the construction of the competing curve. Let \(\gamma \) be a spiral with horizontal coordinates \(\kappa \) as in (1.4). We can assume that \(\varphi \) is decreasing and that \(\varphi (1)=1\), and we denote by \(\psi :[1,\infty )\rightarrow (0,1]\) the inverse function of \(\varphi \). For \(k\in \mathbb {N}\) and \(\eta \in [0,2\pi )\) we define \(t_{k \eta }\in (0,1]\) as the unique solution to the equation \(\varphi (t_{k\eta }) = 2\pi k + \eta \), i.e., we let \(t_{k\eta } = \psi (2\pi k + \eta )\). The times

$$\begin{aligned} t_k=t_{k0}=\psi (2\pi k) \end{aligned}$$

will play a special role in our construction. The points \(\kappa (t_k)=(t_k,0)\) lie on the positive \(x_1\)-axis.

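For a fixed \(k\in \mathbb {N}\), we cut the curve \(\kappa \) in the interval \([t_{k+1},t_{k}]\), following the line segment joining \(\kappa (t_{k+1})\) to \(\kappa (t_{k})\) instead of the path \(\kappa \), while we leave the remaining part of the path unchanged. We call this new curve \(\kappa _{k}^{\mathrm {cut}}\); namely, since \(\kappa (t_{k+1})=(t_{k+1},0)\) and \(\kappa (t_k)=(t_k,0)\), we let

$$\begin{aligned} \kappa _{k}^{\mathrm {cut}}(t)= {\left\{ \begin{array}{ll} \kappa (t), & t\in [0,t_{k+1}]\cup [t_k,1],\\ (t,0), & t\in [t_{k+1},t_k]. \end{array}\right. } \end{aligned}$$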

We denote by \(\gamma _{k}^{\mathrm {cut}}\in AC ([0,1];M) \) the horizontal curve with horizontal coordinates \(\kappa _{k}^{\mathrm {cut}}\) and such that \(\gamma _{k}^{\mathrm {cut}}(0)=\gamma (0)\). For \(t\in [0,t_{k+1}]\), we have \(\gamma _{k}^{\mathrm {cut}}(t)=\gamma (t)\). To correct the errors produced by the cut on the end-point, we modify the curve \(\kappa _{k}^{\mathrm {cut}}\) using a certain number of devices. The construction is made by induction.
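To see concretely why the cut shortens the curve and why it moves the end-point, the sketch below takes the hypothetical phase \(\varphi (t)=t^{-1/2}\), which is decreasing with \(\varphi (1)=1\), has \({\dot{\varphi }}(t)\rightarrow -\infty \), and makes \(\kappa \) absolutely continuous; then \(\psi (s)=s^{-2}\) and \(t_k=(2\pi k)^{-2}\). Assuming, for illustration only, that g makes \(X_1,X_2\) orthonormal, the length of a horizontal curve is the Euclidean length of \(\kappa \). With the hypothetical coefficient \(a_3=x_1\), the cut changes \(\gamma _3\) at the final time by \(-\int _{t_{k+1}}^{t_k}\kappa _1{\dot{\kappa }}_2\,dt\), because the replacing segment has \({\dot{\kappa }}_2=0\) and the two curves agree elsewhere.

```python
import math


def kappa(t):
    # hypothetical phase phi(t) = t**(-1/2): decreasing, phi(1) = 1, phi'(t) -> -infinity
    phi = t ** -0.5
    return (t * math.cos(phi), t * math.sin(phi))


def t_k(k):
    # t_k = psi(2 pi k), where psi(s) = s**(-2) is the inverse of phi
    return (2 * math.pi * k) ** -2


def cut_effect(k, samples=200_000):
    """Return (polygonal length of the spire of kappa on [t_{k+1}, t_k],
    length of the segment replacing it, change of gamma_3(1) when a_3 = x_1)."""
    t_lo, t_hi = t_k(k + 1), t_k(k)
    h = (t_hi - t_lo) / samples
    pts = [kappa(t_lo + m * h) for m in range(samples + 1)]
    arc, area = 0.0, 0.0
    for p, q in zip(pts, pts[1:]):
        arc += math.hypot(q[0] - p[0], q[1] - p[1])   # polygonal length
        area += 0.5 * (p[0] + q[0]) * (q[1] - p[1])   # int x_1 d x_2 on this piece
    chord = t_hi - t_lo   # kappa(t_j) = (t_j, 0) for every j
    # the segment has kappa_2' = 0, so it contributes nothing to gamma_3
    return arc, chord, -area


arc, chord, error3 = cut_effect(1)
print(f"spire length {arc:.5f} > segment length {chord:.5f}; gamma_3 error {error3:.2e}")
```

The nonzero error on \(\gamma _3\) is exactly what the correction devices introduced below are designed to cancel.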

We start with the base construction. Let \(\mathscr {E}= (h,\eta ,\varepsilon )\) be a triple such that \(h\in \mathbb {N}\), \(0<\eta <\pi /4\), and \(\varepsilon \in \mathbb {R}\). Starting from a curve \(\kappa :[0,1]\rightarrow \mathbb {R}^2\), we define the curve \(\mathrm {D}(\kappa ;\mathscr {E}):[0,1+2|\varepsilon |]\rightarrow \mathbb {R}^2\) in the following way:

We denote by \(\mathrm {D}(\gamma ;\mathscr {E})\) the horizontal curve with horizontal coordinates \(\mathrm {D}(\kappa ;\mathscr {E})\). We let \({\dot{\mathrm {D}}}(\gamma ;\mathscr {E})=\frac{d}{dt}\mathrm {D}(\gamma ;\mathscr {E})\) and we indicate by \(\mathrm {D}_i(\gamma ;\mathscr {E})\) the i-th coordinate of the corrected curve in exponential coordinates.

In the lifting formula (2.11), the intervals where \(\dot{\gamma }_2 = 0\) do not contribute to the integral. For this reason, in (3.2) we may cancel the second and fourth lines, where \({\dot{\mathrm {D}}}_2(\gamma ;\mathscr {E})=0\), and then reparameterize the curve on [0, 1]. Namely, we define the discontinuous curve \(\overline{\mathrm {D}}(\kappa ;\mathscr {E}):[0,1]\rightarrow \mathbb {R}^2\) as

The “formal” ith coordinate of the curve \(\overline{\mathrm {D}}(\kappa ;\mathscr {E})\) is given by

The following identities with \(\varepsilon >0\) can be checked by an elementary computation

When \(\varepsilon <0\) there are similar identities. With this notation, the final error produced on the ith coordinate by the correction device \(\mathscr {E}\) is

The proof of this formula is elementary and can be omitted.

We will iterate the above construction a number of times that depends on a collection of triples \(\mathscr {E}\). We first fix the number of triples and iterations.

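For \(i=3,\ldots ,n\), let \({\mathcal {B}}_i=\{(\alpha ,\beta )\in \mathbb {N}^2\, :\, \alpha +\beta =w_i-2\}\), where \(w_i\ge 2 \) is the homogeneous degree of the coordinate \(x_i\). Then, the polynomials \(p_i\) given by Theorem 2.1 and Theorem 2.2 are of the form

$$\begin{aligned} p_i(x_1,x_2)=\sum _{(\alpha ,\beta )\in {\mathcal {B}}_i} c_{\alpha \beta }\,x_1^{\alpha +1}x_2^{\beta }, \end{aligned}$$(3.6)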

for suitable constants \(c_{\alpha \beta }\in \mathbb {R}\). We set

and we consider an \((\ell -2)\)-tuple of triples \(\bar{\mathscr {E}}= (\mathscr {E}_3,\ldots ,\mathscr {E}_\ell )\) such that \(h_\ell<h_{\ell -1}<\cdots<h_3<k\). Each triple is used to correct one monomial.
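The index sets \({\mathcal {B}}_i\) and the monomials they describe are easy to enumerate. The sketch below (helper names are hypothetical) lists, for a given homogeneous degree \(w_i\), the pairs \((\alpha ,\beta )\) with \(\alpha +\beta =w_i-2\) and the exponents of the monomials \(x_1^{\alpha +1}x_2^\beta \), and checks that each monomial has \(\delta \)-homogeneous degree \(w_i-1\) (recall \(w_1=w_2=1\)) and vanishes on \(\{x_1=0\}\), in agreement with Theorem 2.1 (i). The degrees \(w_3=2\), \(w_4=3\), as for the Engel structure, are an assumption made for the usage example.

```python
def b_set(w_i):
    # B_i = {(alpha, beta) in N^2 : alpha + beta = w_i - 2}
    return [(alpha, w_i - 2 - alpha) for alpha in range(w_i - 1)]


def monomial_exponents(w_i):
    # exponents (alpha + 1, beta) of the monomials x_1^(alpha+1) x_2^beta
    return [(alpha + 1, beta) for alpha, beta in b_set(w_i)]


def delta_degree(exponents, w1=1, w2=1):
    # homogeneous degree of x_1^a x_2^b under the dilations delta_lambda
    a, b = exponents
    return a * w1 + b * w2


# Hypothetical example: coordinates x_3, x_4 with w_3 = 2 and w_4 = 3.
for w in (2, 3):
    exps = monomial_exponents(w)
    assert all(delta_degree(e) == w - 1 for e in exps)   # degree w_i - 1
    assert all(e[0] >= 1 for e in exps)                   # vanishes when x_1 = 0
    print(w, b_set(w), exps)
# prints:
# 2 [(0, 0)] [(1, 0)]
# 3 [(0, 1), (1, 0)] [(1, 1), (2, 0)]
```

In this example the monomials are \(x_1\), \(x_1x_2\) and \(x_1^2\); in the general construction each monomial with a nonzero coefficient would get its own device.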

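Without loss of generality, we simplify the construction in the following way. In the sum (3.6), we can assume that \(c_{\alpha \beta }=0\) for all \((\alpha ,\beta )\in {\mathcal {B}} _i\) but one. Namely, we can assume that

$$\begin{aligned} p_i(x_1,x_2)=c_{\alpha _i\beta _i}\,x_1^{\alpha _i+1}x_2^{\beta _i},\qquad (\alpha _i,\beta _i)\in {\mathcal {B}}_i, \end{aligned}$$(3.8)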

and with \(c_{\alpha _i\beta _i}=1\). In this case, we have \(\ell = n\) and we will use \(n-2\) devices associated with the triples \(\mathscr {E}_3,\ldots , \mathscr {E}_{n}\) to correct the coordinates \(i=3,\ldots ,n\). By the bracket generating property of the vector fields \(X_1\) and \(X_2\) and by the stratified basis property for \(X_1,\ldots ,X_n\), the pairs \(( \alpha _i, \beta _i)\) satisfy the following condition

In the general case (3.6), we use a larger number \(\ell \ge n\) of devices, one for each monomial \(x_1^{\alpha +1}x_2^\beta \) appearing in \(p_3(x_1,x_2),\ldots ,p_n(x_1,x_2)\), and we correct the error produced by the cut on each monomial. The argument showing the nonminimality of the spiral is the same. Therefore, in the rest of the paper we assume that the polynomials \(p_i\) are of the form (3.8) with (3.9).

Now we clarify the inductive step of our construction. Let \(\mathscr {E}_3 = (h_3,\eta _3,\varepsilon _3)\) be a triple such that \( h_3< k \). We define the curve \(\kappa ^{(3)} = \mathrm {D}( \kappa _{k}^{\mathrm {cut}};\mathscr {E}_3)\). Given a triple \(\mathscr {E}_4 = (h_4,\eta _4,\varepsilon _4)\) with \(h_4< h_3 \) we then define \(\kappa ^{(4)} = \mathrm {D}( \kappa ^{(3)};\mathscr {E}_4)\). By induction on \(\ell \in \mathbb {N}\), given a triple \(\mathscr {E}_\ell = (h_\ell ,\eta _\ell ,\varepsilon _\ell )\) with \(h_\ell < h_ {\ell -1}\), we define \(\kappa ^{(\ell )} = \mathrm {D}( \kappa ^{(\ell -1)};\mathscr {E}_\ell )\). When \(\ell =n\) we stop.

We define the planar curve \(\mathrm {D}(\kappa ;k, {{\bar{\mathscr {E}}} }) \in AC ([0,1+2 {\bar{\varepsilon }}];\mathbb {R}^2)\) as \(\mathrm {D}(\kappa ;k,{{\bar{\mathscr {E}}} }) = \kappa ^{(n)}\) according to the inductive construction explained above, where \({\bar{\varepsilon }} = |\varepsilon _3|+\cdots + |\varepsilon _n |\). We call the horizontal lift \(\mathrm {D}( \gamma ;k,{\bar{\mathscr {E}}}) \in AC ([0,1+2 {\bar{\varepsilon }}];M)\) of \(\mathrm {D}(\kappa ;k,{\bar{\mathscr {E}}})\) with \(\mathrm {D}(\gamma ;k,{\bar{\mathscr {E}}})(0) =\gamma (0)\) the modified curve of \(\gamma \) associated with \({\bar{\mathscr {E}}}\) and with cut parameter \(k\in \mathbb {N}\). One last adjustment is needed. In \([0,1+2 {\bar{\varepsilon }}]\) there are \(2(n-2)\) subintervals where \({\dot{\kappa }}_2^{(n)}=0\). On each of these intervals the coordinates \(\mathrm {D}_j ( \gamma ;k,{\bar{\mathscr {E}}}) \) are constant. Following the procedure explained in (3.2)–(3.4), we erase these intervals and parametrize the resulting curve on [0, 1]. We denote this curve by \({\bar{\gamma }} = \overline{\mathrm {D}}( \gamma ;k,{\bar{\mathscr {E}}}) \).

Definition 3.1

(Adjusted modification of \(\gamma \)) We call the curve \({\bar{\gamma }} = \overline{\mathrm {D}}( \gamma ;k,{\bar{\mathscr {E}}}): [0,1]\rightarrow M\) the adjusted modification of \(\gamma \) relative to the collection of devices \({\bar{\mathscr {E}}}=(\mathscr {E}_3,\ldots ,\mathscr {E}_n)\) and with cut parameter k.

Our next task is to compute the error produced by cut and devices on the end-point of the spiral. For \(i=3,\ldots , n\) and for \(t\in [0,1]\) we let

When \(t<t_{k+1}\) or \(t>t_k\) we have \(\dot{\kappa }_2=\dot{{\bar{\kappa }}}_2\) and so the definition above reads

By the recursive application of the argument used to obtain (3.5), we get the following formula for the error at the final time \({\bar{t}} =t_{h_n}\):

In (3.11) and in the following, we use the following notation for the intervals:

with \(t_{h_2}= t_k\). We used also the fact that on \([0,t_{k+1}]\) we have \(\gamma ={\bar{\gamma }}\).

On the interval \(F_k\) we have \(\dot{{\bar{\kappa }}}_2 =0\) and thus

On the intervals \(A_j\) we have \(\kappa ={\bar{\kappa }}\) and thus

because the functions \(a_i\) depend only on \(\kappa \). Finally, on the intervals \(B_j\) we have \({\bar{\kappa }}_1 = \kappa _1+ \varepsilon _j\) and \(\kappa _2 ={\bar{\kappa }}_2\) and thus

Our goal is to find \(k\in \mathbb {N}\) and devices \({\bar{\mathscr {E}}}\) such that \( E_i^{k,{\bar{\mathscr {E}}} }=0\) for all \(i=3,\ldots , n\) and such that the modified curve \(\mathrm {D}(\gamma ;k,{\bar{\mathscr {E}}})\) is shorter than \(\gamma \).

4 Effect of cut and devices on monomials and remainders

Let \(\gamma \) be a horizontal spiral with horizontal coordinates \(\kappa \in AC([0,1];\mathbb {R}^2)\) of the form (1.4). We prove some estimates about the integrals of the polynomials (3.8) along the curve \(\kappa \). These estimates are preliminary to the study of the errors introduced in (3.11).

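For \(\alpha ,\beta \in \mathbb {N}\), we associate with the monomial \(p_{\alpha \beta }(x_1,x_2)= x_1^{\alpha +1}x_2^{\beta }\) the function \( \gamma _{\alpha \beta }\) defined for \(t\in [0,1]\) by

$$\begin{aligned} \gamma _{\alpha \beta }(t)=\int _0^t \kappa _1(s)^{\alpha +1}\kappa _2(s)^{\beta }\,{\dot{\kappa }}_2(s)\,ds. \end{aligned}$$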

When \(p _ i = p_{\alpha \beta }\), the function \(\gamma _{\alpha \beta }\) is the leading term in the ith coordinate of \(\gamma \) in exponential coordinates. In this case, the problem of estimating \(\gamma _i(t)\) reduces to the estimate of integrals of the form

where \(\omega \le \eta \) are angles, \(t_\omega =\psi (\omega )\) and \(t_\eta =\psi (\eta )\). For \(\alpha ,\beta \in \mathbb {N}\), \(h\in \mathbb {N}\) and \(\eta \in (0,\pi /4)\) we also let

where in the second equality we set \(\vartheta =\varphi (t)\).

Proposition 4.1

There exist constants \(0<c_{\alpha \beta }< C_{\alpha \beta }\) depending on \(\alpha ,\beta \in \mathbb {N}\) such that for all \(h\in \mathbb {N}\) and \(\eta \in (0,\pi /4)\) we have

Before proving this proposition, we notice that the integrals \( I_{\omega \eta }^{\alpha \beta }\) in (4.1) are related to the integrals

Lemma 4.2

For any \(\alpha ,\beta \in \mathbb {N}\) and \(\omega \le \eta \) we have the identity

where we set \(D^{\alpha \beta }_\omega = \cos ^{\alpha +1}(\omega )\sin ^{\beta +1}(\omega )\).

Proof

Inserting into \(I_{\omega \eta }^{\alpha \beta }\) the identities \(\kappa _1(t)=t\cos (\varphi (t))\), \(\kappa _2(t)=t\sin (\varphi (t))\), and \({\dot{\kappa }} _2(t)=\sin (\varphi (t))+t\cos (\varphi (t))\dot{\varphi }(t)\) we get

and, integrating by parts in the first integral, this identity reads

Grouping the trigonometric terms and then performing the change of variable \(\varphi (t)=\vartheta \), we get

This is our claim. \(\square \)
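For the reader's convenience, the computation in the proof can be written out as a single chain. This is a sketch under two assumptions that the text implies but does not display: that (4.1) reads \(I^{\alpha\beta}_{\omega\eta}=\int_{t_\eta}^{t_\omega}\kappa_1^{\alpha+1}\kappa_2^{\beta}\dot\kappa_2\,dt\), and that \(\varphi\) is decreasing, so that \(t_\eta\le t_\omega\) when \(\omega\le\eta\). The orientation and normalization of (4.4) and (4.5) in the paper may differ. We write \(w=\alpha+\beta+2\) and \(D(\vartheta)=\cos^{\alpha+1}\vartheta\,\sin^{\beta+1}\vartheta\), so that \(D^{\alpha\beta}_\omega=D(\omega)\).

```latex
\begin{aligned}
I^{\alpha\beta}_{\omega\eta}
 &= \int_{t_\eta}^{t_\omega} t^{w-1} D(\varphi)\,dt
  + \int_{t_\eta}^{t_\omega} t^{w}\cos^{\alpha+2}\varphi\,\sin^{\beta}\varphi\,\dot\varphi\,dt\\
 &= \Big[\frac{t^{w}}{w}\,D(\varphi)\Big]_{t_\eta}^{t_\omega}
  + \frac{\alpha+1}{w}\int_{t_\eta}^{t_\omega} t^{w}\cos^{\alpha}\varphi\,\sin^{\beta}\varphi\,\dot\varphi\,dt\\
 &= \frac{t_\omega^{w}D^{\alpha\beta}_\omega - t_\eta^{w}D^{\alpha\beta}_\eta}{w}
  - \frac{\alpha+1}{w}\int_{\omega}^{\eta}\psi(\vartheta)^{w}\cos^{\alpha}\vartheta\,\sin^{\beta}\vartheta\,d\vartheta .
\end{aligned}
```

The second equality uses \(D'(\vartheta)=(\beta+1)\cos^{\alpha+2}\vartheta\sin^{\beta}\vartheta-(\alpha+1)\cos^{\alpha}\vartheta\sin^{\beta+2}\vartheta\) together with \(\cos^2\vartheta+\sin^2\vartheta=1\); the third is the change of variable \(\vartheta=\varphi(t)\), \(t=\psi(\vartheta)\).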

Proof of Proposition 4.1

From (4.5) with \(D^{\alpha \beta }_{2h\pi } =0\), which holds because \(\sin (2h\pi )=0\), we obtain

where \(c_{\alpha \beta } \eta ^{\beta +1}\le D^{\alpha \beta }_\eta \le \eta ^{\beta +1}\), because \(\eta \in (0,\pi /4)\), and

The claim follows. \(\square \)
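The integration-by-parts identity behind Lemma 4.2 and Proposition 4.1 can be sanity-checked numerically on a concrete spiral. The sketch below is only an illustration under stated assumptions: it uses the hypothetical phase \(\varphi(t)=-\log t\) (so \(\psi(\vartheta)=e^{-\vartheta}\)), assumes that (4.1) has the form \(\int_{t_\eta}^{t_\omega}\kappa_1^{\alpha+1}\kappa_2^{\beta}\dot\kappa_2\,dt\), and compares it with \(\big(t_\omega^{w}D^{\alpha\beta}_\omega-t_\eta^{w}D^{\alpha\beta}_\eta\big)/w-\frac{\alpha+1}{w}\int_\omega^\eta\psi^{w}\cos^\alpha\vartheta\sin^\beta\vartheta\,d\vartheta\), with \(w=\alpha+\beta+2\). The helper names (`lhs`, `rhs`, `simpson`) are hypothetical.

```python
import math

# Hypothetical test spiral: phase phi(t) = -log t, with inverse psi(theta) = exp(-theta).
def phi(t):
    return -math.log(t)

def dphi(t):
    return -1.0 / t

def psi(theta):
    return math.exp(-theta)

def simpson(f, a, b, n=4000):
    """Composite Simpson rule on [a, b] with an even number n of subintervals."""
    if n % 2:
        n += 1
    h = (b - a) / n
    s = f(a) + f(b)
    for k in range(1, n):
        s += (4 if k % 2 else 2) * f(a + k * h)
    return s * h / 3

def lhs(alpha, beta, omega, eta):
    """Assumed form of (4.1): integral of k1^(alpha+1) k2^beta k2' over [t_eta, t_omega]."""
    def integrand(t):
        p = phi(t)
        k1, k2 = t * math.cos(p), t * math.sin(p)
        dk2 = math.sin(p) + t * math.cos(p) * dphi(t)
        return k1 ** (alpha + 1) * k2 ** beta * dk2
    return simpson(integrand, psi(eta), psi(omega))

def rhs(alpha, beta, omega, eta):
    """Boundary term plus angular integral, as produced by integrating by parts."""
    w = alpha + beta + 2
    def D(th):
        return math.cos(th) ** (alpha + 1) * math.sin(th) ** (beta + 1)
    boundary = (psi(omega) ** w * D(omega) - psi(eta) ** w * D(eta)) / w
    J = simpson(lambda th: psi(th) ** w * math.cos(th) ** alpha * math.sin(th) ** beta,
                omega, eta)
    return boundary - (alpha + 1) / w * J

if __name__ == "__main__":
    for args in [(0, 0, 0.1, 3.0), (1, 2, 0.3, 2.0)]:
        print(args, lhs(*args), rhs(*args))
```

With \(\omega=2h\pi\) the boundary term at \(\omega\) vanishes, and for \(\eta\in(0,\pi/4)\) both remaining terms have the same sign, which is the mechanism behind the two-sided bounds of Proposition 4.1.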

Remark 4.3

We will use the estimates (4.3) in the proof of the solvability of the end-point equations. The computations above rely on the structure of the monomials \(p_i\): their dependence on the variables \(x_1\) and \(x_2\) only, ensured by (1.2), is crucial. When the coefficients \(a_i\) depend on all the variables \(x_1,\ldots ,x_n\), repeating the same computations seems difficult. Indeed, the coordinates \(\gamma _3,\ldots ,\gamma _n\) would then also appear in the integrals (4.1) and (4.4), and the analogue of identity (4.5) would contain additional terms, produced by the integration by parts and involving the derivatives of \(\gamma _3,\ldots ,\gamma _n\). Because of these new terms, the lower bounds in (4.3) are difficult to obtain, while the upper bounds remain available.

We denote by \(\kappa _\varepsilon \) the translation of the curve \(\kappa \) by \(\varepsilon \in \mathbb {R}\) in the \(x_1\)-direction, namely \(\kappa _{\varepsilon ,1}=\kappa _1+\varepsilon \) and \(\kappa _{\varepsilon ,2}=\kappa _2\). Recall the notation \(t_h =\psi (2\pi h)\) and \(t_{h\eta } =\psi (2\pi h+\eta )\), for \(h\in \mathbb {N}\) and \(\eta >0\). In particular, when \(\varepsilon _j\), \(h_j\) and \(\eta _j\) are the parameters of the j-th correction device, we have \(\kappa _{\varepsilon _j}|_{B_j}={\bar{\kappa }}|_{B_j}\).

In the study of the polynomial part of the integrals in (3.15) we need estimates for the quantities

Lemma 4.4

We have

where \(O(\varepsilon ^2)/\varepsilon ^2\) is bounded as \(\varepsilon \rightarrow 0\).

Proof

The proof is an elementary computation:

\(\square \)
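The precise quantities in (4.6) are not reproduced here, so the following is only a sketch of the kind of elementary computation involved. For a single monomial \(p_{\alpha\beta}(x_1,x_2)=x_1^{\alpha+1}x_2^{\beta}\) evaluated at a point translated by \(\varepsilon\) in the \(x_1\)-direction, the binomial theorem gives the exact expansion below. If \(|x_1|,|x_2|\le t\) and \(|\varepsilon|\le t\), the sum is bounded by \(2^{\alpha+1}\varepsilon^2 t^{\alpha+\beta-1}\) (and it is empty when \(\alpha=0\)), so it is indeed of order \(O(\varepsilon^2)\).

```latex
p_{\alpha\beta}(x_1+\varepsilon,x_2)-p_{\alpha\beta}(x_1,x_2)
 = (\alpha+1)\,\varepsilon\,x_1^{\alpha}x_2^{\beta}
 + \sum_{m=2}^{\alpha+1}\binom{\alpha+1}{m}\varepsilon^{m}x_1^{\alpha+1-m}x_2^{\beta}.
```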

We now estimate the terms in (3.13); the quantities \(\Delta _i^\gamma \) were introduced in (4.6).

Lemma 4.5

Let \(\gamma \) be a horizontal spiral with phase \(\varphi \). For all \(i=3,\ldots , n\) and for all \(k\in \mathbb {N}\) large enough we have

Proof

By (4.5) with vanishing boundary contributions, we obtain

so we are left with the estimate of the integral of \(r_i\). Using \(\kappa _2 = t \sin (\varphi (t))\) we get

where we let

From (1.4), we have \(|\kappa (t)| \le t\) for all \(t\in [0,1]\). By part (ii) of Theorem 2.1 we have \(|r_i(x)|\le C \Vert x\Vert ^{w_i}\) for all \(x\in \mathbb {R}^n\) near 0, with \(w_i = \alpha _i+\beta _i+2\). It follows that \(|r_i(\kappa (t))|\le C t ^{w_i}\) for all \(t\in [0,1]\), and \(|R_i(t)| \le C t^{w_i+1}\). We deduce that

and the claim follows. \(\square \)

Now we study the integrals in (3.15). Let us introduce the following notation

Lemma 4.6

Let \(\gamma \) be a horizontal spiral with phase \(\varphi \). Then for any \(j=3,\ldots ,n\) and for \(|\varepsilon _j|<t_{h_j\eta _j}\), we have

where \(C>0\) is a constant.

Proof

For \(t\in B_j\) we have \(\kappa _2(t)={\bar{\kappa }}_2(t)\) and \({\bar{\kappa }}_1(t)=\kappa _1(t)+\varepsilon _j\). By the mean value (Lagrange) theorem it follows that

where \(\kappa ^*(t)=(\kappa _1^*(t),\kappa _2(t))\) and \(\kappa _1^*(t)=\kappa _1(t) + \sigma _j\), with \(\sigma _j\) between 0 and \(\varepsilon _j\). By Theorem 2.1 we have \(|\partial _1r_i(x)|\le C \Vert x\Vert ^{w_i-1}\) and so, also using \(|\sigma _j|<|\varepsilon _j|<t\),

This implies \(|\delta _{r_i}^\gamma (t)|\le C |\varepsilon _j|t^{w_i-1}\).
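The step from the bound on \(\partial_1 r_i\) to the estimate for \(\delta^\gamma_{r_i}\) can be spelled out as follows. This sketch assumes that \(\delta^\gamma_{r_i}(t)=r_i({\bar\kappa}(t))-r_i(\kappa(t))\), that every \(t\in B_j\) satisfies \(t\ge t_{h_j\eta_j}>|\varepsilon_j|\), and that on points of the form \((x_1,x_2,0,\dots,0)\) the norm \(\Vert\cdot\Vert\) of Theorem 2.1 is controlled by the Euclidean norm of \((x_1,x_2)\) up to a factor absorbed into \(C\).

```latex
\begin{aligned}
\Vert\kappa^*(t)\Vert &\le |\kappa(t)|+|\sigma_j| \le t+|\varepsilon_j| < 2t,\\
|\delta^\gamma_{r_i}(t)| &= |\partial_1 r_i(\kappa^*(t))|\,|\varepsilon_j|
 \le C\,(2t)^{w_i-1}|\varepsilon_j| = 2^{w_i-1}C\,|\varepsilon_j|\,t^{w_i-1}.
\end{aligned}
```

Since \(w_i\ge 2\), the factor \(2^{w_i-1}\) depends only on \(i\) and can be absorbed into the constant.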

Now, the integral we have to study is

We integrate by parts the integral that does not contain \(\dot{\varphi }\), obtaining

Since the boundary term is 0, we obtain

and thus

\(\square \)

Remark 4.7

We stress again that, when the coefficients \(a_i\) depend on all the variables \(x_1,\ldots ,x_n\), the computations above are much less transparent. Indeed, the varying coordinates \(\gamma _3,\ldots ,\gamma _n\) produce a non-commutative interaction between the devices, which modifies the coefficients of the parameters \(\varepsilon _j\).

5 Solution to the end-point equations

In this section we solve the system of equations \(E_i^{k,\bar{\mathscr {E}}}=0\), \(i=3,\ldots ,n\). The homogeneous polynomials \(p_j\) are of the form \(p_j(x_1,x_2) = x_1^{\alpha _j+1} x_2 ^ {\beta _j}\), as in (3.8).

The quantities (3.13), (3.14) and (3.15) are, respectively,

where we used the shorthand \(I^{\alpha _i\beta _i}_k=I^{\alpha _i\beta _i}_{2\pi k, 2\pi (k+1) }\). Thus the end-point equations \(E_i^{k,\bar{\mathscr {E}}}=0\) read

with

We will regard k, \({h_j}\), and \(\eta _j\) as parameters and we will solve the system of equations (5.2) in the unknowns \(\varepsilon =(\varepsilon _3,\ldots ,\varepsilon _n)\). The functions \(f_i:\mathbb {R}^{n-2}\rightarrow \mathbb {R}\) are analytic and the data \(b_i\) are estimated from above by (4.8):

Theorem 5.1

There exist real parameters \(\eta _3,\ldots , \eta _n>0\) and integers \(h_3>\cdots >h_n\) such that for all \(k\in \mathbb {N}\) large enough the system of equations (5.2) has a unique solution \(\varepsilon = (\varepsilon _3,\ldots ,\varepsilon _n)\) satisfying

for a constant \(C>0\) independent of k.

Proof

We will use the inverse function theorem. Let \(A=\big ( a_{ij}\big ) _{i,j =3,\ldots ,n}\in M_{n-2} (\mathbb {R})\) be the Jacobian matrix of \(f= (f_3,\ldots , f_n)\) in the variables \(\varepsilon =(\varepsilon _3,\ldots ,\varepsilon _n)\) computed at \(\varepsilon =0\). By (4.6) and Lemma 4.6 we have

Here, we are using the fact that for \(h_j\rightarrow \infty \) we have

The proof of Theorem 5.1 will be complete if we show that the matrix A is invertible.
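To see why invertibility of the Jacobian at \(\varepsilon=0\) is all that is needed, recall that it makes \(f(\varepsilon)=b\) uniquely solvable near 0 for small data, with a solution of size \(O(|b|)\). The sketch below illustrates this with Newton's method, a standard constructive route to the inverse function theorem used here only for illustration. The map `f`, its Jacobian \(A=\begin{pmatrix}2&1\\1&3\end{pmatrix}\) at 0, the data `b` and the constant \(2\Vert A^{-1}\Vert_\infty\) are hypothetical and are not the \(f_i\) of (5.2).

```python
def f(e):
    """Hypothetical analytic map with f(0) = 0 and Jacobian [[2, 1], [1, 3]] at 0."""
    e1, e2 = e
    return (2 * e1 + e2 + e1 ** 2, e1 + 3 * e2 + e1 * e2)

def jacobian(e):
    """Jacobian matrix of f at the point e."""
    e1, e2 = e
    return ((2 + 2 * e1, 1.0), (1 + e2, 3 + e1))

def solve_2x2(m, v):
    """Solve m x = v by Cramer's rule."""
    (a, b), (c, d) = m
    det = a * d - b * c
    return ((d * v[0] - b * v[1]) / det, (a * v[1] - c * v[0]) / det)

def newton(b, steps=20):
    """Newton iteration for f(e) = b, started at e = 0."""
    e = (0.0, 0.0)
    for _ in range(steps):
        r = tuple(fi - bi for fi, bi in zip(f(e), b))
        de = solve_2x2(jacobian(e), r)
        e = (e[0] - de[0], e[1] - de[1])
    return e

if __name__ == "__main__":
    b = (1e-3, -2e-3)
    eps = newton(b)
    # For A = [[2, 1], [1, 3]] one has ||A^{-1}||_inf = 0.8; the solution is O(|b|).
    print(eps, max(map(abs, eps)) <= 2 * 0.8 * max(map(abs, b)))
```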

We claim that there exist real parameters \(\eta _3,\ldots , \eta _n>0\) and positive integers \(h_3>\cdots >h_n\) such that

The proof is by induction on n. When \(n=3\), the matrix A reduces to the real number \(a_{33}\). From (5.5) and (4.3) we deduce that for any \(\eta _3\in (0,\pi /4)\) we have

We can choose \(h_3\in \mathbb {N}\) as large as we wish.

Now we prove the inductive step. Let \(n\ge 4\) and assume that (5.6) holds when A is an \((n-3)\times (n-3)\) matrix. We expand \(\det (A)\) along the first column using the Laplace formula:

where

are the determinants of the minors. By the inductive assumption, there exist \(\eta _4,\ldots ,\eta _{n}\in (0,\pi /4)\) and integers \( h_4>\dots >h_n \) such that \(|P_i|>0\). By (4.3), for any \(\eta _3\in (0,\pi /4) \) we have the estimates

for absolute constants \(0<c_0<C_0\). The dominant entry among the \(|a_{i3}|\) is identified as follows. On the set \({\mathcal {A}} = \{ (\alpha _i,\beta _i) \in \mathbb {N}\times \mathbb {N}: i=3 ,\ldots , n\}\) we introduce the order \((\alpha ,\beta )<(\alpha ',\beta ')\) defined by the conditions \(\alpha +\beta < \alpha '+\beta '\), or \(\alpha +\beta = \alpha '+\beta '\) and \(\beta <\beta '\). We denote by \((\alpha _\iota ,\beta _\iota )\in {\mathcal {A}}\), for some \(\iota \in \{3,\ldots ,n\}\), the minimal element with respect to this order.
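The order on \({\mathcal {A}}\) compares total degree first and breaks ties by the smaller \(\beta\) (equivalently, the larger \(\alpha\)). A minimal sketch of how the distinguished index \(\iota\) can be found, as a sort key on exponent pairs; the pairs used in the example are hypothetical.

```python
def order_key(pair):
    """Key realizing the order of the text: compare alpha + beta first, then beta."""
    alpha, beta = pair
    return (alpha + beta, beta)

def minimal_pair(pairs):
    """Minimal element of a finite set of exponent pairs (alpha, beta)."""
    return min(pairs, key=order_key)

if __name__ == "__main__":
    # Hypothetical exponent pairs (alpha_i, beta_i) for i = 3, ..., 6.
    pairs = [(0, 3), (2, 0), (1, 1), (3, 0)]
    print(minimal_pair(pairs))               # (2, 0)
    print(sorted(pairs, key=order_key))      # [(2, 0), (1, 1), (3, 0), (0, 3)]
```

Since two pairs with the same \(\alpha+\beta\) and the same \(\beta\) coincide, this is a total order, so the minimal element of \({\mathcal {A}}\) is well defined.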

We claim that, given \(\varepsilon _0>0\), for all \(h_3 > h_4\) large enough and for some \( 0< \eta _3<\pi /4\) the following inequalities hold:

If \(i\ne \iota \) satisfies \(\alpha _i+ \beta _i =\alpha _\iota +\beta _\iota \), then \(\beta _i>\beta _\iota \). By (5.9) and (4.2), inequality (5.10) is implied by \(\eta _3^{\beta _i -\beta _\iota } |P_i| \le \varepsilon _0|P_\iota |\), possibly for a smaller \(\varepsilon _0\). So we fix \(\eta _3\in (0,\pi /4)\), independently of \(h_3\), such that

If \(i\) satisfies \(\alpha _i+ \beta _i >\alpha _\iota +\beta _\iota \), inequality (5.10) is implied by

This holds for all \(h_3\in \mathbb {N}\) large enough.

We can now bound \(|\det (A)|\) from below using (5.10). We have

and the last inequality holds for all \(h_3\in \mathbb {N}\) large enough, after fixing \(\eta _3>0\). This ends the proof of the theorem. \(\square \)
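The mechanism of the inductive step can be illustrated on a small example: expand the determinant along the first column and check that one product \(|a_{i3}P_i|\) exceeds the sum of all the others, which forces \(\det(A)\ne 0\) by the triangle inequality. In the sketch below indices are 0-based, \(P_i\) is the unsigned minor, and the matrices are hypothetical numbers, not the actual \(a_{ij}\).

```python
def minor(m, i):
    """Delete row i and the first column of the square matrix m."""
    return [row[1:] for k, row in enumerate(m) if k != i]

def det(m):
    """Determinant by Laplace expansion along the first column (recursive)."""
    if len(m) == 1:
        return m[0][0]
    return sum((-1) ** i * m[i][0] * det(minor(m, i)) for i in range(len(m)))

def dominance_certifies(m):
    """True if one term |a_{i1} P_i| of the first-column expansion exceeds the sum
    of all the other terms; then det(m) != 0 by the triangle inequality."""
    terms = [abs(m[i][0] * det(minor(m, i))) for i in range(len(m))]
    largest = max(terms)
    return largest > sum(terms) - largest

if __name__ == "__main__":
    # Hypothetical 3x3 matrix whose first entry dominates the first column.
    A = [[10, 1, 2], [1, 3, 1], [1, 1, 4]]
    print(det(A), dominance_certifies(A))    # 103 True
```

The criterion is only sufficient: a matrix can be invertible without any single term dominating, which is why the proof tunes \(\eta_3\) and \(h_3\) to create the dominance.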

6 Nonminimality of the spiral

In this section we prove Theorem 1.2. Let \(\gamma \in AC([0,1];M)\) be a horizontal spiral of the form (1.4). We work in exponential coordinates of the second type centered at \(\gamma (0)\).

We fix on \(\mathcal {D}\) the metric g that makes the vector fields \(X_1\) and \(X_2\) spanning \(\mathcal {D}\) orthonormal. This is no loss of generality, because any other metric is equivalent to this one in a neighborhood of the center of the spiral. With this choice, the length of \(\gamma \) is the standard Euclidean length of its horizontal coordinates, and for a spiral as in (1.4) we have

In particular, \(\gamma \) is rectifiable precisely when \(t{\dot{\varphi }} \in L^1(0,1)\), and \(\kappa \) is a Lipschitz curve in the plane precisely when \(t {\dot{\varphi }} \in L^ \infty (0,1)\).
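The length formula can be made explicit: from \(\kappa(t)=t(\cos\varphi(t),\sin\varphi(t))\) one gets \(|\dot\kappa|^2=1+t^2\dot\varphi^2\), so \(L(\gamma)=\int_0^1\sqrt{1+t^2\dot\varphi(t)^2}\,dt\), and both characterizations above follow from \(\max\{1,|x|\}\le\sqrt{1+x^2}\le 1+|x|\). The sketch below checks this numerically for the hypothetical phase \(\varphi(t)=-\log t\) (a logarithmic spiral, for which \(t\dot\varphi\equiv -1\)), comparing the length of an inscribed polygon on \([\delta,1]\) with the formula, whose exact value is \(\sqrt2(1-\delta)\). The function names are illustrative.

```python
import math

def phi(t):
    # Hypothetical phase of a logarithmic spiral: t * phi'(t) = -1 is bounded.
    return -math.log(t)

def dphi(t):
    return -1.0 / t

def kappa(t):
    """Horizontal coordinates kappa(t) = t (cos phi(t), sin phi(t))."""
    return (t * math.cos(phi(t)), t * math.sin(phi(t)))

def polyline_length(a, b, n):
    """Length of the inscribed polygon with n equal parameter steps on [a, b]."""
    pts = [kappa(a + (b - a) * k / n) for k in range(n + 1)]
    return sum(math.dist(p, q) for p, q in zip(pts, pts[1:]))

def length_formula(a, b, n):
    """Midpoint-rule approximation of the integral of sqrt(1 + t^2 phi'(t)^2) over [a, b]."""
    h = (b - a) / n
    mids = (a + (k + 0.5) * h for k in range(n))
    return h * sum(math.sqrt(1 + (t * dphi(t)) ** 2) for t in mids)

if __name__ == "__main__":
    delta = 0.01
    print(polyline_length(delta, 1.0, 20000), length_formula(delta, 1.0, 1000),
          math.sqrt(2) * (1 - delta))
```

Inscribed polygons always underestimate the length, so the polygonal value approaches \(\sqrt2(1-\delta)\) from below as the step shrinks.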

For \(k\in \mathbb {N}\) and \(\bar{\mathscr {E}} = (\mathscr {E}_3,\ldots ,\mathscr {E}_n)\), we denote by \(\mathrm {D}(\gamma ;k,\bar{\mathscr {E}})\) the curve constructed in Sect. 3. The devices \(\mathscr {E}_j =(h_j,\eta _j,\varepsilon _j)\) are chosen so that the parameters \(h_j,\eta _j\) are fixed as in Theorem 5.1 and, for k large enough, \((\varepsilon _3, \ldots ,\varepsilon _n)\) is the unique solution to the system (5.2). In this way the curves \(\gamma \) and \( \mathrm {D}(\gamma ;k,\bar{\mathscr {E}})\) have the same initial point and the same end-point.

We claim that for \(k\in \mathbb {N}\) large enough the length of \(\mathrm {D}(\gamma ;k,\bar{\mathscr {E}})\) is less than the length of \(\gamma \). We denote by \( \Delta L(k) =L(\gamma )- L(\mathrm {D}(\gamma ;k,\bar{\mathscr {E}})) \) the difference of the lengths, namely,

By (5.4), there exists a constant \(C_1>0\) independent of k such that the solution \(\varepsilon =(\varepsilon _3, \ldots ,\varepsilon _n)\) to the end-point equations (5.2) satisfies

We used (4.3) and the fact that \(w_i\ge 2\). The new constants \(C_2,C_3\) do not depend on k.

By (6.2) and (6.3), the inequality \(\Delta L(k)> 0\) is implied by

where \(C_4\) is a large constant independent of k. For any \(k\in \mathbb {N}\), we split the interval \(F_k= F_k^+\cup F_k^-\) where

On the set \(F_k^+\) we have

where the last inequality holds for all \(k\in \mathbb {N}\) large enough, namely as soon as \( 3 C_4 t_k <1\). On the set \(F_k^-\) we have

where the last inequality holds for all \(k\in \mathbb {N}\) large enough, by our assumption on the spiral

Now (6.5) and (6.6) imply (6.4) and thus \(\Delta L(k)>0\). This ends the proof of Theorem 1.2.

References

Barilari, D., Chitour, Y., Jean, F., Prandi, D., Sigalotti, M.: On the regularity of abnormal minimizers for rank 2 sub-Riemannian structures. J. Math. Pures Appl. (9) 133, 118–138 (2020)

Bellaïche, A.: The tangent space in sub-Riemannian geometry. Sub-Riemann. Geom. Prog. Math. 144, 1–78 (1996)

da Silva, A.B., Figalli, A., Parusiński, A., Rifford, L.: Strong Sard Conjecture and regularity of singular minimizing geodesics for analytic sub-Riemannian structures in dimension 3. arXiv:1810.03347

Hakavuori, E., Le Donne, E.: Non-minimality of corners in subRiemannian geometry. Invent. Math. 206(3), 693–704 (2016)

Hakavuori, E., Le Donne, E.: Blowups and blowdowns of geodesics in Carnot groups. arXiv:1806.09375

Hermes, H.: Nilpotent and high-order approximations of vector field systems. SIAM Rev. 33(2), 238–264 (1991)

Jean, F.: Control of Nonholonomic Systems: From Sub-Riemannian Geometry to Motion Planning. Springer Briefs in Mathematics, Springer, Cham (2014)

Le Donne, E., Leonardi, G.P., Monti, R., Vittone, D.: Extremal curves in nilpotent Lie groups. Geom. Funct. Anal. 23, 1371–1401 (2013)

Le Donne, E., Leonardi, G.P., Monti, R., Vittone, D.: Extremal polynomials in stratified groups. Commun. Anal. Geom. 26, 723–757 (2018)

Leonardi, G.P., Monti, R.: End-point equations and regularity of sub-Riemannian geodesics. Geom. Funct. Anal. 18(2), 552–582 (2008)

Liu, W., Sussmann, H.: Shortest paths for sub-Riemannian metrics on rank-two distributions. Mem. Am. Math. Soc. 118(564), x+104 (1995)

Montgomery, R.: Abnormal minimizers. SIAM J. Control Optim. 32(6), 1605–1620 (1994)

Monti, R.: Regularity results for sub-Riemannian geodesics. Calc. Var. Partial Differ. Equ. 49(1–2), 549–582 (2014)

Monti, R., Pigati, A., Vittone, D.: Existence of tangent lines to Carnot–Carathéodory geodesics. Calc. Var. Partial Differ. Equ. 57(3), Paper No. 75, 18 (2018)

Sussmann, H.: A regularity theorem for minimizers of real-analytic subRiemannian metrics. In: Proceedings of the 53rd IEEE Conference on Decision and Control, pp. 4801–4806 (2015)

Funding

Open access funding provided by Universitá degli Studi di Padova within the CRUI-CARE Agreement.


Additional information

Communicated by A. Malchiodi.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.