Abstract

Large real-world networks typically follow a power-law degree distribution. To study such networks, numerous random graph models have been proposed. However, real-world networks are not drawn at random. Therefore, Brach et al. (27th symposium on discrete algorithms (SODA), pp 1306–1325, 2016) introduced two natural deterministic conditions: (1) a power-law upper bound on the degree distribution (PLB-U) and (2) power-law neighborhoods, that is, the degree distribution of neighbors of each vertex is also upper bounded by a power law (PLB-N). They showed that many real-world networks satisfy both properties and exploited them to design faster algorithms for a number of classical graph problems. We complement their work by showing that some well-studied random graph models exhibit both of the mentioned PLB properties. PLB-U and PLB-N hold with high probability for Chung–Lu Random Graphs and Geometric Inhomogeneous Random Graphs and almost surely for Hyperbolic Random Graphs. As a consequence, all results of Brach et al. also hold with high probability or almost surely for those random graph classes. In the second part we study three classical \(\textsf {NP}\)-hard optimization problems on PLB networks. It is known that on general graphs with maximum degree \(\Delta\), a greedy algorithm, which chooses nodes in the order of their degree, only achieves an \(\Omega (\ln \Delta )\)-approximation for Minimum Vertex Cover and Minimum Dominating Set, and an \(\Omega (\Delta )\)-approximation for Maximum Independent Set. We prove that the PLB-U property with \(\beta >2\) suffices for the greedy approach to achieve a constant-factor approximation for all three problems. We also show that these problems are APX-hard even if PLB-U, PLB-N, and an additional power-law lower bound on the degree distribution hold. Hence, a PTAS cannot be expected unless P = NP. Furthermore, we prove that all three problems are in MAX SNP if the PLB-U property holds.


1 Introduction

A wide range of real-world networks exhibit a degree distribution that resembles a power-law [4, 39]. This means that the number of vertices with degree k is proportional to \(k^{-\beta }\), where \(\beta >1\) is the power-law exponent, a constant intrinsic to the network. This applies to Internet topologies [24], the Web [7, 35], social networks [1], power grids [43], and literally hundreds of other domains [40]. Networks with a power-law degree distribution are also called scale-free networks and have been widely studied.

To capture the degree distribution and other properties of scale-free networks, a multitude of random graph models have been proposed. These models include Preferential Attachment [7], the Configuration Model [2], Chung–Lu Random Graphs [18] and Hyperbolic Random Graphs [34]. Despite this multitude of random models, none of them truly has the same set of properties as real-world networks.

This shortcoming of random graph models motivates studying deterministic properties of scale-free models, as these deterministic properties can be checked for real-world networks. To describe the properties of scale-free networks without the use of random graphs, Aiello et al. [2] define \((\alpha , \beta )\)-Power Law Graphs. The problem with this model is that it essentially demands a perfect power-law degree distribution, whereas the degree distributions of real networks normally exhibit slight deviations from power laws. Therefore, \((\alpha , \beta )\)-Power Law Graphs are too constrained and do not capture most real networks.

To allow for those deviations in the degree distribution, Brach et al. [11] define buckets containing nodes of degrees \(\left[ 2^i, 2^{i+1}\right)\). If the number of nodes in each bucket is at most as high as for a power-law degree sequence, a network is said to be power-law bounded, which we denote as a network with property PLB-U. They also define the property of PLB neighborhoods: A network has PLB neighborhoods if every node of degree k has at most as many neighbors of degree at least k as if those neighbors were picked independently at random with probability proportional to their degree. We abbreviate this property as PLB-N. PLB-U and PLB-N allow some degree of parameterization: Both properties assume a power-law distribution with power-law exponent \(\beta >2\), a possible shift \(t\geqslant 0\), and a scaling factor \(c_1\) and \(c_3\), respectively. A shift of t means that the number of nodes of degree k is proportional to \((k+t)^{-\beta }\). A formal definition of both properties can be found in Sect. 3. Brach et al. [11] showed experimentally that the PLB-(U,N) properties hold for many real-world networks, which implies that the graph problems they consider can be solved faster on these real-world networks than worst-case lower bounds for general graphs suggest.

2 Our Contribution

2.1 PLB Properties in Power-Law Random Graph Models

The PLB-(U,N) properties are designed to describe power-law graphs in a way that allows analyzing algorithms deterministically. As already mentioned, there is a multitude of random graph models [2, 7, 18, 34], which can be used to generate power-law graphs. Brach et al. [11] proved that the Erased Configuration Model [2] with a power-law degree distribution follows PLB-U and w. h. p. also PLB-N. In the Configuration Model a graph with a given degree sequence is sampled uniformly at random. This is done by generating \(\deg (v)\) stubs for each node \(v\in V\) and then matching these stubs independently and uniformly at random to create edges. In the Erased Configuration Model loops and multiple edges are removed in order to generate a simple graph. Since the Erased Configuration Model has a fixed degree sequence, it is relatively easy to prove the PLB-U property, but it is quite technical to prove the PLB-N property. There are other power-law random graph models, which are based on expected degree sequences, e.g. Chung–Lu Random Graphs [18]. Brach et al. argued that for showing the PLB-U property on these models, a typical concentration statement does not work, as it accumulates the additive error for each bucket. They leave it as a challenging open question whether other random graph models also produce graphs with PLB-(U,N) properties with high probability.

The models we consider in Sect. 4 are Geometric Inhomogeneous Random Graphs, Hyperbolic Random Graphs, and Chung–Lu Random Graphs.

Geometric Inhomogeneous Random Graphs satisfy PLB-(U,N): Geometric Inhomogeneous Random Graphs (GIRGs) [12, 13, 33] consider an expected degree vector and an underlying geometry.

In GIRGs, all nodes draw a position uniformly at random and each edge (i, j) exists independently with a probability depending on \(\frac{w_i\cdot w_j}{W}\) and the distance of i and j in the underlying geometry. We show:

Theorem 4.11

Let G be a GIRG whose weight sequence \(\mathbf {w}\) follows a general power-law with exponent \(\beta '>2\). Then, for all \(2<\beta <\beta '\) and \(t=0\) there are constants \(c_1\) and \(c_3\) such that G fulfills PLB-U and PLB-N with high probability.

Hyperbolic Random Graphs satisfy PLB-(U,N): Hyperbolic Random Graphs (HRGs) [34] assume an underlying hyperbolic space. Each node is positioned uniformly at random in this space and connected to other nodes with a probability that depends on its hyperbolic distance to them. For Hyperbolic Random Graphs we show the following:

Theorem 4.14

Let G be an HRG with \(\alpha _H>\frac{1}{2}\). Then, G almost surely fulfills PLB-U and PLB-N with \(\beta =2\alpha _H+1-\eta\), \(t=0\), any constant \(\eta >0\), and some constants \(c_1\) and \(c_3\).

Chung–Lu Random Graphs satisfy PLB-(U,N): Chung–Lu Random Graphs (CLRGs) [18] assume a sequence of expected degrees \(w_1,\ w_2,\ldots ,\ w_n\) and each edge (i, j) exists independently at random with probability \(\min (1,\frac{w_i\cdot w_j}{W})\), where \(W=\sum _{i=1}^{n}{w_i}\). We show the following theorem:

Theorem 4.16

Let G be a CLRG whose weight sequence \(\mathbf {w}\) follows a general power-law with exponent \(\beta '>2\). Then, for all \(2<\beta <\beta '\) and \(t=0\) there are constants \(c_1\) and \(c_3\) such that G fulfills PLB-U and PLB-N with high probability.
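The Chung–Lu edge rule stated above can be sketched in a few lines. This is only an illustrative sampler under the stated edge probability \(\min (1,\frac{w_i\cdot w_j}{W})\); the function name `chung_lu` and the `seed` parameter are hypothetical, and the quadratic loop is chosen for clarity, not efficiency.

```python
import random

def chung_lu(weights, seed=None):
    """Sample a Chung-Lu graph: edge {i, j} appears independently
    with probability min(1, w_i * w_j / W), where W is the total weight."""
    rng = random.Random(seed)
    n = len(weights)
    W = sum(weights)
    edges = []
    for i in range(n):
        for j in range(i + 1, n):
            p = min(1.0, weights[i] * weights[j] / W)
            # rng.random() lies in [0, 1), so p = 1 always yields an edge
            if rng.random() < p:
                edges.append((i, j))
    return edges

# Three nodes of weight 3: every pair has probability min(1, 9/9) = 1.
print(chung_lu([3, 3, 3]))
```

Feeding the resulting edge list into a bucket count of the degrees is one way to inspect empirically how close a sampled instance comes to a power-law bound.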

2.2 Algorithmic Results

The above results imply that all results of Brach et al. [11] also hold w. h. p. for Geometric Inhomogeneous Random Graphs and Chung–Lu Random Graphs and almost surely for Hyperbolic Random Graphs. Therefore, the problems transitive closure, maximum matching, determinant, PageRank, matrix inverse, counting triangles and maximum clique have faster algorithms on Chung–Lu and Geometric Inhomogeneous Random Graphs w. h. p. and on Hyperbolic Random Graphs almost surely.

In this work we additionally consider the three classical \(\textsf {NP}\)-complete problems Minimum Dominating Set (MDS), Maximum Independent Set (MIS) and Minimum Vertex Cover (MVC) on PLB-U networks. For the first two problems, positive results are already known for \((\alpha , \beta )\)-Power Law Graphs, which are a special case of graphs with the PLB-U property and an additional power-law lower bound on the degree distribution (PLB-L). Note that this deterministic graph class is much more restrictive and does not cover typical real-world graphs. In contrast, our positive results only assume the PLB-U property. Our algorithmic results can therefore be applied to real-world networks after measuring the respective constants of the PLB model. In Sect. 5 we prove our main lemma, Lemma 5.2 (the Potential Volume Lemma). Using the Potential Volume Lemma, we prove lower bounds of order \(\Theta (n)\) on the size of MDS, MIS and MVC on PLB-U networks with exponent \(\beta >2\). This essentially means that even taking all nodes as a solution gives a constant-factor approximation. Furthermore, in Theorem 5.7 we prove that the greedy algorithm actually achieves a better constant approximation ratio for Minimum Dominating Set. The positive results from Sect. 5 also hold for \((\alpha , \beta )\)-Power Law Graphs.

Brach et al. [11] proved that for PLB-(U,N) networks with \(\beta >3\) finding a maximum clique is solvable in polynomial time. This result gives rise to the question whether the PLB-N property can be helpful in solving other \(\textsf {NP}\)-complete problems on power-law graphs in polynomial time. In Sect. 6 we consider the mentioned \(\textsf {NP}\)-complete problems MDS, MIS and MVC and prove that these problems are APX-hard even for PLB-(U,L,N) networks with \(\beta >2\). Therefore, at least for the three problems we considered, even the PLB-N property is not enough to make those problems polynomial-time solvable. As a side product we also get a lower bound on the approximability of the respective problems under some complexity-theoretic assumptions. Since the negative results for \((\alpha , \beta )\)-Power Law Graphs imply the same non-approximability on graphs with PLB-(U,L), we only consider simple graphs with PLB-(U,L,N) in Sect. 6.

Finally, we show that all three problems are in MAX SNP for graphs with PLB-U and \(\beta >2\). This implies that, if we reduce any of those problems to Max 3-Sat, there exists an FPT algorithm where the parameter is the maximum number of satisfiable clauses minus a lower bound on this number, which is linear in the total number of clauses. This parameter can be considerably smaller than the solution size of the original problem.

2.2.1 Dominating Set

Given a graph \(G=(V,E)\), a Minimum Dominating Set (MDS) is a subset \(S\subseteq {V}\) of minimum size such that for each \(v\in {V}\) either v or a neighbor of v is in S. MDS cannot be approximated within a factor of \((1-\varepsilon )\,\ln |V|\) for any \(\varepsilon >0\) [25] unless \(\textsf {NP}\subseteq \textsf {DTIME}(|V|^{\log \log |V|})\), and not within a factor of \(\ln \Delta - c\ln \ln \Delta\) for some \(c>0\) [16] unless \(\textsf {P}=\textsf {NP}\), although a simple greedy algorithm achieves an approximation ratio of \(1+\ln \Delta\) [32]. We also know that even for sparse graphs, MDS cannot be approximated within a factor of \(o(\ln (n))\), since we could have a graph with a star of \(n-\sqrt{n}\) nodes to which an arbitrary graph of the \(\sqrt{n}\) remaining nodes is attached [36]. Furthermore, if we parameterize Dominating Set by the size of the solution, it is \(\textsf {W[2]}\)-complete [21].

MDS has already been studied in the context of \((\alpha , \beta )\)-Power Law Graphs. Ferrante, Pandurangan, and Park [26] showed that the problem remains \(\textsf {NP}\)-hard for \(\beta >0\). Shen et al. [46] proved that there is no \(\left( 1+\frac{1}{3120\zeta (\beta )3^\beta }\right)\)-approximation for \(\beta >1\) unless \(\textsf {P}=\textsf {NP}\). They also showed that the greedy algorithm achieves a constant approximation factor for \(\beta >2\), showing that in this case the problem is in APX. Gast, Hauptmann, and Karpinski [29] also proved a logarithmic lower bound on the approximation factor when \(\beta \leqslant 2\).

For graphs with the PLB-U property and power law exponent \(\beta >2\) we will show a lower bound on the size of the minimum dominating set in the range of \(\Theta (n)\), which already gives us a constant factor approximation by taking all nodes. This also means that any brute-force algorithm which runs in exponential time is in FPT when we take the solution size as a parameter.

In contrast to \((\alpha , \beta )\)-Power Law Graphs the PLB-U property captures a wide range of real networks, making it possible to transfer our results to them. All our upper bounds are in terms of the following two expressions, which depend on the parameters \(c_1\), \(\beta\) and t of the PLB-U property (cf. Definition 3.1):

In the rest of the paper we assume the parameters \(c_1\), \(\beta\) and t to be constants, which implies that \(a_{\beta ,t}\) and \(b_{c_1,\beta ,t}\) are constant as well.

Theorem 5.3

For a graph without loops and isolated vertices and with the PLB-U property with parameters \(\beta >2\), \(c_1>0\) and \(t\geqslant 0\), the minimum dominating set is of size at least

Furthermore, we will show that the greedy algorithm actually achieves a lower approximation factor than the one we get from the bound in Theorem 5.3 (see Fig. 3 for a comparison).

Theorem 5.7

For a graph without loops and isolated vertices and with the PLB-U property with parameters \(\beta >2\), \(c_1>0\) and \(t\geqslant 0\), the classical greedy algorithm for Minimum Dominating Set (cf. [22]) has an approximation factor of at most

Note that in networks with PLB-U the maximum degree can be \(\Delta =\Theta (n^{\frac{1}{\beta -1}})\). That means the simple bound for the greedy algorithm gives us only an approximation factor of \(\ln (\Delta +1)=\Theta (\log n)\).
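To make the greedy approach concrete, the following is a minimal sketch of the usual set-cover-style greedy for dominating sets: it repeatedly picks the node whose inclusive neighborhood contains the most not-yet-dominated nodes. Whether this matches the variant analyzed in [22] in every detail (for example, tie-breaking) is an assumption; the function name and the adjacency-dictionary input format are hypothetical.

```python
def greedy_dominating_set(adj):
    """adj maps each node to the set of its neighbors (no self-loops).
    Returns the nodes picked by the greedy rule, in order."""
    undominated = set(adj)
    solution = []
    while undominated:
        # Gain of v = number of undominated nodes in N^+(v) = {v} | N(v).
        best = max(adj, key=lambda v: len(({v} | adj[v]) & undominated))
        solution.append(best)
        undominated -= {best} | adj[best]
    return solution

# Path 0-1-2-3-4: two picks suffice to dominate all five nodes.
path = {0: {1}, 1: {0, 2}, 2: {1, 3}, 3: {2, 4}, 4: {3}}
print(greedy_dominating_set(path))
```

The loop terminates because any undominated node has gain at least one, so every iteration dominates at least one new node.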

In Minimum Connected Dominating Set we are looking for a smallest dominating set S with the extra property that the induced subgraph of S in G is connected. For this related problem we prove the following constant approximation factor for the greedy algorithm introduced by Ruan et al. [44] (Table 1).

Theorem 5.8

For a graph without loops and isolated vertices and with the PLB-U property with parameters \(\beta >2\), \(c_1>0\) and \(t\geqslant 0\), the greedy algorithm for Minimum Connected Dominating Set (cf. [44]) has an approximation factor of at most

Furthermore, we show that Minimum Dominating Set remains \(\textsf {APX}\)-hard on networks with PLB-U and \(\beta >2\), even with the PLB-L and PLB-N property. Finally, we prove that on networks with PLB-U and \(\beta >2\), Minimum Dominating Set is in MAX SNP (Table 2).

2.2.2 Independent Set

For a graph \(G=(V,E)\), Maximum Independent Set (MIS) consists of finding a subset \(S\subseteq {V}\) of maximum size, such that no two different vertices \(u,v\in S\) are connected by an edge. MIS cannot be approximated within a factor of \(\Delta ^\varepsilon\) for some \(\varepsilon >0\) unless \(\textsf {P}=\textsf {NP}\) [6], although a simple greedy algorithm achieves an approximation factor of \(\frac{\Delta +2}{3}\) [30]. We also know from Turán’s Theorem that every graph with an average degree of \({\bar{d}}\) has a maximum independent set of size at least \(\frac{n}{{\bar{d}}+1}\). This lower bound can already be achieved by the same greedy algorithm [30, Theorem 1]. When we consider parameterized Independent Set with solution size as the parameter, it is W[1]-complete [21].
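As an illustration of the greedy algorithm and the Turán-type bound mentioned above, here is a minimal sketch of the minimum-degree greedy: pick a node of smallest remaining degree, add it to the solution, and delete it together with its neighbors. The function name and input format are hypothetical, and the sketch assumes a simple graph given as an adjacency dictionary.

```python
def greedy_independent_set(adj):
    """Minimum-degree greedy for independent sets.
    adj maps each node to the set of its neighbors; it is not modified."""
    remaining = {v: set(nb) for v, nb in adj.items()}
    independent = []
    while remaining:
        # Node of smallest degree in the remaining graph.
        v = min(remaining, key=lambda u: len(remaining[u]))
        independent.append(v)
        removed = {v} | remaining[v]
        for u in removed:
            del remaining[u]
        for nb in remaining.values():
            nb -= removed
    return independent

# 5-cycle: n = 5 and average degree 2, so the bound n / (avg + 1) is 5/3.
cycle = {i: {(i - 1) % 5, (i + 1) % 5} for i in range(5)}
print(greedy_independent_set(cycle))
```

On the 5-cycle the sketch returns two nodes, which meets the bound \(\frac{n}{{\bar{d}}+1}=\frac{5}{3}\) and is also optimal for this graph.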

MIS has also been studied in the context of \((\alpha , \beta )\)-Power Law Graphs. Ferrante et al. [26] showed that the problem remains \(\textsf {NP}\)-hard for \(\beta >0\). Shen et al. [46] proved that for \(\beta >1\) there is no \(\left( 1+\frac{1}{1120\zeta (\beta )3^\beta }-\varepsilon \right)\)-approximation unless \(\textsf {P}=\textsf {NP}\) and Hauptmann and Karpinski [31] gave the first non-constant bound on the approximation ratio of MIS for \(\beta \leqslant 1\).

Since the PLB-U property with \(\beta >2\) induces a constant average degree, the greedy algorithm already gives us a constant approximation factor for Maximum Independent Set on networks with these properties. Although we cannot give better bounds for the maximum independent set, Theorem 5.3 immediately implies a lower bound for the size of all maximal independent sets.

Theorem 5.13

In a graph without loops and isolated vertices and with the PLB-U property with parameters \(\beta >2\), \(c_1>0\) and \(t\geqslant 0\), every maximal independent set is of size at least

It is easy to see that these lower bounds do not hold in sparse graphs in general, since in a star the center node also constitutes a maximal independent set.

Furthermore, we show that Maximum Independent Set remains \(\textsf {APX}\)-hard in networks with PLB-U and \(\beta >2\), even with the PLB-L and PLB-N property. Finally, we prove that on networks with PLB-U and \(\beta >2\), Maximum Independent Set is also in MAX SNP.

2.2.3 Vertex Cover

Given a graph \(G=(V,E)\), Minimum Vertex Cover (MVC) consists of finding a subset \(S\subseteq V\) of minimum size such that each edge \(e\in E\) is incident to at least one node from S. MVC cannot be approximated within a factor of \(10\sqrt{5}-21\approx 1.3606\) unless \(\textsf {P}=\textsf {NP}\), whereas the simple algorithm which greedily constructs a maximal matching achieves an approximation ratio of 2 [41]. Unfortunately, the greedy algorithm based on node degrees only achieves an approximation factor of \(\ln \Delta\) (Theorem 5.15).
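The matching-based 2-approximation can be sketched as follows: scan the edges, and whenever an edge has no endpoint in the cover yet, add both endpoints. The selected edges form a maximal matching, and any vertex cover must contain at least one endpoint of each matched edge, which gives the factor 2. The function name and the edge-list input are hypothetical choices for this illustration.

```python
def matching_vertex_cover(edges):
    """Build a vertex cover from a greedily constructed maximal matching.
    edges is an iterable of pairs (u, v) with u != v."""
    cover = set()
    for u, v in edges:
        # The edge is still unmatched: take both endpoints into the cover.
        if u not in cover and v not in cover:
            cover.update((u, v))
    return cover

# Path 0-1-2-3: the matching {0,1}, {2,3} yields a cover of size 4,
# while the optimum {1, 2} has size 2.
print(matching_vertex_cover([(0, 1), (1, 2), (2, 3)]))
```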

MVC has also been studied in the context of \((\alpha , \beta )\)-Power Law Graphs. Shen et al. [46] proved that there is no PTAS for \(\beta >1\) under the Unique Games Conjecture.

We can show that in networks with PLB-U and without isolated vertices the minimum vertex cover has to have a size of at least \(\Theta (n)\). This follows immediately from Theorem 5.3, since in a graph without isolated nodes every vertex cover is also a dominating set:

Theorem 5.16

In a graph without loops and isolated vertices and with the PLB-U property with parameters \(\beta >2\), \(c_1>0\) and \(t\geqslant 0\), the minimum vertex cover is of size at least

Also, we show that Minimum Vertex Cover remains \(\textsf {APX}\)-hard in networks with PLB-U and \(\beta >2\), even with the PLB-L and PLB-N property. After that we prove that on networks with PLB-U and \(\beta >2\), Minimum Vertex Cover is in MAX SNP.

3 Preliminaries and Notation

We mostly consider undirected multigraphs \(G=(V,E)\) without loops, where V denotes the set of vertices and E the multiset of edges with \(n=|V|\). In the following we will refer to multigraphs as graphs and state explicitly if we talk about simple graphs. Throughout the paper we use \(\deg (v)\) to denote the degree of node v. Furthermore, we use \(d_{min}\) and \(\Delta\) to denote the minimum and maximum degree of the graph respectively. For a set \(S\subseteq V\), we let \(\textsc {vol}(S)=\sum _{v\in S}\deg (v)\) denote the volume of S. We use \(b_i\) to denote the set of nodes \(v\in V\) with \(\deg (v)\in [2^i,2^{i+1})\). For \(v\in V\) we let \(N(v)=\left\{ u\in V\mid \left\{ u,v\right\} \in E\right\}\) denote the exclusive neighborhood of v and we let \(N^{+}(v)=\left\{ u\in V\mid u=v \vee \left\{ u,v\right\} \in E\right\}\) denote the inclusive neighborhood of v. Analogously, for a set \(S\subseteq V\), we let \(N^{+}(S)=\left\{ v\in V\mid \exists u\in S:v\in N^{+}(u)\right\}\) denote the inclusive neighborhood of S and we let \(N(S)=N^{+}(S)\setminus S\) denote the exclusive neighborhood of S. Furthermore, for \(v\in V\) we let \(N^{r}(v)=\left\{ u\in V\mid {\text {dist}}(u,v)\leqslant r\right\}\) denote the r-closed neighborhood of v, where \({\text {dist}}(u,v)\) denotes the length of a shortest path from u to v in G. Analogously, for a set of nodes \(S\subseteq V\) we let \(N^{r}(S)=\bigcup _{v\in S}N^{r}(v)\) denote the r-closed neighborhood of S. If not stated otherwise \(\log\) denotes the logarithm of base 2 and \(\ln\) denotes the natural logarithm.
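The notation above translates directly into code. The following sketch computes degrees, the volume \(\textsc {vol}(S)\), the buckets \(b_i\) and the inclusive neighborhood \(N^{+}(S)\) for a loop-free multigraph given as an edge list; all function names and the edge-list representation are hypothetical choices made for this illustration.

```python
from collections import defaultdict

def degrees(n, edges):
    """Degree of every node 0..n-1; parallel edges count with multiplicity."""
    deg = [0] * n
    for u, v in edges:
        deg[u] += 1
        deg[v] += 1
    return deg

def volume(S, deg):
    """vol(S): sum of the degrees of the nodes in S."""
    return sum(deg[v] for v in S)

def buckets(deg):
    """Map i to b_i, the set of nodes with degree in [2^i, 2^(i+1))."""
    b = defaultdict(set)
    for v, d in enumerate(deg):
        if d > 0:  # nodes of degree 0 belong to no bucket
            b[d.bit_length() - 1].add(v)  # bit_length - 1 = floor(log2 d)
    return dict(b)

def inclusive_neighborhood(S, edges):
    """N^+(S): S together with every node adjacent to a node of S."""
    S = set(S)
    result = set(S)
    for u, v in edges:
        if u in S:
            result.add(v)
        if v in S:
            result.add(u)
    return result

# Star with center 0 and leaves 1..4, plus a doubled edge {1, 2}.
edges = [(0, 1), (0, 2), (0, 3), (0, 4), (1, 2), (1, 2)]
deg = degrees(5, edges)
print(deg, buckets(deg), volume({1, 2}, deg))
```

The exclusive neighborhood \(N(S)\) is then `inclusive_neighborhood(S, edges) - set(S)`.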

Now we give a formal definition of the PLB-U, PLB-L and PLB-N properties.

Definition 3.1

(PLB-U [11]) Let G be an undirected n-vertex graph and let \(c_1>0\) be a universal constant. We say that G is power law upper-bounded (PLB-U) for some parameters \(1<\beta ={\mathcal {O}}(1)\) and \(t\geqslant 0\) if for every integer \(d\geqslant 0\), the number of vertices v, such that \(\deg (v)\in \left[ 2^d,2^{d+1}\right)\) is at most

Definition 3.2

(PLB-L) Let G be an undirected n-vertex graph and let \(c_2>0\) be a universal constant. We say that G is power law lower-bounded (PLB-L) for some parameters \(1<\beta ={\mathcal {O}}(1)\) and \(t\geqslant 0\) if for every integer \(\left\lfloor \log d_{min}\right\rfloor \leqslant d \leqslant \left\lfloor \log \Delta \right\rfloor\), the number of vertices v, such that \(\deg (v)\in \left[ 2^d,2^{d+1}\right)\) is at least

Since the PLB-U property alone captures a much broader class of networks, for example empty graphs and rings, this lower bound is important to restrict networks to real power-law networks. In the definition of PLB-L, \(d_{min}\) and \(\Delta\) are necessary because in real-world power-law networks there are no nodes of lower or higher degree, respectively.

It is also noteworthy that not all values of \(c_1\) (respectively \(c_2\)) are eligible, given t, \(\beta\), and n. These constants have to be big (respectively small) enough for the buckets to encompass all n nodes. If this is the case, we call \(c_1\) (\(c_2\)) admissible.

Definition 3.3

(PLB-N [11]) Let G be an undirected n-vertex graph with PLB-U for some parameters \(1<\beta ={\mathcal {O}}(1)\) and \(t\geqslant 0\). We say that G has PLB neighborhoods (PLB-N) if for every vertex v of degree k, the number of neighbors of v of degree at least k is at most \(c_3\max \left( \log n, (t+1)^{\beta -2}k\sum _{i=k}^{n-1}{i(i+t)^{-\beta }}\right)\) for some universal constant \(c_3>0\).
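Since the PLB-N bound is stated explicitly, it can be checked vertex by vertex. The sketch below evaluates the bound from Definition 3.3 for a simple graph given as an adjacency dictionary, using the base-2 logarithm as fixed in the notation; the function names are hypothetical, and skipping vertices of degree 0 (which have no neighbors and satisfy the condition trivially) is a choice made here to avoid evaluating \((i+t)^{-\beta }\) at \(i+t=0\).

```python
import math

def plb_n_bound(k, n, beta, t, c3):
    """c3 * max(log n, (t+1)^(beta-2) * k * sum_{i=k}^{n-1} i * (i+t)^(-beta)),
    for a degree k >= 1."""
    tail = sum(i * (i + t) ** (-beta) for i in range(k, n))
    return c3 * max(math.log2(n), (t + 1) ** (beta - 2) * k * tail)

def satisfies_plb_n(adj, beta, t, c3):
    """Check the PLB-N condition for every vertex of a simple graph."""
    n = len(adj)
    for v, nbrs in adj.items():
        k = len(nbrs)
        if k == 0:
            continue  # no neighbors, nothing to check
        # Number of neighbors of v whose degree is at least deg(v).
        high = sum(1 for u in nbrs if len(adj[u]) >= k)
        if high > plb_n_bound(k, n, beta, t, c3):
            return False
    return True

# In the complete graph K_20 every vertex has 19 neighbors of degree >= 19.
K20 = {v: set(range(20)) - {v} for v in range(20)}
print(satisfies_plb_n(K20, beta=2.5, t=0, c3=1.0))
```

For a fixed graph, the smallest \(c_3\) for which the check passes can be found by taking the maximum over all vertices of the ratio between the neighbor count and the bound evaluated with \(c_3=1\).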

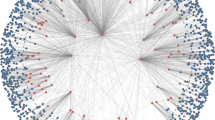

Brach et al. [11] assume that PLB-U and PLB-N for the same graph have the same values of t and \(\beta\). This makes sense, since both bounds describe the same (power-law) degree sequence. In the rest of this paper, we will assume that PLB-U, PLB-N, and PLB-L for the same graph also have the same values of t and \(\beta\). How well the degree distributions of real networks and randomly generated power-law graphs fit into the PLB-U and PLB-L bounds can be seen in Fig. 1.

Fig. 1 Total number of nodes with degrees in ranges \(\left[ 2^i, 2^{i+1}\right)\) for \(i\geqslant 0\) for the giant components of some real-world networks from the SNAP dataset [37] and a Hyperbolic Random Graph. Upper bounds are fitted to function PLB-U (see Definition 3.1). Lower bounds are fitted to function PLB-L (see Definition 3.2) with t and \(\beta\) the same as for PLB-U. As can be seen, the constants \(c_1\), \(c_2\), \(\beta\), and t of PLB-U and PLB-L are rather small. Additionally, in most cases \(c_1\) and \(c_2\) are close together, which indicates a good fit. Furthermore, our constants differ from those by Brach et al. [11], since they first converted their networks to directed graphs, which is not necessary in our case (Color figure online)

Throughout the paper we will also make repeated use of the following lemma, which is a more precise version of [11, Lemma 2.2]. It will help us relate power-law bounds to the bucketed bounds of the PLB properties.

Lemma 3.4

Let \(1\leqslant a\leqslant b/2\), for \(a,b\in \mathbb {N}\), and let \(c>0\) be a constant. Then

Proof

\(\square\)

Furthermore, we will use the following standard Chernoff Bounds (cf. [23, Theorem 1.1]) to show that our random models generate graphs with PLB-U and PLB-N.

Theorem 3.5

Let \(X:=\sum _{i\in [n]}X_i\), where \(X_i\) for \(i\in [n]\) are independently distributed in [0, 1]. Then, for \(0<\varepsilon <1\),

and

If \(t> 2\cdot e\cdot \mathbb {E}\left[ X\right]\), then

4 Power-Law Random Graphs and the PLB properties

In this section we analyze some well-known power law random graph models and prove that w. h. p. or almost surely graphs generated by these models have PLB-U and PLB-N properties. We consider \((\alpha ,\beta )\)-Power Law Graphs, Chung–Lu Random Graphs, Geometric Inhomogeneous Random Graphs, and Hyperbolic Random Graphs, because they are common models and rather easy to analyze. Furthermore, they assume independence or some geometrically implied sparseness of edges, which is important for establishing the PLB-N property.

4.1 \((\alpha , \beta )\)-Power Law Graph

First, we consider \((\alpha , \beta )\)-Power Law Graphs. Note that \((\alpha , \beta )\)-Power Law Graphs are not a random graph model. Instead, they are classes of graphs whose degree distributions follow a power law with scaling \(e^\alpha\) and exponent \(\beta\). We show that this already ensures the PLB-U property. We will also see that they satisfy PLB-N if they are generated with the Erased Configuration Model. This follows from a result by Brach et al. [11].

Formally, \((\alpha ,\beta )\)-Power Law Graphs are defined as follows.

Definition 4.1

(\((\alpha ,\beta )\)-Power Law Graph [3]) An \((\alpha ,\beta )\)-Power Law Graph is an undirected multigraph with the following degree distribution depending on two given values \(\alpha\) and \(\beta\). For \(1\leqslant i\leqslant \Delta =\left\lfloor e^{\alpha /\beta }\right\rfloor\) there are \(y_i=\left\lfloor \frac{e^\alpha }{i^\beta }\right\rfloor\) nodes of degree i.
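The degree distribution in Definition 4.1 is easy to tabulate. The following sketch lists \(y_i=\left\lfloor e^\alpha /i^\beta \right\rfloor\) for \(1\leqslant i\leqslant \left\lfloor e^{\alpha /\beta }\right\rfloor\); the function name and the example values \(\alpha =5\), \(\beta =2.5\) are hypothetical and chosen only for illustration.

```python
import math

def power_law_degree_counts(alpha, beta):
    """Return {i: y_i} with y_i = floor(e^alpha / i^beta)
    for 1 <= i <= floor(e^(alpha / beta))."""
    max_degree = math.floor(math.exp(alpha / beta))
    return {
        i: math.floor(math.exp(alpha) / i ** beta)
        for i in range(1, max_degree + 1)
    }

counts = power_law_degree_counts(alpha=5, beta=2.5)
print(counts)                 # number of nodes per degree
print(sum(counts.values()))   # total number of nodes n
```

In this example every degree up to the maximum degree 7 is taken by at least one node, in line with the observation used later that \(y_i\geqslant 1\) for all \(i\leqslant \left\lfloor e^{\alpha /\beta }\right\rfloor\).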

Intuitively those graphs already satisfy PLB-U by definition. The following theorem confirms this intuition. Remember that throughout the paper \(\zeta\) denotes the Riemann zeta function.

Theorem 4.2

The \((\alpha ,\beta )\)-Power Law Graph with \(\beta >1\) has the PLB-U property with \(c_1=\frac{1}{\zeta (\beta )}\), \(t=0\) and exponent \(\beta\).

Proof

It holds that the number of nodes of degree between \(2^d\) and \(2^{d+1}-1\) is at most

due to the definition of the degree distribution and the fact that \(n=\left\lfloor \zeta (\beta )e^\alpha \right\rfloor\) for \(\beta >1\). \(\square\)

Since the degree sequence of those graphs follows a power law, we can show that PLB-L holds as well.

Theorem 4.3

The \((\alpha ,\beta )\)-Power Law Graph with \(\beta >1\) has the PLB-L property with \(c_2=\frac{1}{2\zeta (\beta )}\), \(t=0\) and exponent \(\beta\).

Proof

The number of nodes of degree i is exactly \(\left\lfloor \frac{e^\alpha }{i^\beta }\right\rfloor\). Since \(i\leqslant \left\lfloor e^{\alpha /\beta }\right\rfloor\), this number is at least one. Therefore \(\left\lfloor \frac{e^\alpha }{i^\beta }\right\rfloor \geqslant \frac{1}{2}\frac{e^\alpha }{i^\beta }\). It now holds that the number of nodes of degree between \(2^d\) and \(2^{d+1}-1\) is at least

due to the definition of the degree distribution and the fact that \(n=\zeta (\beta )e^\alpha\) for \(\beta >1\).\(\square\)

Since \((\alpha ,\beta )\)-Power Law Graphs describe classes of graphs with given degree distributions, PLB-N is not satisfied automatically. However, one can sample those graphs randomly with the Erased Configuration Model [2] to guarantee PLB-N with high probability. The Configuration Model gets a degree sequence as input and randomly generates a multigraph with this sequence. This is done by creating \(\deg (u)\) many stubs for each node u and then connecting stubs uniformly at random. In the Erased Configuration Model loops and multi-edges are erased after this process in order to generate simple graphs. Brach et al. [11] proved that random networks created by the Erased Configuration Model whose prescribed degree sequence follows PLB-U, also follow PLB-U and PLB-N with high probability. This yields the following lemma, which concludes our section on \((\alpha ,\beta )\)-Power Law Graphs.

Lemma 4.4

([11]) A random \((\alpha ,\beta )\)-Power Law Graph with \(\beta >1\) created with the Erased Configuration Model has the PLB-U and PLB-N properties with high probability.
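The Erased Configuration Model described above can be sketched as follows: create \(\deg (u)\) stubs per node, match the stubs uniformly at random, and then erase loops and multi-edges. Shuffling the stub list and pairing consecutive entries is one simple way to obtain a uniformly random matching of the stubs; the function name, the `seed` parameter and the error handling for an odd degree sum are hypothetical additions of this sketch.

```python
import random

def erased_configuration_model(degree_sequence, seed=None):
    """Return the edge set of a simple graph sampled with the
    Erased Configuration Model for the prescribed degree sequence."""
    rng = random.Random(seed)
    # deg(u) stubs for each node u
    stubs = [u for u, d in enumerate(degree_sequence) for _ in range(d)]
    if len(stubs) % 2:
        raise ValueError("sum of degrees must be even")
    rng.shuffle(stubs)
    edges = set()
    for a, b in zip(stubs[0::2], stubs[1::2]):
        if a != b:  # erase loops
            edges.add((min(a, b), max(a, b)))  # the set erases multi-edges
    return edges

print(erased_configuration_model([3, 2, 2, 1], seed=0))
```

Because of the erasure step, the realized degrees can be smaller than the prescribed ones, which is one reason why the properties of the resulting graph require a separate argument.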

4.2 Geometric Inhomogeneous Random Graphs

In this section we consider the very general model of Geometric Inhomogeneous Random Graphs (GIRGs), which was introduced by Bringmann et al. [12]. In this model, nodes are distributed uniformly at random on some underlying ground space. The probability of creating an edge between two nodes then depends on given weights for those nodes and on their distance in the ground space. Formally, the model is defined as follows.

Definition 4.5

(Geometric Inhomogeneous Random Graphs (GIRGs) [12, 14]) A Geometric Inhomogeneous Random Graph is a simple graph \(G=(V,E)\) with the following properties. For \(|V|=n\) let \(w=(w_1,\ldots , w_n)\) be a sequence of positive weights. Let \(W=\sum _{i=1}^n w_i\) be the total weight. For any vertex v, draw a point \(x_v\) uniformly at random from the d-dimensional torus \({\mathbb {T}}^d=\mathbb {R}^d/\mathbb {Z}^d\) with \(d\in \mathbb {N}^{+}\). We connect vertices \(u\ne v\) independently with probability \(p_{uv}=p_{uv}(r)\), which depends on the weights \(w_u\), \(w_v\) and on the positions \(x_u\), \(x_v\), more precisely, on the distance \(r=\left\| x_u-x_v\right\|\). For some fixed constant \(\alpha >1\) the edge probability is

The definition of GIRGs lends itself to encompass a multitude of models with a high degree of freedom regarding expected degree distributions and underlying geometries. Thus, we need additional constraints to show that this model generates instances that are power-law bounded. One straightforward condition is that the expected node degrees are power-law distributed. The following definition captures this condition formally.

Definition 4.6

(General Power-law [12]) A weight sequence \(\mathbf {w}\) is said to follow a general power-law with exponent \(\beta > 2\) if \(w_{\min }:=\min \left\{ w_v\mid v\in V\right\} =\Omega (1)\) and if there is a \({\bar{w}}={\bar{w}}(n)\geqslant n^{\omega (1/\log \log n)}\) such that for all constants \(\eta >0\) there are \(\varepsilon _1,\varepsilon _2>0\) with

where the first inequality holds for all \(w_{\min }\leqslant w \leqslant {\bar{w}}\) and the second holds for all \(w\geqslant w_{\min }\).

Note that the preceding definition states that for any choice of \(\eta >0\) one can find constants \(\varepsilon _1\) and \(\varepsilon _2\) with the desired properties. However, for PLB-L, PLB-U, and PLB-N to hold it is sufficient to choose a fixed \(\eta\) and constants \(\varepsilon _1\) and \(\varepsilon _2\) that satisfy the property. Another property implied by the general power-law is that the maximum degree is \(\Delta =\mathcal {O}\left( n^{1/(\beta -\eta -1)}\right)\). We will use this property in the proof of Theorem 4.11.

We are now going to prove that GIRGs fulfill PLB-U and PLB-N. For this we need the following theorem and auxiliary lemmas by Bringmann et al. [13].

Theorem 4.7

([13]) Let G be a GIRG with a weight sequence that follows a general power-law with exponent \(\beta\) and average degree \(\Theta (1)\). Then, with high probability the degree sequence of G follows a general power-law with exponent \(\beta\) and average degree \(\Theta (1)\), i.e., for all constants \(\eta >0\) there exist constants \(\varepsilon _3,\varepsilon _4 >0\) such that w. h. p.

where the first inequality holds for all \(1\leqslant d\leqslant {\bar{w}}\) and the second holds for all \(d\geqslant 1\).

The following three lemmas are necessary to prove Theorem 4.11. Lemma 4.8 states that the marginal edge probability between two nodes u and v is essentially \(\min \left\{ 1,\frac{w_u w_v}{W}\right\}\). Furthermore, even conditioned on a node’s position \(x_u\), all edges between u and other nodes are present independently.

Lemma 4.8

([13]) Fix \(u\in [n]\) and \(x_u\in {\mathbb {T}}^d\). All edges \(\left\{ u,\ v\right\}\), \(u\ne v\), are independently present with probability

In the proof of Theorem 4.11 we will only use the marginal edge probability from the former lemma. Lemma 4.9 bounds the expected node degrees asymptotically.

Lemma 4.9

([13]) For any \(v\in [n]\) in a Geometric Inhomogeneous Random Graph, we have

The former two lemmas imply that we can use standard Chernoff bounds to bound node degrees, but we also need the following auxiliary lemma. It bounds the expected volume of nodes with given maximum or minimum weights.

Lemma 4.10

([13]) Let \(\mathbf {w}\) be a general power-law weight sequence with exponent \(\beta\) and let \(W_{\geqslant w}=\sum _{\mathbf {w}_v:\mathbf {w}_v\geqslant w}{\mathbf {w}_v}\) and \(W_{\leqslant w}=\sum _{\mathbf {w}_v:\mathbf {w}_v\leqslant w}{\mathbf {w}_v}\). Then the total weight satisfies \(W=\Theta (n)\). Moreover, for all sufficiently small \(\eta >0\),

(i) \(W_{\geqslant w}=\mathcal {O}(nw^{2-\beta +\eta })\) for all \(w\geqslant w_{\min }\),

(ii) \(W_{\geqslant w}=\Omega (nw^{2-\beta -\eta })\) for all \(w_{\min }\leqslant w \leqslant {\bar{w}}\),

(iii) \(W_{\leqslant w}=\mathcal {O}(n)\) for all w, and

(iv) \(W_{\leqslant w}=\Omega (n)\) for all \(w=\omega (1)\).

We are now ready to prove our first main theorem: For GIRGs whose weight sequence follows a general power-law with exponent \(\beta '\), we can always find constants \(c_1\) and \(c_3\) such that PLB-U and PLB-N are satisfied for \(t=0\) and any power-law exponent \(2<\beta <\beta '\). Intuitively, this holds since PLB-U and PLB-N only demand upper bounds on the number of nodes in a bucket or in the neighborhood of a node. By decreasing the power-law exponent in PLB-U and PLB-N, these upper bounds only become less restrictive.

The proof works as follows. First, we show that Theorem 4.7 implies PLB-U. Second, we show PLB-N by bounding the ranges of node degrees depending on weights. We show that only nodes of sufficiently high weights can have a degree of at least k. It now suffices to consider those nodes with high weights as potential neighbors of a degree-k node. Since the edges between a degree-k node and its potential neighbors are drawn independently, we can use a Chernoff bound to show that the number of neighbors of degree at least k is concentrated around its expected value. All these statements hold with high probability. Thus, we can simply collect the error probabilities without considering dependencies. This shows PLB-N.

Theorem 4.11

Let G be a GIRG whose weight sequence \(\mathbf {w}\) follows a general power-law with exponent \(\beta '>2\). Then, for all \(2<\beta <\beta '\) and \(t=0\) there are constants \(c_1\) and \(c_3\) such that G fulfills PLB-U and PLB-N with high probability.

Proof

First, we show that G fulfills PLB-U with high probability. Let \(k=2^d\). It now holds that

due to Theorem 4.7 and Lemma 3.4. This means that G has the PLB-U property with \(\beta =\beta '-\eta\), \(t=0\) and \(c_1=\varepsilon _4\frac{\beta '-1-\eta }{1-2^{-\beta '+1+\eta }}\).

Now we show that G also fulfills PLB-N with high probability. To this end, we consider a node v with weight \(w_v\). We first bound the range into which the degree \(\deg (v)\) of v can fall with high probability. We pessimistically assume that \(\deg (v)\) takes its lower bound, because then the number of possible neighbors of degree at least \(\deg (v)\) is maximized. Further, we assume that all other nodes’ degrees take their respective upper bounds. Within these bounds, only nodes with high enough weights can reach a degree of at least \(\deg (v)\). We call these nodes potential neighbors of v. Finally, we bound the number of edges between v and its potential neighbors. This can be done with a standard Chernoff bound, since all edges between v and its potential neighbors are drawn independently.

Due to Lemma 4.8 we can use standard Chernoff bounds as stated in Theorem 3.5 to bound the degrees of nodes. According to Lemma 4.9 there are constants \(c_7, c_8 > 0\) such that

holds for all \(v\in V\). Let c be an appropriately chosen constant. For a node \(v\in V\) with \(w_v\geqslant c\ln n\) it holds that

and

For a sufficiently large constant c it holds w. h. p. that

Thus, the degrees of nodes with weights at least logarithmic in n are concentrated around their weights. However, this does not hold for nodes with smaller weights. Therefore, we will consider them separately. First, we show that nodes with smaller weights cannot reach much higher degrees than \(c\cdot \ln n\). Thus, for nodes of high weight and high degree they do not have to be considered as neighbors. This is because PLB-N is only concerned with neighbors of same or higher degree. Second, we show that nodes with smaller weights also comply with PLB-N. Remember that PLB-N allows a node of degree k to have \(c_3\cdot \log n\) neighbors of degree at least k. Since nodes with small weights of at most \(c\cdot \ln n\) have at most \(\mathcal {O}(\log n)\) neighbors in total with high probability, this property holds.

Let us now consider nodes \(v\in V\) with low weights \(w_v < c\ln n\). Due to the third statement in Theorem 3.5 it holds that

since \(2e\cdot c_8\cdot c\ln n>2e\cdot c_8\cdot w_v\geqslant 2e\cdot \mathbb {E}\left[ \deg (v)\right]\). If we choose c sufficiently large, it holds w. h. p. that the degrees of these nodes are at most \(2e\cdot c_8\cdot c\ln n={\mathcal {O}}(\log n)\). Thus, nodes with low weights already comply with the bound from PLB-N. Also, \(2e\cdot c_8\cdot c\ln n\) is smaller than the lower bound from inequality (1) for nodes with weight \(w_u\geqslant 4e\frac{c_8}{c_7}c\ln n\).

Therefore, with high probability no node of weight \(w_u\geqslant 4e\frac{c_8}{c_7}c\ln n\) can have a node with weight \(w_v < c\ln n\) as a neighbor of same or higher degree.

Before we focus on nodes with high weight \(w_u\geqslant 4e\frac{c_8}{c_7}c\ln n\), we still have to consider nodes \(v\in V\) with intermediate weights \(c\ln n\leqslant w_v < 4e\frac{c_8}{c_7}c\ln n\). Since the degrees of those nodes are concentrated around \(w_v\), it holds that \(\deg (v)\leqslant 6e\frac{{c_8}^2}{c_7}c\ln n={\mathcal {O}}(\log n)\) w. h. p. due to inequality (1). This also complies with the bounds from PLB-N.

It remains to show that PLB-N also holds for nodes \(v\in V\) with high weights \(w_v \geqslant 4e\frac{c_8}{c_7}c\ln n\). Again, we can assume \(\deg (v)\geqslant \frac{1}{2}c_7\cdot w_v\geqslant 2e\cdot c_8\cdot c\ln n\). As we have seen, no node u with \(w_u<c\ln n\) can reach a degree of \(\deg (v)\) with high probability. That means, the only nodes that can reach a degree of at least \(\deg (v)\) w. h. p. are those with

due to inequality (1). These are the potential neighbors of v with degree at least \(\deg (v)\). Let X be the number of edges between v and these potential neighbors. Now it holds that

due to Lemmas 4.8 and 4.10. We can assume that the expected value is at most \(c_9\cdot w_v^{3-\beta '+\eta }\). Again, we can use the Chernoff bounds from Theorem 3.5 to bound the number of these edges. If \(c_9\cdot w_v^{3-\beta '+\eta }< c\ln n\) it holds that

In that case the number of neighbors with degree at least \(\deg (v)\) is at most \(2\cdot e\cdot c\ln n\) with high probability. As in the case of low weight nodes, this complies with PLB-N. If \(c_9\cdot w_v^{3-\beta '+\eta }\geqslant c\ln n\) it holds that

This is a high probability if c is chosen appropriately high. It now holds w. h. p. that

due to inequality (1). In order to get an expression that resembles PLB-N, we use Lemma 3.4. Although \(\deg (v)\) is a random variable, for all realizations \(\deg (v)=k\) within our high probability bounds, we can apply the lemma with \(a=k\), \(b=n\), and \(c=\beta '-\eta -2\). The requirement \(1\leqslant a\leqslant b/2\) holds, since \(1\leqslant k\) holds due to inequality (1) and \(a\leqslant \Delta =\mathcal {O}\left( n^{1/(\beta '-\eta -1)}\right) =o(n)\) is implied by the general power-law. Lemma 3.4 now yields

This implies that the number of neighbors of v with degree at least \(\deg (v)\) is at most \({\mathcal {O}}\left( \deg (v)\sum _{i=\deg (v)}^{n-1}{i^{1-\beta '+\eta }}\right)\), which is at most

for \(\beta =\beta '-\eta\), a suitable constant \(c_3\) and \(t=0\). Thus, in all cases PLB-N holds as desired.\(\square\)

We have proven that GIRGs with a power-law weight sequence generate power-law bounded graphs with high probability. There are two other random graph models which are highly related to GIRGs and for which we can show PLB-(U,N) as well. First, Hyperbolic Random Graphs, which were shown to be a special case of GIRGs by Bringmann et al. [12]. Second, Chung–Lu Random Graphs, which generate random instances in a very similar way to Geometric Inhomogeneous Random Graphs, but without an underlying geometry. Therefore, we consider both of these models as special cases of GIRGs and analyze them in the following subsections.

4.2.1 Hyperbolic Random Graphs

In this section we show that Hyperbolic Random Graphs (HRGs) satisfy PLB-U and PLB-N. In HRGs, nodes are distributed at random in a disk of the hyperbolic plane. Two nodes are then connected with a probability that decreases with their hyperbolic distance. The following definition formalizes the model.

Definition 4.12

(Hyperbolic Random Graph (HRG) [34]) Let \(\alpha _{H}>0,\) \(C_{H}\in {\mathbb {R}},\) \(T_H>0\), \(n\in {\mathbb {N}}\) and \(R=2\log n + C_H\). The Hyperbolic Random Graph \(G_{\alpha _H,C_H,T_H}(n)\) is a simple graph with vertex set \(V=[n]\) and the following properties:

- Every vertex \(v\in [n]\) draws coordinates \((r_v,\phi _v)\) independently at random, where the angle \(\phi _v\) is chosen uniformly at random in \([0,2\pi )\) and the radius \(r_v\in [0,R]\) is random according to density \(f(r)=\frac{\alpha _H \sinh (\alpha _H r)}{\cosh (\alpha _H R)-1}\).

- Every potential edge \(e=\left\{ u,v\right\} \in \left( {\begin{array}{c}[n]\\ 2\end{array}}\right)\) is present independently with probability

$$\begin{aligned} p_H(d(u,v))=\left( 1+e^{\frac{d(u,v)-R}{2T_H}}\right) ^{-1}, \end{aligned}$$

where d(u, v) is the hyperbolic distance between u and v, i.e. the non-negative solution of the equation

$$\begin{aligned} \cosh {(d(u,v))}=\cosh {(r_u)}\cosh {(r_v)}-\sinh {(r_u)}\sinh {(r_v)}\cos {(\phi _u-\phi _v)}. \end{aligned}$$
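As a minimal sketch of Definition 4.12, the model can be sampled directly from the stated density and edge probability. The function names below are hypothetical, the logarithm in \(R=2\log n + C_H\) is assumed to be the natural logarithm, and the radius is drawn by inverting the CDF \(F(r)=\frac{\cosh (\alpha _H r)-1}{\cosh (\alpha _H R)-1}\) obtained by integrating f. The quadratic loop over all pairs is only meant for small n.

```python
import math
import random


def sample_radius(alpha_h, big_r, rng):
    """Inverse-CDF sample from f(r) = alpha*sinh(alpha*r) / (cosh(alpha*R) - 1)."""
    u = rng.random()
    return math.acosh(1.0 + u * (math.cosh(alpha_h * big_r) - 1.0)) / alpha_h


def hyperbolic_distance(p, q):
    """Hyperbolic distance between two points given in polar coordinates (r, phi)."""
    (r_u, phi_u), (r_v, phi_v) = p, q
    cosh_d = (math.cosh(r_u) * math.cosh(r_v)
              - math.sinh(r_u) * math.sinh(r_v) * math.cos(phi_u - phi_v))
    # Clamp to 1 so that rounding errors never leave the domain of acosh.
    return math.acosh(max(1.0, cosh_d))


def edge_probability(dist, big_r, t_h):
    """p_H(d) = 1 / (1 + exp((d - R) / (2 T_H))), assuming T_H > 0."""
    exponent = (dist - big_r) / (2.0 * t_h)
    if exponent > 700.0:  # avoid overflow in exp; the probability is ~0 here
        return 0.0
    return 1.0 / (1.0 + math.exp(exponent))


def sample_hrg(n, alpha_h, c_h, t_h, seed=0):
    """Return (points, edges) of one sampled graph; edges are pairs (u, v), u < v."""
    rng = random.Random(seed)
    big_r = 2.0 * math.log(n) + c_h  # assumption: natural logarithm
    points = [(sample_radius(alpha_h, big_r, rng),
               rng.uniform(0.0, 2.0 * math.pi)) for _ in range(n)]
    edges = set()
    for u in range(n):
        for v in range(u + 1, n):
            dist = hyperbolic_distance(points[u], points[v])
            if rng.random() < edge_probability(dist, big_r, t_h):
                edges.add((u, v))
    return points, edges
```

Two nodes at distance exactly R are connected with probability 1/2, and the probability decays as the distance grows beyond R.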

Bringmann et al. [12] show that this model is a special case of GIRGs with input parameters

and

for \(v\in V\). They also show that this weight sequence follows a general power-law.

Lemma 4.13

([12]) Let \(\alpha _H>\frac{1}{2}\). Then for all \(\eta =\eta (n)=\omega (\frac{\log \log n}{\log n})\), with probability \(1-n^{-\Omega (\eta )}\) the induced weight sequence \(\mathbf {w}\) follows a power law with parameter \(\beta =2\alpha _H+1\).

Thus, we can apply Theorem 4.11 to show that HRGs also satisfy PLB-U and PLB-N.

Theorem 4.14

Let G be a HRG with \(\alpha _H>\frac{1}{2}\). Then, G almost surely fulfills PLB-U and PLB-N with \(\beta =2\alpha _H+1-\eta\), \(t=0\), any constant \(\eta >0\), and some constants \(c_1\) and \(c_3\).

4.2.2 Chung–Lu Random Graphs

In this section we consider another random graph model related to GIRGs, Chung–Lu Random Graphs (CLRGs). As in GIRGs, nodes have weights which influence the edge probability. However, there is no notion of distance between nodes. The following formal definition makes this clear.

Definition 4.15

(Chung–Lu Random Graph (CLRG) [17]) A Chung–Lu Random Graph is a simple graph \(G=(V,E)\). Given a weight sequence \({\mathbf {w}}=(w_1,w_2,\ldots ,w_n)\) the edges between nodes \(v_i\) and \(v_j\) exist independently with probability \(p_{ij}\) proportional to \(\min \left( 1,\frac{w_iw_j}{W}\right)\), where \(W=\sum _{i=1}^{n}w_i\).
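The following sketch samples a graph according to Definition 4.15. It assumes that the proportionality constant is exactly 1, i.e. \(p_{ij}=\min \left( 1,\frac{w_iw_j}{W}\right)\), and that all weights are positive; the function names are hypothetical and the quadratic loop is only suitable for small inputs.

```python
import random


def sample_chung_lu(weights, seed=0):
    """Sample edges (i, j), i < j, independently with p_ij = min(1, w_i*w_j/W)."""
    rng = random.Random(seed)
    n = len(weights)
    total = sum(weights)  # W, assumed to be positive
    edges = []
    for i in range(n):
        for j in range(i + 1, n):
            p = min(1.0, weights[i] * weights[j] / total)
            if rng.random() < p:
                edges.append((i, j))
    return edges


def expected_degree(weights, i):
    """Expected degree of node i, the sum of p_ij over all j != i."""
    total = sum(weights)
    return sum(min(1.0, weights[i] * w / total)
               for j, w in enumerate(weights) if j != i)
```

For weights that are small compared to \(\sqrt{W}\), the minimum is never attained at 1 and the expected degree of node i is close to \(w_i\), which is the property the concentration arguments above rely on.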

Geometric Inhomogeneous Random Graphs and Chung–Lu Random Graphs are highly related. In fact, the definition of GIRGs was originally inspired by the one for CLRGs. We can see that the same properties we used to show PLB-(U,N) for GIRGs also hold for CLRGs. Most importantly, we used the marginal edge probability between each pair of nodes in a Geometric Inhomogeneous Random Graph. However, this marginal probability is exactly the edge probability of Chung–Lu Random Graphs. From this property and the independence of edges in CLRGs one can derive all lemmas in exactly the same way, but for CLRGs instead of GIRGs. In particular, we can derive PLB-(U,N) as stated in the following theorem.

Theorem 4.16

Let G be a CLRG whose weight sequence \(\mathbf {w}\) follows a general power-law with exponent \(\beta '>2\). Then, for all \(2<\beta <\beta '\) and \(t=0\) there are constants \(c_1\) and \(c_3\) such that G fulfills PLB-U and PLB-N with high probability.

5 Greedy Algorithms

In this section we try to understand why simple greedy algorithms work efficiently on power-law bounded graphs. The problems we consider are Minimum Dominating Set, Minimum Vertex Cover, and Maximum Independent Set. We will show that graphs with PLB-U only have dominating sets, vertex covers, and maximal independent sets of size \(\Theta (n)\). This already implies that greedy algorithms for these problems achieve a constant approximation factor. However, for MDS and some variants of it, we can derive better approximation factors for greedy algorithms than the ones implied by our lower bounds on the solution size. We are able to derive these results with the help of the Potential Volume Lemma (Lemma 5.2), which we introduce in the next section.

Definition 5.1

A greedy algorithm is an \(\alpha\)-approximation for problem P if it produces a solution set S with \(\alpha \geqslant \frac{|S|}{|\textsc {opt}|}\) if P is a minimization problem and with \(\alpha \geqslant \frac{|\textsc {opt}|}{|S|}\) if P is a maximization problem.
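Definition 5.1 translates into a one-line computation. The helper below is a hypothetical illustration, assuming both solution sizes are positive integers; it returns the smallest \(\alpha\) for which the definition is satisfied on a single instance.

```python
def approximation_ratio(solution_size, opt_size, minimize):
    """Smallest alpha satisfying Definition 5.1 for one instance."""
    if solution_size <= 0 or opt_size <= 0:
        raise ValueError("both sizes must be positive")
    if minimize:
        return solution_size / opt_size  # |S| / |opt| for minimization
    return opt_size / solution_size      # |opt| / |S| for maximization
```

With this convention the ratio is always at least 1, for minimization and maximization problems alike.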

5.1 Analysis of Greedy Algorithms on PLB-U Networks

This section will be dedicated to proving our main lemma, the Potential Volume Lemma (Lemma 5.2).

The idea of the lemma is inspired by lower-bounding the size of a dominating set as done by Shen et al. [46] and by Gast et al. [29] in the context of \((\alpha ,\beta )\)-Power-Law Graphs: In order to dominate all nodes, the volume of the dominating set has to be high enough, more precisely \(\sum _{x\in S} (\deg (x)+1)\geqslant n\) for any dominating set S. However, in a power-law bounded graph at least a constant fraction of nodes is necessary to reach such a high volume.

The Potential Volume Lemma generalizes this idea and gives us upper bounds on the volume of sets of nodes depending on their size. Moreover, the lemma also works for other well-behaved non-decreasing functions on node degrees. It essentially states that for a graph with PLB-U, a set of nodes S, and a nice non-decreasing function h

This property can be used to derive lower bounds on the size of optimal solutions. For some greedy algorithms we can also derive upper bounds on the solution size in terms of \(\sum _{x\in \textsc {opt}}{h(\deg (x))}\). We can then use the lemma with \(S=\textsc {opt}\) to derive upper bounds on the greedy solution size with respect to the size and volume of an optimal solution, yielding improved approximation ratios.

The proof of the lemma can be outlined as follows. First, for any set S it holds that \(\sum _{x\in S}{h(\deg (x))}/|S|\leqslant \sum _{x\in S'}{h(\deg (x))}/|S'|\) for any set \(S'\) of size at most |S| that contains the \(|S'|\) nodes of highest degree. This is intuitively clear if we interpret these expressions as average values of \(h(\deg (\cdot ))\) per node and remember that h is non-decreasing. Thus, we can achieve an upper bound by considering the average over the highest buckets which contain at most |S| nodes in total. Using PLB-U and assuming that each bucket d contains as many nodes as possible and that those nodes all have maximum permitted degree \(2^{d+1}-1\), we get an upper bound on this average.
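The averaging step of this outline can be checked on a small example. The sketch below is only a sanity check under assumed inputs: `all_degrees` is a hypothetical degree sequence, `subset_degrees` lists the degrees of the nodes in S, `h` is any non-decreasing function, and none of the helper names come from the analysis above.

```python
def average_h(degrees, h):
    """Average of h(deg) over a non-empty list of degrees."""
    return sum(h(d) for d in degrees) / len(degrees)


def top_average(all_degrees, size, h):
    """Average of h(deg) over the `size` nodes of highest degree."""
    top = sorted(all_degrees, reverse=True)[:size]
    return average_h(top, h)


def averaging_bound_holds(all_degrees, subset_degrees, h, tol=1e-12):
    """Check avg over S <= avg over the top-l nodes for every 1 <= l <= |S|."""
    avg_s = average_h(subset_degrees, h)
    return all(avg_s <= top_average(all_degrees, size, h) + tol
               for size in range(1, len(subset_degrees) + 1))
```

For the degree sequence `[1, 1, 2, 3, 5, 8]`, the set S with degrees `[1, 3, 5]`, and \(h(x)=x+1\), the average over S is 4, while the averages over the one, two, and three nodes of highest degree are 9, 7.5, and about 6.33.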

Before we state the lemma formally, remember that we use the following shorthand notations:

To get a feeling of how big \(a_{\beta ,t}\) and \(b_{c_1,\beta ,t}\) are, let us consider the “ideal” case \(c_1=1\) and \(t=0\). For \(\beta \rightarrow 2\) both values approach infinity, although \(a_{\beta ,t}\) is orders of magnitude smaller. For \(\beta \rightarrow \infty\) it holds that

Figure 2 shows how large these values are for intermediate power-law exponents \(\beta \in [2.5,3]\).

In the lemma, we assume the graph to have a certain minimum number of nodes, which is not a big restriction. Further, we assume the feasible solution to be of size at most \(|V|-1\). When applying the lemma we will always use \(S=\textsc {opt}\). Having \(|S| = |\textsc {opt}| \leqslant n-1\) is not a big restriction, since \(|\textsc {opt}|=n\) is usually a degenerate case. For MDS and MIS \(|\textsc {opt}|=n\) would imply a graph without edges. This cannot be the case, since we forbid isolated vertices. For MVC it always holds that \(|\textsc {opt}|\leqslant n-1\).

Lemma 5.2

(Potential Volume Lemma)

Let \(G=(V,E)\) be a graph without loops and isolated vertices. Also, let G have the PLB-U property for some \(\beta >2\), some constant \(c_1>0\), and some constant \(t\geqslant 0\), and let

Let \(S\subseteq {V}\) be a feasible solution with \(|S|<n\), let \(g:\mathbb {R}^+\rightarrow \mathbb {R}^+\) be a continuously differentiable function and let \(h(x):=g(x)+C\) for some constant C such that

(i) g is non-decreasing,

(ii) \(g(2x)\leqslant c\cdot g(x)\) for all \(x\geqslant 2\) and some constant \(c>0\),

(iii) \(g'(x)\leqslant \frac{g(x)}{x}\) for all \(x\geqslant 1\),

then it holds that

where \(M(n)\geqslant 1\) is chosen such that \(\sum _{x\in S}{\deg (x)}\geqslant M(n)\).

Proof

Without loss of generality assume the nodes of G were ordered by increasing degree, i.e. \(V(G)=\left\{ v_1,v_2,\ldots ,v_n\right\}\) with \(\deg (v_1)\leqslant \deg (v_2)\leqslant \ldots \leqslant \deg (v_n)\). Let \(n':=2^{\left\lfloor \log (n-1)\right\rfloor +1}-1\). This is the maximum degree of the bucket an \((n-1)\)-degree node is in. For \(j\in \mathbb {N}\) let

i.e. the maximum number of nodes of degree at least \(2^j\) that G can have according to the PLB-U property, and let

We can interpret \(s(\ell )\) as the index j of the smallest bucket, such that the total number of nodes in buckets j to \(\left\lfloor \log (n-1)\right\rfloor\) is at most \(\ell\).

It now holds for all \(\ell \in \mathbb {R}\) with \(\ell \leqslant |S|\) that

due to the fact that g, and therefore also h, is non-decreasing. To upper bound the numerator on the right-hand side we assume that in each bucket we have the maximum (fractional) number of nodes of maximum degree, leading to at most

Now let \(k:=s(|S|)\). It has to hold that \(k\geqslant 1\), since otherwise the total upper bounds from all buckets would sum up to at most \(|S|< n\). For \(\ell =D(k)\) we get

since \(h(x)=g(x)+C\) for some constant C and the numerator for that second term just sums up to \(C\cdot D(k)\). One more note of caution: W.l.o.g. we assume \(|S|\geqslant 1\). In the case of equality, we need \(s(1)\leqslant \left\lfloor \log (n-1)\right\rfloor\). Otherwise the last bucket already contains more nodes than our solution, i.e. we could not take any complete bucket. For the desired inequality to hold, we must ensure

It holds that

since \(2^{\left\lfloor \log (n-1)\right\rfloor }\geqslant \frac{n}{2}\). We can see that this is at most 1 for

We start estimating Eq. (2) by deriving an upper bound on the numerator. Using properties (i) and (ii) we derive

Plugging this into the numerator gives

It is easy to check that the function \(g(x)\cdot x^{-\beta }\) is non-increasing by property (iii) and the fact that \(\beta >2\). We can now estimate the sum in equation (3) by an integral

Using integration by parts we get

since \(\beta >2\). Due to property (iii) it holds that \(x^{1-\beta }\cdot g'(x)\leqslant x^{-\beta }\cdot g(x)\), giving us

and therefore

by plugging it into the integration by parts. Thus, equation (4) yields

Plugging this into Eq. (3) now gives

Now we still need a lower bound on D(k). It holds that

where the last line follows by observing

This holds because \(\frac{2^{k+1}+t}{2^k+t}\geqslant \frac{2+t}{1+t}\), since \(2^k\geqslant 1\).
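The monotonicity behind this inequality can be made explicit in one line. The following short derivation uses the auxiliary variable \(y=2^k\), which is introduced here only for illustration.

```latex
\frac{2y+t}{y+t} \;=\; 2-\frac{t}{y+t},
\qquad y\geqslant 1,\; t\geqslant 0 .
% Since t/(y+t) is non-increasing in y, the left-hand side is non-decreasing in y.
% Its minimum over y >= 1 is therefore attained at y = 1:
\frac{2^{k+1}+t}{2^{k}+t} \;\geqslant\; \frac{2\cdot 1+t}{1+t} \;=\; \frac{2+t}{1+t}.
```

For \(t=0\) both sides are equal to 2, so the bound is tight in that case.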

Plugging Eqs. (5) and (6) into Eq. (2) and lower-bounding \(\sum _{i=2^k}^{n'}{(i+t)^{-\beta }}\) with \((2^k+t)^{-\beta }\) gives us an upper bound of

It now suffices to find an upper bound for \(2^k+t\), since g is non-decreasing. Due to \(\sum _{x\in S}{\deg (x)}\geqslant M(n)\) and the choice of k it holds that

To upper bound the left-hand side, we can use Eq. (5) with \(g(x)=x\) and \(2^{k-1}\) in place of \(2^k\). It is easy to check that this function satisfies (i), (ii) with \(c=2\), and (iii) as needed. This yields

or equivalently

Now we can plug Eq. (9) into Eq. (7) to get the desired result.\(\square\)

5.2 Minimum Dominating Set

As we have stated in Sect. 5.1, the idea for lower-bounding the size of dominating sets is the same as the one by Shen et al. [46] and by Gast et al. [29]: Every dominating set S has to satisfy \(n\leqslant \sum _{x\in S} (\deg (x)+1)\). If we assume PLB-U, the Potential Volume Lemma tells us how big S has to be in order to satisfy this inequality.
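The volume condition is easy to verify on concrete instances. The sketch below assumes a graph given as a hypothetical adjacency dictionary `adj` (node to set of neighbours, no loops) and checks both domination and the sum of \(\deg (x)+1\) over a candidate set.

```python
def closed_volume(adj, nodes):
    """Sum of deg(x) + 1 over the given nodes."""
    return sum(len(adj[x]) + 1 for x in nodes)


def is_dominating_set(adj, nodes):
    """True if every node is in the set or has a neighbour in it."""
    chosen = set(nodes)
    return all(v in chosen or bool(adj[v] & chosen) for v in adj)
```

On the path with nodes 0 to 4, the set {1, 3} is dominating and has closed volume 6, which is at least n = 5. The set {0} has closed volume 2 and therefore cannot be dominating.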

Theorem 5.3

For a graph without loops and isolated vertices and with the PLB-U property with parameters \(\beta >2\), \(c_1>0\) and \(t\geqslant 0\), the minimum dominating set is of size at least

Proof

Let \(\textsc {opt}\) denote an arbitrary minimum dominating set. It holds that

and since we assume that there are no nodes of degree 0, it also holds that

giving us \(M(n):=\frac{n}{2}\). We can choose \(h(x):=x+1\) with \(g(x)=x\). Now g satisfies (i), (ii) with \(c=2\) and (iii). With Lemma 5.2 we can now derive

which proves the theorem.\(\square\)

The theorem implies that one is guaranteed a constant approximation factor by simply taking all nodes.

Corollary 5.4

For a graph without loops and isolated vertices and with the PLB-U property with parameters \(\beta >2\), \(c_1>0\) and \(t\geqslant 0\), every dominating set has an approximation factor of at most

However, we can show that using the classical greedy algorithm (cf. Algorithm 1) actually guarantees a better approximation factor under PLB-U (see Fig. 3). This is the case because the greedy algorithm is guaranteed to find a solution C with \(|C|\leqslant \sum _{x\in \textsc {opt}}{H_{\deg (x)+1}}\). We can then use the Potential Volume Lemma to upper-bound this expression in terms of \(|\textsc {opt}|\), resulting in a better approximation factor. The rest of this section is dedicated to showing this result.

The following inequality can be derived from an adaptation of the proof for the greedy Set Cover algorithm to the case of unweighted Dominating Set.

Theorem 5.5

([32])

Let C be the solution of the greedy algorithm and let \(\textsc {opt}\) be an optimal solution for Dominating Set. Then it holds that

where \(H_k\) is the k-th harmonic number.

Proof

The idea of the proof is to distribute the cost of taking a node \(v\in C\) amongst the nodes that are newly dominated by v. For example, if the algorithm chooses a node v which newly dominates v, \(v_1\), \(v_2\) and \(v_3\), the four nodes each get a cost of 1/4. At the end of the algorithm it holds that \(\sum _{v\in V}{c(v)}=|C|\).

Now we look at the optimal solution \(\textsc {opt}\). Since all nodes \(v\in V\) have to be dominated by at least one \(x\in \textsc {opt}\), we can assign each node to exactly one \(x\in \textsc {opt}\) in its neighborhood, i.e. we partition the graph into stars S(x) with the nodes x of the optimal solution as their centers.

Now let us analyze how the greedy algorithm constructs its solution. Choose one \(x\in \textsc {opt}\) arbitrary but fixed. Let us have a look at the time a node \(u\in S(x)\) gets dominated. Let d(x) be the number of non-dominated nodes from S(x) right before u gets dominated. Due to the choice of the algorithm, a node v had to be chosen which dominated at least d(x) nodes. This means u gets a cost c(u) of at most 1/d(x). Now we look at the nodes from S(x) in reverse order of them getting dominated in the algorithm. The last node to get dominated has a cost of at most 1, the next-to-last node gets a cost of at most 1/2 and so on. Since \(|S(x)|\leqslant \deg (x)+1\) the costs to cover S(x) are at most \(\frac{1}{\deg (x)+1}+\frac{1}{\deg (x)}+\ldots +1=H_{\deg (x)+1}\). This gives us the inequality

as desired.\(\square\)
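The charging argument of this proof can be replayed in code. The sketch below is one possible reading of the greedy rule (repeatedly pick a node that newly dominates the most nodes, ties broken by iteration order); it is not necessarily identical to Algorithm 1. The adjacency dictionary `adj` and all function names are assumptions, and exact fractions are used so that the cost identity can be checked without rounding.

```python
from fractions import Fraction


def greedy_dominating_set(adj):
    """Return (chosen nodes, cost per node) for a dict node -> set of neighbours."""
    undominated = set(adj)
    chosen = []
    cost = {}
    while undominated:
        # Pick the node whose closed neighbourhood covers most undominated nodes.
        best = max(adj, key=lambda v: len(({v} | adj[v]) & undominated))
        newly = ({best} | adj[best]) & undominated
        for u in newly:
            cost[u] = Fraction(1, len(newly))  # split the unit cost of `best`
        undominated -= newly
        chosen.append(best)
    return chosen, cost


def harmonic(k):
    """k-th harmonic number H_k as an exact fraction."""
    return sum(Fraction(1, i) for i in range(1, k + 1))


def harmonic_bound(adj, dominating_set):
    """Sum of H_{deg(x)+1} over the nodes of a given dominating set."""
    return sum(harmonic(len(adj[x]) + 1) for x in dominating_set)
```

On the path with nodes 0 to 4 the sketch selects nodes 1 and 3. The distributed costs sum to 2, the size of the greedy solution, and the bound evaluated for the optimal solution {1, 3} is \(2H_3=11/3\).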

From the former theorem, one can easily derive the following corollary.

Corollary 5.6

The greedy algorithm gives a \(H_{\Delta +1}\)-approximation for Dominating Set, where \(\Delta\) is the maximum degree of the graph.

The problem with the corollary is that it assumes all nodes of an optimal solution to have maximum degree \(\Delta\). However, if we assume PLB-U, we can get a better bound on the degrees of nodes in an optimal solution. By using the inequality from Theorem 5.5 together with the Potential Volume Lemma, we can derive the following approximation factor for the greedy algorithm.

Theorem 5.7

For a graph without loops and isolated vertices and with the PLB-U property with parameters \(\beta >2\), \(c_1>0\) and \(t\geqslant 0\), the classical greedy algorithm for Minimum Dominating Set (cf. [22]) has an approximation factor of at most

Proof

From the analysis of the greedy algorithm we know that for its solution C and an optimal solution \(\textsc {opt}\) it holds that

where \(H_k\) denotes the k-th harmonic number. We can now choose \(h(x)=g(x)+1\) with \(g(x)=\ln (x+1)\). g(x) satisfies (i), (ii) with \(c=\log _{3}(5)\) and (iii). As we assume there to be no nodes of degree 0, it holds that

since all nodes have to be covered. We can now use Lemma 5.2 with \(S=\textsc {opt}\) to derive that

\(\square\)
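The conditions on g used in this proof can be spot-checked numerically. The sketch below evaluates conditions (i) to (iii) of Lemma 5.2 on a finite grid, so it is a sanity check and not a proof; the grid, the tolerance, and the function names are assumptions. It confirms that \(g(x)=\ln (x+1)\) passes with \(c=\log _{3}(5)\), which is tight at \(x=2\), and fails for a smaller constant.

```python
import math


def satisfies_conditions(g, g_prime, c, xs, tol=1e-12):
    """Grid check of (i) g non-decreasing, (ii) g(2x) <= c*g(x) for x >= 2,
    and (iii) g'(x) <= g(x)/x for x >= 1."""
    xs = sorted(xs)
    for low, high in zip(xs, xs[1:]):
        if g(low) > g(high) + tol:
            return False
    for x in xs:
        if x >= 2 and g(2 * x) > c * g(x) + tol:
            return False
        if x >= 1 and g_prime(x) > g(x) / x + tol:
            return False
    return True


GRID = [1 + 0.25 * i for i in range(400)]  # sample points in [1, 100.75]
C_LOG = math.log(5) / math.log(3)          # log_3(5)

ok_log = satisfies_conditions(math.log1p, lambda x: 1 / (x + 1), C_LOG, GRID)
ok_linear = satisfies_conditions(lambda x: x, lambda x: 1.0, 2.0, GRID)
too_small = satisfies_conditions(math.log1p, lambda x: 1 / (x + 1), 1.4, GRID)
```

The same helper also accepts the linear choice \(g(x)=x\) with \(c=2\), which is the function used for the volume bound in the proof of Lemma 5.2.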

It is not easy to see that this is an improvement over the approximation factor implied by the lower bound. Figure 3 displays both approximation factors for several realistic parameter settings. Note that for \(\beta \rightarrow 2\) both factors approach infinity, although the one of the greedy algorithm does so much more slowly. For \(\beta \rightarrow \infty\) the approximation factor from the lower bound approaches

and the approximation factor of the greedy algorithm approaches

We also calculated both factors for the fitted PLB-U bounds from Fig. 1. See Table 3 for a comparison: For all real-world networks the approximation factor guaranteed by the greedy algorithm is orders of magnitude better than the trivial bound.

For Minimum Connected Dominating Set we get a very similar bound. In this variant of Minimum Dominating Set we are looking for a smallest dominating set S whose induced subgraph in G is connected.

Theorem 5.8

For a graph without loops and isolated vertices and with the PLB-U property with parameters \(\beta >2\), \(c_1>0\) and \(t\geqslant 0\), the greedy algorithm for Minimum Connected Dominating Set (cf. [44]) has an approximation factor of at most

Proof

From [44, Theorem 3.4] we know that for the solution C of the greedy algorithm and an optimal solution \(\textsc {opt}\) it holds that

We can now choose \(h(x)=g(x)+1\) with \(g(x)=x\). g(x) satisfies (i), (ii) with \(c=2\) and (iii). As we assume there to be no nodes of degree 0, it holds that

since all nodes have to be covered. We can now use Lemma 5.2 with \(S=\textsc {opt}\) to derive that

\(\square\)

Our main lemma can be used to improve upon a multitude of results. Another example is the class of algorithms which use only local information for optimization problems: Borgs, Brautbar, Chayes, Khanna, and Lucier [10] consider sequential algorithms where the graph topology is unknown and only vertices from the local neighborhood can be added to the solution. We call an algorithm an \(r^+\)-local algorithm if its local information is limited to \(N^{r}(S)\) plus the degree of the vertices in \(N^{r}(S)\).

First, we analyze the \(1^+\)-local algorithm for Minimum Dominating Set from [10] (Algorithm 2). The following theorem is from the original paper by Borgs et al. [10].

Theorem 5.9

([10]) Let S be the solution of Algorithm 2 and let \(\textsc {opt}\) be an optimal solution for Minimum Dominating Set. Then it holds that

and that

Similar to Theorem 5.7 we can show the following result.

Theorem 5.10

For a graph without loops and isolated vertices and with the PLB-U property with parameters \(\beta >2\), \(c_1>0\) and \(t\geqslant 0\), let S be the solution of Algorithm 2 and let \(\textsc {opt}\) be an optimal solution for Dominating Set. Then it holds that

Proof

Due to Theorem 5.9 with probability at least \(1-e^{-|\textsc {opt}|}\) it holds that

where \(H_k\) denotes the k-th harmonic number. From Theorem 5.3 we know that \(|\textsc {opt}|=\Theta (n)\), so this probability is \(1-e^{-\Omega (n)}\). Now we choose \(h(x)=g(x)+3\) with \(g(x)=\ln (x)\). g(x) satisfies (i), (ii) with \(c=2\) and (iii). We still assume our graph to not have isolated nodes. It therefore holds that

because all nodes have to be covered. We use Lemma 5.2 with \(S=\textsc {opt}\) to get that

\(\square\)

5.3 Maximum Independent Set

In this section we consider Maximum Independent Set on networks with PLB-L or PLB-U. We are going to derive lower bounds on the size of maximum and maximal independent sets linear in n as well as a constant approximation factor.

In general graphs the greedy approximation gives us the following result.

Theorem 5.11

([45]) The greedy algorithm which prefers smallest node degrees gives a \((\Delta +1)\)-approximation for MIS in graphs of degree at most \(\Delta\).

However, if we assume PLB-L we can achieve lower bounds on the size of a maximum independent set linear in n.

Lemma 5.12

A graph with the PLB-L property with parameters \(\beta >2\), \(c_2>0\) and \(t\geqslant 0\), has an independent set of size at least \(\frac{c_2(t+1)^{\beta -1}}{(t+d_{min})^{\beta }(d_{min}+1)}\cdot n\) or of size at least \(\frac{c_2}{(t+1)}\cdot n\) if we assume G to be connected and \(d_{min}=1\).

Proof

This is easy to see by just counting the number of nodes of degree \(d_{min}\). There are at least

of these nodes. Since each of these nodes can have at most \(d_{min}\) other nodes of the same degree as a neighbor, the independent set is at least of size \(\frac{c_2(t+1)^{\beta -1}}{(t+d_{min})^{\beta }(d_{min}+1)}n\). If \(d_{min}=1\) and G is connected, none of the degree-1 nodes can be neighbors, thus giving us \(c_2 n (t+1)^{\beta -1}(t+d_{min})^{-\beta }=\frac{c_2}{(t+1)}n\).\(\square\)
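The counting argument of this proof corresponds to a simple procedure: restrict attention to the nodes of minimum degree and pick them greedily. The sketch below assumes a hypothetical adjacency dictionary `adj`; each selected node blocks itself and at most \(d_{min}\) other nodes, so at least a \(1/(d_{min}+1)\) fraction of the minimum-degree nodes is selected.

```python
def min_degree_independent_set(adj):
    """Return (d_min, minimum-degree nodes, independent subset of them)."""
    d_min = min(len(nbrs) for nbrs in adj.values())
    low = [v for v in adj if len(adj[v]) == d_min]
    independent = []
    blocked = set()
    for v in low:
        if v not in blocked:
            independent.append(v)
            blocked.add(v)
            blocked |= adj[v]  # neighbours of v may no longer be chosen
    return d_min, low, independent
```

On a cycle with six nodes all nodes have degree 2, and the sketch selects three of them, which is more than the guaranteed 6/3 = 2.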

This is also a lower bound on the solution size a greedy algorithm can achieve, if it chooses nodes of minimum (remaining) degree first. We can even go a step further and show that all maximal independent sets have to be quite big, even if we only have the PLB-U property. This holds since every maximal independent set is also a dominating set.

Theorem 5.13

In a graph without loops and isolated vertices and with the PLB-U property with parameters \(\beta >2\), \(c_1>0\) and \(t\geqslant 0\), every maximal independent set is of size at least

Proof

It holds that every maximal independent set S is also a dominating set. Due to Theorem 5.3, the size of the minimum dominating set is at least

giving us the result.\(\square\)

The former theorem implies that every maximal independent set is a constant-factor approximation of the maximum independent set. This also holds for the greedy algorithm.

Corollary 5.14

In a graph without loops and isolated vertices and with the PLB-U property with parameters \(\beta >2\), \(c_1>0\) and \(t\geqslant 0\), every maximal independent set has an approximation factor of at most

We can compare these bounds to the ones from Lemma 5.12. For \(d_{min}=1\), it holds that the approximation factor \(\frac{(t+d_{min})^{\beta }(d_{min}+1)}{c_2(t+1)^{\beta -1}}\) simplifies to \(\frac{2(t+1)}{c_2}\). This is a realistic parameter as we can see in Fig. 1. In Table 4 we also compare the two approximation factors from Lemma 5.12 and Theorem 5.13 for the real-world networks we fitted in Fig. 1. For all real-world networks the approximation factor from Lemma 5.12 is better. However, the two results have different requirements: Lemma 5.12 requires PLB-L, while Theorem 5.13 requires PLB-U. Thus, they are only comparable for graphs which have both properties.
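The simplification for \(d_{min}=1\) follows by direct substitution into the first bound of Lemma 5.12, using that an optimal solution has size at most n:

```latex
\[
\frac{(t+d_{min})^{\beta }(d_{min}+1)}{c_2(t+1)^{\beta -1}}
\;\overset{d_{min}=1}{=}\;
\frac{2\,(t+1)^{\beta }}{c_2(t+1)^{\beta -1}}
\;=\;\frac{2(t+1)}{c_2}.
\]
```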

5.4 Minimum Vertex Cover

In this section we consider the Minimum Vertex Cover problem on graphs with PLB-U. Again, we can achieve lower bounds on the size of a minimum vertex cover linear in n by noticing that every vertex cover in a graph without isolated vertices is also a dominating set. Although there is a very easy 2-approximation for general graphs by greedily constructing a maximal matching [27], our result implies that in power-law bounded graphs without isolated vertices, every vertex cover is a constant-factor approximation of the minimum vertex cover.
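The matching-based 2-approximation mentioned above can be sketched as follows. The edge-list input format and the function name are assumptions made for this illustration, and the graph is assumed to have no loops.

```python
def matching_vertex_cover(edges):
    """Return both endpoints of a greedily built maximal matching."""
    cover = set()
    for u, v in edges:
        # The edge joins the matching only if both endpoints are unmatched.
        if u not in cover and v not in cover:
            cover.update((u, v))
    return cover
```

Every edge has at least one endpoint in the returned set, and any vertex cover must contain at least one endpoint of each matching edge, which gives the factor of 2.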

It has to be noted that one can use a greedy algorithm similar to Algorithm 1 to achieve the following result.

Theorem 5.15

The greedy algorithm which prefers highest node degrees gives an \(H_{\Delta }\)-approximation for Vertex Cover, where \(\Delta\) is the maximum degree of the graph.

Proof

We can analyze the algorithm in a similar manner as in Theorem 5.5. The difference is that now the algorithm considers covered edges instead of dominated vertices. The cost to add a node to the vertex cover is now distributed among its newly covered edges. We can now consider an optimal solution \(\textsc {opt}\) and partition the edges into sets E(x) with the nodes \(x\in \textsc {opt}\) as their common incident nodes. Thus, for a vertex cover C and an optimal solution \(\textsc {opt}\) it holds that

Here, we only have \(H_{\deg (x)}\) instead of \(H_{\deg (x)+1}\), since the costs are only distributed among incident edges and not the inclusive neighborhoods of nodes. This yields the approximation factor as stated.\(\square\)
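A minimal sketch of the degree-based greedy algorithm analyzed in this proof is shown below. It assumes a loop-free graph given as a dictionary of neighbor sets; the function names are illustrative. In each step the node covering the most still-uncovered edges is added to the cover.

```python
def greedy_max_degree_vertex_cover(adj):
    """adj: dict mapping each node to the set of its neighbors (no loops)."""
    remaining = {v: set(nbrs) for v, nbrs in adj.items()}
    cover = set()
    while any(remaining.values()):
        # Pick the node incident to the most uncovered edges.
        v = max(remaining, key=lambda u: len(remaining[u]))
        cover.add(v)
        # All edges incident to v are now covered.
        for u in remaining[v]:
            remaining[u].discard(v)
        remaining[v] = set()
    return cover


def harmonic(k):
    """k-th harmonic number H_k."""
    return sum(1.0 / i for i in range(1, k + 1))
```

On a path with four nodes, for instance, the sketch returns the two inner nodes, which is within the factor \(H_{2}=1.5\) of the optimum of size 2.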

We could now use the Potential Volume Lemma to get an approximation factor similar to the one the greedy algorithm for dominating set achieves on graphs with PLB-U, see Theorem 5.7. It is interesting to see that even this naive greedy algorithm based on node degrees achieves a constant-factor approximation on graphs with PLB-U, while it only achieves an \(H_{\Delta }\)-approximation on general graphs. However, the algorithm does not achieve an approximation factor better than 2. Therefore, we concentrate on lower-bounding the size of any vertex cover.

Theorem 5.16

In a graph without loops and isolated vertices and with the PLB-U property with parameters \(\beta >2\), \(c_1>0\) and \(t\geqslant 0\), the minimum vertex cover is of size at least

Proof

The bound follows from Theorem 5.3, since every vertex cover in a graph without isolated vertices is a dominating set.\(\square\)

Corollary 5.17

For a graph without loops and isolated vertices and with the PLB-U property with parameters \(\beta >2\), \(c_1>0\) and \(t\geqslant 0\), every vertex cover has an approximation factor of at most

6 Hardness of Approximation

In this section we show hardness of approximation for simple graphs with the PLB-(U,L,N) properties. Let us start with a few definitions.

Definition 6.1

(NP optimization problem [19]) An NP optimization problem (NPO) A is a four-tuple (I, sol, m, goal) such that

1. I is the set of instances of A and it is recognizable in polynomial time.

2. Given an instance \(x\in I\), sol(x) denotes the set of feasible solutions of x. These solutions are short, that is, a polynomial p exists such that, for any \(y\in sol(x)\), \(|y|\leqslant p(|x|)\). Moreover, it is decidable in polynomial time whether, for any x and for any y such that \(|y|\leqslant p(|x|)\), \(y\in sol(x)\).

3. Given an instance x and a feasible solution y of x, m(x, y) denotes the positive integer measure of y. The function m is computable in polynomial time and is also called the objective function.

4. \(goal\in \left\{ \max ,\min \right\}\).

We can see that MDS, MIS, and MVC are problems in NPO.
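To make the four components concrete, the following sketch models Minimum Vertex Cover as such a tuple. The class name, the field names, and the encoding of an instance as a pair (nodes, edges) are assumptions made only for this illustration.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class NPOProblem:
    is_instance: Callable[[Any], bool]       # membership test for I
    is_feasible: Callable[[Any, Any], bool]  # is y in sol(x)?
    measure: Callable[[Any, Any], int]       # objective function m(x, y)
    goal: Callable[..., Any]                 # min or max


def _is_graph(x):
    # An instance is a pair (nodes, edges) describing a loop-free graph.
    nodes, edges = x
    return all(u in nodes and v in nodes and u != v for u, v in edges)


def _covers_all_edges(x, y):
    # A feasible solution is a node subset that touches every edge.
    nodes, edges = x
    return set(y) <= set(nodes) and all(u in y or v in y for u, v in edges)


MIN_VERTEX_COVER = NPOProblem(
    is_instance=_is_graph,
    is_feasible=_covers_all_edges,
    measure=lambda x, y: len(y),  # size of the cover
    goal=min,
)
```

All three checks run in time polynomial in the size of the graph, and a feasible solution is never larger than the node set, as the definition requires.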

Definition 6.2

(Class \(\textsf {APX}\) [22]) \(\textsf {APX}\) is the class of all \(\textsf {NPO}\) problems that have a polynomial-time r-approximation algorithm for some constant \(r>1\).

The notion of hardness for the approximation class \(\textsf {APX}\) is similar to the hardness of the class \(\textsf {NP}\), but instead of employing a polynomial time reduction it uses an approximation-preserving \(\textsf {PTAS}\)-reduction.

Definition 6.3

(\(\textsf {APX}\)-Completeness [22]) An optimization problem A is said to be \(\textsf {APX}\)-complete iff A is in \(\textsf {APX}\) and every problem in the class \(\textsf {APX}\) is \(\textsf {PTAS}\)-reducible to A.

6.1 Approximation Hardness for Simple Graphs

In this section we prove hardness of approximation for the optimization problems we consider. The following definition and lemma by Shen et al. [46] provide us with a framework to transfer inapproximability results on classes of graphs to power-law bounded graphs. Note that both the definition and the lemma assume an optimal substructure problem. According to Shen et al. [46], optimal substructure problems are optimization problems whose optimal solution on a graph is the union of all optimal solutions on the maximal connected components of this graph. This includes the three problems we consider. Our idea to use this framework is to “hide” graphs from other classes inside power-law bounded graphs. If the optimal solution size of the hidden graph is big enough, the inapproximability of the class it belongs to carries over. The process of hiding a graph inside another one constitutes an embedded-approximation-preserving reduction as formalized in the following definition.

Definition 6.4

([46]) Given an optimal substructure problem O, a reduction from an instance on graph \(G =(V, E)\) to another instance on a (power law) graph \(G' = (V', E')\) is called embedded-approximation-preserving if it satisfies the following properties:

(1) G is a subset of maximal connected components of \(G'\);

(2) The optimal solution of O on \(G'\), \(\textsc {opt}(G')\), is upper bounded by \(C\cdot \textsc {opt}(G)\), where C is a constant corresponding to the growth of the optimal solution.

Having shown an embedded-approximation-preserving reduction, we can use the following lemma to show hardness of approximation.

Lemma 6.5

([46]) Let O be an optimal substructure problem and let G be a graph such that no polynomial time algorithm with approximation factor \(\varepsilon\) exists for O on G unless \(P=NP\). Let \(\delta =\frac{\varepsilon C}{(C-1)\varepsilon +1}\) if O is a maximization problem and let \(\delta =\frac{\varepsilon +C-1}{C}\) if O is a minimization problem. If there exists an embedded-approximation-preserving reduction from G to another graph \(G'\), where \(\textsc {opt}(G')\leqslant C\cdot \textsc {opt}(G)\), then no polynomial time algorithm with approximation factor \(\delta\) exists for O on \(G'\) unless \(P=NP\).
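The two formulas for \(\delta\) in Lemma 6.5 can be evaluated with a small helper. The function name and the sample values below are hypothetical and serve only to show how the inapproximability factor shrinks as C grows.

```python
def transferred_inapproximability(eps, C, maximization):
    """Factor delta from Lemma 6.5 for a source factor eps and growth C."""
    if maximization:
        return eps * C / ((C - 1) * eps + 1)
    return (eps + C - 1) / C
```

For \(C=1\) both formulas return \(\varepsilon\) unchanged. For any constant \(C\geqslant 1\) and \(\varepsilon >1\), the resulting \(\delta\) stays strictly above 1, so a constant-factor lower bound on the approximation ratio survives the reduction.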

We will use this framework as follows: First, we show how to reduce cubic graphs to graphs with PLB-U, PLB-L and PLB-N via an embedded-approximation-preserving reduction. Then, we derive the value of C as in Definition 6.4 for each problem we consider. Last, we use Lemma 6.5 together with the known inapproximability results on cubic graphs to derive the approximation hardness on graphs with PLB-U, PLB-L and PLB-N.

We start by showing how to reduce cubic graphs to simple graphs with PLB-U, PLB-L and PLB-N. Then we can use Lemma 6.5 to show inapproximability. The idea of our reduction is as follows: First, we add the cubic graph G. We choose the total number of nodes N of the constructed graph \(G_{PLB}\) high enough so that all n nodes of G fit into bucket 1 according to PLB-L and PLB-U. Then, we fill each bucket d until it complies with PLB-L by adding stars \(S_{2^d}\) (star gadgets). If the nodes of degree one we add with star gadgets reach their minimum according to PLB-L, we start adding cliques \(K_{2^d}\) (clique gadgets) instead. This is done until all buckets barely comply with PLB-L. Afterward the process is repeated until we reach or exceed N nodes in total for the first time. At this point all buckets comply with both PLB-L and PLB-U. We will show that we have to switch to clique gadgets only for buckets d, where d is a constant. Hence, all nodes in our construction have at most a constant number of neighbors of same or higher degree. Thus, the constructed graph also satisfies PLB-N.

Lemma 6.6

Any cubic graph G with \(|V(G)|=n\) sufficiently large can be reduced to a simple graph \(G_{PLB}\) having the PLB-U, PLB-L and PLB-N properties for any \(\beta >2\), any \(t\geqslant 0\), any \(c_3\), and any admissible \(c_1\) and \(c_2\). The reduction takes polynomial time and produces a graph with \(N=c\cdot n\) nodes, where

Proof

Suppose we are given \(\beta\), t, and admissible \(c_1>c_2>0\). Let n be the number of nodes in graph G and let \(N\in \mathbb {N}:N=cn\) be the number of nodes in \(G_{PLB}\) for some constant c to be determined. As in Lemma 6.5, we have to ensure a number of conditions to get a valid degree sequence. First, we have to ensure that the bound PLB-U implies on bucket 1 is high enough to encompass all n nodes of the cubic graph G. The only thing we can still choose in order to do so is \(N=c\cdot n\). However, if we choose N so that n is very close to the PLB-U bound, it might happen that we actually add more than N nodes. Thus, we choose N so that n is at most as high as the bound implied by PLB-L on bucket 1. Due to the admissibility of \(c_2\) this means that the number of nodes we added is smaller than N, or put differently, it ensures \(c>1\). This means that PLB-U is satisfied for that bucket as well, since \(c_1>c_2\). It holds that

From this we get

Moreover, we will choose c equal to that lower bound. Note that for this choice of c it holds that \(c\geqslant 1\), since \(c_2\) is supposed to be admissible and thus the PLB-L lower bound for bucket 1 must be smaller than N. Then we choose the maximum degree \(\Delta\) such that

and \(d_{min}=1\).

Since \(c_1\) and \(c_2\) are admissible, we can fit exactly N nodes between PLB-L and PLB-U. First, we hide G in bucket 1. Then, for each bucket \(d\geqslant 2\), we try to add the number of stars of size \(2^d+1\) it needs to reach its lower bound. Since bucket 0 gets all the degree-one nodes of these star gadgets, we have to be careful not to overfill it. Therefore, we start with bucket \(\left\lfloor \log _2(\Delta )\right\rfloor\) and fill the buckets with star gadgets until bucket 0 has reached its lower bound or we are done. If bucket 0 is full, we switch to clique gadgets: Each bucket \(d\geqslant 1\) then gets enough \((2^{d}+1)\)-cliques to comply with its lower bound. This does not add any more degree-one nodes. In the end, we also fill bucket 0 with enough 2-cliques, if necessary. By filling a bucket d with stars we do not deviate from the (admissible) lower bound of that bucket; by filling it with cliques we might deviate by \(2^{d}-1\).
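The two gadget types can be sketched as follows, using the sizes from the paragraph above: a star with \(2^d+1\) nodes and a clique with \(2^d+1\) nodes. The function names and the adjacency-dictionary representation are illustrative assumptions; bucket d is taken to hold the degrees from \(2^d\) to \(2^{d+1}-1\).

```python
def bucket(degree):
    """Index d of the bucket [2^d, 2^(d+1) - 1] containing a degree >= 1."""
    return degree.bit_length() - 1


def star_gadget(d):
    """Star with center 0 and 2^d leaves, as a dict of neighbor sets."""
    leaves = range(1, 2 ** d + 1)
    adj = {leaf: {0} for leaf in leaves}
    adj[0] = set(leaves)
    return adj


def clique_gadget(d):
    """Clique on 2^d + 1 nodes, so that every node has degree 2^d."""
    nodes = range(2 ** d + 1)
    return {v: {u for u in nodes if u != v} for v in nodes}
```

A star gadget contributes one node to bucket d and \(2^d\) degree-one nodes to bucket 0, whereas a clique gadget contributes \(2^d+1\) nodes to bucket d and none to bucket 0. For \(d=0\) the clique gadget is the 2-clique used to fill bucket 0.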

Now we want to know when we have to switch to clique gadgets. We will see that this happens at some bucket x, which contains nodes of constant degrees. This means that every node of degree at least \(2^{x+1}\) is the center of a star and thus fulfills PLB-N. Nodes of smaller degree satisfy PLB-N as well, since they only have a constant number of neighbors at all.

It holds that we add at most

degree-one nodes if we start at bucket x. Since \(2^d\leqslant i\), we get

and upper-bounding this sum with an integral yields

This term is at most \(\frac{c_2}{t+1}N\) for a sufficiently large constant x and if n is sufficiently large. This holds, since \(\Delta ={N}^{\frac{1}{\beta -1}}=o(N)\). We can choose

for example. This means that we can fill each bucket \(d\geqslant x\) to its lower bound with star gadgets. Smaller buckets \(d<x\) (with nodes of constant degree) can be filled with star gadgets until PLB-L for bucket 0 is reached. Then, those buckets are filled with clique gadgets. This means that we add at most \(2^d-1\) nodes above PLB-L for those buckets. Since d is constant, the gap between PLB-L and PLB-U for bucket d is linear in N. Thus, for high enough n, the number of nodes in each bucket still complies with both bounds.