Abstract

We propose a novel technique for three-dimensional three-component (3D3C) interfacial flow measurement. It is based on the particle streak velocimetry principle. A relatively long integration time of the camera is used for capturing the movement of tracer particles as streaks on the sensor. The velocity along these streaks is extracted by periodically changing the illumination using a known pattern. A dye with different absorption characteristics in two distinct wavelengths is used to color the fluid. The depth of particles relative to the fluid interface can then be computed from their intensities when illuminated with light sources at those two different wavelengths. Hence, from our approach, a bichromatic, periodical illumination together with an image processing routine for precisely extracting particle streak features is used for measuring 3D3C fluid flow with a single camera. The technique is applied to measuring turbulent Rayleigh–Bénard convection at the free air--water interface. Using Lagrangian statistics, we are able to demonstrate a clear transition from the Batchelor regime to the Richardson regime, both of which were postulated for isotropic turbulence. The relative error of the velocity extraction of our new technique was found to be below 0.5 %.

Similar content being viewed by others

1 Introduction

Interfacial transport processes of momentum, heat and mass across fluid boundary layers are of major importance in many industrial applications, environmental processes and fluid dynamic research. Since turbulent transport in the boundary layer of an interface plays a key role in the understanding of these processes, many investigators focused on the theoretical description of turbulent flow fields in the presence of an interface as well as on the development of numerical and experimental techniques. Although all these efforts have spawned a detailed theoretical description, for example, the advection-diffusion equation and the Navier--Stokes equation, the physical workings are far from being completely understood. The interdisciplinary interest in the said processes is shown by publications in biophysical research (Berthe et al. 2010); Kertzscher et al. 1999), engineering (Burgmann et al. 2008) and environmental physics (Banner and Peirson 1998). Main challenges associated with measuring flow information close to an interface are the inherent three-dimensionality of turbulence that implies the measurement of volumetric three-dimensional information and the coupling of obtained flow information with the position of the interface.

Therefore, in the recent past, volumetric three-dimensional three-component (3D3C) measurement techniques have become an important prerequisite for gaining insights into the fluid dynamic processes. This has led to a long established line of research to develop such techniques. Existing particle-based flow measurement techniques can be grouped as proposed by Adrian (1991) in three main categories:

-

laser speckle velocimetry (LSV), which assesses flow information by analyzing random interference patterns caused by a high seeding density of tracer particles,

-

particle tracking velocimetry (PTV), which uses lower seeding densities to enable a tracking of single particles through a long exposure time or multiple images in a sequence and

-

particle image velocimetry (PIV), which extracts the flow information from a fluid with a medial seeding density where the displacements of small groups of particles within an interrogation window are analyzed statistically.

Most proposed approaches in third category use correlation-based methods to find similar particle constellations within an interrogation window in consecutive image pairs. This mode is often referred to as “standard PIV.” In the recent history of PIV, there were multiple attempts to obtain 3D3C data. These approaches can also be distinguished according to the technique used to obtain 3D-velocity information and the methods used to measure volumetric datasets. The most popular method to access three components (3C) of the velocity field is to measure the out-of-plane velocity using stereoscopic methods (Prasad 2000), extended by a technique called multi-plane PIV by using multiple laser-sheets (Kähler and Kompenhans 2000; Müller et al. 2001; Liberzon et al. 2004; Cenedese and Paglialunga 1989; Brücker 1996) or intensity-graded light sheets (Dinkelacker et al. 1992) for the reconstruction of the third velocity component. In the recent past, many methods were proposed to access volumetric information from 3D flow fields. This was achieved using holographic measurements (Hinsch 2002; Sheng et al. 2008), by combining PIV with Doppler global velocimetry (PIV/DGV) (Wernet 2004), using tomography (Elsinga et al. 2006; Schröder et al. 2008), defocusing-based approaches (Pereira et al. 2000, 2007; Willert and Gharib 1992), scanning-light-sheet methods (Burgmann et al. 2008; Hoyer et al. 2005; Brücker 1995), color-coded depth resolving techniques (Zibret et al. 2003; McGregor et al. 2007) and absorption-based methods (Jehle and Jähne, 2008; Berthe et al. 2010).

This paper introduces a new measurement technique to obtain Lagrangian flow characteristics in the boundary region of an interface. To get robust and volumetric 3D3C data, we developed a hybrid method that combines particle streak velocimetry (PSV) with an interfacial depth estimation method called bichromatic depth extraction first introduced by Jehle and Jähne (2008). The method uses a single camera to record PSV data in the plane of the interface. The scene is illuminated by two light-emitting diode (LED) arrays of different wavelength (λ1, λ2) from above. During the long-time exposure of the PSV image acquisition, all moving tracer particles are imaged as streak structures. These streaks are the result of a mapping of particle trajectories that were integrated through time and projected on the image plane. To avoid a loss of temporal information during the exposure, the light sources are intensity-modulated with a periodical signal, coding temporal information in the image. The result is streak patterns as shown in Fig. 1 with a periodic intensity change along their course. For the extraction of volumetric Lagrangian flow features from the recorded particle streak image sequences, an extraction algorithm was developed. In a first detection step, the algorithm extracts the position of the streak’s centerline and the intensity signal along this line. Afterward, the instantaneous particle velocity and depth are computed from this signal. Finally, a matching routine is used to track single particles through the image sequence and extract the correspondent Lagrangian information, that is, the time-dependent three-dimensional particle position and velocity.

Zoomed pseudo-color image of a single particle recorded with a sinusoidal, bichromatic illumination (F = 8Hz) and an exposure time t exp = 1s. The blue line shows the position of the centerline, computed by the feature extraction algorithm described in Sect. 3 of this publication

To test the validity and applicability of this new method, we applied it to a semi-artificial test sequence with highly accurate ground truth from (Berthe et al. 2010) and to the measurement of buoyancy-driven, turbulent Rayleigh–Bénard convection. The properties of this type of turbulent flow are well understood and make an assessment of the statistical properties derived from our technique feasible. Also, due to the long characteristic time constant, a long integration of our measurements is necessary to derive valid statistics. Errors in the measurements would thus add up and become apparent. All this makes this type of setting an ideal verification experiment for the newly proposed technique. The turbulent flow is analyzed by applying Lagrangian pair dispersion statistics. Our measurements clearly show a transition from a t 2 scaling in the Batchelor regime to a t 3 behavior as predicted by the Richardson law as described in (Salazar and Collins 2009).

1.1 Interfacial measurement technique

For the understanding of interfacial transport processes, a detailed knowledge of flow mechanisms close to the boundary is of fundamental importance. The need of collocated measurements of fluid flow within the thin interfacial boundary layer and its topography poses a major challenge, as summarized by Turney et al. (2009). Especially for curved and/or dynamically moving interfaces, flow fields are often highly complex. This necessitates volumetric 3D measurement techniques which extract flow features in a Lagrangian frame of reference. Moreover, for most analyses the motion relative to the interface is sought. To this end, all stereoscopic, holographic and tomographic methods need to be extended by an additional interface tracking approach to allow for a coordinate transform between the inherent fixed laboratory reference frame and the moving interface (Banner and Peirson 1998; Turney et al. 2009; Law et al. 1999). The standard approach for interface tracking in 2D measurements is usually achieved by employing an additional camera to observe the intersection line between a laser-sheet and the interface. This additional measurement in conjunction with cross calibration issues may lead to significant sources of error. This problem becomes highly challenging for tracking 2D topographies, as required by volumetric measurements.

Absorption-based depth estimation methods, as proposed by Debaene et al (2005), present a promising alternative which circumvents these experimental difficulties. In this approach, the distance away from the interface is encoded by the particle’s intensity according to Lambert--Beer’s law. Other depth from attenuation methods also uses the imaged intensity in a similar way to assess information from interfacial regions (Liu and Sullivan 1998). The technique that is presented in this paper is an extension of current absorption-based techniques. To test its validity and applicability, it is applied to the free air--water interface. This scenario represents a challenging, yet highly relevant application with a moving interface and a thin boundary layer that has a vast influence on the interfacial exchange of heat, mass and momentum.

Refraction at curved interfaces presents a severe obstacle for most interfacial measurement techniques. In the presented study, curved interfaces inhibit a precise measurement for two reasons. Firstly, the determination of the tracer particle position can be corrupted by a curved interface since particles are imaged through the interface. Secondly, the presented method relies on a homogeneous illumination which can be distorted by focusing effects. For some interfaces these refraction artifacts can be avoided by using refractive index matching methods (Budwig 1994).

1.2 Bichromatic depth estimation approach

The development of a dual-wavelength method for absorption-based depth estimation was motivated by a method used in bio-fluid-mechanics (Debaene et al. 2005) where the exponential absorption characteristic of a dye was used to extract the depth of reflecting tracer particles in a fluid. However, the gray-value of an imaged tracer particle does not only depend on its depth as given by Lambert--Beer’s law. It also depends on their reflectance properties. This is mainly dominated by the size of the particle. In Debaene et al. (2005), great care was taken to use only particles of identical size. However, this is impractical for a number of applications and also error prone.

To overcome these restrictions, the bichromatic depth estimation as introduced by Jehle and Jähne (2008) utilizes an absorbing dye and two light sources with different wavelengths λi, i = {1, 2}. In combination with a standard PIV approach it was successfully applied to the free air--water surface of a laminar falling film (Jehle and Jähne 2008) and the turbulent flow field of a buoyant convection. In medical research it was combined with an optical flow--based method to obtain interfacial flow fields in a displacement pediatric blood pump (Berthe et al. 2009). Key idea of the bichromatic depth estimation is to choose a dye with a wavelength-dependent penetration depth z *i that shows a significant difference for the wavelengths of the light sources used. For each wavelength λ i the intensity decay in the dyed liquid due to absorption can be described by Lambert--Beer’s Law as function of the depth z away from the interface given by

Here I(z, λ i ) is the intensity of the particle and I 0(λ i ) is the intensity directly at the interface. The factor 2 in the exponent was introduced because the light has to pass the distance z twice (down and up).

By computing the intensity ratio of both wavelengths I 1 = (z,λ1) and I 2 = (z,λ2), the bias introduced by small variance in the particle size and reflectivity cancels out and does not influence depth estimation’s quality. Therefore, the depth z results from:

From this reciprocal information, the distance of particles to the interface can be computed independently of their reflectance properties. Since it is not possible to measure the intensity of both reflected light sources simultaneously, we have to illuminate in an alternating fashion. For a detailed analysis of the bichromatic depth extraction’s precision, we refer to Jehle and Jähne (2008) and Berthe et al. (2010).

1.3 Particle streak velocimetry

As shown in recent publications (Sellent et al. 2010), the use of time-integrated, long-exposure images to obtain information about moving objects has become an important topic in image processing. Particle streak velocimetry (PSV) is often also referred to as Streak Photography or Particle Streak Tracking (PST). In PSV long exposure times are used to map particle trajectories by a temporal integration in the image plane. To the best of our knowledge, this method was first introduced by Fage and Townend (1932) in a study to visualize characteristics of turbulent flow fields in circular and rectangular pipes. Afterward, streak photometry was also used to visualize flow characteristics by Prandtl (1957). First measurements to obtain quantitative information from particle streak images were conducted by Dimotakis et al. (1981), Dickey et al. (1984) and Adamczyk and Rimai (1988). All three studies used computerized evaluation routines to extract the mean direction and the mean velocity by subtracting the end-points of each streak. The study by Wung and Tseng (1992) can be seen as an early precursor of the technique presented in this paper since this is the first time that temporal information was coded along the streak structure by changing the intensity of the illumination during exposure.

In the recent past many approaches were introduced to extend this method that was originally developed to measure two velocity components in a plane (2D2C), to measure a third velocity component (Wung and Tseng 1992; Müller et al. 2001) or volumetric data (Sinha and Kuhlman 1992; Rosenstiel and Rolf-Rainer Grigat 2010; Biwole et al. 2009; Dixon et al. 2011). A newly proposed method, published in Dixon et al. (2011), even uses a holographic PSV technique to measure volumetric flow features. In this approach blurred holograms are recorded by imaging particles that move during the exposure time. From the radial intensity profile of the particles in the hologram, the magnitude and direction of the in-plane velocity can be computed.

Key idea of particle streak velocimetry (PSV) is that tracer particles are imaged over a certain long exposure time t exp, instead of imaging the tracer in single-shot recordings with a very short integration time. The intensity distribution on the CCD-chip at a certain point in time I(x) is given by the well-known Airy-Function, which in polar coordinates \(\left(\rho, \varphi\right)\) is given by

Here J 1 is the first-order Bessel Function, \(k=\frac{2 \pi}{\lambda}\) is the wavenumber, and a controls the radius. This function can be approximated by a Gaussian bell-curve as follows (Leue et al. 1996). The logarithm of the Airy-Function can be decomposed into a second-order Taylor expansion given by

By replacing \(\sigma = \frac{\sqrt{2}}{k a},\) the first- and second-order terms can be rewritten to a Gaussian bell-curve in polar coordinates.

The gray-value distribution g(x) on the CCD-Chip, caused by the intensity distribution of a particle that travels along a trajectory \({\mathbf{X}}(t),\,t \in (0,t_{{{\text{exp}}}}) \) during the exposure time, can therefore be modeled by a simple time integral

where \(G(\cdot)\) is a two-dimensional Gaussian distribution. For a set of N tracer particles with trajectories \({\mathbf{X}}_{l} (t),\,t \in (0,t_{{{\text{exp}}}})\) with \(l=0,\ldots,N-1\) the gray-value distribution in a PSV image is given by the sum over all trajectories.

The result is images that contain information on the particle trajectories recorded during the exposure time. Unfortunately, particles with a horizontal velocity below a certain threshold do not result in detectable streak patterns. To prevent a bias toward higher horizontal velocities, the optical axis in PSV measurements is commonly adjusted perpendicular to the principal direction of the observed flow field. In interfacial turbulence measurements, the optical axis should be directed perpendicular to boundary layer since pure vertical particle movements are unlikely to occur due to the anisotropic restriction of the flow. The aim of all PSV approaches is to extract flow features from these streak images by an approximation of the particle trajectories \(\hat{\bf X}_N\). These trajectories can in a second step be used to extract Lagrangian fluid-flow features. Compared to the other volumetric 3D PIV approaches, this principle has a set of advantages. Rosenstiel and Rolf-Rainer Grigat (2010) describe the advantages of PSV/PST compared with PIV (i.e., no limitation to narrow measurement areas of volumes, simple application to 3D3C measurements, less calibration effort, cost efficiency, simplicity, low seeding density). Main obstacle of PSV measurements is the loss of temporal information due to the time integral in (6). The consequence is that the standard PSV approach enables the extraction of the particles’ average velocity during the exposure time interval (dividing streak length by exposure time), but the sign of the velocity remains unclear. This central restriction of PSV is called direction ambiguity. In the recent development of PSV algorithms, several strategies were introduced to overcome this problem. A very intuitive solution for that problem as used, for example, by Scholzen and Moser (1996) is to use an additional camera with a short exposure time to determine the streaks direction. Drawback is that by adding an additional camera one of the main advantages of PSV, the simplicity and the cost efficiency are not longer given. Adamczyk and Rimai (1988), Müller et al. (2001); Rosenstiel and Rolf-Rainer Grigat (2010) proposed the use of an asymmetric timing pattern, strobed on the illumination signal to code the flow direction on the streak structures. Another advantage is that this method enables to check for particles that left the laser-sheet. On the other hand, strobing the illumination during one exposure creates a problem because the streak fractions generated by the strobing have to be matched correctly for the extraction of a velocity vector. A slightly different approach that does not need a matching of streak fractions was used by Wung and Tseng (1992) who change the illumination intensity asymmetrically during the exposure. In our approach, intensity information codes velocity information. Therefore, we developed a matching algorithm that iteratively combines single PSV images from a sequence to trajectories by tracking particles through the image stack. This enables us to assign a direction to every streak that was detected in at least two subsequent images. As a by-product, this matching algorithm allows to track particles that comprise too slow horizontal movements within their trajectories. In these cases, lost information is reconstructed using the neighboring streaks.

Another general shortcoming of PSV and PTV methods is the low spatial sampling rate that is introduced by a systematic restriction to low seeding densities. Nonetheless, due to the sub-pixel precise centerline extraction and the frequency-based velocity extraction, the sparse data comprise a high spatial and temporal precision that allows for accurate statistical analyses of the Lagrangian velocity information.

1.4 Bichromatic extension to the standard PSV approach

The method presented in this paper can be seen as an extension of the standard 2D PSV as described in (Adrian 1991), by a bichromatic depth estimation approach as introduced by Jehle and Jähne (2008) and a frequency-based velocity extraction. Moving particles are illuminated with an alternating two-wavelength illumination that is used to code velocity information in the long-exposure images. In contrast to all existing methods, we did not solve the extraction of single streaks by a threshold-based segmentation followed some fitting routine (Müller et al. 2001; Rosenstiel and Rolf-Rainer Grigat 2010), but implemented an tensor-based, iterative centerline extraction routine (described in Sect. 3). Advantage of this extraction strategy is that it enables a sub-pixel precise extraction of the trajectory’s centerline and intensity. The method also incorporates additional knowledge by assuming a Gaussian intensity profile perpendicular to the streak as a consequence of (6). Since we used an alternating bichromatic illumination and an absorbing dye, the intensity signal along the streak can be used to compute the distance between particle and interface for all points on the particles trajectory using (2).

Instantaneous velocity extraction

As mentioned above temporal information about the particle velocities is lost due to the integration in (6). To circumvent this restriction and to gain the precision of the extracted velocity, the alternating intensity signal on the streak structure, caused by the intensity modulation of the light sources with a constant frequency F, is used to recover temporal information. This is achieved by a frequency analysis using the Hilbert Huang Transform (Huang et al. 1999). It is obvious that the spatial frequency coded on a streak must be higher, when the particle moves slower and vice versa. This correlation between the instantaneous horizontal velocity v h (x) and the spatial frequency is described by the following equation:

Here α is the pixel size that allows to convert \([\hbox {px}]\rightarrow[\hbox{mm}], F\) is the modulation frequency of the illumination, and f(x) is the instantaneous spatial frequency at a certain position x along the particles trajectory.

2 Measurement setup

Large effort was put in the design of a volumetric, bichromatic illumination that enables an alternating, high-frequent and sinusoidal intensity modulation of two 5x5 LED arrays (ENFIS UNO Tile Array Blue 465 nm and ENFIS UNO Tile Array Violet 405 nm). Both light sources were defocused using plano-convex lenses to obtain a homogeneous illumination within the volume of interest. A two-channel operational transconductance amplifier OTA was used to modulate the output of an arbitrary function generator (AFG) (AFG3000 Tectronix, Inc.) on the light sources as sketched in Fig. 2. The linearity of the light output was found to be sufficient within the used parameters. Since the bichromatic depth estimation relies on the difference in the penetration depth at both wavelengths λ1 and λ2, care has to be taken in selecting the optimum dye. By measuring their spectral properties, a number of dyes were measured in an absorption spectrometer. Tartrazine (also known as E number E102, CI 19140 or FD&C Yellow 5) was found to be a feasible dye. The particle streak images were recorded using a 12-bit gray-value camera (XCL-5005 B/W, Sony Electronics Inc.) with 5Mpx resolution. The image acquisition was controlled by a microEnable IV VD4-CL frame-grabber (SILICONSOFTWARE GmbH). A GPIO/Trigger Board (SILICONSOFTWARE GmbH) was used for the synchronization of both illumination signals fired by the AFG and the frame-grabber. A measurement workflow, to control the setting and synchronization of frame-grabber and AFG as well as to store the recorded images in 16bit tagged image file format (tiff) images, was implemented in a development environment for image processing and the automatization of measurement devices and cameras called heurisko®. All image processing and flow feature extraction algorithms described in this publication were implemented in a C++ framework on a 64bit Linux System. The source code of our framework will be made available in an open-source license as supplemental material to this article.

Sketch of the experimental setup.a and b represent the LED arrays with 405 and 465 nm; c 5Mpx CCD camera that records 12bit gray-value images; d measurement volume seeded with silver-coated hollow glass spheres with 100 μm diameter and Tartrazine (E102); the intensity modulation of both light sources and the synchronization with the frame-grabber is achieved using a arbitrary function generator (e) and a trigger card in the desktop pc used for the data acquisition

2.1 Calibration and measurement

The accuracy of the depth extraction using (2) depends strongly on a precise estimation of the penetration depths z *i and initial intensity ratio \(\frac{I_{02}}{I_{01}}\) for both wavelengths \(\lambda_i\ i\in{1,2}\) (Berthe et al. 2010). This makes a calibration measurement of the exact extinction behavior of the dye for the given concentration and wavelengths indispensable to guarantee a high accuracy of the approach.

In all measurements of this study we used a white, diffuse target that was placed in the measurement volume at a defined angle to the optical axis and illuminated the calibration target with both wavelengths separately. Using these calibration measurements we extracted the initial intensity ratio from the part of the calibration target that was above the surface. The penetration depths for both wavelengths were determined by an exponential fit on the gray-values along the target.

3 Feature extraction

The extraction of the flow information can be separated into a set of subtasks. Each image from a recorded sequence is preprocessed followed by the centerline extraction and the depth and velocity estimation. In the last step the direction ambiguity of the velocity is solved using a matching algorithm, successively comparing each image with its predecessor and its successor. The resulting volumetric, Lagrangian trajectories describe the paths of single tracer particles over several images.

3.1 Boundary tensor

In the field of image processing, many tensor-based approaches were developed to extract structures and their orientation from image data. The most famous approach is the well-known Structure Tensor S which is a first-order, gradient-based approach (Bigun 2006).

In this equation g x and g y are the image gradients computed using a suitable filter. The horizontal bars indicate an spatial averaging which is usually performed using a Gaussian window function. The main drawback of the structure tensor is that it does not perform well at the ends of streak structures. For this reason we decided to use a second-order tensor called Boundary Tensor for the low-level feature extraction. As described in detail by Köthe (2006), this tensor can be computed from the sum of a first- and a second-order band-pass Riesz transform b and A of an image.

From this tensor one can easily extract several invariants that will be used as streak features in the next steps. The tensor trace represents a boundary energy called boundary strength.

The local orientation θ and its energy E edge can be computed from the elements of \({\fancyscript{B}}\) as well.

Having these three features for every pixel in the input image, we can use this information to extract all streak structures and their intensities with sub-pixel precision. For a more detailed description of the boundary tensor and a characterization of its edge and boundary detection abilities, we refer to Köthe and Felsberg (2005) and Köthe (2006).

3.2 Iterative centerline extraction

The imaged gray-values, the boundary strength E boundary and the local orientation θ are used in the next step to perform a sub-pixel precise extraction of the centerline (CL) of each streak. The CL extraction routine stepwise lowers a threshold called sensitivity level. Each pixel that is contained in an already extracted streak is disabled. If now a pixel is above the sensitivity level and not disabled, the algorithm starts to extract the corresponding streak structure using 7 steps (cf. Fig. 3) that are repeated until the streak is completely extracted.

-

1.

Initialize the streak width with σ = 1, startPos = current position

-

2.

Extract the gray-values from a cross-sectional line b with elements \(b_i, i=1\dots N\) perpendicular to the streak structure (the direction of the streak structure is known from the local orientation θ computed using the boundary tensor).

-

3.

Use a nonlinear Levenberg--Marquard (LM) fit to estimate the offset A, the amplitude B and the width σ of a Gaussian bell-curve \(g(x) = A + B * {\text{exp}}(-\frac{(x -\mu)^2}{2 \sigma^2})\) on the gray-value profile along b. The initial guess for the parameters is: \(\tilde{\mu} = N/2; \tilde{A} = \min(b_i, \forall i=1\dots N); \tilde{B} = \max(b_i, \forall i=1\dots N)-A. \)

-

4.

The sub-pixel precise position of the CL is computed from the current pixel position and the position of the maximum μ of the Gaussian bell-curve. Since the LM fit considers multiple intensity values and a model of the intensity course of the scattered light, the amplitude B provides a much more reliable information on the streaks intensity at the position of the centerline.

-

5.

IF σ < threshold THEN Shift the current position by a predefined step sizes s = (0,1][px] in the direction of the local orientation and go to step 2 ELSE Go to the startPos and travel along the streak in the reverse direction until the width σ is larger than a certain threshold.

Principle of the iterative centerline extraction. The gray values are the original image data; black arrows show the local orientation θ weighted with the edgeness E edge computed from the boundary tensor \({\fancyscript{B}}\). The Gaussian bell-curves (green) are the result of a LM fit perpendicular to θ. The extrema of these bell-curves are used for a sub-pixel precise extraction of the structure’s centerline (CL)

The result is a list of sub-pixel precise coordinates of the CL position and the intensities at these positions. The achievable precision of the horizontal trajectory position is therefore better than the pixel-resolution of the object space imaged by the camera.

3.3 Frequency analysis

Since the frequency of the periodic intensity modulation of the alternating two-wavelength illumination can be set precisely to a constant value, it is possible to estimate the horizontal velocity of a particle from the spatial intensity signal along the corresponding streak structure in the image.

In the field of signal processing, many attempts to extract signal frequencies with the highest possible precision in the frequency and temporal domains were made. The most promising approaches are the Windowed Fourier Transform (sometimes also called short time Fourier Transform (STFT) (Gabor 1946), the Hilbert Huang Transform (HHT), which can be used to extract an instantaneous frequency and a instantaneous amplitude of the input signal (Smith 2007), and the Wigner-Ville Transform, which can be seen as a method for measuring the local time-frequency energy (Martin and Flandrin 1985). In order to obtain an instantaneous frequency information that enables the extraction of the particle velocity at every point along the streak, we perform a HHT on the intensity signal extracted along the streaks CL. Additionally, we make use of the instantaneous amplitude signal, since it contains information on the particle depth (cf. Fig. 2).

3.4 Hilbert transform

In its original form, the Hilbert transform (HT) was developed for the analysis of arbitrary time series of a variable s(t). In our approach, we apply it to the periodical intensity signal I(x) that is defined along the CL of a particle streak. The difference between the number of extrema and the number of zero-crossings in the signal has to be less than or equal to 1 in order to obtain a meaningful results from the Hilbert transform. Therefore, I(x) was corrected by subtracting a nonperiodical offset o(x) computed using an empirical mode decomposition (EMD). The HT itself is defined in its integral form to be

where P is the Cauchy principal value. The original signal I(x) together with its HT signal Y(x) forms a complex pair Z(x) called analytic signal. Z(x) can then be expressed in its polar form by an amplitude signal a(x) and a phase of the signal ϕ(x).

In this formula the amplitude is given by \(a=\sqrt{I(x)^2+Y(x)^2}\) and the phase of the signal by \(\phi(x)=\arctan\left(\frac{Y(x)}{I(x)}\right). \) The instantaneous frequency f(x) of the intensity signal I(x) is therefore given by the derivative of the phase signal

A more detailed description on the EMD and the HT can be found in the review paper by Huang et al. (1999). We can compute the instantaneous horizontal velocity v h (x) for each position along the streak from the known pixel size α and the known (constant) frequency of the intensity modulation of the light sources F using (8).

In Fig. 4 we visualized the result of the EMD and the HT for a single decelerating particle measured in the Rayleigh–Bénard experiment described in Sect. 4. In the upper plot one can clearly see that the deceleration causes an increase in the spatial frequency and the intensity. The change of the spatial frequency from \(f_1\approx 0.06\frac{1}{\hbox{px}}\) to \(f_2\approx0.15\frac{1}{\hbox{px}}\) corresponds to a velocity change from v h1 = 3 mm s−1 to v h2 = 1.2 mm s−1.

Frequency analysis of the intensity signal caused by a decelerating particle. Upper plot: Extracted intensity of a single streak is plotted as a function of the position on the centerline (blue crosses). The frequency of the oscillatory gray-value course along the CL of recorded streaks is directly connected with the horizontal particle velocity (8). From the EMD we obtain a nonperiodical offset o (red), an amplitude a and the phasing of the signal ϕ. The green and yellow lines indicate the connection between these signal properties. Lower plot: The spatial derivation of signal’s phasing is the instantaneous frequency. Both ends of the frequency signal show artifacts, which are a result of boundary effects of the EMD and the convolution with the Hilbert kernel

3.5 Depth reconstruction

For a bichromatic depth extraction, the intensity of the reflected light from both wavelengths is needed. Since the light sources are activated in an alternating manner, the pure signal of each wavelength can be obtained from the extrema of the intensity signal. The remaining signal is always a superposition of both wavelengths. To enable a depth extraction for each point along the streak, we use the offset o(x) from the EMD and the amplitude signal a(x) computed from the analytic signal in (14). In order to obtain a continuous depth signal along the streak, we used (2) and inserted the sum of amplitude and offset (cf. Fig. 4).

where \(\kappa = \frac{z_{*1}z_{*2}}{z_{*1}+z_{*2}}\) and \(\gamma=\ln\left(\frac{I_{02}}{I_{01}}\right)\) are constants that depend on the dye concentration, the intensities of the illumination signals and their wavelengths. Due to the fact that a precise estimation of γ and κ has a direct influence on the quality of the depth estimation, we developed a calibration routine that is applied prior to each measurement (cf. Sect. 2). As a result, the corresponding relative measurement error made with the used wavelength--dye combination can be approximated to be 5 % of the smaller penetration depth z *2.

3.6 Construction of lagrangian trajectories

To solve the problem of directional ambiguity, described in Sect. 1, we make use of information from the previous and the following bPSV image in a sequence. We track the trajectories of single particles through multiple exposures of an image sequence in an iterative algorithm. The problem of finding corresponding streaks in subsequent frames can be a challenging task (Rosenstiel and Rolf-Rainer Grigat 2010). In this paper, we propose the use of a heterogeneous Mahalanobis distance measure (17) to track a particle along its trajectory through a set of images. The width σ and the orientation at the streak end, extracted from the local orientation θ, are used to define a proper distance measure and threshold a feasible trajectory matching between consecutive frames. This allows us to successfully perform a robust matching in most cases.

In a first step of the matching algorithm, both streak ends \({\fancyscript{E}_1= \{{\bf e}_1, \sigma_1, \phi_1\}}\) and \({\fancyscript{E}_2 =\{{\bf e}_2, \sigma_2, \phi_2\}}\) at the end-point positions e 1 and e 2 are inspected. Based on the given features a distance metric \(d\left(\cdot, \cdot\right)\) between two arbitrary streak endings k and l is defined by

For a visualization of this adaptive end-point distance metric, Fig. 5 shows a single equidistant line (red line). One can clearly see that the orientation of the main axis of the ellipsoid formed by the equidistant line is inclined by the local orientation ϕ l . The scaling of this distance metric in both directions (ϕ l and ϕ l + 90°) is given by two independent parameters based on the streak features. W is the perpendicular scaling parameter that depends on the streak width σ and is defined to be \(W = \frac{\sigma_k + \sigma_l}{2}\). In streak direction the scaling parameter L was chosen to be proportional to the streak length. By using this adaptive distance metric, much larger Euclidean distances in streak direction than perpendicular to θ k can be selected for matching of two streak ends. A distance threshold of \(d(\cdot,\cdot) \leq d_t\) is applied for discarding incorrect pairs of streaks. This approach makes successful matching feasible at higher particle densities than possible for nearest neighbor matching with an isotropic distance metric.

The Mahalanobis distance metric (17) is defined by an angle θ, a width scaling W and a length scaling L. Since it forms ellipsoidal equidistant lines the threshold for a feasible assignment d t also forms an ellipse (red line). In the matching routine a pair is assumed to be a feasible matching if their end-point distance is smaller than d t

A recorded streak image sequence can now be used to assemble a structure termed trajectory successively from the individual streaks. In this iterative matching routine only streaks with a distance smaller than d t were matched to form a trajectory. Gaps present between single streaks in a trajectory are closed by fitting a spline to them. This results in a continuous functional description of all particle features such as horizontal position, depth and velocity along the whole trajectory. To prepare the spline fit, we combine the horizontal information obtained in the middle-line extraction and the depth to a trajectory position x(x) = (x 1, x 2, z). The horizontal velocity v h (x) from the Hilbert Huang transform (8) and the trajectory position vector x are then fitted by means of a smoothing spline (Hastie 2003). Afterward, an iterative sampling routine is used to compute the Lagrangian position \({\tilde{\bf x}}(t)\) and the Lagrangian velocity \(\tilde{v}_h(t)\) from the vectors x(x) and v h (x). This is achieved by adding a time dependence to the position on the centerline \(x \in CL\). Starting with t 0 = 0 at the first point of a trajectory \(\tilde{x}(t=0)=x(0), \) the Lagrangian variables are sampled in small, equidistant time steps \(t_{\epsilon}\) along the middle-line position x in the following way.

In addition to the three-dimensional Lagrangian particle position vector \({\tilde{\bf x}}(t)\) a three-component velocity vector is computed by decomposing v h (t) into v 1 and v 2. The third velocity component is therefore given by the temporal derivative of the depth v 3 = dz/dt.

After the construction of each Lagrangian trajectory, we use the horizontal velocity from (20) for a self-validation. By integrating \(\tilde{v}_h(t)\) over time, we compare the obtained trajectory length with the sum over the lengths of all streaks corresponding to the trajectory. A large difference between these two measures indicates an error in the velocity extraction or an incomplete trajectory. For the following experiments, we allow a maximal deviation of 5 %, all trajectories that are above this threshold are rejected.

3.7 Lagrangian two-particle dispersion

When it comes to the analysis of turbulent processes based on Lagrangian statistics, one of the most intuitive approaches is the observation of particle distances and their progression through time (Boffetta et al. 2000). In the special case of the two-particle dispersion, sometimes also called pair dispersion, one observes the distance vectors R between a set of particle pairs that start at time t 0 with an initial separation r 0 = |R(0)|.

In this formula \({\tilde{\bf x}}_i(t)\) is the Lagrangian particle position of particle i. Averaging the squared absolute value of the distance over many Lagrangian trajectories

was used as a statistical feature for the description of turbulent processes in many theoretical, numerical and experimental studies (Emran and Schumacher 2010; Ott and Mann 2000; Bewley et al. 2008). Already Richardson (1926) described a statistical scaling behavior of R(t) for isotropic turbulences that predicted a t 3 proportionality to occur after a certain time t b . For shorter timescales, when R(0) and R(t) are within the so-called dissipation subrange, Batchelor (1952) found a t 2 scaling behavior in the so-called Batchelor regime. Both scaling laws are valid for particle pairs with an inertial separation within the so-called inertial subrange, that is, particles with a r 0 in between the Kolmogorov scale η K and the integral length scale L. As a result of the improvement of numerical and experimental methods in the field of turbulent fluid dynamics, many recent studies address these scaling properties. For deeper insights and a list of recent publications on this topic, we refer the review of Salazar and Collins (2009).

For the computation of Lagrangian particle pairs, we analyzed the whole set of trajectories that results from a image sequence. Out of all possible trajectory pairs, we extracted those which had a distance \(|{\tilde{\bf x}}_1(t_p)-{\tilde{\bf x}_2}(t_p)| =r_0\) at some point in time t p . From this point in time until the trajectory pair not longer coexists, this particle pair contributes to the Lagrangian average \(\langle.\rangle_L\) in (22).

As mentioned by Ott and Mann (2000), it is desirable to choose a small initial separation r 0 which introduces a dilemma, because choosing a smaller r 0 means reducing the number of valid particle pairs and causes a smaller sample size in the Lagrangian statistic. For the Lagrangian two-particle statistics extracted in the course of this study, we computed the pair dispersion for initial distances within η K ≪ r 0 ≪ 10η k .

4 Experiments

4.1 Benchmark datasets

To validate the velocity estimation, a set of benchmark datasets proposed by Berthe et al. (2010) were used. These datasets were measured using a particle mounted on an automated three-dimensional traversing system in a dyed liquid. The particle was moved on different trajectories and recorded by a single camera recording short-exposure PIV data. From each PIV image sequence containing N images I t with \(t=0 \ldots N-1\) with gray-values i x,y,t , a single PSV image was computed by integrating through time and modulating the image intensities with a time-dependent, sinusoidal function.

In this equation \(\zeta [\hbox{Hz}]\) is the PIV camera frame-rate, F [Hz] is the simulated illumination frequency, and t is the number of the current time steps. As shown in Fig. 6, the integrated PSV data look similar to the data obtained in our long-exposure PSV measurements (cf.: Fig. 1). The illumination frequency F was varied in a range where the number of periods along the streak was larger than two (to obtain a robust frequency signal) and that the wavelength on the streak was at least 10 px long [to avoid errors due to the Nyquist-Shannon sampling theorem (Nyquist 1924)]. The intensity modulation frequency F for the circular measurement #1, where the particle was moving with a constant velocity of v = 25 mm s−1, was varied between 0.2 and 2.0 Hz. The slower linear measurements #2–#5, where the particles were moved with v = 9.1 mm s−1, were varied between 0.2 and 1.4 Hz.

Streak-extraction results on PIV measurements by Berthe et al. (2010), integrated using equation (23) to obtain streak images. The blue lines show the extracted middle line. In the validation experiment the illumination frequency was varied to validate the performance of the algorithm for different spatial frequencies along the streaks

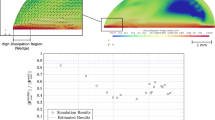

Results The results on these semi-artificial dataset indicate that the proposed algorithm enables the extraction of horizontal particle trajectories as well as their horizontal velocity based on a frequency analysis of the reflected light from an intensity-modulated light source. As summarized in Table 1, the extracted average velocity matches very well the ground truth velocity given by the speed of the traversing unit. For the circular dataset as well as for the linear particle tracks shown in Fig. 6, the standard deviation of the extracted velocity values along the streak is smaller than 0.5 % (cf. Fig. 7).

Result of the velocity extraction on the datasets #1–#5. Equation (23) was used to simulate different illumination frequencies between 0.2 and 2 Hz. The y-axis on the right side belongs to the circular dataset #1 and the axis on the left to the four linear ones (#2–#5)

The distributions of the datasets #2 to #5 are shown in a box-and-whisker diagram provided with the supplementary data of this paper.

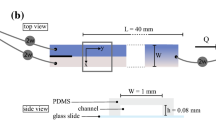

4.2 Rayleigh–Bénard convection experiment

To prove the applicability of the proposed technique in a real-world measurement, bPSV datasets in the water-sided boundary layer of a turbulent Rayleigh–Bénard (RB) convection were recorded. In the field of fluid mechanics, convection-driven turbulent flows belong to the best-studied flow fields. In various studies RB-convection was analyzed based on Eulerian (Lohse and Xia 2010) and Lagrangian statistics (Schumacher 2009; Emran and Schumacher 2010; Sreenivasan and Schumacher 2009; Gasteuil et al. 2007). Since bPSV measurements provide densely sampled, Lagrangian information about position and velocity, we focus on a Lagrangian interpretation of the measured data.

Experimental setup.

For the generation of a well-defined turbulent RB-convection, a rectangular vessel with the dimensions (H = 147mm, L = 405mm, W = 415mm) was built from 3.3-mm-thick BOROFLOAT® glas. For the heating we mounted a mirrored box with two infrared heating tubes (Heraeus Noblelight, Art.Nr.: 45132877) with P max = 1kW and \(T_{{{\text{max}}}} = 1.2 \times 10^{3} \;^{^\circ } {\text{C}}\) each under the vessel. A sketch of the experimental setup is shown in Fig. 8. Due to the high transmission of 93 % at wavelengths between 2.0 and 2.7 nm, most of the infrared light penetrates the bottom plate of the vessel and is absorbed within the first millimeter of the water. The cooling at the water surface is mostly due to evaporation and radiation. In all experiments the vessel was filled up to a height of \(\tilde{H} = 50\hbox{mm}\) and the heating power was set to 945W. Measurements were taken once the system reached its state of equilibrium at a water temperature of T≈51°C. The temperature difference \(\Updelta T=23.6\,^\circ\hbox{C}\pm 0.3\,^\circ\hbox{C}\) between the bottom plate and the air directly above the water surface was measured by a Pt100 thermo sensor using a GMH 3710 thermometer (GREISINGER electronic GmbH). All temperatures were averaged over several minutes. The absorbing dye Tartrazine was added to the liquid from a stock solution with 1g Tartrazine per liter, to obtain a concentration of 12 mg l−1 Tartrazine in the water. This concentration enables a particle extraction down to the depth of 10mm below the interface. Every pixel of the 5Mpx sensor imaged a square of 30 μm x 30 μm in the interfacial region. From the pixel size and the penetration depth, the spatial uncertainty of the method can be approximated to be \(\Updelta x = \Updelta y \leq 30\,\mu\hbox{m}\) and \(\Updelta z \leq 500\,\mu\hbox{m}\). For the seeding we used neutrally buoyant silver-coated hollow ceramic spheres with a mean diameter of 100 μm and a mean density of 0.9 ± 0.3 g cm3 (Potters Industries Inc. Conduct-O-Fil® AGSL150-16TRD). These tracer particles were sorted in advance based on their sedimentation behavior in water to obtain a narrower density distribution. During the experiment, the experimental setup was covered with light-absorbing material to ensure that the light that is reflected by the particles only originates from the LED arrays used.

Scaling Parameters-To enable a comparison of different flow phenomena and to characterize turbulent flow field, a set of scaling parameter can be introduced. Most important in this context is the Rayleigh number, which is used to relate the convective heat transfer in a buoyancy-driven flow field to the conductive heat transfer. For a turbulent Rayleigh–Bénard flow field it is defined by

In this equation, α is the isobaric thermal expansion coefficient, g represents the gravitational acceleration, \(\Updelta T\) is the temperature difference between the heated bottom of the vessel and the free water surface, and κ is the thermal diffusivity. In equilibrium the Rayleigh–Bénard convection obtained in this study falls in a Rayleigh number regime of \(Ra \approx 7.4\,\times\,10^{10}\). The second important scaling parameter analysis of convection-driven turbulent flow fields is the dimensionless Reynolds number

which combines a characteristic length scale l, a characteristic velocity u and the kinematic viscosity ν (Tennekes and Lumley 1992). This characteristic number is the ratio of inertial forces (defined by the product ul) to the viscous forces. In this approximation l, which is sometimes also called integral scale, is given by the size of the largest eddies, while u corresponds to their velocity. As described by Taylor (1935), the rate of energy that is supplied by large-scale motions and generates a cascade of smaller-scale eddies that are at a certain scale dissipated by viscous processes can be estimated from the observed large-scale dynamics following

Using this scale relation, one can also approximate the well-known Kolmogorov microscale of length η K and time τ K using (25) and (26).

In the present study we estimated the characteristic length of the large-scale eddies l by visual inspection from the measured image series, and the characteristic velocity was approximated from the root mean squared (rms) velocity of the fastest 10 % of all measured trajectories. Slower trajectories, corresponding to small-scale turbulences, were ignored in this averaging. Together with the kinematic viscosity of water ν55 °C = 0.511 × 10−6 m2 s−1 (Kestin et al. 1978), the measured turbulent Rayleigh–Bénard convection can be characterized by Re = 98, \(\eta_K= 3.2\cdot 10^{-4}\hbox{m}\) and τ K = 200ms.

An analysis of the extracted particle velocity distributions and the acceleration distributions showed no evidence for a corrupting influence of the previously mentioned systematic bias toward high velocities. Nonetheless, this special topic is to be addressed in further studies.

Lagrangian statistics Among all Lagrangian statistical measures of turbulent flow fields that were studied in the past, the particle dispersion is one of the most prominent characteristics. In this contribution we focus on the most simple particle dispersion measure called the pair dissipation. As described in the previous section, the pair dispersion \(\langle |R(t) - R(0)|^2\rangle_L\) is computed from a set of bPSV trajectories on the basis of an initial pair separation R(0). Based on the theoretical studies by Richardson (1926) and Batchelor (1952), the two-particle dispersion in an isotropic and homogeneous turbulent flow has a t 2 behavior in the so-called Batchelor regime for times t ≪ t b . In this regime, the dissipation behavior is strongly influenced by dispersion processes. This regime is followed by the Richardson--Obukhov (R–O) regime, where the dispersion behavior is described by the Richardson--Obukhov (R–O) t 3 law.

In this equation C 2 and g 3d are constants and t b is the Batchelor time, where the transition from the Batchelor regime to the (R–O) regime takes place. The time T L corresponds to the Lagrangian integral time scale. For a detailed description theory behind the R–O law and the dissipation behavior of Lagrangian particle pairs, we refer to the recently published review from Salazar and Collins (2009). At this point we want to point out that the observed interfacial turbulence is far from being homogeneous and isotropic. On the one hand, the presence of an interface restricts the size of the eddies in the boundary region. This causes a higher number of small-scale eddies in regions near the interface. On the other hand, the convection is anisotropic because of the vertical temperature gradient. Nonetheless, a transition from the Bachelor regime to the R–O regime can be observed in the Lagrangian statistics of the measured interfacial flow-filed. Figure 10 shows the two-particle dispersion of one turbulent Rayleigh–Bénard measurement normalized by t −3. As a result of this normalization, the Batchelor regime is represented by a − 1 slope, while the R–O regime results in a plateau for particle dissipation with a small inertial pair separation.

Sketch of the convection vessel used to generate the turbulent RB-convection. The side walls and the bottom plate (a) consist of 3.3-mm-thick BOROFLOAT\(^{\circledR}\) glass, which is nearly transparent for the infrared light, radiated from the heating tubes (d). All sidewalls (c) of the box that contains the heating elements are mirrored to reflect the infrared light to the bottom plate of the vessel where it is absorbed in the first few millimeter of the water-body (b), since water behaves like a black-body for infrared light

3D particle pair dispersion as a function of the time scaled with the Kolmogorov timescale for four initial pair separations (R(0) = 3.8η K , 4.6η K , 6.5η K , 9.4η K ). The pair dispersion was normalized by t −3. For small initial pair separations r 0, three regimes are clearly distinguishable. The dissipation subrange corresponds to the ∼ t −1 part, the inertial subrange is represented by the plateau in the curves of small inertial pair separation R(0) (gray box), followed by the diffusion subrange

5 Conclusion

The bichromatic particle streak velocimetry (bPSV) method represents a highly accurate and simple-to-use measurement technique for extracting Lagrangian 3D3C flow information in turbulent interfacial flow fields. This new measurement technique relies only on one camera and extracts particle positions relative to the fluid interface. Horizontal position and velocity of the particle trajectories are extracted from long-exposure images using a harmonically modulated illumination to code temporal information along the recorded particle streaks. The vertical information is obtained from a bichromatic depth extraction using an absorbing dye and a dual-wavelength illumination. The approach is highly robust to a wide range of encountered velocities and illumination frequencies. This was shown on a previously published PIV benchmark dataset (Berthe et al. 2010). The deviation of the extracted mean velocity from the ground truth lies within the standard deviation of lower than 0.5 % of the velocity.

Also, the interfacial flow field of a turbulent Rayleigh–Bénard convection was analyzed using bPSV. In this regime, the turbulence is highly anisotropic. Previously, only limited experimental data existed for this type of forced turbulence. Pair dispersion rates for different inertial pair separations were extracted from the measured Lagrangian trajectories. In the Lagrangian statistics, a clear transition from an inertial subrange which corresponds to a Batchelor regime with a t 2 scaling behavior to a t 3 in the Richardson regime was found. Both regimes were originally defined for isotropic turbulence. Our studies confirm the applicability of this theory in the anisotropic case as well. These results clearly demonstrate the accuracy and applicability of bPSV for research in interfacial flows. Currently, the only limitation of the approach arises at time-dependent, freely moving interfaces due to refraction processes taking place. In a next step, we will address these issues. bPSV will thus become a “new stone in the wall” for fluid flow measurements in challenging interfacial flows.

References

Adamczyk A, Rimai L (1988) 2-Dimensional particle tracking velocimetry (PTV): technique and image processing algorithms. Exp Fluids 6:373–380

Adrian R (1991) Particle-imaging techniques for experimental fluid mechanics. Ann Rev Fluid Mech 23:261–304

Banner ML, Peirson WL (1998) Tangential stress beneath wind-driven air water interfaces. J Fluid Mech 364:115–145

Batchelor GK (1952) The effect of homogeneous turbulence on material lines and surfaces. Proc R Soc Lond A Math Phys Sci 213(1114):349–366. doi:10.1098/rspa.1952.0130

Berthe A, Kondermann D, Garbe C, Affeld K, Jäne B, Ketzscher U (2009) The wall piv measurement technique for near wall flow fields in biofliud mechanics. In: Nitsche W, Dobriloff C (eds) Imaging measurement methods for flow analysis, vol 106. Springer, Berlin, pp 11–20, doi:10.1007/978-3-642-01106-1_2

Berthe A, Kondermann D, Christensen C, Goubergrits L, Garbe C, Affeld K, Kertzscher U (2010) Three-dimensional, three-component wall-piv. Exp Fluids 48. doi:10.1007/s00348-009-0777-4

Bewley G, Sreenivasan K, Lathrop D (2008) Particles for tracing turbulent liquid helium. Exp Fluids 44(6):887–896

Bigun J (2006) Vision with direction a systematic introduction to image processing and computer vision. Springer, Berlin. doi:10.1007/b138918

Biwole PH, Yan W, Zhang Y, Roux J (2009) A complete 3D particle tracking algorithm and its applications to the indoor airflow study. Meas Sci Technol 20(11):115,403. doi:10.1088/0957-0233/20/11/115403

Boffetta G, Celani A, Cencini M, Lacorata G, Vulpiani A (2000) Nonasymptotic properties of transport and mixing. Chaos 10(1):50–60

Brücker C (1995) Digital-particle-image-velocimetry (dpiv) in a scanning light-sheet: 3D starting flow around a short cylinder. Exp Fluids 19:255–263

Brücker C (1996) 3D PIVvia spatial correlation in a color-coded light-sheet. Exp Fluids 21:312–314

Budwig R (1994) Refractive index matching methods for liquid flow investigations. Exp Fluids 17:350–355, http://dx.doi.org/10.1007/BF01874416. doi:10.1007/BF01874416

Burgmann S, Dannemann J, Schröder W (2008) Time-resolved and volumetric piv measurements of a transitional separation bubble on an sd7003 airfoil. Exp Fluids 44:609–622. doi:10.1007/s00348-007-0421-0

Cenedese A, Paglialunga A (1989) A new technique for the determination of the third velocity component with piv. Exp Fluids 8:228–230. doi:10.1007/BF00195799

Debaene P, Kertzscher U, Gouberits I, Affeld K (2005) Visualization of a wall shear flow. J Vis 8(4):305-313

Dickey TD, Hartman B, Hurst E, Isenogle S (1984) Measurement of fluid flow using streak photography. Am J Phys 52:216-219. doi:10.1119/1.13695

Dimotakis PE, Debussy FD, Koochesfahani MM (1981) Particle streak velocity field measurements in a two-dimensional mixing layer. Phys Fluids 24:995–999. doi:10.1063/1.863481

Dinkelacker F, Schäfer M, Ketterle W, Wolfrum J, Stolz W, Köhler J (1992) Determination of the third velocity component with PTA using an intensity graded light sheet. Exp Fluids 13:357–359. doi:10.1007/BF00209511

Dixon L, Cheong FC, Grier DG (2011) Holographic particle-streak velocimetry. Opt Express 19(5):4393–4398. doi:10.1364/OE.19.004393

Elsinga GE, Scarano F, Wieneke B (2006) Tomographic particle image velocimetry. Exp Fluids 41:933–947

Emran MS, Schumacher J (2010) Lagrangian tracer dynamics in a closed cylindrical turbulent convection cell. Phys Rev E 82:016303. doi:10.1103/PhysRevE.82.016303

Fage A, Townend HCH (1932) An examination of turbulent flow with an ultramicroscope. Proc R Soc Lond Ser A 135(828):656–677. doi:10.1098/rspa.1932.0059

Gabor D (1946) Theory of communication. J IEE 93:429–446

Gasteuil Y, Shew WL, Gibert M, Chillá F, Castaing B, Pinton JF (2007) Lagrangian temperature, velocity, and local heat flux measurement in Rayleigh-Bénard convection. Phys Rev Lett 99:234,302. doi:10.1103/PhysRevLett.99.234302

Hastie T (2003) The elements of statistical learning. Data mining, inference, and prediction. Springer, Berlin

Hinsch KD (2002) Holographic particle image velocimetry. Meas Sci Technol 13:R61–R72

Hoyer K, Holzner M, Lüthi B, Guala M, Liberzon A, Kinzelbach W (2005) 3D scanning particle tracking velocimetry. Exp Fluids 39:923–934. doi:10.1007/s00348-005-0031-7

Huang NE, Shen Z, Long SR (1999) A new view of nonlinear water waves. Annu Rev Fluid Mech 31:417–457

Jehle M, Jähne B (2008) A novel method for three-dimensional three-component analysis of flow close to free water surfaces. Exp Fluids 44:469–480. doi:10.1007/s00348-007-0453-5

Kertzscher U, Affeld K, Goubergrits L, Schade M (1999) A new method to measure the wall shear rate in models of blood vessels. In: Proceedings of the European medical & biological engineering conference, Wien, Österreich, November 4–7, Suppl2, pp 1412–1413

Kestin J, Sokolov M, Wakeham WA (1978) Viscosity of liquid water in the range −8°C to 150°C. J Phys Chem Ref Data 7:941–948

Kähler C, Kompenhans J (2000) Fundamentals of multiple plane stereo particle image velocimetry. Exp Fluids 29:70–77

Köthe U (2006) Low-level feature detection using the boundary tensor. In: Visualization and processing of tensor fields, Springer, Berlin, pp 63–79

Köthe U, Felsberg M (2005) Riesz-transforms versus derivatives: on the relationship between the boundary tensor and the energy tensor. In: Kimmel R, Sochen N, Weickert J (eds) Scale space and PDE methods in computer vision, Springer, Berlin, pp 179–191

Law CNS, Khoo BC, Chew TC (1999) Turbulence structure in the immediate vicinity of the shear-free air-water interface induced by a deeply submerged jet. Exp Fluids 27:321–331. doi:10.1007/s003480050356

Leue C, Hering F, Geißler P, Jähne B (1996) Segmentierung von Partikelbildern in der Strämungsvisualisierung. In: Jähne B, Geibßler P, Haußecker H, Hering F (eds) Proceedings of 18th DAGM-symposium Mustererkennung, Informatik Aktuell, pp 118–129

Liberzon A, Gurka R, Hetsroni G (2004) XPIV-multi-plane stereoscopic particle image velocimetry. Exp Fluids 36:355–362

Liu T, Sullivan J (1998) Luminescent oil-film skin-friction meter. AIAA J 36(8):1460–1465

Lohse D, Xia KQ (2010) Small-scale properties of turbulent Rayleigh-Bénard convection. Annu Rev Fluid Mech 42(1):335–364

Martin W, Flandrin P (1985) Wigner-ville spectral analysis of nonstationary processes. IEEE Trans Acoust Speech Signal Process 33(6):1461–1470. doi:10.1109/TASSP.1985.1164760

McGregor T, Spence D, Coutts D (2007) Laser-based volumetric colour-coded three-dimensional particle velocimetry. Opt Lasers Eng 45(8):882–889

Müller D, Müller B, Renz U (2001) Three-dimensional particle-streak tracking (PST) velocity measurements of a heat exchanger inlet flow. Exp Fluids 30(6):645–656. doi:10.1007/s003480000242

Nyquist H (1924) Certain factors affecting telegraph speed. Bell Syst Tech J 3:324

Ott S, Mann J (2000) An experimental investigation of the relative diffusion of particle pairs in three-dimensional turbulent flow. J Fluid Mech 422:207–223

Pereira F, Gharib M, Dabiri D, Modarress M (2000) Defocusing PIV: a three component 3D DPIV measurement technique. Application to bubbly flows. Exp Fluids 29:S78–S84

Pereira F, Lu J, Casta no Graff E, Gharib M (2007) Microscale 3D flow mapping with μDDPIV. Exp Fluids 42:589–599. doi:10.1007/s00348-007-0267-5

Prandtl L (1957) Strömungslehre. Vieweg, Braunschweig

Prasad AK (2000) Stereoscopic particle image velocimetry. Exp Fluids 29:103–116

Richardson LF (1926) Atmospheric diffusion shown on a distance-neighbour graph. Proc R Soc Lond Ser A 110(756):709–737. doi:10.1098/rspa.1926.0043

Rosenstiel M, Rolf-Rainer Grigat (2010) Segmentation and classification of streaks in a large-scale particle streak tracking system. Flow Meas Instrum 21(1):1–7. doi:10.1016/j.flowmeasinst.2009.10.001

Salazar JPLC, Collins LR (2009) Two-particle dispersion in isotropic turbulent flows. Ann Rev Fluid Mech 41(1):405. doi:10.1146/annurev.fluid.40.111406.102224

Scholzen F, Moser A (1996) Three-dimensional particle streak velocimetry for room airflow with automatic stereo-photogrammetric image processing. In: Proceedings of 5TH international conference on air distribution in rooms, ROOMVENT ’96, pp 555–562

Schröder A, Geisler R, Elsinga G, Scarano F, Dierksheide U (2008) Investigation of a turbulent spot and a tripped turbulent boundary layer flow using time-resolved tomographic piv. Exp Fluids 44:305–316. doi:10.1007/s00348-007-0403-2

Schumacher J (2009) Lagrangian studies in convective turbulence. Phys Rev E 79:056301. doi:10.1103/PhysRevE.79.056301

Sellent A, Eisemann M, Goldlücke B, Cremers D, Magnor M (2010) Motion field estimation from alternate exposure images. IEEE Trans Pattern Anal Mach Intell (TPAMI)

Sheng J, Malkiel E, Katz J (2008) Using digital holographic microscopy for simultaneous measurements of 3d near wall velocity and wall shear stress in a turbulent boundary layer. Exp Fluids 45:1023–1035. doi:10.1007/s00348-008-0524-2

Sinha SK, Kuhlman PS (1992) Investigating the use of stereoscopic particle streak velocimetry for estimating the three-dimensional vorticity field. Exp Fluids 12:377–384. doi:10.1007/BF00193884

Smith JO (2007) Mathematics of the discrete fourier transform (DFT). W3K Publishing, Stanford

Sreenivasan KR, Schumacher J (2009) Lagrangian views on turbulent mixing of passive scalars. Philos Transact R Soc A Math Phys Eng Sci 368(1916):1561–1577

Taylor GI (1935) Statistical theory of turbulence. Proc R So Lond A Math Phys Sci 151(873):421–444. doi:10.1098/rspa.1935.0158

Tennekes H, Lumley JL (1992) A first course in turbulence, 14th edn. MIT Press, Cambridge, first Edition (1972)

Turney DE, Anderer A, Banerjee S (2009) A method for three-dimensional interfacial particle image velocimetry (3D-IPIV) of an air-water interface. Meas Sci Technol 20(4):045403; 1–12. doi:10.1088/0957-0233/20/4/045403

Wernet MP (2004) Planar particle imaging doppler velocimetry: a hybrid piv/dgv technique for three-component velocity measurements. Meas Sci Technol 15(10):2011

Willert CE, Gharib M (1992) Three-dimensional particle imaging with a single camera. Exp Fluids 12:353–358

Wung T, Tseng F (1992) A color-coded particle tracking velocimeter with application to natural convection. Exp Fluids 13(4):217–223

Zibret D, Bailly Y, Cudel C, Prenel J (2003) Direct 3d flow investigations by means of rainbow volumic velocimetry (rvv). Proc PSFVIP 4(3):5

Acknowledgments

We gratefully acknowledge the financial support by the DFG Graduate College GRK1114 “Optische Messtechniken für die Charakterisierung von Transportprozessen an Grenzflächen.”

Open Access

This article is distributed under the terms of the Creative Commons Attribution License which permits any use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.

Author information

Authors and Affiliations

Corresponding author

Electronic supplementary material

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 2.0 International License (https://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Voss, B., Stapf, J., Berthe, A. et al. Bichromatic particle streak velocimetry bPSV. Exp Fluids 53, 1405–1420 (2012). https://doi.org/10.1007/s00348-012-1355-8

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00348-012-1355-8