Abstract

We consider the stochastic dynamics of a population which starts from a small colony on a habitat with large but limited carrying capacity. A common heuristic suggests that such a population initially grows as a Galton–Watson branching process and then its size follows an almost deterministic path until it reaches the maximum sustainable by the habitat. In this paper we put forward an alternative and, in fact, more accurate approximation which suggests that the population size behaves as a special nonlinear transformation of the Galton–Watson process from the very beginning.

1 Introduction

1.1 The model

A large population often starts from a few individuals who colonize a new habitat. Initially, in abundance of resources and lack of competition it grows rapidly until reaching the carrying capacity. Then the population fluctuates around the carrying capacity for a very long period of time, until, by chance, it eventually dies out, see, e.g., Haccou et al. (2007); Hamza et al. (2016).

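This cycle is captured by a density dependent branching process \(Z=(Z_n, n\in {\mathbb {Z}}_+)\) generated by the recursion

$$\begin{aligned} Z_n = \sum _{j=1}^{Z_{n-1}} \xi _{n,j}, \quad n\in {\mathbb {N}}, \end{aligned} \tag{1}$$

started at an initial colony size \(Z_0\). The random variables \(\xi _{n,j}\) take integer values and, for each \(n\in {\mathbb {N}}\), are conditionally i.i.d. given all previous generations

$$\begin{aligned} {\mathcal {F}}_{n-1} = \sigma \{\xi _{m,j}: m<n,\ j\in {\mathbb {N}}\}. \end{aligned}$$

The object of our study is the density process of the population \({{\overline{Z}}}_{n}:= Z_{n}/K\) relative to the carrying capacity parameter \(K>0\). The common distribution of the random variables \(\xi _{n,j}\) is assumed to depend on the density \({{\overline{Z}}}_{n-1}\):

$$\begin{aligned} {\textsf{P}}(\xi _{n,j}=\ell \,|\,{\mathcal {F}}_{n-1}) = p_\ell ({{\overline{Z}}}_{n-1}), \quad \ell \in {\mathbb {Z}}_+, \end{aligned} \tag{2}$$

and is determined by the functions \(p_\ell :{\mathbb {R}}_+\mapsto [0,1]\).

Both processes Z and \({{\overline{Z}}}\) are indexed by K, but this dependence is suppressed in the notation. The mean and the variance of the offspring distribution when the density process has value x are denoted by

$$\begin{aligned} m(x) = \sum _{\ell =0}^\infty \ell \, p_\ell (x), \qquad \sigma ^2(x) = \sum _{\ell =0}^\infty \big (\ell -m(x)\big )^2 p_\ell (x), \end{aligned} \tag{3}$$

assumed to exist. Consequently,

$$\begin{aligned} {\textsf{E}}(\xi _{n,j}\,|\,{\mathcal {F}}_{n-1}) = m({{\overline{Z}}}_{n-1}), \qquad {\textsf{Var}}(\xi _{n,j}\,|\,{\mathcal {F}}_{n-1}) = \sigma ^2({{\overline{Z}}}_{n-1}). \end{aligned}$$

If the offspring mean function satisfies

$$\begin{aligned} m(x) {\left\{ \begin{array}{ll} >1, &{} x<1,\\ =1, &{} x=1,\\ <1, &{} x>1, \end{array}\right. } \end{aligned} \tag{4}$$

the process Z has supercritical reproduction below the capacity K, critical reproduction at K and subcritical reproduction above K. Thus a typical trajectory of Z grows rapidly until it reaches the vicinity of K. It then stays there, fluctuating for a very long period of time, and eventually goes extinct if \(p_0(x)>0\) for all \(x\in {\mathbb {R}}_+\). Thus the lifespan of such a population roughly divides into the emergence stage, at which the population establishes itself, the quasi-stationary stage around the carrying capacity, and the decline stage, which ends with inevitable extinction.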
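The recursion for Z can be tried out in a short simulation. The sketch below is only an illustration under assumptions that are not part of the text above: the offspring law is taken to be geometric on {0, 1, 2, ...} with mean \(m(x)=\rho ^{1-x}\) and \(\rho =2\) (so that \(m(0)=\rho \) and \(m(1)=1\)), and the names `mean_offspring`, `sample_offspring` and `simulate`, as well as the values of K and the seed, are hypothetical.

```python
import math
import random

RHO = 2.0  # assumed offspring mean at zero density, m(0)

def mean_offspring(x):
    """Assumed mean m(x) = rho**(1 - x), so m(0) = rho and m(1) = 1."""
    return RHO ** (1.0 - x)

def sample_offspring(x, rng):
    """Draw from the geometric law on {0, 1, 2, ...} with mean m(x)."""
    m = mean_offspring(x)
    q = m / (1.0 + m)                 # P(offspring = l) = (1 - q) * q**l
    u = 1.0 - rng.random()            # uniform on (0, 1]
    return int(math.log(u) / math.log(q))

def simulate(K, z0=1, generations=40, seed=1):
    """Return [Z_0, ..., Z_generations] for carrying capacity K."""
    rng = random.Random(seed)
    z = z0
    path = [z]
    for _ in range(generations):
        x = z / K                     # current density
        z = sum(sample_offspring(x, rng) for _ in range(z))
        path.append(z)
    return path

if __name__ == "__main__":
    for seed in range(5):
        print(seed, simulate(K=200, generations=25, seed=seed)[::5])
```

Depending on the seed, a run either dies out within the first few generations or climbs to the vicinity of K and stays there, in line with the qualitative picture described above.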

Remark 1

While (4) is typical for populations with quasi stable equilibrium at the capacity, it is not needed in the proofs and will not be assumed in what follows.

1.2 Large initial colony

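A more quantitative picture can be obtained by considering the dynamics of the density process, derived from (1) by setting \(f(x):=xm(x)\), dividing by K and rearranging:

$$\begin{aligned} {{\overline{Z}}}_n = f({{\overline{Z}}}_{n-1}) + \frac{1}{K}\sum _{j=1}^{Z_{n-1}} \big (\xi _{n,j}-m({{\overline{Z}}}_{n-1})\big ). \end{aligned} \tag{5}$$

The second term on the right has zero mean and conditional variance

$$\begin{aligned} \frac{1}{K}\, {{\overline{Z}}}_{n-1}\, \sigma ^2({{\overline{Z}}}_{n-1}). \end{aligned}$$

Consequently (5) can be viewed as a deterministic dynamical system perturbed by small noise of order \(O_{\textsf{P}}(K^{-1/2})\) (the \(O_{\textsf{P}}\) notation is recalled in the Notes). If the initial colony size is relatively large, i.e., proportional to the carrying capacity:

$$\begin{aligned} {{\overline{Z}}}_0 = Z_0/K \xrightarrow [K\rightarrow \infty ]{} x_0>0, \end{aligned}$$

then \({{\overline{Z}}}_n \xrightarrow [K\rightarrow \infty ]{{\textsf{P}}} x_n\) where \(x_n\) follows the unperturbed deterministic dynamics

$$\begin{aligned} x_n = f(x_{n-1}), \quad n\in {\mathbb {N}}, \end{aligned} \tag{6}$$

started at \(x_0\). If (4) is assumed, \(x=1\) is the stable fixed point of f and if, in addition, f is an increasing function, then the sequence \(x_n\) increases to 1 with n when \(x_0<1\). This limit also implies that the probability of early extinction tends to zero as \(K\rightarrow \infty \).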
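The monotone approach of the deterministic iterates to the fixed point can be checked numerically. The following sketch assumes, purely for illustration, the map \(f(x)=x\rho ^{1-x}\) with \(\rho =2\), which is increasing on [0, 1] and has fixed point 1; the helper names are hypothetical.

```python
RHO = 2.0  # assumed value of m(0)

def f(x):
    """Assumed map f(x) = x * m(x) with m(x) = rho**(1 - x)."""
    return x * RHO ** (1.0 - x)

def iterate(x0, n):
    """Return [x_0, x_1, ..., x_n] with x_k = f(x_{k-1})."""
    xs = [x0]
    for _ in range(n):
        xs.append(f(xs[-1]))
    return xs

if __name__ == "__main__":
    xs = iterate(0.01, 60)
    print(xs[:5])   # early iterates roughly double at each step
    print(xs[-1])   # late iterates settle at the fixed point x = 1
```

Starting from \(x_0=0\) the iterates stay at zero, which is the degenerate situation that arises for a small initial colony.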

Moreover, the stochastic fluctuations about the deterministic limit converge to a Gaussian process \(V=(V_n, n\in {\mathbb {Z}}_+)\) in distribution:

$$\begin{aligned} \sqrt{K}\,\big ({{\overline{Z}}}_n - x_n\big ) \xrightarrow [K\rightarrow \infty ]{d} V_n, \end{aligned}$$

where \(V_n\) satisfies the recursion, Klebaner and Nerman (1994),

$$\begin{aligned} V_n = f'(x_{n-1})\, V_{n-1} + \sqrt{x_{n-1}\,\sigma ^2(x_{n-1})}\; W_n, \end{aligned}$$

with i.i.d. N(0, 1) random variables \(W_n\).

Roughly speaking, this implies that when K is large, \(Z_n\) grows towards the capacity K along the deterministic path \(K x_n\) and its fluctuations are of order \(O_{\textsf{P}}(K^{1/2})\):

$$\begin{aligned} Z_n = K x_n + \sqrt{K}\, V_n + o_{\textsf{P}}(K^{1/2}). \end{aligned} \tag{7}$$

If \(p_0(x)>0\) for all \(x\in {\mathbb {R}}_+\) and (4) is imposed, zero is an absorbing state and hence the population eventually goes extinct. Large deviations analysis, see for example Klebaner and Zeitouni (1994); Klebaner et al. (1998), and Jung (2013); Högnäs (2019), shows that the mean of the time to extinction \(\tau _e =\inf \{n\ge 0: Z_n=0\}\) grows exponentially with K. In this paper we are concerned with the establishment stage of the population, which occurs well before the ultimate extinction, on the time scale of \(\log K\).

1.3 Small initial colony

When \(Z_0\) is a fixed integer, say \(Z_0=1\), then \(Z_0/K\rightarrow x_0=0\) and, since \(f(0)=0\), the solution to (6) is \(x_n=0\) for all \(n\in {\mathbb {N}}\). In this case the approximation (7) ceases to provide useful information. An alternative way to describe the stochastic dynamics in this setting was suggested recently in Barbour et al. (2016); Chigansky et al. (2018, 2019). It is based on the long-known heuristic (Kendall 1956; Whittle 1955; Metz 1978), according to which such a population behaves initially as a Galton–Watson branching process and, if it manages to avoid extinction at this early stage, continues to grow towards the carrying capacity following an almost deterministic curve.

This heuristic is made precise in Chigansky et al. (2019) as follows. We couple Z to a supercritical Galton–Watson branching process \(Y=(Y_n, n\in {\mathbb {Z}}_+)\) started at \(Y_0=Z_0=1\),

$$\begin{aligned} Y_n = \sum _{j=1}^{Y_{n-1}} \eta _{n,j}, \quad n\in {\mathbb {N}}, \end{aligned} \tag{8}$$

with the offspring distribution identical to that of Z at zero density:

$$\begin{aligned} {\textsf{P}}(\eta _{n,j}=\ell ) = p_\ell (0), \quad \ell \in {\mathbb {Z}}_+. \end{aligned}$$

This coupling is constructed in Sect. 3.2 under assumption (a1.) below.

Let \(\rho :=m(0)>1\), define \(n_c:= n_c(K)=[\log _\rho K^c]\) for some \(c\in (\frac{1}{2},1)\), where [x] denotes the integer part of x, and let \({{\overline{Y}}}_n:=Y_n/K\) be the density of Y. Then \({{\overline{Z}}}_n=Z_n/K\) is approximated in Chigansky et al. (2019) by

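where \(f^k\) stands for the k-th iterate of f. As is well known (Athreya and Ney 1972),

$$\begin{aligned} \rho ^{-n} Y_n \xrightarrow [n\rightarrow \infty ]{} W \quad \text {a.s.,} \end{aligned}$$

where W is an a.s. finite random variable. Moreover, under certain technical conditions on f, the limit

$$\begin{aligned} H(x):= \lim _{n\rightarrow \infty } f^n(x/\rho ^n), \quad x\in {\mathbb {R}}_+, \end{aligned} \tag{9}$$

can be shown to exist and define a continuous function.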
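The limit defining H can be approximated by the finite iterates \(H_n(x)=f^n(x/\rho ^n)\) used later in the paper. The sketch below assumes, for illustration only, the map \(f(x)=x\rho ^{1-x}\) with \(\rho =2\); the function name `H_n` mirrors the paper's notation, everything else is hypothetical.

```python
RHO = 2.0  # assumed value of rho = m(0)

def f(x):
    """Assumed map f(x) = x * rho**(1 - x)."""
    return x * RHO ** (1.0 - x)

def H_n(x, n):
    """n-th approximation H_n(x) = f^n(x / rho^n) of the limit H(x)."""
    y = x / RHO ** n
    for _ in range(n):
        y = f(y)
    return y

if __name__ == "__main__":
    for n in (5, 10, 20, 40):
        print(n, H_n(1.0, n))
```

Successive values stabilise quickly as n grows, which is the numerical counterpart of the existence of the limit for this particular choice of f.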

Theorem 1

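(Chigansky et al. 2019) Let \(n_1:=n_1(K)=[\log _\rho K]\). Then

$$\begin{aligned} {{\overline{Z}}}_{n_1} - H\big (W\rho ^{-\{\log _\rho K\}}\big ) \xrightarrow [K\rightarrow \infty ]{{\textsf{P}}} 0. \end{aligned} \tag{10}$$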

In particular, this result implies that when K is a large integer power of \(\rho \) the distribution of \({{\overline{Z}}}_{n_1}\) is close to that of H(W). Moreover,

where \(x_n\) solves (6) started from the random initial condition H(W). This approximation also captures the early extinction event since \(H(0)=0\) and \({\textsf{P}}(W=0)={\textsf{P}}(\lim _n Y_n=0)\), the extinction probability of the Galton–Watson process Y.

1.4 This paper’s contribution

In this paper we address the question of the rate of convergence in (10). Note that if the probabilities in (2) are constant with respect to x then \(f(x)=\rho x\), consequently \(H(x)=x\), and the processes Z and Y coincide. In this case

$$\begin{aligned} {{\overline{Z}}}_{n_1} - H\big (W\rho ^{-\{\log _\rho K\}}\big ) = {{\overline{Y}}}_{n_1} - W\rho ^{-\{\log _\rho K\}} = \rho ^{-\{\log _\rho K\}}\big (\rho ^{-n_1}Y_{n_1}-W\big ) = O_{\textsf{P}}(K^{-1/2}), \end{aligned} \tag{11}$$

where the order of convergence is implied by the CLT for the Galton–Watson process (Heyde 1970), by which \(\sqrt{\rho ^{n}} (\rho ^{-n}Y_{n} -W)\) converges in distribution to a mixed normal law as \(n\rightarrow \infty \). Thus it can be expected that at best the sequence in (10) is of order \(O_{{\textsf{P}}}(K^{-1/2})\) as \(K\rightarrow \infty \). However, the best rate of convergence obtainable for the approximation of Theorem 1 is achieved with \(c=\frac{5}{8}\), and it is only \(O_{\textsf{P}}(K^{-1/8}\log K)\). This can be seen from a close examination of the proof in Chigansky et al. (2019).

The goal of this paper is to put forward a different approximation with a much faster rate of convergence, of order \(O_{\textsf{P}}(K^{-1/2}\log K)\). This is still slower than the rate achievable in the density independent case, but only by a logarithmic factor. The new proof reflects a better understanding of the population dynamics at the emergence stage: in fact, a sharper approximation is given by the Galton–Watson process transformed by the nonlinear function H arising in the deterministic dynamics (9).

It is not clear at the moment whether the \(\log K\) factor is avoidable and whether a central limit type theorem holds. These questions are left for further research.

2 The main result

We will make the following assumptions.

a1. The offspring distribution \( F_x(t) = \sum _{\ell \le t} p_\ell (x) \) is stochastically decreasing with respect to the population density: for any \(y\ge x\),

$$\begin{aligned} F_y(t)\ge F_x(t), \quad \forall t\in {\mathbb {R}}_+. \end{aligned}$$

a2. The second moment of the offspring distribution, cf. (3),

$$\begin{aligned} m_2(x) = \sigma ^2(x)+m(x)^2 \end{aligned}$$

is L-Lipschitz for some \(L>0\).

a3. The function \(f(x)=xm(x)\) has two continuous bounded derivatives and

$$\begin{aligned} \Vert f'\Vert _\infty = f'(0)=\rho , \end{aligned}$$

where \(\Vert \cdot \Vert _\infty \) denotes the supremum norm, see the Notes.

Remark 2

Assumption (a1.) means that the reproduction drops with population density. In particular, it implies that \(x\mapsto m(x)\) is a decreasing function and hence,

which is only slightly weaker than (a3.). The assumption (a2.) is technical.

Remark 3

The distribution of the process \({{\overline{Z}}}\) does not depend on the values of \(\{p_\ell (0), \ \ell \in {\mathbb {Z}}_+\}\) for any K, while the distribution of W and, therefore, of H(W) does. This is not a contradiction since our assumptions imply continuity of \(x\mapsto p_\ell (x)\) at \(x=0\) for all \(\ell \in {\mathbb {Z}}_+\). Indeed, \( m(x) = \int _0^\infty (1-F_x(t))dt \) and therefore

where the convergence holds since m(x) is differentiable and a fortiori continuous at \(x=0\). By the stochastic order assumption (a1.), \(F_x(t)-F_0(t)\ge 0\) for any \(t\ge 0\). Since both \(F_x\) and \(F_0\) are discrete with jumps at integers, for any \(s\ge 0\),

This in turn implies that \(p_\ell (x)\rightarrow p_\ell (0)\) as \(x\rightarrow 0\) for all \(\ell \).

Theorem 2

Under assumptions (a1.)–(a3.)

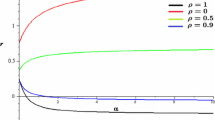

Example 1

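The binary splitting model from Chigansky et al. (2018) satisfies the above assumptions. Another example is the geometric offspring distribution

$$\begin{aligned} p_\ell (x) = q(x)^\ell \big (1-q(x)\big ), \quad \ell \in {\mathbb {Z}}_+, \end{aligned}$$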

where \(q:{\mathbb {R}}_+\mapsto [0,1]\) is a decreasing function. This distribution satisfies the stochastic order condition (a1.). The normalization \(m(0)=\rho \) and \(m(1)=1\) implies that \(q(0)=\rho /(1+\rho )\) and \(q(1)=1/2\). A direct calculation shows that, e.g.,

satisfies both (a2.) and (a3.).

Example 2

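The stochastic Ricker model (Högnäs 1997) is given by a density dependent branching process with the offspring distribution

$$\begin{aligned} p_\ell (x) = q_\ell \, e^{-\gamma x}, \quad \ell \ge 1, \end{aligned}$$

where \(\gamma >0\) is a constant, \(q_\ell \), \(\ell \ge 1\) is a given probability distribution, and no offspring are produced with probability \(1-e^{-\gamma x}\). This model satisfies the stochastic ordering assumption (a1.). The mean value of the distribution \(q_\ell \) is denoted by \(e^r\), to emphasize the relation to the deterministic Ricker model. With such notation,

$$\begin{aligned} m(x) = e^{r-\gamma x}, \qquad f(x) = x\, e^{r-\gamma x}. \end{aligned}$$

Under normalization \(m(0)=\rho \) and \(m(1)=1\), that is \(r=\gamma =\log \rho \), this becomes

$$\begin{aligned} f(x) = x\, e^{r(1-x)} = x\rho ^{1-x}. \end{aligned}$$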

A direct calculation verifies the assumptions (a2.) and (a3.).
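As a numerical sanity check of the derivative condition in (a3.), one can evaluate \(f'\) on a grid. The sketch below assumes that the normalized Ricker map takes the form \(f(x)=x\rho ^{1-x}\), which is what \(m(0)=\rho \), \(m(1)=1\) give for a mean of the form \(e^{r-\gamma x}\), and uses \(\rho =2\) as an arbitrary illustrative value. A grid search is of course not a proof, only a quick plausibility test.

```python
import math

RHO = 2.0                 # assumed value of rho = m(0)
LOG_RHO = math.log(RHO)

def f(x):
    """Assumed normalized Ricker map f(x) = x * rho**(1 - x)."""
    return x * RHO ** (1.0 - x)

def f_prime(x):
    """Closed-form derivative f'(x) = rho**(1 - x) * (1 - x * log(rho))."""
    return RHO ** (1.0 - x) * (1.0 - x * LOG_RHO)

# Evaluate |f'| on a grid over [0, 20]; beyond that f' is negligible.
grid = [k / 1000.0 for k in range(0, 20001)]
sup_abs = max(abs(f_prime(x)) for x in grid)

if __name__ == "__main__":
    print("sup |f'| on grid:", sup_abs)
    print("f'(0):", f_prime(0.0))
```

On this grid the largest value of \(|f'|\) is attained at \(x=0\) and equals \(\rho \), consistent with the requirement \(\Vert f'\Vert _\infty =f'(0)=\rho \).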

3 Proof of Theorem 2

We will construct the process Z defined in (1) and the Galton–Watson process Y from (8) on a suitable probability space so that \(Y_n\ge Z_n\) for all \(n\in {\mathbb {N}}\) and the trajectories of these processes remain sufficiently close at least for relatively small n’s (Sect. 3.2). We will then show that H is twice continuously differentiable (Sect. 3.1) and use Taylor’s approximation to argue (Sect. 3.3) that

This convergence combined with (11) implies the result. Below we will write C for a generic constant whose value may change from line to line.

3.1 Properties of \({\textbf{H}}\)

In this section we establish existence of the limit (9) under the standing assumptions and verify its smoothness. The proof of existence relies on a result on functional iteration, shown in Baker et al. (2020).

Lemma 3

(Baker et al. 2020, Lemma 1) Let \(x_{m,n}\) be the sequence generated by the recursion

subject to the initial condition \(x_{0,n}=x/\rho ^n>0\), where \(\rho >1\) and \(C\ge 0\) are constants. There exists a locally bounded function \(\psi :{\mathbb {R}}_+\mapsto {\mathbb {R}}_+\) such that for any \(n\in {\mathbb {N}}\)

Throughout we will use the notation \(H_n(x):=f^n(x/\rho ^n)\).

Lemma 4

Under assumption (a3.) there exists a continuous function \(H:{\mathbb {R}}_+\mapsto {\mathbb {R}}_+\) and a locally bounded function \(g:{\mathbb {R}}_+\mapsto {\mathbb {R}}_+\) such that

Proof

By assumption (a3.)

and hence for any \(x,y\in {\mathbb {R}}_+\)

with \(C=\Vert f''\Vert _\infty /\rho \). Thus the sequence \(x_{m,n}:= f^m(x/\rho ^n)\) satisfies

and \(x_{0,n}=x/\rho ^n\). By Lemma 3 there exists a locally bounded function \(\psi \) such that for any \(n\in {\mathbb {N}}\)

The bound (14) also implies

where, in view of (15),

Since f has bounded second derivative and \(f'(0)=\rho \), cf. (13),

Plugging this bound into (16) and iterating n times we obtain

where we defined

Thus the limit \(H(x)=\lim _{n\rightarrow \infty } f^n(x/\rho ^n)\) exists and satisfies the claimed bound with \(g(x)={\widetilde{g}}(x)/(1-\rho ^{-1})\). Continuity of H follows since \(H_n\) are continuous for each n and the convergence is uniform on compacts. \(\square \)

Corollary 5

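f is topologically semiconjugate to its linearization at the origin:

$$\begin{aligned} f(H(x)) = H(\rho x), \quad x\in {\mathbb {R}}_+. \end{aligned}$$

Proof

Since f is continuous,

$$\begin{aligned} f(H(x)) = f\Big (\lim _{n\rightarrow \infty } f^n(x/\rho ^n)\Big ) = \lim _{n\rightarrow \infty } f^{n+1}\big (\rho x/\rho ^{n+1}\big ) = H(\rho x). \end{aligned}$$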

\(\square \)
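The semiconjugacy relation \(f(H(x))=H(\rho x)\) is easy to test numerically once H is replaced by a high-order iterate \(H_n\). The sketch below assumes the illustrative map \(f(x)=x\rho ^{1-x}\) with \(\rho =2\) and the truncation level n = 40; both choices are hypothetical and only serve the demonstration.

```python
RHO = 2.0  # assumed value of rho = m(0)

def f(x):
    """Assumed map f(x) = x * rho**(1 - x)."""
    return x * RHO ** (1.0 - x)

def H(x, n=40):
    """Approximate H(x) by the iterate H_n(x) = f^n(x / rho^n)."""
    y = x / RHO ** n
    for _ in range(n):
        y = f(y)
    return y

if __name__ == "__main__":
    for x in (0.25, 1.0, 3.0):
        # The two columns should agree up to the truncation error of H_n.
        print(x, f(H(x)), H(RHO * x))
```

With this truncation the identity \(f(H_n(x))=H_{n+1}(\rho x)\) holds exactly, so the printed discrepancy only reflects the difference between two consecutive approximations of H.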

The next lemma shows that H is strictly increasing in a vicinity of the origin and is therefore a local conjugacy.

Lemma 6

There exists an \(a>0\) such that H is strictly increasing on [0, a] and

Proof

Let \(c:= \Vert f''\Vert _\infty \) and \(r:= \rho /c\) then

Since f is \(\rho \)-Lipschitz and \(f(0)=0\), for any \(j=1,\ldots ,n\) and \(x\in [0,r)\),

and hence for all \(x\in [0,r)\)

where we used the Bernoulli inequality. Thus we can choose a number \(a\in (0,r)\) such that \(H_n'(x)\ge 1/2\) for all \(x\in [0,a]\). It then follows that for any \(y>x\) in the interval [0, a]

Taking the limit \(n\rightarrow \infty \) implies that H is strictly increasing on [0, a]. Being continuous, H is invertible and (17) holds by Corollary 5. \(\square \)

Remark 4

Under the additional assumption that f is strictly increasing on the whole of \({\mathbb {R}}_+\), the function H is moreover a global conjugacy, i.e. (17) holds on \({\mathbb {R}}_+\).

The next lemma establishes differentiability of H.

Lemma 7

H has continuous derivative

where the series converges uniformly on compacts.

Proof

Step 1. Let us first argue that the infinite product in (18)

is well defined. By assumption (a3.), f is \(\rho \)-Lipschitz and hence \(f^n\) is \(\rho ^n\)-Lipschitz. Consequently, \(H_n\) is 1-Lipschitz for all \(n\in {\mathbb {N}}\) and so is H. This will be used in the proof on several occasions. Let \(c:=\Vert f''\Vert _\infty \) and \(r:=\frac{1}{2}\rho /c\), then

For \(x>0\) define the function \(j(x):=[\log _\rho (x/r)]\). Then for any \(j>j(x)\),

where \(\dagger \) holds since \(-\log (1-u)\le 2u\) for all \(u\in [0,\frac{1}{2}]\). The partial products in (19) can be written as

In view of the estimate (21), \(G_n(x)\) converges to \(G(x):=T(x)\exp (L(x))\) for any \(x\in {\mathbb {R}}_+\) where \(L(x)=\lim _n L_n(x)\). Furthermore,

where we used the bound \(|T(x)|\le 1\). For any \(R>0\) and all \(x\in [0,R]\) the estimate (21) implies

and thus, in view of the bound (22), we obtain

Since \(G_n\) is continuous for any n, this uniform convergence implies that G is continuous as well.

Step 2. To show that H(x) is differentiable and to verify the claimed formula for the derivative, it remains to show that the sequence of derivatives

converges to G uniformly on compacts. Fix an \(R>0\), define \(J(R)=[\log _\rho (R/r)]\) and, for \(n>J(R)\), let

and

Since \(\Vert f'\Vert _\infty =\rho \) all these functions are bounded by 1 and

Since \(f'\) is continuous and the convergence \(H_n\rightarrow H\) is uniform on compacts, it follows that

and hence, to complete the proof, we need to show that

To this end, in view of Corollary 5,

and hence

Consequently, for all \(x\in (0,R]\),

Here the bound \(\dagger \) holds since for \(j> J(R)\) both arguments of \(f'\) are smaller than r and thus (20) applies. The inequality \(\ddagger \) is true since H is 1-Lipschitz. The inverses in the last line of (25) are well defined for \(n\ge k:=[\log _\rho (R/H(a))]+1\) where a is the constant guaranteed by Lemma 6. Moreover, for all such n

Moreover, the sequence of functions \(D_n(x):= \rho ^n H^{-1}( x\rho ^{-n})\) is decreasing on [0, R] for all n large enough:

where the inequality holds since \(H^{-1}\) is increasing near the origin. It follows now from Dini’s theorem that the convergence in (26) is uniform:

The convergence in (24) holds since both \(Q_n\) and \(P_n\) are bounded by 1 and

\(\square \)

Lemma 8

H has continuous second derivative

Proof

The partial products in (18)

satisfy

where the convention \(0/0=0\) is used. By assumption (a3.), \(f''/f'\) is bounded uniformly on a vicinity of the origin. \(H'\) is continuous by Lemma 7 and therefore is bounded on compacts. Hence the series is compactly convergent. By Lemma 7, so is \(G_n\). Thus \(G'_n(x)\) converges compactly, its limit is continuous and coincides with \(H''(x)\). \(\square \)

3.2 The auxiliary Galton–Watson process

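Let \((U_{n,j}: n\in {\mathbb {N}}, j\in {\mathbb {Z}}_+)\) be an array of i.i.d. U([0, 1]) random variables and define

$$\begin{aligned} \xi _{n,j}(x):= \min \big \{t\in {\mathbb {Z}}_+: F_x(t)\ge U_{n,j}\big \}, \quad x\in {\mathbb {R}}_+, \end{aligned}$$

where \(F_x(t)\) is the offspring distribution function when the population density is x, cf. assumption (a1.). Then \({\textsf{P}}(\xi _{n,j}(x)=k)=p_k(x)\) for all \(k\in {\mathbb {Z}}_+\). Let \(\eta _{n,j}:=\xi _{n,j}(0)\). By assumption (a1.)

$$\begin{aligned} \eta _{n,j}\ge \xi _{n,j}(x), \quad \forall x\in {\mathbb {R}}_+. \end{aligned} \tag{28}$$

Let \(Z=(Z_n, n\in {\mathbb {Z}}_+)\) and \(Y=(Y_n, n\in {\mathbb {Z}}_+)\) be processes generated by the recursions

$$\begin{aligned} Z_n = \sum _{j=1}^{Z_{n-1}} \xi _{n,j}({{\overline{Z}}}_{n-1}), \qquad Y_n = \sum _{j=1}^{Y_{n-1}} \eta _{n,j}, \end{aligned}$$

started from the same initial conditions \(Z_0=Y_0=1\). By construction these processes coincide in distribution with (1) and (8) respectively. Moreover, in view of (28), by induction

$$\begin{aligned} Y_n\ge Z_n, \quad \forall n\in {\mathbb {Z}}_+. \end{aligned} \tag{29}$$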
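The coupling can be illustrated by driving both processes with the same uniform random variables through the quantile function of the offspring law. The sketch below is a hedged example, not the construction used in any particular implementation: it assumes a geometric offspring law with \(F_x(t)=1-q(x)^{t+1}\), \(q(x)=m(x)/(1+m(x))\), \(m(x)=\rho ^{1-x}\) and \(\rho =2\), which is stochastically decreasing in x; all function names and parameter values are hypothetical.

```python
import math
import random

RHO = 2.0  # assumed value of rho = m(0)

def q(x):
    """Assumed geometric parameter giving mean m(x) = rho**(1 - x)."""
    m = RHO ** (1.0 - x)
    return m / (1.0 + m)

def quantile(u, x):
    """Smallest integer t >= 0 with F_x(t) >= u, for F_x(t) = 1 - q(x)**(t + 1)."""
    t = math.ceil(math.log(1.0 - u) / math.log(q(x))) - 1
    return max(t, 0)

def coupled_paths(K, generations=12, seed=3):
    """Simulate Z and the dominating Galton-Watson process Y from shared uniforms."""
    rng = random.Random(seed)
    z, y = 1, 1
    zs, ys = [z], [y]
    for _ in range(generations):
        u = [rng.random() for _ in range(y)]   # one uniform per individual of Y
        x = z / K                              # current density of Z
        # The first z uniforms drive Z; this is valid because z <= y throughout.
        z_next = sum(quantile(u[j], x) for j in range(z))
        y_next = sum(quantile(uj, 0.0) for uj in u)
        z, y = z_next, y_next
        zs.append(z)
        ys.append(y)
    return zs, ys

if __name__ == "__main__":
    zs, ys = coupled_paths(K=500)
    for n, (zn, yn) in enumerate(zip(zs, ys)):
        print(n, zn, yn)
```

In the printed output the two columns agree closely in the first generations and then separate as the density of Z becomes non-negligible, while Y keeps growing geometrically and never falls below Z.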

3.3 The approximation

In view of (11),

Since H has continuous derivative it follows that

Thus to prove the assertion of Theorem 2 it remains to show that

The process \({{\overline{Y}}}_n = K^{-1} Y_n\) satisfies

By Taylor’s approximation and in view of Corollary 5

where

with \(\theta _{n-1}(K)\ge 0\) satisfying

Since \(\Vert f'\Vert _\infty = \rho \) is assumed, f is \(\rho \)-Lipschitz. By subtracting equation (5) from (30) we obtain the bound for \(\delta _n:= | H({{\overline{Y}}}_n)-{{\overline{Z}}}_n|\):

subject to \(\delta _0 = |H(1/K)-1/K|\), where we defined

Consequently,

and it is left to show that the contribution of each term at time \(n_1=[\log _ \rho K]\) is of order \(O_{\textsf{P}}(K^{-1/2}\log K)\) as \(K\rightarrow \infty \).

3.3.1 Contribution of the initial condition

Since \(H(0)=0\) and, by (18), \(H'(0)=1\), Taylor’s approximation implies that for all K large enough

and, consequently, \( |\rho ^{n_1} \delta _0| \le CK^{-1}. \)

3.3.2 Contribution of \(R_n(K)\)

To estimate the residual, defined in (31), let us show first that the family of random variables

is bounded in probability as \(K\rightarrow \infty \). By equation (32),

If \(H''\) is bounded then (34) is obviously bounded. Let us proceed assuming that \(H''\) is unbounded. Define \( \psi (M):= \max _{x\le M} |H''(x)|. \) By continuity, \(\psi (M)\) is finite, continuous and increases to \(\infty \). Let \(\psi ^{-1}\) be its generalized inverse

Since \(\psi \) is continuous and unbounded, \(\psi ^{-1}\) is nondecreasing (not necessarily continuous) and \(\psi ^{-1}(t)\rightarrow \infty \) as \(t\rightarrow \infty \). Then for any \(R\ge 0\), by the union bound,

This proves that (34) is bounded in probability. The contribution of \(R_n(K)\) in (33) can now be bounded as

where

Hence

3.3.3 Contribution of \(\varepsilon ^{(3)}\)

By conditional independence of \(\eta _{n,j}\)’s

In view of (29), the sequence \(D_m:= {{\overline{Y}}}_{m}-{\overline{Z}}_{m}\ge 0\) satisfies

where the last bound holds in view of (29) and the well known formula for the second moment of the Galton–Watson process. Since \(D_0=0\) it follows that

Hence the contribution of \(\varepsilon ^{(3)}\) in (33) is bounded by

3.3.4 Contribution of \(\varepsilon ^{(2)}\)

By assumption (a2.),

where \(\dagger \) holds by (28). Hence \(\varepsilon ^{(2)}\) contributes

3.3.5 Contribution of \(\varepsilon ^{(1)}\)

The function \( g(x): = H'(x)-1 \) is continuously differentiable with \(g(0)=0\) and thus Taylor’s approximation gives

where \( \zeta _{n-1}(K) \) satisfies \(0\le \zeta _{n-1}(K)\le \rho {{\overline{Y}}}_{n-1}\). Here

It follows that

It is then argued as in Sect. 3.3.2 that

Notes

The usual notation for probabilistic orders is used throughout. In particular, for a sequence of random variables \(\zeta (K)\) and a sequence of numbers \(\alpha (K)\searrow 0\) as \(K\rightarrow \infty \), the notation \(\zeta (K)=O_{\textsf{P}}(\alpha (K))\) means that the sequence \(\alpha (K)^{-1}\zeta (K)\) is bounded in probability.

[x] and \(\{x\}=x-[x]\) denote the integer and fractional parts of \(x\in {\mathbb {R}}_+\), respectively.

\(\Vert f\Vert _\infty =\sup _x |f(x)|\) denotes the supremum norm.

References

Athreya KB, Ney PE (1972) Branching processes. Die Grundlehren der mathematischen Wissenschaften, Band 196, p 287. Springer, New York

Baker J, Chigansky P, Jagers P, Klebaner FC (2020) On the establishment of a mutant. J Math Biol 80(6):1733–1757. https://doi.org/10.1007/s00285-020-01478-x

Barbour AD, Chigansky P, Klebaner FC (2016) On the emergence of random initial conditions in fluid limits. J Appl Probab 53(4):1193–1205. https://doi.org/10.1017/jpr.2016.74

Chigansky P, Jagers P, Klebaner FC (2018) What can be observed in real time PCR and when does it show? J Math Biol 76(3):679–695. https://doi.org/10.1007/s00285-017-1154-1

Chigansky P, Jagers P, Klebaner FC (2019) Populations with interaction and environmental dependence: from few, (almost) independent, members into deterministic evolution of high densities. Stoch Models 35(2):108–118. https://doi.org/10.1080/15326349.2019.1575755

Haccou P, Jagers P, Vatutin VA (2007) Branching processes: variation, growth, and extinction of populations. Cambridge studies in adaptive dynamics, vol. 5, p 316. Cambridge University Press, Cambridge, IIASA, Laxenburg

Hamza K, Jagers P, Klebaner FC (2016) On the establishment, persistence, and inevitable extinction of populations. J Math Biol 72(4):797–820. https://doi.org/10.1007/s00285-015-0903-2

Heyde CC (1970) A rate of convergence result for the super-critical Galton-Watson process. J Appl Probab 7(2):451–454. https://doi.org/10.2307/3211980

Högnäs G (1997) On the quasi-stationary distribution of a stochastic Ricker model. Stoch Process Appl 70(2):243–263. https://doi.org/10.1016/S0304-4149(97)00064-1

Högnäs G (2019) On the lifetime of a size-dependent branching process. Stoch Models 35(2):119–131. https://doi.org/10.1080/15326349.2019.1578241

Jung B (2013) Exit times for multivariate autoregressive processes. Stoch Process Appl 123(8):3052–3063. https://doi.org/10.1016/j.spa.2013.03.003

Kendall DG (1956) Deterministic and stochastic epidemics in closed populations. In: Proceedings of the third Berkeley symposium on mathematical statistics and probability, 1954–1955, vol. IV, pp 149–165. University of California Press, Berkeley-Los Angeles, CA

Klebaner FC, Nerman O (1994) Autoregressive approximation in branching processes with a threshold. Stoch Process Appl 51(1):1–7. https://doi.org/10.1016/0304-4149(93)00000-6

Klebaner FC, Zeitouni O (1994) The exit problem for a class of density-dependent branching systems. Ann Appl Probab 4(4):1188–1205

Klebaner FC, Lazar J, Zeitouni O (1998) On the quasi-stationary distribution for some randomly perturbed transformations of an interval. Ann Appl Probab 8(1):300–315. https://doi.org/10.1214/aoap/1027961045

Metz JAJ (1978) The epidemic in a closed population with all susceptibles equally vulnerable; some results for large susceptible populations and small initial infections. Acta Biotheor 27(1):75–123. https://doi.org/10.1007/BF00048405

Whittle P (1955) The outcome of a stochastic epidemic: a note on Bailey’s paper. Biometrika 42(1–2):116–122. https://doi.org/10.1093/biomet/42.1-2.116

Acknowledgements

The research was supported by ARC grant DP220100973.

Funding

Open access funding provided by Hebrew University of Jerusalem.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bauman, N., Chigansky, P. & Klebaner, F. An approximation of populations on a habitat with large carrying capacity. J. Math. Biol. 88, 44 (2024). https://doi.org/10.1007/s00285-024-02069-w

DOI: https://doi.org/10.1007/s00285-024-02069-w