Abstract

In this paper, we rigorously study the problem of cost optimisation of hybrid (mixed) institutional incentives, which are a plan of actions involving the use of reward and punishment by an external decision-maker, for maximising the level (or guaranteeing at least a certain level) of cooperative behaviour in a well-mixed, finite population of self-regarding individuals who interact via cooperation dilemmas (Donation Game or Public Goods Game). We show that a mixed incentive scheme can offer a more cost-efficient approach for providing incentives while ensuring the same level or standard of cooperation in the long-run. We establish the asymptotic behaviour (namely neutral drift, strong selection, and infinite-population limits). We prove the existence of a phase transition, obtaining the critical threshold of the strength of selection at which the monotonicity of the cost function changes and providing an algorithm for finding the optimal value of the individual incentive cost. Our analytical results are illustrated with numerical investigations. Overall, our analysis provides novel theoretical insights into the design of cost-efficient institutional incentive mechanisms for promoting the evolution of cooperation in stochastic systems.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Literature review. Evolutionary Game Theory made its debut in 1973 with John Maynard Smith’s and George R. Price’s work on the formalisation of animal contests, thus successfully using Classical Game Theory to create a new framework that could predict the evolutionary outcomes of the interaction between competing individuals. Ever since, it has been widely used to study myriad questions in various disciplines like Evolutionary Biology, Ecology, Physics, Sociology and Computer Science, including what the mechanisms underlying the emergence and stability of cooperation are (Nowak 2006) and how to mitigate climate change and its risks (Santos and Pacheco 2011).

Cooperation is the act of paying a cost in order to convey a benefit to somebody else. Although it initially seems against the Darwinian theory of natural selection, cooperation has been, is, and will be a vital part of life, from cellular clusters to bees to humans (Sigmund 2010; Nowak and Highfield 2011). Several mechanisms for promoting the evolution of cooperation have been identified, including kin selection, direct reciprocity, indirect reciprocity, network reciprocity, group selection and different forms of incentives (Nowak 2006; Sigmund 2010; Perc et al. 2017; Rand and Nowak 2013; Van Lange et al. 2014). The current work focuses on institutional incentives (Sasaki et al. 2012; Sigmund et al. 2010; Wang et al. 2019; Duong and Han 2021; Cimpeanu et al. 2021; Sun et al. 2021; Van Lange et al. 2014; Gürerk et al. 2006), which are a plan of actions involving the use of reward (i.e., increasing the payoff of cooperators) and punishment (i.e., reducing the payoff of defectors) by an external decision-maker, in particular, how they can be used in combination (i.e. hybrid or mixed incentives) in a cost-efficient way for maximising the levels of cooperative behaviour in a well-mixed, finite population of self-regarding individuals.

Promoting and implementing cooperation via incentives is costly to the incentive-providing institution, such as the United Nations or the European Union (Ostrom 2005; Van Lange et al. 2014). Thus, it is crucial to understand how to minimise the said cost while ensuring a desirable standard of cooperation. In this work, players interact via cooperation dilemmas, both the pairwise Donation Game (DG) and its multi-player version, the Public Goods Game (PGG) (Sigmund 2010; Nowak 2006). These games have been widely adopted to model social dilemma situations in which collective rationality leads to individual irrationality.

Several theoretical models studied how to combine institutional reward and punishment for enhancing the emergence and stability of cooperation (Chen and Perc 2014; Góis et al. 2019; Berenji et al. 2014; Hilbe and Sigmund 2010; Sun et al. 2021; Gürerk et al. 2006). However, little attention has been given to addressing the cost optimisation of providing incentives. Chen et al. Sasaki et al. (2015) looked at a rewarding policy that switches the incentive from reward to punishment when the frequency of cooperators exceeds a certain threshold. This policy establishes cooperation at a lower cost and under a wider range of conditions than either reward or punishment alone, in both well-mixed and spatial populations. Others have applied the ‘carrot and stick’ idea to criminal recidivism and rehabilitation as now the justice system is switching its focus to reintegrating wrongdoers into society after the penalty has been served. Berenji et al.’s work (Berenji et al. 2014) studied the game where players decide to permanently reform or continue engaging in criminal activity, eventually reaching a state where they are considered incorrigible. Since resources may be limited, they fixed the combined rehabilitation and penalty costs per crime. The most successful strategy in reducing crime is to optimally allocate resources so that after being having served the penalty, criminals are reintroduced into society via impactful programs. Wang et al. (2019) explored the optimal incentive that not only minimises the total cost, but also guarantees a sufficient level of cooperation in an infinite and well-mixed population via optimal control theory.

This work however does not take into account various stochastic effects of evolutionary dynamics such as mutation and non-deterministic behavioural update. In a deterministic system of cooperators and defectors, once the latter disappear, there is no further change in the system and hence no further interference is needed. When mutation is present, defectors can reappear and become numerous over time, requiring the external institution to spend more on providing further incentives. Moreover, the intensity of selection - how strongly an individual bases their decision to copy another individual’s strategy on their fitness difference - is overlooked. When selection is weak, providing incentives would make little difference in causing behavioural change as no individual would be motivated to copy another and all changes in strategy would be due to noise. When selection is strong, incentives that ensure a minimum fitness advantage to cooperators would ensure a positive behavioural change as the players would be more likely to copy one another. Recently, in Zhang et al. (2022) by simulating the weak prisoner’s dilemma in finite populations, the authors find that a combination of appropriate punishment and reward mechanisms can promote cooperation’s prosperity regardless of how large or small the temptation to defect is.

Whilst the above works were concerned with well-mixed populations, the following two studies deal with spatial ones. Szolnoki and Perc (2013) looked at whether there are evolutionary advantages in correlating positive and negative reciprocity, as opposed to adopting only reward or punishment. Their work supports others that use empirical data. In those studies, the data fails to support the central assumption of the strong reciprocity model that negative and positive reciprocity are correlated. In a different work (Szolnoki and Perc 2017), the authors showed how second-order free-riding on antisocial punishment restores the effectiveness of prosocial punishment, providing an evolutionary escape from adverse effects of antisocial punishment. Both these works use Monte Carlo simulations.

Moreover, several works have provided insights into how best to promote the emergence of collective behaviours such as cooperation and fairness while also considering the institutional costs of providing incentives (Liu et al. 2018; Chen and Perc 2014; Duong and Han 2021; Cimpeanu et al. 2021, 2019; Han and Tran-Thanh 2018; Cimpeanu et al. 2023), see also (Wang et al. 2021) for a recent survey on these papers. Indeed, most relevant to our work are Duong and Han (2021) and Han and Tran-Thanh (2018), which derived analytical conditions for which a general incentive scheme can guarantee a given level of cooperation while at the same time minimising the total cost of investment. These results are highly sensitive to the intensity of selection. They also studied a class of incentive strategies that make an investment whenever the number of players with a desired behaviour reaches a certain threshold \(t\in \{1,\ldots ,N-1\}\) (N is the population size), showing that there is a wide range of values for the threshold that outperforms standard institutional incentive strategies - those which invest in all players, i.e. the threshold is \(t=N-1\) (Sasaki et al. 2015). These works however did not study the cost-efficiency of the mixed incentive scheme, which is the focus of the present work.

Overview of contribution of this paper. As mentioned above, in this work, we consider a well-mixed, finite population of self-regarding individuals where players interact via cooperation dilemmas (DG and PGG) and rigorously study the problem of cost optimisation of hybrid institutional incentives (combination of reward and punishment) for maximising the levels of cooperative behaviour (or guaranteeing at least a certain levels of cooperation). This problem is challenging due to the number of parameters involved such as the number of individuals in the population, the strength of selection, the game-specific quantities, as well as the efficiency ratios of providing the corresponding incentive. In particular, the Markov chain modelling the evolutionary process is of order equal to the population size, which is large but finite. The calculation of the entries of the corresponding fundamental matrix is intricate, both analytically and computationally.

Our present work provides a rigorous and delicate analysis of this problem, combining techniques from different branches of Mathematics including Markov chain theory, polynomial theory, and numerical analysis. The main results of the paper can be summarised as follows (detailed and precise statements are provided in Sect. 2).

-

(i)

We show that a mixed incentive scheme can offer a more cost-efficient approach for providing incentives while ensuring the same level or standard of cooperation in the long-run.

-

(ii)

We obtain the asymptotic behaviour of the cost function in the limits of neutral drift, strong selection as well as infinite population sizes.

-

(iii)

We prove the existence of a phase transition for the cost function, obtaining the critical threshold of the strength of selection at which the monotonicity of the cost function changes and finding the optimal value of the individual incentive cost.

Furthermore, we provide numerical simulations to illustrate the analytical results.

1.1 Organisation of the paper

The rest of the paper is organised as follows. In Sect. 2 we present the model, methods, and the main results. Our main results include Proposition 1 on the efficiency of the combined reward and punishment incentives compared to implementing them separately, Theorem 1 on the asymptotic behaviour (neutral drift, strong selection, and infinite population limits) of the cost function, and Theorem 2 on the optimal incentive. In Sect. 3 we provide detailed computations and proofs of Theorem 2. Proof of Theorem 1 is given in Sect. 4. Summary and further discussions are provided in Sect. 5. Finally, Sect. 6 contains the proof of Proposition 1, detailed computations, and proofs for the technical results.

2 Model, methods, and main results

In this section, we present the model, methods, and main results of the paper. We first introduce the class of games, namely cooperation dilemmas, that we are interested in throughout this paper.

2.1 Cooperation dilemmas

We consider a well-mixed, finite population of N self-regarding individuals (players) who engage with one another using one of the following one-shot (i.e. non-repeated) cooperation dilemmas, the Donation Game (DG) or its multi-player version, the Public Goods Game (PGG). Strategy wise, each player can choose to either cooperate (C) or defect (D).

Let \(\Pi _C(j)\) be the average payoff of a C player (cooperator) and \(\Pi _D(j)\) that of a D player (defector), in a population with j C players and \((N-j)\) D players. As can be seen below, the difference in payoffs \(\delta = \Pi _C(j) - \Pi _D(j)\) in both games does not depend on j. For the two cooperation dilemmas considered in this paper, namely the Donation Games and the Public Goods Games, it is always the case that \(\delta < 0\). This does not cover some weak social dilemmas such as the Snowdrift Game, where \(\delta >0\) for some j, the general prisoners’ dilemma, and the collective risk game (Sun et al. 2021), where \(\delta \) depends on j. We will investigate these games in future research (see Sect. 5 for further discussion).

2.1.1 Donation game (DG)

The Donation Game is a form of Prisoners’ Dilemma in which cooperation corresponds to offering the other player a benefit B at a personal cost c, satisfying that \(B > c\). Defection means offering nothing. The payoff matrix of DG (for the row player) is given as follows

Denoting \(\pi _{X,Y}\) the payoff of a strategist X when playing with a strategist Y from the payoff matrix above, we obtain

Thus,

2.1.2 Public goods game (PGG)

In a Public Goods Game, players interact in a group of size n, where they decide to cooperate, contributing an amount \(c > 0\) to a common pool, or to defect, contributing nothing to the pool. The total contribution in a group is multiplied by a factor r, where \(1< r < n\) (for the PGG to be a social dilemma), which is then shared equally among all members of the group, regardless of their strategy. Intuitively, contributing nothing offers one a higher amount of money after redistribution.

The average payoffs, \(\Pi _C(j)\) and \(\Pi _D(j)\), are calculated based on the assumption that the groups engaging in a public goods game are given by multivariate hypergeometric sampling. Thereby, for transitions between two pure states, this reduces to sampling, without replacement, from a hypergeometric distribution. More precisely, we obtain (Hauert et al. 2007)

Thus,

2.2 Cost of institutional reward and punishment

To reward a cooperator (respectively, punish a defector), the institution has to pay an amount \(\theta /a\) (resp., \(\theta /b\)) so that the cooperator’s (defector’s) payoff increases (decreases) by \(\theta \), where \(a, b > 0\) are constants representing the efficiency ratios of providing the corresponding incentive.

In an institutional enforcement setting, we assume that the institution has full information about the population composition or statistics at the time of decision-making. That is, given the well-mixed population setting, we assume that the number j of cooperators in the population is known. Thus, if both reward and punishment are feasible options (i.e., mixed incentives), the institution can minimise its cost by choosing the minimum of \(\frac{j}{a}\) and \(\frac{N-j}{b}\). Thus, the key question here is: what is the optimal value of the individual incentive cost \(\theta \) that ensures a sufficient desired level of cooperation in the population (in the long-run) while minimising the total cost spent by the institution?

Note that, as discussed above, this mixed incentive, also known as the ‘carrot and stick’ approach, has been shown efficient for promoting cooperation in both pairwise and multi-player interactions (Sasaki et al. 2015; Hilbe and Sigmund 2010; Sun et al. 2021; Góis et al. 2019; Gürerk et al. 2006). However, these works have not studied cost optimisation and have not shown whether this approach is actually more cost-efficient and by how much.

2.2.1 Deriving the expected cost of providing institutional incentives

In this model, we adopt the finite population dynamics with the Fermi strategy update rule (Traulsen and Nowak 2006), stating that a player X with fitness \(f_X\) adopts the strategy of another player Y with fitness \(f_Y\) with a probability given by \(P_{X,Y}=\left( 1 + e^{-\beta (f_Y-f_X)}\right) ^{-1}\), where \(\beta \) represents the intensity of selection. We compute the expected number of times the population contains j C players, \(1 \le j \le N-1\). For that, we consider an absorbing Markov chain of \((N+1)\) states, \(\{S_0,..., S_N\}\), where \(S_j\) represents a population with j C players. \(S_0\) and \(S_N\) are absorbing states. Let \(U = \{u_{ij}\}_{i,j = 1}^{N-1}\) denote the transition matrix between the \(N-1\) transient states, \(\{S_1,..., S_{N-1}\}\). The transition probabilities can be defined as follows, for \(1\le i \le N-1\):

The entries \(n_{ik}\) of the so-called fundamental matrix \({\mathcal {N}}=(n_{ik})_{i,k=1}^{N-1}= (I-U)^{-1}\) of the absorbing Markov chain gives the expected number of times the population is in the state \(S_j\) if it is started in the transient state \(S_i\) (Kemeny 1976). As a mutant can randomly occur either at \(S_0\) or \(S_N\), the expected number of visits at state \(S_i\) is thus, \(\frac{1}{2} (n_{1i} + n_{N-1,i})\). The total cost per generation is

Hence, the expected total cost of interference for mixed reward and punishment is

As a comparison, we recall the cost for reward and punishment incentives, \(E_r\) and \(E_p\), respectively, when being used separately (Duong and Han 2021)

By comparing (2) and (3) one clearly expects that the efficiency ratios a and b strongly affect the incentive cost. In the cost functions \(E_r\) and \(E_p\), they are just scaling factors and do not affect the analysis of these functions. This is not the case in the combined incentive. One of the main objectives of this paper is to reveal explicitly the influence of a and b on the cost function. From a mathematical point of view, the presence and interplay of a and b make the analysis of the combined incentive much harder than that of the separate ones.

Remark

(On the interference scheme) In the mixed incentive setting being considered in this paper, the institution either rewards every cooperator or punishes every defector, depending on which one is less costly. Although being rather unsophisticated, this incentive strategy is typically considered in the literature of institutional incentives modelling. However, other interference schemes are also investigated in many works, for instance, the institution only rewards C players whenever their frequency or number in the population does not exceed a given threshold t, where \(1\le t\le N-1\). The scheme studied in this paper corresponds to the case where \(t=N-1\). We refer the reader to Han and Tran-Thanh (2018) and references therein for more information about different interference schemes. We plan to generalise the results of this paper to more complicated incentive strategies in future work, see Sect. 5 for further discussion.

2.2.2 Cooperation frequency

Since the population consists of only two strategies, the fixation probabilities of a C (D) player in a homogeneous population of D (C) players when the interference scheme is carried out are, respectively, Novak (2006)

Computing the stationary distribution using these fixation probabilities, we obtain the frequency of cooperation

Hence, this frequency of cooperation can be maximised by maximising

The fraction in Equation (4) can be simplified as follows (Nowak 2006)

In the above transformation, \(u_{i,i-1}\) and \(u_{i,i-1}\) are the probabilities to decrease or increase the number of C players (i.e. i) by one in each time step, respectively.

We consider non-neutral selection, i.e. \(\beta > 0\) (under neutral selection, there is no need to use incentives as no player is likely to copy another player and any changes in strategy that happen are due to noise as opposed to payoffs). Assuming that we desire to obtain at least an \(\omega \in [0,1]\) fraction of cooperation, i.e. \(\frac{\rho _{D,C}}{\rho _{D,C}+\rho _{C,D}} \ge \omega \), it follows from equation (5) that

Therefore it is guaranteed that if \(\theta \ge \theta _0(\omega )\), at least an \(\omega \) fraction of cooperation can be expected. This condition implies that the lower bound of \(\theta \) monotonically depends on \(\beta \). Namely, when \(\omega \ge 0.5\), it increases with \(\beta \) and when \(\omega < 0.5\), it decreases with \(\beta \).

To summarise, we obtain the following constrained minimisation problem

Remark

(On the formula of the cost of the mixed incentive) In the derivation of the cost function (2), we assumed that the population is equally likely to start in the homogeneous state \(S_0\) as well as in the homogeneous state \(S_N\). However, in general, this might not be correct. For example, if cooperators are very likely to fixate in a population of defectors, but defectors are unlikely to fixate in a population of cooperators, mutants are on average more likely to appear in the homogeneous cooperative population (that is in \(S_N\)). Similarly, the population might also be likely to appear in \(S_0\) rather than \(S_N\). In general, in the long-run, the population will start at \(i = 0\) (\(i = N\), respectively) with probability equal to the frequency of D (C) computed at the equilibrium, \(f_D = 1/(r+1)\) (\(f_C = r/(r+1)\), respectively), where \(r = e^{\beta (N-1)(\delta + \theta )}\). Thus generally, the expected number of visits at state \(S_i\) will be \( f_D n_{1i} + f_C n_{N-1,i}\). Therefore, instead of (2), in the general setting the formula for the cost function should be

In practice, in many works based on agent-based simulations (Chen and Perc 2014; Cimpeanu et al. 2023; Szolnoki and Perc 2017; Han et al. 2018; Sasaki et al. 2015), it is often assumed that mutation is negligible and simulations end whenever the population fixates in a homogeneous state. Moreover, these simulations usually assume that the initial population starts at a homogeneous state or has a uniform distribution of different types. In this work, we thus assume an equal likelihood that the population starts at one of the homogeneous states and our formula (2) captures such scenarios. This assumption enables us to analytically study the cost function and its behaviour. As will be clear in the subsequent sections, the analysis is already very complicated in this simplified setting. Our results encapsulate the intermediate-run dynamics, an approximation that is valid if the time-scale is long enough for one type to reach fixation, but too short for the next mutant to appear. Our findings might thus be more practically useful for the optimisation of the institutional budget for providing incentives on an intermediate timescale.

We will study this problem in the most general case, where the initial population can start at an arbitrary state, in future work. The cost function can be obtained from extensive agent-based simulations of the evolutionary process. However, this approach is very computationally expensive especially when one wants to analytically study the cost function as a function of the individual incentive cost for large population sizes, which is the focus of this paper. Previous works have already shown that outcomes from our adopted evolutionary processes (small-mutation limit) are in line with extensive agent-based simulations, e.g. in Han (2013); Van Segbroeck et al. (2012); Hauert et al. (2007); Sigmund et al. (2010).

2.3 Main results of the present paper

Proposition 1

(Combined incentives vs separate ones) It is always more cost efficient to use the mixed incentive approach than a separate incentive, reward or punishment,

If \(\frac{b}{a}\le \frac{1}{N-1}\), then \(E_{mix}(\theta )=E_r(\theta )\). If \(\frac{b}{a}\ge N-1\), then \(E_{mix}(\theta )=E_p(\theta )\). That is, if providing reward for a cooperator is much more cost-efficient for the institution than punishing a defector, i.e., when \(b/a \ge N-1\), then it is optimal to use reward entirely. Symmetrically, if punishment is much more efficient, i.e. \(a/b \ge N-1\), then it is optimal to use punishment entirely. Otherwise, a mixed approach is more cost-efficient. Note however that the mixed approach require the institution to be able to observe the population composition (i.e. the number of cooperators in the population, j).

The proof of this Proposition will be given in Sect. 6.2 and see Fig. 2 for an illustration.

The following number is central to the analysis of this paper

It plays a similar role as the harmonic number \(H_N\) in Duong and Han (2021), where a similar cost optimisation problem but for a separate reward or punishment incentive is studied. However, unlike the harmonic function, which has a growth of \(\ln N+\gamma \) (where \(\gamma \) is the Euler-Mascheroni constant) as \(N\rightarrow +\infty \), we will show that \(H_{N,a,b}\) is always bounded and its asymptotic behaviour is given by (see more details in Proposition 4)

Now, our second main result below studies the asymptotic behaviour (neutral drift limit, strong selection limit, and infinite population limit) of the cost function \(E_{mix}(\theta )\).

Theorem 1

(Asymptotic behaviour of the cost function)

-

(i)

(Growth of the cost function) The cost function satisfies the following lower and upper bound estimates

$$\begin{aligned}{} & {} \frac{N^2\theta }{2}\Big (H_{N,a,b}+\frac{1}{\max (a,b)(N-1)}\Big )\le E_{mix}(\theta )\\{} & {} \quad \le N(N-1)\theta \Big (H_{N,a,b}+\frac{1}{\min (a,b)\lfloor \frac{(N-1)}{2} \rfloor }\Big ). \end{aligned}$$In particular, since \(H_{N,a,b}\) is uniformly bounded (with respect to N), it follows that the cost function grows quadratically with respect to N.

-

(ii)

Neutral drift limit:

$$\begin{aligned} \lim \limits _{\beta \rightarrow 0}E_{mix}(\theta )=\theta N^2 H_{N,a,b}. \end{aligned}$$ -

(iii)

Strong selection limit:

$$\begin{aligned} \lim \limits _{\beta \rightarrow +\infty }E_{mix}(\theta )={\left\{ \begin{array}{ll} \frac{N^2\theta }{2}\Big (H_{N,a,b} + \frac{1}{a(N-1)}\Big ), \quad \text {for}\quad \theta <-\delta ,\\ \frac{N A}{2}\Big [2N H_{N,a,b}+\frac{1}{a(N-1)}+\frac{1}{b(N-1)}\\ \quad -\frac{\min (2/a, (N-2)/b)}{2(N-2)}-\frac{\min ((N-1)/a,1/b)}{N-1}\Big ], \quad \text {for}\quad \theta =-\delta ,\\ \frac{N^2\theta }{2}\bigg [H_{N,a,b}+\frac{1}{b(N-1)}\bigg ] \quad \text {for}\quad \theta >-\delta . \end{array}\right. } \end{aligned}$$ -

(iv)

Infinite population limit:

$$\begin{aligned} \lim _{N\rightarrow +\infty }\frac{E_{mix}(\theta )}{\frac{N^2\theta }{2}H_{a,b}}={\left\{ \begin{array}{ll} 1+e^{-\beta |\theta -c|} \quad \text {for DG},\\ 1+ e^{-\beta |\theta -c(1-\frac{r}{n})|}\quad \text {for PGG}. \end{array}\right. } \end{aligned}$$(9)

It is worth noticing that the neutral drift and strong selection limits of the cost function do not depend on the underlying games, but the infinite population limit does.

The proof of this Theorem will be given in Sect. 4. Figures 4 and 5 provide numerical simulations of the neutral drift and strong selection limits and the infinite population one.

The following result provides a detailed analysis for the minimisation problem (7) for \(a=b\). Note that since \(N\ge 2\), this case belongs to the interesting regime where \(\frac{1}{N-1}\le \frac{b}{a}\le N-1\), see Proposition 1 above. Mathematically, this case is distinguishable since it gives rise to many useful and beautiful symmetric properties and cancellations, see Sect. 3. We also numerically investigate the case \(a\ne b\) and conjecture that the result also holds true.

Theorem 2

(Optimisation problems and phase transition phenomenon)

-

1.

(Phase transition phenomena and behaviour under the threshold) For \(a=b\), there exists a threshold value \(\beta ^*\) given by

$$\begin{aligned} \beta ^*=-\frac{F^*}{\delta }>0, \end{aligned}$$with

$$\begin{aligned} F^*=\min \{F(u):\quad u>1\}, \end{aligned}$$where \(F(u):=\frac{Q(u)}{uP(u)}-\log (u)\) for

$$\begin{aligned} P(u)&:=(1+u)\Bigg [\Big (\sum _{j=0}^{N-2}\Big (H_{N,a,b} + \frac{\min (\frac{j+1}{a},\frac{N-j-1}{b})}{(j+1)(N-j-1)}\Big ) u^j\Big )\Big (\sum _{j=1}^{N-1} j u^{j-1}\Big )\nonumber \nonumber \\ {}&\qquad \qquad -\Big (\sum _{j=1}^{N-2}\Big (H_{N,a,b} + \frac{\min (\frac{j+1}{a},\frac{N-j-1}{b})}{(j+1)(N-j-1)}\Big ) j u^{j-1}\Big )\Big (\sum _{j=0}^{N-1}u^j\Big )\Bigg ]\nonumber \nonumber \\&\qquad \qquad -\Big (\sum _{j=0}^{N-2}\Big (H_{N,a,b} + \frac{\min (\frac{j+1}{a},\frac{N-j-1}{b})}{(j+1)(N-j-1)}\Big ) u^j\Big )\Big (\sum _{j=0}^{N-1}u^j\Big ) \end{aligned}$$(10)and

$$\begin{aligned} Q(u)&:=(1+u)\Big (\sum _{j=0}^{N-2}\Big (H_{N,a,b} + \frac{\min (\frac{j+1}{a},\frac{N-j-1}{b})}{(j+1)(N-j-1)}\Big ) u^j\Big )\Big (\sum _{j=0}^{N-1} u^{j}\Big ), \end{aligned}$$(11)with \(u:=e^x\), such that \(\theta \mapsto E_{mix}(\theta )\) is non-decreasing for all \(\beta \le \beta ^*\) and it is non-monotonic when \(\beta >\beta ^*\). As a consequence, for \(\beta \le \beta ^*\)

$$\begin{aligned} \min \limits _{\theta \ge \theta _0}E_{mix}(\theta )=E_{mix}(\theta _0). \end{aligned}$$(12) -

2.

(Behaviour above the threshold value) For \(\beta >\beta ^*\), the number of changes of sign of \(E_{mix}'(\theta )\) is at least two for all N and there exists an \(N_0\) such that the number of changes is exactly 2 for \(N\le N_0\). As a consequence, for \(N\le N_0\), there exist \(\theta _1<\theta _2\) such that for \(\beta >\beta ^*\), \(E_{mix}(\theta )\) is increasing when \(\theta < \theta _1\), decreasing when \(\theta _1<\theta <\theta _2\), and increasing when \(\theta >\theta _2\). Thus, for \(N\le N_0\),

$$\begin{aligned} \min \limits _{\theta \ge \theta _0}E_{mix}(\theta )=\min \{E_{mix}(\theta _0),E_{mix}(\theta _2)\}. \end{aligned}$$

The proof of this Theorem is detailed in Sect. 3.

We use Algorithm 1 to find the optimal cost per capita \(\theta \), denoted by \(\theta ^*\) (represented by the red line in the figures), that minimises \(E_{mix}(\theta )\) while ensuring a minimum level of cooperation \(\omega \), where \(N=3\) for DG with \(B = 2, c = 1\). The left image illustrates the behaviour of the cost function for \(\beta = 1\), the middle one for \(\beta =5\), while the right one for \(\beta = 10\). The critical threshold value for the strength of selection is \(\beta ^* = 3.67\) and the desired level of cooperation is \(\omega =0.7\). The numerical results obtained are in accordance with Theorem 2: for \(\beta \le \beta ^*\), the cost function increases, while for \(\beta >\beta ^*\) it is not monotonic (colour figure online)

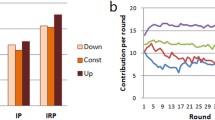

Comparison of the total costs for mixed incentives (blue), only reward (orange), and only punishment (green) as a function of the cost per capita \(\theta \), for different values of N and \(\beta \) for DG with \(B = 2, c = 1\). The first row illustrates the behaviour of the different cost functions when \(N=3\) with \(\beta = 0.01, 0.5, 1\) respectively, while the second row presents the comparison between the cost functions for \(N=10\) with \(\beta = 0.01, 0.5, 1\), respectively. As proven in Proposition 1, mixed incentives are less costly than either reward or punishment (colour figure online)

2.4 Algorithms

Based on the results above, we describe below an algorithm for the computation of the critical threshold \(\beta ^*\) of the strength selection.

Algorithm 1

(Finding optimal cost of incentive \(\theta ^\star \))

Inputs: i) \(N\le N_0\): population size, ii) \(\beta \): intensity of selection, iii) game and parameters: DG (c and B) or PGG (c, r and n), iv) \(\omega \): minimum desired cooperation level, v) a and b: reward and punishment efficiency ratios.

-

(1)

Compute \(\delta \) \(\Big \{in DG: \delta = - (c + \frac{B}{N-1})\); in PGG: \(\delta = -c \left( 1 - \frac{r(N-n)}{n(N-1)} \right) \Big \}\).

-

(2)

Compute \(\theta _0 = \frac{1}{(N-1)\beta } \log \left( \frac{\omega }{1-\omega }\right) - \delta \);

-

(3)

Compute

$$\begin{aligned} F^*=\min \{F(u): u>1\}, \end{aligned}$$where F(u) is defined in (31).

-

(4)

Compute \(\beta ^*=-\frac{F^*}{\delta }\).

-

(5)

If \(\beta \le \beta ^*\):

$$\begin{aligned} \theta ^*=\theta _0,\quad \min E_{mix}(\theta )=E_{mix}(\theta _0). \end{aligned}$$ -

(6)

Otherwise (i.e. if \(\beta >\beta ^*\))

-

(a)

Compute \(u_2\) that is the largest root of the equation \(F(u)+\beta \delta =0\).

-

(b)

Compute \(\theta _2=\frac{\log u_2}{\beta }-\delta \).

-

If \(\theta _2 \le \theta _0\): \(\theta ^*=\theta _0,\quad \min E_{mix}(\theta )=E_{mix}(\theta _0)\);

-

Otherwise (if \(\theta _2 > \theta _0\)):

-

If \(E_{mix}(\theta _0)\le E_{mix}(\theta _2)\): \(\theta ^*=\theta _0,\quad \min E_{mix}(\theta )=E_{mix}(\theta _0)\);

-

if \(E(\theta _2)< E(\theta _0)\): \(\theta ^*=\theta _2,\quad \min E_{mix}(\theta )=E_{mix}(\theta _2)\).

-

-

-

(a)

Output: \(\theta ^*\) and \(E_{mix}(\theta ^*)\).

3 The expected cost function, phase transition, and the minimisation problem

In this section, we study in detail the cost function, establishing the phase transition phenomena and solving the minimisation problem 7 of finding the optimal incentive, thus proving Theorem 2.

We consider the case

and focus on the most important case when \(a=b\). This is due to Proposition 1, when \(\frac{b}{a}\) is not in this interval, the mixed incentive problem reduces to either the reward or the punishment problem that has been studied in Duong and Han (2021).

3.1 The cost function and its derivative

In this section, we provide the explicit computation for the cost function and its derivative. The class of cooperation dilemmas, namely DG and PGG, introduced in Sect. 2 are of crucial importance in the analysis of this paper. This is because, as already mentioned, the difference in payoffs between a cooperator and a defector, \(\delta =\Pi _C(i)-\Pi _D(i)\), does not depend on the state i, which gives rise to explicit and analytically tractable formulas for the entries of the fundamental matrix \({\mathcal {N}}\) of the absorbing Markov chain describing the population dynamics. These entries are given in the following lemma, whose detailed proof can be found in Duong and Han (2021).

Lemma 1

The entries \(n_{1,i}\) and \(n_{N-1,i}\), \(i=1,\ldots , N-1\), of the fundamental matrix \({\mathcal {N}}\) (see its definition underneath (1)) are given by

where \(x=x(\theta ):=\beta (\theta +\delta )\).

Using Lemma 1, we obtain a more explicit formula for the cost function as follows:

To study the monotonicity of the cost function, in the following lemma, we compute the derivative of \(E_{mix}(\theta )\) with respect to \(\theta \).

Lemma 2

The total cost of interference for the mixed institutional incentive is given by

Its derivative is given by

where \(P(u)=f(x)g'(x)-f'(x)g(x)\) and \(Q(u)=f(x)g(x)\) for \(f(x)=(1+u)\sum _{j=0}^{N-2} u^j\Big (H_{N,a,b} + \frac{\min (\frac{j+1}{a},\frac{N-j-1}{b})}{(j+1)(N-j-1)}\Big )\) and \(g(x)=\sum _{j=0}^{N-1} u^j\) with \(u=e^x\).

See Sect. 6.3 for a proof of this lemma.

3.2 The polynomial P

This section contains details about

The following proposition studies the properties of P.

Proposition 2

Let P(u) be the polynomial defined in (29). Then it is a polynomial of degree \(2N-4\),

where the leading coefficient \(p_{2N-4}\) is positive. When \(a=b\), the coefficients of P are anti-symmetric, that is we have

As a consequence, when \(a=b\), P has exactly one positive root, which is equal to 1.

The proof of this proposition is lengthy and delicate. The case \(a=b\) is special since it gives rise to many useful symmetric properties and nice cancellations. To focus on the main points here, we postpone the proof to the Appendix, see Sect. 6.4.

3.3 The derivative of F

In this section, we study the derivative of the function F defined in (31). The analysis of this section will play an important role in the study of the phase transition of the cost function in the next section.

We have

where

The sign of \(F'(u)\) (thus, the monotonicity of F) is the same as that of the polynomial M. The next proposition presents some properties of M.

Proposition 3

The following statements hold

-

1.

M is a polynomial of degree \(4N-6\),

$$\begin{aligned} M(u)=\sum _{i=0}^{4N-6} m_i u^{i}, \end{aligned}$$where the leading coefficient is

$$\begin{aligned} m_{4N-6}=a_{N-2}a_{N-3}>0. \end{aligned}$$ -

2.

When \(a=b\), the coefficients of M are symmetric, that is for all \(i=0,\ldots , 4N-6\)

$$\begin{aligned} m_{i}=m_{4N-6-i}. \end{aligned}$$ -

3.

M has at least two positive roots, one is less than 1 and the other is bigger than 1. For sufficiently small N, namely \(N\le N_0\), M has exactly two positive roots, \(u_1\) and \(u_2\), where \(u_1<1<u_2\). As a consequence, for \(1<u<u_2\), \(F'(u)<0\), thus F is decreasing. While for \(u_2<u\), \(F'(u)>0\), thus F is increasing.

The proof of this proposition is presented in Sect. 6.5. We conjecture that the sequence of M has exactly two changes of signs, and thus M has exactly two positive roots.

3.4 The phase transition and the minimisation problem

In this section, we study the phase transition problem, which describes the change in the behaviour of the cost function when varying the strength of selection \(\beta \), and the optimal incentive problem, thus proving Theorem 2. We focus on the case \(a=b\).

Proof of Theorem 2

It follows from Proposition 2 that for \(0<u\), \(P(u)>0\) if and only if \(u>1\).

Thus according to the argument at the end of Sect. 3.1, if \(u\le 1\) then \(E'_{mix}>0\) (thus \(E_{mix}\) is increasing); and for \(u> 1\) we have (see (32))

where the function F is (see (31))

Since Q(u) is a polynomial of degree \(2N-2\) and uP(u) is a polynomial of degree \(2N-3\) and their leading coefficients are both positive,

This, together with the fact that F is smooth on \((1,+\infty )\), we deduce that there exists a global minimum of F in the interval \((1,+\infty )\)

Let

Then it follows from the above formula of \(E'_{mix}(\theta )\) that for \(\beta \le \beta ^*\), \(E_{mix}'(\theta )\ge 0\). Thus for \(\beta \le \beta ^*\), \(E_{mix}(\theta )\) is always increasing. For \(\beta >\beta ^*\), the sign of \(E_{mix}'(\theta )\) depends on the sign of the term \(F(u)+\beta \delta \). For arbitrary N, the equation \(F(u)=-\beta \delta \) has at least two roots, thus the sign of the term \(F(u)+\beta \delta \) changes at least twice, therefore E is not monotonic. In particular, for \(N\le N_0\), since F is decreasing in \((1,u_2)\) and increasing in \((u_2,+\infty )\), where \(F^*=F(u_2)<-\beta \delta \), the equation \(F(u)=-\beta \delta \) has two roots \(1<\bar{u_1}<u_2<{\bar{u}}_2\), and \(F(u)+\beta \delta <0\) when \({\bar{u}}_1< u< {\bar{u}}_2\) while \(F(u)+\beta \delta \ge 0\) when \(u\in (1,{\bar{u}}_1]\cup [{\bar{u}}_2,+\infty )\), see Fig. 3 for an illustration.

Behaviour of F and determination of the critical threshold \(\beta ^*\). For sufficiently small N, i.e. \(N\le N_0\), since F is decreasing in \((1,u_2)\) and increasing in \((u_2,+\infty )\), where \(F^*=F(u_2)<-\beta \delta \), the equation \(F(u)=-\beta \delta \) has two roots \(1<\bar{u_1}<u_2<{\bar{u}}_2\), and \(F(u)+\beta \delta <0\) when \({\bar{u}}_1< u< {\bar{u}}_2\) while \(F(u)+\beta \delta \ge 0\) when \(u\in (1,{\bar{u}}_1]\cup [{\bar{u}}_2,+\infty )\)

Hence \(E_{mix}'(\theta )<0\) when \({\bar{u}}_1< u< {\bar{u}}_2\) while \(E'_{mix}(\theta )\ge 0\) when \(u\in (1,{\bar{u}}_1]\cup [{\bar{u}}_2,+\infty )\). Thus \(E_{mix}(\theta )\) is increasing in \((1,{\bar{u}}_1)\), decreasing in \(({\bar{u}}_1,{\bar{u}}_2)\), and increasing again in \(({\bar{u}}_2,+\infty )\). In term of the variable \(\theta \), \(E_{mix}(\theta )\) is increasing in \((-\delta ,\theta _1)\), decreasing in \((\theta _1,\theta _2)\), and increasing again in \((\theta _2,+\infty )\), where

As a consequence, for \(N\le N_0\),

This completes the proof of this theorem. \(\square \)

4 Asymptotic behaviour of the expected cost function

In this section, we study the asymptotic behaviour (neutral drift, strong selection, and infinite population limits) of the cost function, proving the main results in Theorem 1. To this end, we will first need some auxiliary technical lemmas.

4.1 Some auxiliary lemmas

The first auxiliary lemma is an elementary inequality which will be used to estimate the cost function. Its proof can be found in Duong and Han (2021).

Lemma 3

For all \(x\in {\mathbb {R}}\), we have

In the next lemma, we provide lower and upper bounds for the number \(H_{N,a,b}\) defined in (8). As mentioned in the introduction, this number plays a similar role as the harmonic number \(H_n\) in Duong and Han (2021). However, unlike the harmonic number, we will show that \(H_{N,a,b}\) is always bounded and has a finite limit as \(N\rightarrow +\infty \).

Lemma 4

It holds that

The proof of this lemma is given in Sect. 6.6.

The following proposition characterises the asymptotic limit of \(H_{N,a,b}\) as \(N\rightarrow +\infty \), which will be used later to obtain asymptotic limits of the cost function.

Proposition 4

It holds that

Proof

We recall that \(H_{N,a,b}=\sum \limits _{j=1}^{N-1}\frac{1}{j(N-j)}\min (\frac{j}{a},\frac{N-j}{b})\). As in the proof of the above lemma, we will link \(H_{N,a,b}\) to the harmonic number \(H_N\) by splitting the sum at an appropriate point, which allows us to determine the minimum between j/a and \((N-j)/a\) explicitly, given by

We have

Let \({\hat{j}}=N-j\). Thus:

Note that \(N-N_{a,b}=N(1-\frac{a}{a+b})=N\frac{b}{a+b}\) and \(N_{a,b}=N\frac{a}{a+b}.\) Thus both \(N_{a,b}\) and \(N-N_{a,b}\) go to \(+\infty \) as \(N\rightarrow +\infty \). Then it follows from the following well-known asymptotic behaviour of the harmonic number (see (52))

we obtain the following asymptotic behaviour of \(H_{N,a,b}\)

This completes the proof of the proposition. \(\square \)

The following lemma provides lower and upper bounds for the cost function, which show that it grows quadratically with respect to the population size.

Lemma 5

It holds that

where

Proof

Let \(H_{N,a,b}=\sum \limits _{j=1}^{N-1}\frac{1}{j(N-j)}\min (\frac{j}{a},\frac{N-j}{b})\). We have

Using Lemma 3, we get

Let \(m=\min \limits _{i}\frac{\min (\frac{i+1}{a},\frac{N-i-1}{b})}{(i+1)(N-i-1)}\) and \(M=\max \limits _{i}\frac{\min (\frac{i+1}{a},\frac{N-i-1}{b})}{(i+1)(N-i-1)}\). Since

we obtain the following estimates

Thus for \(\theta >0\) we have

which completes the proof of the lemma. \(\square \)

We can further estimate m and M in (16) from below and above respectively in terms of the population size N as follows.

Lemma 6

For m and M defined in (16), it holds that

As a consequence, we have

The proof of this lemma is given in Sect. 6.7.

4.2 Neutral drift limit

In this section, we study the neutral drift limit of the cost function, proving the second part of Theorem 1.

Proposition 5

(neutral drift limit) It holds that

It is worth noting that the neutral drift limit of the cost function depends on the population size N, the reward and punishment efficiency ratios a and b through the number \(H_{N,a,b}\), but does not depend on the underlying game.

Proof

We recall that \(x=\beta (\theta +\delta )\). Since \(\beta \rightarrow 0\) implies \(x\rightarrow 0\), it follows from the formula of \(E_{mix}(\theta )\) that

\(\square \)

4.3 Strong selection limits

In this section, we study the strong selection limits of the cost function, proving the third statement of Theorem 1.

Proposition 6

(strong selection limits)

Similarly to the neutral drift limit, the strong selection limit of the cost function depends on the population size N, the reward and punishment efficiency ratios a and b, but does not depend on the underlying game.

Proof

To establish the strong selection limit \(\lim \limits _{\beta \rightarrow +\infty } E_{mix}(\theta )\), we rewrite \(E_{mix}(\theta )\) in a more convenient form as follows:

where \(h_j=\frac{\min (\frac{j+1}{a}, \frac{N-j-1}{b})}{(j+1)(N-j-1)}\).

Now, note that:

where

By putting all of the above together, we obtain that:

Recall that \(x=\beta (\theta +\delta )\) with \(\delta \) being the difference of the average payoffs between a cooperator and a defector. We study 3 cases:

-

1.

If \(\theta <-\delta \), then \(j(\theta +\delta )<0\), so \(e^{jx}=\Big [e^{j(\theta +\delta )}\Big ]^{\beta }\) for all \(1\le j \le N-1\). Thus, \(\lim \limits _{\beta \rightarrow +\infty } e^{jx}=\lim \limits _{\beta \rightarrow +\infty } \Big [e^{j(\theta +\delta )}\Big ] = 0\). Therefore,

$$\begin{aligned} \lim \limits _{\beta \rightarrow +\infty }E_{mix}(\theta )&=\frac{N^2\theta }{2}\frac{1}{(1+0)}\eta _0 \\ {}&=\frac{N^2\theta }{2}\bigg (H_{N,a,b}+\frac{1}{a(N-1)}\bigg ). \end{aligned}$$ -

2.

If \(\theta =-\delta \), then \(x=0\). So \(E_{mix}(\theta )=E_{mix}(-\delta )=-\frac{N^2\delta }{2N}\sum \limits _{j=0}^{N-1} \eta _j\). We have

$$\begin{aligned} \sum \limits _{j=0}^{N-1} \eta _j&=\eta _0 + \sum _{j=1}^{N-2}\eta _j+\eta _{N-1} \\ {}&=H_{N,a,b}+\frac{1}{a(N-1)}+H_{N,a,b}+\frac{1}{b(N-1)}\\ {}&\quad +\sum _{j=1}^{N-2}\Big (2 H_{N,a,b}+h_j+h_{j-1}\Big ) \\ {}&=2(N-1) H_{N,a,b}+\frac{1}{a(N-1)}+\frac{1}{b(N-1)}\\ {}&\quad +H_{N,a,b}-h_1+H_{N,a,b}-h_{N-2} \\ {}&= 2N H_{N,a,b}+\frac{1}{a(N-1)}+\frac{1}{b(N-1)}\\&\quad -\frac{\min (2/a, (N-2)/b)}{2(N-2)}-\frac{\min ((N-1)/a,1/b)}{(N-1)}. \end{aligned}$$Therefore,

$$\begin{aligned} E_{mix}(-\delta )= & {} -\frac{N \delta }{2}\Big [2N H_{N,a,b}+\frac{1}{a(N-1)}+\frac{1}{b(N-1)}\\{} & {} -\frac{\min (2/a, (N-2)/b)}{2(N-2)}-\frac{\min ((N-1)/a,1/b)}{(N-1)}\Big ]. \end{aligned}$$ -

3.

If \(\theta >-\delta \), then we obtain

$$\begin{aligned} \lim \limits _{\beta \rightarrow +\infty } E_{mix}(\theta )&= \frac{N^2\theta }{2} \lim \nolimits _{\beta \rightarrow +\infty } \frac{ \sum \nolimits _{j=0}^{N-1} \eta _j e^{jx}}{\sum \nolimits _{j=0}^{N-1} e^{jx}} \\&=\frac{N^2\theta }{2}\eta _{N-1} \\&=\frac{N^2\theta }{2}\bigg [H_{N,a,b}+\frac{1}{b(N-1)}\bigg ], \end{aligned}$$since

$$\begin{aligned} \lim \limits _{\beta \rightarrow +\infty } \frac{ \sum \nolimits _{j=0}^{N-1} \eta _j e^{jx}}{\sum \nolimits _{j=0}^{N-1} e^{jx}}&=\frac{e^{(N-1)x}\sum \nolimits _{j=0}^{N-1} e^{(j-(N-1))x}}{e^{(N-1)x}\sum \nolimits _{j=0}^{N-1} e^{(j-(N-1))x}} \\&=\frac{\sum \nolimits _{j=0}^{N-1} \eta _j e^{(j-(N-1))x}}{\sum \nolimits _{j=0}^{N-1} e^{(j-(N-1))x}}, \end{aligned}$$so \(e^{(j-(N-1))x}=e^{(j-(N-1))\beta (\theta +\delta )}=\bigg [ e^{(j-(N-1))(\theta +\delta )}\bigg ]^{\beta }\) for all \(0\le j \le N-2\). Thus \(\lim \nolimits _{\beta \rightarrow +\infty } e^{(j-(N-1))x}=0\) for all \(0\le j \le N-2\).\(\square \)

Neutral drift and strong selection limits. We calculate numerically the expected total cost for the mixed incentive, varying the intensity of selection \(\beta \). The dashed red lines represent the corresponding theoretical limiting values obtained in Proposition 5 for the neutral drift limit, while the dashed green lines represent the corresponding theoretical limiting values obtained in Proposition 6 for the strong selection limit. We observe that numerical results are in close accordance with those obtained theoretically. Results are obtained for DG with \(N = 2500, B = 2, c = 1\) (left) and for PGG with \(N = 2500, c = 1, r = 3, n = 5\) (right) (colour figure online)

4.4 Infinite population limits

In this section, we establish the infinite population limits of the cost function, proving the fourth statement of Theorem 1.

Proposition 7

(infinite population limits) We have

Unlike the neutral drift and strong selection limits, the infinite population limit of the cost function strongly depends on the underlying game. The strength of selection, \(\beta \), is fixed. See Fig. 5 below for an illustration of these limits.

Proof

Using

we can estimate

where \({\underline{h}}=\min h_j\) and \({\overline{h}}=\max h_j\).

We recall that \(x=\beta (\theta +\delta )\), where

Let

Then we have

Since for fixed \(\theta \), x equals to 0 for only one value of N, we can consider \(x\ne 0\) when we study the limit \(N\rightarrow +\infty \). We have

In addition, for DG, we have

While for PGG, we have

If \(\theta -c(1-\frac{r}{n}) \le 0\), then

Substituting these above limits in (22), it follows that, if \(\theta -c(1-\frac{r}{n}) \le 0\), we have

If \(\theta -c(1-\frac{r}{n})>0\), then we have

We have

From the above limits, (22) and (23) we obtain

Hence for PGG

From (19), (20), and (21), we obtain, for DG

and similarly, from (19), (20), and (24), we get, for PGG

Now we study the limit of \(\frac{H_{N,a,b}+{\underline{h}}}{H_{a,b}}\) and of \(\frac{H_{N,a,b}+{\overline{h}}}{H_{a,b}}\) as \(N\rightarrow +\infty \).

For \(i=1,\ldots , N_{a,b}\), \(h_i=\frac{1}{a(N-i)}< \frac{1}{a(N-N_{a,b})}\) as \(N-i\ge N-N_{a,b}\). Since \(\lim \limits _{N\rightarrow +\infty }\frac{1}{a(N-N_{a,b})}=0\), it follows that \(\lim \limits _{N\rightarrow +\infty } h_i=0\).

For \(i=N_{a,b}+1,\ldots , N-2\), \(h_i=\frac{1}{b(i+1)}< \frac{1}{b(N_{a,b}+1)}\) as \(i\ge N_{a,b}+1\). Since \(\lim \limits _{N\rightarrow +\infty }\frac{1}{b(N_{a,b}+2)}=0\), it follows that \(\lim \limits _{N\rightarrow +\infty } h_i=0\).

As \(N_{a,b}=\lfloor \frac{N}{\frac{b}{a}+1} \rfloor \) \(\rightarrow +\infty \) for \(N\rightarrow +\infty \), we deduce that \(h_j \rightarrow 0\) as \(N\rightarrow +\infty \) for any j,

Therefore, from (18), (25), and (27) we obtain, for DG:

and from (18), (26) and (27), for PGG:

\(\square \)

Large population size limit. We calculate numerically the expected total cost for the mixed incentive, varying the population size N. The dashed orange lines represent the corresponding theoretical limiting values obtained in Proposition 7 for the large population size limit, \(N\rightarrow +\infty \). We observe that numerical results are in close accordance with those obtained theoretically. Results are obtained for DG with \(N = 2500, B = 2, c = 1, \beta = 1, \theta = 1\) (left) and for PGG with \(N = 2500, c = 1, r = 3, n = 5, \beta = 1, \theta = 1\) (right) (colour figure online)

5 Discussion

The use of institutional incentives such as reward and punishment is an effective tool for the promotion of cooperation in social dilemmas, as proven both by theoretical (Hauert et al. 2007; Sasaki et al. 2015; Sigmund et al. 2010; Han 2022; Duong and Han 2021) and experimental results (Ostrom 2005; Van Lange et al. 2014; Jia-Jia et al. 2014). In past works, although mixed incentives were used, the aspect of minimisation of the cost function while ensuring a minimum level of cooperation was overlooked. Moreover, existing works that consider this question usually omit the stochastic effects that drive population dynamics, namely when the strength of selection, \(\beta \), varies.

In this work, we used a stochastic evolutionary game theoretic approach for finite, well-mixed populations and obtained theoretical results for the optimal cost of mixed incentives that ensure a desired level of cooperation, for a given intensity of selection, \(\beta \). We show that this cost strongly depends on the value of \(\beta \) due to the existence of a phase transition in the cost function for providing mixed incentives. This behaviour is missing in works that consider a deterministic evolutionary approach (Wang et al. 2019). We also characterised asymptotic behaviours of the cost function and showed that mixed incentives are always cost efficient than either using only reward or only punishment. In particular, we successfully obtained an infinite population limit, as well as those for neutral drift and strong selection.

For the mathematical analysis of the mixed incentive cost function to be possible, we made some assumptions. Firstly, in order to derive the analytical formula for the frequency of cooperation, we assumed a small mutation limit (Rockenbach and Milinski 2007; Nowak et al. 2004; Sigmund 2010). Despite the simplified assumption, this small mutation limit approach has wide applicability to scenarios which go well beyond the strict limit of very small mutation rates (Zisis et al. 2015; Hauert et al. 2007; Sigmund et al. 2010; Rand et al. 2013). If we were to relax this assumption, the derivation of a closed form for the frequency of cooperation would be intractable. Secondly, we focused on two important cooperation dilemmas, the Donation Game and the Public Goods Game. Both have in common that the difference in average payoffs between a cooperator and a defector does not depend on the population composition. This special property allowed us to simplify the fundamental matrix of the Markov chain to a tridiagonal form and apply the techniques of matrix analysis to obtain a closed form of its inverse matrix. In games with more complex payoff matrices such as the general prisoners’ dilemma and the collective risk game (Sun et al. 2021), this property no longer holds (e.g. in the former case the payoff difference, \(\Pi _C(i)-\Pi _D(i)\), depends additively on i) and the technique in this paper cannot be directly applied. In these scenarios, we might consider other approaches to approximate the inverse matrix, exploiting its block structure.

More recent works looked at the effect of indirect exclusion on cooperation, having found that the introduction of indirect exclusion could induce the stable coexistence of cooperators and defectors or the dominance of cooperators, successfully promoting cooperation (Liu and Chen 2022). Looking at prior agreement before an interaction takes place also showed that cooperation would be increased. Thus, individuals choose whether to take part in a social dilemma and those who do are rewarded (Ogbo et al. 2022; Han 2022). Our future work will consider cost-efficiency in these different form of institutional incentives, including the problem of providing incentives whenever the frequency or number of cooperators (or defectors) in the population does not exceed a given threshold.

We furthermore aim to investigate the optimisation problems of incentives such as reward, punishment, and exclusion in complex networks. There has been little attention to providing analytical results for cost-efficient incentives in structured populations or in more complex games, so this would also be an interesting research avenue. Finally, since time is most precious, we intent to explore the time that the system needs in order to achieve a desired level of cooperation.

Data Availability Statement

Data sharing is not applicable to this article as no datasets were generated or analysed during the current study.

References

Bijan B, Tom C, D’Orsogna Maria R (2014) Recidivism and rehabilitation of criminal offenders: a carrot and stick evolutionary game. PLoS One 9(1):e85531

Chen X, Perc M (2014) Optimal distribution of incentives for public cooperation in heterogeneous interaction environments. Front Behav Neurosci 8:248

Cimpeanu T, Han TA, Santos FC (2019) Exogenous rewards for promoting cooperation in scale-free networks. In: ALIFE 2019, pp 316–323. MIT Press

Cimpeanu T, Perret C, Han TA (2021) Cost-efficient interventions for promoting fairness in the ultimatum game. Knowl Based Syst 233:107545

Cimpeanu T, Santos FC, Han TA (2023) Does spending more always ensure higher cooperation? an analysis of institutional incentives on heterogeneous networks. dynamic games and applications, pp 1–20

Hong DM, Anh HT (2021) Cost efficiency of institutional incentives for promoting cooperation in finite populations. Procee R Soc A 477(2254):20210568

Góis AR, Santos FP, Pacheco JM, Santos FC (2019) Reward and punishment in climate change dilemmas. Sci Rep 9(1):1–9

Özgür G, Bernd I, Bettina R (2006) The competitive advantage of sanctioning institutions. Science (New York, N.Y.) 312:108–11,05

Han TA (2013) The emergence of commitments and cooperation. Intention Recognition, Commitment and Their Roles in the Evolution of Cooperation: From Artificial Intelligence Techniques to Evolutionary Game Theory Models, pp 109–121

Han TA (2022) Institutional incentives for the evolution of committed cooperation: ensuring participation is as important as enhancing compliance. J R Soc Interface 19(188):20220036

Han TA, Lynch S, Tran-Thanh L, Santos FC (2018) Fostering cooperation in structured populations through local and global interference strategies. In: proceedings of the 27th international joint conference on artificial intelligence, pp 289–295

Han TA, Tran-Thanh L (2018) Cost-effective external interference for promoting the evolution of cooperation. Sci Rep 8(1):1–9

Hauert C, Traulsen A, Brandt H, Nowak MA, Sigmund K (2007) Via freedom to coercion: the emergence of costly punishment. Science 316(5833):1905–1907

Hilbe C, Sigmund K (2010) Incentives and opportunism: from the carrot to the stick. Procee R Soc B Biol Sci 277(1693):2427–2433

Kemeny J (1976) Perspectives on the micro-macro distinction. Sociol Rev 24(4):731–752

Linjie L, Xiaojie C (2022) Indirect exclusion can promote cooperation in repeated group interactions. Procee R Soc A Math Phys Eng Sci 478(2263):20220290

Linjie L, Shengxian W, Xiaojie C, Matjaž P (2018) Evolutionary dynamics in the public goods games with switching between punishment and exclusion. Chaos Interdiscip J Nonlinear Sci 28(10):103105

Novak Martin A (2006) Evolutionary Dynamics: Exploring the Equations of Life. Harvard University Press, Cambridge

Nowak MA (2006) Five rules for the evolution of cooperation. Science 314(5805):1560–1563

Nowak MA, Highfield R, et al (2011) Supercooperators. Canongate Edinburgh

Nowak MA, Sasaki A, Taylor C, Fudenberg D (2004) Emergence of cooperation and evolutionary stability in finite populations. Nature 428(6983):646–650

Ogbo Ndidi B, Aiman E, Anh HT (2022) Evolution of coordination in pairwise and multi-player interactions via prior commitments. Adapt Behav 30(3):257–277

Elinor O (2005) Understanding institutional diversity. Princeton University Press, Princeton

Perc M, Jordan JJ, Rand DG, Wang Z, Boccaletti S, Szolnoki A (2017) Statistical physics of human cooperation. Phys Rep 687:1–51

Rand DG, Nowak MA (2013) Human cooperation. Trends Cogn Sci 17(8):413–425

Rand David G, Tarnita Corina E, Hisashi O, Nowak Martin A (2013) Evolution of fairness in the one-shot anonymous ultimatum game. Procee Nat Acad Sci 110(7):2581–2586

Bettina R, Manfred M (2007) The efficient interaction of indirect reciprocity and costly punishment. Nature 444:718–23,01

Santos F, Pacheco Jorge M (2011) Risk of collective failure provides an escape from the tragedy of the commons. Procee Nat Acad Sci 108(26):10421–10425

Sasaki T, Brännström Å, Dieckmann U, Sigmund K (2012) The take-it-or-leave-it option allows small penalties to overcome social dilemmas. Procee Nat Acad Sci 109(4):1165–1169

Tatsuya S, Xiaojie C, Åke B, Ulf D (2015) First carrot, then stick: how the adaptive hybridization of incentives promotes cooperation. J R Soc Interface 12:20140935,01

Karl S (2010) The calculus of selfishness. The Calculus of Selfishness. Princeton University Press, Princeton

Sigmund K, De Silva H, Traulsen A, Hauert C (2010) Social learning promotes institutions for governing the commons. Nature 466:7308

Weiwei S, Linjie L, Xiaojie C, Attila S, Vasconcelos Vítor V (2021) Combination of institutional incentives for cooperative governance of risky commons. Iscience 24(8):102844

Attila S, Perc M (2017) Second-order free-riding on antisocial punishment restores the effectiveness of prosocial punishment. Phys Rev X 7:041027

Szolnoki A, Perc M (2013) Correlation of positive and negative reciprocity fails to confer an evolutionary advantage: phase transitions to elementary strategies. Phys Rev X 3:041021

Traulsen A, Nowak MA (2006) Evolution of cooperation by multilevel selection. Procee Nat Acad Sci 103(29):10952–10955

Van Lange Paul AM, Bettina R, Toshio Y (2014) Reward and punishment in social dilemmas. Oxford University Press, Oxford

Van Segbroeck S, Pacheco JM, Lenaerts T, Santos FC (2012) Emergence of fairness in repeated group interactions. Phys Rev Lett 108(15):158104

Wang S, Chen X, Szolnoki A (2019) Exploring optimal institutional incentives for public cooperation. Commun Nonlinear Sci Numer Simul 79:104914

Wang S, Liu L, Chen X (2021) Incentive strategies for the evolution of cooperation: analysis and optimization. Europhysics Lett 136(6):68002

Jia-Jia W, Li C, Zhang B-Y, Cressman R, Tao Y (2014) The role of institutional incentives and the exemplar in promoting cooperation. Sci Rep 4(1):1–6

Zhang M, Zhang X, Qu C, Wang G, Lu X (2022) The combination of social reward and punishment is conducive to the cooperation and heterogeneity of social relations. Chaos Interdiscip J Nonlinear Sci 32(10):103104

Zisis I, Di Guida S, Han TA, Kirchsteiger G, Lenaerts T (2015) Generosity motivated by acceptance-evolutionary analysis of an anticipation game. Sci Rep 5(1):1–11

Acknowledgements

The research of MHD was supported by EPSRC Grants EP/W008041/1 and EP/V038516/1. We would like to thank the anonymous referees for their instructive comments and suggestions, which were very helpful for us to improve the manuscript.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

In this appendix, we provide explicit calculations for some small population cases, as well as detailed proofs and computations of technical results in the previous sections.

1.1 Small population examples

1.1.1 \(N=3\)

The cost function is

The function F is

The critical threshold is

1.1.2 \(N=4\)

The cost function is

The function F is

The critical threshold is

1.1.3 \(N=5\)

The cost function is

The function F is

The critical threshold is

1.2 Proof of Proposition 1

In this section, we prove Proposition 1.

Proof

We recall that:

and

The first statement follows directly from these formulae, the fact that \(n_{1,j}, n_{N-1,j}>0\), and the elementary inequalities

Now we prove the second statement. The third one is similar. Suppose that \(\frac{b}{a}\le \frac{1}{N-1}\). We have

which implies that

Thus, \(\min \Big (\frac{j}{a},\frac{N-j}{b}\Big )=\frac{j}{a}\) for all \(j=1,2,\ldots ,N-1\), where N is the number of individuals in the population. Therefore,

\(\square \)

1.3 Proof of Lemma 2

In this section, we give the proof of Lemma 2.

Proof

Since \(x=\beta (\theta +\delta )\), we have

Let \(u:=e^x\). From the formula of \(f(x)=(1+e^x)\Big [\big (1+e^x+\ldots +e^{(N-2)x}\big )H_{N,a,b} + \sum _{j=1}^{N-1}\frac{e^{(j-1) x}}{j(N-j)}\min \Big (\frac{j}{a}, \frac{N-j}{b}\Big )\Big ]\) and \(g(x)=1+e^x+\ldots +e^{(N-1)x}\), it is more convenient to express the right-hand side of (28) in terms of u. We have

Therefore

where we define

We also have

Note that \(x-\beta \delta =\beta \theta >0\) and\(f(x)g(x)>0\). It follows that if \(f(x)g'(x)-f'(x)g(x)\le 0\) then \(E_{mix}'(\theta )>0\). Therefore, we only need to consider the case \(f(x)g'(x)-f'(x)g(x)=u P(u)> 0\) (i.e., when \(P(u)>0\)). Let \(u=e^x\) and define

Then

The next step is to understand the sign of the term \(F(u)+\beta \delta \), which is required to understand the polynomials P and Q. In the next section, we analyse the polynomial P (Q is explicit and is much simpler). \(\square \)

1.4 Proof of Proposition 2

In this section, we provide a proof of Proposition 2. We delicately analyse the coefficients of P and employ the discrete integration by parts techniques from numerical analysis.

Proof

Let \(m_j=\frac{\min (\frac{j+1}{a},\frac{N-j-1}{b})}{(j+1)(N-j-1)}\) and let \(a_j:=H_{N,a,b}+m_j\) for \(j=0,\ldots , N-2\). Suppose that \(P(u)=\sum _{j=0}^{2N-4}p_j u^j\). Using the following formula of product of two polynomials:

we have

Hence

where for \(k=0,\ldots , 2N-4\)

Therefore, we obtain

that is for \(k=0,\ldots , 2N-3\)

In particular,

Hence, in particular, if \(a=b\) then

Now we will show that when \(a=b\), \(p_{N-2}=0\) and \(p_{k}+p_{2N-4-k}=0\) for all \(1\le k\le N-3\).

For \(1\le k\le N-3\):

In addition,

Therefore, for \(1\le k\le N-3\), we have

For \(k=N-2\), we have

It follows from the above computations that

Hence

We have

Hence

Therefore, recalling that

Hence,

If \(a=b\), then

hence, in this case, we have \(p_{N-2}=0\).

For \(N-1\le k\le 2N-5\):

Similarly,

Hence, for \(N-1\le k\le 2N-5\)

For \(N-1\le k\le 2N-5\), let \(k:=2N-4-{\hat{k}}\), then \(1\le {\hat{k}}\le N-3\) and

Now we will show that \(p_{2N-4-{\hat{k}}}+p_{{\hat{k}}}=0\), for all \(1\le {\hat{k}}\le N-3\). We have

To proceed further, we need to simplify the two sums

using discrete integration by parts techniques.

For the first sum in \(S_1\), by changing of index j to \({\hat{k}}-j+1\), we get

Similarly, by changing of index j to \({\hat{k}}-j\) we can rewrite the second sum in \(S_1\) as

Hence, we have

By proceeding similarly, we can transform \(S_2\) as

Substituting (38) and (39) back into (37) we have

It follows immediately from the formula of \(m_j\) that when \(a=b\).

and \(m_{j}=m_{N-2-j}\) for \(j=0,\ldots , N-2\).

Therefore, we have

So we have

where in the last equality, we have used the fact that \(m_{N-{\hat{k}}-3}=m_{{\hat{k}}+1}\).

Next we show that \(p_k<0\) for \(k=1,\ldots , N-3\). Substituting (38) back into (36) we get for \(k=1,\ldots , N-3\)

In the above expression, only the first term is positive, the other ones are negative. We will show that the first term is dominated by the last term. We recall that \(H_{N,a,b}=\sum _{j=0}^{N-2}m_j\) and for \(a=b\), then \(m_j=m_{N-2-j}\). Hence

Substituting this back to (40) we obtain

Since \(k\le N-3\) we have

Therefore, \(p_k<0\) for all \(k=0,\ldots , N-3\). In conclusion, we have for \(0\le k\le N-2\)

As a consequence, when \(a=b\), the sequence of coefficients of P has exactly one change of sign. Thus according to Descartes’ rule of sign, P has exactly one positive root. Because \(p_k=-p_{2N-4-k}\) for all \(k=0,\ldots , N-2\), the root is equal to 1. This finishes the proof of this proposition. \(\square \)

1.5 Proof of Proposition 3

In this section, we provide a detailed proof of Proposition 3.

Proof

Recall that

where

Thus Q is a polynomial of degree \(2N-2\) and the leading coefficient is \(q_{2N-2}=a_{N-2}\), which is

Hence \(Q'(u)\) is a polynomial of degree \(2N-3\) whose leading coefficient is \((2N-2)a_{N-2}\).

According to Proposition 2P is a polynomial of degree \(2N-4\) whose leading coefficient is

Hence \(P'\) is a polynomial of degree \(2N-5\) whose leading coefficient is \((2N-4)a_{N-3}\).

It follows that M is a polynomial of degree

with the leading coefficient

We write

Next we prove that the coefficients of M are symmetric, that is \(m_{i}=m_{4N-6-i}\) for all \(i=0,\ldots , 4N-6\). In fact, we observe that

Thus to prove that the coefficients of M are symmetric is equivalent to showing that

We now prove this equality. We have

where we have used the fact that \(a_j=a_{N-2-j}\) for all \(j=0,\ldots , N-2\). It follows that

Therefore

We now do similar computations for P. We have

where we have used the fact that \(p_{2N-4-i}=-p_i\) for all \(i=0,\ldots , 2N-4\). It also follows that

Thus

Therefore, from (43) to (48) we have

Hence

Using (43) and (46), we can simplify further the last line in the above expression as follows

Substituting this back into (49) we obtain

which is the desired equality (41). Hence the coefficients of M are symmetric.

Since \(m_0=m_{4N-6}>0\), it implies that

Next we show that M has at least to positive root by showing that \(M(1)<0\).

From (13), since \(P(1)=0\) we have

Clearly \(Q(1)= 2N \sum _{j=0}^{N-2}a_j >0\). Since \(P(u) = \sum _{k=0}^{2N-4} p_k u^k\), we have \(P'(u) = \sum _{k=0}^{2N-4} k p_k u^{k-1}\). Hence \(P'(1)=\sum _{k=0}^{2N-4} k p_k\). According to Proposition 2\(p_k=-p_{2N-4-k}\) for \(k=0,\ldots , N-3\) and \(p_{N-2}=0\). Thus we can rewrite \(P'(1)\) as follows.

Note that in the above computations, to go from the second equality to the third one, we have used a change of variable \({\hat{k}}= 2N-4-k\). Since \(p_{2N-4-k}>0\) for all \(k=0,\ldots , N-3\), \(P'(1)>0\) for all \(N\ge 3\). Thus, as \(M(1)=-Q(1)P'(1)\) and \(Q(1)>0, P'(1)>0\), we obtain that \(M(1)<0\). This, together with (50) and since M is a polynomial, we deduce, by the Intermediate Value Theorem, that M(u) has at least 2 roots, one in the interval (0, 1) and another in the interval \((1,+\infty )\). \(\square \)

1.6 Proof of Lemma 4

Proof

We have

We observe that

and

Thus we have

Let \(H_{N,1,1}=\sum \limits _{j=1}^{N-1} \frac{\min (j,N-j)}{j(N-j)}\), which is exactly \(H_{N,a,b}\) when \(a=b=1\). Then we have

where

is the harmonic number. This important number plays a central role in number theory. Its appearance in our analysis, as well as in Duong and Han (2021), is rather interesting. Using the above relationship and the following well-known estimates for the harmonic number

where \(\gamma =0.5772156649\) is the celebrated Euler-Mascheroni constant, we obtain the following lower and upper bounds for \(H_{N,1,1}\):

Substituting these estimates back to (51) we obtain the desired lower and upper bounds for \(H_{N,a,b}\) and complete the proof of this lemma. \(\square \)

1.7 Proof of Lemma 6

Proof

We have, for \(i=0,\ldots ,N-2\)

We have

Therefore, we obtain

Similarly for M

for \(i=0,\ldots ,N-2\).

We have

Thus

Substituting the lower bound for m and upper bound for M into (15) we obtain the desired estimates for \(E_{mix}\). \(\square \)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Duong, M.H., Durbac, C.M. & Han, T.A. Cost optimisation of hybrid institutional incentives for promoting cooperation in finite populations. J. Math. Biol. 87, 77 (2023). https://doi.org/10.1007/s00285-023-02011-6

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s00285-023-02011-6