Abstract

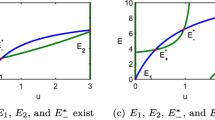

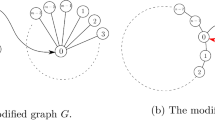

Recently, we introduced the class of matrix games under time constraints and characterized the concept of (monomorphic) evolutionarily stable strategy (ESS) in them. We are now interested in how the ESS is related to the existence and stability of equilibria for polymorphic populations. We point out that, although the ESS may no longer be a polymorphic equilibrium, there is a connection between them. Specifically, the polymorphic state at which the average strategy of the active individuals in the population is equal to the ESS is an equilibrium of the polymorphic model. Moreover, in the case when there are only two pure strategies, a polymorphic equilibrium is locally asymptotically stable under the replicator equation for the pure-strategy polymorphic model if and only if it corresponds to an ESS. Finally, we prove that a strict Nash equilibrium is a pure-strategy ESS that is a locally asymptotically stable equilibrium of the replicator equation in n-strategy time-constrained matrix games.

Notes

In classical matrix games, this monomorphism is the same as the mixed strategy given by the polymorphic population. This is no longer true in general when the effect of time constraints is considered and there are two or more pure strategies in use by the polymorphic population.

That is, we assume that each activity (i.e. active or inactive) lasts for a random time duration, which is exponentially distributed. This is quite different from the situation where the time duration of an action is part of the strategy of the player, as in, for instance, the war of attrition (e.g. Eriksson et al. 2004) and the dispersal-foraging game (Garay et al. 2015).

Observe that the Holling functional response is defined in a similar way (Holling 1959; Garay and Móri 2010). Also, observe that we do not use the term “payoff” for matrix games under time constraints. Instead, we use “intake” for the entries \(a_{ij}\) and “fitness” for (1) to avoid ambiguity, since both these concepts have been called payoff in other circumstances.

This assumes, without loss of generality, that the time unit is chosen in such a way that the fixed rate at which an active individual meets a randomly chosen individual is 1.

The numerator 1 on the right-hand sides of (2) is the length of the active part of an activity cycle and the denominator is the expected time of an activity cycle for the focal individual whose phenotype is \(\mathbf {p}\) (first equation) or \(\mathbf {p}^{*}\) (second equation). That is, the left-hand and right-hand sides are both equal to the proportion of active individuals in the mutant (first equation) and resident (second equation) population. Note that \(\rho \) and \(\rho ^{*}\) depend on \(\mathbf {p}^{*},\mathbf {p}\) and \(\varepsilon \). Therefore, if it is necessary to emphasize this dependence, we use the notations \(\rho _{\mathbf {p}}(\mathbf {p}^{*},\mathbf {p},\varepsilon )\) and \(\rho _{\mathbf {p}^{*}}(\mathbf {p}^{*},\mathbf {p},\varepsilon )\) instead of \(\rho \) and \(\rho ^{*}\), respectively.

In the following, \(\mathbf {p}^{*}\) is often thought of as the resident strategy or as an ESS according to Definition 2.1. However, since this does not need to be the case, it is better to regard \(\mathbf {p}^{*} \) only as a phenotype that is distinguished from the others.

Note that \(\tilde{\varrho }(\mathbf {x})\) and \(\tilde{\mathbf {h}}(\mathbf {x})\) are well-defined since at least one of the \(x_{i}\) is positive.

Section 4 considers the pure-strategy polymorphic model where the only phenotypes present in the population use one of the n pure strategies.

See Footnote 6.

The proof of Theorem 4.1 shows that this result generalizes to the polymorphic model of the previous section in that stability of a strict NE \(\mathbf {p}^{*}\) continues to hold for the replicator equation extended to sets of mixed strategy phenotypes that include \(\mathbf {p}^{*}\).

From (16), \(\hat{x}_1=p_1^*\frac{\rho _{\mathbf p^*}}{\rho _1(\mathbf p^*)}\) and \(\hat{x}_2=p_2^*\frac{\rho _{\mathbf p^*}}{\rho _2(\mathbf p^*)}\).

Another connection between ecology and our evolutionary dynamics is the fact that the replicator equation with n pure strategies in classical matrix games is equivalent to a Lotka-Volterra system with \(n-1\) species (Hofbauer and Sigmund 1998).

This is always true in the two cases (i) \(m=1\) and \(\mathbf p_0\not =\mathbf p_1\) and (ii) \(\mathbf p_0,\ldots ,\mathbf p_m\) are distinct pure strategies.

For example, to see that \(\bar{\rho }(\hat{\mathbf h}(\mathbf x),\mathbf e_2,\hat{\eta })=\bar{\rho }(\mathbf p^*,\mathbf e_2, \eta )=\bar{\varrho }(\mathbf x)\) it is enough to observe that they satisfy the equation \(\varrho =1/[1+ \bar{\mathbf h}(\mathbf x)T\varrho \bar{\mathbf h}(\mathbf x)]\) with \(\varrho \) as the unknown and use Lemma 6.1 about uniqueness.

If \(\mathbf p^*\not =\mathbf e_1\) we can similarly find an appropriate \(\varepsilon _0(\mathbf e_1)\) and if \(\mathbf p^*=\mathbf e_1\) then all other strategies lie on the segment between \(\mathbf p^*\) and \(\mathbf e_2\), so we should only deal with the “side” of \(\mathbf p^*\) between \(\mathbf p^*\) and \(\mathbf e_2\).

References

Broom M, Luther RM, Ruxton GD, Rychtar J (2008) A game-theoretic model of kleptoparasitic behavior in polymorphic populations. J Theor Biol 255:81–91

Broom M, Rychtar J (2013) Game-theoretical models in biology. Chapman & Hall/CRC Mathematical and Computational Biology, London

Charnov EL (1976) Optimal foraging: attack strategy of a mantid. Am Nat 110:141–151

Cressman R (1992) The stability concept of evolutionary game theory (a dynamic approach), vol 94. Lecture notes in biomathematics, Springer, Berlin

Cressman R (2003) Evolutionary dynamics and extensive form games. MIT Press, Cambridge

Eriksson A, Lindgren K, Lundh T (2004) War of attrition with implicit time cost. J Theor Biol 230:319–332

Garay J, Móri TF (2010) When is the opportunism remunerative? Commun Ecol 11:160–170

Garay J, Varga Z, Cabello T, Gámez M (2012) Optimal nutrient foraging strategy of an omnivore: Liebig’s law determining numerical response. J Theor Biol 310:31–42

Garay J, Cressman R, Xu F, Varga Z, Cabello T (2015a) Optimal forager against ideal free distributed prey. Am Nat 186:111–122

Garay J, Varga Z, Gámez M, Cabello T (2015b) Functional response and population dynamics for fighting predator, based on activity distribution. J Theor Biol 368:74–82

Garay J, Csiszár V, Móri TF (2017) Evolutionary stability for matrix games under time constraints. J Theor Biol 415:1–12

Hofbauer J, Sigmund K (1998) Evolutionary games and population dynamics. Cambridge University Press, Cambridge

Holling CS (1959) The components of predation as revealed by a study of small mammal predation of the European pine sawfly. Can Entomol 91:293–320

Krivan V, Cressman R (2017) Interaction times change evolutionary outcomes: two-player matrix games. J Theor Biol 416:199–207

Maynard Smith J (1982) Evolution and the theory of games. Cambridge University Press, Cambridge

Sirot E (2000) An evolutionarily stable strategy for aggressiveness in feeding groups. Behav Ecol 11:351–356

Taylor PD, Jonker LB (1978) Evolutionary stable strategies and game dynamics. Math Biosci 40:145–156

Acknowledgements

This work was partially supported by the Hungarian National Research, Development and Innovation Office NKFIH [Grant Numbers K 108615 (to T.F.M.) and K 108974 and GINOP 2.3.2-15-2016-00057 (to J.G.)]. This project has received funding from the European Union Horizon 2020: The EU Framework Programme for Research and Innovation under the Marie Sklodowska-Curie Grant Agreement No. 690817 (to J.G., R.C. and T.V.). R.C. acknowledges support provided by an Individual Discovery Grant from the Natural Sciences and Engineering Research Council (NSERC).

Appendix

A.1. Preliminaries

We first state some technical lemmas necessary later, although sometimes they are used only tacitly.

Lemma 6.1

(Garay et al. 2017, Lemma 2, p. 7) The following system of nonlinear equations in n variables,

$$\begin{aligned} u_i=\frac{1}{1+\sum _{j=1}^{n}c_{ij}u_j}\quad (1\le i\le n), \end{aligned}$$(24)

where the coefficients \(c_{ij}\) are positive numbers, has a unique solution in the unit hypercube \([0,1]^{n}\).
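
As a numerical illustration of Lemma 6.1, the following minimal Python sketch approximates the solution by plain fixed-point iteration started from \(\mathbf u=(1,\ldots ,1)\). It assumes that system (24) has the form \(u_i=1/(1+\sum _j c_{ij}u_j)\), which is the form consistent with Lemma 6.2 (ii) and Corollary 6.3 (i). The function name `solve_unit_cube_system`, the tolerance and the iteration cap are hypothetical choices made for this illustration and are not part of the paper. The lemma itself says nothing about this iteration, so the code only returns once successive iterates agree to within the tolerance.

```python
import math


def solve_unit_cube_system(c, tol=1e-12, max_iter=100_000):
    """Approximate the solution of u_i = 1 / (1 + sum_j c[i][j] * u[j]).

    Assumption: this is the form of system (24); `c` is a square list of
    lists with nonnegative entries.
    """
    n = len(c)
    u = [1.0] * n  # start at the top corner of the unit hypercube
    for _ in range(max_iter):
        new_u = [1.0 / (1.0 + sum(c[i][j] * u[j] for j in range(n)))
                 for i in range(n)]
        # stop when successive iterates agree to within the tolerance
        if max(abs(a - b) for a, b in zip(new_u, u)) < tol:
            return new_u
        u = new_u
    raise RuntimeError("fixed-point iteration did not converge")


# Scalar check: u = 1 / (1 + u) has the positive root (sqrt(5) - 1) / 2.
u_scalar = solve_unit_cube_system([[1.0]])

# A 2x2 example with arbitrary illustrative coefficients.
c_example = [[0.5, 2.0], [1.0, 0.25]]
u_example = solve_unit_cube_system(c_example)

# Lower bound from Lemma 6.2 (ii): 1 / (1 + sum of all coefficients).
lower_bound = 1.0 / (1.0 + sum(sum(row) for row in c_example))
```

The last line computes the lower bound of Lemma 6.2 (ii) so that it can be compared with the coordinates of `u_example`.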

We also claim the following.

Lemma 6.2

The solution \(\mathbf {u}=(u_1,u_2,\ldots ,u_n)\in [0,1]^{n}\) of (24)

(i) is a continuous function in

$$\begin{aligned}\mathbf {c}:=(c_{11},\ldots , c_{1n},c_{21},\ldots ,c_{2n},\ldots , c_{n1},\ldots ,c_{nn})\in \mathbb {R}_{\ge 0}^{n^2}, \end{aligned}$$

(ii) has positive coordinates uniformly separated from zero, namely

$$\begin{aligned} \frac{1}{1+\mathop {\sum }\nolimits _{ij}c_{ij}}\le u_l\le 1\quad (1\le l \le n). \end{aligned}$$

Proof

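To prove (i), assume that the sequence

$$\begin{aligned} \mathbf {c}^{(k)}=(c_{11}^{(k)},\ldots ,c_{nn}^{(k)})\in \mathbb {R}_{\ge 0}^{n^2}\quad (k=1,2,\ldots ) \end{aligned}$$

tends to \(\mathbf {c}\) as \(k\rightarrow \infty \). For each positive integer k, let \(\mathbf {u}_k= (u_{1}^{(k)},u_{2}^{(k)},\ldots ,u_n^{(k)})\) be the unique solution of the system of equations

$$\begin{aligned} u_i=\frac{1}{1+\sum _{j=1}^{n}c_{ij}^{(k)}u_j}\quad (1\le i\le n) \end{aligned}$$

in the unit hypercube \([0,1]^{n}\). As \(\mathbf {u}_k\in [0,1]^{n}\), it is a bounded sequence. To see that \(\mathbf {u}_k\) tends to \(\mathbf {u}\) it, therefore, suffices to prove that any convergent subsequence \(\mathbf {u}_{k_l}\) of \(\mathbf {u}_k\) tends to the same \(\mathbf {u}\). Denote by \(\hat{\mathbf {u}}\) the limit of \(\mathbf {u}_{k_l}\). Since

$$\begin{aligned} x_i-\frac{1}{1+\sum _{j=1}^{n}b_{ij}x_j}\quad (1\le i\le n) \end{aligned}$$

is a continuous function in \((\mathbf {b},\mathbf {x})\) (consider it as a function of \(n^2+n\) variables on \(\mathbb {R}_{\ge 0}^{n^2}\times [0,1]^{n}\)), it follows that

$$\begin{aligned} 0=\lim _{l\rightarrow \infty }\left( u_i^{(k_l)}-\frac{1}{1+\sum _{j=1}^{n}c_{ij}^{(k_l)}u_j^{(k_l)}}\right) =\hat{u}_i-\frac{1}{1+\sum _{j=1}^{n}c_{ij}\hat{u}_j}\quad (1\le i\le n), \end{aligned}$$

which implies that

$$\begin{aligned} \hat{u}_i=\frac{1}{1+\sum _{j=1}^{n}c_{ij}\hat{u}_j}\quad (1\le i\le n), \end{aligned}$$

that is, \(\hat{\mathbf {u}}\) solves (24). Because of the uniqueness of the solution, the limit \(\hat{\mathbf {u}}\) must be the same for any convergent subsequence of \(\mathbf {u}_k\), and \(\hat{\mathbf u}\) must be equal to \(\mathbf u\). Since \(\mathbf {u}_k\) tends to this unique solution in \([0,1]^n\), the solution is continuous in \(\mathbf {c}\).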

The lower estimate in (ii) is apparent from (24) because \(u_i\le 1\) for every \(1\le i \le n\).\(\square \)
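
The estimate in (ii) can be written out in one line. The display below is a sketch that assumes system (24) reads \(u_l=1/(1+\sum _j c_{lj}u_j)\) with nonnegative coefficients \(c_{ij}\); it uses only \(0\le u_j\le 1\):

$$\begin{aligned} 1\ge u_l=\frac{1}{1+\sum _{j}c_{lj}u_j}\ge \frac{1}{1+\sum _{j}c_{lj}}\ge \frac{1}{1+\sum _{ij}c_{ij}}\quad (1\le l\le n). \end{aligned}$$

The first inequality holds because the denominator is at least 1, the second because \(u_j\le 1\), and the third because adding the remaining nonnegative coefficients can only enlarge the denominator.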

Corollary 6.3

Consider a population of types \(\mathbf p_0,\mathbf p_1,\ldots ,\mathbf p_m\in S_n\) with frequencies \(x_0,x_1,\ldots ,x_m\).

(i) The active part \(\varrho _i\) of the different types given by the solution of the system:

$$\begin{aligned} \varrho _i=\frac{1}{1+\mathbf p_iT[\sum _{j=0}^mx_j\varrho _j\mathbf p_j]} \end{aligned}$$

continuously depends on \(\mathbf {x}=(x_0,x_1,\ldots ,x_m)\). We use the notation \(\varrho _i(\mathbf {x})=\varrho _i(\mathbf {x},\mathbf p_0,\ldots ,\mathbf p_m)\) in this sense.

(ii) Let

$$\begin{aligned} \bar{\varrho }(\mathbf {x})=\bar{\varrho }(\mathbf {x},\mathbf p_0, \ldots ,\mathbf p_m): =\sum _{i=0}^{m}x_i \varrho _i(\mathbf {x},\mathbf p_0,\ldots ,\mathbf p_m) \end{aligned}$$

and

$$\begin{aligned} \bar{\mathbf h}(\mathbf {x})=\bar{\mathbf h}(\mathbf {x},\mathbf p_0,\ldots ,\mathbf p_m): =\sum _{i=0}^{m}\frac{x_i \varrho _i(\mathbf {x},\mathbf p_0,\ldots ,\mathbf p_m)}{\bar{\varrho }(\mathbf {x},\mathbf p_0,\ldots ,\mathbf p_m)}\mathbf p_i. \end{aligned}$$

If \(\mathbf {y}\) is another frequency distribution such that \(\bar{\mathbf h}(\mathbf {y}) =\bar{\mathbf h}(\mathbf {x})=:\bar{\mathbf h}\), then both \(\bar{\varrho }(\mathbf {x})=\bar{\varrho }(\mathbf {y})\) and \(\varrho _i(\mathbf {x})=\varrho _i(\mathbf {y})\) (\(0\le i\le m\)).

(iii) If \(\bar{\mathbf h}\) can be uniquely represented as a convex combination of \(\mathbf p_0,\ldots ,\mathbf p_m\) (see Footnote 14), then \(x_i\) must be equal to \(y_i\) for every i.

(iv) If \(\mathbf p\) is a convex combination of \(\mathbf p_0,\ldots ,\mathbf p_m\) then there is an \(\mathbf x=(x_0,x_1,\ldots ,x_m)\in S_{m+1}\) such that \(\bar{\mathbf h}(\mathbf x)=\mathbf p\). Namely, if \(\mathbf p=\sum _{i=0}^m\alpha _i \mathbf p_i\) then

$$\begin{aligned} x_i=\frac{\rho _{\mathbf p}}{\rho _i(\mathbf p)}\alpha _i \end{aligned}$$

where \(\rho _{\mathbf p}\) is the unique solution in [0, 1] to the equation

$$\begin{aligned} \rho =\frac{1}{1+\mathbf {p}T\rho \mathbf {p}} \end{aligned}$$(25)

and \(\rho _i(\mathbf p)\) denotes the expression

$$\begin{aligned} \frac{1}{1+\mathbf {p}_{i}T\rho _{\mathbf p}\mathbf {p}} \quad 0\le i\le m. \end{aligned}$$(26)

Proof

(i) The continuity of \(\varrho _i\) in \(\mathbf {x}\) immediately follows from Lemma 6.2. (Set \(c_{ij}\) equal to \(\mathbf p_iT x_j\mathbf p_j\).)

(ii) Since \(\bar{\varrho }(\mathbf x)=(1-x)\varrho _0(\mathbf x)+ x \tilde{\varrho }(\mathbf x)\), Proposition 3.1 shows that

$$\begin{aligned} \bar{\varrho }(\mathbf {x})=\frac{1}{1+\bar{\mathbf h} T\bar{\varrho }(\mathbf {x})\bar{\mathbf h}} \end{aligned}$$

and

$$\begin{aligned} \bar{\varrho }(\mathbf {y})=\frac{1}{1+\bar{\mathbf h}T\bar{\varrho } (\mathbf {y})\bar{\mathbf h}} \end{aligned}$$

hold (as if the population consisted of only \(\bar{\mathbf h}\)-strategists). Lemma 6.1 says that the equation

$$\begin{aligned} \bar{\varrho }=\frac{1}{1+\bar{\mathbf h}T\bar{\varrho }\bar{\mathbf h}} \end{aligned}$$

(with \(\bar{\varrho }\) as the unknown) has a unique solution in [0, 1], which implies at once that \(\bar{\varrho }(\mathbf {x})=\bar{\varrho }(\mathbf {y})\). Also,

$$\begin{aligned} \varrho _i=\frac{1}{1+\mathbf p_iT\sum _jx_j\varrho _j\mathbf p_j} =\frac{1}{1+\mathbf p_iT\bar{\varrho }\bar{\mathbf h}} \end{aligned}$$

has a unique solution (in the unknowns \(\varrho _i\)) in \([0,1]^{m+1}\), from which \(\varrho _i(\mathbf {x})=\varrho _i(\mathbf {y})\) follows for every \(0\le i \le m\).

(iii) This is an immediate consequence of (ii) by comparing the coefficients in the representations \(\bar{\mathbf h}(\mathbf {x})\) and \(\bar{\mathbf h}(\mathbf {y})\).

(iv) Take the frequencies

$$\begin{aligned} x_i=\frac{\rho _{\mathbf p}}{\rho _{i}(\mathbf p)}\alpha _{i}. \end{aligned}$$

Then, by (25) and (26), we have that

$$\begin{aligned} \sum _{i=0}^mx_i&=\sum _{i=0}^m \frac{\rho _{\mathbf p}}{\rho _{i}(\mathbf p)}\alpha _{i}= \sum _{i=0}^m\alpha _i \frac{1+\mathbf {p}_iT\rho _{\mathbf p}\mathbf {p}}{1+\mathbf {p}T\rho _{\mathbf p}\mathbf {p}}=1, \end{aligned}$$

that is, \(\mathbf x=(x_0,x_1,\ldots ,x_m)\) is a frequency distribution. Consider the polymorphic population of strategies \(\mathbf p_0,\mathbf p_1,\ldots ,\mathbf p_m\) with this frequency distribution \(\mathbf x\). Then, \(\rho _0(\mathbf p), \rho _1(\mathbf p),\ldots ,\rho _m(\mathbf p)\) satisfy the following system of equations corresponding to the system (5) with \(\mathbf p^*=\mathbf p_0\):

$$\begin{aligned} \varrho _{i} =\frac{1}{1+\mathbf {p}_{i}T\big [\sum _{j=0}^{m}x_{j}\varrho _{j}\mathbf {p}_j\big ]}. \end{aligned}$$(27)

Indeed, by a simple replacement, we get that

$$\begin{aligned} \frac{1}{1+\mathbf {p}_{i}T\big [\sum _{j=0}^{m}x_{j} \rho _{j}(\mathbf p)\mathbf {p}_{j}\big ]}&= \frac{1}{1+\mathbf {p}_{i}T\big [\sum _{j=0}^{m} \alpha _j\frac{\rho _{\mathbf p}}{\rho _j(\mathbf p)} \rho _{j}(\mathbf p)\mathbf {p}_{j}\big ]}\\&= \frac{1}{1+\mathbf {p}_{i}T\big [\sum _{j=0}^{m} \rho _{\mathbf p}\alpha _j\mathbf {p}_{j}\big ]}= \frac{1}{1+\mathbf {p}_{i}T \rho _{\mathbf p}\mathbf p}=\rho _i(\mathbf p). \end{aligned}$$

On the other hand, \(\varrho _0(\mathbf x), \varrho _1(\mathbf x),\ldots ,\varrho _m(\mathbf x)\) are also solutions of the system of equations (27). By the uniqueness (see Lemma 6.1 in A.1.) we have that \(\varrho _i(\mathbf x)=\rho _i(\mathbf p)\). Consequently, we have that

$$\begin{aligned} \bar{\varrho }(\mathbf x)=\sum _{i=0}^m x_i \varrho _i(\mathbf x)= \sum _{i=0}^m x_i \rho _i(\mathbf p)= \sum _{i=0}^m \left( \frac{\rho _{\mathbf p}}{\rho _i(\mathbf p)}\alpha _i\right) \rho _i(\mathbf p)= \rho _{\mathbf p}\sum _{i=0}^m\alpha _i=\rho _{\mathbf p} \end{aligned}$$

and

$$\begin{aligned} \bar{\mathbf h}(\mathbf x)=\frac{1}{\bar{\varrho }(\mathbf x)}\sum _{i=0}^m x_i \varrho _i(\mathbf x)\mathbf p_i= \frac{1}{\rho _{\mathbf p}}\sum _{i=0}^m \left( \frac{\rho _{\mathbf p}}{\rho _i(\mathbf p)}\alpha _i\right) \rho _i(\mathbf p) \mathbf p_i= \sum _{i=0}^m\alpha _i\mathbf p_i=\mathbf p. \end{aligned}$$

\(\square \)
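
The construction in Corollary 6.3 (iv) can be checked numerically. The Python sketch below is an illustration under stated assumptions, not the authors' code: it treats \(T\) as an \(n\times n\) matrix with nonnegative entries so that \(\mathbf pT\mathbf q\) is a bilinear form, solves (25) in closed form as the root of a quadratic, and solves system (27) by plain fixed-point iteration. All function names and the numerical data (`T`, `types`, `alphas`) are hypothetical.

```python
import math


def bilinear(p, T, q):
    """Return p T q for vectors p, q and a matrix T given as nested lists."""
    return sum(p[i] * T[i][j] * q[j]
               for i in range(len(p)) for j in range(len(q)))


def rho_monomorphic(p, T):
    """Root in [0, 1] of rho = 1 / (1 + rho * pTp), i.e. equation (25)."""
    c = bilinear(p, T, p)
    # positive root of c * rho**2 + rho - 1 = 0, written to avoid dividing by c
    return 2.0 / (1.0 + math.sqrt(1.0 + 4.0 * c))


def frequencies_for_target(alphas, types, T):
    """Frequencies x_i = rho_p / rho_i(p) * alpha_i from Corollary 6.3 (iv)."""
    n = len(types[0])
    p = [sum(a * t[k] for a, t in zip(alphas, types)) for k in range(n)]
    rho_p = rho_monomorphic(p, T)
    # rho_i(p) as in (26): 1 / (1 + p_i T rho_p p)
    rho_i = [1.0 / (1.0 + rho_p * bilinear(t, T, p)) for t in types]
    x = [rho_p / r * a for r, a in zip(rho_i, alphas)]
    return p, x


def active_parts(x, types, T, tol=1e-12, max_iter=100_000):
    """Solve system (27) by fixed-point iteration (a numerical assumption)."""
    m = len(types)
    rho = [1.0] * m
    for _ in range(max_iter):
        new = [1.0 / (1.0 + sum(x[j] * rho[j] * bilinear(types[i], T, types[j])
                                for j in range(m)))
               for i in range(m)]
        if max(abs(a - b) for a, b in zip(new, rho)) < tol:
            return new
        rho = new
    raise RuntimeError("fixed-point iteration did not converge")


def mean_active_strategy(x, types, T):
    """Return (rho_bar(x), h_bar(x)) as defined in Corollary 6.3 (ii)."""
    rho = active_parts(x, types, T)
    rho_bar = sum(xi * ri for xi, ri in zip(x, rho))
    n = len(types[0])
    h_bar = [sum(xi * ri * t[k] for xi, ri, t in zip(x, rho, types)) / rho_bar
             for k in range(n)]
    return rho_bar, h_bar


# Illustrative data (hypothetical): two pure strategies and a 2x2 time matrix.
T = [[1.0, 2.0], [0.5, 1.5]]
types = [[1.0, 0.0], [0.0, 1.0]]
alphas = [0.3, 0.7]

p, x = frequencies_for_target(alphas, types, T)
rho_bar, h_bar = mean_active_strategy(x, types, T)
```

With these inputs, `x` should sum to 1, `h_bar` should agree with `p` up to the solver tolerance, and `rho_bar` should agree with `rho_monomorphic(p, T)`, mirroring the last two displays of the proof.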

Lemma 6.4

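Let \(\mathbf p,\mathbf r\in S_2\). Denote by \(\varrho _{\mathbf p}(\varepsilon ),\varrho _{\mathbf r}(\varepsilon )\) the unique solution in \([0,1]^2\) of the system:

$$\begin{aligned} \varrho _{\mathbf p}&=\frac{1}{1+\mathbf pT[(1-\varepsilon )\varrho _{\mathbf p}\mathbf p+\varepsilon \varrho _{\mathbf r}\mathbf r]},\\ \varrho _{\mathbf r}&=\frac{1}{1+\mathbf rT[(1-\varepsilon )\varrho _{\mathbf p}\mathbf p+\varepsilon \varrho _{\mathbf r}\mathbf r]}. \end{aligned}$$

Furthermore, let \(\bar{\varrho }(\varepsilon ):=(1-\varepsilon )\varrho _{\mathbf p}(\varepsilon )+ \varepsilon \varrho _{\mathbf r}(\varepsilon )\) and

$$\begin{aligned} \mathbf q(\varepsilon ):=\frac{(1-\varepsilon )\varrho _{\mathbf p}(\varepsilon )}{\bar{\varrho }(\varepsilon )}\mathbf p+\frac{\varepsilon \varrho _{\mathbf r}(\varepsilon )}{\bar{\varrho }(\varepsilon )}\mathbf r. \end{aligned}$$

Then \(\mathbf q(0)=\mathbf p\), \(\mathbf q(1)=\mathbf r\) and \(\mathbf q(\varepsilon )\) uniquely runs through the line segment between \(\mathbf p\) and \(\mathbf r\) as \(\varepsilon \) runs from 0 to 1 in such a way that \(0\le \varepsilon _1<\varepsilon _2\le 1\) implies that \(||\mathbf q(\varepsilon _1)-\mathbf p||<||\mathbf q(\varepsilon _2)-\mathbf p||\).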

Proof

Corollary 6.3 (i) with the choice \(\mathbf p_0=\mathbf p\) and \(\mathbf p_1=\mathbf r\) shows that \(\varrho _{\mathbf p}(\varepsilon )\) and \(\varrho _{\mathbf r}(\varepsilon )\) are continuous in \(\varepsilon \in [0,1]\). Therefore, both \(\bar{\varrho }(\varepsilon )\) and \(\mathbf q(\varepsilon )\) are continuous in \(\varepsilon \). Since \(\mathbf q(0)=\mathbf p\) and \(\mathbf q(1)=\mathbf r\), the Bolzano-Darboux property of continuous functions (intermediate value theorem) ensures that \(\mathbf q(\varepsilon )\) runs through the line segment between \(\mathbf p\) and \(\mathbf r\) as \(\varepsilon \) runs from 0 to 1. Furthermore, by Corollary 6.3 (iii), \(\mathbf q(\varepsilon _1)\not = \mathbf q(\varepsilon _2)\) also holds. Since \(\mathbf q(0)=\mathbf p\), it follows that \(||\mathbf q(\varepsilon _1)-\mathbf p||<||\mathbf q(\varepsilon _2)-\mathbf p||\).\(\square \)
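
The monotone motion of \(\mathbf q(\varepsilon )\) along the segment can be observed numerically. The following Python sketch is only an illustration under assumptions: it takes the two-type system to be the one from Corollary 6.3 (i) with \(\mathbf p_0=\mathbf p\), \(\mathbf p_1=\mathbf r\) and frequencies \((1-\varepsilon ,\varepsilon )\), treats \(T\) as a \(2\times 2\) matrix with nonnegative entries, and solves the system by fixed-point iteration. The function names, the matrix `T`, the strategies `p` and `r`, and the grid are hypothetical choices.

```python
import math


def bilinear(p, T, q):
    """Return p T q for vectors p, q and a matrix T given as nested lists."""
    return sum(p[i] * T[i][j] * q[j]
               for i in range(len(p)) for j in range(len(q)))


def two_type_active_parts(p, r, T, eps, tol=1e-12, max_iter=100_000):
    """Fixed-point iteration for (rho_p, rho_r) in a (1 - eps, eps) mixture."""
    pTp, pTr = bilinear(p, T, p), bilinear(p, T, r)
    rTp, rTr = bilinear(r, T, p), bilinear(r, T, r)
    rho_p, rho_r = 1.0, 1.0
    for _ in range(max_iter):
        new_p = 1.0 / (1.0 + (1.0 - eps) * rho_p * pTp + eps * rho_r * pTr)
        new_r = 1.0 / (1.0 + (1.0 - eps) * rho_p * rTp + eps * rho_r * rTr)
        if max(abs(new_p - rho_p), abs(new_r - rho_r)) < tol:
            return new_p, new_r
        rho_p, rho_r = new_p, new_r
    raise RuntimeError("fixed-point iteration did not converge")


def q_of_eps(p, r, T, eps):
    """Average strategy of the active individuals in the mixture."""
    rho_p, rho_r = two_type_active_parts(p, r, T, eps)
    rho_bar = (1.0 - eps) * rho_p + eps * rho_r
    w = eps * rho_r / rho_bar  # weight of r among active individuals
    return [(1.0 - w) * pk + w * rk for pk, rk in zip(p, r)]


def distance(a, b):
    """Euclidean distance between two vectors."""
    return math.sqrt(sum((ak - bk) ** 2 for ak, bk in zip(a, b)))


# Illustrative data (hypothetical).
T = [[1.0, 2.0], [0.5, 1.5]]
p = [0.8, 0.2]
r = [0.1, 0.9]

grid = [k / 20 for k in range(21)]
distances = [distance(q_of_eps(p, r, T, e), p) for e in grid]
```

If Lemma 6.4 applies as stated, `distances` starts at 0, ends at \(||\mathbf r-\mathbf p||\), and increases strictly along the grid.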

A.2.

Lemma 6.5

Let \(\mathbf p^*\in S_2\) and \(\hat{\mathbf x}=(\hat{x}_1,\hat{x}_2)\in S_2\) be the unique solution of \(\bar{\mathbf h}(\mathbf x)=\mathbf p^*\). Then \(\mathbf p^*\) is an ESS if and only if there is a \(\delta >0\) such that

whenever \(|x_1-\hat{x}_1|<\delta .\)

Proof

Consider a \(\mathbf p^*\in S_2\). Without loss of generality, it can be assumed that \(\mathbf p^*\not = \mathbf e_2\). We use the following notations:

Similarly, we introduce the notations \(\hat{\varrho }(\mathbf x)\) and \(\hat{\mathbf h}(\mathbf x)\), respectively, as follows:

Assume that \(\hat{x}_2<x_2\) (the case \(x_2<\hat{x}_2\) can be handled in the same way). It is easy to check this is equivalent to the inequality

which implies that \(\bar{\mathbf h}(\mathbf x)\) lies on the line segment between \(\hat{\mathbf h}(\mathbf x)\) and \(\mathbf e_2\). By Lemma 6.4, it is also true that \(\bar{\mathbf h}(\mathbf x)\) is located on the line segment between \(\mathbf p^*\) and \(\mathbf e_2\). Thus, there is an \(\hat{\eta }= \hat{\eta }(\mathbf x)\) and an \(\eta =\eta (\mathbf x)\), respectively, between 0 and 1, such that

and

respectively. Observe that \(\bar{\rho }(\hat{\mathbf h}(\mathbf x),\mathbf e_2,\hat{\eta })=\bar{\rho }(\mathbf p^*,\mathbf e_2, \eta )=\bar{\varrho }(\mathbf x)\) and \(\rho _{\mathbf e_2} (\hat{\mathbf h}(\mathbf x),\mathbf e_2,\hat{\eta })= \rho _{\mathbf e_2} (\mathbf p^*,\mathbf e_2, \eta )=\varrho _2(\mathbf x)\) (see Footnote 15). Note that \(\eta \) and \(x_2\) mutually determine each other, so we can write \(\eta =\eta (x_2)\) or \(x_2=x_2(\eta )\) depending on what is given. A similar observation is true for the relationship between \(\hat{\eta }\) and \(x_2\).

Suppose, for some \(x_2\), we have

Multiply both sides of inequality (32) by \((1-\eta )\) then add \(\eta \varrho _2(\mathbf x) \mathbf e_2 A \bar{\varrho }(\mathbf x)\bar{\mathbf h}(\mathbf x)\) to get that

By subtracting \(\hat{\eta }\varrho _2(\mathbf x) \mathbf e_2 A \bar{\varrho }(\mathbf x)\bar{\mathbf h}(\mathbf x)\) from both sides of (33), dividing by \((1-\hat{\eta })\) and using (30), we obtain the inequality:

Multiplying (34) by \(\hat{\eta }\) and adding \((1-\hat{\eta }) \rho _{\hat{\mathbf h}(\mathbf x)} (\hat{\mathbf h}(\mathbf x),\mathbf e_2,\hat{\eta }) \hat{\mathbf h}(\mathbf x) A \bar{\varrho }(\mathbf x)\bar{\mathbf h}(\mathbf x)\) to both sides results in

where the right-hand side is just equal to \(x_1W_1(\mathbf x)+x_2 W_2(\mathbf x)\). As regards the left-hand side, observe that, by Lemma 6.1, \(\rho _{\hat{\mathbf h}(\mathbf x)}(\hat{\mathbf h}(\mathbf x),\mathbf e_2,\hat{\eta })= \hat{\varrho }(\mathbf x)\) (see Footnote 16). Therefore the left-hand side just equals \(\hat{x}_1W_1(\mathbf x)+\hat{x}_2 W_2(\mathbf x)\). The above reasoning shows the equivalence of inequalities (32), (33), (34) and (35), which means that

holds if and only if inequality (32) does.

If \(\mathbf p^*\) is an ESS then consider \(\varepsilon _0\) from Definition 2.1. By continuity (see Corollary 6.3), there exists a \(\delta >0\) such that if \(0<|x_2-\hat{x}_2|<\delta \) then \(0<\eta (x_2)<\varepsilon _0\). Therefore, (32) holds which, as we have just seen, is equivalent to (36).

Conversely, assume the existence of a \(\delta >0\) such that (36) holds whenever \(0<|x_2-\hat{x}_2|<\delta \). By Corollary 6.3 and Lemma 6.4, there is an \(\varepsilon _0(\mathbf e_2)\) such that \(0<|x_2(\eta )-\hat{x}_2|<\delta \) whenever \(0<\eta <\varepsilon _0\) (see Footnote 17). Consider the strategy \(\mathbf r\in S_2\) with \(r_2>p_2^*\) (the case \(r_2<p_2^*\) can be treated in a similar way). Then \(\mathbf r\) is on the segment between \(\mathbf p^*\) and

$$\begin{aligned} \mathbf h(\mathbf e_2,\varepsilon _0(\mathbf e_2)) \end{aligned}$$

or on the segment between \(\mathbf h(\mathbf e_2,\varepsilon _0(\mathbf e_2))\) and \(\mathbf e_2\). In the former case set \(\varepsilon _0(\mathbf r)\) to be 1; in the latter one set \(\varepsilon _0(\mathbf r)\) to be the unique \(\zeta _0\) for which \(\mathbf h(\mathbf e_2,\varepsilon _0(\mathbf e_2))=\mathbf h(\mathbf r,\zeta _0)\) (such \(\zeta _0\) exists by Lemma 6.4). Then, again by Lemma 6.4, for any \(0<\zeta <\varepsilon _0(\mathbf r)\) there is a \(0<\eta <\varepsilon _0(\mathbf e_2)\) with \(\mathbf h(\mathbf e_2,\eta )=\mathbf h(\mathbf r,\zeta )\). Following the reasoning in the proof of Proposition 3.1 we see that \(\bar{\rho }(\mathbf p^*,\mathbf e_2,\eta )=\bar{\rho }(\mathbf p^*,\mathbf r,\zeta )\) and, hence, \(\rho _{\mathbf p^*}(\mathbf p^*,\mathbf e_2,\eta )= \rho _{\mathbf p^*}(\mathbf p^*,\mathbf r,\zeta )\). From these observations and the argument above that inequalities (32), (33), (34) and (35) are equivalent, we conclude that the ensuing inequalities are equivalent to each other:

We have seen that the last inequality is true for any \(0<\eta <\varepsilon _0(\mathbf e_2)\) whenever (36) holds for \((\hat{x}_1,\hat{x}_2)\). This proves that \(\mathbf p^*\) is an ESS.\(\square \)

Cite this article

Garay, J., Cressman, R., Móri, T.F. et al. The ESS and replicator equation in matrix games under time constraints. J. Math. Biol. 76, 1951–1973 (2018). https://doi.org/10.1007/s00285-018-1207-0

DOI: https://doi.org/10.1007/s00285-018-1207-0