Abstract

The computational complexity of the VertexCover problem has been studied extensively. Most notably, it is NP-complete to find an optimal solution and typically NP-hard to find an approximation with reasonable factors. In contrast, recent experiments suggest that on many real-world networks the run time to solve VertexCover is much smaller than even the best known FPT-approaches can explain. We link these observations to two properties that are observed in many real-world networks, namely a heterogeneous degree distribution and high clustering. To formalize these properties and explain the observed behavior, we analyze how a branch-and-reduce algorithm performs on hyperbolic random graphs, which have become increasingly popular for modeling real-world networks. In fact, we are able to show that the VertexCover problem on hyperbolic random graphs can be solved in polynomial time, with high probability. The proof relies on interesting structural properties of hyperbolic random graphs. Since these predictions of the model are interesting in their own right, we conducted experiments on real-world networks showing that these properties are also observed in practice.


1 Introduction

The VertexCover problem is one of the most fundamental NP-complete graph problems. Given an undirected graph G on n vertices, the goal is to find a smallest vertex subset S such that each edge in G is incident to at least one vertex in S. Since, by definition, there can be no edge between two vertices outside of S, these remaining vertices form an independent set. Therefore, one can easily derive a maximum independent set from a minimum vertex cover and vice versa.
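The complement relation between vertex covers and independent sets can be checked on a toy instance. The following is a minimal sketch, not part of the original work; the graph and the helper names `is_vertex_cover` and `minimum_vertex_cover` are chosen for illustration only, and the brute-force search takes exponential time.

```python
from itertools import combinations

def is_vertex_cover(edges, cover):
    """Return True if every edge has at least one endpoint in `cover`."""
    return all(u in cover or v in cover for u, v in edges)

def minimum_vertex_cover(vertices, edges):
    """Brute force: try vertex subsets in order of increasing size."""
    for size in range(len(vertices) + 1):
        for subset in combinations(vertices, size):
            if is_vertex_cover(edges, set(subset)):
                return set(subset)

# Toy instance (illustrative): the path a - b - c - d plus the chord b - d.
vertices = ["a", "b", "c", "d"]
edges = [("a", "b"), ("b", "c"), ("c", "d"), ("b", "d")]

cover = minimum_vertex_cover(vertices, edges)
independent = set(vertices) - cover  # complement of a minimum vertex cover

# No edge runs between two vertices outside of the cover.
no_inner_edge = not any(u in independent and v in independent for u, v in edges)
```

Since the cover is minimum, its complement is a maximum independent set of the toy graph.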

Due to its NP-completeness there is probably no polynomial time algorithm for solving VertexCover. The best known algorithm for IndependentSet runs in \(1.1996^{n} \text {poly}(n)\) time [26]. To analyze the complexity of VertexCover on a finer scale, several parameterized solutions have been proposed. One can determine whether a graph G has a vertex cover of size k by applying a branch-and-reduce algorithm. The idea is to build a search tree by recursively considering two possible extensions of the current vertex cover (branching), until a vertex cover is found or the size of the current cover exceeds k. Each branching step is followed by a reduce step in which reduction rules are applied to make the considered graph smaller. This branch-and-reduce technique yields a simple \(\mathcal {O}(2^{k} \text {poly}(n))\) algorithm, where the exponential portion comes from the branching. The best known FPT (fixed-parameter tractable) algorithm runs in \(\mathcal {O}(1.2738^{k} + kn)\) time [12], and unless ETH (exponential time hypothesis) fails, there can be no \(2^{o(k)} \text {poly}(n)\) algorithm [11].
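The branching part of this scheme can be sketched in a few lines. The following is an illustrative bounded search tree, assuming the graph is given as a list of edges; it omits the reduce step entirely and is not the algorithm of [12]. The function name `has_vertex_cover` is hypothetical.

```python
def has_vertex_cover(edges, k):
    """Decide whether the graph given by `edges` has a vertex cover of size <= k.

    Branching: pick any remaining edge {u, v}; at least one endpoint has to
    be in the cover. The recursion depth is at most k, so the search tree
    has at most 2^k leaves.
    """
    if not edges:
        return True   # nothing left to cover
    if k == 0:
        return False  # edges remain, but the budget is used up
    u, v = edges[0]
    for chosen in (u, v):
        # Taking `chosen` into the cover removes all edges incident to it.
        remaining = [e for e in edges if chosen not in e]
        if has_vertex_cover(remaining, k - 1):
            return True
    return False

triangle = [(0, 1), (1, 2), (0, 2)]
answers = (has_vertex_cover(triangle, 1), has_vertex_cover(triangle, 2))
```

A triangle needs two vertices to cover all three edges, so the first query fails and the second succeeds.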

While these FPT approaches promise relatively small running times if the considered network has a small vertex cover, the optimal solution is large for many real-world networks. Nevertheless, it was recently observed that applying a branch-and-reduce technique on real instances is very efficient [1]. Some of the considered networks had millions of vertices, yet an optimal solution (also containing millions of vertices) was computed within seconds. Most instances were solved so quickly since the expensive branching was not necessary at all. In fact, the application of the reduction rules alone already yielded an optimal solution. Most notably, applying the dominance reduction rule, which eliminates vertices whose neighborhood contains a vertex together with its neighborhood, reduces the graph to a very small remainder on which the branching, if necessary, can be done quickly. We trace the effectiveness of the dominance rule back to two properties that are often observed in real-world networks: a heterogeneous degree distribution (the network contains many vertices of small degree and few vertices of high degree) and high clustering (the neighbors of a vertex are likely to be neighbors themselves).

We formalize these key properties using hyperbolic random graphs to analyze the performance of the dominance rule. Introduced by Krioukov et al. [20], hyperbolic random graphs are obtained by randomly distributing vertices in the hyperbolic plane and connecting any two that are geometrically close. The resulting graphs feature a power-law degree distribution and high clustering [18, 20] (the two desired properties), which can be tuned using parameters of the model. Additionally, the generated networks have a small diameter [17, 19]. All of these properties have been observed in many real-world networks such as the internet, social networks, as well as biological networks like protein-protein interaction networks [2, 3, 14]. Furthermore, Boguñá, Papadopoulos, and Krioukov showed that the internet can be embedded into the hyperbolic plane such that routing packets between network participants, greedily with respect to the hyperbolic distance, leads to routes that are very close to the shortest paths in the graph [10]. This correlation between hyperbolic distances and path lengths gives reason to believe that the network fits naturally into the hyperbolic plane.

Recently it has been shown that on hyperbolic random graphs VertexCover can be approximated in quasi-linear time within a factor of 1 + o(1), asymptotically almost surely [8]. Here, we extend this work by showing that VertexCover can be solved exactly in polynomial time on hyperbolic random graphs, with high probability. This is done by proving that even a single application of the dominance reduction rule reduces a hyperbolic random graph to a remainder with small pathwidth on which VertexCover can then be solved efficiently. Our analysis provides an explanation for why VertexCover can be solved efficiently on practical instances. We note that, while our analysis makes use of the underlying hyperbolic geometry, the algorithm itself is oblivious to it. Since our proof relies on certain structural properties of hyperbolic random graphs, we conducted experiments to test whether these are also found in real-world networks. Our results indicate that these predictions actually match the real world for a significant fraction of networks.

2 Preliminaries

Let G = (V, E) be an undirected graph. We denote the number of vertices in G with n. The neighborhood of a vertex v is defined as N(v) = {w ∈ V ∣{v, w}∈ E} and the size of the neighborhood, called the degree of v, is denoted by \(\deg (v)\). For a subset \(S \subseteq V\), we use G[S] to denote the induced subgraph of G obtained by removing all vertices in V ∖ S.

The Hyperbolic Plane

The hyperbolic plane \(\mathbb {H}^{2}\) is an infinite two-dimensional surface of constant negative curvature. For a detailed introduction to hyperbolic geometry, we refer the reader to the book by Ramsay and Richtmyer [23]. There are several models that can be used to represent \(\mathbb {H}^{2}\) (see [23, Chapter 7.8]). In this paper, we use the native representation (also called polar-coordinate model) of the hyperbolic plane, which is defined as follows. After choosing a designated pole \(O \in \mathbb {H}^{2}\), together with a polar axis, i.e., a reference ray starting at O, a point p is uniquely identified by its radius r(p), denoting the hyperbolic distance to O, and its angle (or angular coordinate) φ(p), denoting the angular distance between the polar axis and the line through p and O. The hyperbolic distance between two points p and q is given by

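\[\text {dist}(p, q) = \text {acosh}\big (\cosh (r(p)) \cosh (r(q)) - \sinh (r(p)) \sinh (r(q)) \cos ({\Delta }_{\varphi }(p, q))\big ),\]

where \(\cosh (x) = (e^{x} + e^{-x}) / 2\), \(\sinh (x) = (e^{x} - e^{-x}) / 2\) (both growing as \(e^{x}/2 \pm o(1)\)), and Δφ(p, q) = π −|π −|φ(p) − φ(q)|| denotes the angular distance between p and q. If not stated otherwise, we assume that computations on angles are performed modulo 2π.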
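For concreteness, the distance computation can be sketched as follows. The code assumes the standard hyperbolic law of cosines for curvature −1 in polar coordinates; the function names are illustrative and not taken from the original work.

```python
import math

def angular_distance(phi_p, phi_q):
    """Angular distance pi - |pi - |phi_p - phi_q||, with angles taken modulo 2*pi."""
    diff = abs(phi_p - phi_q) % (2.0 * math.pi)
    return math.pi - abs(math.pi - diff)

def hyperbolic_distance(r_p, phi_p, r_q, phi_q):
    """Distance between two points given in native (polar) coordinates."""
    delta = angular_distance(phi_p, phi_q)
    arg = (math.cosh(r_p) * math.cosh(r_q)
           - math.sinh(r_p) * math.sinh(r_q) * math.cos(delta))
    # Guard against rounding errors that push the argument slightly below 1.
    return math.acosh(max(1.0, arg))

same_ray = hyperbolic_distance(3.0, 0.5, 1.0, 0.5)          # points on one ray
opposite = hyperbolic_distance(1.0, 0.0, 2.0, math.pi)      # points on opposite rays
```

Two points on the same ray are separated by the difference of their radii, while two points on opposite rays are separated by the sum of their radii, since the shortest path then passes through the pole.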

We use \(B_{p}(r)\) to denote a disk of radius r centered at p, i.e., the set of points with hyperbolic distance at most r to p. Such a disk has an area of \(2\pi (\cosh (r) - 1)\) and circumference \(2\pi \sinh (r)\). Thus, the area and the circumference of a disk in the hyperbolic plane grow exponentially with its radius. In contrast, this growth is polynomial in Euclidean space. Therefore, representing hyperbolic shapes in the Euclidean geometry results in a distortion. In the native representation, used in our figures, circles can appear teardrop-shaped (see Fig. 2).

Hyperbolic Random Graphs

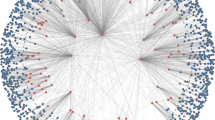

Hyperbolic random graphs are obtained by distributing n points uniformly at random within the disk \(B_{O}(R)\), as explained below, and connecting any two of them if and only if their hyperbolic distance is at most R; see Fig. 1. The disk radius R (which matches the connection threshold) depends on n, as well as the power-law exponent β = 2α + 1 (for α ∈ (1/2, 1)) and the average degree κ of the generated network, both of which are assumed to be constant. More precisely, R is given by

\[R = 2\log \left (2n/ (\pi \kappa ) \cdot (\alpha /(\alpha - 1/2))^{2} (1 + o(1)) \right ).\]

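The coordinates for the vertices are drawn as follows. For vertex v the angular coordinate, denoted by φ(v), is drawn uniformly at random from [0, 2π). The radius of v, denoted by r(v), is sampled according to the probability density function

\[f(r) = \frac {\alpha \sinh (\alpha r)}{\cosh (\alpha R) - 1} = \alpha e^{-\alpha (R - r)} \left (1 + {{\varTheta }}(e^{-\alpha R} - e^{-2 \alpha r})\right )\]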

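for r ∈ [0, R]. For r > R, f(r) = 0. This function grows exponentially as r approaches R. The joint distribution function of angles and radii is then given by

\[f(r, \varphi ) = \frac {1}{2\pi } f(r) = \frac {\alpha }{2\pi } e^{-\alpha (R - r)} \left (1 + {{\varTheta }}(e^{-\alpha R} - e^{-2 \alpha r})\right ). \tag{1}\]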

Fig. 1 A hyperbolic random graph with 979 vertices, average degree 8.3, and a power-law exponent of 2.5. In such a graph the red vertices and edges are removed by the dominance reduction rule, with high probability. Additionally, the remaining subgraph in the outer band (consisting of the blue vertices and edges) has a small pathwidth, with high probability

Note that we obtain power-law exponents β ∈ (2,3). Exponents outside of this range are atypical for hyperbolic random graphs. On the one hand, for β < 2 the average degree of the generated networks is divergent. On the other hand, for β > 3 hyperbolic random graphs degenerate: They decompose into smaller components, none having a size linear in n. The obtained graphs have logarithmic treewidth [9], meaning the VertexCover problem can be solved efficiently in that case.
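The sampling procedure described above can be sketched as follows. This is a minimal quadratic-time generator, assuming the radial density \(\alpha \sinh (\alpha r) / (\cosh (\alpha R) - 1)\) that is standard for this model and taking the disk radius R as an input parameter; the names `sample_hrg` and `hyperbolic_distance` are illustrative.

```python
import math
import random

def hyperbolic_distance(p, q):
    """Distance between points p = (r, phi) and q = (r, phi) in native coordinates."""
    (r_p, phi_p), (r_q, phi_q) = p, q
    delta = math.pi - abs(math.pi - abs(phi_p - phi_q) % (2.0 * math.pi))
    arg = (math.cosh(r_p) * math.cosh(r_q)
           - math.sinh(r_p) * math.sinh(r_q) * math.cos(delta))
    return math.acosh(max(1.0, arg))

def sample_hrg(n, alpha, R, seed=0):
    """Sample n points in the disk of radius R and connect pairs at distance <= R."""
    rng = random.Random(seed)
    points = []
    for _ in range(n):
        phi = rng.uniform(0.0, 2.0 * math.pi)
        # Inverse-transform sampling: the CDF of the assumed density is
        # (cosh(alpha * r) - 1) / (cosh(alpha * R) - 1).
        u = rng.random()
        r = math.acosh(1.0 + u * (math.cosh(alpha * R) - 1.0)) / alpha
        points.append((r, phi))
    edges = [(i, j)
             for i in range(n) for j in range(i + 1, n)
             if hyperbolic_distance(points[i], points[j]) <= R]
    return points, edges

R = 2.0 * math.log(50)
points, edges = sample_hrg(50, 0.75, R, seed=1)
```

Checking all pairs is only meant to mirror the definition; it is not an efficient generator.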

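The probability for a given vertex to lie in a certain area A of the disk is given by its probability measure \(\mu (A) = \iint _{A} f(r, \varphi ) \mathrm {d}\varphi \mathrm {d}r\). The hyperbolic distance between two vertices u and v increases with increasing angular distance between them. The maximum angular distance such that they are still connected by an edge is bounded by [18, Lemma 6]

\[\theta (r(u), r(v)) = \arccos \left (\frac {\cosh (r(u)) \cosh (r(v)) - \cosh (R)}{\sinh (r(u)) \sinh (r(v))}\right ) = 2 e^{(R - r(u) - r(v))/2} \left (1 + {{\varTheta }}(e^{R - r(u) - r(v)})\right ). \tag{2}\]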

Interval Graphs and Circular Arc Graphs

In an interval graph each vertex v is identified with an interval on the real line and two vertices are adjacent if and only if their intervals intersect. The interval width of an interval graph G, denoted by iw(G), is its maximum clique size, i.e., the maximum number of intervals that intersect in one point. For any graph the interval width is defined as the minimum interval width over all of its interval supergraphs. Circular arc graphs are a superclass of interval graphs, where each vertex is identified with a subinterval of the circle called circular arc or simply arc. The interval width of a circular arc graph G is at most twice the size of its maximum clique, since one obtains an interval supergraph of G by mapping the circular arcs into the interval [0, 2π] on the real line and replacing all intervals that were split by this mapping with the whole interval [0, 2π]. Consequently, for any graph G, if k denotes the minimum over the maximum clique number of all circular arc supergraphs \(G^{\prime }\) of G, then the interval width of G is at most 2k.
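The maximum clique size of an interval graph can be read off its interval representation with a sweep over the interval endpoints. The following is a small sketch for closed intervals; the function name is illustrative.

```python
def max_interval_overlap(intervals):
    """Maximum number of closed intervals that share a common point."""
    events = []
    for left, right in intervals:
        events.append((left, 0))   # 0 = interval opens
        events.append((right, 1))  # 1 = interval closes
    # At equal coordinates openings sort before closings, so intervals that
    # merely touch in one point are counted as intersecting.
    events.sort()
    best = current = 0
    for _, kind in events:
        if kind == 0:
            current += 1
            best = max(best, current)
        else:
            current -= 1
    return best

touching = max_interval_overlap([(0, 2), (1, 3), (2, 4)])
disjoint = max_interval_overlap([(0, 1), (2, 3)])
```

In the first example all three intervals contain the point 2, in the second no two intervals intersect.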

Treewidth and Pathwidth

A tree decomposition of a graph G is a tree T where each tree node represents a subset of the vertices of G called a bag, and the following requirements have to be satisfied: Each vertex in G is contained in at least one bag, all bags containing a given vertex in G form a connected subtree of T, and for each edge in G, there exists a bag containing both endpoints. The width of a tree decomposition is the size of its largest bag minus one. The treewidth of G is the minimum width over all tree decompositions of G. The path decomposition of a graph is defined analogously to the tree decomposition, with the constraint that the tree has to be a path. Additionally, as for the treewidth, the pathwidth of a graph G, denoted by pw(G), is the minimum width over all path decompositions of G. Clearly the pathwidth is an upper bound on the treewidth. It is known that for any graph G and any k ≥ 0, the interval width of G is at most k + 1 if and only if its pathwidth is at most k [13, Theorem 7.14]. Consequently, if \(k^{\prime }\) is the maximum clique size of a circular arc supergraph of G, then \(2k^{\prime } - 1\) is an upper bound on the pathwidth of G.

Probabilities

Since we are analyzing a random graph model, our results are of probabilistic nature. To obtain meaningful statements, we show that they hold with high probability, i.e., with probability \(1 - \mathcal {O}(n^{-1})\). The following Chernoff bounds consider the probability for a random variable to deviate too much from its expected value. This is a useful tool for showing that certain events occur with high probability.

Theorem 1 (Chernoff Bound [15, Theorem 1.1])

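Let \(X_{1}, \dots , X_{n}\) be independent random variables with \(X_{i} \in \{0, 1\}\) and let X be their sum. Then, for ε ∈ (0,1)

\[\Pr [X > (1 + \varepsilon ) \mathbb {E}[X]] \le e^{-\varepsilon ^{2}/3 \cdot \mathbb {E}[X]}.\]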

Usually, it is sufficient to show that a random variable does not exceed a certain upper bound, with high probability. The following corollary shows that an upper bound on the expected value suffices to obtain concentration.

Corollary 1

Let \(X_{1}, \dots , X_{n}\) be independent random variables with \(X_{i} \in \{0, 1\}\), let X be their sum, and let f(n) be an upper bound on \(\mathbb {E}[X]\). Then, for all ε ∈ (0,1) it holds that

\[\Pr [X > (1 + \varepsilon ) f(n)] \le e^{-\varepsilon ^{2}/3 \cdot f(n)}.\]

Proof

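Consider a random variable \(X^{\prime }\) with \(f(n) = \mathbb {E}[X^{\prime }]\) such that \(X \le X^{\prime }\) for every outcome. Note that \(X^{\prime }\) exists as \(f(n) \ge \mathbb {E}[X]\). Since \(X \le X^{\prime }\), it holds that

\[\Pr [X > (1 + \varepsilon ) f(n)] \le \Pr [X^{\prime } > (1 + \varepsilon ) f(n)] = \Pr [X^{\prime } > (1 + \varepsilon ) \mathbb {E}[X^{\prime }]].\]

Using Theorem 1 we can derive that

\[\Pr [X > (1 + \varepsilon ) f(n)] \le e^{-\varepsilon ^{2}/3 \cdot \mathbb {E}[X^{\prime }]} = e^{-\varepsilon ^{2}/3 \cdot f(n)}.\]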

□
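The bound can be sanity-checked numerically against an exact binomial tail. The sketch below assumes the multiplicative form \(\Pr [X > (1 + \varepsilon ) \mathbb {E}[X]] \le e^{-\varepsilon ^{2}/3 \cdot \mathbb {E}[X]}\) of the Chernoff bound; the parameters n = 200, p = 0.1 and the upper bound f(n) = 25 are arbitrary illustrative choices.

```python
import math

def binomial_upper_tail(n, p, threshold):
    """Exact Pr[X > threshold] for X ~ Bin(n, p)."""
    first = math.floor(threshold) + 1
    return sum(math.comb(n, k) * p**k * (1.0 - p)**(n - k)
               for k in range(first, n + 1))

def chernoff_bound(mean_bound, eps):
    """Right-hand side exp(-eps^2 / 3 * mean_bound) of the bound."""
    return math.exp(-eps**2 / 3.0 * mean_bound)

n, p, eps = 200, 0.1, 0.5
mean = n * p  # E[X] = 20

# Theorem 1: deviation from the exact expectation.
tail = binomial_upper_tail(n, p, (1.0 + eps) * mean)
bound = chernoff_bound(mean, eps)

# Corollary 1: the same statement with an upper bound f(n) >= E[X].
f_n = 25.0
tail_f = binomial_upper_tail(n, p, (1.0 + eps) * f_n)
bound_f = chernoff_bound(f_n, eps)
```

In both cases the exact tail probability stays below the corresponding bound, as the statements predict.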

3 Vertex Cover on Hyperbolic Random Graphs

Reduction rules are often applied as a preprocessing step, before using a brute force search or branching in a search tree. They simplify the input by removing parts that are easy to solve. For example, an isolated vertex does not cover any edges and can thus never be part of a minimum vertex cover. Consequently, in a preprocessing step all isolated vertices can be removed, which leads to a reduced input size without impeding the search for a minimum.

The dominance reduction rule was previously defined for the IndependentSet problem [16], and later used for VertexCover in the experiments by Akiba and Iwata [1]. Formally, vertex u dominates a neighbor v ∈ N(u) if \((N(v) \setminus \{u\}) \subseteq N(u)\), i.e., all neighbors of v are also neighbors of u. We say u is dominant if it dominates at least one vertex. The dominance rule states that u can be added to the vertex cover (and afterwards removed from the graph), without impeding the search for a minimum vertex cover. To see that this is correct, assume that u dominates v and let S be a minimum vertex cover that does not contain u. Since S has to cover all edges, it contains all neighbors of u. These neighbors include v and all of v’s neighbors, since u dominates v. Therefore, removing v from S leaves only the edge {u, v} uncovered which can be fixed by adding u instead. The resulting vertex cover has the same size as S. When searching for a minimum vertex cover of G, it is thus safe to assume that u is part of the solution and to reduce the search to G[V ∖{u}].
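The rule can be sketched on an adjacency-set representation. The code below applies it exhaustively, one vertex at a time, and re-checks dominance after every removal; this sequential order is a conservative choice made for the illustration, and the function names are hypothetical.

```python
def dominates(adj, u, v):
    """True if v is a neighbor of u and N(v) without u is a subset of N(u)."""
    return v in adj[u] and adj[v] - {u} <= adj[u]

def reduce_by_dominance(adj):
    """Exhaustively apply the dominance rule.

    `adj` maps each vertex to the set of its neighbors. Returns the set of
    vertices put into the cover and the adjacency of the remaining graph.
    """
    adj = {v: set(nbrs) for v, nbrs in adj.items()}  # work on a copy
    cover = set()
    changed = True
    while changed:
        changed = False
        for u in list(adj):
            if any(dominates(adj, u, v) for v in adj[u]):
                for w in adj[u]:
                    adj[w].discard(u)  # remove u from its neighbors' lists
                del adj[u]
                cover.add(u)
                changed = True
                break  # dominance relations may have changed, so rescan
    return cover, adj

# Toy instance (illustrative): triangle a, b, c with a pendant vertex d at c.
graph = {"a": {"b", "c"}, "b": {"a", "c"}, "c": {"a", "b", "d"}, "d": {"c"}}
cover, rest = reduce_by_dominance(graph)
```

On this toy graph the reduction alone yields a minimum vertex cover of size two, and the remaining graph has no edges left, so no branching is needed.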

In the remainder of this section, we study the effectiveness of the dominance reduction rule on hyperbolic random graphs and conclude that VertexCover can be solved efficiently on these graphs. Our results are summarized in the following main theorem.

Theorem 2

Let G be a hyperbolic random graph on n vertices. Then the VertexCover problem on G can be solved in poly(n) time, with high probability.

The proof of Theorem 2 consists of two parts that make use of the underlying hyperbolic geometry. In the first part, we show that applying the dominance reduction rule once removes all vertices in the inner part of the hyperbolic disk with high probability, as depicted in Fig. 1. We note that this is independent of the order in which the reduction rule is applied, as dominant vertices remain dominant after removing other dominant vertices. In the second part, we consider the induced subgraph containing the remaining vertices near the boundary of the disk (blue vertices in Fig. 1). We prove that this subgraph has a small pathwidth, by showing that there is a circular arc supergraph with a small interval width. Consequently, a tree decomposition of this subgraph can be computed efficiently. Finally, we obtain a polynomial time algorithm for VertexCover by first applying the reduction rules and afterwards solving VertexCover on the remaining subgraph using dynamic programming on the tree decomposition of small width.

3.1 Dominance on Hyperbolic Random Graphs

Recall that a hyperbolic random graph is obtained by distributing n vertices in a hyperbolic disk \(B_{O}(R)\) and that any two are connected if their distance is at most R. Consequently, one can imagine the neighborhood of a vertex u as another disk \(B_{u}(R)\). Vertex u dominates another vertex v if its neighborhood disk completely contains that of v (both constrained to \(B_{O}(R)\)), as depicted in Fig. 2 (left). We define the dominance area D(u) of u to be the area containing all such vertices v. That is, \(D(u) = \{ p \in B_{O}(R) \mid B_{p}(R) \cap B_{O}(R) \subseteq B_{u}(R) \}\). The result is illustrated in Fig. 2 (right). We note that lying in D(u) is sufficient, but not necessary, for a vertex v to be dominated by u.

Given the radius r(u) of vertex u we can now compute a lower bound on the probability that u dominates another vertex, i.e., the probability that at least one vertex lies in D(u), by determining the measure μ(D(u)). To this end, we first define δ(r(u), r(v)) to be the maximum angular distance between two vertices u and v such that v lies in D(u).

Lemma 1

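Let \(u, v \in B_{O}(R)\) be two points. Then, v ∈ D(u) if and only if r(v) ≥ r(u) and Δφ(u, v) ≤ δ(r(u), r(v)), where

\[\delta (r(u), r(v)) = 2 \left (e^{-r(u)/2} - e^{-r(v)/2}\right ) + {{\varTheta }}(e^{-3/2 \cdot r(u)}) - {{\varTheta }}(e^{-3/2 \cdot r(v)}).\]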

Proof

To prove the claim, we consider the possible positions that v can have relative to u and identify the ones for which v ∈ D(u) holds.

Assume without loss of generality that φ(u) = 0, as depicted in Fig. 3. By definition, v ∈ D(u) if and only if \(B_{v}(R) \cap B_{O}(R) \subseteq B_{u}(R)\). First note that this is not the case if r(v) < r(u), as then for the point p = (R − r(v), π) it holds that \(p \in B_{v}(R) \cap B_{O}(R)\) but \(p \notin B_{u}(R)\) for all φ(v) ∈ [0, 2π). For the case when r(v) ≥ r(u), it was shown that \(B_{v}(R) \cap B_{O}(R) \subseteq B_{u}(R)\) holds when u and v have the same angular coordinate [7, Lemma 1]. This shows that the first condition (r(v) ≥ r(u)) is necessary for v to be in the dominance area of u, and it remains to determine the maximum angular deviation between the two points, such that this is still the case.

Fig. 3 Left: Vertex v is in the dominance area of u, since \(B_{v}(R) \cap B_{O}(R)\) (red area) is contained in \(B_{u}(R)\). The intersections \(i_{u, v}, i^{\prime }_{u, v}\) mark the separation between \(B_{v}(R) \setminus B_{u}(R)\) (green area) and the rest of \(B_{v}(R)\). If v is rotated in counterclockwise direction, \(i_{v, O}\) and \(i_{u, v}\) move along the red lines towards \(i_{u, O}\). Right: Vertex v is rotated such that \(i_{u, v} = i_{u, O}\)

To this end, we argue about intersections of \(B_{u}(R)\), \(B_{v}(R)\), and \(B_{O}(R)\), which we use as indicators whether v ∈ D(u) holds. For now assume that φ(v) = φ(u) and consider the two intersections \(i_{u, v}, i^{\prime }_{u, v}\) of \(B_{u}(R)\) with \(B_{v}(R)\), as depicted in Fig. 3 (left). Since \(B_{v}(R) \cap B_{O}(R) \subseteq B_{u}(R)\) holds by [7, Lemma 1] and since circles are convex, we know that \(B_{v}(R) \setminus B_{u}(R)\) (the green area in Fig. 3 (left)) lies outside of \(B_{O}(R)\) and so do the two intersections \(i_{u, v}, i^{\prime }_{u, v}\). For the same reason, we know that \(i_{v, O}\), the intersection of \(B_{v}(R)\) with \(B_{O}(R)\) with \(\varphi (i_{v, O}) \in [0, \pi ]\), lies in \(B_{u}(R)\). It follows that, for the analogously defined intersection \(i_{u, O}\) we have \(\varphi (i_{v, O}) \le \varphi (i_{u, O})\).

We now relax the assumption that φ(v) = φ(u) and instead imagine that we increase the angle between u and v by some δ > 0, which denotes a counterclockwise rotation of v around the origin. (For symmetry reasons the argumentation about a clockwise rotation is analogous.) Then, \(i_{u, v}\) and \(i^{\prime }_{u, v}\) move along the boundary of \(B_{u}(R)\) and, in particular, \(i_{u, v}\) moves towards \(i_{u, O}\). Note that at the same time \(i_{v, O}\) moves towards \(i_{u, O}\) as well. Both movements are depicted using red lines in Fig. 3 (left). As long as \(i_{u, v}\) has not surpassed \(i_{u, O}\), neither of the two intersections of \(B_{v}(R)\) with \(B_{u}(R)\) lies inside of \(B_{O}(R)\), which means that \(B_{v}(R) \setminus B_{u}(R)\) remains outside of \(B_{O}(R)\) and we maintain the property that \(B_{v}(R) \cap B_{O}(R) \subseteq B_{u}(R)\). As we keep increasing δ, we eventually get to the point where \(i_{u, v}\) reaches \(i_{u, O}\), as depicted in Fig. 3 (right). Note that at this point we also have \(i_{v, O} = i_{u, v}\). Consequently, if we were to rotate v any further, we would have \(i_{v, O} \notin B_{u}(R)\), meaning \(B_{v}(R) \cap B_{O}(R)\) would no longer be a subset of \(B_{u}(R)\). It follows that \(B_{v}(R) \cap B_{O}(R) \subseteq B_{u}(R)\) if and only if \(\varphi (i_{v, O}) \le \varphi (i_{u, O})\).

To compute the maximum angular distance between u and v such that this is the case, we again start with the assumption that φ(v) = φ(u) = 0, and determine the maximum angle δ(r(u), r(v)) such that \(\varphi (i_{v, O}) + \delta (r(u), r(v)) \le \varphi (i_{u, O})\). Since \(i_{u, O}\) and \(i_{v, O}\) have radius R and hyperbolic distance R from u and v, respectively, we can apply (2) to compute their angular coordinates as \(\varphi (i_{u, O}) = \theta (r(u), R)\) and \(\varphi (i_{v, O}) = \theta (r(v), R)\), respectively. Substituting these angles in the above inequality yields θ(r(v), R) + δ(r(u), r(v)) ≤ θ(r(u), R). We can now solve for δ(r(u), r(v)) and apply (2) to obtain

\[\delta (r(u), r(v)) = \theta (r(u), R) - \theta (r(v), R) = 2 \left (e^{-r(u)/2} - e^{-r(v)/2}\right ) + {{\varTheta }}(e^{-3/2 \cdot r(u)}) - {{\varTheta }}(e^{-3/2 \cdot r(v)}).\]

□

Using Lemma 1 we can now compute the probability for a given vertex to lie in the dominance area of u. We note that this probability grows roughly like \(2/\pi \cdot e^{-r(u)/2}\), which is a constant fraction of the measure of the neighborhood disk of u, which grows as \(\alpha /(\alpha - 1/2) \cdot 2/\pi \cdot e^{-r(u)/2}\) [18, Lemma 3.2]. Consequently, the expected number of vertices that u dominates is at least a constant fraction of the expected number of its neighbors.

Lemma 2

Let u be a vertex with radius r(u) ≥ R/2. The probability for a given vertex to lie in D(u) is given by

Proof

The probability for a given vertex v to lie in D(u) is obtained by integrating the probability density (given by (1)) over D(u).

Since r(u) ≥ R/2 and r ∈ [r(u), R] we have \({{\varTheta }}(e^{-3/2 \cdot r(u)}) - {{\varTheta }}(e^{-3/2 \cdot r}) = \pm \mathcal {O}(e^{-3/4 \cdot R})\) and \((1 + {{\varTheta }}(e^{-\alpha R} - e^{-2 \alpha r})) = (1 + \mathcal {O}(e^{- \alpha R}))\). Due to the linearity of integration, constant factors within the integrand can be moved out of the integral, which yields

The remaining integrals can be computed easily and we obtain

It remains to simplify the remaining error terms. To do this, we consider the three summands in the above expression separately, starting with the first. There, the error term can be expanded to obtain

Now recall that R is defined as \(R = 2\log \left (2n/ (\pi \kappa ) \cdot (\alpha /(\alpha - 1/2))^{2} (1 + o(1)) \right )\), which is equivalent to \(R = 2\log (n) + C\) for some constant \(C \in \mathbb {R}\), since α and κ are assumed to be constants. Moreover, since r(u) ≥ R/2 holds by assumption, we have \(e^{\alpha r(u)} = \omega (1)\) and thus \(\mathcal {O}(1) - e^{\alpha r(u)} = - \mathcal {O}(e^{\alpha r(u)})\). We obtain

Again, since \(R = 2\log (n) + C\) for a constant C, we have \(e^{-\alpha R} = o(1)\) and thus \(\mathcal {O}(e^{-\alpha (R - r(u))}) = \mathcal {O}(e^{\alpha r(u)})\). Therefore, the error term further simplifies to \((1 - \mathcal {O}(e^{-\alpha (R - r(u))}))\) and (3) becomes

Now consider the second summand. Since α is constant, so is the first fraction. Moreover, as \(R = 2\log (n) + C\) for a constant C, we have \((1 + \mathcal {O}(e^{-\alpha R})) = (1 + o(1)) = \mathcal {O}(1)\). And since r(u) ≤ R, the exponent in the last factor is non-positive, from which we can conclude that this factor is also \(\mathcal {O}(1)\). The second summand therefore simplifies to \(\mathcal {O}(e^{-R/2}) = \mathcal {O}(n^{-1})\). Finally, the last summand can be reduced to \(\mathcal {O}(e^{-3/4 \cdot R}) = \mathcal {O}(n^{-3/2})\), which yields

Combining the last two summands then yields the claim. □
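The asymptotic identities used in the proof above follow directly from writing the radius as \(R = 2\log (n) + C\). As a short worked step, treating C as a constant as the proof does:

```latex
\begin{align*}
e^{-R/2}          &= e^{-C/2} \cdot n^{-1}                = \Theta(n^{-1}), \\
e^{-3/4 \cdot R}  &= e^{-3C/4} \cdot n^{-3/2}             = \Theta(n^{-3/2}), \\
e^{-\alpha R}     &= e^{-\alpha C} \cdot n^{-2\alpha}     = o(1)
                   \quad \text{for } \alpha \in (1/2, 1).
\end{align*}
```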

The following lemma shows that, with high probability, all vertices that are not too close to the boundary of the disk dominate at least one vertex.

Lemma 3

Let G be a hyperbolic random graph on n vertices, with power-law exponent 2α + 1 and average degree κ. Then, there is a constant \(c > 2/(\kappa (1 - 1/(2\alpha ))^{2})\), such that all vertices u with \(r(u) \le \rho = R - 2\log \log (n^{c})\) are dominant, with high probability.

Proof

Vertex u is dominant if at least one vertex lies in D(u). To show this for any u with r(u) ≤ ρ, it suffices to show it for r(u) = ρ, since μ(D(u)) increases with decreasing radius. To determine the probability that at least one vertex lies in D(u), we use Lemma 2 and obtain

By substituting \(R = 2\log \left (2n/ (\pi \kappa ) \cdot (\alpha /(\alpha - 1/2))^{2} (1 + o(1)) \right )\), we obtain

Moreover, since 1/(1 + x) = 1 −Θ(x) for \(x \in \mathbb {R}\) with x = ±o(1), we can conclude that

The probability of at least one vertex falling into D(u) is now given by

Consequently, for large enough n we can choose \(c > 2/(\kappa (1 - 1/(2\alpha ))^{2})\), such that the probability of a vertex at radius ρ being dominant is at least \(1 - {{\varTheta }}(n^{-2})\), allowing us to apply the union bound. □

Corollary 2

Let G be a hyperbolic random graph on n vertices, with power-law exponent 2α + 1 and average degree κ. Then, there exists a constant \(c > 2/(\kappa (1 - 1/(2\alpha ))^{2})\), such that all vertices with radius at most \(\rho = R - 2\log \log (n^{c})\) are removed by the dominance rule, with high probability.

By Corollary 2 the dominance rule removes all vertices of radius at most ρ. Consequently, all remaining vertices have radius at least ρ. We refer to this part of the disk as outer band. More precisely, the outer band is defined as \(B_{O}(R) \setminus B_{O}(\rho )\). It remains to show that the pathwidth of the subgraph induced by the vertices in the outer band is small.
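To get a feeling for how thin the outer band is, the radii R and ρ can be evaluated numerically. The sketch below drops the (1 + o(1)) factor in the definition of R, which is a simplifying assumption, and picks α = 0.75, κ = 8 and a constant c slightly above the required lower bound purely for illustration.

```python
import math

def disk_radius(n, alpha, kappa):
    """R = 2 log(2n / (pi * kappa) * (alpha / (alpha - 1/2))^2), without the (1 + o(1)) factor."""
    return 2.0 * math.log(2.0 * n / (math.pi * kappa) * (alpha / (alpha - 0.5)) ** 2)

def inner_radius(n, alpha, kappa, c):
    """rho = R - 2 log log(n^c), where log(n^c) is computed as c * log(n)."""
    return disk_radius(n, alpha, kappa) - 2.0 * math.log(c * math.log(n))

def lower_bound_on_c(alpha, kappa):
    """The value 2 / (kappa * (1 - 1/(2 * alpha))^2) that c has to exceed."""
    return 2.0 / (kappa * (1.0 - 1.0 / (2.0 * alpha)) ** 2)

alpha, kappa = 0.75, 8.0
c = lower_bound_on_c(alpha, kappa) + 0.5  # illustrative choice above the bound

band_widths = []
for n in (10**4, 10**6, 10**8):
    R = disk_radius(n, alpha, kappa)
    rho = inner_radius(n, alpha, kappa, c)
    band_widths.append(R - rho)
    print(f"n={n:>9}  R={R:6.2f}  rho={rho:6.2f}  band width={R - rho:5.2f}")
```

The width R − ρ of the outer band grows only doubly logarithmically in n, whereas R itself grows logarithmically.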

3.2 Pathwidth in the Outer Band

In the following, we use \(G|_{r(v) \ge r} = G[\{v \in V \mid r(v) \ge r\}]\) to denote the induced subgraph of G that contains all vertices with radius at least r. To show that the pathwidth of \(G|_{r(v) \ge \rho }\) (the induced subgraph in the outer band) is small, we first show that there is a circular arc supergraph \(\hat {G}|_{r(v) \ge \rho }\) of \(G|_{r(v) \ge \rho }\) with a small maximum clique. We use \(\hat {G}\) to denote a circular arc supergraph of a hyperbolic random graph G, which is obtained by assigning each vertex v an angular interval \(I_{v}\) on the circle, such that the intervals of two adjacent vertices intersect. More precisely, for a vertex v, we set \(I_{v} = [\varphi (v) - \theta (r(v), r(v)), \varphi (v) + \theta (r(v), r(v))]\). Intuitively, this means that the interval of a vertex contains a superset of all its neighbors that have a larger radius, as can be seen in Fig. 4. The following lemma shows that \(\hat {G}\) is actually a supergraph of G.

Lemma 4

Let G = (V, E) be a hyperbolic random graph. Then \(\hat {G}\) is a supergraph of G.

Proof

Let {u, v} ∈ E be any edge in G. To show that \(\hat {G}\) is a supergraph of G we need to show that u and v are also adjacent in \(\hat {G}\), i.e., \(I_{u} \cap I_{v} \ne \emptyset \). Without loss of generality assume r(u) ≤ r(v). Since u and v are adjacent in G, the hyperbolic distance between them is at most R. It follows that their angular distance Δφ(u, v) is bounded by θ(r(u), r(v)). Since θ(r(u), r(v)) ≤ θ(r(u), r(u)) for r(u) ≤ r(v), we have Δφ(u, v) ≤ θ(r(u), r(u)). As \(I_{u}\) extends by θ(r(u), r(u)) from φ(u) in both directions, it follows that \(\varphi (v) \in I_{u}\). Since \(\varphi (v) \in I_{v}\) as well, the two intervals intersect. □

Note that \(\hat {G}\) is still a supergraph of G, after removing a vertex from both G and \(\hat {G}\). Consequently, \(\hat {G}|_{r(v) \ge \rho }\) is a supergraph of \(G|_{r(v) \ge \rho }\). It remains to show that \(\hat {G}|_{r(v) \ge \rho }\) has a small maximum clique number, which is given by the maximum number of arcs that intersect at any angle. To this end, we first compute this number at a given angle, which we set to 0 without loss of generality. Let \(A_{r}\) denote the area of the disk containing all vertices v with radius r(v) ≥ r whose interval \(I_{v}\) intersects 0, as illustrated in Fig. 5. The following lemma describes the probability for a given vertex to lie in \(A_{r}\).

Lemma 5

Let G be a hyperbolic random graph and let r ≥ R/2. The probability for a given vertex to lie in \(A_{r}\) is bounded by

Proof

We obtain the measure of \(A_{r}\) by integrating the probability density function over \(A_{r}\). Due to the definition of \(I_{v}\) we can conclude that \(A_{r}\) includes all vertices v with radius r(v) ≥ r whose angular distance to 0 is at most θ(r(v), r(v)), defined in (2). We obtain

As before, we can conclude that \((1 + {{\varTheta }}(e^{-\alpha R} - e^{-2 \alpha r})) = (1 + \mathcal {O}(e^{-\alpha R}))\), since r ≥ R/2. By moving constant factors out of the integral, the expression can be simplified to

We split the sum in the integral and deal with the resulting integrals separately.

By placing \(1/(1 - \alpha ) \cdot e^{-(1 - \alpha ) r}\) outside of the parentheses we obtain

Simplifying the remaining error terms then yields the claim. □

We can now bound the maximum clique number in \(\hat {G}|_{r(v) \ge \rho }\) and with that its interval width \(\text {iw}(\hat {G}|_{r(v) \ge \rho })\).

Theorem 3

Let G be a hyperbolic random graph on n vertices and let r ≥ R/2. Then there exists a constant c such that, with high probability, it holds that \(\text {iw}(\hat {G}|_{r(v) \ge r}) = \mathcal {O}(\log (n))\), if \(r \ge R - 1/(1 - \alpha ) \cdot \log \log (n^{c})\), and otherwise

Proof

We start by determining the expected number of arcs that intersect at a given angle, which can be done by computing the expected number of vertices in \(A_{r}\), using Lemma 5:

It remains to show that this bound holds with high probability at every angle. To this end, we apply a Chernoff bound (Corollary 1) to conclude that for any ε ∈ (0,1) it holds that

In order to see that this probability is sufficiently small, we first take a closer look at \(g(r^{\prime })\) with \(r^{\prime } = R - 1/(1 - \alpha ) \cdot \log \log (n^{c})\) and afterwards argue about the different values that r can take relative to \(r^{\prime }\).

Substituting \(R = 2\log \left (2n/(\pi \kappa ) \cdot (\alpha / (\alpha - 1/2))^{2} (1 + o(1)) \right )\) we obtain

Now consider the case where \(r < r^{\prime }\). Then, \(g(r) > g(r^{\prime })\) and applying Corollary 1 with ε = 1/4 yields

For the case, where \(r \ge r^{\prime }\), note that \(\mathbb {E}[|\{ v \in A_{r}\}|]\) decreases with increasing r. Therefore, \(g(r^{\prime }) \in \mathcal {O}(\log (n))\) is a pessimistic but valid upper bound on g(r) and we obtain the same bound on \(\Pr [|\{ v \in A_{r}\}| > 5/4 \cdot g(r^{\prime })]\).

In both cases, we can choose c such that \(|\{v \in A_{r}\}| \le 5/4 \cdot g(r)\) holds with probability \(1 - \mathcal {O}(n^{-c^{\prime }})\) for any \(c^{\prime }\) at a given angle. In order to see that it holds at every angle, note that it suffices to show that it holds at all arc endings as the number of intersecting arcs does not change in between arc endings. Since there are exactly 2n arc endings, we can apply the union bound and obtain that the bound holds with probability \(1 - \mathcal {O}(n^{-c^{\prime } + 1})\) for any \(c^{\prime }\) at every angle. Since g(r) is an upper bound on the maximum clique size of \(\hat {G}|_{r(v) \ge r}\), the interval width of \(\hat {G}|_{r(v) \ge r}\) is at most twice as large, as argued in Section 2. □

Since the interval width of a circular arc supergraph of G is an upper bound on the pathwidth of G [13, Theorem 7.14] and since \(\rho = R - 2\log \log (n^{c}) \ge R - 1/(1 - \alpha ) \cdot \log \log (n^{c})\) for α ∈ (1/2,1), where 1/(1 − α) > 2, we immediately obtain the following corollary.

Corollary 3

Let G be a hyperbolic random graph on n vertices and let \(G|_{r(v) \ge \rho }\) be the subgraph obtained by removing all vertices with radius at most \(\rho = R - 2\log \log (n^{c})\). Then, with high probability, it holds that \(\text {pw}(G|_{r(v) \ge \rho }) = \mathcal {O}(\log (n))\).

We are now ready to prove our main theorem, which we restate for the sake of readability.

Theorem 4

Let G be a hyperbolic random graph on n vertices. Then the VertexCover problem in G can be solved in poly(n) time, with high probability.

Proof

Consider the following algorithm that finds a minimum vertex cover of G. We start with an empty vertex cover S. Initially, all dominant vertices are added to S, which is correct due to the dominance rule. By Lemma 3, this includes all vertices of radius at most \(\rho = R - 2\log \log (n^{c})\), for some constant c, with high probability. Finding all dominant vertices takes poly(n) time, since it suffices to compare the neighborhoods of every pair of adjacent vertices. It remains to determine a vertex cover of \(G|_{r(v) \ge \rho }\). By Corollary 3, the pathwidth of \(G|_{r(v) \ge \rho }\) is \(\mathcal {O}(\log (n))\), with high probability. Since the pathwidth is an upper bound on the treewidth, we can find a tree decomposition of \(G|_{r(v) \ge \rho }\) and solve the VertexCover problem in \(G|_{r(v) \ge \rho }\) in poly(n) time [13, Theorems 7.18 and 7.9]. □
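The first phase of this algorithm can be sketched as follows. The sketch assumes the usual formulation of the dominance rule, in which a vertex u dominates a neighbor v if every other neighbor of v is also adjacent to u, and it represents the graph as a hypothetical dictionary mapping each vertex to its set of neighbors. Unlike the proof, which adds all dominant vertices at once, the sketch applies the rule one vertex at a time and re-checks dominance after each removal. The second phase (dynamic programming over a tree decomposition) is omitted.

```python
from typing import Dict, Hashable, Set, Tuple

Graph = Dict[Hashable, Set[Hashable]]


def dominates(adj: Graph, u: Hashable, v: Hashable) -> bool:
    """u dominates its neighbor v if every other neighbor of v is adjacent to u."""
    return v in adj[u] and (adj[v] - {u}) <= adj[u]


def reduce_by_dominance(adj: Graph) -> Tuple[Set[Hashable], Graph]:
    """Apply the dominance rule one vertex at a time.

    Returns the vertices moved into the cover and the remaining graph.
    """
    rest = {v: set(nbrs) for v, nbrs in adj.items()}  # work on a copy
    cover: Set[Hashable] = set()
    changed = True
    while changed:
        changed = False
        for u in list(rest):
            if any(dominates(rest, u, v) for v in rest[u]):
                cover.add(u)
                for w in rest.pop(u):  # delete u and its incident edges
                    rest[w].discard(u)
                changed = True
                break  # neighborhoods changed, so rescan from scratch
    return cover, rest
```

On a star, the center dominates every leaf, so the reduction alone already yields a minimum vertex cover; on a cycle of length four, no vertex dominates a neighbor and the graph is returned unchanged.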

Moreover, linking the radius of a vertex in Theorem 3 with its expected degree leads to the following corollary, which is interesting in its own right. It links the pathwidth to the degree d in the graph \(G|_{\deg (v) \le d} = G[ \{ v \in V \mid \deg (v) \le d\}]\), i.e., the subgraph of G induced by vertices of degree at most d.

Corollary 4

Let G be a hyperbolic random graph and let \(d \le \sqrt {n}\). Then, with high probability, \(\text {pw}(G|_{\deg (v) \le d}) = \mathcal {O}(d^{2 - 2\alpha } + \log (n))\).
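As a small illustration of the graph considered in this corollary, the following sketch builds the subgraph induced by all vertices of degree at most d. Degrees are measured in G itself and not in the resulting subgraph. The adjacency-dictionary representation and the name `low_degree_subgraph` are assumptions made for this example.

```python
from typing import Dict, Hashable, Set

Graph = Dict[Hashable, Set[Hashable]]


def low_degree_subgraph(adj: Graph, d: int) -> Graph:
    """Subgraph induced by the vertices whose degree in adj is at most d."""
    keep = {v for v, nbrs in adj.items() if len(nbrs) <= d}
    # Restrict every kept neighborhood to the kept vertices.
    return {v: adj[v] & keep for v in keep}
```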

Proof

Consider the radius \(r = R - 2 \log (\xi d)\) for some constant ξ > 0, and the graph \(G|_{r(v) \ge r}\) that is obtained by removing all vertices of radius at most r. In the following, we show that \(G|_{r(v) \ge r}\) is a supergraph of \(G|_{\deg (v) \le d}\) for large enough ξ. Afterwards, we bound the pathwidth of \(G|_{r(v) \ge r}\).

The expected degree of a vertex with radius r is given by

By substituting \(r = R - 2\log (\xi d)\) together with the expression for R, which is given by \(R = 2\log (2n/(\pi \kappa ) \cdot (\alpha / (\alpha - 1/2))^{2} (1 + o(1)))\), we obtain

Note that for large enough n we can choose ξ sufficiently large, such that

for any ε ∈ (0,1). This allows us to apply the second inequality in the Chernoff bound in Theorem 1 to conclude that

First assume that \(d \ge \log (n)^{1/(2 - 2\alpha )}\). We handle the other case later. Note that 1/(2 − 2α) > 1 for α ∈ (1/2,1) and, thus, \(d \ge \log (n)\). Therefore, we can choose n and ξ sufficiently large, such that

Since a smaller radius implies a larger expected degree, we can derive the same bound for a given vertex of radius at most r. By applying the union bound we obtain that, with high probability, no vertex with radius at most r has degree less than or equal to d. Conversely, all vertices with degree at most d have radius at least r. Consequently, \(G|_{r(v) \ge r}\) is a supergraph of \(G|_{\deg (v) \le d}\).

To prove the claim, it remains to bound the pathwidth of \(G|_{r(v) \ge r}\). If \(r > R - 1/(1 - \alpha ) \cdot \log \log (n^{c})\), we can apply the first part of Theorem 3 to obtain \(\text {iw}(\hat {G}|_{r(v) \ge r}) = \mathcal {O}(\log (n))\). Otherwise, we use part two to conclude that the interval width of \(G|_{r(v) \ge r}\) is at most

As argued in Section 2, the interval width is an upper bound on the pathwidth.

For the case where \(d < \log (n)^{1/(2-2\alpha )}\) (which we excluded above), consider \(G|_{\deg (v) \le d^{\prime }}\) for \(d^{\prime } = \log (n)^{1/(2-2\alpha )} > d\). As we already proved the corollary for \(d^{\prime }\), we obtain \(\text {pw}(G|_{\deg (v) \le d^{\prime }}) = \mathcal {O}(d^{\prime 2 - 2\alpha } + \log (n)) = \mathcal {O}(\log (n))\). As \(G|_{\deg (v) \le d}\) is a subgraph of \(G|_{\deg (v) \le d^{\prime }}\), the same bound holds for \(G|_{\deg (v) \le d}\). □
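The simplification used in this last case can be spelled out as a short worked calculation, stated only in terms of the quantities defined above. With \(d^{\prime } = \log (n)^{1/(2-2\alpha )}\), the polynomial term collapses to a logarithm, and the resulting bound is covered by the bound claimed in the corollary for the smaller degree d:

```latex
\begin{align*}
  d^{\prime\, 2 - 2\alpha}
    &= \left( \log(n)^{1/(2 - 2\alpha)} \right)^{2 - 2\alpha}
     = \log(n), \\
  \mathcal{O}\left( d^{\prime\, 2 - 2\alpha} + \log(n) \right)
    &= \mathcal{O}(2 \log(n))
     = \mathcal{O}(\log(n))
     \subseteq \mathcal{O}\left( d^{2 - 2\alpha} + \log(n) \right).
\end{align*}
```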

4 Empirical Evaluation

Our results show that a heterogeneous degree distribution as well as high clustering make the dominance rule very effective. This matches the behavior for real-world networks, which typically exhibit these two properties. However, our analysis actually makes more specific predictions: (I) vertices with sufficiently high degree usually have at least one neighbor they dominate and can thus safely be included in the vertex cover; and (II) the graph remaining after deleting the high-degree vertices has simple structure, i.e., small pathwidth.

To see whether this matches the real world, we ran experiments on 59 networks from several network datasets [4, 5, 21, 22, 24]. Although the focus of this paper is on the theoretical analysis on hyperbolic random graphs, we briefly report on our experimental results; see Table 1 in the Appendix. Out of the 59 instances, we can solve VertexCover for 47 networks in reasonable time. We refer to these as easy, while the remaining 12 are called hard. Note that our theoretical analysis aims at explaining why the easy instances are easy.

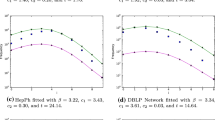

Recall from Lemma 3 that all vertices with radius at most \(R - 2\log \log (n^{c})\), with \(c > 2/(\kappa (1 - 1/(2\alpha ))^{2})\), dominate with high probability. This corresponds to an expected degree of \(2 \alpha / (\alpha - 1/2) \cdot \log (n)\). Figure 6 shows the percentage of dominant vertices among the ones above this degree, for the considered real-world networks. For more than 66% of the 59 networks, more than 75% of these vertices were in fact dominant (red and blue). For more than 40% of the networks, more than 95% were dominant (blue). Restricted to the 47 easy instances, these increase to 82% and 51% of networks, respectively.
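The measurement described above could be reproduced along the following lines. This is a minimal sketch under several assumptions: the graph is a hypothetical adjacency dictionary, the logarithm is the natural one, and the value of α for a given real-world network has to be supplied by the caller, since this section does not state how it was chosen. Dominance is evaluated in the original graph only.

```python
import math
from typing import Dict, Hashable, Optional, Set

Graph = Dict[Hashable, Set[Hashable]]


def degree_threshold(n: int, alpha: float) -> float:
    """Expected-degree threshold 2*alpha/(alpha - 1/2) * log(n) from the text."""
    return 2 * alpha / (alpha - 0.5) * math.log(n)


def is_dominant(adj: Graph, u: Hashable) -> bool:
    """True if u dominates at least one of its neighbors."""
    return any((adj[v] - {u}) <= adj[u] for v in adj[u])


def dominant_fraction(adj: Graph, threshold: float) -> Optional[float]:
    """Fraction of vertices with degree above threshold that are dominant.

    Returns None if no vertex exceeds the threshold.
    """
    high = [u for u in adj if len(adj[u]) > threshold]
    if not high:
        return None
    return sum(is_dominant(adj, u) for u in high) / len(high)
```

A call such as `dominant_fraction(adj, degree_threshold(len(adj), alpha))` then yields the per-network value that is aggregated in the discussion above.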

Experiments concerning the pathwidth of the resulting graph are much more difficult, due to the lack of efficient tools. Therefore, we used the tool by Tamaki et al. [25] to heuristically compute upper bounds on the treewidth instead. As in our analysis, we only removed vertices that dominate in the original graph instead of applying the reduction rule exhaustively. On the resulting subgraphs, the treewidth heuristic ran with a 15 min timeout. The resulting treewidth is at most 50 for 44% of the networks and at most 5 for 25% (see Fig. 7). Restricted to easy instances, the values increase to 55% and 32%, respectively. Note how on most graphs where almost all high-degree vertices are dominant (blue), we obtained the smallest treewidths. This indicates that on networks where our first prediction was fulfilled, so was the second one.

Fig. 7 Upper bounds on the treewidth of the considered graphs, after removing initially dominant vertices. Dashed and dotted green lines denote a bound of 5 and 50, respectively. Colors represent the percentage of initially dominant high-degree vertices, analogous to Fig. 6. Transparent dots represent hard instances.

While hyperbolic random graphs are clearly an idealized representation of real-world graphs, these experiments indicate that the predictions derived from the model match the real world, at least for a significant fraction of networks.

References

Akiba, T., Iwata, Y.: Branch-and-Reduce Exponential/FPT Algorithms in Practice: A Case Study of Vertex Cover. Theor. Comput. Sci. 609, 211–225 (2016). https://doi.org/10.1016/j.tcs.2015.09.023

Albert, R.: Scale-Free networks in cell biology. J. Cell Sci. 118 (21), 4947–4957 (2005). https://doi.org/10.1242/jcs.02714

Albert, R., Barabási, A.L.: Statistical Mechanics of Complex Networks. Rev. Mod. Phys. 74, 47–97 (2002). https://doi.org/10.1103/RevModPhys.74.47

Arenas, A., Barabási, A.L., Batagelj, V., Mrvar, A., Newman, M., Opsahl, T.: Gephi Datasets. https://github.com/gephi/gephi/wiki/Datasets

Batagelj, V., Mrvar, A.: Pajek datasets. http://vlado.fmf.uni-lj.si/pub/networks/data/ (2006)

Bläsius, T., Fischbeck, P., Friedrich, T., Katzmann, M.: Solving Vertex Cover in Polynomial Time on Hyperbolic Random Graphs. In: 37th International Symposium on Theoretical Aspects of Computer Science, STACS. https://doi.org/10.4230/LIPIcs.STACS.2020.25, pp 25:1–25:14. Montpellier, France (2020)

Bläsius, T., Freiberger, C., Friedrich, T., Katzmann, M., Montenegro-Retana, F., Thieffry, M.: Efficient Shortest Paths in Scale-Free Networks with Underlying Hyperbolic Geometry. In: 45th International Colloquium on Automata, Languages, and Programming (ICALP), pp 20:1–20:14 (2018). https://doi.org/10.4230/LIPIcs.ICALP.2018.20

Bläsius, T., Friedrich, T., Katzmann, M.: Efficiently Approximating Vertex Cover on Scale-Free Networks with Underlying Hyperbolic Geometry. To appear in the proceedings of the 29th Annual European Symposium on Algorithms (ESA) (2021)

Bläsius, T., Friedrich, T., Krohmer, A.: Hyperbolic Random Graphs: Separators and Treewidth. In: 24th Annual European Symposium on Algorithms (ESA), pp 15:1–15:16 (2016). https://doi.org/10.4230/LIPIcs.ESA.2016.15

Boguñá, M., Papadopoulos, F., Krioukov, D.: Sustaining the Internet with Hyperbolic Mapping. Nat. Commun. 1, 62 (2010). https://doi.org/10.1038/ncomms1063

Cai, L., Juedes, D.: On the Existence of Subexponential Parameterized Algorithms. J. Comput. Syst. Sci. 67, 789–807 (2003). https://doi.org/10.1016/S0022-0000(03)00074-6

Chen, J., Kanj, I. A., Xia, G.: Improved Upper Bounds for Vertex Cover. Theor. Comput. Sci. 411(40), 3736–3756 (2010). https://doi.org/10.1016/j.tcs.2010.06.026

Cygan, M., Fomin, F. V., Kowalik, Ł., Lokshtanov, D., Marx, D., Pilipczuk, M., Pilipczuk, M., Saurabh, S.: Parameterized Algorithms. Springer (2015)

Dorogovtsev, S.: Lectures on Complex Networks. Oxford University Press, Inc. https://doi.org/10.1093/acprof:oso/9780199548927.001.0001 (2010)

Dubhashi, D. P., Panconesi, A.: Concentration of Measure for the Analysis of Randomized Algorithms. Cambridge University Press (2012)

Fomin, F. V., Grandoni, F., Kratsch, D.: A Measure & Conquer Approach for the Analysis of Exact Algorithms. J. ACM 56(5), 25:1–25:32 (2009). https://doi.org/10.1145/1552285.1552286

Friedrich, T., Krohmer, A.: On the Diameter of Hyperbolic Random Graphs. SIAM J. Discret. Math. 32(2), 1314–1334 (2018). https://doi.org/10.1137/17M1123961

Gugelmann, L., Panagiotou, K., Peter, U.: Random Hyperbolic Graphs: Degree Sequence and Clustering. In: Automata, Languages, and Programming, pp. 573–585. Springer Berlin Heidelberg. https://doi.org/10.1007/978-3-642-31585-5_51 (2012)

Kiwi, M. A., Mitsche, D.: A Bound for the Diameter of Random Hyperbolic Graphs. In: Proceedings of the Twelfth Workshop on Analytic Algorithmics and Combinatorics, ANALCO. https://doi.org/10.1137/1.9781611973761.3, pp 26–39. SIAM (2015)

Krioukov, D., Papadopoulos, F., Kitsak, M., Vahdat, A., Boguñá, M.: Hyperbolic Geometry of Complex Networks. Phys. Rev. E 82, 036106 (2010). https://doi.org/10.1103/PhysRevE.82.036106

Kunegis, J.: KONECT: The Koblenz Network Collection. In: International Conference on World Wide Web (WWW), pp. 1343–1350. https://doi.org/10.1145/2487788.2488173 (2013)

Leskovec, J., Krevl, A.: SNAP Datasets: Stanford Large Network Dataset Collection. http://snap.stanford.edu/data (2014)

Ramsay, A., Richtmyer, R.D.: Introduction to Hyperbolic Geometry. Springer. https://doi.org/10.1007/978-1-4757-5585-5 (1995)

Rossi, R.A., Ahmed, N.K.: The Network Data Repository with Interactive Graph Analytics and Visualization. In: Proceedings of the Twenty-Ninth AAAI Conference on Artificial Intelligence. http://networkrepository.com (2015)

Tamaki, H., Ohtsuka, H., Sato, T., Makii, K.: TCS-meiji PACE2017-tracka github.com/TCS-meiji/PACE2017-tracka (2017)

Xiao, M., Nagamochi, H.: Exact Algorithms for Maximum Independent Set. Inf. Comput. 255, 126–146 (2017). https://doi.org/10.1016/j.ic.2017.06.001

Acknowledgements

This research was partially funded by the German Research Foundation (Deutsche Forschungsgemeinschaft, DFG) – project number 390859508.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This article belongs to the Topical Collection: Special Issue on Theoretical Aspects of Computer Science (STACS 2020)

Guest Editors: Christophe Paul and Markus Bläser

A preliminary version of this paper appeared in [6]

Appendix: Experimental Data

Table 1 shows the raw data of our experiments for which we reported aggregate values in the discussion in Section 4. The percentage of dominant vertices among those with high degree (\(> 2 \alpha / (\alpha - 1/2) \cdot \log n\)) is rounded to whole percentages. A treewidth of −1 indicates that the graph remaining after removing all dominant vertices contained no edges.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bläsius, T., Fischbeck, P., Friedrich, T. et al. Solving Vertex Cover in Polynomial Time on Hyperbolic Random Graphs. Theory Comput Syst 67, 28–51 (2023). https://doi.org/10.1007/s00224-021-10062-9

DOI: https://doi.org/10.1007/s00224-021-10062-9