Abstract

We exhibit a family of convex functionals with infinitely many equal-energy \(C^1\) stationary points that (i) occur in pairs \(v_{\pm }\) satisfying \(\det \nabla v_{\pm }=1\) on the unit ball B in \({\mathbb {R}}^2\) and (ii) obey the boundary condition \(v_{\pm }=\text {id}\) on \( \partial B\). When the parameter \(\epsilon \) upon which the family of functionals depends exceeds \(\sqrt{2}\), the stationary points appear to ‘buckle’ near the centre of B and their energies increase monotonically with the amount of buckling to which B is subjected. We also find Lagrange multipliers associated with the maps \(v_{\pm }(x)\) and prove that they are proportional to \((\epsilon -1/\epsilon )\ln |x|\) as \(x \rightarrow 0\) in B. The lowest-energy pairs \(v_{\pm }\) are energy minimizers within the class of twist maps (see Taheri in Topol Methods Nonlinear Anal 33(1):179–204, 2009 or Sivaloganathan and Spector in Arch Ration Mech Anal 196:363–394, 2010), which, for each \(0\le r\le 1\), take the circle \(\{x\in B: \ |x|=r\}\) to itself; a fortiori, all \(v_{\pm }\) are stationary in the class of \(W^{1,2}(B;{\mathbb {R}}^2)\) maps w obeying \(w=\text {id}\) on \(\partial B\) and \(\det \nabla w=1\) in B.

Similar content being viewed by others

1 Introduction

Let \(\epsilon >1\) be a real parameter, let \({\hat{x}}=x/|x|\) for any non-zero x in \({\mathbb {R}}^2\) and let B be the unit ball in \({\mathbb {R}}^2\). We study the functional \({\mathbb {D}}_\epsilon \) given by

for functions v belonging to the class

and our goal is to find a global minimizer of \({\mathbb {D}}_{\epsilon }\) in \({\mathcal {A}}\).

In this direction, we prove that \({\mathcal {A}}\) contains stationary points of \({\mathbb {D}}_{\epsilon }\) which occur in pairs, \(v_{\pm }^{\epsilon }\), say, such that (a) \({\mathbb {D}}_{\epsilon }(v_+^\epsilon ) = {\mathbb {D}}_{\epsilon }(v_{-}^\epsilon )\) and (b) \(v_{+}^\epsilon \) and \(v_{-}^\epsilon \) are distinguished by the way they ‘buckle’ near the centre 0 of the ball B, each executing a ‘turn’ there through \(\pm \pi /2\). In the incompressible setting, a stationary point \(v_{\pm }^\epsilon \) satisfies, for an appropriate pressure function \(p_{\pm }^{\epsilon }\), the weak Euler–Lagrange equation

where the integrand W of \({\mathbb {D}}_{\epsilon }\) is given by

evaluated at \(F=\nabla v(x)\). By arguing as we do in Sect. 3.1, \(p_{\pm }^{\epsilon }\) is given explicitly in terms of \(v_{\pm }^\epsilon \), and (1.3) can then be written as the PDE

(See Theorem 1.2, Sect. 3.1 for details.) The maps \(v_{\pm }^\epsilon \) are twist maps, i.e. they are of the form \(v_{\pm }^\epsilon (x)=\mathrm {Rot}\,(\pm k(|x|))x\), where k is a suitably chosen function and \(\mathrm {Rot}\,(h)\) stands for the \(2 \times 2\) matrix which rotates vectors by h radians anticlockwise about the origin. Thus twist maps take circles centred at the origin to themselves, only allowing for a change in the polar angle of image points. The main theorem in this paper concerning the existence of the buckling stationary points associated with the functional \({\mathbb {D}}_{\epsilon }\) is as follows:

Theorem 1.1

Let \({\mathbb {D}}_{\epsilon }\) be given by (1.1) and \({\mathcal {A}}\) by (1.2). Then, for each \(\epsilon >\sqrt{2}\), there are infinitely many pairs of \(C^1(B)\) twist maps \(v_{\pm ,j}^{\epsilon }\), \(j\in {\mathbb {N}}\), of the form

where \(k_j^{\epsilon } \in C^\infty (0,1)\), such that for each j:

-

(i)

\({\mathbb {D}}_{\epsilon }(v_{+,j}^{\epsilon }) = {\mathbb {D}}_{\epsilon }(v_{-,j}^{\epsilon })\)

-

(ii)

\(v_{\pm ,j}^{\epsilon }\) is a stationary point of \({\mathbb {D}}_{\epsilon }\) in \({\mathcal {A}}\);

-

(iii)

\(\nabla v_{\pm ,j}^{\epsilon }(0)=\pm J\), where J is the matrix \(\mathrm {Rot}\,(\pi /2)\).

Moreover, \({\mathbb {D}}_{\epsilon }(v_{\pm ,j+1}^{\epsilon })>{\mathbb {D}}_{\epsilon }(v_{\pm ,j}^{\epsilon })\) for all \(j \in {\mathbb {N}}\).

Before setting this in its context, we first gather together the following properties of the integrand W given in (1.4) above:

-

(P1)

the function \(x \mapsto W(x,F;\epsilon )\) is 0-homogeneous on the punctured ball \(B \setminus \{0\}\), and, if \(\epsilon \ne 1\) and \(F \ne 0\), it has no continuous extension to B;

-

(P2)

when written in terms of the components of F, and with \(x=R(\cos \mu , \sin \mu )\),

$$\begin{aligned} W(x,F;\epsilon )&=\left( \frac{1}{\epsilon } \cos ^2 \mu + \epsilon \sin ^2 \mu \right) (F_{11}^2+F_{12}^2) + \left( \frac{1}{\epsilon } \sin ^2 \mu + \epsilon \cos ^2 \mu \right) (F_{21}^2+F_{22}^2) \\&\quad - 2\left( \epsilon - \frac{1}{\epsilon }\right) (F_{11} F_{21}+ F_{12} F_{22}). \end{aligned}$$ -

(P3)

the function \(F \mapsto W(x,F;\epsilon )\) is strongly convex (in F) for each fixed \(x \in B \setminus \{0\}\);

-

(P4)

\(W(x,F;1)=|F|^2\), and in fact \(W(x,F;\epsilon )\) can be viewed as a ‘perturbation’ of W(x, F; 1).

Properties (P1) and (P2) are easily verified. To see (P3), note that \(W(x,F;\epsilon )\) is 2-homogeneous with respect to F, so its Hessian obeys

for any \(2 \times 2\) matrix A. Property (P4), by contrast, is less obvious, so we give the details here. Let J be the \(2 \times 2\) matrix representing a rotation of \(\pi /2\) radians anticlockwise, and note that for any non-zero x in \({\mathbb {R}}^2\) we may write the identity matrix, \(\mathbf{1}\), as \(\mathbf{1}= {\hat{x}}\otimes {\hat{x}}+ J {\hat{x}}\otimes J {\hat{x}}\). Then, by writing the inner product between two matrices \(F_1\) and \(F_2\) as \(F_1 \cdot F_2 = \mathrm{tr}\,( F_1^T F_2)\), we see that \(a \otimes b \cdot c \otimes Jb = (a\cdot c) (b \cdot Jb) = 0\) for any two-vectors a, b, c, and hence

To pass from the second to the third line, note that

by orthogonality. The fourth line follows from the third by using the fact that \(J^T\) is an isometric linear map, and in reaching the final line it helps to know the easily-verified identity \(\mathrm{adj}\,F = J^T F^T J\). Finally, by weighting the first and second of these terms with prefactors \(\frac{1}{\epsilon }\) and \(\epsilon \) respectively, we obtain \(W(x,F;\epsilon )\).

Theorem 1.1 points to the possibility that there are distinct but equal-energy global minimizers of the functional \({\mathbb {D}}_{\epsilon }(\cdot )\). Recall that in the context of energy functionals of the form \(\int _{\Omega } f(\nabla u) \, dx\), where f is strictly polyconvex, \(\Omega \) is a domain homeomorphic to a ball and \(u\arrowvert _{\partial \Omega }\) agrees with an affine map, the question of the uniqueness of minimizers, as set out in Problem 8 in [4], was settled by Spadaro in [24]. To relate that work to ours, first note that property (P3) enables us (via (1.5) below) to extend W to a polyconvex function. Thus by seeking maps \(v_{\pm }^{\epsilon }\) that agree with the identity on \(\partial B\) and which minimize \({\mathbb {D}}_{\epsilon }\), our aim is to tackle a question that is analagous to Problem 8 in [4], but with two important distinctions. These are that (1) admissible maps v, say, are incompressible, i.e. \(\det \nabla v=1\) a.e., and (2) \(x-\)dependent integrands are permitted. For clarity, we stress that while the maps \(v_{\pm }^{\epsilon }\) which feature in this paper are certainly stationary points of \({\mathbb {D}}_{\epsilon }\), we do not know whether they are minimizers. Nevertheless, we believe that to establish their existence and stationarity is an important intermediate step towards this goal. We remark that since Spadaro’s minimizers are such that their Jacobians take different signs on different parts of the domain he considers, they do not seem to be directly relevant to our incompressible setting.

It is perhaps striking that, in the pure displacement problem we consider, the maps \(v_{\pm }^{\epsilon }\) appear to emulate the buckling beam examples frequently cited as examples of non-uniqueness of equilibria in nonlinear elasticity when mixed boundary conditions are appliedFootnote 1. See Fig. 1. Indeed, by viewing W as the finite part of the polyconvex function \({\widehat{W}}\) given by

the functional

can be interpreted as the energy stored by an incompressible material occupying the ball B in a reference configuration. By setting \(\epsilon =1\), \({\widehat{D}}_{1}(v)\) models the stored energy of an isotropic, incompressible neo-Hookean material (see [10, Section 4.10], for example). When \(\epsilon >1\), the connection between nonlinear elasticity and the functional \({\widehat{D}}_{\epsilon }(v)\) is maintained, perhaps tenuously and only as a result of a choice made by the authors, by regarding \(W(x,F;\epsilon )\) as a perturbation of \(|F|^2\), as described in Property (P4) earlier.

Works which tackle non-uniqueness in finite elasticity include but are not limited to [2, 3, 20].Footnote 2 The problems treated are of mixed displacement-traction or pure traction type. Non-uniqueness in the context of pure displacement problems is considered in [6, 16, 19], and we discuss this point further below in relation to the pure displacement problem dealt with in this paper. There is also a significant body of work on the question of uniqueness of equilibria in finite elasticity which includes but is not limited to [16, 17, 22, 23, 26, 29, 30].

The map \(v_{+}^{\epsilon }\) with \(\epsilon = 5.0\), applied to a sector of angle \(\mu = 0.3\). The effect of \(v_{+}^{\epsilon }\) is to ‘buckle’ the ball B near its centre. Stationary points which exhibit more exotic buckling are possible when \(\epsilon > \sqrt{2}\): see Proposition 2.16 and the ensuing discussion, as well as Fig. 5

That there is a global minimizer of \({\mathbb {D}}_{\epsilon }\) in \({\mathcal {A}}\) can be established as follows. Firstly, by property (P3) and [1, Theorem II.4], it follows that the functional \({\mathbb {D}}_\epsilon \) is sequentially lower semicontinuous with respect to weak convergence in \(H^1(B;{\mathbb {R}}^2)\). Then, by applying [11, Theorem 8.20, part 1], which tells us that \({\mathcal {A}}\) is closed with respect to weak convergence in \(H^1(B;{\mathbb {R}}^2)\), the direct method of the calculus of variations yields the existence of a global minimizer of \({\mathbb {D}}_{\epsilon }\) in \({\mathcal {A}}\). When \(\epsilon >\sqrt{2}\), a careful numerical calculation (given in Sect. 4) shows that the global minimizer is not the identity.Footnote 3

It is worth pointing out that pure displacement variational problems with infinitely many equilibria were first proposed by F. John in [16] and later confirmed rigorously in [19], with further analysis in [6]. The domain in these cases was an annulus on which the identity boundary condition was imposed; solutions are distinguished by the integer number of times the outer boundary is rotated relative to the inner boundary. By contrast, the maps \(v_{\pm , j}^{\epsilon }\) we construct here are defined on the ball B, all display a similar buckling behaviour near the origin, and it seems that a different mechanism gives rise to the countable number of solutions we find when \(\epsilon >\sqrt{2}\). We note that the role played by topology in establishing non-uniqueness is studied carefully in [27].

The idea of considering energies that are related to the Dirichlet energy can also be found in the recent work of Taheri and Morris [18], who study minimizers of functionals of the form (see [18, Equation 1.3])

where \(X=X[a,b]\) is the annulus \(\{x \in {\mathbb {R}}^2: a< |x| < b\}\) and where the function F satisfies certain hypotheses ((H1) and (H2), in their notation). The competing functions u are incompressible and agree with the identity on \(\partial X\), and the minimizers are twist maps (which they call whirl maps). Thus their work has significant features in common with ours, but their emphasis is on uniqueness within different homotopy classes. Moreover, our integrand \(W(x,F;\epsilon )\) does not factorize as do theirs, and the fact that buckling takes place at \(x=0\) would seem to rule out the consideration of annuli X[a, b].

Let us note that maps v in \({\mathcal {A}}\) have a continuous representative (see [14, Theorem 2.3.2] or [25, Theorem 5]) and thus their topological degree, \(d(v,\cdot ,\cdot )\), is classically defined. Using this and the change of variables formula [12, Theorem 5.35], it holds that for any open set \(G \subset B\) and any function g in \(L^\infty (B)\),

Since the degree is determined by the trace, and since \(\det \nabla v=1\) a.e., we can take \(g=\chi _{_{v(G)}}\) and, after a short calculation, thereby obtain \(|G|=|v(G)|\). Hence maps in \({\mathcal {A}}\) are measure-preserving. They are also homeomorphisms of the ball B, which fact follows by applying the argument of [18, Theorem 2.1] with B in place of X(a, b), as defined earlier.

We conclude this introduction by describing the system of PDEs that one might expect a stationary point of \({\mathbb {D}}_{\epsilon }\) in \({\mathcal {A}}\) to satisfy. In view of the incompressibility constraint \(\det \nabla v=1\) a.e. in B obeyed by admissible maps v, we seek a so-called pressure term, p, say, such that

For sufficiently smooth functions v and p, the weak form stated above is equivalent to the pointwise equation

supplemented by the conditions implied by membership of v in \({\mathcal {A}}\). However, since we do not a priori know that the functions v and p are sufficiently regular, the approach we take has to be more indirect. We postpone these details to the discussion given at the beginning of Sect. 3, and instead state the main result of this paper concerning the Lagrange multipliers, or pressures, associated with the buckling stationary points described in Theorem 1.1 above.

Theorem 1.2

Let \(v_{\pm ,j}^{\epsilon }\) be a twist map stationary point of \({\mathbb {D}}_{\epsilon }\), as described in Theorem 1.1, of the form \(v_{\pm ,j}^{\epsilon }(x) = \mathrm {Rot}\,(\pm k_j^{\epsilon }(|x|))x\). Then the pressure function

where

is such that

In particular,

and (1.7) is equivalent to

1.1 Plan of the paper

After introducing necessary notation and terminology in Sect. 1.2, the rest of the paper is organised as follows.

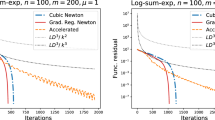

Section 2 is broadly concerned with establishing the existence of non-trivial stationary points of the functional \({\mathbb {D}}_{\epsilon }\) in the class of twist maps defined in (2.7). Using the direct method, we confirm in Sect. 2.1 that there are indeed energy-minimizing twist maps \(v(x)=\mathrm {Rot}\,(k(|x|))x\), say, and in Sect. 2.2 we establish the relevant Euler–Lagrange equation which k must satisfy, together with appropriate natural and imposed boundary conditions. We then transform this equation into a planar, autonomous ODE and, in Sect. 2.3, identify the non-trivial solutions we seek as heteroclinics connecting certain rest points in the phase plane, making extensive use of a conservation law (2.27) that we derive along the way. The asymptotic detail of these heteroclinic orbits shows that when \(1<\epsilon \le \sqrt{2}\) there are no non-trivial solutions, while, for \(\epsilon > \sqrt{2}\), we see in Sect. 2.4 that there are countably many pairs \(v_{\pm ,j}^{\epsilon }\), say, with \(j \in {\mathbb {N}}\), of solutions, all of which agree with the identity map on \(\partial B\). Their energies can be calculated and we give the numerical results in Sect. 4.

Section 3 opens with a description of the strategy we use to find and characterize the pressure p associated with the buckling stationary points, and is then divided into two further sections. We show in the first of these, Sect. 3.1, that the twist maps derived in Sect. 2 are stationary points of \({\mathbb {D}}_{\epsilon }\) in the sense that

We use this to exploit results of [13] and [8] to deduce the existence of an associated Lagrange multiplier belonging to the space \(\cap _{p>1} L^p(B)\). Section 3.2 calculates the pressure in terms of the solution k of the relevant Euler–Lagrange equation derived in Sects. 2.2, and 3 concludes by showing that the two entities—pressure and Lagrange multiplier—are connected by a change of variables involving the stationary point itself.

1.2 Notation

The inner product between two matrices A and B is given by \(A \cdot B = \mathrm{tr}\,(A^T B)\), where, as usual, \(\mathrm{tr}\,A\) denotes the trace of A. For any two vectors a and n in \({\mathbb {R}}^2\), the notation \(a \otimes n\) will denote the matrix of rank one whose (i, j) entry in some chosen basis is \(a_i n_j\). The cofactor of a \(2 \times 2\) matrix A is written \(\mathrm{cof}\,A\) and is calculated by \(\mathrm{cof}\,A = J^T A J\), where J is the \(2 \times 2\) matrix representing a rotation anticlockwise through \(\pi /2\) radians. For invertible A such that \(\det A = 1\), the expression \(\mathrm{cof}\,A = A^{-T}\) is also valid. The convenient notation for the unit vectors \({\hat{x}}=e_{\scriptscriptstyle {R}}(\mu )\) and \(J{\hat{x}}=e_{\tau }(\mu )\) will feature, and we note that in these terms the gradient of a planar map v, when it exists, can be expressed as

where \(v_{\scriptscriptstyle {R}}:=\partial v/\partial R\), \(v_{\tau }:=\frac{1}{R}\partial v/\partial \mu \) and \(R=|x|\).

Our notation for Sobolev spaces and the various types of convergence therein is generally standard, and where it is not it is explained at the relevant stage. Unless stated otherwise, sequences are indexed by the natural numbers. At certain points in the paper, especially Sect. 2.3, some terminology from dynamical systems is used. We believe this is standard; if in doubt, the authoritative references [9, 15] should be consulted. For any set S we denote by \(\chi _S\) the indicator function of S, i.e. \(\chi _S(s)=1\) if s lies in S and \(\chi _S(s)=0\) otherwise. We reserve the notation \(\mathbf{1}\) for the \(2 \times 2\) identity matrix. Finally, we note that the terms ‘map’ and ‘function’ are used interchangeably at various points in the paper, and we trust that no confusion arises as a result.

2 Energy minimizers v obeying an ansatz: existence, non-uniqueness and buckling

Following [28] or [21, Remark 2.1], we define twist maps as follows.

Definition 2.1

Let k belong to \(W^{1,1}((0,1);{\mathbb {R}})\) and let \(x=(R\cos \mu ,R\sin \mu )\). Then v is a twist map if

When \(R\ne 0\), a short calculation shows that

and from this it easily follows that

for any choice of k. By choosing \(k(1)=0\) in the sense of trace, we ensure that \(v(x)=x\) whenever \(x\in \partial B\). Taking v as above, we have

Denoting the prefactor of \(R^2 k'^2(R)\) by \(\Omega (k)\), i.e.

where

and noting that \(W(x,\mathbf{1};\epsilon ) = \epsilon +\frac{1}{\epsilon }\), it follows, after an integration by parts, that

Definition 2.2

Let \({\mathbb {D}}_{\epsilon }(v)\) be given by (1.1), let v be given by (2.1) and let \(\Omega (k)\) and \(p_{\epsilon }\) be given by (2.3) and (2.4) respectively. Then we define

and the class \({\mathcal {C}}\) by

We seek minimizers of \({\mathbb {E}}_{\epsilon }(k)\) in \({\mathcal {C}}\), and to do so it is natural to use the direct method of the calculus of variations.

2.1 Minimizing \({\mathbb {E}}_{\epsilon }\) over \({\mathcal {C}}\)

In order to minimize \({\mathbb {E}}_{\epsilon }\) over \({\mathcal {C}}\) using the direct method we must confirm both that \({\mathcal {C}}\) is closed and that \({\mathbb {E}}_{\epsilon }\) is sequentially lower semicontinuous with respect to a notion of weak convergence that suits the class \({\mathcal {C}}\). This is the point of the next three lemmas, the first of which establishes a useful Poincaré type inequality which applies to members of \({\mathcal {C}}\). In the following we shall abbreviate the space \(H^1((a,b);{\mathbb {R}})\) to \(H^1(a,b)\), where \(a< b\), and we will not relabel subsequences.

Lemma 2.3

There exists a constant \(M>0\) such that for any k in \({\mathcal {C}}\)

Proof

The proof begins, as usual, by supposing for a contradiction that there is a sequence \(k_j\) in \({\mathcal {C}}\) such that

and for which \( \int _0^1 R k_j^2 \, dR \ne 0\) for all j. Form the normalized functions

so that

Fix \(0< \delta < 1\) and note that by (2.9) and (2.10) \({\tilde{k}}_j\arrowvert _{(\delta , 1)}\) is bounded in \(H^1(\delta ,1)\), and hence there is some \({\tilde{k}}(\cdot ,\delta )\) in \(H^1(\delta ,1)\) such that, for a subsequence, \({\tilde{k}}_j\arrowvert _{(\delta , 1)} \rightharpoonup {\tilde{k}}(\cdot ,\delta )\). By (2.9) and sequential weak lower semicontinuity of the functional \(\int _\delta ^1 R^3 {\tilde{k}}_j'^2 \, dR\) we must have \({\tilde{k}}'(\cdot ,\delta )=0\) a.e. on \((\delta ,1)\). By Sobolev embedding, we may assume that \({\tilde{k}}_j \rightarrow {\tilde{k}}(\cdot ,\delta )\) in \(L^2(\delta ,1)\), and hence in particular that a subsequence satisfies \({\tilde{k}}_j \rightarrow {\tilde{k}}(\cdot ,\delta )\) a.e. in \((\delta ,1)\). Now, for all j and all \(\delta \le R \le 1\) we have \({\tilde{k}}_j(R) = \int _R^1 {\tilde{k}}'_j(s) \, ds\), so by letting \(j \rightarrow \infty \) it must be that \({\tilde{k}}(R,\delta ) = \int _R^1 {\tilde{k}}'(s,\delta ) \, ds\) a.e. in \((\delta ,1)\). Both sides are continuous and so they agree everywhere, and since \({\tilde{k}}'(\cdot ,\delta )=0\) by the above, \({\tilde{k}}(\cdot ,\delta ) =0\) on \((\delta ,1)\). Therefore \({\tilde{k}}_j \rightarrow 0\) in \(L^2(\delta ,1)\), and by letting \(\gamma ^2 < 1\) and applying (2.10), it follows that

provided j is large enough.

Now, if \(R^{\frac{1}{2}}|{\tilde{k}}_j(R)| \le \gamma \delta ^{-\frac{1}{2}}\) a.e. in \((0,\delta )\) then by squaring and integrating we contradict (2.11). Hence the set \(\{R \in (0,\delta ): \ R^{\frac{1}{2}}|{\tilde{k}}_j(R)| > \gamma \delta ^{-\frac{1}{2}}\}\) has positive measure, and, since \({\tilde{k}}_j\) is absolutely continuous, there must be \(0< R_\delta < \delta \) such that \(R_\delta ^{\frac{1}{2}}|{\tilde{k}}_j(R_\delta )| > \gamma \delta ^{-\frac{1}{2}}\). This forces \(\int _\delta ^1 R^3 {\tilde{k}}_j'^2 \, dR\) to be bounded away from zero, as we now show.

Claim: With \({\tilde{k}}_j\) as above and \(R_\delta ^{\frac{1}{2}}|{\tilde{k}}_j(R_\delta )| > \gamma \delta ^{-\frac{1}{2}}\),

Proof of Claim Let \(z(R)=2c_0(1-R^{-\frac{1}{2}})\), so that \(R^3z'^2 = c_0^2\) on (0, 1). Choose \(c_0\) so that \(z(R_\delta ) = {\tilde{k}}_j(R_\delta )\), i.e.

Set \(\varphi (R) = R^{\frac{3}{2}}({\tilde{k}}_j(R) -z(R))\) and use convexity to deduce that

To pass from the first to the second line above we have used the facts that \(R^\frac{3}{2}z'\) is constant and \(\varphi \) vanishes at both \(\delta \) and 1 to conclude that \(\int _\delta ^1 R^\frac{3}{2}z' \varphi ' \, dR = 0\). Thus the claim is proved. To conclude the proof of the lemma, note that (2.12) contradicts (2.9).

\(\square \)

Lemma 2.4

For any k in \({\mathcal {C}}\) let

and let \(k_j\) be a sequence in \({\mathcal {C}}\) such that \(||k_j||\) is bounded. Then there is k in \({\mathcal {C}}\) and a subsequence such that the following convergences hold:

Proof

That \(||\cdot ||\) is a norm and not merely a seminorm is clear from Lemma 2.3 and the definition of \({\mathcal {C}}\). Let \(k_j\) be a sequence in \({\mathcal {C}}\) such that \(||k_j||\) is bounded. Let \(w_j(R)=R^{3/2}k_j(R)\), so that \(w_j'=\frac{3}{2}R^{1/2}k_j + R^{3/2}k_j'\) a.e. in (0, 1). Then the sequence \(w_j\) is bounded in \(H^1(0,1)\), and so there is w in \(H^1(0,1)\) such that \(w_j \rightharpoonup w\) in \(H^1(0,1)\). By the Rellich-Kondrachov compactness theorem, we may assume that, for a subsequence, \(w_j \rightarrow w\) strongly in \(L^2(0,1)\). Furthermore, since

and since, by (2.8), the right-hand side is bounded uniformly in j, it must be that \(w_j/R\) converges weakly to some W, say, in \(L^2(0,1)\). Since \(w_j \rightarrow w\) in \(L^2(0,1)\), we can assume that \(w_j \rightarrow w\) a.e. in (0, 1), and hence, in particular, that \(w_j/R \rightarrow w/R\) a.e.. Hence \(W=w/R\) a.e., and so \(w_j/R \rightharpoonup w/R\) in \(L^2(0,1)\) and \(w_j \rightharpoonup w\) in \(H^1(0,1)\). It is now natural to define

and to note that (i) k belongs to \(W^{1,1}_{\text {loc}}((0,1);{\mathbb {R}})\), (ii) the weak derivative \(k'\) satisfies \(R^{3/2}k'=w'-\frac{3w}{2R}\), which belongs to \(L^2(0,1)\), (iii) \(k(1)=0\), by letting \(j \rightarrow \infty \) in the representation \(k_j(R) = \int _R^1 k_j^{'}(s) \, ds\) and then, as in Lemma 2.3, employing a continuity argument, and (iv), by (2.8), \(R^{1/2}k\) belongs to \(L^2(0,1)\). Thus k lies in \({\mathcal {C}}\) and the stated convergences hold by translating information about \(w_j\) into information about \(k_j\). \(\square \)

Lemma 2.5

Let \({\mathbb {E}}_{\epsilon }\) and \({\mathcal {C}}\) be as in Definition 2.2. Then there is a minimizer of \({\mathbb {E}}_{\epsilon }\) in \({\mathcal {C}}\).

Proof

By inspection, \({\mathbb {E}}_{\epsilon }\) is bounded below, so we may assume that there is a sequence \(k_j\) in \({\mathcal {C}}\) such that \({\mathbb {E}}_{\epsilon }(k_j) \rightarrow \inf _{{\mathcal {C}}}{\mathbb {E}}_{\epsilon }\) where the infimum is finite. The uniform bound \(1/\epsilon \le \Omega (k) \le \epsilon \) in particular implies that \(||k_j||\) is bounded, so we may assume without loss of generality that there is k in \({\mathcal {C}}\) such that the convergences described in (2.13), (2.14) and (2.15) hold. The last of these together with the bounded convergence theorem easily implies that the second term in \({\mathbb {E}}_{\epsilon }(k_j)\) obeys

as \(j \rightarrow \infty \). To deal with the first term in \({\mathbb {E}}_{\epsilon }(k_j)\), it is helpful to reuse some of the notation introduced in Lemma 2.4, namely let \(w_j:=R^{3/2}k_j\), and recall that \(R^{3/2}k_j'=w_j'-\frac{3w_j}{2R}\) a.e., with a similar expression for \(R^{3/2}k'\). Then, by convexity,

Let \(f(R) = w'-\frac{3w}{2R}\) and \(g_j=w_j'-w' - \frac{3}{2}\left( \frac{w_j}{R} - \frac{w}{R}\right) \). The rightmost integrand in the line above is then \(\Omega (k_j) f g_j\), which we write as

Now, by (2.13) and (2.14), \(g_j \rightharpoonup 0\) in \(L^2(0,1)\), so \(\int _0^1 \Omega (k) f g_j \, dR \rightarrow 0\) as \(j \rightarrow \infty \). By applying (2.15) together with a suitable dominated convergence theorem, \(\Omega (k_j) f - \Omega (k) f \rightarrow 0\) strongly in \(L^2(0,1)\). Since the sequence \(g_j\) is weakly convergent in \(L^2(0,1)\), it must in particular be bounded in that space. It follows that \(\int _0^1 (\Omega (k_j) f - \Omega (k) f) g_j \, dR \rightarrow 0\) as \(j \rightarrow \infty \), and hence by integrating (2.18), that \(\int _0^1 \Omega (k_j) f g_j \, dR \rightarrow 0\) as \(j \rightarrow \infty \). By (2.15) and Fatou’s lemma, the first term on the right-hand side of (2.17) obeys

Hence

from this and (2.16) we have \(\liminf _{j \rightarrow \infty } {\mathbb {E}}_{\epsilon }(k_j) \ge {\mathbb {E}}_{\epsilon }(k)\), so that k minimizes \({\mathbb {E}}_{\epsilon }\) in \({\mathcal {C}}\). \(\square \)

2.2 Minimizers and the Euler–Lagrange equation

Recall that

and let \(F(R,z,v)= \Omega (z)R^3 v^2 -2p_{\epsilon }R \sin ^2z\), so that \({\mathbb {E}}_{\epsilon }(k)= \int _0^1 F(R,k(R),k'(R))\, dR\). Since \(1/\epsilon \le \Omega (z) \le \epsilon \) for all z, it is clear that

Let \(I\subset (0,1)\) be compact and let \(\varphi \) be a smooth, real-valued function whose support is contained in I. Then if k is a minimizer of \({\mathbb {E}}_{\epsilon }\) in \({\mathcal {C}}\), a standard argument implies that the weak Euler–Lagrange equation

holds, or, in integrated form (via DuBois Reymond’s lemma [7, Lemma 1.8]),

for some constant c. One can now adapt the proof of [7, Theorem 4.1] to conclude that k is smooth on (0, 1) by (i) noting that the ellipticity condition (2.20) implies that \(F_{vv}(R,z,v) > 0\) for all \(R \in I\), (ii) applying the (local) argument of [7, Proposition 4.4] on I and (iii) concluding, by the implicit function theorem argument given in [7, Proposition 4.4], that k is smooth on I. Since the interval \(I \subset (0,1)\) is arbitrary, we reach the following conclusion.

Proposition 2.6

Let k in \({\mathcal {C}}\) minimize \({\mathbb {E}}_{\epsilon }\) given by (2.6). Then k is smooth in (0, 1) and

Moreover, the natural boundary condition

holds.

Proof

The smoothness of k has already been shown, and equation (2.22) follows easily from this and (2.21). Condition (2.23) is derived by taking variations \(k+h \varphi \) of k in \({\mathcal {C}}\), where h is a real parameter and \(\text {spt}\,\varphi \) is compactly contained in [0, 1) and is, in particular, allowed to include 0. In such circumstances the first variation of \({\mathbb {E}}_{\epsilon }\) about k in the direction \(\varphi \), \(\delta {\mathbb {E}}_{\epsilon }(k)[\varphi ]\), both vanishes and obeys

where, for brevity, we have reused the notation \(F(R,z,v)= \Omega (z)R^3 v^2 -2p_{\epsilon }R \sin ^2z\). By (2.21), the integral term vanishes, and, since \(\varphi (0)\) is arbitrary, (2.23) follows. \(\square \)

2.3 Phase plane analysis of the transformed Euler–Lagrange equation

Let \(\epsilon >1\), let \(k \in {\mathcal {C}}\) be a solution of the Euler–Lagrange equation (2.22) subject to (2.23) and let \(\Omega (k)\) be given by (2.3). The change of variables \(R=e^t\) and \(x(t):=k(R)\) transforms (2.22) to the autonomous equation

subject to \(x(0)=0\) and \(e^{2t}{\dot{x}}(t) \rightarrow 0\) as \(t \rightarrow -\infty \). Define \(F: {\mathbb {R}}^2 \rightarrow {\mathbb {R}}^2\) by

and let \(X=(x,y)^T\). Then (2.24) can be written as the autonomous system

subject to \(x(0)=0\) and \(e^{2t}y(t) \rightarrow 0\) as \(t \rightarrow -\infty \). For convenience, let us refer to the variable t as time.

Notice that the change of variables \(X \mapsto -X\) is a symmetry, and that rest points lie at \(X_k:=(k \pi /2,0)^T\) for each \(k \in {\mathbb {Z}}\). A standard linearization analysis, the precise results of which we record later, shows that each point \(X_{2k+1}\) for \(k \in {\mathbb {Z}}\) is a hyperbolic saddle while \(X_{2k}\) is a hyperbolic sink. The phase portrait of (2.26) reveals that the solutions we seek must, in view of the slightly unusual boundary condition ‘at \(-\infty \)’, lie on a heteroclinic orbit connecting \(X_{-1}\) to \(X_0\) and, also owing to the symmetry mentioned before, on a heteroclinic orbit connecting \(X_1\) and \(X_0\). Before describing these orbits and the phase portrait more generally, we first take note of the following conservation law associated with (2.26).

Lemma 2.7

Let \(X(t)=(x(t),y(t))^T\) be a solution of the system (2.26) subject to the conditions \(x(0)=0\) and \(\lim _{t\rightarrow -\infty } e^{2t} y(t) = 0\). Then

Proof

Since the function F in the system (2.26) is smooth in X, according to standard theory for autonomous ODEs the solution is also (locally) smooth as a function of t. Suppose that \(y \ne 0\) and divide the equation \({\dot{y}}=F_2(X)\), given by the second row of (2.26), by the equation \({\dot{x}}=y\). This gives the expression

From this it follows that

and hence, on multiplying by \(y={\dot{x}}\), that

One can now drop the restriction \(y \ne 0\) by noting that (2.28) holds by straightforward differentiation and by applying (2.26).

According to the Proposition 2.6, smooth trajectory

exists, X(t) solves (2.26) subject to \(x(0)=0\) and \(e^{2t} y(t) \rightarrow 0\) as \(t \rightarrow -\infty \). Let \(Z(t)=(2-y^2(t))\Omega (x(t))\), so that (2.28) implies

or

Let \(t_1<t_0<0\) and integrate (2.30) over \([t_1,t_0]\) to obtain

The bounded convergence theorem easily implies that, for fixed \(t_1\), the right-hand side of (2.31) converges to a finite limit as \(t_0 \rightarrow 0_-\), and hence so too must \(e^{4t_0} Z(t_0) = \Omega (x(t_0)) - \Omega (x(t_0)) y^2(t_0)\) converge as \(t_0 \rightarrow 0_-\), implying in particular that \(\lim _{t_0 \rightarrow 0_{-}} y^2(t_0)\) exists. Moreover, by noting that \({\dot{y}} = -2y + O(|x|)\) as \(t \rightarrow 0\), it follows that y is monotonic on \((-\delta ,0)\) for some small \(\delta > 0\) provided y is bounded away from zero on that set, and hence, in such circumstances, \(y_0:=\lim _{t_0 \rightarrow 0_-} y(t_0)\) also exists. We note that y(t) cannot approach 0 as \(t \rightarrow 0_-\) since a hyperbolic sink cannot be reached in finite time other than when \(x \equiv 0\).

Now set \(t_0=0\) and \(t_1=t\) in (2.31) and rearrange to obtain

where \(Z(0)=(2-y_0^2)\epsilon \) has been used. Multiplying through by \(e^{4t}\Omega (x(t))\) and applying the condition \(e^{2t} y(t) \rightarrow 0\) as \(t \rightarrow -\infty \) gives

Putting this expression for \(y_0\) into (2.32) gives

Since \(4\int _{-\infty }^t e^{4(s-t)}\, ds = 1\) for all t, it is clear that (2.34) may also be rewritten as (2.27). \(\square \)

Note that the integrand appearing in (2.34) is positive, which immediately implies that \(|y(t)| < \sqrt{2}\) for all times t. The next result, which we shall need in due course, shows that this simple bound can be used to deduce the range of values of y(t) compatible with \({\dot{y}}(t)=0\).

Lemma 2.8

Let X(t) solve (2.26) and suppose \(F_2(X(t))=0\) for some t. Then if \(y(t)<0\),

Proof

Let \(y(t) = -\sqrt{2} + h\) and note that (2.35) is equivalent to showing

Since \(F_2(X(t))=0\),

which, since \(y(t)<0\) and \(y^2(t)<2\), implies \(\sin 2x(t)>0\), and hence that

Rearranging this gives \(2\sqrt{2} + \epsilon p_{\epsilon }h^2 /2 \le (2+ \sqrt{2}\epsilon p_{\epsilon })h\). In particular, \(h \ne 0\), so

as required. \(\square \)

The following series of results establish that the phase trajectory \(\phi _{(-\infty ,0]}\), defined in (2.29), of the solution to (2.26) forms part of a heteroclinic orbit joining the rest points \(X_{-1}\) to \(X_0\) or \(X_{1}\) to \(X_0\). We begin by noting that the conservation law (2.27) implies that \(\phi _{(-\infty ,0]}\) belongs to a certain ‘box’ in the phase plane.

Lemma 2.9

Suppose that \(X=(x,y)^T\) obeys (2.26) subject to the conditions \(x(0)=0\) and \(e^{2t}y(t) \rightarrow 0\) as \(t \rightarrow -\infty \), and let the trajectory \(\phi _{(-\infty ,0]}\) be given by (2.29). Then

-

(i)

\(\phi _{(-\infty ,0]} \subset [-\pi /2,\pi /2] \times [-\sqrt{2},\sqrt{2}]\);

-

(ii)

if the points \(X_{\pm 1}\) are not \(\alpha -\)limits of \(\phi _{(-\infty ,0]}\) then \(\phi _{(-\infty ,0]}\) crosses the line segment \(L:=(-\pi /2,\pi /2) \times \{0\}\) infinitely often.

Proof

(i) The assumptions of the lemma imply that (2.27) and, equivalently, that (2.34) holds. We have already observed that the latter implies that \(|y(t)| < \sqrt{2}\) for all finite t, so it must be that \(\phi _{(-\infty ,0]} \subset {\mathbb {R}}\times [-\sqrt{2},\sqrt{2}]\). To control the horizontal extent of \(\phi _{(-\infty ,0]}\), suppose for a contradiction that there is \(t^* <0\) such that \(x(t^*)=\pm \pi /2\). Then \(\Omega (x(t^*)) \le \Omega (x(s))\) for all s, and it follows from (2.27) that \(y^2(t^*) \le 0\). This implies that \(\phi _{(-\infty ,0]}\) emanates from one of the rest points \((\pm \pi /2,0)\) at a finite time \(t^*\), which is impossible. Thus if \(X=(x(t),y(t))^T \in \phi _{(-\infty ,0]}\) it must be that \(-\pi /2< x(t) < \pi /2\) for all finite \(t \le 0\). It follows that \(\phi _{(-\infty ,0]}\) is contained in the set \([-\pi /2,\pi /2] \times [-\sqrt{2}, \sqrt{2}]\).

(ii) Either \(\phi _{(-\infty ,0]}\) remains in the set \((-\pi /2,\pi /2) \times (0, \sqrt{2}]\) or it does not. If it does then \(y^2(t)\) must be bounded away from the set \(L:=(-\pi /2,\pi /2) \times \{0\}\). To see this, note that the phase portrait of (2.26) immediately implies that trajectories which approach L either cross it or approach the rest point \(X_0\). But since \(X_0\) is a hyperbolic sink and \(\phi _{(-\infty ,0]}\) is parametrized by \(t \in (-\infty ,0]\), \(X_0\) cannot be an (\(\omega \)-)limit point. Hence there is \(c>0\) such that \(y^2 \ge c\) for all \(t<0\), from which it follows that |x(t)| must be unbounded. In particular, we can find \(t^* < 0\) such that \(x(t^*)=-\pi /2\), contradicting the argument given in the first part of the proof of the lemma. If y crosses L only finitely many times then there is \(T<0\), say, such that \(\phi _{(-\infty ,T)}\) lies either entirely in the lower half-plane or entirely in the upper half-plane. Either way, we can again argue that \(y^2(t)\) must then be bounded away from 0 for all \(t<T'\) for some \(T'<T\), and the same contradiction can be reached. This proves part (ii) of the lemma. \(\square \)

It is clear from (2.26) that \(F(x,0)= -(p_{\epsilon }/\Omega (x)) \sin 2x (0,1)^T\). Hence, as time t decreases the trajectory \(\phi _{(-\infty ,0)}\) must cross \(L^{-}:=(-\pi /2,0) \times \{0\}\) from the upper half-plane, to emerge in the lower half-plane, and that \(L^{+}:=(0,\pi /2) \times \{0\}\) is crossed with the opposite orientation. We exploit this observation in the next result.

Lemma 2.10

Let X and \(\phi _{(-\infty ,0]}\) be as in the statement of Lemma 2.9, suppose that \(X(0)=(0,y_0)^T\) where \(y_0 >0\), and suppose that neither of \(X_{\pm 1}\) is an \(\alpha -\)limit point of the trajectory. Then either:

-

(a)

there exist times \(t_1< t_2 <0\) such that \(x(t_1) \le x(s) \le x(t_2)\) for all \( s \le 0\), or

-

(b)

there exists a sequence of times \(t_k\), where \(t_k \in {\mathbb {N}}\), such that \(t_{k+1}< t_k<0\) for all k and such that for all \(j \in {\mathbb {N}}\),

-

(i)

\(y(t_{2j})=y(t_{2j-1})=0\) for all j, and \(y(t) < 0\) if \(t \in (t_{2j},t_{2j-1})\);

-

(ii)

\(x(t_{2j-1}) \le x(s) \le x(t_{2j}) if t_{2j} \le s \le t_{2j-1}\);

-

(iii)

\(x(t_{2j-1})< 0 < x(t_{2j})\);

-

(iv)

\(x(t_{2j})\) forms a strictly increasing sequence;

-

(v)

\(x(t_{2j-1})\) forms a strictly decreasing sequence.

-

(i)

Proof

Since, by hypothesis, neither of \(X_{\pm 1}\) is an \(\alpha -\)limit point of the trajectory, we can apply Lemma 2.9(ii). Using this and the reasoning immedately preceding the statement of Lemma 2.10, the two possibilities for \(\phi _{(-\infty ,0]}\) must be as shown in Figs. 2 and 3, which correspond respectively to parts (a) and (b) in the statement of the lemma.

(a) Consider Fig 2. Let \(T < 0\) be such that \(\phi _{[T,0]}\) denotes the part of \(\phi _{(-\infty ,0]}\) connecting the points \(P_0\) and \(P_1\), as indicated. On the part of \(\phi _{[T,0]}\) lying in the upper half-plane, where \(y={\dot{x}}\), x(t) decreases monotonically as t decreases, so there is \(t_1 \in (T,0)\) such that \(x(t_1) \le x(t)\) for all \(t \in [t_1,0]\) and \(y(t_1)=0\). Arguing similarly for the part of \(\phi _{[T,0]}\) lying in the lower half-plane, there is \(t_2 \in (T, t_1)\) such that \(x(t_1) \le x(t) \le x(t_2)\) for all \(t \in [t_2,t_1]\), and, again by monotonicity, \(\phi _{[T,t_2]}\) clearly projects onto \([0,x(t_2)]\). Since the trajectory \(\phi _{(-\infty ,0)}\) is contained in the bounded component of \({\mathbb {R}}^2 \setminus (\phi _{[T,0]} \cup [P_0,P_1])\), it must also be the case that \(x(t_1) \le x(s) \le x(t_2)\) for all \(s \in (-\infty ,0]\).

(b) Now consider Fig. 3. Since, by Lemma 2.9(b), \(\phi _{(-\infty ,0]}\) crosses L infinitely often, there must be a strictly decreasing sequence \(t_k\), say, of times such that \(y(t_k)=0\) for points \(X=(x,y)^T\) belonging to \(\phi _{(-\infty ,0]}\). Since \(y_0 >0\) by hypothesis, \(x(t_1)<0\), and, by the observation immediately preceding Lemma 2.10, \(\phi _{(-\infty ,0]}\) crosses from the upper half-plane to the lower half-plane and can only cross back into the upper half-plane at \(x(t_2)>0\). Since \(y\arrowvert _{[t_2,t_1]} <0\), x is monotonically decreasing for s in \([t_{2},t_{1}]\). Continuing in this way, it must be that there are times \(t_{2j}<t_{2j-1}\) for all \(j \in {\mathbb {N}}\) such that \(x(t_{2j-1})<0 < x(t_{2j})\) and \(x(t_{2j-1})\le x(s) \le x(t_{2j})\) for s in \([t_{2j},t_{2j-1}]\). Thus parts (b)(i)-b(iii). Now consider, for \(j \ge 2\), the points \((x(t_{2j+1}),0)\) and \((x(t_{2j-1}),0)\) in the phase plane, and let

for any \(k \in {\mathbb {N}}\). If it were the case that \(x(t_{2j-1}) \le x(t_{2j+1})\) then it would be necessary for the part-trajectories \(\phi _{[t_{2j},t_{2j+1}]}\) and \(\phi _{[t_{2j-2},t_{2j-1}]}\), both of which lie in the upper half-plane, to cross, which is impossible for the smooth trajectory \(\phi _{(-\infty ,0]}\). Hence \(x(t_{2j-1})\) forms a strictly decreasing sequence contained in \([-\pi /2,0)\), which proves part (b)(iv) of the lemma. Part (b)(v) follows similarly.

\(\square \)

The first of two possible trajectories, in this case consistent with Lemma 2.10a. The system (2.26) implies that when a trajectory crosses the x-axis it does so vertically, and that when it crosses the y-axis it does so with slope \(y_{x}=-2\). Furthermore, \({\dot{x}}=y\) implies that, as t increases, x(t) is monotonic increasing in the upper half-plane and monotonic decreasing in the lower half-plane. Thus, as t decreases, trajectories in the upper half-plane flow strictly from right to left, indicated by \(-\!\!\!\!\!\!\!\mathrel {-\!\!\lhd }\!\!\!\!\!-\!\!\!-\), and, in the lower half-plane, strictly from left to right, indicated by \(-\!\!\!\!\mathrel {-\!\!\!\rhd }\!\!\!\!\!-\!\!\!-\). Forward time flow is indicated by \(\rightarrow \!\!\!-\), as usual

Lemma 2.11

Let X and \(\phi _{(-\infty ,0]}\) be as in the statement of Lemma 2.9, and suppose that \(X(0)=(0,y_0)^T\) where \(y_0 >0\). Suppose further that part (b) of Lemma 2.10 holds. Define

and suppose without loss of generality that \(l' \le |l|\). Then there exists \(c>0\) such that for any \(\eta \in (0,\sin ^2 l)\) and all sufficiently large j there are times \(s_j^+\), \(s_j^{-}\), \(t_{c,j}^+\) and \(t_{c,j}^{-}\) and a constant \(q_{\epsilon }\), depending only on \(\epsilon \), such that the following are true:

Proof

Note that \(l'\) is bounded away from 0 because, for all \(j \ge 1\), \(\phi _{(-\infty ,t_{2j-1})}\) lies in the unbounded component of \({\mathbb {R}}^2 \setminus (\phi _{[t_1,0]} \cup [P_0,P_1])\). Consider the part of \(\phi _{(t_{2j},t_{2j-1})}\) of \(\phi _{(-\infty ,0]}\) which connects the two points \((x(t_{2j-1}),0)\) and \((x(t_{2j}),0)\). Since \(\phi _{(t_{2j},t_{2j-1})}\) belongs to the lower half-plane, it is the case that x is strictly monotonic and increasing from \(x(t_{2j-1}) < 0\) to \(x(t_{2j}) >0\) with decreasing t. In particular, by choosing a large enough j, we ensure that x(t) strictly increases from \(x(t_{2j-1})\) belonging to a small right-neighbourhood of l to a point \(x(t_{2j})\) belonging to a small left-neighbourhood of \(l'\) as t ranges in the interval \((t_{2j}, t_{2j-1})\).

Let the functions \((\cos ^2)_{\pm }^{-1}\) denote local inverses of the function \(\cos ^2 \theta \) for \(\theta \in [0,\pi /2)\) in the sense that \((\cos ^2)_{+}^{-1}(\cos ^2\theta )=\theta \) and \((\cos ^2)_{-}^{-1}(\cos ^2\theta )=-\theta \). With \(0< \eta < \sin ^2 l'\), define the quantities \(s_j^{\pm }\) such that \(t_{2j} \le s_j^{+} < s_j^{-}\le t_{2j-1}\) by

and

The notation is chosen so that \(x(s_j^{-})< 0 < x(s_j^{+})\). Since x is strictly monotonic on \((t_{2j}, t_{2j-1})\), the above expressions define \(s_j^{\pm }\) uniquely and they must be ordered as stated. By construction, we therefore have

which is (2.39), with \(s_j^\pm \) obeying (2.37).

The idea behind (2.40) is that the upper bound \(|y(t)|\le \sqrt{2}\) controls the (horizontal) ‘speed’ of \(\phi _{(s_j^{+},s_j^{-})}\), and thereby ensures that \(s_j^{-}\) and \(s_j^{+}\) remain apart. Indeed, since \(y={\dot{x}}\) is bounded in modulus by \(\sqrt{2}\), it follows that

for sufficiently large j, where the second inequality results from examining cases as follows. Suppose first that \(x(s_j^{-})=x(t_{2j-1})\) or \(x(s_j^{+})=x(t_{2j})\) or both. Then it is straightforward to check that

provided j is large enough (using the facts that \(x(t_{2j-1})=:l+\delta _j \rightarrow l\) and \(x(t_{2j})=:l'-\xi _j \rightarrow l' \le |l|\), with obvious notation.) Otherwise, by the equations (2.42) and (2.43) defining \(s_{j}^-\) and \(s_j^+\) above, we have \(x(s_j^{\pm })= \pm \theta _0\), where \(\theta _0:=(\cos ^2)_{+}^{-1}(\cos ^2 l + \eta )>0\). Hence

which is (2.40).

The proof of (2.41) relies on (i) the more refined bound on y proved in Lemma 2.8 and (ii) on the geometry of \(\phi _{(t_{2j},t_{2j-1})}\), as depicted in Fig 3. Suppose that the point \(({\bar{x}},{\bar{y}}) \in \phi _{(t_{2j},t_{2j-1})}\) is such that \({\bar{y}} \le y(t)\) for all \(t_{2j} \le t \le t_{2j-1}\). Since \(y(t_{2j-1})=y(t_{2j})\), it must be that there is at least one t such that \(y(t)={\bar{y}}\) and \({\dot{y}}(t)=0\). By construction, \(\phi _{(t_{2j},t_{2j-1})}\) lies in the lower half-plane, which in particular means that for sufficiently large j there is \(c>0\) and times \(\varvec{c},j\) obeying \(t_{2j}<t_{c,j}^+< t_{c,j}^-< t_{2j-1}\) such that \(y(t_{c,j}^{\pm })=-c\), \(x(t_{c,j}^-)<0<x(t_{c,j}^+)\) and

We now split the interval \((t_{2j},t_{2j-1}) = (t_{2j},t_{c,j}^+) \cup (t_{c,j}^+,t_{c,j}^-) \cup (t_{c,j}^-,t_{2j-1})\) and control the length of each of these subintervals.

First consider the interval \((t_c^{-},t_{2j-1})\). The second equation of (2.26) implies that

while (2.35) and the fact that \(y(t)<0\) implies that

and hence that

Putting inequalities (2.44) and (2.45) together produces the lower bound

where

When (2.46) is integrated over \((t_{c,j}^-,t_{2j-1})\) and the conditions \(y(t_{c,j}^-)=-c\) and \(y(t_{2j-1})=0\) are applied, we obtain

Applying the inequality \(e^{2r} \ge 1+2r\) with \(r=t_{2j-1}-t_{c,j}^-\) and rearranging, we obtain

The corresponding argument for the interval \((t_{2j},t_c^{+})\) yields

It remains to bound \(t_{c,j}^--t_{c,j}^+\) from above. Let \(b_j^{\pm } = x(t_{c,j}^{\pm })\) and let f be the local inverse of \(x\arrowvert _{[b_j^{-},b_j^{+}]}\). Note that its derivative satisfies \(f'(x(t)) = \frac{1}{{\dot{x}}(t)}\) which, since \(y(t) \le -c\) for \(t_{c,j}^+\le t \le t_{c,j}^-\), obeys \(|f'(x(t))| \le 1/c\) on that interval. Hence

so that

By combining (2.47),(2.48) and (2.49) we obtain

which is (2.41). \(\square \)

Lemma 2.12

Suppose that \(X=(x,y)^T\) obeys (2.26) subject to the conditions \(x(0)=0\) and \(e^{2t}y(t) \rightarrow 0\) as \(t \rightarrow -\infty \), and let the trajectory \(\phi _{(-\infty ,0]}\) be given by (2.29). Then, provided X(t) is not identically zero, \(\phi _{(-\infty ,0]}\) must have \(\alpha \)-limit point either \(X_{1}\) or \(X_{-1}\).

Proof

Note that X solves (2.26) subject to the conditions stated above if and only if \(-X\) obeys the same system and conditions. Therefore, either both \(X_{-1}\) and \(X_{1}\) are \(\alpha \)-limit points or neither is. If neither of \(X_{\pm 1}\) is an \(\alpha \)-limit point then, by exchanging X and \(-X\) if necessary and assuming that X(t) is not identically zero, we can suppose \(y(0)>0\). Hence either part (a) or part (b) of Lemma 2.10 must hold.

If (a) holds then there are times \(t_2<t_1<0\) such that \(x(t_1) \le x(s) \le x(t_2)\) for all \(s \le 0\). If \(|x(t_1)| \ge x(t_2)\) then, by definition of \(\Omega (x)\), this means that

almost everywhere in the set \((-\infty ,t_1)\). But, by (2.27),

is then strictly negative, which is a contradiction. If \(|x(t_1)| < x(t_2)\) then the same contradiction can be reached by replacing \(t_1\) with \(t_2\) in the above.

Now assume that part (b) of Lemma 2.10 holds, and let \(t_{2j-1}\), \(t_{2j}\) be the times introduced there. Note that Lemma 2.11 also now applies. By (2.27) and (2.39), we have

The first term on the right-hand side of the line above converges to 0 as \(j \rightarrow \infty \). We will argue, using (2.40) and (2.41) that the second term is bounded away from 0. Indeed, from (2.40), it easily follows that

where \(N=\min \left\{ \frac{l'}{2\sqrt{2}},\sqrt{2}(\sin ^2l' - \eta )^{\frac{1}{2}}\right\} \). Since \(t_{2j-1}< s_j^+< s_j^- < t_{2j}\), we have \(s_j^{-} - t_{2j-1} < t_{2j}-t_{2j-1}\), and so to bound \(e^{4(s_j^{-}-t_{2j-1})}\) away from zero it is enough to bound the right-hand side of (2.41) above uniformly in j.

We may regard c as a small but fixed quantity that is given via the geometry of \(\phi _{(-\infty ,0]}\). Therefore the only way in which the right-hand side of (2.41) could become unbounded as \(j \rightarrow \infty \) is if

-

(I)

at least one of \(b_j^{+}-\pi /2\) and \(b_j^{-}+\pi /2\) tends to 0,

-

(II)

at least one of \(b_j^{\pm }\) tends to 0,

or both, were to hold for a subsequence. We can rule out (II) since it is clear from the construction that for a fixed and sufficiently small c, both \(b_j^{+}\) and \(b_j^{-}\) are bounded away from 0. To rule out (I), note that the behaviour of \(\phi _{(-\infty ,0]}\) in a neighbourhood of \(X_{-1}\), say, is constrained by the behaviour of the linearized system associated with (2.26). Carrying out that analysis in a neighbourhood of \(X_{-1}\) reveals that the invariant stable and unstable manifolds at \(X_{-1}\) are tangent to the vectors \((1,-1\pm \sqrt{2\epsilon ^2 -1})^T\). Recall that \(t_{2j}<t_{c,j}^+< t_{c,j}^-< t_{2j-1}\) are such that \(y(t_{c,j}^{\pm })=-c\), \(x(t_{c,j}^-)<0<x(t_{c,j}^+)\) and \(b_j^{\pm }=x(t_{c,j}^{\pm })\). Therefore if \((b_j^{-},-c)^T\) lies on \(\phi _{(-\infty ,0]}\) and if \(b_j^{-}+\pi /2\) is sufficiently small then \((b_j^{-},-c)^T\) must lie above the line \(y=\xi _{-}(x+\pi /2)\), where \(\xi _{-} = -(1 + \sqrt{2\epsilon ^2 -1})\), i.e.

which easily rearranges to

This bounds \(b_j^{-}\) away from \(-\pi /2\). A similar argument bounds \(b_j^{+}\) away from \(\pi /2\), thereby ruling out (I). In conclusion, it follows from (2.41) that there is a constant \(M>0\) depending on \(l,l',c\) and \(\epsilon \) such that

Inserting (2.52) and (2.51) into (2.50), we obtain

By taking j large enough and bearing in mind the convergence \(x(t_{2j-1}) \rightarrow l\), we obtain the desired contradiction. \(\square \)

We now turn our attention to \(\omega \)-limit points of extensions of \(\phi _{(-\infty ,0]}\).

Lemma 2.13

Let \(X(t)=(x(t),y(t))^{T}\) solve (2.26) on \(-\infty < t \le 0\) subject to the conditions \(x(0)=0\) and \(e^{2t}y(t) \rightarrow 0\) as \(t \rightarrow -\infty \), and suppose that X(t) is not identically zero. Then the trajectory

extends to a heteroclinic orbit

connecting the rest points \(X_{-1}=(-\pi /2,0)^T\) and \(X_0=(0,0)\), with \(\alpha \)-\(\lim \phi _{{\mathbb {R}}}^{+} = X_{-1}\) and \(\omega \)-\(\lim \phi _{{\mathbb {R}}}^{+} = X_{0}\). Similarly, the trajectory

extends to a heteroclinic orbit \(\phi _{{\mathbb {R}}}^{-}\) with \(\alpha \)-\(\lim \phi _{{\mathbb {R}}}^{-} = X_{1}\) and \(\omega \)-\(\lim \phi _{{\mathbb {R}}}^{-} = X_{0}\).

Proof

Lemma 2.12 establishes that the trajectories \(\phi _{(-\infty ,0]}^{\pm }\) defined above coincide with the unstable invariant manifolds \(\Gamma _{X_{\pm 1}}^{u}\) associated with \(X_{\pm 1}\) and belonging to the box \(K:=(-\pi /2,\pi /2) \times (-\sqrt{2},\sqrt{2})\). The linear analysis of (2.26) shows that the part of \(\Gamma _{X_{-1}}^{u}\) in K is initially tangent to \((1,\xi _+)^T\), where \(\xi _+=-1+\sqrt{2\epsilon ^2-1} >0\), and that therefore \(\Gamma _{X_{-1}}^{u}\) must cross the y-axis at \(y(0)>0\) (since it cannot do so at any point in \((-\pi /2,0] \times \{0\}\)). It follows that \(\Gamma _{X_{-1}}^{u}=\phi _{{\mathbb {R}}}^{+}\) in the notation just introduced, and similarly that \(\Gamma _{X_1}^{u}=\phi _{{\mathbb {R}}}^{-}\). This establishes that the \(\alpha \)-limits of these trajectories are as stated. It remains to identify their \(\omega \)-limits, which we do by a process of elimination.

Let us focus on \(\phi _{{\mathbb {R}}}^{+}\) which, being constrained to lie in K, must approach one of \(X_{-1}\), \(X_0\) or \(X_1\) as \(t \rightarrow \infty \). Suppose for a contradiction that \(\omega \)-\(\lim \phi _{{\mathbb {R}}}^{+} = X_{-1}\). Then \(\phi _{{\mathbb {R}}}^{+}\) must enter the third quadrant \({\mathbb {R}}^2_{--}:=\{(x,y)^T: \ x<0, y < 0\}\) while, by symmetry, \(\phi _{{\mathbb {R}}}^{-}\) would enter \({\mathbb {R}}^2_{++}\), with obvious notation. Bearing in mind that trajectories can only change quadrant in a clockwise manner as t increases, we find that \(\phi _{{\mathbb {R}}}^{+}\) and \(\phi _{{\mathbb {R}}}^{-}\) must cross, which is a contradiction. Referring to Fig. 4 may help here.

Now suppose for a contradiction that \(\omega \)-\(\lim \phi _{{\mathbb {R}}}^{+} = X_{1}\). If \(\phi _{{\mathbb {R}}}^{+}\) crosses the line \((0,\pi /2) \times \{0\}\) then it must cycle at least once around the origin before an approach to \(X_1\) is possible. But in so doing the trajectory would cross itself, which is impossible. Hence \(\phi _{{\mathbb {R}}}^{+}\) remains in the upper half-plane and x(t) increases monotonically from \(-\pi /2\) to \(\pi /2\). Let \(\eta >0\) and take t so large that \(\cos ^2 x(t)<\eta \). Using the monotonicity of x(t), define \(t'>0\) by \(\cos ^2 x(t') = 2\eta \), and note that we then have

Using this inequality, we see that

which, when applied to (2.27), gives, on recalling (2.3) and using the bound \(\Omega (x(t)) \le \epsilon \) for all t,

Since \(t'>0\), the second term is negative and bounded away from zero, while the first term can be made arbitrarily small provided t is chosen larger still, if necessary. This contradiction shows that \(\phi _{{\mathbb {R}}}^{+}\) cannot approach \(X_1\), and so it must cross the line \((0,\pi /2) \times \{0\}\) and thereafter remain in the box K.

According to the Poincaré-Bendixson Theorem [9, Theorem 1.115], the \(\omega -\)limit set of \(\phi _{{\mathbb {R}}}^{+}\) is either a fixed point, a regular periodic orbit, or it consists of finitely many fixed points joined by non-closed orbits. The function \( \varphi (x,y):=\epsilon \Omega (x)\) is a Dulac function for the system (2.26) in the sense that

which, in almost exactly the same way as Bendixson’s criterion, [9, Proposition 1.133], rules out regular periodic orbits.

Thus \(X_0\) must feature in the \(\omega -\)limit set (because \(X_1\) and \(X_{-1}\) cannot), and since it is a sink, as the linear analysis of (2.26) readily shows, points X(t) in the orbit \(\phi _{{\mathbb {R}}}^{+}\) must converge to \(X_0\) as \(t \rightarrow \infty \). This concludes the proof for \(\phi _{{\mathbb {R}}}^{+}\), and a similar argument yields \(\omega \)-\(\lim \phi _{{\mathbb {R}}}^{-} = X_{0}\). \(\square \)

2.4 Properties of the functions \(v_{\pm }^\epsilon \)

We recall that the twist maps \(v_{\pm }^\epsilon \) are given by

where \(k:(0,1] \rightarrow {\mathbb {R}}\) solves the ODE (2.22), which for convenience we reprint here

The dependence of k(R) on \(\epsilon \) is through this ODE, and, strictly speaking, each k should be written \(k^\epsilon (R)\), say. However, for brevity we write k(R) when we mean \(k^\epsilon (R)\) solving (2.54).

It was established in Proposition 2.6 that k(R) is smooth on the open interval (0, 1), so it follows that \(v^{\epsilon }_{\pm }\) are smooth on \(B\setminus \{0\}\). We now use properties of the solutions of system (2.26) established in the previous subsection to study the behaviour of \(\nabla v_{\pm }^\epsilon \), and to prove, in particular, that these maps are continuous on the ball B.

Proposition 2.14

Let \(v^{\epsilon }_{\pm }\) be given by (2.1) where k lies in \({\mathcal {C}}\) and satisfies (2.22) subject to (2.23). Then \(\nabla v^{\epsilon }_{\pm }\) is continuous on B.

Proof

By (2.2),

for \(x \ne 0\). Recall that when \(x(t)=k(R)\) and \(R=e^t\), Lemma 2.13 implies that \(y(t)={\dot{x}}(t) \rightarrow 0\) and \(x(t) \rightarrow \pm \pi /2\) as \(t \rightarrow -\infty \). Since \({\dot{x}}(t)=R k'(R)\), we therefore see from the expression above that

Thus as long as \(\nabla v^{\epsilon }_{\pm }(0)\) exists and equals \(\pm J\) the proof will be finished. Let l be a unit vector in \({\mathbb {R}}^2\) and without loss of generality suppose \(h>0\). Then let the polar coordinate representation of hl be \(h e_{\scriptscriptstyle {R}}(\mu )\) and note that

As \(h\rightarrow 0\), \(k(h) \rightarrow \pm \pi /2\), and hence \(\nabla v^{\epsilon }_{\pm }(0)\) exists and equals \(\pm J\). \(\square \)

Remark 2.15

The higher regularity of \(v^{\epsilon }_{\pm }\) naturally depends on \(\epsilon \). To give some idea why, we refer to the linearised analysis of x(t) given in Sect. 4.1, and in particular to (4.9). The latter shows that \(k(R) \sim \xi _0 R^{\gamma -1} - \pi /2\) as \(R \rightarrow 0+\), where \(\gamma = \sqrt{2\epsilon ^2 - 1}>1\) and \(\xi _0\) is a constant. More precisely, in terms of t the solution satisfying \(x(t) \rightarrow -\pi /2\) as \(t \rightarrow -\infty \) obeys \(x(t)=-\pi /2 + \hat{\xi }(t)\) where \(\hat{\xi } \sim \xi \) as \(t \rightarrow -\infty \) and \(\xi (t)=\xi _0 e^{(\gamma -1)t}\). Replacing \(\hat{\xi }\) with \(\xi \) for clarity, a calculation of \(\nabla v^{\epsilon }_{+}\) shows that

It follows that

and hence that the Hölder exponent \(\alpha \), say, of \(\nabla v^{\epsilon }_{+}\) must obey

It follows from this and the fact that stationary points are smooth away from 0 that \(v_{+}^{\epsilon } \in C^{1,\alpha (\epsilon )}(B)\), where \(\alpha (\epsilon )\) is given in (2.55) for the stated range of \(\epsilon \).

Proposition 2.16

Let the hypotheses of Lemma 2.13 hold, and let \(\phi _{{\mathbb {R}}}^{\mp }\) be the heteroclinic orbits connecting \(X_{\pm 1}\) to \(X_0\). Then

-

(i)

if \(1<\epsilon \le \sqrt{2}\), \(\phi _{{\mathbb {R}}}^{\pm }\) does not cross the the \(y-\)axis. Consequently, \(k=0\) is the only possible solution of (2.54) and \(v_{\pm }^\epsilon \equiv \text {id}\);

-

(ii)

if \(\epsilon > \sqrt{2}\), \(\phi _{{\mathbb {R}}}^{\pm }\) crosses the \(y-\)axis an infinite number of times. Consequently, for each \(\epsilon > \sqrt{2}\), there are infinitely many functions \(v_{\pm }^\epsilon = R e_{\scriptscriptstyle {R}}(\mu \pm k(R))\) where k solves (2.22) subject to the conditions \(k(1)=0\) and \(\lim _{R \rightarrow 0} R^3 k'(R)=0\).

Proof

(i) Our contention here is that, when \(\epsilon \in (1,\sqrt{2})\), the heteroclinic \(\phi ^{+}_{{\mathbb {R}}}\) connecting \(X_{-1}\) and \(X_0\) is entirely contained in the region \(\Delta \), which we define to be the interior of the triangle formed by the points \((-\pi /2,\pi /2)\), \(X_0\) and \(X_{-1}\). In particular, it cannot cross the \(y-\)axis. Consider the line S, say, joining the points \((-\pi /2,\pi /2)\) and \(X_0\), and let \(n=(1/\sqrt{2},1/\sqrt{2})\) be its upward-pointing normal. Then, with F(x, y) given by (2.25), we find that on S

Let \(g_{\epsilon }(x):=(x^2-2)\sin (2x)+x\cos (2x)+x \frac{\epsilon ^2+1}{\epsilon ^2-1}\) and note that \(g_{\epsilon }'(x)> 0\) if and only if

For \(1<\epsilon \le \sqrt{2}\) and \(-\pi /2< x < 0\), the infimum of the left-hand side of (2.57) agrees with the supremum of the right-hand side, and in fact for all values apart from \(\epsilon =\sqrt{2}\) and \(x=0\), (2.57) holds with strict inequality. Hence it must be that \(g_{\epsilon }(x) < g_{\epsilon }(0)=0\), from which it follows from (2.56) that \(n \cdot F <0\) at all points along S. Thus trajectories in \(\Delta \) cannot leave by crossing at any point on S. Moreover, since

it is also clear that trajectories cannot leave \(\Delta \) by crossing the lines joining \(X_{-1}\) to \((-\pi /2,\pi /2)\) or \(X_{-1}\) to \(X_0\), and so they must remain in \(\Delta \). By Lemma 2.12, if X solving (2.26) is not identically zero, then its trajectory \(\phi ^{+}_{{\mathbb {R}}}\) has \(\alpha -\)limit point \(X_{-1}\) or \(X_1\), and, by symmetry, we may assume without loss of generality that the former is true. Thus \(\phi ^{+}_{{\mathbb {R}}}\) meets \(\Delta \) non-trivially and hence, by the above, we must have \(\phi ^{+}_{{\mathbb {R}}} \subset \Delta \). In particular, \(\phi ^{+}_{{\mathbb {R}}}\) cannot cross the y-axis, contradicting the assumption that X solving (2.26) and its associated boundary conditions is non-zero. It follows that \(X\equiv 0\) is the only possible solution, which concludes the proof of part (i) of the lemma.

(ii) By Lemma 2.13, if X solving (2.26) and its associated boundary conditions is non-zero then \(X(t) \rightarrow X_0\) as \(t\rightarrow \infty \). Therefore, for sufficiently large T, \(\phi _{(T,\infty )}^{\pm }\) lies in a neighbourhood of \(X_0\) in which the behaviour of solutions to (2.26) is governed by the linear system \({\dot{X}}=\nabla F(X_0)X\). When \(\epsilon >\sqrt{2}\), the eigenvalues of DF(0) are a complex conjugate pair \(-1\pm i \sqrt{1-2\epsilon ^{-2}}\), so that \(\phi _{(-\infty ,T)}^{\pm }\) is an exponentially decaying spiral at \(T \rightarrow \infty \).

To see that there are infinitely many solutions of (2.26) subject to \(x(0)=0\) and \(e^{2t} {\dot{x}}(t) \rightarrow 0\) as \(t \rightarrow -\infty \) when \(\epsilon > \sqrt{2}\), consider the following. Let X(t) lie on \(\phi ^+_{{\mathbb {R}}}\), the heteroclinic connecting \(X_{-1}\) and \(X_0\), and let \(t_j>0\) be one of the times such that \(x(t_j)=0\), noting that \(t_j\) exists precisely because \(\phi ^+_{{\mathbb {R}}}\) describes a spiral when \(\epsilon > \sqrt{2}\). Define \({\widehat{X}}_{j}(t):=X(t+t_j)\) for \(-\infty < t \le 0\). Then

is a new trajectory which satisfies both boundary conditions associated with (2.26). One can do likewise with solutions on \(\phi ^-_{{\mathbb {R}}}\). \(\square \)

When \(\epsilon >\sqrt{2}\), each additional solution \({\widehat{X}}_j(t)=:(x_j(t),y_j(t))\) described above yields a new stationary point of the form \(v^{\epsilon }_{+,j}(x)= Re_{\scriptstyle {R}}(\mu + k_j(R))\), say, in which \(k_j(R)=x_j(t)\) and \(R=e^t\). Properties of the trajectory \(\widehat{\phi }^{+}_{(-\infty ,0]}\) imply that \(v^{\epsilon }_{+,j}\) introduces ‘additional buckling’ near the centre of the ball B, and this is borne out by numerical simulations, an example of which is given in Fig. 5. The first buckle occurs when \(R \sim 0.2\) and the second is just visible when \(R \sim 0.01\). Another family of maps \(v^{\epsilon }_{-,j}\) can be obtained similarly from \(\phi ^-_{{\mathbb {R}}}\), and by symmetry we have \({\mathbb {E}}_{\epsilon }(v^{\epsilon }_{+,j}) = {\mathbb {E}}_{\epsilon }(v^{\epsilon }_{-,j})\) for all j.

The index j is a useful proxy for the number of buckles a solution produces near the centre of B. In Sect. 4 the energies \(E_{j}(\epsilon ):={\mathbb {E}}_{\epsilon }(v^{\epsilon }_{-,j})\) are calculated and are found to form a sequence that increases with j, thereby confirming our natural intuition that the more a solution buckles, the greater the energetic cost.

The map \(v^{5}_{+,2}\) , applied to the sector expressed in plane polar coordinates as \(S:=\{(R,\mu ): \ 0 \le R \le 1, \ 0 \le \mu \le 0.02\}\). Note that the scaling in the vertical axis is chosen carefully to make the effect \(v^{5}_{+,2}\) has on S clearly visible. Inset: magnified image of further buckling near \((R,\mu )=(0,0)\)

3 Stationary points of \({\mathbb {D}}_{\epsilon }\) subject to the area-preserving constraint: Lagrange multipliers with logarithmic growth

The purpose of this section is twofold. Firstly, we make clear that the maps \(v_{\pm }^\epsilon \) are not just stationary points in the class \({\mathcal {C}}\), but that they are also stationary in the larger class \({\mathcal {A}}\) in the sense that

By invoking results of [8, 13], a natural corollary of this condition is that a Lagrange multiplier, or pressure, \(\lambda _{\pm }^{\epsilon }\), say, can be rigorously associated to each function \(v_{\pm }^\epsilon \) through the equation

See Lemmata 3.2, 3.3 and Proposition 3.4 for the details.

Next, since each \(v_{\pm }^\epsilon \) is \(C^1\) and invertible, the change of variables

is valid and yields

Thus the second main purpose of this section is to characterise, as far as possible, the functions \(p_{\pm }^\epsilon \) appearing in (3.3). To do this we exploit the facts that:

-

(i)

each \(p_{\pm }^\epsilon \) is unique up to an additive constant, and

-

(ii)

\(p_{\pm }^\epsilon \) belongs to \(\cap _{s>1} L^s(B)\),

both of which follow from the results of [8, 13], as we explain below. The rest of the argument goes as follows, and with \(U:=v_{\pm }^\epsilon \) for brevity:

- Step 1:

-

find, for any \(\delta \in (0,1)\) a function P, say, depending only on \(R=|x|\), such that

$$\begin{aligned} \nabla U^T(x) \, \mathrm{div}\,D_FW(x,\nabla U(x);\epsilon ) = \nabla P(x) \quad x \in B\setminus B(0,\delta ); \end{aligned}$$(3.4) - Step 2:

-

verify, by an approximation argument that, as a result of (3.4),

$$\begin{aligned}&\int _B D_{F}W(x,\nabla U;\epsilon )\cdot \nabla \varphi (x) \, dx \\&\quad = \int _B P(x)\, \mathrm{cof}\,\nabla U(x)\cdot \nabla \varphi (x) \, dx \quad \forall \varphi \in C_c^1(B,{\mathbb {R}}^2), \end{aligned}$$which is just (3.3) with P in place of \(p_{\pm }^\epsilon \), and

- Step 3:

-

invoke the uniqueness articulated in (ii) above to conclude that there is a constant c, say, depending on \(v_{\pm }^{\epsilon }\) such that

$$\begin{aligned} p_{\pm }^\epsilon = P + c \quad \text {a.e. in } B. \end{aligned}$$(3.5)

The function P determined in Lemma 3.7 is given explicitly in terms k solving (2.54), and the characterisation so obtained shows, in conjunction with (3.5), that:

-

(a)

\(p_{\pm }^\epsilon \) is a smooth, radial function on \(B\setminus \{0\}\), and, to within a constant,

-

(b)

\(p_{\pm }^\epsilon \sim 2 p_{\epsilon }\ln |x|\) as \(|x|\rightarrow 0\), where \(p_{\epsilon }=\epsilon -\frac{1}{\epsilon }\).

Equation (3.4) is a form of the equation

Following, for example, [28, Section 2], in which a useful, formal derivation is given of the strong form of (3.6) in the case of the Dirichlet energy, we note that away from the origin, i.e. where U is smooth, (3.6) together with Piola’s identity \(\mathrm{div}\,\mathrm{cof}\,\nabla U=0\) gives

Equation (3.4) follows by premultiplying both sides by \(\nabla U^T\) and noting that, since \(\det \nabla U = 1\), \( \nabla U^T \mathrm{cof}\,\nabla U = \mathbf{1}\).

3.1 Stationarity in the sense of (3.1)

We begin with the following straightforward algebraic lemma.

Lemma 3.1

Let \(W(x,F;\epsilon )\) be given by (1.4), that is

Let

Then

Proof

For any fixed a in \({\mathbb {R}}^2\), it is the case that

as is readily checked. Taking first \(a={\hat{x}}\) and then \(a=J{\hat{x}}\), (3.8) follows from this, the definition of \(W(x,F;\epsilon )\) and the fact that \(|\mathrm{adj}\,F {\hat{x}}|^2 = |F J{\hat{x}}|^2\). \(\square \)

Throughout the rest of this section, it will be convenient to make the following standing assumptions.

- \({(\mathbf{SA})}_1\):

-

Let

$$\begin{aligned} U(x)=R e_{\scriptscriptstyle {R}}(\mu + k(R)) \quad \ \text {for } x=R e_{\scriptscriptstyle {R}}(\mu ) \end{aligned}$$(3.9)and

$$\begin{aligned} V(y)=R e_{\scriptscriptstyle {R}}(\mu - k(R)) \quad \ \text {for } y=R e_{\scriptscriptstyle {R}}(\mu ) \end{aligned}$$(3.10)denote the functions \(v^{\epsilon }_{+}\) and \(v^{\epsilon }_{-}\) respectively;

- \({(\mathbf{SA})}_2\):

-

The function k(R) in (3.9) and (3.10) solves (2.22) for \(R \in (0,1)\), subject to \(k(1)=0\) and \(\lim _{R \rightarrow 0+} R^3 k'(R) = 0\);

- \({(\mathbf{SA})}_3\):

-

We suppress the dependence of U, V and k on \(\epsilon \), but bear in mind that there is always an \(\epsilon \)-dependence through equation (2.22).

Having introduced U and V as above, we note that

and a direct calculation shows that

and

The aim is now to demonstrate that (3.1) holds, and we do this in two steps. The first, in Lemma 3.2 below, reformulates (3.1) as the equivalent problem of showing that

where V is given by (3.14) and \(\Sigma (R,V)\) is a vector quantity defined in (3.19) below. Lemma 3.3 confirms (3.15), and thereby completes the proof of (3.1).

Lemma 3.2

Let \(({\mathbf{SA}})_1\)—\(({\mathbf{SA}})_3\) be in force, and suppose that U given by (3.11) satisfies (3.1). Let

and

Then it is necessary and sufficient that

i.e. \(\mathrm{curl}\,(\Sigma (R,V)/R)=0\) in \({\mathcal {D}}'(B)\).

Proof

We start by applying (3.8) to (3.1) and then we set \(x=V(y)\). By inspection, \(V=U^{-1}\), so \(y=U(x)\)

Here we have used the fact that \(\nabla U(x) = \mathrm{adj}\,\nabla V(y)\), together with simple properties of the inner product in \({\mathbb {R}}^{2 \times 2}\). Now, by (3.14),

and so

In order to calculate \(C(V,\epsilon ) \mathrm{adj}\,\nabla V(y)\mathrm{cof}\,\nabla V(y)\), we use (3.12) together with (3.7), (3.16), (3.17) and (3.18) to obtain the following three identities

and

Hence, by applying (3.23), (3.24) and (3.25) to (3.22), we have

Now, a short calculation shows that for any \(\phi \) in \(C_c^\infty (B,{\mathbb {R}}^2)\) and any function g in \(C^1(0,1)\), it holds that

while

is straightforward. We also require

which is true provided \([R^2 k' Q_2 \phi ]_{0+}^1=0\). The upper limit vanishes because \(\phi \) has compact support in B, while the lower limit is zero by appealing to Proposition 2.14. The latter shows that \(\nabla U\) is continuous on the ball B, which in particular implies that \(Rk'\) is bounded as \(R \rightarrow 0+\). Hence it is certainly the case that \(R^2k' \rightarrow 0\), so that (3.27) holds.

Hence, by integrating (3.26), we must have

The integrand of the right-hand side of the last equation agrees with \((\Sigma (R,V)/R)\cdot \phi \) provided

where \(Q_3\) is given by (3.18). But a short calculation confirms that

and, similarly,

Hence (3.28) holds, and the proof is complete. \(\square \)

Lemma 3.3

Let \(({\mathbf{SA}})_1\)—\(({\mathbf{SA}})_3\) be in force, and suppose that \(\Sigma (R,V)\) is given by (3.19). Then condition (3.20) is satisfied, and hence U is a stationary point of \({\mathbb {D}}_{\epsilon }\) in the sense of (3.1).

Proof

Since any function \(\phi \in C_c^{\infty }(B,{\mathbb {R}}^2)\) such that \(\mathrm{div}\,\phi =0\) can be written as \(\phi = J \nabla \eta \), where \(\eta \in C_c^{\infty }(B)\), it follows that (3.20) is equivalent to showing that

Now

so it remains to calculate \(e_{\tau } \cdot \Sigma \) and \(e_{\scriptscriptstyle {R}} \cdot \Sigma \). To do this, the following facts, which are easily derived from (3.16), (3.17) and (3.18) and (3.12), are needed:

It follows that

By (2.54), the collection of terms in parentheses vanishes on (0, 1), and so we conclude that

It is not necessary to calculate \(\Sigma (R,V)\cdot e_{\scriptscriptstyle {R}}\) in detail. Instead, it is enough to observe that the coefficients of \(e_{\scriptscriptstyle {R}}\) and \(e_{\tau }\) in \(Q_1,Q_2\) and \(Q_3\) depend only on R. Hence,

which vanishes trivially. Using this and (3.30) in (3.29) concludes the proof of the lemma. \(\square \)

We are now in a position to apply the results of [13] or [8] to deduce the existence of a Lagrange multiplier satisfying (3.2).

Proposition 3.4

Let the conditions of Lemma 3.3 be in force. Then there exists \(\lambda \in \cap _{s>1}L^s(B)\) such that

Proof

Proceeding as in the first three lines of the proof of Lemma 3.2, and in the notation introduced there, (3.21) and (3.1) imply that the linear functional

vanishes on divergence-free \(\phi \). Let \(\alpha > 1\). Since V belongs to \(C^1(B)\), it follows that F can be extended by a density argument to a linear functional \(F: W_0^{1,\alpha } \rightarrow {\mathbb {R}}\) which, again, vanishes on divergence-free functions, suitably interpreted. In the notation of [13, Equation 2.1], F is now defined on the class \({\mathcal {U}}^{1,\alpha }(B)\), and so [13, Theorem 3.1] applies. Thus there is \(\lambda \in L^{\alpha '}(B)\) such that

Since \(\alpha >1\) was arbitrary, it follows that \(\lambda \in \cap _{s>1} L^s(B)\), as claimed. \(\square \)

Remark 3.5

Whilst [13, Theorem 3.1] is perfectly adequate for our needs, we note that the conclusion of Proposition 3.4 can also be deduced directly from [8]. Their estimates, in particular [8, Theorem 2.2] for the pressure in terms of Hardy spaces \(h^r\) for \(r \in [1,\infty )\), go beyond those of [13], under suitable hypothesis. To see (3.31) using [8, Theorem 2.2], and following their proof of [8, Theorem 3.1], introduce \(g \in C_c^1(B, {\mathbb {R}}^2)\) such that \(F(\phi ) = \langle g, \phi \rangle \), where \( \langle g, \phi \rangle \) is the standard \(L^2\)-inner product. Since \(\langle g, \phi \rangle =0\) for all divergence-free \(\phi \) in \(C_c^{\infty }(B,{\mathbb {R}}^2)\), and, in the case at hand, \(|\langle g, \phi \rangle | \le c ||\nabla v||_{\infty }\) for some constant c, it follows that \(\langle g, \phi \rangle \) is a continuous linear functional on \(C_c^{\infty }(B)\). Hence, by a classical result ([27, Proposition 1.1]), there is \(\lambda \) in \({\mathcal {D}}'(B)\) such that \(g=-\nabla \lambda \) with the property that \(\langle g, \phi \rangle = \langle \lambda , \mathrm{div}\,\phi \rangle \) for all \(\phi \in C_c^{\infty }(B,{\mathbb {R}}^2)\). This is (3.31). Equation (3.32) then gives

where \(f(y):=2C(V(y),\epsilon )\mathrm{adj}\,\nabla V(y)\mathrm{cof}\,\nabla V(y)\) belongs to \(L^{\alpha }(B)\) for all \(\alpha > 1\) and hence, by the remark preceding [8, Theorem 2.2], to \(h^\alpha (B)\) for \(\alpha > 1\). Thus, by [8, Theorem 2.2], \(\lambda \) belongs to \(h^{\alpha }(B)\) for each \(\alpha > 1\), from which we recover \(\lambda \in \cap _{s > 1}L^s(B)\), as in the statement of Proposition 3.4.

3.2 Properties of the pressure

We now follow Steps 1–3 of our argument as set out in the introduction to Sect. 3. Specifically, we begin by seeking a function P(x) obeying (3.34) below, i.e. for each \(\delta >0\), and with \(({\mathbf{SA}})_1\)—\(({\mathbf{SA}})_3\) in force, we wish to solve

To do so we require a short technical lemma whose aim is to calculate efficiently \(\mathrm{div}\,D_FW(x,\nabla U;\epsilon )\) when U is given by (3.11).

Lemma 3.6

Let \(U=R e_{\scriptscriptstyle {R}}(\mu + k(R))\) represent one of the functions \(v_{\pm }^\epsilon \), and suppose \(\delta >0\). Then

where

Proof

Using (3.8) together with (3.11) gives

Hence, for any \(\eta \in C_c^{\infty }(B\setminus B(0,\delta ),{\mathbb {R}}^2)\),

A short calculation shows that the right-hand side of the last equation is \(-\int _B H \cdot \eta \, dx\), where H is given by (3.36). Since \(\eta \) is arbitrary, (3.35) follows.

\(\square \)

The next result identifies the function P satisfying (3.34) by calculating \(\nabla U^T \mathrm{div}\,D_FW(x,\nabla U;\epsilon )\), which turns out to be a function of the form \(g'(R)e_{\scriptscriptstyle {R}}\) for a suitably chosen g, and then setting \(P(x)=g(R)\). The details are as follows.

Lemma 3.7

Let U represent one of the functions \(v_{\pm }^\epsilon \), and define \(P \in C^\infty (B \setminus \{0\})\) by

Then P is such that

Proof

By (3.13) and Lemma 3.6, we see that

and, by letting \(H=H^{\scriptscriptstyle {R}} e_{\scriptscriptstyle {R}}+ H^\tau e_{\tau }\) and using (3.11), this gives

Using (3.36) and then (2.54), we see that

To deal with the coefficient of \(e_{\scriptscriptstyle {R}}\) in (3.38), for brevity we first label each occurrence of \(R^2k''+3Rk'\) in \(H^{\scriptscriptstyle {R}}\) and \(H^{\tau }\) with \(\gamma \), say, and calculate

The second and last terms cancel, since (2.54) can easily be rearranged to read

Using (3.39), the remaining terms can be written as

Using the easily-checked identity

the term with prefactor \(R^2 k'^2\) is

while the other term can be handled by using the identity

Thus

By choosing P as in (3.37), we see that (3.34) holds. \(\square \)

This completes Step 1 of the argument outlined at the start of Sect. 3. Step 2 consists in showing that with P and U as in Lemma 3.7, the equation

holds. This can be done using a short cut-off function argument, which we now outline.

Lemma 3.8

Let \(({\mathbf{SA}})_1\)—\(({\mathbf{SA}})_3\) be in force, and let P be given by (3.37). Then

Proof

It has already been established in the introduction to Sect. 3 that (3.34), as derived above, is equivalent to the pointwise condition

so that it is certainly the case that

for any \(\varphi \in C_c^\infty (B\setminus B(0,\delta ),{\mathbb {R}}^2)\) and any \(\delta \in (0,1)\), say. Let \(\eta ^{\delta }\) be a smooth cut-off function which satisfies \(\eta ^{\delta }(x)=0\) if \(x \in B(0,\delta )\), \(\eta ^{\delta } = 1\) if \(x \in B \setminus B(0,2\delta )\), \(|\nabla \eta ^{\delta }| \le \frac{c}{\delta }\), and \(0 \le \eta ^\delta \le 1\) on B. Let \(\phi \in C_c^{\infty }(B,{\mathbb {R}}^2)\) be arbitrary, take \(\varphi :=\eta ^{\delta } \phi \) in (3.41) and, for brevity, let \(Y(x)=D_FW(x,\nabla U;\epsilon ) - P(x) \, \mathrm{cof}\,\nabla U\). The result is that

the first term of which converges to \(\int _B Y \cdot \nabla \phi \, dx\) as \(\delta \rightarrow 0\), while the second obeys, for any \(s > 1\) and some constant c depending only on \(\eta \), the estimate

by Hölder’s inequality. By Lemma 3.9 below, we see that Y belongs to \(\cap _{s>1}L^s(B)\), and so by taking \(s-1>s/2\) in (3.42), i.e. \(s>2\),

This concludes the proof of the lemma. \(\square \)

The following result is needed to complete Step 2.

Lemma 3.9