Abstract

In the present paper, we consider the Cauchy problem for a system of quadratic derivative nonlinear Schrödinger equations. This system was introduced by Colin and Colin (Differ Integral Equ 17:297–330, 2004). The first and second authors obtained some well-posedness results in the Sobolev space \(H^{s}({\mathbb R}^d)\). We improve these results for conditional radial initial data by rewriting the system in a radial form.

1 Introduction

We consider the Cauchy problem of the system of nonlinear Schrödinger equations:

where \(\alpha \), \(\beta \), \(\gamma \in {\mathbb R}\backslash \{0\}\) and the unknown functions u, v, w are d-dimensional complex vector-valued. System (1.1) was introduced by Colin and Colin in [6] as a model of laser–plasma interaction. (See also [7, 8].) They also showed the local existence of the solution of (1.1) in \(H^s({\mathbb R}^d)\) for \(s>\frac{d}{2}+3\). System (1.1) is invariant under the following scaling transformation:

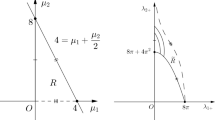

and the scaling critical regularity is \(s_{c}=\frac{d}{2}-1\). We put

We note that \(\kappa =0\) does not occur when \(\theta \ge 0\) for \(\alpha \), \(\beta \), \(\gamma \in {\mathbb R}\backslash \{0\}\).

First, we introduce some known results for related problems. System (1.1) has quadratic nonlinear terms which contain a derivative. A derivative loss arising from the nonlinearity makes the problem difficult. In fact, Mizohata [21] considered the Schrödinger equation

and proved that the uniform bound

is a necessary condition for the \(L^{2} ({\mathbb R}^d)\) well-posedness. Furthermore, Christ [5] proved that the flow map of the nonlinear Schrödinger equation

is not continuous on \(H^{s} ({\mathbb R}^d)\) for any \(s\in {\mathbb R}\). These results show that well-posedness is difficult to obtain for quadratic derivative nonlinear Schrödinger equations in general. For the system (1.1) of quadratic derivative nonlinear Schrödinger equations, however, well-posedness is known to hold. In [15], the first author proved the well-posedness of (1.1) in \(H^s({\mathbb R}^d)\), where s is given in Table 1.

Recently, in [16], the first and second authors improved this result by using the generalization of the Loomis–Whitney inequality introduced in [2] and [3]. They proved the well-posedness of (1.1) in \(H^s({\mathbb R}^d)\) for \(s\ge \frac{1}{2}\) if \(d=2\) and \(s>\frac{1}{2}\) if \(d=3\), under the condition \(\kappa \ne 0\) and \(\theta < 0\). In [15], the first author also proved that the flow map is not \(C^2\) for \(s<1\) if \(\theta = 0\) and for \(s<\frac{1}{2}\) if \(\theta < 0\) and \(\kappa \ne 0\). Therefore, the well-posedness obtained in [15] and [16] is optimal, except for the case \(d=3\) and \(s=\frac{1}{2}\) (which is scaling critical), as long as we use the iteration argument. In particular, the optimal regularity is far from the scaling critical regularity if \(d\le 3\) and \(\theta \le 0\).

We point out that the results in [15, 16] do not contain the scattering of the solution for \(d\le 3\) under the condition \(\theta =0\) (and also \(\theta <0\)). In [17], Ikeda, Katayama, and Sunagawa considered the system of quadratic nonlinear Schrödinger equations

under the mass resonance condition \(m_1+m_2=m_3\) (which corresponds to the condition \(\theta =0\) for (1.1)), where \(u=(u_1,u_2,u_3)\) is \({\mathbb C}^3\)-valued, \(m_1\), \(m_2\), \(m_3\in {\mathbb R}\backslash \{0\}\), and \(F_j\) is defined by

with some constants \(C_{1,\alpha ,\beta }\), \(C_{2,\alpha ,\beta }\), \(C_{3,\alpha ,\beta }\in {\mathbb C}\). They obtained the small data global existence and the scattering of the solution to (1.5) in the weighted Sobolev space for \(d=2\) under the mass resonance condition and the null condition for the nonlinear terms (1.6). They also proved the same result for \(d\ge 3\) without the null condition. In [18], Ikeda, Kishimoto, and Okamoto proved the small data global well-posedness and the scattering of the solution to (1.5) in \(H^s ({\mathbb R}^d)\) for \(d\ge 3\) and \(s\ge s_c\) under the mass resonance condition and the null condition for the nonlinear terms (1.6). They also proved the local well-posedness in \(H^s ({\mathbb R}^d)\) for \(d=1\) and \(s\ge 0\), \(d=2\) and \(s>s_c\), and \(d=3\) and \(s\ge s_c\) under the same conditions. (The results in [15] for \(d\le 3\) and \(\theta =0\) say that if the nonlinear terms do not satisfy the null condition, then \(s=1\) is the optimal regularity for obtaining the well-posedness by the iteration argument.)

Recently, in [23], Sakoda and Sunagawa have considered (1.5) for \(d=2\) and \(j=1,\ldots , N\) with

where \(u_j^{\#}=u_j\) if \(j=1,\ldots ,N\), and \(u_j^{\#}=\overline{u_j}\) if \(j=N+1,\ldots ,2N\). They obtained the small data global existence and the time decay estimate for the solution under some conditions on \(m_1,\ldots ,m_N\) and the nonlinear terms (1.7), where the conditions contain (1.1) with \(\theta =0\). Blow-up solutions also exist for systems of nonlinear Schrödinger equations. Ozawa and Sunagawa [22] gave examples of derivative nonlinearities which cause small data blow-up for a system of Schrödinger equations. There are also some known results for systems of nonlinear Schrödinger equations with no derivative nonlinearity [12,13,14].

The aim of the present paper is to improve the results in [15, 16] for conditional radial initial data in \({\mathbb R}^2\) and \({\mathbb R}^3\). The only radial solution to (1.1) is the trivial one, since the nonlinear terms of (1.1) are not in radial form. Therefore, we rewrite (1.1) into a radial form. Here, we focus on \(d=2\). Let \({\mathcal {S}}({\mathbb R}^2)\) denote the Schwartz class. If \(w=(w_1,w_2)\in ({\mathcal {S}}({\mathbb R}^2))^2\) satisfies

for any \(\xi =(\xi _1,\xi _2)\in {\mathbb R}^2\) and \(x=(x_1,x_2)\in {\mathbb R}^2\), then there exists a scalar potential \(W\in C^1({\mathbb R}^2)\) satisfying

and

Indeed, if we put

for some \(a_1\), \(a_2\in {\mathbb R}\), then W satisfies (1.9) by the first equality in (1.8). Furthermore, W also satisfies (1.10) by the second equality in (1.8). We note that the first equality in (1.8) is equivalent to

which is the irrotational condition.
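The link between (1.8), the irrotational condition, and the radial symmetry of the potential can be sketched as follows. The sketch assumes, consistently with the identities \(\xi _2\widehat{w_1}=\xi _1\widehat{w_2}\) and \(x_2f_1(x)-x_1f_2(x)=0\) used in Sect. 3, that (1.8) consists of these two equalities, and that the Fourier transform turns \(\partial _j\) into multiplication by \(i\xi _j\). The polar coordinates \((r,\phi )\) are notation introduced only for this illustration.

```latex
% First equality in (1.8): vanishing curl.
\[
{\mathcal F}_x\bigl[\partial_2 w_1-\partial_1 w_2\bigr](\xi)
 = i\bigl(\xi_2\widehat{w_1}(\xi)-\xi_1\widehat{w_2}(\xi)\bigr),
\qquad\text{so}\qquad
\xi_2\widehat{w_1}=\xi_1\widehat{w_2}
\iff \partial_2 w_1-\partial_1 w_2=0 .
\]
% Second equality in (1.8): with w = \nabla W and x = (r\cos\phi, r\sin\phi),
\[
\partial_\phi W
 = x_1\,\partial_2 W - x_2\,\partial_1 W
 = x_1 w_2(x) - x_2 w_1(x),
\qquad\text{so}\qquad
x_2 w_1(x)=x_1 w_2(x)
\ \Longrightarrow\ \partial_\phi W=0 .
\]
```

Under these assumptions, the first equality guarantees that a potential exists, and the second forces the potential to depend only on \(r=|x|\). This agrees with the roles the two conditions play in Proposition 3.2 and Remark 3.3.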

Remark 1.1

If \(d=3\), we can also obtain the radial scalar potential \(W\in C^1({\mathbb R}^3)\) of \(w=(w_1,w_2,w_3)\in ({\mathcal {S}}({\mathbb R}^3))^3\) by assuming the conditions

instead of (1.8).

Definition 1

We say \(f\in {\mathcal {S}}'({\mathbb R}^d)\) is radial if it holds that

for any \(\varphi \in {\mathcal {S}}({\mathbb R}^d)\) and rotation \(R:{\mathbb R}^d\rightarrow {\mathbb R}^d\).

Remark 1.2

If \(f\in L^1_{\mathrm{loc}}({\mathbb R}^d)\), then Definition 1 is equivalent to

Now, we consider the system of nonlinear Schrödinger equations:

instead of (1.1), where \(d=2\) or 3, and

The norm for an equivalence class \([f]\in {\widetilde{H}}^{s+1}({\mathbb R}^d)\) is defined by

which is well defined since \( {\widetilde{H}}^{s+1}({\mathbb R}^d)\) is a quotient space. System (1.12) is obtained by substituting \(w=\nabla W\) and \(w_0=\nabla W_0\) in (1.1).

Definition 2

We say \((u,v,[W])\in C([0,T];{\mathcal {H}}^s({\mathbb R}^d))\) is a solution to (1.12) if

hold for any \(t\in [0,T]\). This definition does not depend on how we choose a representative W.

Now, we give the main results in this paper.

Theorem 1.1

Assume \(\kappa \ne 0\).

(i) Let \(d=2\). Assume that \(s\ge \frac{1}{2}\) if \(\theta =0\) and \(s>0\) if \(\theta <0\). Then, (1.12) is locally well posed in \({\mathcal {H}}^s({\mathbb R}^2)\).

(ii) Let \(d=3\). Assume that \(\theta \le 0\) and \(s\ge \frac{1}{2}\). Then, (1.12) is locally well posed in \({\mathcal {H}}^s({\mathbb R}^3)\).

(iii) Let \(d=3\). Assume that \(\theta \le 0\) and \(s\ge \frac{1}{2}\). Then, (1.12) is globally well posed in \({\mathcal {H}}^s({\mathbb R}^3)\) for small data. Furthermore, the solution scatters in \({\mathcal {H}}^s({\mathbb R}^3)\).

Remark 1.3

The regularities \(s=0\) for \(d=2\) and \(s=\frac{1}{2}\) for \(d=3\) are the scaling critical regularities for (1.1).
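As a consistency check on these values, the following sketch assumes that the scaling has the form usually associated with a quadratic nonlinearity containing one derivative, namely \(A_{\lambda }(t,x)=\lambda ^{-1}A(\lambda ^{-2}t,\lambda ^{-1}x)\) for \(A\in \{u,v,w\}\) and \(\lambda >0\). This form, the symbol \(A\), and the homogeneous norm \({\dot{H}}^s\) are assumptions made for the illustration; the precise transformation is the one displayed in the Introduction.

```latex
% Change of variables \eta = \lambda\xi in the homogeneous Sobolev norm at t = 0.
\[
\|A_\lambda(0,\cdot)\|_{\dot H^{s}({\mathbb R}^d)}^2
 = \int_{{\mathbb R}^d}|\xi|^{2s}\,\lambda^{2d-2}\,
   \bigl|\widehat{A}(0,\lambda\xi)\bigr|^2\,d\xi
 = \lambda^{\,d-2-2s}\,\|A(0,\cdot)\|_{\dot H^{s}({\mathbb R}^d)}^2 .
\]
% The norm is invariant exactly when d - 2 - 2s = 0.
\[
s_c=\frac{d}{2}-1:
\qquad d=2\ \Rightarrow\ s_c=0,
\qquad d=3\ \Rightarrow\ s_c=\frac12 .
\]
```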

We obtain the following.

Theorem 1.2

Let \(d=2\) and \(\theta =0\). Then, the flow map of (1.12) is not \(C^2\) in \({\mathcal {H}}^s({\mathbb R}^2)\) for \(s<\frac{1}{2}\).

Remark 1.4

Theorem 1.2 says that the well-posedness in Theorem 1.1 for \(\theta =0\) is optimal as long as we use the iteration argument.

Remark 1.5

It is interesting that the result for 2D radial initial data is better than that for 1D initial data. Actually, the optimal regularity for 1D initial data is \(s= 1\) if \(\theta =0\), and \(s= \frac{1}{2}\) if \(\theta <0\) and \(\kappa \ne 0\), which are larger than the optimal regularity for 2D radial initial data. The reason is the following. We use the angular decomposition, and each angular localized term has a better property. For radial functions, the angular localized bound leads to an estimate for the original functions. (See (2.15).)

We note that if \(\nabla W_0=w_0\) holds and (u, v, [W]) is a solution to (1.12) with \((u,v,[W])|_{t=0}=(u_0,v_0,[W_0])\in {\mathcal {H}}^s({\mathbb R}^d)\), then \((u,v,\nabla W)\) is a solution to (1.1) with \((u,v,\nabla W)|_{t=0}=(u_0,v_0,w_0)\in (H^s_{\mathrm{rad}}({\mathbb R}^d))^d\times (H^s_{\mathrm{rad}}({\mathbb R}^d))^d\times H^s({\mathbb R}^d)\). The existence of a scalar potential \(W_0\in {\widetilde{H}}^{s+1}_{\mathrm{rad}}({\mathbb R}^d)\) will be proved for \(w_0\in {\mathcal {A}}^s({\mathbb R}^d)\) with \(s>\frac{1}{2}\) (see Proposition 3.2), where

Therefore, we obtain the following.

Theorem 1.3

Let \(d=2\) or 3. Assume that \(\theta = 0\) and \(s>\frac{1}{2}\). Then, (1.1) is locally well posed in \((H^s_{\mathrm{rad}}({\mathbb R}^d))^d\times (H^s_{\mathrm{rad}}({\mathbb R}^d))^d\times {\mathcal {A}}^s({\mathbb R}^d)\).

Remark 1.6

For \(d=3\), Theorem 1.1 can be obtained in almost the same way as in [15]. In Proposition 4.4 (i) of [15], the author used the Strichartz estimate

and

with an admissible pair \((q,r)=(3,\frac{6d}{3d-4})\) for \(d\ge 4\). But this trilinear estimate does not hold for \(d=3\). This is the reason why the well-posedness in \(H^{s_c}({\mathbb R}^3)\) could not be obtained in [15]. For the radial function \(u_0\in L^2({\mathbb R}^3)\), it is known that the improved Strichartz estimate ([24], Corollary 6.2)

It holds that

for \(N_1\sim N_2\sim N_3\ge 1\). Therefore, for \(d=3\), we can obtain the same estimate as in Proposition 4.4 (i). For this reason, we omit the details of the proof for \(d=3\) and consider only \(d=2\) in the following sections.
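The admissibility of the pair quoted in this remark can be verified directly. The check below assumes the usual admissibility relation \(\frac{2}{q}+\frac{d}{r}=\frac{d}{2}\) in dimension d, which for \(d=2\) reduces to the condition \(\frac{1}{p}+\frac{1}{q}=\frac{1}{2}\) used in Sect. 2.

```latex
\[
\frac{2}{q}+\frac{d}{r}
 = \frac{2}{3}+\frac{3d-4}{6}
 = \frac{4+3d-4}{6}
 = \frac{d}{2},
\qquad (q,r)=\Bigl(3,\frac{6d}{3d-4}\Bigr).
\]
% Example: d = 4 gives (q,r) = (3,3).
```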

Notation. We denote the spatial Fourier transform by \({\widehat{\cdot }}\) or \({\mathcal {F}}_{x}\), the Fourier transform in time by \({\mathcal {F}}_{t}\) and the Fourier transform in all variables by \({\widetilde{\cdot }}\) or \({\mathcal {F}}_{tx}\). For \(\sigma \in {\mathbb R}\), the free evolution \(e^{it\sigma \Delta }\) on \(L^{2}\) is given as a Fourier multiplier

$$\begin{aligned} {\mathcal {F}}_{x}[e^{it\sigma \Delta }f](\xi )=e^{-it\sigma |\xi |^{2}}{\widehat{f}}(\xi ). \end{aligned}$$

We will use \(A\lesssim B\) to denote an estimate of the form \(A \le CB\) for some constant C and write \(A \sim B\) to mean \(A \lesssim B\) and \(B \lesssim A\). We will use the convention that capital letters denote dyadic numbers, e.g. \(N=2^{n}\) for \(n\in {\mathbb N}_0:={\mathbb N}\cup \{0\}\), and for a dyadic summation, we write \(\sum _{N}a_{N}:=\sum _{n\in {\mathbb N}_0}a_{2^{n}}\) and \(\sum _{N\ge M}a_{N}:=\sum _{n\in {\mathbb N}_0, 2^{n}\ge M}a_{2^{n}}\) for brevity. Let \(\chi \in C^{\infty }_{0}((-2,2))\) be an even, non-negative function such that \(\chi (t)=1\) for \(|t|\le 1\). We define \(\psi (t):=\chi (t)-\chi (2t)\), \(\psi _1(t):=\chi (t)\), and \(\psi _{N}(t):=\psi (N^{-1}t)\) for \(N\ge 2\). Then, \(\sum _{N}\psi _{N}(t)=1\). We define frequency and modulation projections

Furthermore, we define \(Q_{\ge M}^{\sigma }:=\sum _{L\ge M}Q_{L}^{\sigma }\) and \(Q_{<M}:=Id -Q_{\ge M}\).
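The identity \(\sum _{N}\psi _{N}(t)=1\) follows from a telescoping sum. The computation below uses only the definitions \(\psi _1=\chi \) and \(\psi _N(t)=\chi (N^{-1}t)-\chi (2N^{-1}t)\) for \(N\ge 2\); the truncation index K is introduced only for this illustration.

```latex
% Partial sums over N = 1, 2, ..., 2^K telescope.
\[
\sum_{n=0}^{K}\psi_{2^n}(t)
 = \chi(t)+\sum_{n=1}^{K}\Bigl(\chi(2^{-n}t)-\chi(2^{-(n-1)}t)\Bigr)
 = \chi(2^{-K}t).
\]
% Since \chi = 1 on [-1,1], the partial sum equals 1 once 2^K \ge |t|.
\[
\chi(2^{-K}t)=1\ \ \text{for}\ |t|\le 2^{K},
\qquad\text{hence}\qquad
\sum_{N}\psi_N(t)=1\ \ \text{for every}\ t\in{\mathbb R}.
\]
% Support of each piece, from supp \chi \subset (-2,2) and \chi = 1 on [-1,1].
\[
\operatorname{supp}\psi_N\subset\Bigl\{\tfrac{N}{2}\le |t|\le 2N\Bigr\}
\qquad (N\ge 2).
\]
```

In particular, for each fixed t only finitely many terms of the dyadic sum are nonzero.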

The rest of this paper is organized as follows. In Sect. 2, we give the bilinear estimates which will be used to prove the well-posedness. In Sect. 3, we give the proofs of Theorems 1.1 and 1.3. In Sect. 4, we give the proof of Theorem 1.2.

2 Bilinear Estimates

In this section, we prove the bilinear estimates. First, we define the radial condition for functions of time and space.

Definition 3

We say \(u\in {\mathcal {S}}'({\mathbb R}_t\times {\mathbb R}_x^2)\) is radial with respect to x if it holds that

for any \(\varphi \in {\mathcal {S}}({\mathbb R}_t\times {\mathbb R}_x^2)\) and rotation \(R:{\mathbb R}^2\rightarrow {\mathbb R}^2\), where \(\varphi _R\in {\mathcal {S}}({\mathbb R}_t\times {\mathbb R}_x^2)\) is defined by \(\varphi _R(t,x)=\varphi (t,R(x))\).

Next, we define the Fourier restriction norm, which was introduced by Bourgain in [4].

Definition 4

Let \(s\in {\mathbb R}\), \(b\in {\mathbb R}\), \(\sigma \in {\mathbb R}\backslash \{0\}\).

(i) We define \(X^{s,b}_{\sigma }:=\{u\in {\mathcal {S}}'({\mathbb R}_t\times {\mathbb R}_x^2)|\ \Vert u\Vert _{X^{s,b}_{\sigma }}<\infty \}\), where

$$\begin{aligned} \begin{aligned} \Vert u\Vert _{X^{s,b}_{\sigma }}&:= \Vert \langle \xi \rangle ^s\langle \tau +\sigma |\xi |^2\rangle ^b{\widetilde{u}}(\tau ,\xi )\Vert _{L^2_{\tau \xi }} \sim \left( \sum _{N\ge 1} \sum _{L\ge 1}N^{2s}L^{2b}\Vert Q_{L}^{\sigma }P_{N}u\Vert _{L^2}^2\right) ^{\frac{1}{2}}. \end{aligned} \end{aligned}$$

(ii) We define \({\widetilde{X}}^{s+1,b}_{\sigma }:=\{u\in {\mathcal {S}}'({\mathbb R}_t\times {\mathbb R}_x^2)|\ \nabla u\in X^{s,b}_{\sigma }\}/ {\mathcal {N}}\) with the norm

$$\begin{aligned} \Vert [u]\Vert _{{\widetilde{X}}^{s+1,b}_{\sigma }}:=\Vert \nabla u\Vert _{X^{s,b}_{\sigma }}, \end{aligned}$$

where \({\mathcal {N}}:= \{u\in {\mathcal {S}}'({\mathbb R}_t\times {\mathbb R}_x^2)|\ \nabla u =0 \}\).

(iii) We define

$$\begin{aligned} \begin{aligned} X^{s,b}_{\sigma ,\mathrm{rad}}&:= \{u\in X^{s,b}_{\sigma }|\ u\ \mathrm{is\ radial\ with\ respect\ to}\ x\},\\ {\widetilde{X}}^{s+1,b}_{\sigma ,\mathrm{rad}}&:= \{[u]\in {\widetilde{X}}^{s+1,b}_{\sigma }|\ u\ \mathrm{is\ radial\ with\ respect\ to}\ x\}. \end{aligned} \end{aligned}$$

We put

We note that if \((\sigma _1,\sigma _2,\sigma _3)\in \{(\beta , \gamma , -\alpha ), (-\gamma , \alpha , -\beta ), (\alpha , -\beta , -\gamma )\}\), then it holds that \({\widetilde{\theta }}=\theta \) and \(|{\widetilde{\kappa }}|=|\kappa |\).
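That the three triples give the same value of \({\widetilde{\theta }}\) can be checked by direct expansion. The computation below uses the expression \({\widetilde{\theta }}=\sigma _1\sigma _2\sigma _3(\frac{1}{\sigma _1}+\frac{1}{\sigma _2}+\frac{1}{\sigma _3})=\sigma _1\sigma _2+\sigma _2\sigma _3+\sigma _3\sigma _1\) that appears in the proof of Lemma 2.8. It does not treat \({\widetilde{\kappa }}\).

```latex
\[
\begin{aligned}
(\sigma_1,\sigma_2,\sigma_3)=(\beta,\gamma,-\alpha):&\quad
 \widetilde\theta=\beta\gamma-\gamma\alpha-\alpha\beta,\\
(\sigma_1,\sigma_2,\sigma_3)=(-\gamma,\alpha,-\beta):&\quad
 \widetilde\theta=-\gamma\alpha-\alpha\beta+\beta\gamma,\\
(\sigma_1,\sigma_2,\sigma_3)=(\alpha,-\beta,-\gamma):&\quad
 \widetilde\theta=-\alpha\beta+\beta\gamma-\gamma\alpha .
\end{aligned}
\]
% Common value, written with the reciprocals of the coefficients:
\[
\beta\gamma-\alpha\gamma-\alpha\beta
 = \alpha\beta\gamma\Bigl(\frac1\alpha-\frac1\beta-\frac1\gamma\Bigr).
\]
```

By the statement above, this common value is \(\theta \), so the sign conditions on \(\theta \) in Theorem 1.1 translate directly into conditions on \({\widetilde{\theta }}\) in Proposition 2.1.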

The following bilinear estimate plays a central role in the proof of Theorem 1.1.

Proposition 2.1

Let \(\sigma _1\), \(\sigma _2\), \(\sigma _3\in {\mathbb R}\backslash \{0\}\) satisfy \({\widetilde{\kappa }}\ne 0\). Let \(s\ge \frac{1}{2}\) if \({\widetilde{\theta }}=0\) and \(s>0\) if \({\widetilde{\theta }}<0\). Then there exist \(b'\in (0,\frac{1}{2})\) and \(C>0\) such that

hold for any \(u\in X^{s,b'}_{\sigma _1,\mathrm{rad}}\), \(v\in X^{s,b'}_{\sigma _2,\mathrm{rad}}\), and \([U]\in {\widetilde{X}}^{s+1,b'}_{\sigma _1,\mathrm{rad}}\).

Remark 2.1

Since \(\Vert \partial _1(uv)\Vert _{X^{s,-b'}_{-\sigma _3}} +\Vert \partial _2(uv)\Vert _{X^{s,-b'}_{-\sigma _3}}\sim \Vert |\nabla |(uv)\Vert _{X^{s,-b'}_{-\sigma _3}}\), (2.1) implies

To prove Proposition 2.1, we first give the Strichartz estimate.

Proposition 2.2

(Strichartz estimate (cf. [11, 19])). Let \(\sigma \in {\mathbb R}\backslash \{0\}\) and (p, q) be an admissible pair of exponents for the 2D Schrödinger equation, i.e. \(p>2\), \(\frac{1}{p}+\frac{1}{q}=\frac{1}{2}\). Then, we have

for any \(\varphi \in L^{2}({\mathbb R}^{2})\).
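For orientation, the admissibility condition in Proposition 2.2 determines q from p. A few explicit pairs are listed below; the diagonal pair \(p=q=4\) is the one used together with Corollary 2.3 in the proof of Proposition 2.1.

```latex
\[
\frac1p+\frac1q=\frac12,\ \ p>2
\iff
q=\frac{2p}{p-2},\ \ p>2,
\qquad\text{for example}\qquad
(p,q)=(3,6),\quad (4,4),\quad (6,3).
\]
% The excluded value p = 2 would correspond to q = \infty.
```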

The Strichartz estimate implies the following. (See the proof of Lemma 2.3 in [10].)

Corollary 2.3

Let \(L\in 2^{{\mathbb N}_0}\), \(\sigma \in {\mathbb R}\backslash \{0\}\), and (p, q) be an admissible pair of exponents for the Schrödinger equation. Then, we have

for any \(u \in L^{2}({\mathbb R}\times {\mathbb R}^{2})\).

Next, we give the bilinear Strichartz estimate.

Proposition 2.4

We assume that \(\sigma _{1}\), \(\sigma _{2}\in {\mathbb R}\backslash \{0\}\) satisfy \(\sigma _1+\sigma _2\ne 0\). For any dyadic numbers \(N_1\), \(N_2\), \(N_3\in 2^{{\mathbb N}_0}\) and \(L_1\), \(L_2\in 2^{{\mathbb N}_0}\), we have

where \(N_{\min }= \min \nolimits _{1\le i\le 3}N_i\), \(N_{\max }= \max \nolimits _{1\le i\le 3}N_i\).

Proposition 2.4 can be obtained in the same way as Lemma 1 in [9]. (See also Lemma 3.1 in [15].)

Corollary 2.5

Let \(b'\in (\frac{1}{4},\frac{1}{2})\), and let \(\sigma _{1}\), \(\sigma _{2}\in {\mathbb R}\backslash \{0\}\) satisfy \(\sigma _1+\sigma _2\ne 0\). We put \(\delta =\frac{1}{2}-b'\). For any dyadic numbers \(N_1\), \(N_2\), \(N_3\in 2^{{\mathbb N}_0}\) and \(L_1\), \(L_2\in 2^{{\mathbb N}_0}\), we have

The proof is given in Corollary 2.5 in [16].

2.1 The Estimates for Low Modulation

In this subsection, we assume that \(L_{\text {max}} \ll N_{\max }^2\).

Lemma 2.6

We assume that \(\sigma _1\), \(\sigma _2\), \(\sigma _3 \in {\mathbb R}\setminus \{0 \}\) satisfy \({\widetilde{\kappa }}\ne 0\) and \((\tau _{1},\xi _{1})\), \((\tau _{2}, \xi _{2})\), \((\tau _{3}, \xi _{3})\in {\mathbb R}\times {\mathbb R}^{2}\) satisfy \(\tau _{1}+\tau _{2}+\tau _{3}=0\), \(\xi _{1}+\xi _{2}+\xi _{3}=0\). If \(\max \nolimits _{1\le j\le 3}|\tau _{j}+\sigma _{j}|\xi _{j}|^{2}| \ll \max \nolimits _{1\le j\le 3}|\xi _{j}|^{2}\), then we have

Since the above lemma is the contrapositive of the following lemma which was utilized in [15], we omit the proof.

Lemma 2.7

(Lemma 4.1 in [15]) We assume that \(\sigma _{1}\), \(\sigma _{2}\), \(\sigma _{3} \in {\mathbb R}\backslash \{0\}\) satisfy \({\widetilde{\kappa }}\ne 0\) and \((\tau _{1},\xi _{1})\), \((\tau _{2}, \xi _{2})\), \((\tau _{3}, \xi _{3})\in {\mathbb R}\times {\mathbb R}^{2}\) satisfy \(\tau _{1}+\tau _{2}+\tau _{3}=0\), \(\xi _{1}+\xi _{2}+\xi _{3}=0\). If there exist \(1\le i,j\le 3\) such that \(|\xi _{i}|\ll |\xi _{j}|\), then we have

Lemma 2.6 suggests that if \( \max \nolimits _{1\le j\le 3}|\tau _{j}+\sigma _{j}|\xi _{j}|^{2}| \ll \max \nolimits _{1\le j\le 3}|\xi _{j}|^{2}\) then we can assume

We first introduce the angular frequency localization operators which were utilized in [1].

Definition 5

[1]. We define the angular decomposition of \({\mathbb R}^2\) in frequency. We define a partition of unity in \({\mathbb R}\),

For a dyadic number \(A \ge 64\), we also define a partition of unity on the unit circle,

We observe that \(\omega _j^A\) is supported in

We now define the angular frequency localization operators \(R_j^A\),

For any function \(u: \, {\mathbb R}\, \times \, {\mathbb R}^2 \, \rightarrow {\mathbb C}\), \((t,x) \mapsto u(t,x)\), we set \((R_j^A u ) (t, x) = (R_j^Au( t, \cdot )) (x)\). This operator localizes a function in frequency to the set

Immediately, we can see

The next lemma will be used to obtain Proposition 2.1 for the case \({\widetilde{\theta }}=0\).

Lemma 2.8

Let \(N_1\), \(N_2\), \(N_3\), \(L_1\), \(L_2\), \(L_3\), \(A\in 2^{{\mathbb N}_0}\). We assume that \(\sigma _{1}\), \(\sigma _{2}\), \(\sigma _{3} \in {\mathbb R}\backslash \{0\}\) satisfy \({\widetilde{\theta }}=0\) and \((\tau _{1},\xi _{1})\), \((\tau _{2}, \xi _{2})\), \((\tau _{3}, \xi _{3})\in {\mathbb R}\times {\mathbb R}^{2}\) satisfy \(\tau _{1}+\tau _{2}+\tau _{3}=0\), \(\xi _{1}+\xi _{2}+\xi _{3}=0\), \(|\xi _i|\sim N_i\), \(|\tau _i+\sigma _i|\xi _i|^2|\sim L_i\), and \((\tau _i, \xi _i)\in {{\mathfrak {D}}}_{j_i}^A\) \((i=1,2,3)\) for some \(j_{1}\), \(j_{2}\), \(j_3\in \{0,1,\ldots ,A-1\}\). If \(N_1\sim N_2\sim N_3\), \(L_{\max }:=\max \nolimits _{1\le i\le 3}L_i\le N_{\max }^2 A^{-2}\), and \(A\gg 1\) hold, then we have \(\min \{|j_1-j_2|, |A-(j_{1}-j_{2})|\}\lesssim 1\), \(\min \{|j_2-j_3|, |A-(j_{2}-j_{3})|\}\lesssim 1\), and \(\min \{|j_1-j_3|, |A-(j_{1}-j_{3})|\}\lesssim 1\).

Proof

Because \(0={\widetilde{\theta }}=\sigma _1\sigma _2\sigma _3(\frac{1}{\sigma _1}+\frac{1}{\sigma _2}+\frac{1}{\sigma _3})=\sigma _1\sigma _2+\sigma _2\sigma _3+\sigma _3\sigma _1\), we have

We put \(p:=\mathrm{sgn}(\sigma _1+\sigma _3)=\mathrm{sgn}(\sigma _2+\sigma _3)\), \(q:=\mathrm{sgn}(\sigma _3)\). Let \(\angle (\xi _{1},\xi _{2})\in [0,\pi ]\) denote the smaller angle between \(\xi _{1}\) and \(\xi _{2}\). Since

we have

Therefore, we obtain

This implies

Therefore, we get \(\min \{|j_1-j_2|, |A-(j_{1}-j_{2})|\}\lesssim 1\). By the same argument, we also get \(\min \{|j_2-j_3|, |A-(j_{2}-j_{3})|\}\lesssim 1\) and \(\min \{|j_1-j_3|, |A-(j_{1}-j_{3})|\}\lesssim 1\). \(\square \)
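The equality of signs used to define p in the proof above can be checked directly from \({\widetilde{\theta }}=0\). The following short computation is offered as a reading aid and uses only the identity \(\sigma _1\sigma _2+\sigma _2\sigma _3+\sigma _3\sigma _1=0\) and \(\sigma _3\ne 0\).

```latex
\[
(\sigma_1+\sigma_3)(\sigma_2+\sigma_3)
 = \underbrace{\sigma_1\sigma_2+\sigma_2\sigma_3+\sigma_3\sigma_1}_{=\,\widetilde\theta\,=\,0}
   +\,\sigma_3^{2}
 = \sigma_3^{2} > 0 .
\]
% Hence \sigma_1+\sigma_3 and \sigma_2+\sigma_3 are both nonzero and share the same sign.
```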

Now we introduce the necessary bilinear estimates to obtain Proposition 2.1 for the case \({\widetilde{\theta }}<0\).

Theorem 2.1

(Theorem 2.8 in [16]) We assume that \(\sigma _{1}\), \(\sigma _{2}\), \(\sigma _{3} \in {\mathbb R}\backslash \{0\}\) satisfy \({\widetilde{\kappa }}\ne 0\) and \({\widetilde{\theta }}<0\). Let \(L_{\max }:= \max \nolimits _{1\le j\le 3} (L_1, L_2, L_3) \ll |{\widetilde{\theta }}| N_{\min }^2\), \(A \ge 64\), and \(|j_1 - j_2| \lesssim 1\). Then the following estimates hold:

Proposition 2.9

(Proposition 2.9 in [16]) We assume that \(\sigma _{1}\), \(\sigma _{2}\), \(\sigma _{3} \in {\mathbb R}\backslash \{0\}\) satisfy \({\widetilde{\kappa }}\ne 0\) and \({\widetilde{\theta }}<0\). Let \(L_{\text {max}} \ll |{\widetilde{\theta }}| N_{\min }^2\) and \( 64 \le A \le N_{\text {max}}\), \(16 \le |j_1 - j_2 |\le 32\). Then the following estimate holds:

2.2 Proof of Proposition 2.1

By the duality argument, we have

where we used \((Q_{L_3}^{-\sigma _3}f,{\overline{g}})_{L^2_{tx}}=(f,\overline{Q_{L_3}^{\sigma _3}g})_{L^2_{tx}}\). Since \(|\nabla |(uv)\) and \((\Delta U)v\) are radial with respect to x, we can assume w is also radial with respect to x. Therefore, to obtain (2.1), it suffices to show that

for the radial functions u, v, and w, where we put

and used \((Q_{L_3}^{-\sigma _3}f,{\overline{g}})_{L^2_{tx}}=(f,\overline{Q_{L_3}^{\sigma _3}g})_{L^2_{tx}}\). By Plancherel’s theorem, we have

We only consider the case \(N_1 \lesssim N_2 \sim N_3\), because the remaining cases \(N_2 \lesssim N_3 \sim N_1\) and \(N_3 \lesssim N_1 \sim N_2\) can be shown similarly. It suffices to show that

for some \(b'\in (0,\frac{1}{2})\), \(c\in (0,b')\), and \(\epsilon >0\). Indeed, from (2.13) and the Cauchy–Schwarz inequality, we obtain

We put \(L_{\max }:= \max \nolimits _{1\le j\le 3} (L_1, L_2, L_3)\).

Case 1: High modulation, \(\displaystyle L_{\max }\gtrsim N_{\max }^2\)

In this case, the radial condition is not needed. We assume \(L_1\gtrsim N_{\max }^2\sim N_2^2\). By the Cauchy–Schwarz inequality and (2.5), we have

where \(\delta := \frac{1}{2}-c\). Therefore, we obtain

Thus, it suffices to show that

Since \(\delta =\frac{1}{2}-c\), we have

Therefore, by choosing \(b'\) and c as \(\max \{\frac{3-s}{6},\frac{3}{8}\}<c<b'<\frac{1}{2}\) for \(s>0\), we get (2.14).

Case 2: Low modulation, \(\displaystyle L_{\max }\ll N_{\max }^2\)

By Lemma 2.6, we can assume \(N_1 \sim N_2 \sim N_3\) thanks to \(\displaystyle L_{\max }\ll N_{\max }^2\). We assume \(L_{\text {max}} = L_3\) for simplicity. The other cases can be treated similarly.

\(\circ \) The case \({\widetilde{\theta }}=0\)

Let \(A:= L_{\max }^{-\frac{1}{2}} N_{\max }\sim L_{3}^{-\frac{1}{2}} N_{1}\). We decompose \({\mathbb R}^3 \times {\mathbb R}^3\times {\mathbb R}^3\) as follows:

Since \(L_{\text {max}} \le N_{\max }^2 (L_{\max }^{-\frac{1}{2}} N_{\max })^{-2} = N_{\max }^2 A^{-2}\), by Lemma 2.8, we can write

with \(u_{N_1,L_1, j_1}:= R_{j_1}^A u_{N_1, L_1}\), \(v_{N_2,L_2, j_2}:= R_{j_2}^A v_{N_2, L_2}\) and \(w_{N_3,L_3, j_3}:= R_{j_3}^A w_{N_3, L_3}\), where

We note that \(\# J(j_1)\lesssim 1\). By using the Hölder inequality and Corollary 2.3 with \(p=q=4\), we get

Since u, v, and w are radial with respect to x, we have

Therefore, we obtain

This estimate gives the desired estimate (2.13) for \(s\ge \frac{1}{2}\) by choosing \(b'\) and c as \(\frac{5}{12}\le c<b'<\frac{1}{2}\).

\(\circ \) The case \({\widetilde{\theta }}<0\)

We decompose \({\mathbb R}^3 \times {\mathbb R}^3\) as follows:

We can write

For the former term, by using the Hölder inequality, Theorem 2.1, and (2.15), we get

For the latter term, by using Proposition 2.9, (2.15), and \(L_1L_2L_3\lesssim N_{1}^6\), we get

The above two estimates give the desired estimate (2.13) for \(s>0\) by choosing \(b'\) and c as \(\max \{\frac{3-s}{6},\frac{1}{3}\}< c<b'<\frac{1}{2}\). \(\square \)
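The three parameter windows used in the proof are nonempty exactly under the stated assumptions on s. The check below is elementary arithmetic on the bounds quoted in Case 1 and Case 2.

```latex
% Case 1 and Case 2 with \widetilde\theta < 0: the s-dependent lower bound.
\[
\frac{3-s}{6}<\frac12 \iff s>0,
\qquad
\frac38<\frac12,
\qquad
\frac13<\frac12 .
\]
% Case 2 with \widetilde\theta = 0: the lower bound is independent of s.
\[
\frac{5}{12}<\frac12 .
\]
% Therefore each of
%   \max\{(3-s)/6, 3/8\} < c < b' < 1/2,
%   5/12 \le c < b' < 1/2,
%   \max\{(3-s)/6, 1/3\} < c < b' < 1/2
% admits a choice of c and b' with c \in (0,b') and b' \in (0,1/2) whenever s > 0.
```

As s tends to 0 the bound \(\frac{3-s}{6}\) tends to \(\frac{1}{2}\), so the admissible window for \(c<b'\) shrinks, which is consistent with the strict inequality \(s>0\) in the case \({\widetilde{\theta }}<0\).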

3 Proof of the Well-Posedness

In this section, we prove Theorems 1.1 and 1.3. For a Banach space H and \(r>0\), we define \(B_r(H):=\{ f\in H \,|\, \Vert f\Vert _H \le r \}\). Furthermore, we define \({\mathcal {X}}^{s,b}_{T}\) as

where \(X^{s,b}_{\alpha ,\mathrm{rad},T}\) and \(X^{s,b}_{\beta ,\mathrm{rad},T}\) are the time localized spaces defined by

with the norm

Also, \({\widetilde{X}}^{s+1,b}_{\gamma ,\mathrm{rad},T}\) is defined in the same way. Now, we restate Theorem 1.1 for \(d=2\) more precisely.

Theorem 3.1

Let \(s\ge \frac{1}{2}\) if \(\theta =0\) and \(s>0\) if \(\theta <0\). For any \(r>0\) and for all initial data \((u_{0}, v_{0}, [W_{0}])\in B_r({\mathcal {H}}^s({\mathbb R}^2))\), there exist \(T=T(r)>0\) and a solution \((u,v,[W])\in {\mathcal {X}}^{s,b}_T\) to system (1.12) on [0, T] for suitable \(b>\frac{1}{2}\). Such a solution is unique in \(B_R({\mathcal {X}}^s_T)\) for some \(R>0\). Moreover, the flow map

is Lipschitz continuous.

Remark 3.1

Since \(X^{s,b}_T\hookrightarrow C([0,T];H^s({\mathbb R}^2))\) holds for \(b>\frac{1}{2}\), we have \({\mathcal {X}}^{s,b}_T\hookrightarrow C([0,T];{\mathcal {H}}^s({\mathbb R}^2))\).

To prove Theorem 3.1, we give the linear estimate.

Proposition 3.1

Let \(s\in {\mathbb R}\), \(\sigma \in {\mathbb R}\backslash \{0\}\), \(b\in (\frac{1}{2},1]\), \(b'\in [0,1-b]\) and \(0<T\le 1\).

(1) There exists \(C_1>0\) such that for any \(\varphi \in H^s({\mathbb R}^2)\), we have

$$\begin{aligned} \Vert e^{it\sigma \Delta }\varphi \Vert _{X^{s,b}_{\sigma ,T}} \le C_1\Vert \varphi \Vert _{H^s}. \end{aligned}$$

(2) There exists \(C_2>0\) such that for any \(F \in X^{s,-b'}_{\sigma ,T}\), we have

$$\begin{aligned} \left\| \int _{0}^{t}e^{i(t-t')\sigma \Delta }F(t')\hbox {d}t'\right\| _{X^{s,b}_{\sigma ,T}} \le C_2T^{1-b'-b}\Vert F\Vert _{X^{s,-b'}_{\sigma ,T}}. \end{aligned}$$

(3) There exists \(C_3>0\) such that for any \(u \in X^{s,b}_{\sigma ,T}\), we have

$$\begin{aligned} \Vert u\Vert _{X^{s,b'}_{\sigma , T}}\le C_3T^{b-b'}\Vert u\Vert _{X^{s,b}_{\sigma , T}}. \end{aligned}$$

For the proof of Proposition 3.1, see Lemmas 2.1 and 3.1 in [10].

We define the map \(\Phi (u,v,[W])=(\Phi _{\alpha , u_{0}}^{(1)}([W], v), \Phi _{\beta , v_{0}}^{(1)}([{\overline{W}}], u), [\Phi _{\gamma , [W_{0}]}^{(2)}(u, {\overline{v}})])\) as

To prove the existence of the solution of (1.12), we prove that \(\Phi \) is a contraction map on \(B_R({\mathcal {X}}^s_T)\) for some \(R>0\) and \(T>0\). For a vector-valued function \(f=(f_1,f_2)\), \(\Vert f\Vert _{H^s}\) and \(\Vert f\Vert _{X^{s,b}_T}\) denote \(\Vert f_1\Vert _{H^s}+\Vert f_2\Vert _{H^s}\) and \(\Vert f_1\Vert _{X^{s,b}_T}+\Vert f_2\Vert _{X^{s,b}_T}\), respectively.

Proof of Theorem 3.1

We choose \(b>\frac{1}{2}\) as \(b=1-b'\), where \(b'\) is as in Proposition 2.1. Let \((u_{0}\), \(v_{0}\), \([W_{0}])\in B_{r}({\mathcal {H}}^s({\mathbb R}^2))\) be given. By Proposition 2.1 with \((\sigma _1,\sigma _2,\sigma _3)\in \{(\beta , \gamma , -\alpha ), (-\gamma , \alpha , -\beta ), (\alpha , -\beta , -\gamma )\}\) and Proposition 3.1 with \(\sigma \in \{\alpha , \beta , \gamma \}\), there exist constants \(C_1\), \(C_2\), \(C_3>0\) such that for any \((u,v,[W])\in B_R({\mathcal {X}}^s_T)\), we have

Similarly,

Therefore, if we choose \(R>0\) and \(T>0\) as

then \(\Phi \) is a contraction map on \(B_R({\mathcal {X}}^s_T)\). This implies the existence of the solution of system (1.12) and the uniqueness in the ball \(B_R({\mathcal {X}}^s_T)\). The Lipschitz continuity of the flow map is also proved by a similar argument. \(\square \)

Next, to prove Theorem 1.3, we justify the existence of a scalar potential of \(w\in (H^s({\mathbb R}^2))^2\). Let \({\mathcal {F}}_1\) and \({\mathcal {F}}_2\) denote the Fourier transform with respect to the first component and the second component, respectively. We note that \({\mathcal {F}}_1^{-1}{\mathcal {F}}_2^{-1}={\mathcal {F}}_2^{-1}{\mathcal {F}}_1^{-1}={\mathcal {F}}_x^{-1}\) (and also \({\mathcal {F}}_1{\mathcal {F}}_2={\mathcal {F}}_2{\mathcal {F}}_1={\mathcal {F}}_x\)) holds on \(L^2({\mathbb R}^2)\).

Proposition 3.2

Let \(s>\frac{1}{2}\) and \(w=(w_1,w_2)\in (H^s({\mathbb R}^2))^2\). If \(w_1\) and \(w_2\) satisfy

then there exists \(W\in L^1_{\mathrm{loc}}({\mathbb R}^2)\)\((\subset {\mathcal {S}}'({\mathbb R}^2))\) such that

To obtain Proposition 3.2, we use the next lemma.

Lemma 3.3

Let \(s>\frac{1}{2}\). If \(f\in H^s({\mathbb R}^2)\), then it holds that

Proof

By the Cauchy–Schwarz inequality and Plancherel’s theorem, we have

for \(s>\frac{1}{2}\). Therefore, we obtain

Similarly, we have

\(\square \)

Proof of Proposition 3.2

We put

for some \(a_1\), \(a_2\in {\mathbb R}\). By \(w \in L^2({\mathbb R}^2)\), we have \(W\in L^1_{\mathrm{loc}}({\mathbb R}^2)\). Hence, it remains to show that \(\nabla W=w\). Since

hold for almost all \(x=(x_1,x_2)\in {\mathbb R}^2\), it suffices to show

Let \(h\in {\mathbb R}\). Since \({\mathcal {F}}_1[w_1](\cdot ,x_2)\in L^1({\mathbb R})\) a.e. \(x_2\in {\mathbb R}\) by Lemma 3.3, we have

by Fubini’s theorem. We put \({\mathcal {F}}_{12}^{-1}:={\mathcal {F}}_1^{-1}{\mathcal {F}}_2^{-1}\), \({\mathcal {F}}_{21}^{-1}:={\mathcal {F}}_2^{-1}{\mathcal {F}}_1^{-1}\). By using \(\xi _2\widehat{w_1}=\xi _1\widehat{w_2}\) and \({\mathcal {F}}_{12}^{-1}={\mathcal {F}}_{21}^{-1}\), we have

Since \({\mathcal {F}}_2[w_2](x_1,\cdot )\in L^1({\mathbb R})\) a.e. \(x_1\in {\mathbb R}\) by Lemma 3.3, we have

by Lebesgue’s dominated convergence theorem. Therefore, we obtain (3.1). \(\square \)

Remark 3.2

In the proof of Proposition 3.2, we also used

This implies

and

Remark 3.3

If \(w=(w_1,w_2)\in (H^s({\mathbb R}^2))^2\) for \(s>\frac{1}{2}\) satisfies

additionally in Proposition 3.2, then \(W\in L^1_{\mathrm{loc}}({\mathbb R}^2)\) given in the proof of Proposition 3.2 is radial. Indeed, this condition with \(\nabla W(x)=w(x)\) yields (1.10).

Remark 3.4

For \(s\le \frac{1}{2}\), we do not know whether there exists a scalar potential of \(w\in (H^s({\mathbb R}^2))^2\) or not. But we point out that if \(s<\frac{1}{2}\), then the 1D delta function appears in \(\partial _2w_1-\partial _1w_2\) for some \(w\in (H^s({\mathbb R}^2))^2\). In that case, the irrotational condition does not make sense pointwise.

Next, we prove that \({\mathcal {A}}^s({\mathbb R}^2)\) is a Banach space.

Proposition 3.4

For \(s\ge 0\), \({\mathcal {A}}^s({\mathbb R}^2)\) is a closed subspace of \((H^s({\mathbb R}^2))^2\).

Proof

Let \(f^{(n)}=(f_1^{(n)}, f_2^{(n)})\in {\mathcal {A}}^s({\mathbb R}^2)\) \((n=1,2,3,\ldots )\) and \(f=(f_1,f_2)\in (H^s({\mathbb R}^2))^2\). Assume that \(f^{(n)}\) converges to f in \((H^s({\mathbb R}^2))^2\) as \(n\rightarrow \infty \). We prove \(f\in {\mathcal {A}}^s({\mathbb R}^2)\); namely, f satisfies (1.8). By the triangle inequality, we have

Since \(f^{(n)}\) satisfies (1.8) and \(f^{(n)}\rightarrow f\) in \((L^2({\mathbb R}^2))^2\) as \(n\rightarrow \infty \), we obtain

Therefore, we get

This implies \(x_2f_1(x)-x_1f_2(x)=0\) a.e. \(x\in {\mathbb R}^2\). Similarly, we obtain \(\xi _2\widehat{f_1}(\xi )-\xi _1\widehat{f_2}(\xi )=0\) a.e. \(\xi \in {\mathbb R}^2\). \(\square \)

Proof of Theorem 1.3

Let \((u_0,v_0,w_0)\in B_r((H_{\mathrm{rad}}^s({\mathbb R}^2))^2\times (H_{\mathrm{rad}}^s({\mathbb R}^2))^2 \times {\mathcal {A}}^s({\mathbb R}^2))\) be given. We first prove the existence of a solution to (1.1). Since \(w_0\) satisfies (1.8), by Proposition 3.2, there exists \([W_0]\in {\widetilde{H}}^{s+1}_{\mathrm{rad}}\) such that \(\nabla W_0=w_0\). From Theorem 1.1, there exist \(T>0\) and a solution \((u,v,[W])\in {\mathcal {X}}^{s}_T\) to (1.12) with \((u,v,[W])|_{t=0}=(u_0,v_0,[W_0])\). Since

the existence time T is determined by r. We put \(w=\nabla W\). Then, \(w\in X^{s,b}_{\gamma , T}\) and it satisfies

where R is as in the proof of Theorem 1.1, and (u, v, w) satisfies (1.1) since \(\Delta W=\nabla \cdot w\). Furthermore, we have

and

because W is radial with respect to x. Therefore, \(w(t)\in {\mathcal {A}}^s({\mathbb R}^2)\) for any \(t\in [0,T]\).
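
The role of radial symmetry here can be illustrated by the following formal computation. It is a sketch under stated assumptions: the profile \(\rho \) is a name introduced only for this illustration, W is treated as differentiable in the radial variable, and the Fourier convention \(\widehat{\partial _j g}(\xi )=i\xi _j\widehat{g}(\xi )\) is assumed.

```latex
% Assumptions: W(t,x) = \rho(t,|x|) with \rho differentiable in r (hypothetical profile),
% and \widehat{\partial_j g}(\xi) = i\xi_j \widehat{g}(\xi).
\[
  w = \nabla W = \partial_r\rho(t,|x|)\,\frac{x}{|x|}
  \;\Longrightarrow\;
  x_2 w_1 - x_1 w_2
  = \partial_r\rho(t,|x|)\,\frac{x_2x_1 - x_1x_2}{|x|} = 0 ,
\]
\[
  \widehat{w_j} = i\xi_j\widehat{W}
  \;\Longrightarrow\;
  \xi_2\widehat{w_1} - \xi_1\widehat{w_2}
  = i\,(\xi_2\xi_1 - \xi_1\xi_2)\,\widehat{W} = 0 .
\]
```

Note that the second relation only uses \(w=\nabla W\), whereas the first one is where the radial symmetry of W enters.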

Next, we prove the uniqueness of the solution in \(B_R({\mathcal {Y}}^{s,b}_T)\), where

Let \((u^{(1)},v^{(1)},w^{(1)})\), \((u^{(2)},v^{(2)},w^{(2)})\in B_R({\mathcal {Y}}^{s,b}_T)\) be solutions to (1.1) with initial data \((u_0,v_0,w_0)\). Then by Proposition 3.2, there exist \([W^{(1)}]\), \([W^{(2)}]\in {\widetilde{X}}^{s+1,b}_{\gamma ,\mathrm{rad},T}\) such that \(w^{(1)}=\nabla W^{(1)}\), \(w^{(2)}=\nabla W^{(2)}\). By substituting \(w^{(j)}=\nabla W^{(j)}\) in both sides of the integral form of (1.1), \((u^{(j)},v^{(j)},W^{(j)})\) \((j=1,2)\) satisfy

Therefore, by the same argument as in the proof of Theorem 1.1, we have

since \(w^{(1)}-w^{(2)}=\nabla (W^{(1)}-W^{(2)})\). This implies

The continuous dependence on initial data can be obtained by a similar argument. \(\square \)

4 The Lack of the Twice Differentiability of the Flow Map

The following proposition implies Theorem 1.2.

Proposition 4.1

Let \(d=2\) and \(0<T\ll 1\). Assume \(\theta =0\) and \(s<\frac{1}{2}\). For every \(C>0\), there exist f, \(g\in H_{\mathrm{rad}}^{s}({\mathbb R}^{2})\) such that

Proof

Let \(N\gg 1\) and \(p:=\frac{\gamma }{\alpha -\gamma }\)\((\ne 0)\). We note that p is well defined since \(\theta =0\) implies \(\kappa \ne 0\) for \(\alpha \), \(\beta \), \(\gamma \in {\mathbb R}\backslash \{0\}\). For simplicity, we assume \(p>0\). Put

We define the functions f and g as

Clearly, we have \(\Vert f\Vert _{H^s}\sim \Vert g\Vert _{H^s}\sim 1\) and f, g are radial. For \(\xi =(\xi _1,\xi _2)\in {\mathbb R}^2\) and \(\eta =(\eta _1,\eta _2)\in {\mathbb R}^2\), we define

because \(\theta =0\) implies \(\frac{\beta +\gamma }{\alpha -\gamma }=-\left( \frac{\gamma }{\alpha -\gamma }\right) ^2\). We will show

We calculate that

Let \(R:{\mathbb R}^2\rightarrow {\mathbb R}^2\) be a rotation operator. Since \(\Phi (\xi ,\eta )=\Phi (R\xi ,R\eta )\) and \(\varvec{1}_D\), \(\varvec{1}_{D_1}\), \(\varvec{1}_{D_2}\) are radial, we can see

It implies that F is radial. Therefore, there exists \(G:{\mathbb R}\rightarrow {\mathbb R}\) such that \(F(\xi )=G(|\xi |)\). We note that

since \(\mathrm{supp}G\subset [(1+p^{-1})N+1, (1+p^{-1})N+1+2^{-10}]\). Hence, it suffices to show that

for any \(c\in [0,2^{-10}]\) and some \(0\le t\le T\), where \(\xi _c:=(\xi _{c1},0)\in {\mathbb R}^2\), \(\xi _{c1}:=(1+p^{-1})N+1+c\). Simple calculation gives

We also observe that

Let \(\epsilon >0\) be small. We define a new set E as

and we decompose E into four sets:

We can easily show that \(E_i\cap E_j=\emptyset \) if \(i\ne j\). Furthermore, we can obtain \(E_1\subset E\) and

for any \(\eta \in E_1\). We observe that

We first consider \(I_1\). Let

Obviously, we have \(c'\sim c''\sim N^{-1+2\epsilon }\). We calculate that

where \(\tau :=|\alpha -\gamma |t\) and \(q(\eta _1):=\left( (1+p)(\eta _1-N)-p(1+c)\right) ^2\). Therefore, if we obtain

then we get \(I_1\gtrsim t^{\frac{1}{2}}\). Let \(t>0\) be small. We fix \(\eta _1\in [N+c'+c'',N+1-c'']\) and write \(q(\eta _1)=q\) for simplicity. Clearly, we have \(0\le q\lesssim 1\). We easily verify that if we restrict \(\eta _2\) as \(0\le \eta _2\le \sqrt{\pi \tau ^{-1}-q}\), then we have \(\sin (\tau (q+\eta _2^2))\ge 0\) and \(\frac{\sin (\tau (q+\eta _2^2))}{q+\eta _2^2}\) is monotone decreasing. Similarly, if \(\sqrt{\pi \tau ^{-1}-q}\le \eta _2\le \sqrt{2\pi \tau ^{-1}-q}\), then we see \(\sin (\tau (q+\eta _2^2))\le 0\). We calculate

The last estimate is verified by the smallness of \(\tau =|\alpha -\gamma |t\). We also see

for any \(n\in {\mathbb N}\). Therefore, we obtain (4.4).
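
The sign and monotonicity claims used in the estimate of \(I_1\) can be checked directly. The following is a short verification sketch; the abbreviation \(x:=q+\eta _2^2\) is introduced here only for convenience.

```latex
% Notation (introduced for this check only): x := q + \eta_2^2, which is increasing in \eta_2 \ge 0.
% Sign of the sine factor on the two ranges of \eta_2:
\[
  0\le \eta_2\le \sqrt{\pi\tau^{-1}-q}
  \;\Longrightarrow\; \tau q \le \tau x \le \pi
  \;\Longrightarrow\; \sin(\tau x)\ge 0 ,
\]
\[
  \sqrt{\pi\tau^{-1}-q}\le \eta_2\le \sqrt{2\pi\tau^{-1}-q}
  \;\Longrightarrow\; \pi \le \tau x \le 2\pi
  \;\Longrightarrow\; \sin(\tau x)\le 0 .
\]
% Monotonicity on the first range: with y := \tau x \in (0,\pi],
\[
  \frac{d}{dx}\,\frac{\sin(\tau x)}{x}
  = \frac{y\cos y - \sin y}{x^{2}} \le 0 ,
  \qquad\text{since}\quad
  \frac{d}{dy}\bigl(y\cos y - \sin y\bigr) = -\,y\sin y \le 0
  \ \text{ on } [0,\pi]
\]
% and y\cos y - \sin y vanishes at y = 0. Composing with the increasing map
% \eta_2 \mapsto x shows that the quotient is decreasing in \eta_2.
```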

Next, we consider \(I_2\), \(I_3\), and \(I_4\). Since \(|E_2|\), \(|E_3|\lesssim N^{-1+3\epsilon }\), we easily observe that

For \(I_4\), we observe that

By the above argument, we obtain

If we choose \(N\gg 1\) satisfying \(N^{-\epsilon }\ll T\), then for any \(t\in [0,T]\) with \(N^{-\epsilon }\ll t\), we have (4.2). \(\square \)
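
The algebraic identity relating \(\theta =0\) to p, used at the beginning of the proof, can be verified as follows. This is a sketch that assumes the definition \(\theta =\alpha \beta \gamma \left( \frac{1}{\alpha }-\frac{1}{\beta }-\frac{1}{\gamma }\right) \); the display defining \(\theta \) is not reproduced in this text, so this form is an assumption.

```latex
% Assumption: \theta = \alpha\beta\gamma(1/\alpha - 1/\beta - 1/\gamma)
%                    = \beta\gamma - \alpha\gamma - \alpha\beta .
\[
  (\beta+\gamma)(\alpha-\gamma) + \gamma^{2}
  = \alpha\beta + \alpha\gamma - \beta\gamma
  = -\theta ,
\]
\[
  \frac{\beta+\gamma}{\alpha-\gamma}
  + \Bigl(\frac{\gamma}{\alpha-\gamma}\Bigr)^{2}
  = -\frac{\theta}{(\alpha-\gamma)^{2}}
  \qquad (\alpha\ne\gamma).
\]
% Hence \theta = 0 gives (\beta+\gamma)/(\alpha-\gamma) = -p^2 with p = \gamma/(\alpha-\gamma).
% Moreover, if \alpha = \gamma then \theta = -\gamma^2 \ne 0, so \theta = 0 forces
% \alpha \ne \gamma and p is well defined.
```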

References

Bejenaru, I., Herr, S., Holmer, J., Tataru, D.: On the 2D Zakharov system with \(L^2\) Schrödinger data. Nonlinearity 22, 1063–1089 (2009)

Bejenaru, I., Herr, S., Tataru, D.: A convolution estimate for two-dimensional hypersurfaces. Rev. Mat. Iberoam. 26, 707–728 (2010)

Bennett, J., Carbery, A., Wright, J.: A non-linear generalisation of the Loomis–Whitney inequality and applications. Math. Res. Lett. 12, 443–457 (2005)

Bourgain, J.: Fourier transform restriction phenomena for certain lattice subsets and applications to nonlinear evolution equations I, II. Geom. Funct. Anal. 3, 107–156, 209–262 (1993)

Christ, M.: Illposedness of a Schrödinger equation with derivative nonlinearity, preprint http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.70.1363

Colin, M., Colin, T.: On a quasilinear Zakharov system describing laser-plasma interactions. Differ. Integral Equ. 17, 297–330 (2004)

Colin, M., Colin, T.: A numerical model for the Raman amplification for laser-plasma interaction. J. Comput. Appl. Math. 193, 535–562 (2006)

Colin, M., Colin, T., Ohta, M.: Stability of solitary waves for a system of nonlinear Schrödinger equations with three wave interaction. Ann. Inst. Henri Poincaré Anal. Non linéaire 6, 2211–2226 (2009)

Colliander, J., Delort, J., Kenig, C., Staffilani, G.: Bilinear estimates and applications to 2D NLS. Trans. Am. Math. Soc. 353(8), 3307–3325 (2001)

Ginibre, J., Tsutsumi, Y., Velo, G.: On the Cauchy problem for the Zakharov system. J. Funct. Anal. 151, 384–436 (1997)

Ginibre, J., Velo, G.: The global Cauchy problem for the non linear Schrödinger equation revisited. Ann. Inst. Henri Poincaré Anal. Non linéaire 2(4), 309–327 (1985)

Hayashi, N., Li, C., Naumkin, P.: On a system of nonlinear Schrödinger equations in 2d. Differ. Integral Equ. 24, 417–434 (2011)

Hayashi, N., Li, C., Ozawa, T.: Small data scattering for a system of nonlinear Schrödinger equations. Differ. Equ. Appl. 3, 415–426 (2011)

Hayashi, N., Ozawa, T., Tanaka, K.: On a system of nonlinear Schrödinger equations with quadratic interaction. Ann. Inst. Henri Poincaré 30, 661–690 (2013)

Hirayama, H.: Well-posedness and scattering for a system of quadratic derivative nonlinear Schrödinger equations with low regularity initial data. Commun. Pure Appl. Anal. 13, 1563–1591 (2014)

Hirayama, H., Kinoshita, S.: Sharp bilinear estimates and its application to a system of quadratic derivative nonlinear Schrödinger equations. Nonlinear Anal. 178, 205–226 (2019)

Ikeda, M., Katayama, S., Sunagawa, H.: Null structure in a system of quadratic derivative nonlinear Schrödinger equations. Ann. Inst. Henri Poincaré 16, 535–567 (2015)

Ikeda, M., Kishimoto, N., Okamoto, M.: Well-posedness for a quadratic derivative nonlinear Schrödinger system at the critical regularity. J. Funct. Anal. 271, 747–798 (2016)

Keel, M., Tao, T.: Endpoint Strichartz estimates. Am. J. Math. 120(5), 955–980 (1998)

Loomis, L., Whitney, H.: An inequality related to the isoperimetric inequality. Bull. Am. Math. Soc. 55, 961–962 (1949)

Mizohata, S.: On the Cauchy Problem. Notes and Reports in Mathematics in Science and Engineering. Science Press & Academic Press, Cambridge (1985)

Ozawa, T., Sunagawa, H.: Small data blow-up for a system of nonlinear Schrödinger equations. J. Math. Anal. Appl. 399, 147–155 (2013)

Sakoda, D., Sunagawa, H.: Small data global existence for a class of quadratic derivative nonlinear Schrödinger systems in two space dimensions. J. Differ. Equ. 268, 1722–1749 (2020)

Shao, S.: Sharp linear and bilinear restriction estimates for paraboloids in the cylindrically symmetric case. Rev. Mat. Iberoam. 25, 1127–1168 (2009)

Acknowledgements

This work was financially supported by JSPS KAKENHI Grant Numbers JP16K17624, JP17K14220, and JP20K14342, Program to Disseminate Tenure Tracking System from the Ministry of Education, Culture, Sports, Science and Technology, and the DFG through the CRC 1283 “Taming uncertainty and profiting from randomness and low regularity in analysis, stochastics and their applications”.

Additional information

Communicated by Nader Masmoudi.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hirayama, H., Kinoshita, S. & Okamoto, M. Well-Posedness for a System of Quadratic Derivative Nonlinear Schrödinger Equations with Radial Initial Data. Ann. Henri Poincaré 21, 2611–2636 (2020). https://doi.org/10.1007/s00023-020-00931-3

DOI: https://doi.org/10.1007/s00023-020-00931-3