Abstract

Human Leukocyte Antigen class II (HLA II) proteins are crucial for the activation of adaptive immune response. In HLA class II molecules, high rate of polymorphisms has been observed. Hence, the accurate prediction of HLA II-peptide interactions is a challenging task that can both improve the understanding of immunological processes and facilitate decision-making in vaccine design. In this regard, during the last decade various computational tools have been developed, which were mainly focused on the binding activity prediction of different HLA II isotypes (such as DP, DQ and DR) separately. This fact motivated us to make a humble contribution towards the prediction of isotypes binding propensity as a multiclass classification task. In this regard, we have analysed a binding affinity dataset, which contains the interactions of 27 HLA II proteins with 636 variable length peptides, in order to prepare new multiclass datasets for strong and weak binding peptides. Thereafter, a new ensemble based multiclass classifier, called Meta EnsembleR (MaER) is proposed to predict the activity of weak/unknown binding peptides, by integrating the results of various heterogeneous classifiers. It pre-processes the training and testing datasets by making feature subsets, bootstrap samples and creates diverse datasets using principle component analysis, which are then used to train and test the MaER. The performance of MaER with respect to other existing state-of-the-art classifiers, has been estimated using validity measures, ROC curves and gain value analysis. Finally, a statistical test called Friedman test has been conducted to judge the statistical significance of the results produced by MaER.

G. Mazzocco, S. S. Bhowmick, and I. Saha—Joint first authors and contributed equally

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

T-cells [1] are specialized immune cells, playing a crucial role in activation of the adaptive immune system. Once the HLA II proteins have established a stable binding with the exogenous peptide antigens (T-cell epitopes), they are transported on the extracellular domain of the antigen-presenting cell (APCs). The T-cell receptors (TCRs) located on the surface of the T-cells, interacts with the HLA II-peptide constituting trimeric complexes responsible for the activation of the T-cell CD4+. HLA II proteins are encoded in three different genetic loci: HLA-DP, HLA-DQ and HLA-DR (also called isotypes) and are constituted of two separate protein chains: \(\alpha \) and \(\beta \) [2]. They contain an open-ended binding cleft which allows the peptides to accommodate using multiple binding frames [3]. Thus the complexity in binding prediction problem is significantly increased.

In this regard, different computational techniques have been developed to predict HLA class II binding. Among them sequence-based and structure-based approaches are the most popular. Sequence-based methods include matrix models [4], binding motif recognition [5], artificial neural network [6], quantitative matrices [7], hidden markov models [8], support vector machines [9] and QSAR [10] based methods. Structure-based methods involve threading algorithms [11], peptide docking [12] and molecular dynamics [13]. Sophisticated methods, such as an iterative meta-search algorithm [14] and ant colony search [15] have been developed to resolve the dynamic variable length problem of HLA class II proteins prediction. Apart from this, some of the recent approaches has also significantly outperformed more traditional methods [16–18].

In this article, sequence-based information is used to predict the HLA II isotype binding propensity of peptides. For this purpose, new multiclass training and testing datasets of HLA II proteins are prepared from raw binding affinity interaction dataset of 27 HLA II proteins and 636 peptides. Thereafter, an ensemble based multiclass classifier, called Meta EnsembleR (MaER) is developed for the classification of peptides into different HLA II isotypes. The development of an ensemble based approach is basically motivated by the improving performance of similar other methods in bioinformatics [18–23]. The MaER classifier is build on the top of widely used four heterogeneous multiclass classifiers like Support Vector Machine (SVM) [24], Decision Tree (DT) [25], Naive Bayes (NB) [26] and \(K\)-Nearest Neighbor (K-NN) [27]. Before the classification task, MaER splits the training dataset into different number of rotational non-overlapping subsets. Subsequently, bootstrap sampling and principal component analysis are used for each subset. All the major principal components (in terms of coefficient and depending on eigenvalues) for all subsets are retained to create an informative set that preserve the diversity of the original training data. After that, such informative set is multiplied with original training and testing datasets before being classified. In MaER, finally a consensus of ensemble results is produced and for this purpose, selection of classifier is done randomly for different values of ensemble size. The performance of the MaER is reported in comparison with the individual classifies like SVM, DT, NB and \(K\)-NN in terms of average accuracy, precision or positive predictive value (PPV), recall, F-measure, Matthews correlation coefficient (MCC) and area under the ROC curve (AUC) values. Finally, Friedman test [28] has been conducted to judge the statistical significance of the results produced by MaER.

2 Materials and Methods

2.1 Preparation of Dataset

The dataset contains IC50 binding-affinity values originally measured between 27 HLA II proteins and 636 peptides derived from Phleumpratense [29]. The raw affinity dataset is transformed into a binary binding matrix defining binding and non-binding events solely. The IC50 value of 1000 nM is used as a threshold, since it represents the common reference to define HLA II-peptide binding events in literature [20, 29]. Thereafter, the percentage of positive activity (PPA) is separately computed for each isotype \(\phi \) (DR, DQ and DP) from the binary binding matrix. Initially, the highest number of HLA proteins capable of binding a single peptide \(x\), is used as a reference, e.g., \(\rho (DR)\), within each isotype. Consequently, three (one for each isotype) PPAs values are calculated for each given peptide. The PPA value for a given peptide, and for a given isotype is simply defined as the proportion of positive values that this peptide has for that particular isotype, with respect to the reference value for the same isotype, e.g., \(\rho (DR)\).

where

Each peptide \({x}\), does now have three PPAs: one for each isotype \(\phi \). The maximum \({max}_{1}({x})\) and second highest maximum \({max}_{2}({x})\) PPA values are identified for each peptide. The difference value \(\varDelta {x} = {max}_{1}({x}) - {max}_{2}({x})\) is used to determine if the given peptide has either strong or weak binding properties, with respect to a given threshold \({k}\). If \(\varDelta {x} \ge {k}\) then the corresponding peptide is consider a strong binder for the class \(\phi (x) \mapsto {max}_{1}({x})\) otherwise it is considered as a weak binder for the classes \((\phi _{1}(x), \phi _{2}(x)) \mapsto ({max}_{1}({x}), {max}_{2}({x}))\).

The peptide classification into multiple isotype is defined with respect to the threshold value \(k\). As the lower \(k\) value increases the number of peptides, defined as strong HLA binders. The statistics about the effect of variation of \(k\) both in terms of isotype ratio and percentage of strong binders, are given in Table 1 and has been taken into account for the choice of \(k\). Ultimately, the value of \(k\) has been set to 0.15, in order to either maintain a comparable ratio among the isotypes, and grant a equal definition of strong and weak binding peptides.

Since the classification technique requires a common number of features for each peptide, a common length of 15 AAs is adopted. In the homogenized dataset, the edging AAs of peptides longer than 15 AA are shorted. The dissection is performed upon an accurate comparative analysis of the less conserved residues within the original peptides. In order to represent the entire pool of 636 peptides in a numerical form, a 40 high-quality AA indices (HQI40) [20, 30, 31] are used. Therefore, the length of the peptide sequence is 15\(\times \)40=600. The block diagram representation of the experiment with data generation is given in Fig. 1(a).

2.2 The Proposed MetaEnsembleR

Meta EnsembleR (MaER) is an ensemble based classifier, where four heterogeneous classifiers like support vector machine, naive bayes, decision tree and \(K\)-nearest neighbor are used. It creates a diverse set of training points by preparing different non-overlapping sets of features. In order to discuss MaER, some notations are introduced here. Let \(\mathcal {P}\) is a matrix of size \(n \times M\), which consists of \(M\) input attributes or features values for each training instance and \(\mathcal {Q}\) be an one dimensional column vector contains the output attribute of each training instance in \(\hbar \). Therefore, \(\hbar \) can be expressed as after concatenating \(\mathcal {P}\) and \(\mathcal {Q}\) horizontally, i.e., \(\hbar = [\mathcal {P} \mathcal {Q}]\). Also let \(\mathcal {F}\) = \(\lbrace \mathcal {P}_1, \mathcal {P}_2,\ldots , \mathcal {P}_M \rbrace \) and \(\mathcal {T}\) are the set of features (\(M\ge 4\)) and ensemble size. Therefore, it can be assumed that a training set of \(n\) labelled instances \(\hbar =\lbrace p_j, q_j\rbrace _{j=1}^n\) in which each instance \((p_j,q_j)\) is described by \(M\) input attributes or features and an output attribute, i.e., \(p \in \mathbb {R}^n\) and \(q \in \mathbb {R}\) where \(q\) takes a value from the label space \(\lbrace L_1,L_2,\ldots ,L_c \rbrace \). In a classification task, the goal is to use the information only from \(\hbar \) to construct a classifier which can perform well on the unseen data. Note that in MaER, the feature set, \(\mathcal {F}\) = \(\lbrace \mathcal {P}_1, \mathcal {P}_2,\ldots , \mathcal {P}_M \rbrace \), splits into \(\mathcal {S}\) number of feature subsets, where \(\mathcal {S} \in [ 2, \lfloor \frac{M}{2} \rfloor ]\). Also from the pool of classifiers, one classifier is randomly selected for the each value of \(\mathcal {T}\). In order to construct the training and testing datasets for a classifier in ensemble, the following necessary steps are performed.

-

Step1: Randomly split \(\mathcal {F}\) into \(\mathcal {S}\) number of subsets, i.e.,\(\mathcal {S}_{s,t}\) for simplicity, where \(t\) counts the ensemble size and \(s\) signifies the current attribute or feature subset. As \(\mathcal {S} \in [ 2, \lfloor \frac{M}{2} \rfloor ]\), therefore, the minimum number of subsets is 2 with at least 2 features in each subset is considered.

-

Step2: Repeat the following steps \(\mathcal {S}\) times for each subset, i.e., \(s = 1, 2,\ldots ,\mathcal {S}\).

-

(a) A new submatrix \(\mathcal {P}_{s,t}\) is constructed which corresponds to the data in matrix \(\mathcal {P}\).

-

(b) From this new submatrix a bootstrap sample \(\mathcal {P}^{\prime }_{s,t}\) is drawn where the sample size is generally smaller than \(\mathcal {P}_{s,t}\).

-

(c) Thereafter, \(\mathcal {P}^{\prime }_{s,t}\) is used for PCA and the coefficients of all computed principal components are stored into a new matrix \(\mathcal {C}_{t,s}\).

-

-

Step3: In order to have a matrix of same size of feature, arrange each \(\mathcal {C}_{t,s}\) into a block diagonal sparse matrix \(\mathcal {D}_t\). Once the coefficients in \(\mathcal {C}_{t,s}\) are placed in to the block diagonal sparse matrix \(\mathcal {D}_t\), the rows of \(\mathcal {D}_t\) are rearranged so that the order of them corresponds to the original attributes in \(\mathcal {F}\). During this rearrangement, columns with all zero values are removed from the sparse matrix.

-

Step4: The rearranged rotation matrix \(\mathcal {D}^r_t\) is then used as \([\mathcal {P}\mathcal {D}^r_t; \mathcal {Q}]\) and \([\mathcal {I}\mathcal {D}^r_t]\) for training and test sets of classifier, where \(\mathcal {I}\) is a given test sample.

-

Step5: In the testing phase, let \( MaER _{v,t}(\mathcal {I}\mathcal {D}^r_t)\) be the posterior probability produced by the classifier \( MaER _t\) on the hypothesis that \(\mathcal {I}\) belongs to class \(L_v\). Then the confidence for a class is calculated by the average posterior probability of combination base classifiers:

$$\begin{aligned} \mathcal {Q}_v (\mathcal {I})=\frac{1}{\mathcal {T}} \sum _{t=1}^\mathcal {T} MaER _{v,t} (\mathcal {I}\mathcal {D}^r_t),~~ where ~~v=1,2,\ldots ,c \end{aligned}$$(3)Thereafter, \(\mathcal {I}\) is assigned to the class with the largest confidence. Note that all the five steps will repeat for \(t= 1,2,\ldots ,\mathcal {T}\).

This is to be noted that due to the process of random feature subdivision, in each iteration, the selected classifier in MaER will have new sets of training and testing data, which will help to diversify the ensemble of classifiers in order to get better classification results. The MaER is applied to predict the multiclass binding activity of HLA class II protein, i.e., DP, DQ and DR at a time. For this purpose, based on threshold value \(k\)=0.15, multiclass dataset of strong binding peptides is used to train the MaER to predict the classes of weak binding peptides. Note that here the train and test datasets are normalized, where each input data is normalized to the range [0,1]. The flowchart of MaER is shown in Fig. 1(b).

3 Results and Discussions

The MaER contains four basic classifiers like SVM, ND, DT and \(K\)-NN. Therefore, setting the parameters of these classifiers is equivalent to set the parameters of MaER. Thus the parameters of SVM such as \(\gamma \) for kernel function and the soft margin \(\mathcal {C}\) (cost parameter), are set to be \(0.5\) and \(2.0\), respectively. Note that, for SVM and DT, RBF (Radial Basis Function) kernel and C4.5 classifier are used. The value of \(\textit{K}\) for the \(\textit{K}\)-NN classifier, ensemble size \(\mathcal {T}\) and number of feature subsets \(\mathcal {S}\) are set to 7, 10 and 50, experimentally. The MaER classifier has been executed 20 times and the performance of MaER classifier is evaluated in term of average accuracy, precision or PPV, recall or sensitivity, F-measure, MCC and area under the ROC curve (AUC) values. To compute these metrics the result of three class problem has been decomposed into three indivisible two class results by setting class-1 as an active and other classes are inactive, similarly, for class-2 and class-3. Thereafter, the average is computed and that also used to computer the average of 20 runs. Moreover, the effectiveness of MaER results has also been justified in terms of gain value. The gain is calculated in percentage as follows.

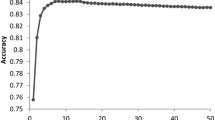

Table 2 reports the performance of all classifiers including MaER on HLA II-peptide binding prediction. From the results, it can be clearly stated that the MaER performing better than the other classifiers. Moreover, the result is also suggesting that it can be used as a potentially computation tool for discovering multiclass HLA class II binding epitopes that has a great importance in vaccinology. The best ROC curves of all the classifiers are shown in Fig. 2. The curves produced by MaER for DP, DQ and DR, are showing the average AUC values like 0.92, 0.93 and 0.91. Moreover. The MaER classifier achieves the average gain values of 2.18 %, 15.45 %, 29.25 % and 2.81 % over SVM, DT, NB and K-NN classifiers, respectively. Finally, Friedman test based has been conducted average accuracy values of all classifiers to judge the statistical significance of the predicted results. The test produced a Chi-square value of 86.81 and p-value of 0.126\(\times 10^{-5}\) at \(\alpha \)= 0.05 significance level. The results provide a strong evident in order to rejecting the null hypothesis, that means there is a significant difference in the results produced by various classifies, while MaER produced the best results among them.

4 Conclusions

In this article, the binding prediction of HLA II isotypes is considered as a multiclass problem. For this purpose, new multiclass training and testing datasets of HLA II proteins have been prepared after analysing raw binding affinity interaction dataset of 27 HLA II proteins and 636 peptides. Thereafter, an ensemble based multiclass classifier, named as Meta EnsembleR (MaER) has been developed for the same problem. MaER is an ensemble based classifier which avoids the weakness of a single classifier while improving the prediction performance by integrating the outputs of multiple heterogeneous classifiers. It generally pre-processes the original training and testing datasets by making feature subsets, bootstrap samples and creates diverse datasets using principle component analysis. The efficacy of the developed MaER has been demonstrated in comparison with support vector machine, decision tree, naive bayes and \(K\)-nearest neighbor on newly generated test data in terms of average accuracy, precision, recall, F-measure, MCC, area under the ROC curve (AUC) and gain values. It is observed that MaER achieves the maximum gain of \(29.25\,\%\) over Naive Bayes classifier. Finally, the statistical significance of the results produced by MaER has been justified by the Friedman test.

The application of the MaER method could be particularly beneficial wherever the informations about HLA isotype propensity and coverage are crucial. One example is the design of peptide-based vaccines, where the identification of the epitopes able to interact with different HLA isotypes, is a crucial factor for vaccine efficacy and population coverage. Another case is the study of autoimmune diseases, where the detection of self epitopes showing extensive cross reactivity with several HLA isotypes, is indeed a central issue. Apart from this, MaER can be used to find the potential markers from gene expression data [32, 33].

References

Flower, D.R. (ed.): Bioinformatics for Vaccinology. Wiley-Blackwel, Oxford (2008)

Janeway, C.A., Travers, P., Walport, M., Capra, J.D.: Immunobiology: The Immune System in Health and Disease. Garland Publications, New York (1999)

Rudensky, A., Janeway, C.A.: Studies on naturally processed peptides associated with MHC class II molecules. Chem. Immunol. 57, 134–351 (1993)

Sturniolo, T., Bono, E., Ding, J., Raddrizzani, L., Tuereci, O., Sahin, U., Braxenthaler, M., Gallazzi, F., Protti, M.P., Sinigaglia, F., Hammer, J.: Generation of tissue-specific and promiscuous HLA ligand databases using DNA microarrays and virtual HLA class II matrices. Nat. Biotech. 17(6), 555–561 (1999)

Sette, A., Buus, S., Appella, E., Smith, J.A., Chesnut, R., Miles, C., Colon, S.M., Grey, H.M.: Prediction of major histocompatibility complex binding regions of protein antigens by sequence pattern analysis. Proc. National Acad. Sci. 86, 3296–3300 (1989)

Brusic, V., Rudy, G., Honeyman, M., Hammer, J., Harrison, L.: Prediction of MHC class II-binding peptides using an evolutionary algorithm and artificial neural network. Bioinformatics 14(2), 121–130 (1998)

Hammer, J., Bono, E., Gallazzi, F., Belunis, C., Nagy, Z., Sinigaglia, F.: Precise prediction of major histocompatibility complex class II-peptide interaction based on peptide side chain scanning. J. Exp. Med. 180, 2353–2358 (1994)

Noguchi, H., Kato, R., Hanai, T., Matsubara, Y., Honda, H., Brusic, V., Kobayashi, T., Biosci, J.: Hidden markov model-based prediction of antigenic peptides that interact with MHC class II molecules. J. Biosci. Bioeng. 94(3), 264–270 (2002)

Wan, J., Liu, W., Xu, Q., Ren, Y., Flower, D.R., Li, T.: SVRMHC prediction server for MHC-binding peptides. BMC Bioinform. 7, 463 (2006)

Dimitrov, I., Garnev, P., Flower, D.R., Doytchinova, I.: Peptide binding to the HLA-DRB1 sypertype: a proteochemometric analysis. J. Med. Chem. 45(1), 236–243 (2010)

Adrian, P.E., Rajaseger, G., Mathura, V.S., Sakharkar, M., Kangueane, P.: Types of inter-atomic interactions at the MHC-peptide interface: Identifying commonality from accumulated data. BMC Struct. Biol. 2, 2 (2002)

Atanasova, M., Dimitrov, I., Flower, D.R., Doytchinova, I.: MHC Class II binding prediction by molecular docking. Mol. Inf. 30, 368–375 (2011)

Oytchinova, I.D., Petkov, P., Dimitrov, I., Atanasova, M., Flower, D.R.: HLA-DP2 binding prediction by molecular dynamics simulations. Protein Sci. 20, 1918–1928 (2011)

Mallios, R.R.: Predicting class II MHC peptide multi-level binding with an iterative stepwise discriminant analysis meta-algorithm. Bioinformatics 17(10), 942–948 (2001)

Karpenko, O., Shi, J., Dai, Y.: Prediction of MHC class II binders using the ant colony search strategy. Artif. Intell. Med. 35, 147–156 (2005)

Salomon, J., Flower, D.R.: Predicting class II MHC-peptide binding: a kernel based approach using similarity scores. BMC Bioinform. 7, 501 (2006)

Zhang, W., Liu, J., Niu, Y.: Quantitative prediction of MHC-II binding affinity using particle swarm optimization. Artif. Intel. Med. 50(2), 127–132 (2010)

Bhowmick, S.S., Saha, I., Mazzocco, G., Maulik, U., Rato, L., Bhattacharjee, D., Plewczynski, D.: Application of RotaSVM for HLA class II protein-peptide interaction prediction. In: Proceedings of the Fifth International Conference on Bioinformatics Models, Methods and Algorithms (BIOINFORMATICS 2014), pp. 178–185 (2014)

Bhowmick, S.S., Saha, I., Rato, L., Bhattacharjee, D.: RotaSVM: a new ensemble classifier. Adv. Intel. Syst. Comput. 227, 47–57 (2013)

Saha, I., Mazzocco, G., Plewczynski, D.: Consensus classification of human leukocyte antigen class II proteins. Immunogenetics 65, 97–105 (2013)

Pio, G., Malerba, D., D’Elia, D., Ceci, M.: Integrating microRNA target predictions for the discovery of gene regulatory networks: a semi-supervised ensemble learning approach. BMC Bioinform. 15(Suppl 1), S4 (2014)

Marbach, D., Costello, J.C., Kuffner, R., et al.: Wisdom of crowds for robust gene network inference. Nat. Methods 9, 796–804 (2012)

Saha, I., Zubek, J., Klingstrom, T., Forsberg, S., Wikander, J., Kierczak, M., Maulik, U., Plewczynski, D.: Ensemble learning prediction of protein-protein interactions using proteins functional annotations. Mol. BioSyst. 10, 820–830 (2014)

Vapnik, V.N.: The Nature of Statistical Learning Theory. Springer, New York (1995)

Quinlan, J.R.: C4.5: Programs for Machine Learning. Morgan Kaufma, California (1993)

George, H., Langley, J.P.: Estimating continuous distributions in bayesian classifiers. In: Proceedings of the Eleventh Conference on Uncertainty in Artificial Intelligence, pp. 338–345 (1995)

Cover, T., Hart, P.: Nearest neighbor pattern classification. IEEE Trans. Inf. Theor. 13(1), 21–27 (1967)

Friedman, M.: A comparison of alternative tests of significance for the problem of m rankings. Ann. Math. Stat. 11, 86–92 (1940)

Greenbaum, J., Sidney, J., Chung, J., Brander, C., Peters, B., Sette, A.: Functional classification of class II human leukocyte antigen (HLA) molecules reveals seven different supertypes and a surprising degree of repertoire sharing across supertypes. Immunogenetics 63(6), 325–335 (2011)

Saha, I., Maulik, U., Bandyopadhyay, S., Plewczynski, D.: Fuzzy clustering of physicochemical and biochemical properties of amino acids. Amino Acid 43(2), 583–594 (2011)

Plewczynski, D., Basu, S., Saha, I.: AMS 4.0: consensus prediction of post-translational modifications in protein sequences. Amino Acid 43(2), 573–582 (2012)

Saha, I., Maulik, U., Bandyopadhyay, S., Plewczynski, D.: Improvement of new automatic differential fuzzy clustering using SVM classifier for microarray analysis. Expert Syst. Appl. 38(12), 15122–15133 (2011)

Saha, I., Plewczynski, D., Maulik, U., Bandyopadhyay, S.: Improved differential evolution for microarray analysis. Int. J. Data Min. Bioinform. 6(1), 86–103 (2012)

Acknowledgments

This work was supported by grants from the Polish National Science Centre (2014/15/B/ST6/05082 and UMO-2013/09/B/NZ2/00121), COST BM1405 EU action and European Union Seventh Framework Program (FP7/2007-2013) under the grant agreement no.: 246016.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Mazzocco, G., Bhowmick, S.S., Saha, I., Maulik, U., Bhattacharjee, D., Plewczynski, D. (2015). MaER: A New Ensemble Based Multiclass Classifier for Binding Activity Prediction of HLA Class II Proteins. In: Kryszkiewicz, M., Bandyopadhyay, S., Rybinski, H., Pal, S. (eds) Pattern Recognition and Machine Intelligence. PReMI 2015. Lecture Notes in Computer Science(), vol 9124. Springer, Cham. https://doi.org/10.1007/978-3-319-19941-2_44

Download citation

DOI: https://doi.org/10.1007/978-3-319-19941-2_44

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-19940-5

Online ISBN: 978-3-319-19941-2

eBook Packages: Computer ScienceComputer Science (R0)