Abstract

This paper addresses the problem of failing \(\mathtt {RDF}\) queries. Query relaxation is one of the cooperative techniques that allows providing users with alternative answers instead of an empty result. While previous works on query relaxation over \(\mathtt {RDF}\) data have focused on defining new relaxation operators, we investigate in this paper techniques to find the parts of an \(\mathtt {RDF}\) query that are responsible of its failure. Finding such subqueries, named Minimal Failing Subqueries (\(\mathtt {MFSs}\)), is of great interest to efficiently perform the relaxation process. We propose two algorithmic approaches for computing \(\mathtt {MFSs}\). The first approach (\(\mathtt {LBA}\)) intelligently leverages the subquery lattice of the initial \(\mathtt {RDF}\) query while the second approach (\(\mathtt {MBA}\)) is based on a particular matrix that improves the performance of \(\mathtt {LBA}\). Our approaches also compute a particular kind of relaxed RDF queries, called Ma x imal Succeeding Subqueries (\(\mathtt {XSSs}\)). \(\mathtt {XSSs}\) are subqueries with a maximal number of triple patterns of the initial query. To validate our approaches, a set of thorough experiments is conducted on the \(\mathtt {LUBM}\) benchmark and a comparative study with other approaches is done.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Introduction

With the extensive adoption of \(\mathtt {RDF}\), specialized databases called \(\mathtt {RDF}\) databases (or triple-store) have been developed to manage large amounts of \(\mathtt {RDF}\) data (e.g., Jena [1]). \(\mathtt {RDF}\) databases are based on a generic representation (a triples table or one of its variants) that can manage a set of diverse \(\mathtt {RDF}\) data, ranging from structured data to unstructured data. This flexibility makes it difficult for users to correctly formulate RDF queries that return the desired answers. This is why user RDF queries often return an empty result.

Query relaxation is one of the cooperative techniques that allows providing users with alternative answers instead of an empty result. Several works have been proposed to relax queries in the \(\mathtt {RDF}\) context [2–8]. They mainly focus either on introducing new relaxation operators or on the efficient processing of top-k RDF queries. Usually, only some parts of a failing \(\mathtt {RDF}\) query are responsible of its failure. Finding such subqueries, named Minimal Failing Subqueries (\(\mathtt {MFSs}\)), provides the user with an explanation of the empty result returned and a guide to relax his/her query.

To the best of our knowledge, no work exists in the literature that addresses the issue of computing \(\mathtt {MFSs}\) of failing \(\mathtt {RDF}\) queries. Inspired by some previous works in relational databases [9] and recommendation systems [10], we propose in this paper two algorithmic approaches for searching \(\mathtt {MFSs}\) of failing \(\mathtt {RDF}\) queries. The first one is a smart exploration of the subquery lattice of the failing query, while the second one relies on a particular matrix obtained by executing each triple pattern involved in the query. These algorithms also compute a particular kind of relaxed queries, called Ma x imal Succeeding Subqueries (\(\mathtt {XSSs}\)), that return non-empty answers. Each \(\mathtt {XSS}\) provides a simple way to relax a query by removing or making optional the set of triple patterns that are not in an \(\mathtt {XSS}\). Our contributions are summarized as follows.

-

1.

We propose an adapted and extended variant of Godfrey’s approach [9], called \(\mathtt {LBA}\), for computing both the \(\mathtt {MFSs}\) and \(\mathtt {XSSs}\) of a failing \(\mathtt {RDF}\) query. Both properties and algorithmic aspects of \(\mathtt {LBA}\) are investigated.

-

2.

Inspired by the work done in [10], we devise a second approach, called \(\mathtt {MBA}\), which only requires \(n\) queries over the target \(\mathtt {RDF}\) database, where \(n\) is the number of query triple patterns. The skyline of the matrix on which this approach is based, directly provides the \(\mathtt {XSSs}\) of a query. This matrix can also improve the performance of \(\mathtt {LBA}\).

-

3.

We study the efficiency and effectiveness of the above approaches through a set of experiments conducted on two datasets of the \(\mathtt {LUBM}\) benchmark. We also compare our propositions with existing similar approaches on the basis of the experimental results obtained.

The paper is structured as follows. Section 2 introduces some basic notions and formalizes the problem we consider. Sections 3 and 4 present our approaches \(\mathtt {LBA}\) and \(\mathtt {MBA}\) to find the \(\mathtt {MFSs}\) and \(\mathtt {XSSs}\) of a failing \(\mathtt {RDF}\) query. We present our experimental evaluation in Sect. 5 and conclude in Sect. 6.

2 Preliminaries and Problem Statement

This section formally describes the parts of \(\mathtt {RDF}\) and \(\mathtt {SPARQL}\) that are necessary to this paper. We use the notations and definitions given in [11].

Data Model. An \(\mathtt {RDF}\) triple is a triple (subject, predicate, object) \( \in (U \cup B) \times U \times (U \cup B \cup L)\) where \(U\) is a set of \(\mathtt {URIs}\), \(B\) is a set of blank nodes and \(L\) is a set of literals. We denote by \(T\) the union \(U \cup B \cup L\). An \(\mathtt {RDF}\) database stores a set of \(\mathtt {RDF}\) triples in a triples table or one of its variants.

Query. An \(\mathtt {RDF}\) triple pattern \(t\) is a triple (subject, predicate, object) \( \in (U \cup V) \times (U \cup V) \times (U \cup V \cup L)\), where \(V\) is a set of variables disjoint from the sets \(U\), \(B\) and \(L\). We denote by \(var(t)\) the set of variables occurring in \(t\). We consider \(\mathtt {RDF}\) queries defined as a conjunction of triple patterns: \( Q = t_{1} \wedge \cdots \wedge t_{n}\). The number of triple patterns of a query \(Q\) is denoted by \(|Q|\).

Query Evaluation. A mapping \(\mu \) from \(V\) to \(T\) is a partial function \( \mu : V \rightarrow T\). For a triple pattern \(t\), we denote by \(\mu (t)\) the triple obtained by replacing the variables in \(t\) according to \(\mu \). The domain of \(\mu \), \(dom(\mu )\), is the subset of \(V\) where \(\mu \) is defined. Two mappings \(\mu _{1}\) and \(\mu _{2}\) are compatible when for all \( x \in dom(\mu _{1}) \ \cap \ dom(\mu _{2})\), it is the case that \(\mu _{1}(x) = \mu _{2}(x)\) i.e., when \(\mu _{1} \cup \mu _{2}\) is also a mapping. Let \(\varOmega _{1}\) and \(\varOmega _{2}\) be sets of mappings, we define the join of \(\varOmega _{1}\) and \(\varOmega _{2}\) as: \(\varOmega _{1} \bowtie \varOmega _{2} = \{ \mu _{1} \cup \mu _{2} \ | \ \mu _{1} \in \varOmega _{1}, \mu _{2} \in \varOmega _{2} \ are \ compatible \ mappings \}\). Let \(D\) be an \(\mathtt {RDF}\) database, \(t\) a triple pattern. The evaluation of the triple pattern \(t\) over \(D\) denoted by \([[t]]_{D}\) is defined by: \([[t]]_{D} = \{\mu \ | \ dom(\mu ) = var(t) \wedge \mu (t) \in D\}\). Let \(Q\) be a query, the evaluation of \(Q\) over \(D\) is defined by: \([[Q]]_{D} = [[t_{1}]]_{D} \bowtie \cdots \bowtie [[t_{n}]]_{D}\). This evaluation can be done under different entailment regimes as defined in the \(\mathtt {SPARQL}\) specification. In this paper, the examples as well as our implementation are based on the simple entailment regime. However, the proposed algorithms can be used with any entailment regime.

\(\mathtt {MFS}\) and \(\mathtt {XSS}\) . Given a query \( Q = t_{1} \wedge \cdots \wedge t_{n}\), a query \( Q' = t_{i} \wedge \cdots \wedge t_{j}\) is a subquery of \(Q\), \(Q' \subseteq Q\), iff \( \{i, \cdots , j\} \subseteq \{1, \cdots , n\}\). If \( \{i, \cdots , j\} \subset \{1, \cdots , n\}\), we say that \(Q'\) is a proper subquery of \(Q\) (\(Q' \subset Q\)). If a subquery \(Q'\) of \(Q\) fails, then the query \(Q\) fails.

A Minimal Failing Subquery MFS of a query \(Q\) is defined as follows: \([[MFS]]_{D}= \emptyset \ \wedge \not \exists \ Q' \subset MFS \ such \ that \ [[Q']]_{D} = \emptyset \). The set of all \(\mathtt {MFSs}\) of a query \(Q\) is denoted by \(mfs(Q)\). Each \(\mathtt {MFS}\) is a minimal part of the query that fails.

A Maximal Succeeding Subquery \(XSS\) of a query \(Q\) is defined as follows: \([[XSS]]_{D} \ne \emptyset \ \wedge \not \exists \ Q' \ such \ that \ XSS \subset Q' \wedge [[Q']]_{D} \ne \emptyset \). The set of all \(\mathtt {XSSs}\) of a query \(Q\) is denoted by \(xss(Q)\). Each \(\mathtt {XSS}\) is a maximal (in terms of triple patterns) non-failing subquery viewed as a relaxed query.

Problem Statement. We are concerned with computing the \(\mathtt {MFSs}\) and \(\mathtt {XSSs}\) of a failing \(\mathtt {RDF}\) query over an \(\mathtt {RDF}\) database efficiently.

3 Lattice-Based Approach (LBA)

LBA is an algorithm to compute simultaneously both the sets \(mfs(Q)\) and \(xss(Q)\) of a failing \(\mathtt {RDF}\) query \(Q\). It is a three steps procedure: (1) find an MFS of \(Q\), (2) compute the potential XSSs, i.e., the maximal queries that do not include the MFS previously found and (3) execute potential XSSs; if they return results, they are XSSs, else this process will be applied recursively on failing potential XSSs.

Finding an MFS. This step is performed with the a_mel_fast algorithm proposed in [9]. This algorithm is based on the following proposition (proved in [9]). Let \(Q=t_1 \wedge ... \wedge t_n \) be a failing query and \(Q_i=Q-t_i\) a proper subquery of \(Q\). If \([[Q]]_{D} = \emptyset \) and \([[Q_{i}]]_{D} \ne \emptyset \) then any MFS of \(Q\) contains \(t_i\).

This property is leveraged in the Algorithm 1 to find an MFS in \(n\) steps (i.e., its complexity is then \(\mathcal {O}(n)\)). The algorithm removes a triple pattern \(t_i\) from \(Q\) resulting in the proper subquery \(Q'\). If \([[Q']]_D\) is not empty, \(t_i\) is part of any MFS (thanks to the previous proposition) and it is added to the result \(Q^{*}\). Else, \(Q'\) has an MFS that does not contain \(t_i\). Then, the algorithm iterates over another triple pattern of \(Q\) to find an MFS in \(Q' \wedge Q^*\). This process stops when all the triple patterns of \(Q\) have been processed.

Figure 1 shows an execution of the Algorithm 1 to compute an MFS of the following query \(Q=t_1 \wedge t_2 \wedge t_3 \wedge t_4 \):

The algorithm removes the triple pattern \(t_1\) from \(Q\), resulting in the subquery \(Q'\). As this subquery returns an empty result, the algorithm iterates over the triple pattern \(t_2\) to find an MFS in \(t_2 \wedge t_3 \wedge t_4 \). The subquery \(t_3 \wedge t_4\) is successful, hence \(t_2\) is part of the MFS \(Q^*\). The same result is obtained for \(t_3\), which is added to \(Q^*\). For \(t_4\), the subquery \(t_2 \wedge t_3\) returns an empty result and thus \(t_4\) does not belong to \(Q^*\). As all the triple patterns of \(Q\) have been processed, the algorithm stops and returns the MFS \(Q^* = t_2 \wedge t_3\).

Computing Potential XSSs. By definition, all queries that include the MFS \(Q^*\), found in the previous step, return an empty set of answers. Thus, they can be neither MFS nor XSS of \(Q\) and they are pruned from the search space. The exploration of the subquery lattice continues with the largest subqueries of \(Q\) that do not include \(Q^*\). If these subqueries are successful, they are XSSs of \(Q\). Thus, we call them potential XSSs and we denote this set of queries by \(pxss(Q, Q^*)\). This set can be computed as follows:

Indeed, for each triple pattern \(t_i\) of \(Q^*\), a subquery of the form \(Q_m \leftarrow Q - t_i\) does not include \(Q^*\) and, in addition, it is maximal due to its large size, i.e., \(|Q_m|=|Q|-1\). Following the previous definition, \(pxss(Q, Q^*)\) is computed with a simple algorithm running in linear time (\(\mathcal {O}(n^*)\) where \(n^* = |Q^*|\)).

Figure 2 illustrates \(pxss(Q,Q^*)\) of our running example (\(Q=t_1 \wedge t_2 \wedge t_3 \wedge t_4\) and \(Q^*=t_2 \wedge t_3\)) on the lattice of subqueries. The maximal subqueries of \(Q\) that do not contain \(t_2 \wedge t_3\) are \(t_1 \wedge t_2 \wedge t_4\) and \(t_1 \wedge t_3 \wedge t_4\).

Finding all XSSs and MFSs (Algorithm 2). If \(Q\) has only a single \(\mathtt {MFS}\) \(Q^*\) (which includes the case where \(Q\) is itself an MFS), then \(xss(Q)= pxss(Q, Q^*)\).

Proof. Assume that \( \exists Q' \in pxss(Q, Q^*)\) such that \( [[Q']]_D= \emptyset \). Since \(Q\) has a single MFS, \(Q^*\) is a subset of \(Q'\). Contradiction with the definition of \(pxss(Q, Q^*)\).

We now consider the general case, i.e., when \(Q\) has several \(\mathtt {MFSs}\). For each query \(Q' \in pxss(Q,Q^*)\), if \([[Q']]_{D} \ne \emptyset \) then \(Q'\) is an effective \(\mathtt {XSS}\) of \(Q\), i.e., \(Q' \in xss(Q)\). Otherwise, \(Q'\) has (at least) an \(\mathtt {MFS}\), which is also an \(\mathtt {MFS}\) of \(Q\), different from \(Q^*\). This MFS can be identified with the FindAnMFS algorithm (see Algorithm 1) and thus the complete process can be recursively applied on each failing query of \(pxss(Q,Q^*)\). However, as different queries of \(pxss(Q,Q^*)\) may contain the same MFS, this process may identify the same MFS several times and thus be inefficient. Algorithm 2 improves this approach by incrementally computing potential XSSs that do not contain the set of identified MFSs. When a second MFS \(Q^{**}\) is identified, this algorithm iterates over the previously found potential XSSs \(pxss\) that contain \(Q^{**}\). To avoid finding again this MFS, the algorithm replaces them by their largest subqueries that do not contain \(Q^{**}\) (i.e., their own potential XSSs) and are not included in any query of \(pxss\) (otherwise they are not the largest potential XSSs of \(Q\)).

Figure 3 shows an execution of Algorithm 2 to compute the MFSs and XSSs of our running example: \(Q=t_1 \wedge t_2 \wedge t_3 \wedge t_4\), \(Q^*=t_2 \wedge t_3\) and \(pxss(Q,Q^*)=\{t_1 \wedge t_2 \wedge t_4\), \(t_1 \wedge t_3 \wedge t_4\}\). The algorithm executes the query \(t_1 \wedge t_3 \wedge t_4\). As an empty set of answers is obtained, the Algorithm 1 is applied on this query to find a second MFS \(Q^{**} = t_1\). The two potential XSSs contain this MFS and thus they are replaced with their largest subqueries that do not contain \(Q^{**}\), i.e., \(t_3 \wedge t_4\) and \(t_2 \wedge t_4\). By executing these two queries, the algorithm finds that these potential XSSs are effectively XSSs. The algorithm stops and returns these two XSSs and the MFSs previously found (see Fig. 2).

4 Matrix-Based Approach (MBA)

In the approach proposed in the previous section, the theoretical search space exponentially increases with the number of triple patterns of the original query. Jannach [10] has proposed a solution to avoid this problem in the context of recommender systems. This approach is based on a matrix, called the relaxed matrix, computed in a preprocessing step with \(n\) queries where \(n\) is the number of query atoms. This matrix gives, for each potential solution of a query, the set of query atoms satisfied by this solution. The \(\mathtt {XSSs}\) of the query can then be obtained from this matrix without the need for further database queries.

In this section, we adapt this approach to \(\mathtt {RDF}\) databases to compute both the \(\mathtt {XSSs}\) and \(\mathtt {MFSs}\) of a query. Compared to [10], the main difficulty is to compute the set of potential solutions of a query. Indeed, in the context of recommender systems, these solutions are already known as they are the set of products described in the product catalog. This is not the case in the context of \(\mathtt {RDF}\) databases.

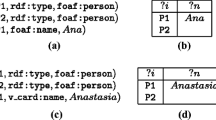

The Relaxed Matrix of a Query. We first informally define the notion of relaxed matrix through an example. Figure 4(c) presents the relaxed matrix of the query \(Q\) given in Fig. 4(b) when it is executed on the \(\mathtt {RDF}\) dataset presented in Fig. 4(a). Each row of the matrix is a mapping (as defined in Sect. 2) that satisfies at least one triple pattern. For example, the first row corresponds to the mapping \(\mu : \ ?p \rightarrow p_{1}\). A mapping \(\mu \) has the value 1 in the column \(t_{i}\), if \(\mu \) satisfies \(t_{i}\). Thus the matrix entry that lies in the first row and the \(t_{1}\) column is set to 1 as \(p_{1}\) is a professor in the considered \(\mathtt {RDF}\) dataset.

As we have seen in Sect. 2, the evaluation of a query consists in finding the mappings that satisfy all its triple patterns using join operations. The relaxed matrix contains the mappings that satisfy at least one triple pattern. Intuitively, one can think of using the OPTIONAL operator of \(\mathtt {SPARQL}\) to compute these mappings. However, the semantics of this operator is based on the outer join operation [11], which eliminates from its operands the mappings that satisfy the inner join operation [12]. In our case, we need to keep these mappings as they may be compatible with the mappings of another triple pattern. For example, the operation  eliminates the mapping \(\mu : \ ?p \rightarrow p_{1}\) from the relaxed matrix in the example presented in Fig. 4. This mapping is needed to find other mappings such as \(\mu : \ ?p \rightarrow p_{1} \ ?s \rightarrow s_{2}\). As a consequence, we have defined an extended join operation, which is defined as follows.

eliminates the mapping \(\mu : \ ?p \rightarrow p_{1}\) from the relaxed matrix in the example presented in Fig. 4. This mapping is needed to find other mappings such as \(\mu : \ ?p \rightarrow p_{1} \ ?s \rightarrow s_{2}\). As a consequence, we have defined an extended join operation, which is defined as follows.

Formal Definition of the Relaxed Matrix. Let \(\varOmega _{1}\) and \(\varOmega _{2}\) be sets of mappings, the extended join of \(\varOmega _{1}\) and \(\varOmega _{2}\) is defined by:  Let \(Q\) be a query, the relaxed evaluation of \(Q\) over \(D\) is defined by:

Let \(Q\) be a query, the relaxed evaluation of \(Q\) over \(D\) is defined by:  . We define the relaxed matrix \(M\) of a query \(Q\) over an \(\mathtt {RDF}\) database \(D\) as a two-dimensional table with \(|Q|\) columns (one for each triple pattern of the query) and \(|[[Q]]_{D}^{R}|\) rows (one for each mapping of \([[Q]]_{D}^{R} \)). For a mapping \(\mu \in [[Q]]_{D}^{R} \) and a triple pattern \(t_i \in Q\), \(M[\mu ][t_i]=1 \Leftrightarrow \mu (t_i) \in D , else \ M[\mu ][t_i]=0\).

. We define the relaxed matrix \(M\) of a query \(Q\) over an \(\mathtt {RDF}\) database \(D\) as a two-dimensional table with \(|Q|\) columns (one for each triple pattern of the query) and \(|[[Q]]_{D}^{R}|\) rows (one for each mapping of \([[Q]]_{D}^{R} \)). For a mapping \(\mu \in [[Q]]_{D}^{R} \) and a triple pattern \(t_i \in Q\), \(M[\mu ][t_i]=1 \Leftrightarrow \mu (t_i) \in D , else \ M[\mu ][t_i]=0\).

Computing the Relaxed Matrix. Thus, to obtain the relaxed matrix, we need first to evaluate each triple pattern \(t_{i}\) over \(D\) to obtain \([[t_{i}]]_D\). Then, we compute the extended joins of all the \([[t_{i}]]_D\) while keeping track of the matched triple patterns to get the matrix values. The Algorithm 3 follows this approach using a nested loop algorithm. This algorithm only requires \(n\) queries where \(n\) is the number of triple patterns. Yet, our experiments conducted on the \(\mathtt {LUBM}\) benchmark (see Sect. 5) show that this algorithm can still take a notable amount of time as the size of the matrix can be large for queries over large datasets involving triple patterns that are not selective. Moreover, proper subqueries of the initial query can lead to Cartesian products (the triple patterns do not share any variable), which imply an expensive computation cost as well as a matrix of a large size (see Sect. 5 for details). As a first step to improve this approach, we have specialized this approach for star-shaped queries (i.e., a set of triple patterns with a shared join variable in the subject position) as they are often found in the query logs of real datasets [13].

Optimized Computation for Star-Shaped Queries. The computation of star-shaped queries is simpler than in the general case. First, subqueries of a star-shaped query cannot be Cartesian products. Second, a single variable is used to join all the triple patterns. Thanks to this latter property we can use full outer joins to compute the relaxed matrix as depicted in the Algorithm 4. This algorithm executes one query for each triple pattern. For each result \(\mu \) of such a subquery, the value of the join variable (i.e., the restriction of the function \(\mu \) to \(\{x\}\) denoted by \(\mu _{|\{x\}}\)) is added to the matrix, if it is not already in it, and the value of this row is set to 1 for the corresponding triple pattern.

The Algorithm 4, called \(NQ\), can be used for any \(\mathtt {RDF}\) database (implemented on a relational database management system (\(\mathtt {RDBMS}\)) or not). If we consider an \(\mathtt {RDF}\) database implemented as a triples table \(t(s,p,o)\) in an \(\mathtt {RDBMS}\), we can use a single \(\mathtt {SQL}\) query to compute the relaxed matrix. This query is roughly the translation of the  expression. Inspired by the work of Cyganiak conducted on the translation of \(\mathtt {SPARQL}\) queries into \(\mathtt {SQL}\) [14], we use \(\mathtt {SQL}\) outer join operators to compute this expression and the \(coalesce\) functionFootnote 1 to manage unbound values. In addition, we use the \(case\) operator to test if a triple pattern is matched and thus to get the matrix values (1 if it is matched, else 0). For example, the \(\mathtt {SQL}\) query used to compute the relaxed matrix of the query \(t_{1} \wedge t_{2}\) (Fig. 4) is:

expression. Inspired by the work of Cyganiak conducted on the translation of \(\mathtt {SPARQL}\) queries into \(\mathtt {SQL}\) [14], we use \(\mathtt {SQL}\) outer join operators to compute this expression and the \(coalesce\) functionFootnote 1 to manage unbound values. In addition, we use the \(case\) operator to test if a triple pattern is matched and thus to get the matrix values (1 if it is matched, else 0). For example, the \(\mathtt {SQL}\) query used to compute the relaxed matrix of the query \(t_{1} \wedge t_{2}\) (Fig. 4) is:

This approach, called 1Q, has two advantages: (1) a single query is used to compute the relaxed matrix, (2) the \(\mathtt {RDBMS}\) chooses the adequate join algorithm.

Computing the XSSs from the Relaxed Matrix. Abusing notation, we denote by \(xss(\mu )\) the proper subquery of \(Q\) that can be executed to retrieve \(\mu \). It can be directly obtained from the relaxed matrix: \(xss(\mu ) = \{ t_{i} \ | \ M[\mu ][t_{i}]=1 \} \). Finding the \(\mathtt {XSSs}\) of a query \(Q\) can be done in two steps:

-

1.

Computing the skyline \(SKY\) of the relaxed matrix: \( SKY (M) = \{ \mu \in [[Q]]_{D}^{R} \ | \ \not \exists \mu ' \in [[Q]]_{D}^{R} \ such \ that \ \mu \prec \mu ' \}\) where \(\mu \prec \mu '\) if (i) on every triple pattern \(t_{i}\), \(M[\mu ][t_{i}] \le M[\mu '][t_{i}]\) and (ii) on at least one triple pattern \(t_{j}\), \(M[\mu ][t_{j}] < M[\mu '][t_{j}]\). This step can be done by using one of the numerous algorithms defined to efficiently compute the skyline of a table (see [15] for a survey). In Fig. 4(c), all the rows composing the skyline of the relaxed matrix are marked with \(*\).

-

2.

Retrieving the distinct proper subqueries of \(Q\) that can be executed to retrieve an element of the skyline: \(xss(Q)=\{ xss(\mu ) \ | \ \mu \in SKY(M) \}\). Each such proper subquery is an \(\mathtt {XSS}\). The \(\mathtt {XSSs}\) of our example are given in Fig. 4(d) and appear in bold in the relaxed matrix.

Using the Relaxed Matrix as an Index for the LBA Approach. In the \(\mathtt {LBA}\) algorithm, subqueries are executed on the RDF database to find whether they return an empty set of answers or not. Instead of executing a subquery, one can compute the intersection of the matrix columns corresponding to the subquery triple patterns. If the resulting column is empty, the subquery returns an empty set of answers and conversely. Thus, the MBA approach can be seen as an index to improve the performance of the \(\mathtt {LBA}\) approach. This approach still requires exploring a search space that exponentially increases with the number of triple patterns, but this search space does not require the execution of any database query.

5 Experimental Evaluation

Experimental Setup. We have implemented the proposed algorithms in JAVA 1.7 64 bits on top of Jena TDB. Our implementation is available at http://www.lias-lab.fr/forge/projects/qars. These algorithms take as input a failing \(\mathtt {SPARQL}\) query and return the set of \(\mathtt {MFSs}\) and \(\mathtt {XSSs}\) of this query. We run these algorithms on a Windows 7 Pro system with Intel Core i7 CPU and 8 GB RAM. All times presented in this paper are the average of five runs of the algorithms. The results of algorithms are not shown for queries when they consumed too many resources i.e., when they took more than one hour to execute or when the memory used exceeded the size of the \(\mathtt {JVM}\) (set to 4 GB in our experiments).

Dataset and Queries. Due to the lack of an \(\mathtt {RDF}\) query relaxation benchmark, Huang et al. [6] have designed 7 queries based on the \(\mathtt {LUBM}\) benchmark. These queries cover the main query patterns (star, chain and composite) but they only have between 2 and 5 triple patterns. Yet the study proposed by Arias and al. [13] has shown that real-world SPARQL queries executed on the \(\mathtt {DBPedia}\) and \(\mathtt {SWDF}\) datasets range from 1 to 15 triple patterns. As a consequence, we have modified the 7 queries proposed in [6] to reflect this diversity. The modified versions of these queriesFootnote 2 have respectively 1, 5, 7, 9, 11, 13 and 15 triple patterns. Q1, Q2 are chain, Q3, Q5, Q7 are star and Q4, Q6 are composite query patterns. We used two generated datasets to evaluate the performances of our algorithms on these queries: \(\mathtt {LUBM20}\) (3 M triples) and \(\mathtt {LUBM100}\) (13 M triples).

Relaxed Matrix Size and Computation Time. The MBA approach relies on the relaxed matrix. To define the data structure of this matrix, we have leveraged the similarity between this matrix and bitmap indexes used in \(\mathtt {RDBMS}\). Thus, the matrix is defined as a set of compressed bitmaps, one for each column. We have used the Roaring bitmap library version 0.4.8 for this purpose [16]. As Table 1 shows, this data structure ensures that the matrix size remains small even if the number of matrix rows is large (less than 2 MB for 2 M rows). Table 1 only includes results for star-shaped queries as other queries required too many resources due to Cartesian products.

For the computation of the \(\mathtt {MBA}\) relaxed matrix, we have tested the two algorithms 1Q and NQ described in Sect. 4. As the 1Q approach requires an \(\mathtt {RDF}\) database implemented on top of an \(\mathtt {RDBMS}\), we have used the Oracle 12c \(\mathtt {RDBMS}\) to implement the triples table and test this algorithm. As Table 1 shows, the 1Q algorithm is about 25 % faster than NQ. Even with this optimization, which is only possible for specific RDF databases, the computation time of the matrix is important: around 6 s on LUBM20 and 35 s on LUBM100. Despite this important computation time, the MBA approach can still be interesting as the matrix can be precomputed for usual failing queries identified with query logs. Moreover, the next experiment shows that MBA is faster than other algorithms for large queries even if the matrix is computed at runtime.

XSS and MFS Computation Time. We compare the performance of the following algorithms for computing the XSSs and MFSs of the benchmark queries.

-

LBA: the algorithm described in Sect. 3.

-

MBA+M: this algorithm first computes the relaxed matrix using Algorithm 4 for star-shaped queries and Algorithm 3 for other queries. Then, it computes XSSs and MFSs of the query with the LBA algorithm that uses the relaxed matrix instead of executing queries.

-

MBA-M: same as MBA+M but without the computation of the relaxed matrix.

-

DFS: a depth-first search algorithm of the subquery lattice that we modified to prune the search space when no more \(\mathtt {MFSs}\) and \(\mathtt {XSSs}\) can be found.

-

ISHMAEL: the algorithm proposed in [9] that we have tailored to return both the \(\mathtt {XSSs}\) and \(\mathtt {MFSs}\) of a query.

Figure 5 shows the performance of each algorithm displayed in logarithmic scale for readability. An algorithm that evaluates most of the subqueries such as \(\mathtt {DFS}\) can be used for queries with only a few triple patterns (Q1 and Q2). For larger queries, the number of subqueries exponentially increases and thus the performance of \(\mathtt {DFS}\) quickly decreases.

In this case, the smart exploration of the search space provided by the \(\mathtt {LBA}\) and \(\mathtt {ISHMAEL}\) algorithms is more efficient. Their response times are between 1 and 10 seconds for queries that do not have more than 11 triple patterns (Q1-Q5). The performance of \(\mathtt {LBA}\) and \(\mathtt {ISHMAEL}\) are close for queries Q1-Q5 and \(\mathtt {LBA}\) outperforms \(\mathtt {ISHMAEL}\) on Q6 and Q7 (recall that the results are presented in logarithmic scale). We have identified that the performance difference is due to the simplified computation of the potential \(\mathtt {XSSs}\) and to the order in which these potential \(\mathtt {XSSs}\) are evaluated. Indeed, according to this order, the caching performed by Jena TDB can be more or less efficient. For example, we have found some cases where the same query can be executed with a response time differing by a factor of 2 according to the caching usage. Thus, a perspective is to find the best ordering of the potential \(\mathtt {XSSs}\) to maximize the cache usage.

Finally, the \(\mathtt {MBA}\) approach can only be used for star-shaped queries. \(\mathtt {MBA-M}\) provides response times of some milliseconds even for Q7, which has 15 triple patterns. This is due to the fact that this approach just needs to compute the intersection of bitmaps using bitwise operations instead of executing subqueries. However, this approach makes a strong assumption: the matrix must be precomputed i.e., the query must have been identified as a usual failing query (e.g., using query logs). If the matrix is computed at runtime (\(\mathtt {MBA+M}\)), this computation time is important (see Table 1) and thus \(\mathtt {MBA+M}\) is only interesting for queries with a large number of triple patterns or with only selective triple patterns (they can be identified using database statistics). As a consequence, the \(\mathtt {MBA}\) approach is complementary with an approach such as \(\mathtt {LBA}\): it should be used when \(\mathtt {LBA}\) does not scale anymore.

Performance as the Number of Triple Patterns Scales. The previous experiments show that the number of triple patterns plays an important role in the performance of the proposed algorithms. In order to explore this further, we have decomposed Q7 in 15 subqueries ranging from 1 to 15 triple patterns. The first subquery only includes the first triple pattern of Q7, the second subquery includes the first two triple patterns and so on. The result of this experiment is shown in Fig. 6. This experiment confirms our previous observation. \(\mathtt {DFS}\) does not scale when a query exceeds 5 triple patterns. \(\mathtt {LBA}\) and ISHMAEL can be used with a response time between 1 and 10 s for queries with less than 13 triple patterns. The \(\mathtt {MBA-M}\) scales well for star queries even with a large number of triple patterns. The \(\mathtt {MBA+M}\) is only interesting when the query has more than 13 triple patterns as the cost of computing the matrix is important.

6 Related Work

We review here the closest works related to our proposal done both in the context of \(\mathtt {RDF}\) and relational databases. In the first setting, Hurtado et al. [5] proposed some rules and operators for relaxing RDF queries. Adding to these rules, Huang et al. [6] specified a method for relaxing \(\mathtt {SPARQL}\) queries using a semantic similarity measure based on statistics. In our previous work [7], we have proposed a set of primitive relaxation operators and have shown how these operators can be integrated in \(\mathtt {SPARQL}\) in a simple or combined way. Cali et al. [8] have also extended a fragment of this language with query approximation and relaxation operators. As an alternative to query relaxation, there have been works on query auto-completion (e.g., [17]), which check the data during query formulation to avoid empty answers. But, none of the previous works has considered the issue related to the causes of \(\mathtt {RDF}\) query failure and then the issue of \(\mathtt {MFS}\) computation.

As for relational databases, many works have been proposed for query relaxation (see Bosc et al. [18] for an overview). In particular, Godfrey [9] has defined the algorithmic complexity of the problem of identifying the \(\mathtt {MFS}\)s of failing relational query and developed the \(\mathtt {ISHMAEL}\) algorithm for retrieving them. The \(\mathtt {LBA}\) approach is inspired by this algorithm. Compared with \(\mathtt {ISHMAEL}\), \(\mathtt {LBA}\) computes both the \(\mathtt {MFSs}\) and the potential \(\mathtt {XSSs}\) in one time. Moreover, \(\mathtt {LBA}\) proposes a simplified computation of the potential XSSs. Bosc et al. [18] and Pivert et al. [19] extended Godfrey’s approach to the fuzzy query context. Jannach [10] studied the concept of \(\mathtt {MFS}\) in the recommendation system setting. The \(\mathtt {MBA}\) approach is inspired by this approach. Contrary to [10], the computation of the matrix rows is not straightforward in the context of \(\mathtt {RDF}\) queries. Moreover, in [10], the matrix is only used to retrieve the \(\mathtt {XSSs}\) of the query while, in our work, we used and stored this matrix as a bitmap index to improve the performance of \(\mathtt {LBA}\).

7 Conclusion and Discussion

In this paper we have proposed two approaches to efficiently compute the \(\mathtt {MFSs}\) and \(\mathtt {XSSs}\) of an \(\mathtt {RDF}\) query. The first approach, called \(\mathtt {LBA}\), is a smart exploration of the subquery lattice of the failing query that leverages the properties of \(\mathtt {MFS}\) and \(\mathtt {XSS}\). The second approach, called \(\mathtt {MBA}\), is based on the precomputation of a matrix, which records, for each potential solution of the query, the set of triple patterns that it satisfies. The \(\mathtt {XSSs}\) of a query can be found without any database access by computing the skyline of this matrix. Interestingly, this matrix looks like a bitmap index and can also improve the performance of the \(\mathtt {LBA}\) algorithm. We have done a complete implementation of our propositions and evaluated their performances on two datasets generated with the \(\mathtt {LUBM}\) benchmark. While a straightforward algorithm does not scale for queries with more than 5 triple patterns, the \(\mathtt {LBA}\) approach scales up to approximatively 11 triple patterns in our experiments. The \(\mathtt {MBA}\) approach is only interesting for star-shaped queries. If the matrix is precomputed, which assumes that the query has been identified as a usual failing query, \(\mathtt {XSSs}\) and \(\mathtt {MFSs}\) can be found in some milliseconds even for queries with many triple patterns (a maximum of 15 in our experiments). If the matrix is computed at runtime, this approach can still be interesting for large queries as the cost of computing the matrix becomes acceptable in comparison with the optimization of \(\mathtt {LBA}\) it permits. Optimizing the \(\mathtt {MBA}\) approach for other kinds of \(\mathtt {RDF}\) query is part of our future work. We also plan to define query relaxation strategies based on the \(\mathtt {MFSs}\) and \(\mathtt {XSSs}\) of a failing RDF query.

Notes

- 1.

The \(coalesce\) function returns the first non-null expression in the list of parameters.

- 2.

References

Wilkinson, K.: Jena property table implementation. In: SSWS (2006)

Dolog, P., Stuckenschmidt, H., Wache, H., Diederich, J.: Relaxing RDF queries based on user and domain preferences. IJIIS 33(3), 239–260 (2009)

Elbassuoni, S., Ramanath, M., Weikum, G.: Query relaxation for entity-relationship search. In: Antoniou, G., Grobelnik, M., Simperl, E., Parsia, B., Plexousakis, D., De Leenheer, P., Pan, J. (eds.) ESWC 2011, Part II. LNCS, vol. 6644, pp. 62–76. Springer, Heidelberg (2011)

Hogan, A., Mellotte, M., Powell, G., Stampouli, D.: Towards fuzzy query-relaxation for RDF. In: Simperl, E., Cimiano, P., Polleres, A., Corcho, O., Presutti, V. (eds.) ESWC 2012. LNCS, vol. 7295, pp. 687–702. Springer, Heidelberg (2012)

Hurtado, C.A., Poulovassilis, A., Wood, P.T.: Query relaxation in RDF. In: Spaccapietra, S. (ed.) Journal on Data Semantics X. LNCS, vol. 4900, pp. 31–61. Springer, Heidelberg (2008)

Huang, H., Liu, C., Zhou, X.: Approximating query answering on RDF databases. J. World Wide Web 15(1), 89–114 (2012)

Fokou, G., Jean, S., Hadjali, A.: Endowing semantic query languages with advanced relaxation capabilities. In: Andreasen, T., Christiansen, H., Cubero, J.-C., Raś, Z.W. (eds.) ISMIS 2014. LNCS, vol. 8502, pp. 512–517. Springer, Heidelberg (2014)

Calí, A., Frosini, R., Poulovassilis, A., Wood, P.T.: Flexible querying for SPARQL. In: Meersman, R., Panetto, H., Dillon, T., Missikoff, M., Liu, L., Pastor, O., Cuzzocrea, A., Sellis, T. (eds.) OTM 2014. LNCS, vol. 8841, pp. 473–490. Springer, Heidelberg (2014)

Godfrey, P.: Minimization in cooperative response to failing database queries. Int. J. Coop. Inf. Syst. 6(2), 95–149 (1997)

Jannach, D.: Fast computation of query relaxations for knowledge-based recommenders. AI Commun. 22(4), 235–248 (2009)

Pérez, J., Arenas, M., Gutierrez, C.: Semantics and complexity of SPARQL. ACM Trans. Database Syst. 34(3), 16:1–16:45 (2009)

Galindo-Legaria, C.A.: Algebraic optimization of outerjoin queries. Ph.D thesis, Harvard University, Technical report TR-12-92 (1992)

Arias, M., Fernández, J.D., Martínez-Prieto, M.A., de la Fuente, P.: An empirical study of real-world SPARQL queries. In: USEWOD (2011)

Cyganiak, R.: A relational algebra for sparql. HP-Labs, HPL-2005-170 (2005)

Hose, K., Vlachou, A.: A survey of skyline processing in highly distributed environments. VLDB J. 21(3), 359–384 (2012)

Chambi, S., Lemire, D., Kaser, O., Godin, R.: Better bitmap performance with roaring bitmaps (2014). arXiv preprint arXiv:1402.6407

Campinas, S.: Live SPARQL auto-completion. In: ISWC 2014 (Posters & Demos), pp. 477–480 (2014)

Bosc, P., Hadjali, A., Pivert, O.: Incremental controlled relaxation of failing flexible queries. JIIS 33(3), 261–283 (2009)

Pivert, O., Smits, G., Hadjali, A., Jaudoin, H.: Efficient detection of minimal failing subqueries in a fuzzy querying context. In: Eder, J., Bielikova, M., Tjoa, A.M. (eds.) ADBIS 2011. LNCS, vol. 6909, pp. 243–256. Springer, Heidelberg (2011)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Fokou, G., Jean, S., Hadjali, A., Baron, M. (2015). Cooperative Techniques for SPARQL Query Relaxation in RDF Databases. In: Gandon, F., Sabou, M., Sack, H., d’Amato, C., Cudré-Mauroux, P., Zimmermann, A. (eds) The Semantic Web. Latest Advances and New Domains. ESWC 2015. Lecture Notes in Computer Science(), vol 9088. Springer, Cham. https://doi.org/10.1007/978-3-319-18818-8_15

Download citation

DOI: https://doi.org/10.1007/978-3-319-18818-8_15

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-18817-1

Online ISBN: 978-3-319-18818-8

eBook Packages: Computer ScienceComputer Science (R0)