Abstract

Geoscientific data analysis faces challenges regarding seamless data analysis chains, the reuse of methods and tools, interdisciplinary approaches and digitalization. Computer science and data science offer concepts to address these challenges. We took the concepts of scientific workflows and component-based software engineering and adapted them to the field of geoscience. In close collaboration between computer scientists and geoscientists, we set up a practical approach and technology to develop and implement scientific workflows on a conceptual and digital level. We applied the approach in the showcase “Cross-disciplinary Investigation of Flood Events” to introduce and prove the concepts in our geoscientific work environment and to assess how the approach tackles the posed challenges. This is demonstrated using the example of the Flood Event Explorer, which has been developed in Digital Earth.


5.1 Challenges and Needs

The digitalization of science offers computer science and data science approaches that can improve scientific data analysis and exploration from several perspectives. Digital Earth applies and adapts the concepts of scientific workflows and component-based software engineering to address the following challenges and needs:

1. Scientific data analysis and exploration can be seen as a process in which scientists fulfil several analytical tasks with a variety of methods and tools. Currently, scientific data analysis is often characterized by performing the analytical tasks in single isolated steps with several isolated tools. Scientists therefore spend considerable effort moving data from one tool to another, integrating and analysing data from several sources, and combining several analysis methods. This isolated work environment hinders scientists from fully exploiting and analysing the available data. We need enhanced work environments that integrate methods and tools into seamless data analysis chains and that allow scientists to comprehensively analyse and explore the spatio-temporal, multivariate datasets from various sources that are common in geoscience.

2. Scientific data analysis and exploration often require specific, highly tailored methods and tools; many of them are developed by geoscientists themselves. Often these methods and tools can hardly be shared because they lack state-of-the-art concepts and techniques from computer science. As a consequence, the analysis methods and tools are not available to others and have to be reinvented again and again. This costs time and money that cannot be spent on scientific discovery. Therefore, a further requirement for advanced scientific data analysis is to facilitate the sharing and reuse of specific analytical methods and tools.

3. Scientific data analysis and exploration are increasingly embedded in an interdisciplinary context. Answering complex questions relevant to society, such as those concerning drivers and consequences of global change, sustainable use of resources, or causes and impacts of natural hazards, requires integrating knowledge from different scientific communities. Data from various sources have to be integrated, but the data analysis approaches themselves, which extract information from the data, also have to be linked across communities. This is similar to the concept of coupling physics-based models, which is a well-established method in geoscience to investigate and understand related processes in the Earth system. Integrating the analytical approaches across disciplines requires efforts at two levels: integration on the technical, executable level and integration on the conceptual, scientific level. On the one hand, we need technical environments that facilitate the integration of methods and tools; on the other hand, we need suitable means to support the exchange of scientific knowledge. Such means have to make apparent the data analysis approaches of other scientific communities: their scientific objective, the data needed as input, the methods applied, and the results generated.

4. The transformation of science into digital science has been an ongoing process for many years. To get the best possible results out of this process, close collaboration between geoscientists and computer experts is needed. Geoscientists have to clarify and clearly communicate their scientific needs, and computer and data scientists have to understand these requirements and transform them into their own approaches and solutions. Suitable means are required to facilitate this transformation. This is especially true since the two disciplines have rather different working concepts: geoscientists mostly work in an application-oriented way, whereas computer scientists work on a more generic, formal and abstract basis.

The concepts of scientific workflows and component-based software engineering provide a suitable frame to tackle these needs. Workflows have been applied in science for more than a decade; today, they extend to data-intensive workflows exploiting diverse data sources in distributed computing platforms (Atkinson et al. 2017). Component-based software engineering is a reuse-based approach to defining, implementing and composing loosely coupled, independent software components into larger software environments such as scientific workflows (McIlroy 1969; Heineman et al. 2001). We applied the concepts in a showcase, the cross-disciplinary investigation of flood events, to introduce and prove the concepts in our geoscientific work environment and to assess how the approaches can tackle the addressed challenges.

5.2 Scientific Workflows

5.2.1 The Concept of Scientific Workflows

The concept of a workflow was formally defined by the Workflow Management Coalition (WfMC) as “the computerized facilitation or automation of a business process, in whole or part” (Hollingsworth 1994). Workflows consist of a series of activities with input and output data and are directed towards a certain objective. Originally geared towards the description of business processes, workflows have been increasingly used to describe scientific experiments and data analysis processes (Cerezo et al. 2013). In the beginning, scientific workflows focused on authoring and adapting processing tasks for distributed high-performance computing. Today, they extend to data-intensive workflows exploiting rich and diverse data sources in distributed computing platforms (Atkinson et al. 2017).

Scientific workflows provide a systematic way of describing a data analysis with its analytical activities and the methods and data it needs. Cerezo et al. (2013) distinguish three abstraction levels of scientific workflow descriptions: the conceptual, abstract and concrete levels (see Fig. 5.1). On the conceptual level, a workflow is described as a series of activities with input and output data in the language and concepts of the scientist; on the abstract level, the conceptual workflow is mapped to methods and tools that execute the activities; the concrete level provides an executable workflow within a concrete IT infrastructure.

Fig. 5.1 Three abstraction levels of scientific workflow descriptions (adapted from Cerezo et al. 2013)

The description of workflows on different abstraction levels provides a number of benefits. It enables scientists to document and communicate scientific approaches and knowledge creation processes in a structured way. It provides the interface between scientific approaches and computing infrastructures. Finally, it allows for the sharing, reuse and discovery of workflows or parts of them (Cerezo et al. 2013).

A recent review paper on the past, present and future of scientific workflows points out the strengths and needs of data-intensive scientific workflows: “With the dramatic increase of primary data volumes and diversity in every domain, workflows play an ever more significant role, enabling researchers to formulate processing and analysis methods to extract latent information from multiple data sources and to exploit a very broad range of data and computational platforms” (Atkinson et al. 2017, p. 2016).

Several scientific workflow systems have been developed to enable scientists to make use of these advantages. Examples are Galaxy, Kepler, Taverna and Pegasus [Workflow Systems 2021].

5.2.2 Scientific Workflows in Digital Earth

We predominantly applied the concept of workflows to structure and describe scientific data analysis approaches in a systematic way and to implement seamless data analysis chains as executable digital workflows. Our objective was not to develop or introduce big workflow engines to model and automatically create scientific workflows. Our focus was the elicitation and description of conceptual workflows and the development of digital workflows facilitating the integration of any data analysis method and tool.

We described and implemented scientific workflows according to the three abstraction levels shown in Fig. 5.1. We slightly adapted the second level to our needs. Since we do not apply workflow engines but manually transform and implement scientific workflows, we consider it as a digital implementation level. To implement the digital workflows, we developed the component-based Data Analytics Software Framework (DASF) (chapter 5.2.3). It facilitates the definition of reusable software components and integrates them into seamless digital workflows.

Our process of developing workflows covered the following steps. First, we created conceptual workflows. Several approaches exist to model and describe conceptual workflows, such as flowcharts, Petri nets, BPMN diagrams or UML diagrams (BPMN = Business Process Model and Notation, UML = Unified Modeling Language). We decided on flowcharts since geoscientists are familiar with this straightforward type of presentation. The conceptual workflows are clearly structured records of scientific approaches and knowledge. We used them for communication and discussion between geoscientists and to figure out how data analysis approaches can be integrated across disciplines. Conceptual workflow descriptions also served as a means of communication to bridge approaches from geoscience and computer science. To elicit the conceptual workflows, we conducted a structured task analysis to determine the scientists’ goals, the analytical tasks (which are the activities in a workflow) and the input/output data. Structured task analysis is a well-established approach in requirements analysis (Jonassen et al. 1998; Schraagen et al. 2000). Interviews, record keeping and activity sampling are methods to gather the required information; tables and diagrams are means to document the results. As an example, Fig. 5.2 shows the River Plume Workflow, one of the conceptual workflows we modelled and implemented for the Digital Earth Flood Event Explorer (Sect. 5.3).

In the next step, we transformed the conceptual workflow into a digital workflow. First, we mapped each analytical task of the conceptual workflow to a suitable method that fulfils the task. We documented this in a mapping list. Table 5.1 shows the mapped steps for the “DETECT” task of the River Plume Workflow. In order to address the goal of the task “detection of the river plume”, it is broken down into three subtasks. Each of the subtasks is mapped to a certain method, e.g. “Manually define the river plume extent” is mapped to “clicking a polygon on a map” with its corresponding input and output data. All methods defined by this mapping are then implemented as components of the digital workflow based on the Data Analytics Software Framework (DASF) (chapter 5.2.3). Methods that are implemented with the DASF can be shared and reused in other digital workflows. Finally, we deployed the digital workflows to the available infrastructure. In our Digital Earth project, the workflows of the Flood Event Explorer have been deployed to the distributed infrastructure of the various Helmholtz research centres. Figure 5.3 shows the concrete deployment of the River Plume Workflow.
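The mapping list described above can be pictured as a small data structure. The following Python sketch is an illustration only and not part of DASF: the class `MappingEntry`, the helper `unmapped_tasks`, the input/output names and the second subtask are hypothetical. Only the pairing of “Manually define the river plume extent” with “clicking a polygon on a map” is taken from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MappingEntry:
    """One row of a task-to-method mapping list (hypothetical structure)."""
    task: str            # analytical (sub)task from the conceptual workflow
    method: str          # method chosen to fulfil the task
    inputs: tuple = ()   # input data of the method
    outputs: tuple = ()  # output data of the method


def unmapped_tasks(conceptual_tasks, mapping):
    """Return the conceptual tasks that have no method assigned yet."""
    mapped = {entry.task for entry in mapping}
    return [task for task in conceptual_tasks if task not in mapped]


# The task/method pair is from the text; input and output names are assumed.
detect_mapping = [
    MappingEntry(
        task="Manually define the river plume extent",
        method="clicking a polygon on a map",
        inputs=("map view",),
        outputs=("plume polygon",),
    ),
]

conceptual_tasks = [
    "Manually define the river plume extent",
    "Hypothetical further subtask",  # placeholder, not taken from Table 5.1
]

# Tasks that still need a method before the digital workflow is complete.
missing = unmapped_tasks(conceptual_tasks, detect_mapping)
```

A check of this kind makes it visible when a conceptual task has not yet been assigned a method, which is the point at which the conceptual and the digital workflow would otherwise diverge.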

5.2.3 Digital Implementation of Scientific Workflows with the Component-Based Data Analytics Software Framework (DASF)

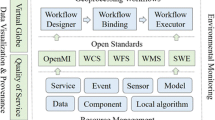

In the Digital Earth project, we decided not to apply existing workflow engines such as Galaxy, Kepler, Taverna and Pegasus [Workflow Systems 2021] to model and implement scientific workflows. Although these workflow systems are powerful tools, they have some shortcomings. The intellectual hurdles to be mastered when dealing with workflow systems are high, and the systems often do not offer the flexibility that is necessary for the highly exploratory data analysis workflows we have in science (Atkinson et al. 2017). For that reason, we decided to follow another approach that is less complex and more flexible. We developed the Data Analytics Software Framework (DASF) to manually implement digital workflows. DASF facilitates the integration of data analysis methods into seamless data analysis chains on the basis of the component-based software engineering paradigm, and it enables extensive data exploration by interactive visualization. This chapter describes the integration of data analysis methods; the data exploration by interactive visualization is presented in chapter 3.2 of this book.

The Digital Earth “Data Analytics Software Framework DASF” targets specific requirements of scientific data analysis workflows derived from the challenges formulated in chapter 5.1. These are the abilities to (a) deal with highly explorative scientific workflows, (b) define single data analysis methods and combine them into digital workflows, (c) reuse single methods and workflows or parts of them, (d) integrate already existing and established methods, tools and data into the workflows, (e) deal with heterogeneous software development and execution environments and (f) support parallel and distributed development and processing of methods and software.

In order to meet these requirements, we combined several approaches that are well established in computer science. The basic concept of our Data Analytics Software Framework (DASF) is the paradigm of “separation of concerns” (SoC) (Dijkstra 1982), which is also applied in many other fields. This paradigm is based on the idea that almost anything can be broken down into smaller pieces, each of which addresses a distinct concern. A concern in this scope can belong to any level of abstraction; it can be a single workflow component (from web services and resources to atomic functions), a workflow or a more complex linked workflow. Since this paradigm can cover any degree of complexity, it is one of the most important and fundamental principles in sustainable software engineering. One technique applying the SoC paradigm is component-based software engineering (CBSE) (McIlroy 1969; Heineman et al. 2001). An individual software component is a software package, a web service, a web resource or a module that encapsulates a set of related functions (or data); each component provides interfaces through which it can be used. CBSE is a reuse-based approach to defining, implementing and composing loosely coupled, independent components into systems.

We adapted the CBSE concept to the specific needs of data analysis and developed the Digital Earth Data Analytics Software Framework (DASF). DASF supports defining single data analysis components and connecting them to form scientific workflows. As described above, the concept of a component can be applied to any degree of abstraction. Within DASF, we define six levels of abstraction, as shown in Fig. 5.4. They are, from bottom to top, methods, packages, modules, workflows, coupled workflows and applications. This means that everything is considered a component, and each component uses or accesses various other components from the levels below, as indicated by the dashed lines. The usage of individual components is not exclusive, meaning that a component can be used by multiple other components from higher levels; for example, Module X and Module Y both use Package A.
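The layering rule described above (a component may use components from lower levels, and one component may be shared by several higher-level ones) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not DASF code: the class `Component`, its `use` method and the helper `users_of` are hypothetical names; only the six level names and the Package A / Module X / Module Y example come from the text.

```python
from dataclasses import dataclass, field

# The six abstraction levels named in the text, ordered from bottom to top.
LEVELS = ["method", "package", "module", "workflow", "coupled workflow", "application"]


@dataclass
class Component:
    """Hypothetical stand-in for any component (not a DASF class)."""
    name: str
    level: str
    uses: list = field(default_factory=list)

    def use(self, other):
        """Register a dependency; only components from lower levels are allowed."""
        if LEVELS.index(other.level) >= LEVELS.index(self.level):
            raise ValueError(
                f"{self.name} ({self.level}) cannot use {other.name} ({other.level})"
            )
        self.uses.append(other)
        return self


def users_of(target, components):
    """Names of all components that directly use the target component."""
    return [c.name for c in components if target in c.uses]


# Usage is not exclusive: two modules share the same package.
package_a = Component("Package A", "package")
module_x = Component("Module X", "module").use(package_a)
module_y = Component("Module Y", "module").use(package_a)

shared_by = users_of(package_a, [package_a, module_x, module_y])
```

Here `shared_by` lists both modules, while an attempt such as `package_a.use(module_x)` raises a `ValueError` because it would point upwards in the hierarchy.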

An implementation of this general component-based approach is presented in Fig. 5.5. It shows the components involved in the Flood Similarity Workflow as part of the Flood Event Explorer application. The workflow allows the comparison of flood events on the basis of several flood indicators (chapter 5.3.3). On the bottom level, several methods for flood event extraction, event indicator calculation and statistical analysis are implemented; they are combined into packages, and the packages are wrapped into modules, which are finally linked by the workflow “Flood Similarity”. The Flood Similarity Workflow can also be coupled with other workflows, such as the Climate Change Workflow in this example. The coupled workflows allow for answering the more complex scientific question “How could hydro-meteorological controls of flood events develop under projected climate change?” (chapter 5.3.3).

Since all components are independent entities, we need to provide one or several techniques for combining and integrating these components (implementing the dashed lines from Figs. 5.4 and 5.5). For this, we rely on different techniques depending on the abstraction level of the component. The main focus of DASF is the combination of methods to create an integrated workflow. In order to provide standardized access to any kind of method implementation, we introduce a module layer that wraps packages with their methods. These “wrapper” modules provide a remote procedure call (RPC, White 1976) protocol implementation, harmonizing the access to individual methods across platforms and programming languages. Once the needed methods are provided via individual RPC modules, a workflow can integrate them by connecting their corresponding inputs and outputs. For the other abstraction levels, we rely on programming-language-specific integration techniques, such as object-oriented programming. Figure 5.6 shows a comprehensive example of a workflow connecting various methods via RPC modules. Step 1 of the workflow involves the connection of Methods I and II, which are provided by Module X, while Steps 2 and 3 are covered by Methods III and IV, respectively. The individual modules expose the methods only through the DASF RPC protocol, so the input and output data of the combined methods are never passed directly from one method to another but always through a connecting mediator.
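The idea of wrapper modules and a connecting mediator can be illustrated with a self-contained Python sketch. It does not use or reproduce the DASF RPC protocol: `RpcModule`, `Mediator`, the JSON message layout and the two example methods are assumptions made for this illustration. What it shows is the principle from the text, namely that methods are reachable only through serialized requests and that data always travels via the mediator.

```python
import json


class RpcModule:
    """Hypothetical wrapper: exposes plain functions only via JSON requests."""

    def __init__(self, name):
        self.name = name
        self._methods = {}

    def expose(self, func):
        """Decorator that registers a function under its own name."""
        self._methods[func.__name__] = func
        return func

    def handle(self, request_json):
        """Decode a request, run the method and return a JSON response."""
        request = json.loads(request_json)
        result = self._methods[request["method"]](**request["params"])
        return json.dumps({"result": result})


class Mediator:
    """Hypothetical mediator: the only path between a workflow and modules."""

    def __init__(self):
        self._modules = {}

    def register(self, module):
        self._modules[module.name] = module

    def call(self, module_name, method, **params):
        request = json.dumps({"method": method, "params": params})
        response = self._modules[module_name].handle(request)
        return json.loads(response)["result"]


module_x = RpcModule("module_x")


@module_x.expose
def method_one(values):
    """Illustrative method: drop missing values."""
    return [v for v in values if v is not None]


@module_x.expose
def method_two(values):
    """Illustrative method: arithmetic mean."""
    return sum(values) / len(values)


mediator = Mediator()
mediator.register(module_x)

# Workflow step: the output of method_one is passed on through the mediator.
cleaned = mediator.call("module_x", "method_one", values=[1.0, None, 3.0])
mean_value = mediator.call("module_x", "method_two", values=cleaned)
```

Because every call is serialized, the calling workflow does not need to know in which language or on which machine a method is implemented, which is the property the module layer is meant to provide.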

As discussed above, the DASF RPC approach relies on an appropriate mediator entity passing the actual data between different modules and their exposed methods. In order to support heterogeneous and distributed deployment environments, our RPC communication approach uses a publish-subscribe message broker as the mediator. Within DASF, we use Apache Pulsar [Pulsar 2021] as a ready-to-use message broker implementation. The RPC/message broker approach also provides flexibility when it comes to deploying and executing individual components on different IT platforms and systems and to executing an individual component in a distributed IT environment, in our case various Helmholtz centres (compare Fig. 5.3). It also supports the reusability and integration of new and existing distributed components and facilitates the collaborative development of several methods at the same time without mutual interference; the “clear separation of concerns” allows for the design and implementation of any number of components in parallel.
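The publish-subscribe pattern used for the mediator can be sketched without any external service. The following Python example is a toy in-memory broker written for illustration; it is not Apache Pulsar and does not use the Pulsar client API. The class `InMemoryBroker`, the topic names and the message fields (`id`, `reply_to`, `discharge`) are assumptions.

```python
from collections import defaultdict, deque


class InMemoryBroker:
    """Toy publish-subscribe broker (illustration only, not Apache Pulsar)."""

    def __init__(self):
        self._subscribers = defaultdict(list)
        self._queue = deque()

    def subscribe(self, topic, callback):
        """Register a callback that receives every message on the topic."""
        self._subscribers[topic].append(callback)

    def publish(self, topic, message):
        """Queue a message; the publisher does not know who consumes it."""
        self._queue.append((topic, message))

    def run(self):
        """Deliver queued messages until none are left."""
        while self._queue:
            topic, message = self._queue.popleft()
            for callback in self._subscribers[topic]:
                callback(message)


broker = InMemoryBroker()
responses = []


def backend_module(message):
    """Hypothetical backend: computes a peak value and replies on a topic."""
    reply = {"id": message["id"], "peak": max(message["discharge"])}
    broker.publish(message["reply_to"], reply)


# The backend listens on its request topic, the client on its reply topic.
broker.subscribe("flood-module", backend_module)
broker.subscribe("client-1-replies", responses.append)

broker.publish(
    "flood-module",
    {"id": 1, "reply_to": "client-1-replies", "discharge": [120.0, 340.5, 210.0]},
)
broker.run()
```

Client and backend only share topic names, never direct references to each other. In a real deployment the broker would be a network service, so the two sides could run at different centres and be developed independently.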

The presented CBSE approach implemented by DASF enables us to address the initially formulated requirements. In contrast to common workflow engines, it requires some additional effort to implement a workflow and its methods. Yet it provides the necessary flexibility for the highly exploratory data analysis workflows we have in science. In order to show the framework’s capabilities, we used it to implement the Digital Earth Flood Event Explorer, presented below. All DASF components (Eggert 2021) are registered in the corresponding language-specific package repositories, such as npm (e.g. https://www.npmjs.com/package/dasf-web) and PyPI (e.g. https://pypi.org/project/demessaging/). The framework’s sources are available via GitLab (https://git.geomar.de/digital-earth/dasf) and licensed under the Apache-2.0 license.

5.3 The Digital Earth Flood Event Explorer—A Showcase for Data Analysis and Exploration with Scientific Workflows

5.3.1 The Showcase Setting

We applied the concept of scientific workflows and the component-based Data Analytics Software Framework (DASF) to an exemplary showcase, the Digital Earth Flood Event Explorer (Eggert et al. 2022). The Flood Event Explorer is intended to support geoscientists and experts in analysing flood events along the process cascade of event generation, evolution and impact across atmospheric, terrestrial and marine disciplines. It aims at answering the following geoscientific questions:

- How does precipitation change over the course of the twenty-first century under different climate scenarios over a certain region?
- What are the main hydro-meteorological controls of a specific flood event?
- What are useful indicators to assess socio-economic flood impacts?
- How do flood events impact the marine environment?
- What are the best monitoring sites for upcoming flood events?

Our aim was to develop scientific workflows providing enhanced analysis methods from statistics, machine learning and visual data exploration that are implemented in different languages and software environments, and that access data from a variety of distributed databases. Within the showcase, we wanted to investigate how the concept of scientific workflows and component-based software engineering can be adapted to a “real-world” setting and what the benefits and limitations are.

We chose the Elbe River in Germany as a concrete test site since data are available for several severe and less severe flood events in this catchment. The collaborating scientists are from different Helmholtz research centres and belong to different scientific fields such as hydrology, climate, marine, and environmental science, and computer science and data science.

5.3.2 Developing and Implementing Scientific Workflows for the Flood Event Explorer

We developed and implemented scientific workflows for each question that has to be answered within the Flood Event Explorer; Fig. 5.7 gives an overview of the workflows.

We established interdisciplinary teams of geoscientists and computer scientists to provide all the expertise that is needed. The development and implementation of the scientific workflows was an iterative co-design process. Geoscientists developed the conceptual workflows. They had to deal with the following issues: What analytical approaches are suitable to answer the geoscientific questions? What data, analysis tasks and methods are needed? What enhanced methods can improve traditional analysis approaches? The conceptual workflows are documented in flowcharts; chapter 5.3.3 gives an overview of them. Computer scientists contributed their particular expertise: What approaches are suitable to integrate the analysis methods? How can the highly interactive exploration of data and results be realized? How can the methods be made reusable? They developed the Data Analytics Software Framework (DASF) with its integration and visualization modules (chapters 5.2.3 and 3.2) to implement exploratory scientific workflows. They also transformed the conceptual workflows into digital ones.

Additionally, geo- and data scientists developed and implemented several enhanced analysis methods as part of the workflows that go beyond traditional analysis approaches. They are described in this book in chapters 3.2 and 4.5.1.

A further idea we followed in the showcase was to combine single workflows to answer more complex geoscientific questions within the Flood Event Explorer. By coupling data and methods of single analysis workflows into a larger data analysis chain—a combined workflow—we want to integrate knowledge from different scientific communities to give answers to more complex questions that go beyond single perspectives. Combined workflows have the potential to facilitate a more comprehensive view to processes in the Earth system, in our case flood events. Based on the conceptual workflow descriptions, we identified the following three complex questions that can be answered by combining single workflows:

- How could hydro-meteorological controls of flood events develop under projected climate change?
- Can large-scale flood events be detected as exceptional nutrient inputs that lead to algae blooms in the North Sea?
- How should an optimal future groundwater monitoring network be designed by incorporating future climate scenarios?

The flowcharts presented at the end of chapter 5.3.3 show the combination of single workflows to give answers to the questions.

5.3.3 The Workflows of the Flood Event Explorer

This chapter gives a brief overview of the workflows we have developed for the Flood Event Explorer. The first part presents the workflows shown in Fig. 5.7 with (a) a short textual description of each workflow’s objective, functionality and added value, (b) the flowcharts of the conceptual workflows and (c) the visual user interface of the digital workflows. The second part introduces the combined workflows that allow us to answer more complex geoscientific questions.

Access to all workflows as well as their documentation, additional media and references is provided via a mutual landing page (http://rz-vm154.gfz-potsdam.de:8080/de-flood-event-explorer/). The landing page provides an interactive overview of the environmental compartments along the process chain of flood events and the involved workflows.

5.3.3.1 The Climate Change Workflow

The goal of the Climate Change Workflow (Fig. 5.8, Fig. 5.9) is to support the analysis of climate-driven changes in flood-generating climate variables, such as precipitation or soil moisture, using regional climate model simulations from the Earth System Grid Federation (ESGF) data archive. It supports answering the geoscientific question “How does precipitation change over the course of the 21st century under different climate scenarios, compared to a 30-year reference period over a certain region?”

Extracting locally relevant data over a region of interest (ROI) requires climate expert knowledge and data processing training to correctly process large ensembles of climate model simulations; the Climate Change Workflow tackles this problem. It helps scientists define the regions of interest, customize their ensembles from the climate model simulations available on the Earth System Grid Federation (ESGF) and define variables of interest and relevant time ranges.

The Climate Change Workflow provides: (1) a weighted mask of the ROI; (2) weighted climate data of the ROI; (3) time series evolution of the climate over the ROI for each ensemble member; (4) ensemble statistics of the projected change; and lastly, (5) an interactive visualization of the region’s precipitation change projected by the ensemble of selected climate model simulations for different Representative Concentration Pathways (RCPs). The visualization includes the temporal evolution of precipitation change over the course of the twenty-first century and statistical characteristics of the ensembles for two selected 30-year time periods for the mid- and the end of the twenty-first century (e.g. median and various percentiles).
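The chain from weighted mask to ensemble statistics can be made concrete with a small numeric sketch in Python. It is not the workflow’s implementation: the function names, the three-cell mask, the ensemble member names and all precipitation values are invented for illustration. It shows one plausible reading of outputs (1) to (4): a mask weights each grid cell by its share inside the ROI, the weighted mean is computed per ensemble member, and the change relative to the reference period is summarized across the ensemble.

```python
from statistics import median


def weighted_mean(values, weights):
    """Area-weighted mean over grid cells; weights come from the ROI mask."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


def relative_change(future, reference):
    """Change of the future period relative to the reference period, in percent."""
    return 100.0 * (future - reference) / reference


# Hypothetical weighted mask: fraction of each grid cell inside the ROI.
roi_mask = [1.0, 0.5, 0.0]

# Hypothetical 30-year mean precipitation per grid cell (mm/year).
ensemble = {
    "member_a": {"reference": [800.0, 900.0, 700.0], "future": [880.0, 990.0, 700.0]},
    "member_b": {"reference": [800.0, 900.0, 650.0], "future": [760.0, 855.0, 900.0]},
    "member_c": {"reference": [1000.0, 1000.0, 1000.0], "future": [1200.0, 1200.0, 0.0]},
}

# Projected change of the ROI mean for each ensemble member.
changes = {
    name: relative_change(
        weighted_mean(data["future"], roi_mask),
        weighted_mean(data["reference"], roi_mask),
    )
    for name, data in ensemble.items()
}

# Simple ensemble statistics of the projected change.
summary = {
    "min": min(changes.values()),
    "median": median(changes.values()),
    "max": max(changes.values()),
}
```

The third grid cell has weight 0 and therefore does not influence any result, which is how the mask restricts the analysis to the region of interest.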

The added value of the Climate Change Workflow is threefold. First, it reduces the number of different software programs necessary to extract locally relevant data. Second, the intuitive generation of and access to the weighted mask allow for the further development of locally relevant climate indices. Third, by allowing access to the locally relevant data at different stages of the data processing chain, scientists can work with a vastly reduced data volume, which allows a greater number of climate model ensembles to be studied and translates into greater scientific robustness. Thus, the Climate Change Workflow provides much easier access to an ensemble of high-resolution simulations of precipitation over a given ROI, presents the region’s projected precipitation change using standardized approaches, and supports the development of additional locally relevant climate indices.

5.3.3.1.1 Additional Climate Change Workflow Media

Workflow-In-Action Video:

Conceptual workflow description Chart:

http://rz-vm154.gfz-potsdam.de:8080/de-flood-event-explorer/images/1_climate_change_flowchart.svg

Source Code Repository:

https://git.geomar.de/digital-earth/flood-event-explorer/fee-climate-change-workflow

Registered Software DOIs:

de-esgf-download (https://doi.org/10.5281/zenodo.5793278)

de-climate-change-analysis (https://doi.org/10.5281/zenodo.5833043)

Digital Earth Climate Change Backend Module (https://doi.org/10.5281/zenodo.5833258)

The Climate Change Workflow (https://doi.org/10.5880/GFZ.1.4.2022.003)

Accessible via the Flood Event Explorer Landing Page:

http://rz-vm154.gfz-potsdam.de:8080/de-flood-event-explorer/

5.3.3.2 The Flood Similarity Workflow

River floods and associated adverse consequences are caused by complex interactions of hydro-meteorological and socio-economic preconditions and event characteristics. The Flood Similarity Workflow (Fig. 5.10, Fig. 5.11) supports the identification, assessment and comparison of hydro-meteorological controls of flood events.

The analysis of flood events requires the exploration of discharge time series data for hundreds of gauging stations and their auxiliary data. Data availability, data accessibility and standard processing techniques are common challenges in this application and are addressed by this workflow.

The Flood Similarity Workflow allows the assessment and comparison of arbitrary flood events. The workflow includes around 500 gauging stations in Germany comprising discharge data and the associated extreme value statistics as well as precipitation and soil moisture data. This provides the basis to identify and compare flood events based on antecedent catchment conditions, catchment precipitation, discharge hydrographs and inundation maps. The workflow also enables the analysis of multidimensional flood characteristics including aggregated indicators (in space and time), spatial patterns and time series signatures.
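How a comparison of events on the basis of aggregated indicators might look can be sketched in Python. The text does not specify the similarity measure used by the workflow, so this example swaps in a simple one as an assumption: min-max scaling of each indicator followed by Euclidean distance. The event names, indicator names and all values are hypothetical.

```python
import math


def min_max_scale(events, key):
    """Scale one indicator to the range 0..1 across all events."""
    values = [event[key] for event in events.values()]
    low, high = min(values), max(values)
    span = high - low
    return {
        name: ((event[key] - low) / span if span else 0.0)
        for name, event in events.items()
    }


def most_similar(events, target, keys):
    """Return the event closest to the target in scaled indicator space."""
    scaled = {key: min_max_scale(events, key) for key in keys}

    def distance(name):
        return math.sqrt(
            sum((scaled[key][name] - scaled[key][target]) ** 2 for key in keys)
        )

    candidates = [name for name in events if name != target]
    return min(candidates, key=distance)


# Hypothetical aggregated indicators per flood event (illustrative values).
events = {
    "event_a": {"soil_moisture": 0.80, "precipitation_mm": 120.0, "peak_discharge_m3s": 3500.0},
    "event_b": {"soil_moisture": 0.75, "precipitation_mm": 110.0, "peak_discharge_m3s": 3300.0},
    "event_c": {"soil_moisture": 0.30, "precipitation_mm": 40.0, "peak_discharge_m3s": 1200.0},
}

indicators = ["soil_moisture", "precipitation_mm", "peak_discharge_m3s"]
closest = most_similar(events, "event_a", indicators)
```

Scaling before computing distances keeps an indicator with large numeric values, such as discharge, from dominating the comparison.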

The added value of the Flood Similarity Workflow comprises two major points. First, scientists work on a common, homogenized database of flood events and their hydro-meteorological controls for a large spatial and temporal domain, with fast and standardized interfaces to access the data. Second, the standardized computation of common flood indicators allows a consistent comparison and exploration of flood events.

5.3.3.2.1 Additional Flood Similarity Workflow Media

Workflow-In-Action Video:

Conceptual workflow description Chart:

http://rz-vm154.gfz-potsdam.de:8080/de-flood-event-explorer/images/2_flood_similarity_flowchart.svg

Source Code Repository:

https://git.geomar.de/digital-earth/flood-event-explorer/fee-flood-similarity-workflow

Registered Software DOIs:

Digital Earth Similarity Backend Module (https://doi.org/10.5281/zenodo.5801319)

The Flood Similarity Workflow (https://doi.org/10.5880/GFZ.1.4.2022.003)

Accessible via the Flood Event Explorer Landing Page:

http://rz-vm154.gfz-potsdam.de:8080/de-flood-event-explorer/

5.3.3.3 The Socio-Economic Flood Impacts Workflow

The Socio-Economic Flood Impacts Workflow (Fig. 5.12) aims to support the identification of relevant controls and useful indicators for the assessment of flood impacts. It supports answering the question “What are useful indicators to assess socio-economic flood impacts?”. Floods impact individuals and communities and may have significant social, economic and environmental consequences. These impacts result from the interplay of hazard (the meteo-hydrological processes leading to high water levels and inundation of usually dry land), exposure (the elements affected by flooding, such as people, the built environment or infrastructure) and vulnerability (the susceptibility of exposed elements to be harmed by flooding).

In view of the complex interactions of hazard and impact processes, a broad range of data from disparate sources needs to be compiled and analysed across the boundaries of climate and atmosphere, catchment and river network, and socio-economic domains. The workflow addresses this problem and supports scientists in integrating observations, model outputs and other datasets for further analysis in the region of interest.

The workflow provides functionalities to select the region of interest, access hazard, exposure and vulnerability-related data from different sources, identify flood periods as relevant time ranges, and calculate defined indices. The integrated input dataset is further filtered for the relevant flood event periods in the region of interest to obtain a new comprehensive flood dataset. This spatio-temporal dataset is analysed using data science methods such as clustering, classification or correlation algorithms to explore and identify useful indicators for flood impacts. For instance, the importance of different factors or the interrelationships amongst multiple variables that shape flood impacts can be explored.
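The filtering and analysis steps described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the workflow's implementation: the record layout, the field names (`precip_mm`, `loss_index`), the flood period and all values are hypothetical, and a plain Pearson correlation stands in for the data science methods mentioned.

```python
from datetime import date
from math import sqrt

# Hypothetical integrated dataset: one record per region and day (values invented).
records = [
    {"region": "A", "day": date(2013, 6, 1), "precip_mm": 40.0, "loss_index": 0.8},
    {"region": "A", "day": date(2013, 6, 2), "precip_mm": 55.0, "loss_index": 1.1},
    {"region": "A", "day": date(2013, 6, 3), "precip_mm": 70.0, "loss_index": 1.5},
    {"region": "A", "day": date(2013, 7, 20), "precip_mm": 5.0, "loss_index": 0.0},
    {"region": "B", "day": date(2013, 6, 2), "precip_mm": 60.0, "loss_index": 0.9},
]

# Hypothetical flood periods as (start, end) pairs, both ends inclusive.
flood_periods = [(date(2013, 5, 30), date(2013, 6, 10))]


def in_flood_period(day, periods):
    """Return True if the day falls inside any of the given periods."""
    return any(start <= day <= end for start, end in periods)


def filter_flood_records(rows, region, periods):
    """Keep only records of the chosen region that lie inside a flood period."""
    return [
        row for row in rows
        if row["region"] == region and in_flood_period(row["day"], periods)
    ]


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / sqrt(var_x * var_y)


flood_rows = filter_flood_records(records, "A", flood_periods)
r = pearson(
    [row["precip_mm"] for row in flood_rows],
    [row["loss_index"] for row in flood_rows],
)
print(len(flood_rows), round(r, 3))
```

A strong correlation in such a toy dataset says nothing about real flood impacts; the point is only the order of operations: integrate, filter by region and flood period, then analyse.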

The added value of the Socio-Economic Flood Impacts Workflow is twofold. First, it integrates scattered data from disparate sources and makes them accessible for further analysis. As such, the effort to compile, harmonize and combine a broad range of spatio-temporal data is clearly reduced. Also, the integration of new datasets from additional sources is much more straightforward. Second, it enables a flexible analysis of multivariate data, and by reusing algorithms from other workflows, it fosters more efficient scientific work that can focus on data analysis instead of tedious data wrangling.

5.3.3.3.1 Additional Socio-Economic Flood Impacts Workflow Media

Conceptual workflow description Chart:

http://rz-vm154.gfz-potsdam.de:8080/de-flood-event-explorer/images/4_impact_flowchart.svg

Source Code Repository:

https://git.geomar.de/digital-earth/flood-event-explorer/fee-socio-impact-workflow

Registered Software DOIs:

Digital Earth 'Controls and Indicators for Flood Impacts' Backend Module (https://doi.org/10.5281/zenodo.5801815)

The Socio-Economic Flood Impacts Workflow (https://doi.org/10.5880/GFZ.1.4.2022.005)

Accessible via the Flood Event Explorer Landing Page:

http://rz-vm154.gfz-potsdam.de:8080/de-flood-event-explorer/

5.3.3.4 The River Plume Workflow

The focus of the River Plume Workflow (Fig. 5.13, Fig. 5.14) is the impact of riverine flood events on the marine environment. At the end of a flood event chain, an unusual amount of nutrients and pollutants is washed into the North Sea, which can have consequences, such as increased algae blooms. The workflow aims to enable users to detect a river plume in the North Sea and to determine its spatio-temporal extent.

River plume candidates can be identified either manually in the visual interface (chapter 3.2) or through an automatic anomaly detection algorithm using Gaussian regression (chapter 4.5.1). In both cases, a combination of observational data, namely FerryBox transects and satellite data, and model data is used. Once a river plume candidate is found, a statistical analysis supplies additional detail on the anomaly and helps to compare the suspected river plume to the surrounding data. Simulated trajectories of particles starting on the FerryBox transect at the time of the original observation and modelled backwards and forwards in time help to verify the origin of the river plume and allow users to follow the anomaly across the North Sea. An interactive map enables users to load additional observational data into the workflow, such as ocean colour satellite maps, and provides them with an overview of the flood impacts and the river plume’s development on its way through the North Sea. In addition, the workflow offers the functionality to assemble satellite-based chlorophyll observations along model trajectories as time series. These time series allow scientists to understand processes inside the river plume and to determine the timescales on which these developments happen. For example, chlorophyll degradation rates in the Elbe River plume are currently being investigated using these time series.
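To illustrate what flagging a plume candidate along a transect can look like, the following sketch marks outliers in a series of measurements. It deliberately uses a simple robust z-score (median and median absolute deviation) as a stand-in, not the Gaussian regression used in the workflow; the choice of salinity as the variable, the values and the threshold are assumptions made for the example.

```python
from statistics import median


def robust_z_scores(values):
    """Modified z-scores based on the median and the median absolute deviation."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        # No spread around the median: nothing can be flagged.
        return [0.0 for _ in values]
    # 0.6745 rescales the MAD so the score is comparable to a standard z-score.
    return [0.6745 * (v - med) / mad for v in values]


def find_anomalies(values, threshold=3.5):
    """Return the indices whose robust z-score exceeds the threshold in magnitude."""
    scores = robust_z_scores(values)
    return [i for i, z in enumerate(scores) if abs(z) > threshold]


# Hypothetical salinity readings along one transect (invented values).
salinity = [33.1, 33.0, 33.2, 33.1, 29.5, 29.8, 33.0, 33.2, 33.1]
candidates = find_anomalies(salinity)
print(candidates)
```

In this toy series the two low readings stand out against an otherwise stable background. A real detection would also need to account for spatial trends along the transect, which is one reason a regression-based method is preferable to a global threshold.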

The workflow’s added value lies in the ease with which users can combine observational FerryBox data with relevant model data and other datasets of their choice. Furthermore, the workflow allows users to visually explore the combined data and contains methods to find and highlight anomalies. The workflow’s functionalities also enable users to map the spatio-temporal extent of the river plume and investigate the changes in productivity that occur in the plume. All in all, the River Plume Workflow simplifies the investigation and monitoring of flood events and their impacts in marine environments.

5.3.3.4.1 Additional River Plume Workflow Media

Conceptual workflow description Chart:

http://rz-vm154.gfz-potsdam.de:8080/de-flood-event-explorer/images/5_river_plume_flowchart.svg

Source Code Repository:

https://git.geomar.de/digital-earth/flood-event-explorer/fee-river-plume-workflow

Registered Software DOIs:

The River Plume Workflow (https://doi.org/10.5880/GFZ.1.4.2022.006)

Accessible via the Flood Event Explorer Landing Page:

http://rz-vm154.gfz-potsdam.de:8080/de-flood-event-explorer/

5.3.3.5 The Smart Monitoring Workflow

A deeper understanding of the Earth system as a whole and its interacting sub-systems depends not only on accurate mathematical approximations of the physical processes but also on the availability of environmental data across time and spatial scales. Even though advanced numerical simulations and satellite-based remote sensing in conjunction with sophisticated algorithms such as machine learning tools can provide 4D environmental datasets, local and mesoscale measurements continue to be the backbone in many disciplines such as hydrology. Considering the limitations of human and technical resources, monitoring strategies for these types of measurements should be well designed to increase the information gain provided. One helpful set of tools to address these tasks is data exploration frameworks providing qualified data from different sources and tailoring available computational and visual methods to explore and analyse multi-parameter datasets. In this context, we developed a Smart Monitoring Workflow (Fig. 5.15, Fig. 5.16) to determine the most suitable time and location for event-driven, ad hoc monitoring in hydrology using soil moisture measurements as our target variable.

Visual User Interface of the Smart Monitoring Workflow (from Nixdorf et al. 2022)

The Smart Monitoring Workflow (Nixdorf et al. 2022) consists of three main steps. The first step is the identification of the region of interest, either via user selection or via a recommendation based on spatial environmental parameters provided by the user. Statistical filters and different colour schemes can be applied to highlight different regions. The second step is accessing time-dependent environmental parameters (e.g. rainfall and soil moisture estimates of the recent past, weather predictions from numerical weather models and swath forecasts from Earth observation satellites) for the region of interest and visualizing the results. Lastly, a detailed assessment of the region of interest is conducted by applying filter and weight functions in combination with multiple linear regressions on selected input parameters. Depending on the measurement objective (e.g. highest/lowest values, highest/lowest change), the most suitable areas for monitoring are subsequently highlighted visually. In combination with the provided background map, an efficient route for monitoring can be planned directly in the exploration environment.
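The third step, combining filter and weight functions to highlight suitable areas, can be sketched as a weighted ranking. This is an illustrative simplification: the parameter names (`forecast_rain_mm`, `soil_moisture_change`), the weights, the filter threshold and the site values are hypothetical, and the multiple linear regression part of the assessment is omitted.

```python
def normalise(values):
    """Scale values linearly to the range 0..1 (all zeros if they are constant)."""
    low, high = min(values), max(values)
    if high == low:
        return [0.0 for _ in values]
    return [(v - low) / (high - low) for v in values]


def rank_sites(sites, weights, min_rain_mm=0.0):
    """Filter sites by forecast rainfall, then rank them by a weighted score."""
    candidates = [s for s in sites if s["forecast_rain_mm"] >= min_rain_mm]
    if not candidates:
        return []
    scores = [0.0 for _ in candidates]
    for key, weight in weights.items():
        scaled = normalise([s[key] for s in candidates])
        for i, value in enumerate(scaled):
            scores[i] += weight * value
    ranked = sorted(zip(candidates, scores), key=lambda pair: pair[1], reverse=True)
    return [(site["name"], round(score, 3)) for site, score in ranked]


# Hypothetical candidate areas with invented parameter values.
sites = [
    {"name": "site_1", "forecast_rain_mm": 2.0, "soil_moisture_change": 0.01},
    {"name": "site_2", "forecast_rain_mm": 18.0, "soil_moisture_change": 0.06},
    {"name": "site_3", "forecast_rain_mm": 25.0, "soil_moisture_change": 0.03},
]
# Hypothetical weights expressing a measurement objective of "highest change".
weights = {"forecast_rain_mm": 0.4, "soil_moisture_change": 0.6}

ranking = rank_sites(sites, weights, min_rain_mm=5.0)
print(ranking)
```

Changing the weights or the filter threshold corresponds to changing the measurement objective; the ranking then shifts without any change to the underlying data.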

The added value of the Smart Monitoring Workflow is multifold. The workflow gives the user a set of tools to visualize and process their data on a background map and in combination with data from public environmental datasets. For raster data from public databases, tailor-made routines are provided to access the data in the spatio-temporal limits required by the user. Aiming to facilitate the design of terrestrial monitoring campaigns, the platform- and device-independent approach of the workflow gives the user the flexibility to design a campaign at the desktop computer first and to refine it later in the field using mobile devices. In this context, the ability of the workflow to plot time series of forecast data for the region of interest empowers the user to react quickly to changing conditions, e.g. thunderstorm showers, by adapting the monitoring strategy if necessary.

Finally, the integrated routing algorithm helps to calculate the duration of a planned campaign as well as the optimal driving route between often scattered monitoring locations.
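The idea of estimating a visiting order and a campaign duration can be illustrated with a small sketch. It does not reproduce the workflow's routing algorithm: it uses great-circle distances instead of a road network and a greedy nearest-neighbour heuristic, which is not guaranteed to be optimal. The coordinates, the average speed and the time per stop are assumptions.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0


def haversine_km(a, b):
    """Great-circle distance in km between two (latitude, longitude) points in degrees."""
    lat1, lon1 = map(radians, a)
    lat2, lon2 = map(radians, b)
    h = sin((lat2 - lat1) / 2) ** 2 + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2
    return 2 * EARTH_RADIUS_KM * asin(sqrt(h))


def greedy_route(start, stops):
    """Visit the nearest unvisited stop next (heuristic, not an optimal tour)."""
    route = []
    remaining = dict(stops)
    here = start
    while remaining:
        name = min(remaining, key=lambda n: haversine_km(here, remaining[n]))
        here = remaining.pop(name)
        route.append(name)
    return route


def campaign_hours(start, stops, route, speed_kmh=50.0, minutes_per_stop=20.0):
    """Travel time along the route plus a fixed measurement time at each stop."""
    distance = 0.0
    here = start
    for name in route:
        distance += haversine_km(here, stops[name])
        here = stops[name]
    return distance / speed_kmh + len(route) * minutes_per_stop / 60.0


# Hypothetical starting point and monitoring locations (latitude, longitude).
start = (51.0, 12.0)
stops = {"north": (51.3, 12.0), "near": (51.05, 12.0), "mid": (51.15, 12.0)}

route = greedy_route(start, stops)
hours = campaign_hours(start, stops, route)
print(route, round(hours, 2))
```

For a handful of locations the greedy order is often reasonable, but a road-based router and a proper tour optimization would be needed for realistic campaign planning.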

5.3.3.5.1 Additional Smart Monitoring Workflow Media

Conceptual workflow description Chart:

http://rz-vm154.gfz-potsdam.de:8080/de-flood-event-explorer/images/3_smart_monitoring_flowchart.svg

Source Code Repository:

https://git.geomar.de/digital-earth/flood-event-explorer/fee-smart-monitoring-workflow

Registered Software DOIs:

Digital Earth Smart Monitoring Backend Module (Tocap) (https://doi.org/10.5281/zenodo.5824566)

The Smart Monitoring Workflow (Tocap) (https://doi.org/10.5880/GFZ.1.4.2022.004)

Accessible via the Flood Event Explorer Landing Page:

http://rz-vm154.gfz-potsdam.de:8080/de-flood-event-explorer/

5.3.3.6 Workflow Combinations

The combination of scientific workflows to foster data analysis and knowledge creation across disciplines was one idea we followed in Digital Earth. The following examples show how the workflows presented above can be combined to give answers to more complex questions.

5.3.3.6.1 Combination of the Flood Similarity Workflow and the Climate Change Workflow

A combination of the Flood Similarity Workflow and the Climate Change Workflow (Fig. 5.17) can give an answer to the question “How could hydro-meteorological controls of flood events develop under projected climate change?”. The Flood Similarity Workflow provides a set of flood indices that allow for a comparison of flood events. These indices describe the antecedent catchment soil moisture and catchment precipitation, amongst other properties. The Flood Similarity Workflow determines the flood indices for the historical and present period for a region of interest. The same flood indices are then calculated from the climate projections provided by the Climate Change Workflow. The Climate Change Workflow provides information on how the precipitation and soil moisture characteristics change under different future scenarios for the middle and end of the twenty-first century over a region of interest. The combined workflows show how controls of flood events, described by the indices, develop and how the probability of floods of a given magnitude changes over a certain region.

5.3.3.6.2 Combination of the Flood Similarity Workflow and the River Plume Workflow

Combining the Flood Similarity Workflow and the River Plume Workflow (Fig. 5.18) addresses the question of whether large-scale flood events can be detected as exceptional nutrient inputs into the North Sea that lead to algae blooms. In addition to the start and end dates of the flood event, the Flood Similarity Workflow provides characteristics like the return period and the total water volume during the event. Given the temporal and hydrological characteristics, the River Plume Workflow evaluates the impact of fluvial floods on water quality anomalies in the North Sea. The combination of both workflows allows investigating the connection between flood event indicators and the marine impacts caused by the flood event. Results from this analysis can be used to improve the classification of flood events in future.

5.3.3.6.3 Combination of the Climate Change and the Smart Monitoring Workflow

Groundwater monitoring stations are essential for protecting the groundwater from harmful pollutants and ensuring good water quality. As precipitation and temperature patterns change under different climate evolutions, so will groundwater recharge rates and quality. These changes need to be monitored adequately to ensure that countermeasures start on time and are efficient. Currently, groundwater monitoring wells are unevenly distributed across the German aquifer systems. By combining the Climate Change Workflow and the Smart Monitoring Workflow (Fig. 5.19), we can help to improve the design of the groundwater monitoring network that will be required in future under different climate projections.

The Climate Change Workflow provides information on precipitation changes over a region of interest based on regional climate model projections at a resolution of 144 km². These data help to assess where the largest climate-driven changes in groundwater resources are expected. Combined with the Smart Monitoring Workflow, they can help to determine whether a sufficient number of groundwater monitoring wells is installed and can further assist in determining suitable locations for the installation of new monitoring wells.

5.4 Assessment of the Scientific Workflow Concept

Our assessment of the concept of scientific workflows focuses on the challenges and needs we addressed in chapter 5.1. For each of these challenges, we present our experiences with the benefits, limitations and efforts of scientific workflows.

Challenge 1: Seamless data analysis chains to extensively analyse and explore spatio-temporal and multivariate data.

As we could show in our Flood Event Explorer, scientific workflows provide an analysis environment that integrates different analysis methods and tools into seamless data analysis chains and facilitates integration of data from various sources; the visual front ends of the digital workflows enable detailed exploration of the multivariate spatio-temporal data. This is a clear benefit. In more detail, our exemplary workflows of the Flood Event Explorer attain the following benefits:

- Reduction in the number of different software programs;
- Much easier access to data and ensembles of simulations;
- Ease with which users can combine datasets of their choice;
- Intuitive generation and access to spatial or temporal subsets of data allow for further analysis;
- By allowing access to spatial subsets of data (e.g. locally relevant data) at different stages of the data processing chain, scientists can work with a vastly reduced data volume allowing for a more comprehensive analysis (e.g. a greater number of model ensembles to be studied), which translates into greater scientific robustness.

Scientific workflows have proven to be helpful means to create improved work environments with seamless data analysis chains to extensively analyse and explore spatio-temporal and multivariate data.

Besides this benefit, scientific workflows have one major limitation: the effort required for their development and implementation. Both the use of workflow engines and the manual implementation of workflows need additional effort and expertise. The manual implementation we have chosen in the Digital Earth project requires knowledge of software engineering and consequently a range of expertise. Although our Data Analytics Software Framework (DASF) allows reuse of workflow components and thus eases the implementation process, a minimum of software development expertise is still required.

Another limitation of our scientific workflows is their predefined data analysis approach with a set of predefined analysis methods. Due to the component-based approach of our Data Analytics Software Framework (DASF), methods can be substituted easily; however, this also needs particular expertise in software development.

Challenge 2: Sharing and reuse of methods and software tools.

The systematic description of scientific workflows and their implementation with the Data Analytics Software Framework (DASF) enable sharing and reuse of all workflows and workflow components we have developed for the Flood Event Explorer. Several of our workflow components (data analysis and visualization methods) have already been reused in other projects without any difficulties, saving time and costs for reimplementation. Examples are the GFZ Earthquake Explorer (https://geofon.gfz-potsdam.de/eqexplorer/test/) and the Geochemical Explorer (http://rz-vm154.gfz-potsdam.de:8080/gcex/). The DASF also allowed reusing already existing data analysis software and integrating it into the workflows of the Flood Event Explorer independent of the programming language. A further benefit is the sharing of extensive analysis approaches with other scientists. Scientific workflows go beyond sharing single methods and tools; they implement whole analysis approaches and so support more standardized and comparable analyses and results. Examples within our Flood Event Explorer are the approaches to determine projected precipitation change of defined regions, to generate common flood indicators for a consistent comparison and exploration of flood events or to identify the best monitoring sites and time (chapter 5.3.3). Conceptual workflow descriptions can also be used as metadata providing suitable information for reuse and sharing.

The benefits that scientific workflows and the Data Analytics Software Framework (DASF) provide for sharing and reuse of single analysis methods and extensive analysis approaches require some additional effort and work. First, the RPC wrapper module, described in chapter 5.2.3, has to be added to the actual method. The effort to do so depends on the programming language used. In the case of the widely used Python programming language, we provide an easy-to-use annotation-based interface for this. Furthermore, a message broker instance, as described in chapter 5.2.3, has to be provided and maintained. Finally, the module wrapping the developed method has to be deployed on a suitable host. In addition to the technical effort, a shared, reusable module needs to be documented properly to ease the workload of the reusing party. In general, we consider reuse and sharing of methods on the module level. However, this always requires the use of the DASF in the reusing context. Since we applied CBSE on all levels of abstraction, the individual methods could also be reused directly, without the advantages and disadvantages of DASF.

Challenge 3: Communicate and combine scientific approaches across disciplines.

The conceptual workflows we described in flow charts are a systematic documentation of scientific knowledge and approaches; they show how scientists proceed to answer a geoscientific question. In the Digital Earth project, it became very clear that conceptual workflow descriptions can serve as significant means to understand approaches of other scientists and to discuss how various approaches can be combined. Examples in our Flood Event Explorer are the combined workflows presented in chapter 5.3.3. Once it is clear on the conceptual level how scientific approaches and workflows can be combined, they also can be linked on the implementation level for the digital workflows. The concept of scientific workflows supports linking approaches across disciplines on the conceptual as well as on the digital level.

Our experience also showed that describing conceptual scientific workflows is not an easy task for geoscientists. The systematic documentation of scientific approaches needs some experience. Scientists have to learn to represent their approaches in a systematic way either in flow charts or in other forms; this requires additional effort from geoscientists.

Challenge 4: Suitable interface between geo- and computer science.

The conceptual workflow descriptions largely support the communication between geo- and computer scientists; they are recognizable means of translation between the two worlds. The geo- and computer scientists collaborating in Digital Earth assessed the task analysis and flowcharts used to elicit and document the conceptual workflows as essential for collaboration. Geoscientists are forced to systematically capture their requirements and analytical tasks; computer scientists receive a sound basis to map analytical tasks to suitable methods that fulfil the task in the digital workflows.

To summarize, our experience in Digital Earth with adapting the concept of scientific workflows to the geosciences is as follows. The concept of scientific workflows provides a suitable frame to tackle the needs we have addressed for data analysis and exploration in Digital Earth: digital workflows enable seamless executable data analysis chains (challenge 1), and they also support sharing and reuse of data analysis methods and tools (challenge 2). Conceptual workflow descriptions are suitable means to exchange and combine scientific approaches and knowledge (challenge 3); they also serve as a suitable interface between approaches from geo- and computer science (challenge 4). To utilize this potential of workflows, some additional effort is necessary. First, geoscientists have to get used to thinking in workflows and gain skills and experience in describing conceptual scientific workflows. Second, state-of-the-art concepts from computer science are needed to develop and implement highly explorative digital scientific workflows. Third, close collaboration and co-design between geo- and computer scientists are required to develop suitable scientific workflows. This requires people who are willing and able to cross disciplinary borders and think outside the box. Developing such people was one aim and success of the Digital Earth project.

References

Atkinson M, Gesing S, Montagnat J, Taylor I (2017) Scientific workflows: past, present, future. Futur Gener Comput Syst 75:216–227

Cerezo N, Montagnat J, Blay-Fornarino M (2013) Computer-assisted scientific workflow design. J Grid Computing 11:585–612. https://doi.org/10.1007/s10723-013-9264-5

Dijkstra EW (1982) On the role of scientific thought. In: Selected writings on computing: a personal perspective. Springer-Verlag, New York, pp 60–66. ISBN 0-387-90652-5

Eggert D, Dransch D (2021) DASF: a data analytics software framework for distributed environments. GFZ Data Services. https://doi.org/10.5880/GFZ.1.4.2021.004

Eggert D, Rabe D, Dransch D, Lüdtke S, Schröter K, Nam C, Nixdorf E, Wichert V, Abraham N, Merz B (2022) Digital Earth Flood Event Explorer: a showcase for data analysis and exploration with scientific workflows. GFZ Data Services. https://doi.org/10.5880/GFZ.1.4.2022.001

Heineman GT, Councill WT (2001) Component-based software engineering: putting the pieces together. Addison-Wesley Professional, Reading. ISBN 0-201-70485-4

Hollingsworth D (1995) Workflow Management Coalition: the workflow reference model

Jonassen DH, Tessmer M, Hannum WH (eds) (1998) Task analysis methods for instructional design, Routledge, Taylor and Francis Group

McIlroy MD (1969) Mass produced software components. In: Software engineering: report of a conference sponsored by the NATO Science Committee, Garmisch, Germany, 7–11 October 1968. Scientific Affairs Division, NATO, p 79

Nixdorf E, Eggert D, Morstein P, Kalbacher T, Dransch D (2022) Tocap: a web tool for ad-hoc campaign planning in terrestrial hydrology. J Hydroinformatics. https://doi.org/10.2166/hydro.2022.057

Pulsar2021: https://pulsar.apache.org/

Schraagen JM, Chipman SF, Shalin VL (eds) (2000) Cognitive task analysis. Psychology Press

White JE (1976) RFC 707: a high-level framework for network-based resource sharing. In: Proceedings of the 1976 National Computer Conference

Workflow Systems (2021) https://galaxyproject.org/, https://kepler-project.org/, https://taverna.incubator.apache.org/, https://pegasus.isi.edu/

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2022 The Author(s)

About this chapter

Cite this chapter

Dransch, D. et al. (2022). Data Analysis and Exploration with Scientific Workflows. In: Bouwer, L.M., Dransch, D., Ruhnke, R., Rechid, D., Frickenhaus, S., Greinert, J. (eds) Integrating Data Science and Earth Science. SpringerBriefs in Earth System Sciences. Springer, Cham. https://doi.org/10.1007/978-3-030-99546-1_5

DOI: https://doi.org/10.1007/978-3-030-99546-1_5

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-99545-4

Online ISBN: 978-3-030-99546-1

eBook Packages: Earth and Environmental Science (R0)