Abstract

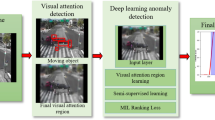

The volume of visual information captured by CCTV surveillance and body-worn cameras is continuously increasing. Such footage is often used for security purposes, such as recognising suspicious activities, including potential crime- and terrorism-related activities and violent behaviour. To this end, dedicated tools have been developed to give law enforcement better investigation capabilities and to improve their crime and terrorism detection and prevention strategies. This work proposes a novel framework for recognising abnormal activities, in which activities are recognised continuously in visual streams using state-of-the-art deep learning techniques. Specifically, the proposed method combines an adaptable (near) real-time image processing strategy with the widely used 3D convolution architecture. The framework is evaluated on VIRAT, a diverse, publicly available dataset for activity detection and recognition in outdoor environments. By combining non-batch image processing with the advantages of 3D convolution approaches, the proposed method achieves satisfactory results in recognising human-centred activities and vehicle actions in (near) real time.
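The abstract does not spell out how frames are fed to the network, so the following is only a minimal sketch of one common way to do non-batch, continuous recognition: keep a sliding window of the most recent frames and pass each full window (a clip) to a clip-level classifier. The names `stream_clips`, `recognise_stream` and `toy_classifier`, as well as the default clip length of 16 and stride of 8, are illustrative assumptions and are not taken from the chapter; `toy_classifier` is a stand-in for a trained 3D convolutional model.

```python
from collections import deque
from typing import Callable, Iterable, Iterator, List, Sequence, Tuple

# Stand-in for an image: a flat sequence of pixel values.
# A real frame would be a height x width x channels array.
Frame = Sequence[float]
Clip = List[Frame]


def stream_clips(
    frames: Iterable[Frame], clip_len: int, stride: int
) -> Iterator[Tuple[int, Clip]]:
    """Yield (index of last frame, clip) as frames arrive one at a time.

    A clip is emitted as soon as `clip_len` frames are buffered, and then
    again after every `stride` new frames, so the stream is never batched.
    """
    if clip_len < 1 or stride < 1:
        raise ValueError("clip_len and stride must be positive")
    window: deque = deque(maxlen=clip_len)  # oldest frame drops out automatically
    for i, frame in enumerate(frames):
        window.append(frame)
        window_is_full = len(window) == clip_len
        if window_is_full and (i - (clip_len - 1)) % stride == 0:
            yield i, list(window)


def toy_classifier(clip: Clip, threshold: float = 0.5) -> str:
    """Hypothetical placeholder for a 3D CNN: mean frame-to-frame change."""
    diffs = [
        abs(a - b)
        for prev, cur in zip(clip, clip[1:])
        for a, b in zip(prev, cur)
    ]
    score = sum(diffs) / len(diffs) if diffs else 0.0
    return "activity" if score > threshold else "background"


def recognise_stream(
    frames: Iterable[Frame],
    classify_clip: Callable[[Clip], str],
    clip_len: int = 16,  # assumed value, not from the chapter
    stride: int = 8,     # assumed value, not from the chapter
) -> Iterator[Tuple[int, str]]:
    """Label each clip of the stream as soon as it becomes available."""
    for last_index, clip in stream_clips(frames, clip_len, stride):
        yield last_index, classify_clip(clip)


if __name__ == "__main__":
    # Synthetic one-pixel "video" whose intensity changes on every frame.
    video = [[float(i)] for i in range(10)]
    for last_index, label in recognise_stream(video, toy_classifier, clip_len=4, stride=2):
        print(last_index, label)
```

In this sketch a smaller stride gives more frequent predictions at a higher compute cost, which is one way such a pipeline could be adapted towards (near) real-time operation.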

Acknowledgements

This research has received funding from the European Union’s H2020 research and innovation programme as part of the CONNEXIONs (H2020-786731) project.

Copyright information

© 2021 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Cite this chapter

Gkountakos, K., Ioannidis, K., Tsikrika, T., Vrochidis, S., Kompatsiaris, I. (2021). Visual Recognition of Abnormal Activities in Video Streams. In: Akhgar, B., Kavallieros, D., Sdongos, E. (eds) Technology Development for Security Practitioners. Security Informatics and Law Enforcement. Springer, Cham. https://doi.org/10.1007/978-3-030-69460-9_9

DOI: https://doi.org/10.1007/978-3-030-69460-9_9

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-69459-3

Online ISBN: 978-3-030-69460-9

eBook Packages: Engineering, Engineering (R0)