Abstract

This chapter introduces several state-of-the-art techniques that could help make deep underwater archaeological photogrammetric surveys easier, faster, and more accurate, and provide more visually appealing representations in 2D and 3D for both experts and the public. We detail how the captured 3D data is analysed and then represented using ontologies, and how this facilitates interdisciplinary interpretation and cooperation. Towards more automation, we present a new method that adopts a deep learning approach for the detection and recognition of objects of interest, amphorae for example. In order to provide more readable, direct and clearer illustrations, we describe several techniques, developed using neural networks, that generate different styles of sketches from orthophotos. In the same direction, we present the Non-Photorealistic Rendering (NPR) technique, which converts a 3D model into a more readable 2D representation that is easier to communicate and simplifies the identification of objects of interest. Regarding public dissemination, we demonstrate how recent advances in virtual reality provide an accurate, high-resolution, amusing and appropriate visualization tool that offers the public the possibility to ‘visit’ an unreachable archaeological site. Finally, we conclude by introducing the plenoptic approach, a promising new technology that could change the future of photogrammetry by making it easier and less time-consuming, and that allows a user to create a 3D model using only one camera shot. Here, we introduce the concepts, the development process, and some results that we obtained with underwater imaging.

9.1 Introduction

Archaeological excavations are irreversibly destructive, and it is thus important to accompany them with detailed, accurate, and relevant documentation, because what is left of a disturbed archaeological complex is the knowledge and record collected. This kind of documentation is mainly iconographic and textual. Reflecting on archaeological sites is almost always done using records such as drawings, sketches, photographs, maps and sections, topographies, photogrammetry, and a vast array of physical samples that are analyzed for their chemical, physical, and biological characteristics. These records are a core part of the archaeological survey and offer a context and a frame of reference within which artifacts can be analyzed, interpreted, and reconstructed. As noted by Buchsenschutz (2007, 5) in the introduction to the Symposium “Images et relevés archéologiques, de la preuve à la démonstration” in Arles in 2007, ‘Even when very accurate, drawings only retain certain observations to support a demonstration, just as a speech only retains certain arguments, but this selection is not generally explicit.’ This is the cornerstone of archaeological work: the survey is both a metric representation of the site and an interpretation of the site by the archaeologist.

In the last century, huge progress was made on collecting 3D data, and archaeologists adopted analogue and then digital photography as well as photogrammetry. Photogrammetry was first developed by A. Laussedat in 1849, and the first stereo plotter was built by C. Pulfrich in 1901 (Kraus 1997). Later came the beginning of underwater archaeological photogrammetry (Bass 1966) and finally dense 3D point cloud generation based on automatic homologous point description and matching (Lowe 1999). In a way, building a 3D facsimile of an archaeological site is not in itself a matter of research, even in an underwater context. Creation of a model does not solve the problem of producing a real survey and interpretation of the site according to a certain point of view: a teleological approach able to produce several graphical representations of the same site, according to the final goal of the survey. Indeed, the production of such a survey is a complex process, involving several disciplines and emergent approaches. In this chapter we present the work of an interdisciplinary team, merging photogrammetry and computer vision, knowledge representation, the semantic web, deep learning, computational geometry, and light field cameras dedicated to underwater archaeology (Castro and Drap 2017).

9.1.1 The Archaeological Context

This work is centered on the Xlendi shipwreck, named after its location, found off the Gozo coast in Malta. The shipwreck was located by the Aurora Trust, an expert in deep-sea inspection systems, during a survey campaign in 2008. The shipwreck is located near a coastline known for its limestone cliffs that plunge into the sea and whose foundation rests on a continental shelf at an average depth of 100 m below sea level. The shipwreck itself is therefore exceptional; first due to its configuration and its state of preservation which is particularly well-suited for our experimental 3D modelling project. The examination of the first layer of amphorae also reveals a mixed cargo, consisting of items from Western Phoenicia, and Tyrrhenian-style containers which are both well-dated to the period situated between the end of the eighth and the first half of the seventh centuries BC. The historical interest of this wreck, highlighted by our work, which is the first to be performed on this site, creates added value in terms of innovation and the international profile of the project (Drap et al. 2015).

9.2 Underwater Survey by Photogrammetry

The survey was done using optical sensors: photogrammetry is the best way to collect both accurate 3D data and color information in a fully contactless approach, and it reduces the time on site to that needed to take the photographs. The survey had two goals: measuring the entire visible seabed where the wreck is located, and extracting known artefacts (amphorae) in order to position them in space and accurately represent them after laboratory study. The photogrammetric system used in 2014 (Drap et al. 2015) was mounted on the submarine Remora 2000. In this version, a connection is established between the embedded subsystem fixed on the submarine and the pilot (inside the submarine) to ensure that the survey is fully controlled by the pilot. The photogrammetric system uses a synchronized acquisition of high- and low-resolution images by video cameras forming a trifocal system. The three cameras are independently mounted in separate waterproof housings. This requires two separate calibration phases: the first is carried out on each camera/housing set in order to compute intrinsic parameters, and the second determines the relative position of the three cameras, which are securely mounted on a rigid platform. The second calibration can be done easily before each mission and it affects the final 3D model scale. This allows us to obtain a 3D model at the right scale without any interaction on site. In more detail, the trifocal system is composed of one high-resolution, full-frame camera synchronized at 2 Hz and two low-resolution cameras synchronized at 10 Hz (Drap 2016).

The lighting, a crucial part in photogrammetry, must meet two criteria: the homogeneity of exposure for each image and its consistency between images (Drap et al. 2013). Of course, using only one vehicle, the lights are fixed on the submarine as far as possible from the camera. Hydrargyrum medium-arc iodide (HMI) lamps were used with an appropriate diffuser (note: in the current version overvolted LEDs are used as they are significantly more energy efficient). The trifocal system has two different goals: The first one is the real-time computation of system pose and the 3D reconstruction of the zone of seabed visible from the cameras. The operator can pilot the submarine using a dedicated application that displays the position of the vehicle in real time. A remote video connection also enables the operator to see the live images captured by the cameras. Using the available data, the operator can assist the pilot to ensure the complete coverage of the zone to be surveyed. The pose is estimated based on the movement of the vehicle between two consecutive frames. We developed a system for computing visual odometry in real time and producing a sparse point cloud of 3D points on the fly (Nawaf et al. 2016, 2017).
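The idea of estimating the pose from the movement of the vehicle between consecutive frames can be illustrated with a minimal sketch. This is not the authors' visual odometry software: it only shows how frame-to-frame motions, assumed to be already estimated from the images, are chained into a trajectory. The planar (2D, yaw-only) simplification and the names `compose` and `trajectory` are assumptions made for illustration.

```python
import math


def compose(pose, delta):
    """Chain a relative motion (dx, dy, dtheta), expressed in the vehicle
    frame, onto a global pose (x, y, theta)."""
    x, y, theta = pose
    dx, dy, dtheta = delta
    # Rotate the local displacement into the global frame, then translate.
    gx = x + dx * math.cos(theta) - dy * math.sin(theta)
    gy = y + dx * math.sin(theta) + dy * math.cos(theta)
    return (gx, gy, theta + dtheta)


def trajectory(start, deltas):
    """Accumulate frame-to-frame motions into a list of global poses."""
    poses = [start]
    for delta in deltas:
        poses.append(compose(poses[-1], delta))
    return poses


# Hypothetical motions: 1 m forward while turning 90 degrees, then 1 m forward.
deltas = [(1.0, 0.0, math.pi / 2), (1.0, 0.0, 0.0)]
path = trajectory((0.0, 0.0, 0.0), deltas)
```

Because each pose is built on the previous one, small errors in the relative motions accumulate along the trajectory, which is one reason a later offline reconstruction remains useful.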

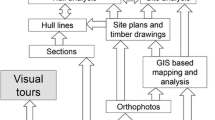

The second goal is to perform an offline 3D reconstruction of a high-resolution metric model. This process uses the high-resolution images to produce a dense model, scaled based on baseline distances. We developed a set of tools to bridge our visual odometry software to the commercial software Agisoft Photoscan/Metashape in order to use its densification capabilities. After this step we obtained a dense point cloud and a set of oriented high-resolution photographs accurately describing the entire site. This is enough to produce a high-resolution orthophoto of the site (1 pixel/0.5 mm), as well as accurate 3D models. The ultimate goal of this process is to study the cargo, hull remains, and remaining artifact collection.
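Scaling a model from a baseline distance can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' pipeline: the reconstruction is assumed to be in arbitrary units, the distance between two camera centres is assumed known in metres from the calibration of the rigid platform, and the function names and the 0.5 m baseline are hypothetical.

```python
import math


def scale_factor(cam_a, cam_b, known_baseline_m):
    """Ratio between the calibrated baseline (metres) and the distance
    between the same two camera centres in the unscaled reconstruction."""
    reconstructed = math.dist(cam_a, cam_b)
    if reconstructed == 0:
        raise ValueError("camera centres coincide; scale is undefined")
    return known_baseline_m / reconstructed


def apply_scale(points, s):
    """Multiply every 3D point by the scale factor."""
    return [tuple(s * c for c in p) for p in points]


# Hypothetical values: cameras are 2 units apart in the model,
# while the calibrated baseline is 0.5 m.
cam_a = (0.0, 0.0, 0.0)
cam_b = (2.0, 0.0, 0.0)
s = scale_factor(cam_a, cam_b, 0.5)
scaled = apply_scale([(4.0, 2.0, 8.0)], s)
```

With these values the scale factor is 0.25, so every model coordinate is divided by four to express it in metres.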

The second problem is to extract known objects from these data. We defined the amphorae typology and the corresponding theoretical 3D models. The recognition process is composed of two phases: first the artifacts are detected, and then the position and orientation of each artefact are estimated in order to calculate its exact size and location. The amphorae detection is done in 2D using the full orthophoto. We used a deep learning approach and obtained 98% correct results (Pasquet et al. 2017). This allows us to extract the relevant part of the 3D data where the artifact is located. We then apply a 3D matching approach to compute the position, orientation and dimensions of the known artifact.

It is important to note that during the last decade several excellent works have been done in this context for both underwater archaeology and marine archaeology (Aragón et al. 2018; Balletti et al. 2016; Bodenmann et al. 2017; Bruno et al. 2015; Capra et al. 2015; Martorelli et al. 2014; McCarthy and Benjamin 2014; Pizarro et al. 2017; Secci 2017). Indeed, more technical details of this survey have been published (Drap et al. 2015). More generally, we can observe that entire workshops are now dedicated to photogrammetric survey for underwater archaeology (for example the workshop organized by CIPA/ISPRS, entitled ‘Underwater 3D recording and modeling’ in Sorrento, Italy in April 2015), hundreds of articles are written on photogrammetric underwater survey for archaeology and substantial research is done on technical aspects, such as calibration (Shortis 2015; Telem and Filin 2010), stereo system (O’Byrne et al. 2018; Shortis et al. 2009) using structured light (Bruno et al. 2011; Roman et al. 2010) or more generally on underwater image processing (Ancuti et al. 2017; Chen et al. 2018; Hu et al. 2018; Yang et al. 2017a, b). In the last few years this discipline has attracted the attention of the industrial world and has been used to record and analyse complex objects of large dimensions (Menna et al. 2015; Moisan et al. 2015). The new challenge for tomorrow is producing accurate and detailed surveys from ROV and AUV in complex environments (Ozog et al. 2015; Zapata-Ramírez et al. 2016).

9.3 The Use of Ontologies

9.3.1 In Underwater Archaeology

The main focus behind this research is the link between measurement and knowledge. All underwater archaeological survey is based on the study of a well-established corpus of knowledge, in a discipline which continues to redefine its standard practices. The knowledge formalization approach is based on ontologies; the survey approach proposed here implies a formalization of the existing practice, which will drive the survey process.

The photogrammetric survey was done with the help of a specific instrumental infrastructure provided by COMEX, a partner in the GROPLAN project (Drap et al. 2015; GROPLAN 2018). Both this photogrammetry process and the body of surveyed objects were ontologically formalized and expressed in OWL2. The use of ontologies to manage cultural heritage advances every year and generates interesting perspectives for its continued study (Bing et al. 2014; Lodi et al. 2017; Niang et al. 2017; Noardo 2017). The ontology developed within the framework of this project takes into account the manufactured items surveyed and the photogrammetry process which is used to measure them. Each modelled item is therefore represented from a measurement point of view and linked to all the photogrammetric data that contributed to the measurement process. To this extent we developed two ontologies: one dedicated to photogrammetric measurement and georeferencing the measured items, and another dedicated to the measured items, principally the archaeological artefacts. The latter describes their dimensional properties, ratios between main dimensions, and default values. Within this project, these two ontologies were aligned in order to provide one common ontology that covers the two topics at the same time. The development architecture of these ontologies was performed with a close link to the Java class data structure, which manages the photogrammetric process as well as the measured items. Each concept or relationship in the ontology has a counterpart in Java (the opposite is not necessarily true). Moreover, the surveyed resources are archaeological items studied and possibly managed by archaeologists or conservators in a museum. It is therefore important to be able to connect the knowledge acquired when measuring the item with the ontology designed to manage the associated archaeological knowledge.

The modelling work of our ontology started from the premise that collections of measured items are marred by a lack of precision concerning their measurement, assumptions about their reconstruction, their age, and origin. It was therefore important to ensure the coherence of the measured items and potentially propose a possible revision. This collection work was presented in a previous study in the context of underwater archaeology with similar problems (Curé et al. 2010; Hué et al. 2011; Seinturier 2007; Serayet 2010; Serayet et al. 2009).

Amongst the advantages of the photogrammetric process is the possibility of providing several 2D representations of the measured artefacts. Our ontology makes use of this advantage to represent the concepts used in photogrammetry, and to be able to use an ontology reasoner on the ABox representing photogrammetric data. In other words, this photogrammetric survey is expressed as an ontology that describes the photogrammetric process as well as the measured objects, and that was populated both by the measurements of each artefact and by a set of corresponding data. In this context, we developed a mapping from an Object Oriented (OO) formalism to a Description Logic (DL). This mapping is relatively easy to accomplish because we have to map a poor semantic formalism toward a richer one (Roy and Yan 2012). We need to manage both the computational aspects (often heavy in photogrammetry) implemented for the artefacts measurable by photogrammetry, and the ontological representation of the same photogrammetric process and surveyed artefacts.

The architecture of the developed framework is based on a close link between, on the one hand, the software engineering aspects and the operative modelling of the photogrammetry process and of the artefacts measured by photogrammetry in the context of this project and, on the other hand, the ontological conceptualization of the same photogrammetry process and surveyed artefacts. The present implementation is based on a double formalism: JAVA, used for computation, photogrammetric algorithms, 3D visualization of photogrammetric models, and cultural heritage objects, and OWL, used for the definition of ontologies describing the concepts involved in this photogrammetric process, as well as the surveyed artefacts.

To implement our ontological model, we opted for OWL2 (Web Ontology Language), which has been used for more than a decade as a standard for the implementation of ontologies (McGuinness and Harmelen 2004). This web ontology language allows for modelling concepts (classes), instances (individuals), attributes (data properties) and relations (object properties). In fact, the main concern during the modelling process is the representation of accurate knowledge from a measurement point of view for each concept in the ontology. On the other hand, the same issue presides over the elaboration of the JAVA taxonomy, where we have to manage constraints involving differences in the two hierarchies of concepts within the engineering software side. For this purpose, we developed a procedural attachment method for each concept in the ontology. This homologous aspect of our architecture means that each individual of the ontology can produce a JAVA instance, since each concept present in the ontology has a homologous class in the JAVA tree. Note that the binding between the ontology construction in OWL and the JAVA taxonomy cannot be produced automatically in our case. Hence, we abandoned automatic mapping using JAVA annotations and JAVA beans in favour of manual extraction, even though automatic mapping is common in the literature (Horridge et al. 2004; Ježek and Mouček 2015; Kalyanpur et al. 2004; Roy and Yan 2012; Stevenson and Dobson 2011).

The current implementation is based on two aspects: JAVA, used for computation, photogrammetric algorithms, 3D visualization of photogrammetric data and patrimonial objects, and OWL for the definition of ontologies describing the concepts involved in the measurement process and the link with the measured objects. In this way, reading an XML file used to serialize a JAVA instance set representing a statement can immediately (upon reading) populate the ontology; similarly, reading an OWL file can generate a set of JAVA instance counterparts of the individuals present in the ontology. Furthermore, the link between individuals and instances persists and can be used dynamically. The huge advantage of this approach is that it is possible to perform logical queries on both the ontology and the JAVA representation, i.e. to perform semantic queries over ontology instances while benefiting from the computational capabilities of the homologous JAVA side. We can thus read the ontology, visualize in 3D the artefacts present in the ontology, and graphically visualize the result of SQWRL queries in the JAVA viewer.
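The homologous link between ontology individuals and programming-language instances can be sketched in a few lines. The authors' implementation is in JAVA with OWL; the version below is only a Python illustration of the idea, and every name in it (`REGISTRY`, `homologous`, `to_instance`, `to_individual`, the `Amphora` fields, and the dictionary layout standing in for an OWL individual) is a hypothetical assumption.

```python
from dataclasses import dataclass, asdict

# Maps an ontology concept name to its counterpart class (manual binding).
REGISTRY = {}


def homologous(concept):
    """Class decorator declaring a class as the counterpart of a concept."""
    def wrap(cls):
        REGISTRY[concept] = cls
        cls.concept = concept
        return cls
    return wrap


@homologous("Amphora")
@dataclass
class Amphora:
    identifier: str
    height_m: float
    typology: str


def to_instance(individual):
    """Build a class instance from a simplified ontology individual."""
    cls = REGISTRY[individual["concept"]]
    return cls(**individual["properties"])


def to_individual(instance):
    """Serialize an instance back to the simplified individual form."""
    return {"concept": type(instance).concept, "properties": asdict(instance)}


individual = {
    "concept": "Amphora",
    "properties": {"identifier": "A1", "height_m": 0.7,
                   "typology": "Pitecusse_365"},
}
amphora = to_instance(individual)
round_trip = to_individual(amphora)
```

The binding is declared by hand, one concept at a time, which mirrors the manual (non-automatic) mapping described above; concepts without a registered class simply have no counterpart.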

A further step, after developing and populating the ontology, is to find target ontologies to link to, following semantic web recommendations (Bizer et al. 2009): linking the newly published ontology to other existing ontologies on the web allows ontologies to share, exchange and reuse information. In cultural heritage contexts, CIDOC CRM is our main target ontology since it is now well adopted by CH actors from a theoretical point of view (Gaitanou et al. 2016; Niccolucci 2016; Niccolucci and Hermon 2016) as well as in applied work (Araújo et al. 2018), and it offers an interesting direction toward GIS applications based on some connection with photogrammetric survey (Hiebel et al. 2014, 2016).

Several methodologies can be chosen regarding mapping these two ontologies. For example, Amico et al. (2013) choose to model the survey location with an activity (E7) in CRM. They also developed a formalism for the digital survey tool mapping the digital camera definition with (D7 Digital Machine Event). We see here that the mapping problem is close to an alignment problem, which is an issue in this case. Aligning two ontologies dealing with digital camera definition is not obvious; a simple observation of the lack of interoperability between photogrammetric software shows the scale of the problem. We are currently working on an alignment/extension process with Sensor ML which is an ontology dedicated to sensors. Although some work has already yielded results (Hiebel et al. 2010; Xueming et al. 2010), it is not enough to support the close-range photogrammetry process, from image measurement to artefact representation.

Linking our ontology to CIDOC-CRM can provide more integrity between cultural heritage datasets and will allow more flexibility for performing federated queries across different datasets in this community. Being a generic ontology, however, the current state of CIDOC-CRM does not support the items that it represents from a photogrammetric point of view; a simple mapping would therefore not be sufficient, and an extension with new concepts and new relationships is necessary. Our extension of the CIDOC-CRM ontology is structured around the triple <E18_Physical_Thing, P53_has_former_or_current_location, E53_Place>, which provides a description of an instance of E53_Place as the former or current location of an instance of E18_Physical_Thing. The current version of this extension relates only to the TBox part of the two ontologies, where we used the hierarchical properties rdfs:subClassOf and rdfs:subPropertyOf to extend the triple <SpatialObject, hasTransformation3D, transformation3D>, developed in this project. Note that the mapping operation is done in JAVA by interpreting a set of data held by the JAVA classes as a current identification of the object: 3D bounding box, specific dimension. These attributes are then computed in order to express the right CRM properties.
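The effect of a TBox extension through rdfs:subClassOf and rdfs:subPropertyOf can be sketched with plain triples. The exact axioms of the authors' extension are not given in the text, so the three axioms below are an assumption made purely for illustration, as are the `crm:` prefix, the individuals `amphora_1` and `t_1`, and the function names. A real system would use an OWL reasoner rather than this hand-written closure.

```python
SUBCLASS = "rdfs:subClassOf"
SUBPROP = "rdfs:subPropertyOf"

# Assumed (hypothetical) alignment axioms between the two ontologies.
tbox = {
    ("SpatialObject", SUBCLASS, "crm:E18_Physical_Thing"),
    ("transformation3D", SUBCLASS, "crm:E53_Place"),
    ("hasTransformation3D", SUBPROP, "crm:P53_has_former_or_current_location"),
}


def ancestors(term, relation, triples):
    """All terms reachable from `term` by following `relation` transitively."""
    found, stack = set(), [term]
    while stack:
        current = stack.pop()
        for s, p, o in triples:
            if s == current and p == relation and o not in found:
                found.add(o)
                stack.append(o)
    return found


def entailed(abox, triples):
    """Add, for each assertion, the same assertion with every super-property."""
    result = set(abox)
    for s, p, o in abox:
        for super_prop in ancestors(p, SUBPROP, triples):
            result.add((s, super_prop, o))
    return result


# Hypothetical assertion produced by the survey side.
abox = {("amphora_1", "hasTransformation3D", "t_1")}
inferred = entailed(abox, tbox)
```

Under these assumed axioms, a statement made with the project's own property also becomes visible to a query written only in CIDOC-CRM terms, which is the practical benefit of extending rather than merely mapping.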

Our architecture is based on the procedural attachment where the ontology is considered as a homologous side of the JAVA class structure that manages the photogrammetric survey and the measurement of artefacts. This approach ensures that all the measured artefacts are linked with all the observations used to measure and identify them.

A further advantage of adopting an ontology is to benefit from reasoning over the semantics of its intensional and extensional knowledge. For this purpose, the approach that we have adopted so far, using the OWLAPI and the Pellet reasoner, allows for performing SQWRL queries using an extension of SWRL Built-In (O’Connor and Das 2006) packages. SWRL provides a powerful extension mechanism that allows for implementing user-defined methods in the rules (Keßler et al. 2009). For this purpose, we have built some spatial operators allowing us to express spatial queries in SWRL (Arpenteur 2018), for example the operator isCloseTo with three arguments, which allows for selecting all the amphorae present in a sphere centred on a specific amphora and belonging to a certain typology. A representation of the artefacts measured on the Xlendi wreck, as well as an answer to a SWRL query, is shown in Fig. 9.1.

Fig. 9.1 3D visualization of a spatial request in SWRL: Amphorae(?a) ^ swrlArp:isCloseTo(?a, "IdTargetAmphora", 6.2) ^ hasTypologyName(?a, "Pitecusse_365") -> sqwrl:select(?a). This means: select all amphorae with the typology Pitecusse_365 and at a maximum distance of 6.2 m from the amphora labelled IdTargetAmphora
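The semantics of this query can be mimicked outside SWRL with a short sketch. It is not the authors' built-in implementation: the `Amphora` record, the coordinates, and the functions `is_close_to` and `select` are hypothetical, and the distance is assumed to be the Euclidean distance between reference points of the amphorae, in metres.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Amphora:
    identifier: str
    typology: str
    position: tuple  # (x, y, z) in metres


def is_close_to(amphora, target, max_distance_m):
    """True when the amphora lies inside the sphere centred on the target."""
    return math.dist(amphora.position, target.position) <= max_distance_m


def select(amphorae, target_id, typology, max_distance_m):
    """Amphorae of the given typology within the distance of the target."""
    target = next(a for a in amphorae if a.identifier == target_id)
    return [a for a in amphorae
            if a.typology == typology
            and is_close_to(a, target, max_distance_m)]


# Hypothetical data set.
amphorae = [
    Amphora("IdTargetAmphora", "Other", (0.0, 0.0, 0.0)),
    Amphora("A1", "Pitecusse_365", (3.0, 4.0, 0.0)),   # 5 m from the target
    Amphora("A2", "Pitecusse_365", (6.0, 3.0, 0.0)),   # about 6.7 m away
    Amphora("A3", "Other", (1.0, 0.0, 0.0)),           # close, wrong typology
]
result = select(amphorae, "IdTargetAmphora", "Pitecusse_365", 6.2)
```

As in the rule, the target itself would be returned if it had the requested typology, since it lies at distance zero from its own position.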

Our ontology also provides a spatial description as georeferencing of each artefact and all the archaeological knowledge, including relationships provided by archaeologists. Based on our procedural attachment approach, we built a mechanism which allows for the evaluation and visualization of spatial queries from SWRL rules. We are currently extending this approach in a 3D information system dedicated to archaeological survey based on photogrammetric survey and knowledge representation for spatial reasoning.

Finally, we draw the reader’s attention to the fact that our ontology has been recently published in the Linked Open Vocabulary (LOV 2018; Vandenbussche et al. 2017) which offers users a keywords search service indexing more than 600 vocabularies in its current version. The LOV indexed all terms in our ontology and provides an online profile metadata ARP (2018) that offers our work a better visibility and allows terms reuse for the ontology meta-designers in different communities.

9.3.2 Application in Nautical Archaeology

The applications to query, visualize, and evaluate survey data acquired through photogrammetric survey methods have the potential to revolutionize nautical archaeology (as evidenced by many chapters in this volume). Even the simplest utilization of off-the-shelf photogrammetry software combined with consumer-grade portable computers and without the need for special graphics hardware, can greatly simplify and expedite the recording of underwater archaeological sites (Yamafune et al. 2016). The development of a theory of knowledge in nautical archaeology will certainly change the paradigm of this discipline, which is hardly half a century old and is still struggling to define its aims and methodologies.

The first and most obvious implication of any process of automation of underwater recording, independent from the operating depths, is the economic benefit. Automated survey methods save time and can be more precise. The second implication is the treatment and storing of primary data, which is still artisanal and performed according to the taste and means of the archaeologists in charge; it is seldom stored with consideration for the inevitable reanalysis that new paradigms and the development of new equipment will eventually dictate. Primary data are traditionally treated in nautical archaeology as the property of the principal investigator and are often lost, as archaeologists move on, retire, or die. The lack of a methodology to record shipwrecks (Castro et al. 2017) makes it difficult to develop comparative studies, aiming at finding patterns in trade, sailing techniques, and shipbuilding, to cite only three examples where data from shipwreck excavations are almost always truncated or unpublished.

The application of an ontology and a set of logical rules for the identification, definition, and classification of measurable objects is a promising methodology to assess, gather, classify, relate, and analyse large sets of data. Nautical archaeology is a recent sub-discipline of archaeology and attempts to record shipwrecks under the standards commonly used in land archaeology started after 1960. The earliest steps of this discipline were concerned with recording methodology and accuracy in underwater environments. These environments are difficult and impose a number of practical constraints, such as reduced bottom working time, long decompression periods, cold, low visibility, a narrower field of vision, surge, current, or depth. Since the inception of nautical archaeology, theoretical studies aimed at identifying patterns and attempting to address larger anthropological questions related to culture change have emerged. The number of shipwrecks excavated and published, however, makes the sample sizes too small to allow for broad generalizations. Few seafaring cultures have been studied and understood well enough to allow a deep understanding of their history, culture, and development. Classical and Viking seafaring cultures provide two European examples where data from land excavations and historical documents help archaeologists to understand the ships and cargoes excavated, but there is a lack of an organized body of data pertaining to most maritime landscapes and cultures, and a lack of organization of the material culture in relational libraries. Through our work we hope to provide researchers from different marine sciences with both better archives and appropriate tools for the facilitation of their work. This in turn will improve the conditions to the development of broader anthropological studies, for instance, evaluating and relating cultural change in particular areas and time periods.

The study of the history of seafaring is the study of the relations of humans with rivers, lakes, and seas, which started in the Palaeolithic. An understanding of this part of our past entails the recovery, analysis, and publication of large amounts of data, mostly through non-intrusive survey methods. The methodology proposed in GROPLAN aims at simplifying the collection and analysis of archaeological data, and at developing relations between measurable objects and concepts. It builds upon the work of Steffy (1994), who in the mid-1990s developed a database of ship components. This shipbuilding information, segmented in units of knowledge, tried to encompass a wide array of western shipbuilding traditions, which developed through time and space, and to establish relations between conception and construction traits in a manner that allowed comparisons between objects and concepts. Around a decade later Carlos Monroy transformed Steffy’s database into an ontological representation in RDF-OWL, and expanded its scope to potentially include other archaeological materials (Monroy 2010; Monroy et al. 2011). After establishing a preliminary ontology, completed through a number of interviews with naval and maritime archaeologists, Monroy combined the database with a multi-lingual glossary and built a series of relational links to textual evidence that aimed at contextualizing the archaeological information contained in the database. His work proposed the development of a digital library that combined a body of texts on early modern shipbuilding technology, tools to analyse and tag illustrations, a multi-lingual glossary, and a set of informatics tools to query and retrieve data (Monroy 2010; Monroy et al. 2006, 2007, 2008, 2009, 2011).

Our approach extends these efforts into the collection of data, expands the analysis of measurable objects, and lays the base for the construction of extensive taxonomies of archaeological items. The applications of this theoretical approach are obvious. It simplifies the acquisition, analysis, storage, and sharing of data in a rigorous and logically supported framework. These two advantages are particularly relevant in the present political and economic world context, brought about by the so-called globalization and the general trend it entailed to reduce public spending in cultural heritage projects. The immediate future of naval and maritime archaeology depends on a paradigm change. Archaeology is no longer the activity of a few elected scholars with the means and the power to define their own publication agendas. The survival of the discipline depends more than ever on the public recognition of its social value. Cost, accuracy, reliability (for instance established through the sharing of primary data), and its relationship with society’s values, memories and amnesias, are already influencing the amount of resources available for research in this area. Archaeologists construct and deconstruct past narratives and have the power to impact society by making narratives available that illustrate the diversity of the human experience in a world that is less diverse and more dependent on the needs of world commerce, labour, and capital.

The main objective of this work is the development of an information system based on ontologies and capable of establishing a methodology to acquire, integrate, analyse, generate, and share numeric contents and associated knowledge in a standardized and homogenous form. In 2001 the UNESCO Convention on the Protection of the Underwater Cultural Heritage established the necessity of making all archaeological data available to the public (UNESCO 2001). According to UNESCO around 97% of the children of the planet are in school, and 50% have some access to the internet. In one generation archaeology ceases to be a closed discipline and it is likely that a diverse pool of archaeologists from all over the world will multiply our narratives of the past and enrich our experience with new viewpoints and better values. This work is fully built upon this philosophy, and shows a way to share and analyse archaeological data widely and in an organized manner.

9.4 Artefact Recognition: The Use of Deep Learning

9.4.1 The Overall Process Using a Deep Learning Approach

This work aims to detect amphorae on the orthophoto of the Xlendi shipwreck. This image, however, contains few examples, which makes it difficult to train a machine learning model. Moreover, we cannot easily learn an amphora model from another shipwreck, because it is difficult to find another orthophoto that contains amphorae of the same typology. We therefore propose a deep learning approach, which has proved its worth in many research fields and achieves the best performance in competitions such as ImageNet (Russakovsky et al. 2014) with deep networks (He et al. 2015; Simonyan and Zisserman 2014; Szegedy et al. 2014). We use a Convolutional Neural Network (CNN) to learn the shapes of a variety of amphorae and the context of the ground. We then use transfer learning to fine-tune our model on the Xlendi shipwreck amphorae. This approach allows us to train the model using only a small part of the Xlendi database. Underwater objects are rarely found in a perfect state: they can be covered by sediment, biological growth, or another object, and they are often broken. Amphora necks are commonly separated from the body. We want to detect all the amphora pieces by performing a pixel segmentation, that is, a pixel-wise classification of the orthophoto (Badrinarayanan et al. 2015; Shelhamer et al. 2016). To improve the model, we define three classes: the ground, the body of the amphora, and the head of the amphora, which comprises the rim, the neck and the handles. After the pixel segmentation, we group pixels with similar probabilities together to obtain an object segmentation.
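The final grouping step can be illustrated with a minimal sketch. It assumes, for illustration only, that "similar probabilities" is implemented as a fixed threshold on the per-pixel amphora probability followed by 4-connected component labelling. The function name `group_pixels` and the threshold value are hypothetical and are not taken from the chapter.

```python
from collections import deque


def group_pixels(prob_map, threshold=0.5):
    """Label 4-connected regions whose probability is >= threshold.

    Returns (labels, count). labels holds 0 for background pixels and
    1..count for the pixels of each detected object.
    """
    rows, cols = len(prob_map), len(prob_map[0])
    labels = [[0] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if prob_map[r][c] < threshold or labels[r][c]:
                continue
            # Start a new object and flood-fill its connected pixels.
            count += 1
            labels[r][c] = count
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not labels[ny][nx]
                            and prob_map[ny][nx] >= threshold):
                        labels[ny][nx] = count
                        queue.append((ny, nx))
    return labels, count
```

Each label then corresponds to one candidate object, so small isolated regions can be discarded as noise before counting detections.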

9.4.2 The Proposed Convolution Neural Network

The CNN is composed of a series of layers in which each layer takes as input the output of the previous layer. The first layer, the input layer, takes the testing or training image as input. The last layer is the output of the network and gives a prediction map. The output of layer l of the network is called a feature map and is denoted f^l. In this work, we use four types of layers: convolution layers, pooling layers, normalization layers and deconvolution layers, which are described below.

Convolution layers are composed of convolutional neurons. Each convolutional neuron computes the sum of 2D convolutions between the input feature maps and its kernels. In the simple case where only one feature map is passed to the convolutional neuron, the 2D convolution between the kernel K of size w × h and the two-dimensional input feature map I, written I ∗ K, is defined as:

$$(I * K)(x, y) = \sum_{i=0}^{w-1} \sum_{j=0}^{h-1} K(i, j)\, I(x - i,\, y - j)$$

where (x, y) are the coordinates of a given pixel in the output feature map.

In a convolutional neural network, a neuron of layer l takes as input each of the p feature maps of the previous layer, denoted I_i^l with i ∈ {1, …, p}. The resulting feature map is the sum of the p 2D convolutions between the kernels K_i^l and the maps I_i^l, and is defined as:

$$f^l = \sum_{i=1}^{p} I_i^l * K_i^l$$

After the convolution step, we apply a nonlinear transformation so that the network can solve nonlinear classification problems. The most commonly used function is the Rectified Linear Unit (ReLU), defined by f(x) = max(x, 0) (Krizhevsky et al. 2012).
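The behaviour of a single convolutional neuron followed by ReLU can be sketched in a few lines of plain Python. This is an illustrative sketch, not the Caffe implementation used in this work: it assumes feature maps stored as nested lists, computes only the 'valid' region (no padding, which is an assumption), and the function names are hypothetical. Many deep learning frameworks compute the unflipped variant (cross-correlation); because the kernels are learned, the two are equivalent in practice.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution of one feature map with one kernel."""
    h, w = len(kernel), len(kernel[0])
    out_rows = len(image) - h + 1
    out_cols = len(image[0]) - w + 1
    out = [[0.0] * out_cols for _ in range(out_rows)]
    for y in range(out_rows):
        for x in range(out_cols):
            acc = 0.0
            for j in range(h):
                for i in range(w):
                    # The kernel is flipped relative to the image window;
                    # the (h - 1, w - 1) offset keeps indices inside the map.
                    acc += kernel[j][i] * image[y + h - 1 - j][x + w - 1 - i]
            out[y][x] = acc
    return out


def relu(x):
    """Rectified Linear Unit: f(x) = max(x, 0)."""
    return max(x, 0.0)


def conv_neuron(feature_maps, kernels):
    """Sum the convolutions of p maps with p kernels, then apply ReLU."""
    partial = [conv2d(m, k) for m, k in zip(feature_maps, kernels)]
    rows, cols = len(partial[0]), len(partial[0][0])
    return [[relu(sum(p[y][x] for p in partial)) for x in range(cols)]
            for y in range(rows)]
```

With a 3 × 3 map and a 2 × 2 kernel, `conv2d` returns a 2 × 2 map; `conv_neuron` clips any negative sums to zero.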

Pooling layers summarize the information while reducing the data volume. They slide a window over the feature map and apply a specific operation to the values it covers. The two most common operations are taking the maximum or the mean of the values in the window.
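A minimal sketch of both pooling variants follows. The window size and stride of 2 are illustrative defaults, not values reported for the network in this chapter, and the function name is hypothetical.

```python
def pool2d(fmap, size=2, stride=2, mode="max"):
    """Slide a size x size window over fmap and keep its max or mean."""
    if mode == "max":
        reduce_window = max
    elif mode == "mean":
        def reduce_window(values):
            return sum(values) / len(values)
    else:
        raise ValueError("mode must be 'max' or 'mean'")
    out = []
    for y in range(0, len(fmap) - size + 1, stride):
        row = []
        for x in range(0, len(fmap[0]) - size + 1, stride):
            window = [fmap[y + j][x + i]
                      for j in range(size) for i in range(size)]
            row.append(reduce_window(window))
        out.append(row)
    return out
```

With these defaults a 4 × 4 feature map is reduced to 2 × 2, that is, a quarter of the original data volume.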

Normalization layers scale the feature maps. The two most widely used methods are Local Response Normalization (LRN) and Batch Normalization (BN) (Ioffe and Szegedy 2015). In this work we use batch normalization, which scales each value of the feature maps according to the mean and the variance of this value over the batch of images. In addition, batch normalization has two parameters, learned during the training step, that scale the importance of the different feature maps in a layer and add a bias value to each feature map.
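As a sketch of the training-time computation, the function below normalizes one feature over a batch and then applies the learned scale and shift. The names `gamma` and `beta` follow the notation of Ioffe and Szegedy (2015); the small constant `eps` and its default value are assumptions added for numerical stability.

```python
import math


def batch_norm(values, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature across a batch, then scale and shift it.

    values: the same feature taken from every image of the batch.
    gamma, beta: the learned scale and bias.
    """
    n = len(values)
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / n  # population variance
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in values]
```

After normalization the batch has a mean of approximately `beta` and a standard deviation of approximately `gamma`, whatever the scale of the input values.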

Deconvolution layers are the transpose of the convolution layers: where a strided convolution reduces the resolution of a feature map, its transpose increases it.
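The upsampling effect of a transposed convolution can be seen in a short sketch. Each input value is multiplied by the kernel and accumulated into the output at a position spaced by the stride. The stride of 2 and the function name are illustrative assumptions, not parameters of the network described here.

```python
def conv_transpose2d(fmap, kernel, stride=2):
    """Transposed convolution: scatter value * kernel into a larger map."""
    h, w = len(kernel), len(kernel[0])
    rows = (len(fmap) - 1) * stride + h
    cols = (len(fmap[0]) - 1) * stride + w
    out = [[0.0] * cols for _ in range(rows)]
    for y, row in enumerate(fmap):
        for x, value in enumerate(row):
            for j in range(h):
                for i in range(w):
                    # Overlapping contributions (when stride < kernel size)
                    # are summed.
                    out[y * stride + j][x * stride + i] += value * kernel[j][i]
    return out
```

With a 2 × 2 kernel of ones and a stride of 2, a 2 × 2 map becomes a 4 × 4 map in which every input value is replicated over a 2 × 2 block; learned kernels produce smoother interpolations.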

To perform the learning step, the parameters of the convolution and deconvolution layers are tuned using stochastic gradient descent (Bottou 1998). This optimization process is costly but well suited to parallel processing. In this work we use the Caffe framework (Jia et al. 2014) to train our CNN. The results are obtained using a GTX 1080 graphics card, which has 2560 cores and a 1.733 GHz boost clock. Our CNN architecture is composed of seven convolution layers, three pooling layers and three deconvolution layers.
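The principle of stochastic gradient descent is easiest to see on a toy problem. The sketch below fits a single weight w so that y ≈ w·x, updating it after every sample rather than after a full pass over the data. The model, learning rate and number of epochs are illustrative assumptions and bear no relation to the training settings used with Caffe.

```python
import random


def sgd_fit_slope(samples, lr=0.05, epochs=50, seed=0):
    """Fit y = w * x by stochastic gradient descent on the squared error."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    data = list(samples)
    w = 0.0
    for _ in range(epochs):
        rng.shuffle(data)  # visit the samples in a random order
        for x, y in data:
            grad = 2.0 * (w * x - y) * x  # derivative of (w*x - y)**2 w.r.t. w
            w -= lr * grad
    return w
```

In a CNN the same update is applied to every kernel weight, with the gradients obtained by backpropagation over a mini-batch of images.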

9.4.3 Classification Results

We train our CNN on images from another site and then use a small part of the Xlendi image to fine-tune the weights of the CNN. On the Xlendi image we used only 20 amphorae as training examples. Results are given in Fig. 9.2, where all the amphorae in the testing image are detected. The false positives are mainly located on the grinding stones. This error is due to the small size of the training database: the images used in the pre-training step contain no grinding stones, and only a few grinding stone examples are available during the fine-tuning step. On the pixel segmentation image, the recall is around 57% and the precision around 71%. The recall is low because the edges of the amphorae are rarely detected: the probability is highest in the middle of each amphora and decreases rapidly toward the edges. On the object detection map, the noise is removed, so the recall is close to 100% and the precision is around 80% (Fig. 9.3).
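For reference, pixel-wise precision and recall can be computed from a predicted binary mask and a ground-truth mask as sketched below. The masks in the usage note are toy data, not the Xlendi results, and the function name is hypothetical.

```python
def precision_recall(predicted, truth):
    """Pixel-wise precision and recall of a binary mask against ground truth."""
    tp = fp = fn = 0
    for pred_row, true_row in zip(predicted, truth):
        for p, t in zip(pred_row, true_row):
            if p and t:
                tp += 1  # amphora pixel correctly detected
            elif p and not t:
                fp += 1  # detection on the background
            elif t and not p:
                fn += 1  # amphora pixel that was missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall
```

If an object spans four pixels and only its two central pixels are detected, the recall is 0.5 even though the object itself has been found, which is the effect described above for the amphora edges.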

9.5 2D Representation: From Orthophoto to Metric Sketch

Cultural heritage representation has been completely transformed in the last 40 years. Computer graphics brought high-resolution 3D surveys, 3D modelling and image synthesis to this field, and results built on survey data are now nearly indistinguishable from reality. But even though the production of photorealistic imagery has grown enormously during the last decade, a photorealistic image is far from a drawing made by an expert: however accurate and detailed it is, the interpretation phase is missing. On the other hand, 2D representations are still important because they are easy to manipulate, transfer, publish and annotate. We are working on producing accurate and detailed 2D documents that remain easily accessible even at high resolution. For example, the high-resolution orthophoto of the Xlendi site is accessible through the GROPLAN web site (Drap 2016) using IIPImage (Pitzalis and Pillay 2009).

Even if photorealistic 3D or 2D documents still provide better image quality, we want to replicate, in 2D, the effects of hand-drawn documents. These are designed to look like the documents traditionally produced by archaeologists and combine two important qualities: they are detailed and accurate, and they respect a graphical convention shared by the archaeological community. Images of this kind, produced with Non-Photorealistic Rendering or deep learning as detailed below, carry an extra meaning: ‘selection’. Photorealism gives the same relevance to all objects in the scene. Hand drawings and NPR images pick up only objects with specific properties, so the selection is driven by knowledge. Here we present a work in progress and two research directions aimed at producing such documents: one uses 2D documents such as orthophotos and the other is based on dense 3D models.

9.5.1 Style Transfer to Sketch the Orthophoto

Image style is defined here as the manner of drawing, independent of the content. Style transfer applies the style of a given image to another image. In deep learning this kind of method is well known as a way to transfer the style of an artist to a real image (see Fig. 9.4). Using the approach of Gatys et al. (2016) we apply a sketch style to the image. Style A draws patches of leaves on the output. The image obtained with style B is blurred and the edges are fragmented, because the sketch is composed of points rather than solid lines.

Style D creates horizontal patterns that resemble waves. Style C gives the best visual result, even though the grinding stones at the top of the image disappear. This type of image, however, is unusable by archaeologists because it is an artistic rendition that does not highlight the regions of interest.
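In neural style transfer as introduced by Gatys and colleagues, style is captured by the correlations between the feature maps of a CNN layer, stored in a Gram matrix. The sketch below illustrates this idea on toy data. It assumes that each feature map has been flattened to a list of values, and the normalization of the loss is an illustrative choice, not the one used in the cited work.

```python
def gram_matrix(features):
    """Gram matrix of C flattened feature maps (each a list of N values)."""
    return [[sum(a * b for a, b in zip(fi, fj)) for fj in features]
            for fi in features]


def style_loss(features_a, features_b):
    """Mean squared difference between the Gram matrices of two images."""
    ga, gb = gram_matrix(features_a), gram_matrix(features_b)
    c = len(ga)
    return sum((ga[i][j] - gb[i][j]) ** 2
               for i in range(c) for j in range(c)) / (c * c)
```

Because the Gram matrix sums over all pixel positions, moving the same features to other places in the image leaves it unchanged. This is why the measure describes the manner of drawing and not the content, and also why a transferred style can erase objects that matter to the archaeologist.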

We propose instead to learn the relevant sketch style with a machine learning process applied to a part of the Xlendi orthophoto, using an architecture similar to the previous one. The sketch of Xlendi created by the CNN is given in Fig. 9.7 (bottom right). The weakness of this approach becomes evident when the image contains objects that are unknown to the model because they are absent from the training database. Figure 9.5 focuses on a grinding stone with a yellow starfish on top of it. Since there is no starfish in our database, the algorithm classifies it as an amphora rim. To avoid this kind of error, we increase the number of examples in the training database.

9.5.2 From 3D Models to NPR: Non-photorealistic Rendering

Several archaeological representation styles found in technical manuals (Bianchini 2008) and published articles (Sousa et al. 2003) are in fact close to NPR results. These kinds of representation are useful for communication and make illustrations more readable, direct and clear.

For several years, researchers have been trying to extract features from 3D models, such as lines, contours, ridges and valleys, and apparent contours, and to compare their results with sketches. This kind of representation, NPR, was developed for illustration but is not yet widely used in archaeology and cultural heritage. Several types of representation for 3D archaeological artefacts have been studied in recent years (DeCarlo et al. 2003; Jardim and de Figueiredo 2010; Judd et al. 2007; Raskar 2001; Roussou and Drettakis 2003; Tao et al. 2009; Xie et al. 2014). An interesting open source approach, the Suggestive Contour Software (DeCarlo and Rusinkiewicz 2007), allows 3D mesh models to be visualized with NPR and offers various parameters to modify the final result (occlusion contours, suggestive contours, ridges and valleys, etc.). The link between accuracy and sketch is a question considered by several scientists in the fields of computer vision and virtual reality (Bénard et al. 2014). More recent research in this field uses deep learning and AI (Bylinskii et al. 2017; Gatys et al. 2016).

Concerning cultural heritage and archaeological artefacts, some morphological properties of amphorae are well represented with NPR (Fig. 9.6). It is simple to identify handles, rims and necks when we extract ‘contours’ or ‘ridges and valleys’ because archaeologists and algorithms are looking at the same properties. The curvature of the body is a significant feature of an amphora and one of the keys to identifying its typology, and it is also a geometrical property enhanced by NPR algorithms. In contrast to image contour extraction, the NPR renderings studied here work on 3D models, which makes it possible to use the surface normals to accurately extract contours from overlapping shapes.

Some amphorae on the Xlendi site. On the left, an NPR image made using the Suggestive Contour Software (DeCarlo et al. 2003). On the right, the same portion of the Xlendi site mesh model, acquired by photogrammetric survey

An orthophoto of an excavation area contains too much extraneous information; the goal is to extract the important information from the image and leave out the rest. NPR algorithms are useful for this because NPR acts as a filter, using information that is not available within the images: the surface normals. Starting from a 3D model and a point of view, NPR rendering produces an image that is fairly close to those obtained through expert interpretation (Fig. 9.7).
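The simplest normal-based line that an NPR renderer can draw is the occluding contour: the set of mesh edges shared by a triangle facing the viewer and a triangle facing away. The sketch below shows only this case and is not the Suggestive Contour Software, which also computes suggestive contours, ridges and valleys. It assumes a closed triangle mesh with consistently outward-oriented faces and an orthographic view; all names are hypothetical.

```python
def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def silhouette_edges(vertices, faces, to_camera):
    """Edges shared by one front-facing and one back-facing triangle.

    to_camera is the direction from the scene toward the viewer.
    """
    facing = []
    for i, j, k in faces:
        normal = cross(sub(vertices[j], vertices[i]),
                       sub(vertices[k], vertices[i]))
        facing.append(dot(normal, to_camera) > 0)
    edge_faces = {}
    for f, (i, j, k) in enumerate(faces):
        for a, b in ((i, j), (j, k), (k, i)):
            edge_faces.setdefault((min(a, b), max(a, b)), []).append(f)
    return sorted(edge for edge, fs in edge_faces.items()
                  if len(fs) == 2 and facing[fs[0]] != facing[fs[1]])
```

On a flat seabed all faces point the same way, so no edge is selected there, whereas a protruding amphora produces a closed outline. An image-based edge detector has no access to this information.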

Nevertheless, these positive results from NPR are partly due to the specific configuration of the scene: in Xlendi, amphorae are lying on a flat seabed or are partially covered by sediment. The seabed is mainly uniform and flat, so the normal analysis performed by the NPR process focuses on the protruding amphorae and tends to minimize the seabed. These morphological differences can be enhanced by tuning the numerous NPR parameters to highlight the relevant part of the 3D model. It seems that each kind of scene requires a bespoke set of parameters, so the most promising results will need to involve knowledge of the process, and the experiments in deep learning appear to present an opportunity to advance these ideas.

9.6 Virtual Reality for the General Public

Public dissemination is also important. Once we are able to offer experts an accurate and appropriate visualization tool with the correct graphical form, NPR for example, a visualization tool for the general public becomes possible. The data collected on site and the photogrammetry can be used both to extract relevant knowledge and to produce a facsimile of the site. Even if this approach does not generate specific archaeological knowledge, it offers the possibility to ‘visit’ an unreachable archaeological site. This can be an interesting feature for the general public, but of course it also gives experts from around the world access to an exceptional underwater archaeological site by means of high-resolution and accurate 3D modelling. We also propose that users visualize and explore an archaeological site using Virtual Reality (VR) technology. This visualization combines photorealistic and semantic representations of the observed objects, including amphorae and other archaeological material. A user can freely navigate the site, switch from one representation to another, and interact with the objects by means of dedicated controllers. The different representations visualized in the tool can be seen as queries mixing geometry, photorealistic rendering, and knowledge (for example: display the amphorae on the site coloured by type).

Although rendering software packages such as Unity, Sketchfab and Unreal Engine, and APIs such as OpenVR and the HTC Vive SDK, already exist, we have chosen to develop our own solution.

The work presented here is based on the Arpenteur project (Arpenteur 2018; Drap 2017), which provides geometrical computation capabilities, photogrammetric features, and the representation and processing of knowledge within ontologies. We chose to build on jMonkeyEngine (jME 2018), a game engine made especially for modern 3D development that uses shader technology. 3D games can be written for a large set of devices using this engine. jMonkeyEngine is written in Java and uses the Lightweight Java Game Library (LWJGL 2018) as its renderer. It provides high-level functionality for scene description while retaining display performance comparable to native libraries.

A first experiment with such a tool was presented during a CNRS symposium in Marseilles in May 2017. The innovative exhibition (IE 2018) can be seen in Fig. 9.8. This demonstrator displays an underwater archaeological site in Malta. Inside the demonstrator, the user can view the site in a photorealistic mode. A filter can simulate underwater conditions, so that the site can be visualized either as if it were underwater or as if it were in the open air. The user can also display the cargo of the wreck as described by the archaeologists and mix photorealism and NPR. This demonstrator will be enhanced to visualize the results of ontology-based queries, allowing an archaeologist to formulate and verify hypotheses with the impression of being on the site. For the general public, the immersion capacity can be used to visit inaccessible sites.

9.7 New 3D Technologies: The Plenoptic Approach

In traditional photogrammetry, it is necessary to scan the target area completely with many redundant photos taken from different points of view. This procedure is time consuming and frustrating for large sites. For example, thousands of photos were captured to cover the Xlendi shipwreck. Whereas many 3D reconstruction technologies have been developed for terrestrial sites, relying mostly on active sensors such as laser scanners, solutions for underwater sites are limited. The idea of a plenoptic camera (also called a lightfield camera) is to simulate a 2D array of aligned tiny cameras. In practice, this can be achieved by placing an array of micro-lenses between the image sensor and the main lens, as shown in Fig. 9.9. In this way, the raw image contains information about the position and direction of all the light rays present in the image field, so that the scene can be refocused at any depth plane and different views can be obtained from a single image after capture. A first demonstration of this technique with a hand-held camera was published by Ng et al. (2005).

In a plenoptic camera, a pair of neighbouring micro lenses can be considered as a stereo pair, provided that parts of the scene appear in both acquired micro images (see Note 1). By applying stereo feature point matching to these micro images it is possible to estimate a corresponding depth map. During image acquisition it is essential to keep a certain distance from the scene in order to remain within the working depth range. This working depth range can be enlarged, but at the cost of depth level accuracy (see Note 2). In practice, the main lens focus should be set beyond the image sensor; in this case, each micro lens produces a micro image of the object that differs slightly from those of its neighbours. This allows the operator to obtain a depth estimate for the object.

Recent advances in digital imaging have resulted in the development and manufacture of high quality commercial plenoptic cameras. The main manufacturers are Raytrix (Raytrix 2017) and Lytro (Lytro 2017). While the latter concentrates on image refocusing and off-line enhancement, the former focuses on 3D reconstruction and modelling. The plenoptic camera used in this project is a Nikon D800 modified by Raytrix. A layer composed of around 18,000 micro-lenses is placed in front of the original 36.3 megapixel CMOS image sensor. The micro-lenses are of three types, which differ in their focal length. This helps to enlarge the working depth range by combining three zones that correspond to the lens types: near, middle and far range. The projection size of a micro lens (its micro image) can be controlled by changing the aperture of the camera. In our case, the maximum diameter of a micro image is around 38–45 pixels depending on the lens type. Figure 9.10 illustrates two examples of captured plenoptic images showing micro images taken at two different aperture settings.

Our goal is to make this approach work in the marine environment so that a single shot is enough to obtain a properly scaled 3D model, which is a great advantage underwater, where diving time and battery capacity are limited. The procedure for working with a plenoptic camera is described below. Figure 9.11 shows examples of plenoptic underwater images and a reconstructed 3D model.

Micro-lens array (MLA) calibration: this step computes the camera’s intrinsic parameters, which include the position and the alignment of the MLA. The lightfield camera returns an uncalibrated raw image containing the pixel intensity values read by the sensor during shooting. The position of each individual micro lens must be identified in the raw image. To do so, a calibration image is taken using a white diffusive filter that generates continuous illumination and highlights the edges and vignetting of each micro lens, as shown in Fig. 9.10 (left). Using simple image processing techniques, it is possible to localize the exact position of each micro-lens, which corresponds to its optical centre, with subpixel accuracy. In the same way, by taking another calibration image after changing the aperture of the camera so that the exterior edges of the micro-lenses touch (Fig. 9.10), the outer edges of the micro-lenses can be detected by circle fitting with the help of the computed centre positions. Hence, for any new image taken without the filter, the micro images can be extracted easily. Finally, a metric calibration must also be performed to convert from pixels to metric units. Here, the use of a calibration grid makes it possible to determine the internal geometry of the micro-lens array accurately. The calibration results provide many parameters: intrinsic parameters, the orientation of the MLA, the tilt of the MLA with respect to the image sensor, and distortion parameters.

MLA imaging: each micro lens produces an image of a small part of the scene; conversely, every part of the captured scene is projected onto several micro lenses at different angles. Hence, it is possible to produce a synthesized image focused on a plane at a chosen distance. This is done by determining, for each point in object space, the micro lenses onto which that point is projected. The projected points are selected in each micro image, and their average pixel value is taken as the final pixel colour in the focused image.

Total focus processing: in the plenoptic camera used here, from Raytrix (this also holds for other brands such as Lytro), there are three types of micro lenses with different focal lengths distributed throughout the MLA. Each type of micro lens is considered as a sub-array with its own depth of focus, so the three depths of focus associated with the three types are added together to give a greater depth range. It is thus possible to reconstruct a fully focused image with a large depth of field, using all types of lenses to compute a single sharp image. For more details on the total focus process, we refer to Perwass and Wietzke (2012).

Depth map computation: a brute force stereo point matching is performed among neighbouring micro-lenses. The neighbourhood is an input parameter that defines the maximum distance on the MLA between two neighbouring micro-lenses. This process is easily parallelized; it is performed with the Raytrix RxLive software, which relies entirely on the GPU. The total processing time does not exceed 400 milliseconds per image. Next, using the computed calibration parameters, each matched point is triangulated in 3D to obtain a sparse point cloud.

Dense point cloud and surface reconstruction: in this step, all pixel data in the image are used to reconstruct the surface in 3D, using iterative filling and bilateral filtering for smoother depth intervals.
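The depth map step can be illustrated with a deliberately simplified sketch: a brute-force search along one image row for the shift that minimizes the sum of absolute differences (SAD) between two neighbouring micro images, followed by the generic pinhole stereo relation z = f·b/d. This is not the RxLive implementation. The matching cost, the window size, the function names and the use of the plain pinhole relation (which ignores the main lens of a plenoptic camera) are all assumptions made for illustration.

```python
def best_disparity(left_row, right_row, x, half_window=1, max_disparity=4):
    """Brute-force SAD search for the shift of the patch centred on x.

    Assumes half_window <= x < len(left_row) - half_window.
    """
    best, best_cost = 0, float("inf")
    for d in range(max_disparity + 1):
        if x - d - half_window < 0:
            break  # the candidate patch would leave the image
        cost = sum(abs(left_row[x + k] - right_row[x - d + k])
                   for k in range(-half_window, half_window + 1))
        if cost < best_cost:
            best, best_cost = d, cost
    return best


def depth_from_disparity(disparity, baseline, focal_length):
    """Generic pinhole stereo triangulation: z = f * b / d."""
    if disparity <= 0:
        raise ValueError("disparity must be positive")
    return focal_length * baseline / disparity
```

Every pixel is searched independently of the others, which is why this kind of matching maps well onto a GPU. Disparities are found in whole pixels here, which also illustrates why the recovered depth takes discrete levels unless subpixel refinement is added.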

9.8 Conclusions

This chapter addresses a number of relevant problems related to the acquisition, analysis, and dissemination of archaeological data from underwater contexts. Underwater archaeology is expensive and computers are streamlining its processes and, perhaps more importantly, promise to increase the accuracy of the recording process and make it available to a wider number of scholars. This trend is changing the enduring individualistic paradigm and pushing archaeology to a team-based discipline, where knowledge is acquired and narratives are constructed in a continuous, iterative process, more similar to that of the hard sciences. The circulation of primary data is a fundamental step in this trend, expected to transform archaeological interpretations into something closer to community projects, where narratives are constructed and deconstructed in a much more exciting and dynamic process than the traditional ones, where publications often took decades to appear. We have presented several techniques that have the potential to make successful underwater archaeological surveys quicker, cheaper, and more accurate.

Data acquisition and processing using photogrammetry allow the capture of an impressive number of underwater site features and details. The representation of photogrammetry data using ontologies has two main benefits. The first is to facilitate data sharing between researchers with different backgrounds, such as archaeologists and computer scientists. The second is to improve and expand data analysis and to identify patterns or to generate different statistics using a simple query language that is close to natural language. The proposed set of tools also allows researchers to create sketched images that are close to what is commonly used and produced by archaeologists. The proposed automatic detection and recognition method, using deep learning, promises to be tremendously useful, particularly at larger sites, given the amount of effort it saves. Our experiment with plenoptic cameras is one of the few attempts found in the literature so far to apply this technique to underwater archaeology, and it appears fruitful. This is an avenue of research that we intend to pursue in the near future.

Finally, using virtual reality to visualize the 3D data has produced countless applications, both for pedagogical purposes and as a means to share archaeological discoveries with the public, inviting a wider audience to participate in the production process, and promoting and raising awareness of underwater heritage. Survey and representation, like archaeological excavation, are always guided by intentions: we try to find, or find out, to measure, and to record. This human action is based on choices and selections, even if they appear to be unconscious. The goal is not objectivity, but to find how we can guide those choices and selections and make them explicit. Our answer is to enlarge the knowledge base using several resources: ontologies to create relations between measurable objects and concepts and to improve analysis and knowledge sharing, and NPR and deep learning to improve object recognition and the representation of artefacts.

Notes

1. There is no common term in literature used for the projection of a single micro lens. In this book, we refer to it as micro image.

2. Despite the usage of subpixel accuracy, recovered depth is roughly discrete.

References

Amico N, Ronzino P, Felicetti A, Niccolucci F (2013) Quality management of 3D cultural heritage replicas with CIDOC-CRM. In: Paper presented at the CEUR workshop, La Valetta, Malta, 26 September 2013, vol 1117, pp 61–69. CEUR-WS.org

Ancuti CO, Ancuti C, De Vleeschouwer C, Garcia R (2017) A semi-global color correction for underwater image restoration. ACM SIGGRAPH 2017 Posters, Los Angeles

Aragón E, Munar S, Rodríguez J, Yamafune K (2018) Underwater photogrammetric monitoring techniques for mid-depth shipwrecks. J Cult Herit. https://doi.org/10.1016/j.culher.2017.12.007. (in press)

Araújo C, Martini RG, Rangel Henriques P, Almeida JJ (2018) Annotated documents and expanded CIDOC-CRM ontology in the automatic construction of a virtual museum. In: Rocha Á, Reis LP (eds) Developments and advances in intelligent systems and applications. Studies in computational intelligence, vol 718. Springer, pp 91–110. https://doi.org/10.1007/978-3-319-58965-7

ARP (2018) Arpenteur ontology, linked open vocabularies. http://lov.okfn.org/dataset/lov/vocabs/arp. Accessed 6 Sept 2018

Arpenteur (2018) Built-in operators. http://www.arpenteur.org/ontology/ArpenteurBuiltInLibrary.owl. Accessed 6 Sept 2018

Badrinarayanan V, Kendall A, Cipolla R (2015) SegNet: a deep convolutional encoder-decoder architecture for image segmentation. arXiv:1511.00561 [cs]

Balletti C, Beltrame C, Costa E, Guerra F, Vernier P (2016) 3D reconstruction of marble shipwreck cargoes based on underwater multi-image photogrammetry. Digit Appl Archaeol Cult Herit 3(1):1–8. https://doi.org/10.1016/j.daach.2015.11.003

Bass GF (1966) Archaeology under water. Thames and Hudson, Bristol

Bénard P, Hertzmann A, Kass M (2014) Computing smooth surface contours with accurate topology. ACM Trans Graph 33(2):19. https://doi.org/10.1145/2558307

Bianchini M (2008) Manuale di rilievo e di documentazione digitale in archeologia. Aracne

Bing L, Chan KCC, Carr L (2014) Using aligned ontology model to convert cultural heritage resources into semantic web. In: Paper presented at the 2014 IEEE international conference on Semantic Computing, Newport Beach, CA, 16–18 June 2014, pp 120–123. https://doi.org/10.1109/ICSC.2014.39

Bizer C, Heath T, Berners-Lee T (2009) Linked data-the story so far. In: Sheth A (ed) Semantic services, interoperability and web applications: emerging concepts. Kno.e.sis Center, Wright State University, Dayton, pp 205–227

Bodenmann A, Thornton B, Nakajima R, Ura T (2017) Methods for quantitative studies of seafloor hydrothermal systems using 3D visual reconstructions. ROBOMECH J 4:22. https://doi.org/10.1186/s40648-017-0091-5

Bottou L (1998) On-line learning and stochastic approximations. In: Saad D (ed) On-line learning in neural networks. Cambridge University Press, Cambridge, pp 9–42

Bruno F, Bianco G, Muzzupappa M, Barone S, Razionale AV (2011) Experimentation of structured light and stereo vision for underwater 3D reconstruction. ISPRS J Photogramm Remote Sens 66(4):508–518

Bruno F, Lagudi A, Gallo A, Muzzupappa M, Davidde Petriaggi B, Passaro S (2015) 3D documentation of archaeological remains in the underwater park of Baiae. Int Arch Photogramm, Remote Sens Spat Inf Sci XL-5/W5:41–46. https://doi.org/10.5194/isprsarchives-XL-5-W5-7-2015

Buchsenschutz O (2007) Images et relevés archéologiques, de la preuve à la démonstration. Papers presented at the 132e congrès national des sociétés historiques et scientifiques, Arles. Les éditions du comité des travaux historiques et scientifiques

Bylinskii Z, Wook Kim N, O’Donovan P, Alsheikh S, Madan S, Pfister H, Durand F, Russell B, Hertzmann A (2017) Learning visual importance for graphic designs and data visualizations. In: Paper presented at the 30th Annual ACM symposium on user interface software & technology. arXiv:1708.02660 [cs.HC] https://doi.org/10.1145/3126594.3126653

Capra A, Dubbini M, Bertacchini E, Castagnetti C, Mancini F (2015) 3d reconstruction of an underwater archaeological site: comparison between low cost cameras. Int Arch Photogramm Remote Sens Spat Inf Sci XL-5/W5:67–72. https://doi.org/10.5194/isprsarchives-XL-5-W5-67-2015

Castro F, Drap P (2017) A arqueologia marítima e o futuro/Maritime archaeology and the future. VESTÍGIOS - Rev Lat-Am Arqueologia Hist 11(1):40–55

Castro F, Bendig C, Bérubé M, Borrero R, Budsberg N, Dostal C, Monteiro A, Smith C, Torres R, Yamafune K (2017) Recording, publishing, and reconstructing wooden shipwrecks. J Marit Archaeol 13(1):55–66. https://doi.org/10.1007/s11457-017-9185-8

Chen Z, Zhang Z, Bu Y, Dai F, Fan T, Wang H (2018) Underwater object segmentation based on optical features. Sensors 18(1):196. https://doi.org/10.3390/s18010196

Curé O, Sérayet M, Papini O, Drap P (2010) Toward a novel application of CIDOC CRM to underwater archaeological surveys. In: Paper presented at the 4th IEEE international conference on Semantic Computing, ICSC 2010, Pittsburgh, 22–24 September 2010, pp 519–524. https://doi.org/10.1109/ICSC.2010.104

DeCarlo D, Rusinkiewicz S (2007) Highlight lines for conveying shape. In: Paper presented at the international symposium on Non-Photorealistic Animation and Rendering (NPAR), August 2007

DeCarlo D, Finkelstein A, Rusinkiewicz S, Santella A (2003) Suggestive contours for conveying shape. ACM Trans Graph (Proc SIGGRAPH) 22(3):848–855

Drap P, Merad DD, Mahiddine A, Seinturier J, Peloso D, Boï J-M, Chemisky B, Long L (2013) Underwater photogrammetry for archaeology: what will be the next step? Int J Herit Digit Era 2(3):375–394. https://doi.org/10.1260/2047-4970.2.3.375

Drap P, Merad D, Hijazi B, Gaoua L, Saccone MMNM, Chemisky B, Seinturier J, Sourisseau J-C, Gambin T, Castro F (2015) Underwater photogrammetry and object modeling: a case study of Xlendi wreck in Malta. Sensors 15:30351–30384. https://doi.org/10.3390/s151229802

Drap P (2016) GROPLAN web site: GROPLAN ontology and photogrammetry; generalizing surveys in underwater and nautical archaeology. http://www.groplan.eu. Accessed 6 Dec 2018

Drap P (2017) ARPENTEUR, an Architectural PhotogrammEtry Network Tool for EdUcation and Research. http://www.arpenteur.org. Accessed 6 Dec 2018

Gaitanou P, Gergatsoulis M, Spanoudakis D, Bountouri L, Papatheodorou C (2016) Mapping the hierarchy of EAD to VRA Core 4.0 through CIDOC CRM. In: Garoufallou E, Subirats Coll I, Stellato A, Greenberg J (eds) Metadata and semantics research: proceedings of the 10th international conference, MTSR 2016, Göttingen, Germany, 22–25 November 2016. Springer, pp 193–204. https://doi.org/10.1007/978-3-319-49157-8

Gatys LA, Ecker AS, Bethge M, Hertzmann A, Shechtman E (2016) Controlling perceptual factors in neural style transfer. arXiv:1611.07865 [cs.CV]

GROPLAN (2018) GROPLAN: Généralisation du relevé, avec ontologies et photogrammétrie, pour l’archéologie navale et sous-marine. http://www.groplan.eu. Accessed 6 Sept 2018

He K, Zhang X, Ren S, Sun J (2015) Deep residual learning for image recognition. arXiv:1512.03385 [cs]

Hiebel G, Hanke K, Hayek I (2010) Methodology for CIDOC CRM based data integration with spatial data. In: Contreras F, Melero FJ (eds) CAA’2010 fusion of cultures: proceedings of the 38th conference on computer applications and quantitative methods in archaeology, Granada, April 2010, pp 1–8

Hiebel G, Doerr M, Hanke K, Masur A (2014) How to put archaeological geometric data into context? Representing mining history research with CIDOC CRM and extensions. Int J Herit Digit Era 3(3):557–578. https://doi.org/10.1260/2047-4970.3.3.557

Hiebel G, Doerr M, Eide Ø (2016) CRMgeo: a spatiotemporal extension of CIDOC-CRM. Int J Digit Libr 18(4):271–279. https://doi.org/10.1007/s00799-016-0192-4

Horridge M, Knublauch H, Rector A, Stevens R, Wroe C (2004) A practical guide to building OWL ontologies using the protege-OWL plugin and CO-ODE tools edition 1.0

Hu H, Zhao L, Li X, Wang H, Liu T (2018) Underwater image recovery under the nonuniform optical field based on polarimetric imaging. IEEE Photon J 10(1):1–9. https://doi.org/10.1109/JPHOT.2018.2791517

Hué J, Sérayet M, Drap P, Papini O, Würbel E (2011) Underwater archaeological 3D surveys validation within the removed sets framework. In: Liu W (ed) Symbolic and quantitative approaches to reasoning with uncertainty, ECSQARU 2011. Lecture notes in computer science, vol 6717. Springer, Berlin, pp 663–674. https://doi.org/10.1007/978-3-642-22152-1_56

IE (2018) Innovative exhibition. http://innovatives.cnrs.fr/. Accessed 6 Sept 2018

Ioffe S, Szegedy C (2015) Batch normalization: accelerating deep network training by reducing internal covariate shift. arXiv:1502.03167 [cs]

jME (2018) jMonkeyEngine. http://jmonkeyengine.org/. Accessed 6 Sept 2018

Jardim E, De Figueiredo LH (2010) A hybrid method for computing apparent ridges. In: 23rd conference on graphics, patterns and images (SIBGRAPI), Gramado, 30 August–3 September 2010. https://doi.org/10.1109/SIBGRAPI.2010.24

Ježek P, Mouček R (2015) Semantic framework for mapping object-oriented model to semantic web languages. Front Neuroinform 9(3):1–15. https://doi.org/10.3389/fninf.2015.00003

Jia Y, Shelhamer E, Donahue J, Karayev S, Long J, Girshick R, Guadarrama S, Darrell T (2014) Caffe: convolutional architecture for fast feature embedding. In: MM’14 proceedings of the 22nd ACM international conference on Multimedia. Orlando, 3–7 November 2014. ACM, New York, pp 675–678. https://doi.org/10.1145/2647868.2654889

Judd T, Durand F, Adelson E (2007) Apparent ridges for line drawing. ACM Trans Graph 26(3):19. https://doi.org/10.1145/1276377.1276401

Kalyanpur A, Pastor DJ, Battle S, Padget JA (2004) Automatic mapping of OWL ontologies into java. In: Paper presented at the SEKE, pp 98–103

Keßler C, Raubal M, Wosniok C (2009) Semantic rules for context-aware geographical information retrieval. In: Barnaghi P, Moessner K, Presser M, Meissner S (eds) Proceedings of smart sensing and context: 4th European conference, EuroSSC 2009, Guildford, UK, 16–18 September 2009. Springer, Berlin, pp 77–92

Kraus K (1997) Photogrammetry: advanced methods and applications (vols 1 and 2). Dümmler, Bonn

Krizhevsky A, Sutskever I, Hinton GE (2012) ImageNet classification with deep convolutional neural networks. In: Pereira F, Burges CJC, Bottou L, Weinberger KQ (eds) NIPS’12 Proceedings of the 25th international conference on neural information processing systems, vol 1. Curran Associates, pp 1097–1105

Lodi G, Asprino L, Nuzzolese AG, Presutti V, Gangemi A, Recupero DR, Veninata C, Orsini A (2017) Semantic web for cultural heritage valorisation. In: Hai-Jew S (ed) Data analytics in digital humanities. Multimedia systems and applications. Springer, pp 3–37. https://doi.org/10.1007/978-3-319-54499-1

LOV (2018) Linked open vocabularies. http://lov.okfn.org/dataset/lov/. Accessed 6 Sept 2018

Lowe D (1999) Object recognition from local scale-invariant features. In: Paper presented at the international conference on computer vision, Corfu, Greece, September 1999, pp 1150–1157

LWJGL (2018) Lightweight Java Game Library. https://www.lwjgl.org/. Accessed 6 Sept 2018

Lytro (2017) Lytro ILLUM. https://www.lytro.com/. Accessed 17 Aug 2017

Martorelli M, Pensa C, Speranza D (2014) Digital photogrammetry for documentation of maritime heritage. J Marit Archaeol 9(1):81–93. https://doi.org/10.1007/s11457-014-9124-x

McCarthy JK, Benjamin J (2014) Multi-image photogrammetry for underwater archaeological site recording: an accessible, diver-based approach. J Marit Archaeol 9(1):95–114. https://doi.org/10.1007/s11457-014-9127-7

McGuinness DL, van Harmelen F (2004) OWL web ontology language overview. http://www.w3.org/TR/owl-features/, February 2004. World Wide Web Consortium (W3C) recommendation

Menna F, Nocerino E, Troisi S, Remondino F (2015) Joint alignment of underwater and above-the-water photogrammetric 3D models by independent models adjustment. Int Arch Photogramm Remote Sens Spat Inf Sci XL-5/W5:143–151. https://doi.org/10.5194/isprsarchives-XL-5-W5-143-2015

Moisan E, Charbonnier P, Foucher P, Grussenmeyer P, Guillemin S, Koehl M (2015) Adjustment of sonar and laser acquisition data for building the 3D reference model of a canal tunnel. In: Paper presented at the Sensors

Monroy C (2010) A digital library approach to the reconstruction of ancient sunken ships. PhD dissertation, Texas A&M University

Monroy C, Parks N, Furuta R, Castro F (2006) The nautical archaeology digital library. In: Proceedings of the 10th European conference on research and advanced technology for digital libraries, Alicante, Spain

Monroy C, Furuta R, Castro F (2007) A multilingual approach to technical manuscripts: 16th- and 17th-century Portuguese shipbuilding treatises. In: Proceedings of the 7th ACM/IEEE-CS joint conference on Digital libraries, Vancouver, BC, Canada, 18–23 June 2007, pp 413–414. https://doi.org/10.1145/1255175.1255258

Monroy C, Furuta R, Castro F (2008) Design of a computer-based frame to store, manage, and divulge information from underwater archaeological excavations: the Pepper wreck case. In: Paper presented at the Society for Historical Archaeology annual meeting, Sacramento

Monroy C, Furuta R, Castro F (2009) Ask not what your text can do for you. Ask what you can do for your text (a dictionary’s perspective). Digital Humanities, pp 344–347

Monroy C, Furuta R, Castro F (2011) Synthesizing and storing maritime archaeological data for assisting in ship reconstruction. In: Catsambis A, Ford B, Hamilton DL (eds) The Oxford handbook of maritime archaeology. Oxford University Press, pp 327–346

Nawaf MM, Hijazi B, Merad D, Drap P (2016) Guided underwater survey using semi-global visual odometry. In: Paper presented at the COMPIT 15th international conference on computer applications and information technology in the maritime industries, Lecce, pp 287–301

Nawaf MM, Drap P, Royer J-P, Saccone M, Merad D (2017) Towards guided underwater survey using light visual odometry. Int Arch Photogramm Remote Sens Spat Inf Sci XLII-2/W3:527–533. https://doi.org/10.5194/isprs-archives-XLII-2-W3-527-2017

Ng R, Levoy M, Brédif M, Duval G, Horowitz M, Hanrahan P (2005) Light field photography with a hand-held plenoptic camera. Stanford University Computer Science Tech Report CSTR 2005-02:1–10

Niang C, Marinica C, Markhoff B, Leboucher E, Malavergne O, Bouiller L, Darrieumerlou C, Francois Laissus F (2017) Supporting semantic interoperability in conservation-restoration domain: the PARCOURS project. J Comput Cult Herit 10(3):1–20. Special Issue on Digital Infrastructure for Cultural Heritage, Part 2. https://doi.org/10.1145/3097571

Niccolucci F (2016) Documenting archaeological science with CIDOC CRM. Int J Digit Libr 18(3):223–231. https://doi.org/10.1007/s00799-016-0199-x

Niccolucci F, Hermon S (2016) Expressing reliability with CIDOC CRM. Int J Digit Libr 18(4):281–287. https://doi.org/10.1007/s00799-016-0195-1

Noardo F (2017) A spatial ontology for architectural heritage information. In: Grueau C, Laurini R, Rocha JG (eds) Geographical information systems theory, applications and management: second international conference, GISTAM 2016, Rome, Italy, 26–27 April 2016, Revised Selected Papers. Communications in computer and information science book series, vol 741. Springer, pp 143–163. https://doi.org/10.1007/978-3-319-62618-5

O’Byrne M, Pakrashi V, Schoefs F, Ghosh B (2018) A stereo-matching technique for recovering 3D information from underwater inspection imagery. Comput Aided Civ Inf Eng 33(3):193–208. https://doi.org/10.1111/mice.12307

O’Connor MJ, Das A (2006) A mechanism to define and execute SWRL Built-ins in Protégé-OWL

Ozog P, Troni G, Kaess M, Eustice RM, Johnson-Roberson M (2015) Building 3D mosaics from an autonomous underwater vehicle, doppler velocity log, and 2D imaging sonar. In: 2015 IEEE international conference on robotics and automation (ICRA), Seattle, WA, 26–30 May 2015, pp 1137–1143. https://doi.org/10.1109/ICRA.2015.7139334

Pasquet J, Demesticha S, Skarlatos D, Merad D, Drap P (2017) Amphora detection based on a gradient weighted error in a convolution neuronal network. In: Paper presented at the IMEKO international conference on Metrology for Archaeology and Cultural Heritage, Lecce, Italy, 23–25 October 2017, pp 691–695

Perwass C, Wietzke L (2012) Single lens 3D-camera with extended depth-of-field. In: Paper presented at the Human Vision and Electronic Imaging

Pitzalis D, Pillay R (2009) Il sistema IIPImage: un nuovo concetto di esplorazione di immagini ad alta risoluzione. Archeol Calcolatori Suppl 2:239–244

Pizarro O, Friedman A, Bryson M, Williams SB, Madin J (2017) A simple, fast, and repeatable survey method for underwater visual 3D benthic mapping and monitoring. Ecol Evol 7(6):1770–1782. https://doi.org/10.1002/ece3.2701

Raskar R (2001) Hardware support for non-photorealistic rendering. ACM Trans Graph/Proc ACM SIGGRAPH 2001:41–47. https://doi.org/10.1145/383507.383525

Raytrix (2017) Raytrix, 3D light field camera technology. https://www.raytrix.de/. Accessed 17 Aug 2017

Roman C, Inglis G, Rutter J (2010) Application of structured light imaging for high resolution mapping of underwater archaeological sites. In: Paper presented at the OCEANS 2010 IEEE, Sydney, 24–27 May 2010, pp 1–9

Roussou M, Drettakis G (2003) Photorealism and non-photorealism in virtual heritage representation. In: Proceedings of the 4th international conference on virtual reality, archaeology and intelligent cultural heritage, Brighton

Roy S, Yan MF (2012) Method and system for creating OWL ontology from Java. Infosys Technologies Limited. Google Patents

Russakovsky O, Deng J, Su H, Krause J, Satheesh S, Ma S, Huang Z, Karpathy A, Khosla A, Bernstein M, Berg AC, Fei-Fei L (2014) ImageNet large scale visual recognition challenge. arXiv:1409.0575 [cs]

Secci M (2017) Survey and recording technologies in Italian underwater cultural heritage: research and public access within the framework of the 2001 UNESCO Convention. J Marit Archaeol 12:109–123. https://doi.org/10.1007/s11457-017-9174-y

Seinturier J (2007) Fusion de connaissances: applications aux relevés photogrammétriques de fouilles archéologiques sous-marines. PhD dissertation, Université du Sud Toulon Var

Sérayet M (2010) Raisonnement à partir d’information structurées et hiérarchisées: application à l’information archéologique. PhD dissertation, Université de la Méditerranée

Sérayet M, Drap P, Papini O (2009) Encoding the revision of partially preordered information in answer set programming. In: Sossai C, Chemello G (eds) Symbolic and quantitative approaches to reasoning with uncertainty: 10th European Conference, ECSQARU 2009, Verona, Italy, 1–3 July 2009. Lecture notes in computer science, vol 5590. Springer, Berlin, pp 421–433. https://doi.org/10.1007/978-3-642-02906-6_37

Shelhamer E, Long J, Darrell T (2016) Fully convolutional networks for semantic segmentation. arXiv:1605.06211 [cs]

Shortis MR (2015) Calibration techniques for accurate measurements by underwater camera systems. Sensors 15(12):30810–30826. https://doi.org/10.3390/s151229831

Shortis MR, Harvey ES, Abdo DA (2009) A review of underwater stereo-image measurement for marine biology and ecology applications. In: Gibson RN, Atkinson RJA, Gordon JDM (eds) Oceanography and marine biology: an annual review, vol 47. CRC Press, Boca Raton, pp 257–292

Simonyan K, Zisserman A (2014) Very deep convolutional networks for large-scale image recognition. arXiv:1409.1556 [cs]

Sousa MC, Foster K, Wyvill B, Samavati F (2003) Precise ink drawing of 3D models. Comput Graph Forum 22:369–379

Steffy JR (1994) Wooden ship building and the interpretation of shipwrecks. Texas A&M University Press, College Station

Stevenson G, Dobson S (2011) Sapphire: generating Java Runtime artefacts from OWL ontologies. In: Salinesi C, Pastor O (eds) Proceedings of the advanced information systems engineering workshops: CAiSE 2011 International Workshops, London, UK, 20–24 June 2011. Springer, Berlin, pp 425–436

Szegedy C, Liu W, Jia Y, Sermanet P, Reed S, Anguelov D, Erhan D, Vanhoucke V, Rabinovich A (2014) Going deeper with convolutions. arXiv:1409.4842 [cs]

Tao L, Renju L, Hongbin Z (2009) 3D line drawing for archaeological illustration. In: Paper presented at the computer vision workshops (ICCV Workshops), 2009 IEEE 12th international conference, 27 September–4 October 2009, pp 907–914