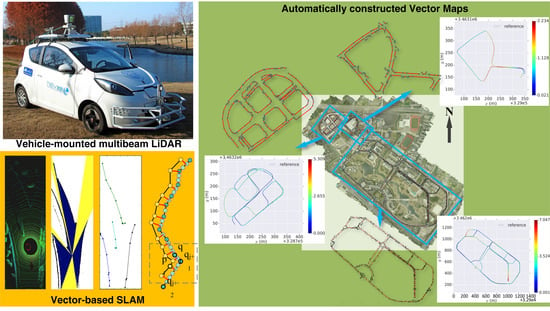

Automatic Vector-Based Road Structure Mapping Using Multibeam LiDAR

Abstract

1. Introduction

- A vector-based SLAM method is explored for automatic road structure mapping.

- A fast and precise polyline-based matching method is proposed to align local vector-based maps (LVMs) generated by multiframe probabilistic fusion.

- The LVMs are optimized and reconstructed into a global vector map of the road structure.
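
The second contribution aligns local vector-based maps (LVMs) by matching polylines instead of raw points. The matching procedure itself is not spelled out here, so the following is only an illustrative sketch under stated assumptions, not the authors' method: it aligns the vertices of a source polyline onto a target polyline with an ICP-style loop, taking as each correspondence the closest point on any target segment and solving every rigid 2D update in closed form with the SVD-based Kabsch method. The function names (`closest_point_on_polyline`, `rigid_transform_2d`, `align_polylines`) and the fixed iteration count are hypothetical choices.

```python
import numpy as np

# Illustrative sketch only: hypothetical helpers, not the paper's matching method.


def closest_point_on_polyline(p, poly):
    """Closest point to p (shape (2,)) on a polyline given as an (N, 2) vertex array."""
    a, b = poly[:-1], poly[1:]  # segment start and end points
    ab = b - a
    seg_len2 = np.einsum("ij,ij->i", ab, ab)
    seg_len2 = np.where(seg_len2 == 0.0, 1.0, seg_len2)  # guard zero-length segments
    # Projection parameter of p onto every segment, clamped to the segment.
    t = np.clip(np.einsum("ij,ij->i", p - a, ab) / seg_len2, 0.0, 1.0)
    proj = a + t[:, None] * ab
    d2 = np.einsum("ij,ij->i", proj - p, proj - p)
    return proj[np.argmin(d2)]


def rigid_transform_2d(src, dst):
    """Least-squares R, t with R @ src_i + t ~= dst_i (Kabsch / SVD)."""
    cs, cd = src.mean(axis=0), dst.mean(axis=0)
    H = (src - cs).T @ (dst - cd)  # 2x2 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    R = Vt.T @ U.T
    if np.linalg.det(R) < 0:  # reject reflections
        Vt[1, :] *= -1
        R = Vt.T @ U.T
    return R, cd - R @ cs


def align_polylines(source, target, iters=50):
    """ICP-style alignment of source vertices onto the target polyline.

    Returns (R, t) such that source @ R.T + t lies on the target polyline.
    """
    cur = np.asarray(source, dtype=float).copy()
    target = np.asarray(target, dtype=float)
    R_total, t_total = np.eye(2), np.zeros(2)
    for _ in range(iters):
        # Correspondences: closest point on any target segment.
        matches = np.array([closest_point_on_polyline(p, target) for p in cur])
        R, t = rigid_transform_2d(cur, matches)
        cur = cur @ R.T + t
        # Compose the incremental update with the accumulated transform.
        R_total, t_total = R @ R_total, R @ t_total + t
    return R_total, t_total
```

Because every correspondence is a projection onto a segment, vertices on a straight boundary only constrain the offset across that boundary; a bend or corner in the polylines is what fixes the along-road offset and the heading.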

2. Related Work

2.1. Vector-Based SLAM

2.2. Road Structure Detection

2.3. Road Structure Matching

2.4. Road Structure Vectorization

3. Approach

3.1. Road Boundary Extraction

3.1.1. Single-Frame Road Boundary Extraction

3.1.2. Multiframe Probabilistic Fusion

3.2. Vector-Based SLAM Method

3.2.1. Local Vectorization of Road Boundaries

3.2.2. Polyline-Based Matching between LVMs

3.2.3. Loop Detection and Optimization

3.3. LVM Fusion by Reconstruction

3.3.1. Probe-Based Sampling

3.3.2. Fusion by Reconstruction

4. Experimental Analysis

4.1. Experiment I—Road Boundary Mapping of a Loop Road

4.1.1. Road Boundary Extraction and Matching

4.1.2. Evaluation of the Trajectory

4.1.3. Evaluation of the Map

4.2. Experiment II—Road Boundary Mapping of the IV Evaluation Field

4.2.1. Evaluation of the Trajectory

4.2.2. Evaluation of the Map

4.3. Experiment III—Road Boundary Mapping of the Campus

4.3.1. Evaluation of the Trajectory

4.3.2. Evaluation of the Map

5. Concluding Remarks

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| | ICP-Based | Raw-LVM-Based | Simplified-LVM-Based |
|---|---|---|---|
| Mean Absolute Error | 0.65 | 0.55 | 0.07 |
| Time Cost (ms) | 98.05 | 85.39 | 3.98 |

| | Max | Mean | Median | Min | RMSE | Std |
|---|---|---|---|---|---|---|
| Reckoning-based | 10.070 | 4.82 | 5.34 | 0.0148 | 5.448 | 2.536 |
| Optimized-based | 2.23 | 1.12 | 1.05 | 0.0212 | 1.232 | 0.512 |

| | Max | Mean | Median | Min | RMSE | Std |
|---|---|---|---|---|---|---|
| Reckoning-based | 7.91 | 2.47 | 2.37 | 0 | 2.972 | 1.652 |
| Optimized-based | 5.30 | 1.74 | 1.75 | 0.000212 | 1.896 | 0.741 |

| | Max | Mean | Median | Min | RMSE | Std |
|---|---|---|---|---|---|---|
| Reckoning-based | 104 | 38 | 36.470 | 0.000651 | 46.507 | 26.802 |
| Optimized-based | 7.04 | 2.61 | 2.43 | 0.000651 | 2.978 | 1.430 |
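
The trajectory tables report the max, mean, median, min, RMSE and standard deviation of the trajectory error, the same summary statistics that the evo evaluation package prints for absolute pose error (evo additionally lists sse). As a minimal sketch, assuming the estimated and reference positions are already time-associated, expressed in one 2D frame and not further aligned (assumptions, since the preprocessing is not described here), the statistics can be computed as follows; `trajectory_error_stats` is a hypothetical name.

```python
import math
import statistics


def trajectory_error_stats(est, ref):
    """Summary statistics of per-pose 2D position errors (hypothetical helper).

    est, ref: equal-length sequences of (x, y) positions, already time-associated.
    """
    if len(est) != len(ref) or not est:
        raise ValueError("trajectories must be non-empty and of equal length")
    # Euclidean position error of each estimated pose against its reference pose.
    errors = [math.hypot(ex - rx, ey - ry) for (ex, ey), (rx, ry) in zip(est, ref)]
    return {
        "max": max(errors),
        "mean": statistics.fmean(errors),
        "median": statistics.median(errors),
        "min": min(errors),
        "rmse": math.sqrt(statistics.fmean(e * e for e in errors)),
        "std": statistics.pstdev(errors),  # population standard deviation (ddof = 0)
    }
```

With the population standard deviation, RMSE² = mean² + std² holds exactly. The reported rows satisfy it up to rounding (for example 1.12² + 0.512² ≈ 1.517 ≈ 1.232²), which gives a quick consistency check on such tables.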

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhao, J.; He, X.; Li, J.; Feng, T.; Ye, C.; Xiong, L. Automatic Vector-Based Road Structure Mapping Using Multibeam LiDAR. Remote Sens. 2019, 11, 1726. https://doi.org/10.3390/rs11141726